Caused by: java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

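// Solution -- did not work

-- yarn-site.xml

// or

---------------------

Author: 清霄

Source: CSDN

Original: https://blog.csdn.net/cwg_1992/article/details/77574182

Copyright notice: this is an original article by the blogger; please include a link to the post when reposting.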

[spark@master bin]$ cd /opt/spark-2.2.1-bin-hadoop2.7/bin

[spark@master bin]$ ./spark-shell --master yarn-client

Warning: Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

18/06/30 23:13:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/06/30 23:13:51 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Spark context Web UI available at http://192.168.0.120:4040

Spark context available as 'sc' (master = yarn, app id = application_1530369937777_0003).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.1
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala> 18/06/30 23:14:42 ERROR cluster.YarnClientSchedulerBackend: Yarn application has already exited with state FAILED!

18/06/30 23:14:42 ERROR client.TransportClient: Failed to send RPC 6363337827096152590 to /192.168.0.121:42926: java.nio.channels.ClosedChannelException

java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

18/06/30 23:14:42 ERROR cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Sending RequestExecutors(0,0,Map(),Set()) to AM was unsuccessful

java.io.IOException: Failed to send RPC 6363337827096152590 to /192.168.0.121:42926: java.nio.channels.ClosedChannelException

at org.apache.spark.network.client.TransportClient.lambda$sendRpc$2(TransportClient.java:237)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:507)

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:481)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:420)

at io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:122)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetFailure(AbstractChannel.java:852)

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(AbstractChannel.java:738)

at io.netty.channel.DefaultChannelPipeline$HeadContext.write(DefaultChannelPipeline.java:1251)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite0(AbstractChannelHandlerContext.java:733)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite(AbstractChannelHandlerContext.java:725)

at io.netty.channel.AbstractChannelHandlerContext.access$1900(AbstractChannelHandlerContext.java:35)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.write(AbstractChannelHandlerContext.java:1062)

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1116)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1051)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:399)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:446)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:131)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:144)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

18/06/30 23:14:42 ERROR util.Utils: Uncaught exception in thread Yarn application state monitor

org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:205)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.requestTotalExecutors(CoarseGrainedSchedulerBackend.scala:551)

at org.apache.spark.scheduler.cluster.YarnSchedulerBackend.stop(YarnSchedulerBackend.scala:97)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.stop(YarnClientSchedulerBackend.scala:151)

at org.apache.spark.scheduler.TaskSchedulerImpl.stop(TaskSchedulerImpl.scala:517)

at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:1670)

at org.apache.spark.SparkContext$$anonfun$stop$8.apply$mcV$sp(SparkContext.scala:1928)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1317)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1927)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend$MonitorThread.run(YarnClientSchedulerBackend.scala:108)

Caused by: java.io.IOException: Failed to send RPC 6363337827096152590 to /192.168.0.121:42926: java.nio.channels.ClosedChannelException

at org.apache.spark.network.client.TransportClient.lambda$sendRpc$2(TransportClient.java:237)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:507)

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:481)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:420)

at io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:122)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetFailure(AbstractChannel.java:852)

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(AbstractChannel.java:738)

at io.netty.channel.DefaultChannelPipeline$HeadContext.write(DefaultChannelPipeline.java:1251)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite0(AbstractChannelHandlerContext.java:733)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite(AbstractChannelHandlerContext.java:725)

at io.netty.channel.AbstractChannelHandlerContext.access$1900(AbstractChannelHandlerContext.java:35)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.write(AbstractChannelHandlerContext.java:1062)

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1116)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1051)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:399)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:446)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:131)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:144)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)
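The stack trace only says that the channel to the ApplicationMaster was closed; the reason YARN marked the application FAILED is normally found in the container logs. A minimal sketch of how one might pull the application id out of the console output and build the `yarn logs -applicationId` command for it. It assumes log aggregation (`yarn.log-aggregation-enable`) is turned on and that the `yarn` CLI is on the PATH; the helper names `find_app_id` and `yarn_logs_command` are made up for illustration.

```python
import re

# Matches YARN application ids such as application_1530369937777_0003.
APP_ID_PATTERN = re.compile(r"application_\d+_\d+")


def find_app_id(console_output):
    """Return the first YARN application id found in the text, or None."""
    match = APP_ID_PATTERN.search(console_output)
    return match.group(0) if match else None


def yarn_logs_command(app_id):
    """Build (but do not run) the command that fetches aggregated container logs."""
    return ["yarn", "logs", "-applicationId", app_id]


# Line copied from the spark-shell startup output above.
console = ("Spark context available as 'sc' "
           "(master = yarn, app id = application_1530369937777_0003).")

app_id = find_app_id(console)
print(" ".join(yarn_logs_command(app_id)))
```

Running the printed command on the master should show the NodeManager's diagnostics for the killed container, if aggregation is enabled on the cluster.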

Solution:

The main cause is that too little memory was allocated to the nodes, so YARN killed the Spark application.

Add the following configuration to yarn-site.xml:

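    <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
        <description>Whether virtual memory limits will be enforced for containers</description>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-pmem-ratio</name>
        <value>4</value>
        <description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>
    </property>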
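To make the effect of `yarn.nodemanager.vmem-pmem-ratio` concrete: when the virtual-memory check is enabled, the NodeManager compares a container's virtual memory against its physical allocation multiplied by this ratio. A rough sketch of that arithmetic follows, using the 896 MB ApplicationMaster request that appears in the spark-submit log later in this post. The default ratio of 2.1 and the default `yarn.scheduler.minimum-allocation-mb` of 1024 come from Hadoop's yarn-default.xml; rounding the request up to a multiple of the minimum allocation is an assumption about the scheduler's behaviour, and the function names are hypothetical.

```python
import math


def normalized_container_mb(requested_mb, min_allocation_mb=1024):
    """Round a request up to a multiple of the minimum allocation (assumed scheduler behaviour)."""
    return math.ceil(requested_mb / min_allocation_mb) * min_allocation_mb


def vmem_limit_mb(container_mb, vmem_pmem_ratio):
    """Virtual-memory ceiling for a container when the vmem check is enabled."""
    return container_mb * vmem_pmem_ratio


# 896 MB is the AM request reported by yarn.Client in the log below.
container = normalized_container_mb(896)

# Compare the Hadoop default ratio (2.1) with the value set above (4).
for ratio in (2.1, 4.0):
    limit = vmem_limit_mb(container, ratio)
    print(f"ratio {ratio}: container {container} MB, vmem limit {limit:.1f} MB")
```

With both checks set to false as in the configuration above, neither limit is enforced, so the ratio only matters if the vmem check is turned back on.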

Restart Hadoop, then run ./spark-shell --master yarn-client again.

Record of the troubleshooting process:

1) Stop the Hadoop and Spark services on master

[spark@master hadoop]$ cd /opt/hadoop-2.9.0

[spark@master hadoop]$ sbin/stop-all.sh

[spark@master hadoop]$ cd /opt/spark-2.2.1-bin-hadoop2.7

[spark@master hadoop]$ sbin/stop-all.sh

2) Edit yarn-site.xml on master

[spark@master hadoop]$ cd /opt/hadoop-2.9.0/etc/hadoop

[spark@master hadoop]$ ls

capacity-scheduler.xml hadoop-env.cmd hadoop-policy.xml httpfs-signature.secret kms-log4j.properties mapred-env.sh slaves yarn-env.sh

configuration.xsl hadoop-env.sh hdfs-site.xml httpfs-site.xml kms-site.xml mapred-queues.xml.template ssl-client.xml.example yarn-site.xml

container-executor.cfg hadoop-metrics2.properties httpfs-env.sh kms-acls.xml log4j.properties mapred-site.xml ssl-server.xml.example

core-site.xml hadoop-metrics.properties httpfs-log4j.properties kms-env.sh mapred-env.cmd mapred-site.xml.template yarn-env.cmd

[spark@master hadoop]$ vi yarn-site.xml

"yarn-site.xml" 66L, 2285C written

    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8035</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>master</value>
    </property>
    <property>
        <name>yarn.nodemanager.resource.memory-mb</name>
        <value>2048</value>
    </property>
    <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
        <description>Whether virtual memory limits will be enforced for containers</description>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-pmem-ratio</name>
        <value>4</value>
        <description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>
    </property>
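Before copying the edited file to the other nodes, it can be worth confirming that the new values parse as intended. The sketch below assumes the standard Hadoop layout of `<configuration>` containing `<property>` elements with `<name>` and `<value>` children, and it checks an inline sample rather than the real file; `read_properties` and `mismatches` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

# Inline stand-in for /opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml (illustrative sample).
SAMPLE = """<configuration>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>4</value>
  </property>
</configuration>"""

# Values this post sets in yarn-site.xml.
EXPECTED = {
    "yarn.nodemanager.pmem-check-enabled": "false",
    "yarn.nodemanager.vmem-check-enabled": "false",
    "yarn.nodemanager.vmem-pmem-ratio": "4",
}


def read_properties(xml_text):
    """Parse Hadoop-style configuration XML into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def mismatches(props, expected):
    """Return {name: actual_value} for every expected setting that differs or is missing."""
    return {k: props.get(k) for k, v in expected.items() if props.get(k) != v}


problems = mismatches(read_properties(SAMPLE), EXPECTED)
print(problems if problems else "all expected values present")
```

To check the real file, one could pass its contents (for example via `open(path).read()`) to `read_properties` instead of `SAMPLE`.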

3) Copy the modified yarn-site.xml from master to each slave node, overwriting the existing file

[spark@master hadoop]$ scp -r /opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml spark@slave1:/opt/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 2285 577.6KB/s 00:00

[spark@master hadoop]$ scp -r /opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml spark@slave2:/opt/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 2285 795.3KB/s 00:00

[spark@master hadoop]$ scp -r /opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml spark@slave3:/opt/hadoop-2.9.0/etc/hadoop/

yarn-site.xml 100% 2285 1.5MB/s 00:00
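With more than a few slaves, repeating the scp command by hand gets error-prone. One possible way to script the same copy is sketched below; it builds the commands for slave1 to slave3 and only prints them unless `dry_run` is switched off. It assumes passwordless SSH for the spark user, which the transcript above suggests but does not state, and the function names are hypothetical.

```python
import subprocess

CONF_FILE = "/opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml"
CONF_DIR = "/opt/hadoop-2.9.0/etc/hadoop/"
SLAVES = ["slave1", "slave2", "slave3"]


def scp_commands(hosts, user="spark"):
    """Build one scp argument list per target host."""
    return [["scp", CONF_FILE, f"{user}@{host}:{CONF_DIR}"] for host in hosts]


def distribute(hosts, dry_run=True):
    """Print the scp commands, or run them when dry_run is False."""
    for cmd in scp_commands(hosts):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError if a copy fails.
            subprocess.run(cmd, check=True)


distribute(SLAVES)
```

The `-r` flag from the original commands is dropped here because a single file is being copied; keeping it would be harmless.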

4) Restart the Hadoop and Spark services

[spark@master hadoop]$ cd /opt/hadoop-2.9.0

[spark@master hadoop]$ sbin/start-all.sh

[spark@master hadoop]$ cd /opt/spark-2.2.1-bin-hadoop2.7

[spark@master spark-2.2.1-bin-hadoop2.7]$ sbin/start-all.sh

[spark@master spark-2.2.1-bin-hadoop2.7]$ jps

5938 ResourceManager

6227 Master

5780 SecondaryNameNode

6297 Jps

5579 NameNode
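The jps listing is the quickest check that every daemon came back after the restart. A small sketch of automating that check on the master follows, using the output above as sample input. The expected set (NameNode, SecondaryNameNode, ResourceManager, Spark Master) is simply what this listing shows; on the slave nodes one would presumably look for DataNode, NodeManager and Worker instead, which is an assumption about this cluster's layout.

```python
# jps output copied from the master node above.
JPS_OUTPUT = """5938 ResourceManager
6227 Master
5780 SecondaryNameNode
6297 Jps
5579 NameNode"""

EXPECTED_ON_MASTER = {"NameNode", "SecondaryNameNode", "ResourceManager", "Master"}


def running_daemons(jps_text):
    """Extract the process names from 'pid name' lines of jps output."""
    names = set()
    for line in jps_text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_text, expected):
    """Return the expected daemons that do not appear in the jps output."""
    return expected - running_daemons(jps_text)


missing = missing_daemons(JPS_OUTPUT, EXPECTED_ON_MASTER)
print("missing:", sorted(missing))
```

An empty list means all four master-side daemons are up; anything listed would be worth checking in that daemon's log before retrying spark-shell.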

5) Verify that Spark on YARN runs correctly

[spark@master spark-2.2.1-bin-hadoop2.7]$ cd /opt/spark-2.2.1-bin-hadoop2.7/bin

[spark@master bin]$ ./spark-shell --master yarn-client

Warning: Master yarn-client is deprecated since 2.0. Please use master "yarn" with specified deploy mode instead.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

18/06/30 23:50:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/06/30 23:50:19 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Spark context Web UI available at http://192.168.0.120:4040

Spark context available as 'sc' (master = yarn, app id = application_1530373644791_0001).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.1
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

[spark@master bin]$ ./spark-shell --master yarn

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

18/06/30 23:51:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/06/30 23:51:49 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Spark context Web UI available at http://192.168.0.120:4040

Spark context available as 'sc' (master = yarn, app id = application_1530373644791_0002).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.1
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

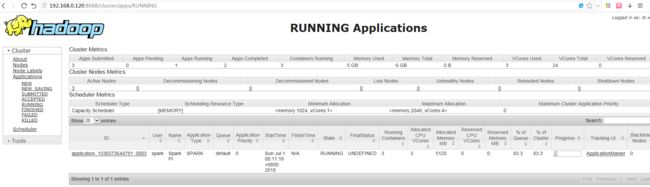

After spark-shell starts on YARN, a running application appears in the YARN management UI.
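The same information shown in the web UI can also be read from the ResourceManager's REST API under `/ws/v1/cluster/apps/{appid}`. The sketch below assumes the web address `master:8088` configured in yarn-site.xml above; the JSON sample is hand-written for illustration and trimmed to the `state` and `finalStatus` fields, and the function names are hypothetical.

```python
import json
from urllib.request import urlopen

# yarn.resourcemanager.webapp.address from yarn-site.xml above.
RM_WEBAPP = "http://master:8088"


def app_status_url(app_id, rm=RM_WEBAPP):
    """URL of the ResourceManager REST resource for one application."""
    return f"{rm}/ws/v1/cluster/apps/{app_id}"


def parse_app_status(body):
    """Return (state, finalStatus) from the REST response body."""
    app = json.loads(body)["app"]
    return app["state"], app["finalStatus"]


def fetch_app_status(app_id):
    """Query the ResourceManager; needs network access to the cluster."""
    with urlopen(app_status_url(app_id)) as resp:
        return parse_app_status(resp.read().decode("utf-8"))


# Hand-written sample response (illustrative, not captured from the cluster).
sample = ('{"app": {"id": "application_1530373644791_0002", '
          '"state": "RUNNING", "finalStatus": "UNDEFINED"}}')
print(app_status_url("application_1530373644791_0002"))
print(parse_app_status(sample))
```

On the cluster itself, `fetch_app_status("application_1530373644791_0002")` would replace the sample; a state of FAILED there corresponds to the error seen at the start of this post.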

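6) Further verification: submit a Spark job to YARN to test that it runs correctly. Note that the command below omits --deploy-mode cluster, so the job runs in client mode (see the YarnClientSchedulerBackend lines in the log); add --deploy-mode cluster to run it in yarn-cluster mode.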

[spark@master bin]$ cd /opt/spark-2.2.1-bin-hadoop2.7/

[spark@master spark-2.2.1-bin-hadoop2.7]$ ./bin/spark-submit \

> --class org.apache.spark.examples.SparkPi \

> --master yarn \

> /opt/spark-2.2.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.2.1.jar \

> 10

18/07/01 00:11:10 INFO spark.SparkContext: Running Spark version 2.2.1

18/07/01 00:11:11 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/07/01 00:11:11 INFO spark.SparkContext: Submitted application: Spark Pi

18/07/01 00:11:11 INFO spark.SecurityManager: Changing view acls to: spark

18/07/01 00:11:11 INFO spark.SecurityManager: Changing modify acls to: spark

18/07/01 00:11:11 INFO spark.SecurityManager: Changing view acls groups to:

18/07/01 00:11:11 INFO spark.SecurityManager: Changing modify acls groups to:

18/07/01 00:11:11 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()

18/07/01 00:11:11 INFO util.Utils: Successfully started service 'sparkDriver' on port 41922.

18/07/01 00:11:11 INFO spark.SparkEnv: Registering MapOutputTracker

18/07/01 00:11:11 INFO spark.SparkEnv: Registering BlockManagerMaster

18/07/01 00:11:11 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

18/07/01 00:11:11 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

18/07/01 00:11:11 INFO storage.DiskBlockManager: Created local directory at /opt/spark-2.2.1-bin-hadoop2.7/blockmgr-121559e6-2f03-4f68-9738-faf513bca0ac

18/07/01 00:11:11 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB

18/07/01 00:11:11 INFO spark.SparkEnv: Registering OutputCommitCoordinator

18/07/01 00:11:11 INFO util.log: Logging initialized @1288ms

18/07/01 00:11:11 INFO server.Server: jetty-9.3.z-SNAPSHOT

18/07/01 00:11:11 INFO server.Server: Started @1345ms

18/07/01 00:11:11 INFO server.AbstractConnector: Started ServerConnector@596df867{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

18/07/01 00:11:11 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@425357dd{/jobs,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@52eacb4b{/jobs/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2a551a63{/jobs/job,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@ec2bf82{/jobs/job/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6cc0bcf6{/stages,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@32f61a31{/stages/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@669253b7{/stages/stage,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@49a64d82{/stages/stage/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66d23e4a{/stages/pool,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4d9d1b69{/stages/pool/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@251f7d26{/storage,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@52d10fb8{/storage/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1fe8d51b{/storage/rdd,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@22680f52{/storage/rdd/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@39c11e6c{/environment,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@503d56b5{/environment/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@433ffad1{/executors,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2575f671{/executors/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@ecf9fb3{/executors/threadDump,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@27f9e982{/executors/threadDump/json,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@37d3d232{/static,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21680803{/,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c8b96ec{/api,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@58a55449{/jobs/job/kill,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6e0ff644{/stages/stage/kill,null,AVAILABLE,@Spark}

18/07/01 00:11:11 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.0.120:4040

18/07/01 00:11:11 INFO spark.SparkContext: Added JAR file:/opt/spark-2.2.1-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.2.1.jar at spark://192.168.0.120:41922/jars/spark-examples_2.11-2.2.1.jar with timestamp 1530375071834

18/07/01 00:11:12 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.0.120:8032

18/07/01 00:11:12 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers

18/07/01 00:11:12 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (2048 MB per container)

18/07/01 00:11:12 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

18/07/01 00:11:12 INFO yarn.Client: Setting up container launch context for our AM

18/07/01 00:11:12 INFO yarn.Client: Setting up the launch environment for our AM container

18/07/01 00:11:12 INFO yarn.Client: Preparing resources for our AM container

18/07/01 00:11:13 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

18/07/01 00:11:14 INFO yarn.Client: Uploading resource file:/opt/spark-2.2.1-bin-hadoop2.7/spark-c4987503-2802-4e4c-b170-301856a36773/__spark_libs__7066117465738289067.zip -> hdfs://master:9000/user/spark/.sparkStaging/application_1530373644791_0003/__spark_libs__7066117465738289067.zip

18/07/01 00:11:18 INFO yarn.Client: Uploading resource file:/opt/spark-2.2.1-bin-hadoop2.7/spark-c4987503-2802-4e4c-b170-301856a36773/__spark_conf__2688610535686541958.zip -> hdfs://master:9000/user/spark/.sparkStaging/application_1530373644791_0003/__spark_conf__.zip

18/07/01 00:11:18 INFO spark.SecurityManager: Changing view acls to: spark

18/07/01 00:11:18 INFO spark.SecurityManager: Changing modify acls to: spark

18/07/01 00:11:18 INFO spark.SecurityManager: Changing view acls groups to:

18/07/01 00:11:18 INFO spark.SecurityManager: Changing modify acls groups to:

18/07/01 00:11:18 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()

18/07/01 00:11:18 INFO yarn.Client: Submitting application application_1530373644791_0003 to ResourceManager

18/07/01 00:11:18 INFO impl.YarnClientImpl: Submitted application application_1530373644791_0003

18/07/01 00:11:18 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1530373644791_0003 and attemptId None

18/07/01 00:11:19 INFO yarn.Client: Application report for application_1530373644791_0003 (state: ACCEPTED)

18/07/01 00:11:19 INFO yarn.Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1530375078885

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1530373644791_0003/

user: spark

18/07/01 00:11:20 INFO yarn.Client: Application report for application_1530373644791_0003 (state: ACCEPTED)

18/07/01 00:11:21 INFO yarn.Client: Application report for application_1530373644791_0003 (state: ACCEPTED)

18/07/01 00:11:22 INFO yarn.Client: Application report for application_1530373644791_0003 (state: ACCEPTED)

18/07/01 00:11:23 INFO yarn.Client: Application report for application_1530373644791_0003 (state: ACCEPTED)

18/07/01 00:11:24 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark-client://YarnAM)

18/07/01 00:11:24 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> master, PROXY_URI_BASES -> http://master:8088/proxy/application_1530373644791_0003), /proxy/application_1530373644791_0003

18/07/01 00:11:24 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

18/07/01 00:11:24 INFO yarn.Client: Application report for application_1530373644791_0003 (state: RUNNING)

18/07/01 00:11:24 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 192.168.0.121

ApplicationMaster RPC port: 0

queue: default

start time: 1530375078885

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1530373644791_0003/

user: spark

18/07/01 00:11:24 INFO cluster.YarnClientSchedulerBackend: Application application_1530373644791_0003 has started running.

18/07/01 00:11:24 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 42209.

18/07/01 00:11:24 INFO netty.NettyBlockTransferService: Server created on 192.168.0.120:42209

18/07/01 00:11:24 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

18/07/01 00:11:24 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.0.120, 42209, None)

18/07/01 00:11:24 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.0.120:42209 with 366.3 MB RAM, BlockManagerId(driver, 192.168.0.120, 42209, None)

18/07/01 00:11:24 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.0.120, 42209, None)

18/07/01 00:11:24 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.0.120, 42209, None)

18/07/01 00:11:25 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@58aa1d72{/metrics/json,null,AVAILABLE,@Spark}

18/07/01 00:11:35 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.0.123:59710) with ID 1

18/07/01 00:11:35 INFO storage.BlockManagerMasterEndpoint: Registering block manager slave3:34708 with 366.3 MB RAM, BlockManagerId(1, slave3, 34708, None)

18/07/01 00:11:38 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.0.122:36090) with ID 2

18/07/01 00:11:38 INFO cluster.YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

18/07/01 00:11:38 INFO storage.BlockManagerMasterEndpoint: Registering block manager slave2:35749 with 366.3 MB RAM, BlockManagerId(2, slave2, 35749, None)

18/07/01 00:11:38 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Parents of final stage: List()

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Missing parents: List()

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

18/07/01 00:11:38 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1832.0 B, free 366.3 MB)

18/07/01 00:11:38 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1172.0 B, free 366.3 MB)

18/07/01 00:11:38 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.0.120:42209 (size: 1172.0 B, free: 366.3 MB)

18/07/01 00:11:38 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1006

18/07/01 00:11:38 INFO scheduler.DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

18/07/01 00:11:38 INFO cluster.YarnScheduler: Adding task set 0.0 with 10 tasks

18/07/01 00:11:38 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, slave2, executor 2, partition 0, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:38 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, slave3, executor 1, partition 1, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:38 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on slave3:34708 (size: 1172.0 B, free: 366.3 MB)

18/07/01 00:11:39 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on slave2:35749 (size: 1172.0 B, free: 366.3 MB)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, slave3, executor 1, partition 2, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 531 ms on slave3 (executor 1) (1/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, slave3, executor 1, partition 3, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 64 ms on slave3 (executor 1) (2/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, slave3, executor 1, partition 4, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 44 ms on slave3 (executor 1) (3/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, slave3, executor 1, partition 5, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 62 ms on slave3 (executor 1) (4/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, slave2, executor 2, partition 6, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 731 ms on slave2 (executor 2) (5/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, slave3, executor 1, partition 7, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 58 ms on slave3 (executor 1) (6/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, slave2, executor 2, partition 8, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 49 ms on slave2 (executor 2) (7/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, slave2, executor 2, partition 9, PROCESS_LOCAL, 4836 bytes)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 70 ms on slave3 (executor 1) (8/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 51 ms on slave2 (executor 2) (9/10)

18/07/01 00:11:39 INFO scheduler.TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 44 ms on slave2 (executor 2) (10/10)

18/07/01 00:11:39 INFO cluster.YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

18/07/01 00:11:39 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.857 s

18/07/01 00:11:39 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 1.104223 s

Pi is roughly 3.143763143763144

18/07/01 00:11:39 INFO server.AbstractConnector: Stopped Spark@596df867{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

18/07/01 00:11:39 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.0.120:4040

18/07/01 00:11:39 INFO cluster.YarnClientSchedulerBackend: Interrupting monitor thread

18/07/01 00:11:39 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors

18/07/01 00:11:39 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

18/07/01 00:11:39 INFO cluster.SchedulerExtensionServices: Stopping SchedulerExtensionServices

(serviceOption=None,

services=List(),

started=false)

18/07/01 00:11:39 INFO cluster.YarnClientSchedulerBackend: Stopped

18/07/01 00:11:39 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

18/07/01 00:11:39 INFO memory.MemoryStore: MemoryStore cleared

18/07/01 00:11:39 INFO storage.BlockManager: BlockManager stopped

18/07/01 00:11:39 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

18/07/01 00:11:39 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

18/07/01 00:11:39 INFO spark.SparkContext: Successfully stopped SparkContext

18/07/01 00:11:39 INFO util.ShutdownHookManager: Shutdown hook called

18/07/01 00:11:39 INFO util.ShutdownHookManager: Deleting directory /opt/spark-2.2.1-bin-hadoop2.7/spark-c4987503-2802-4e4c-b170-301856a36773
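The `YarnClientSchedulerBackend` lines in the log above indicate that this run used client deploy mode, which is what spark-submit defaults to when only `--master yarn` is given. To exercise yarn-cluster mode as well, `--deploy-mode cluster` would need to be added. A sketch that assembles such a command from the paths used in this post follows; it only builds and prints the argument list, and the helper name `spark_pi_command` is hypothetical.

```python
SPARK_HOME = "/opt/spark-2.2.1-bin-hadoop2.7"


def spark_pi_command(deploy_mode="cluster", slices=10):
    """Build the spark-submit argument list for the SparkPi example on YARN."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return [
        f"{SPARK_HOME}/bin/spark-submit",
        "--class", "org.apache.spark.examples.SparkPi",
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        f"{SPARK_HOME}/examples/jars/spark-examples_2.11-2.2.1.jar",
        str(slices),
    ]


# Print the command in a shell-friendly, line-continued form.
print(" \\\n  ".join(spark_pi_command()))
```

In cluster mode the driver runs inside the ApplicationMaster container, so the "Pi is roughly ..." line is expected in the container's stdout log rather than on the submitting console.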

Check the job's run status in the YARN ResourceManager UI:

References: https://blog.csdn.net/rongyongfeikai2/article/details/69361333

https://blog.csdn.net/chengyuqiang/article/details/77864246