Train YOLO V3 with my own data

Operating system: Ubuntu Linux, with CUDA 8.0 and OpenCV 3.2.0

We switched to YOLO V3 because it performs better at detecting small objects, and we added a new class, wrist. I followed a great tutorial; below is what I did to train on my own data.

Step 1. Prepare data

Label your pictures. I used labelImg for this and labeled four classes of objects (hand, skull, elbow joint, wrist) in 3631 pictures in total.

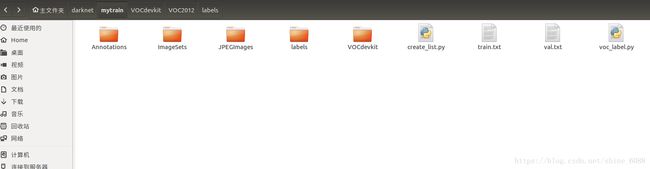
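By default labelImg saves each annotation as a Pascal VOC XML file, while darknet expects one .txt label file per image with one line per object, `<class_id> <x_center> <y_center> <width> <height>`, all normalized by the image size. voc_label.py does this conversion for you; the following is only a minimal sketch of the same idea, not the script's exact code (its own convert() may differ in small details). The function names are hypothetical, and the class list is the one used in this post, so adjust it to your own labels.

```python
import xml.etree.ElementTree as ET

# Assumed class order; it must match data/voc.names line by line.
CLASSES = ["hand", "skull", "elbow joint", "wrist"]


def voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert a pixel box to YOLO's normalized (x_center, y_center, width, height)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / float(img_w)
    height = (ymax - ymin) / float(img_h)
    return x_center, y_center, width, height


def xml_to_yolo_lines(xml_text, classes=CLASSES):
    """Turn one labelImg (Pascal VOC) annotation into darknet label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text.strip()
        if name not in classes:
            continue  # skip labels that are not part of the training classes
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(bb.find(k).text)
                                  for k in ("xmin", "ymin", "xmax", "ymax"))
        box = voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax)
        lines.append("%d %s" % (classes.index(name),
                                " ".join("%.6f" % v for v in box)))
    return lines


if __name__ == "__main__":
    sample = """<annotation>
  <size><width>400</width><height>200</height><depth>3</depth></size>
  <object><name>wrist</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>200</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>"""
    for line in xml_to_yolo_lines(sample):
        print(line)  # prints: 3 0.375000 0.500000 0.250000 0.500000
```

The class index is simply the position in the list, which is why the order of the names file matters.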

Following this blog, the txt files for training and validation can be generated with create_list.py and voc_label.py. Two things to remember: (1) change the classes on line 7 of voc_label.py; (2) swap which of lines 47 to 50 are commented out so that the script writes val.txt, like below:

#image_ids = open('ImageSets/Main/train.txt').read().strip().split()

#list_file = open('train.txt', 'w')

image_ids = open('ImageSets/Main/val.txt').read().strip().split()

list_file = open('val.txt', 'w')

If you did everything right, you'll get a folder like this:

Step 2. Change the configuration files

Three config files should be changed: cfg/voc.data, data/voc.names and cfg/yolov3-voc.cfg.

cfg/voc.data

Rewrite the voc.data file to match where your files are. I labeled four classes, so I changed the number of classes from 20 to 4. I saved all files for this project in a folder named mytrain and generated train.txt and val.txt following this blog, so my voc.data looks like this:

classes= 4

train = /home/tec/darknet/mytrain/train.txt

valid = /home/tec/darknet/mytrain/val.txt

names = data/voc.names

backup = /home/tec/darknet/backup

Don't forget to create the voc.names file in the data folder, with one class name per line, in the same order as the classes list in voc_label.py:

hand

skull

elbow joint

wrist
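A mismatch between `classes` in voc.data and the number of names in voc.names, or a wrong path, is easy to miss until training fails. A small check before training can catch it. This is a minimal sketch with hypothetical function names, assuming voc.data uses the simple `key = value` format shown above; relative paths are resolved against the directory you run it from, so run it from the darknet folder, just as you run ./darknet.

```python
import os


def parse_data_file(text):
    """Parse darknet-style 'key = value' lines into a dict (blank lines and # comments skipped)."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            options[key.strip()] = value.strip()
    return options


def check_data_file(data_path):
    """Return a list of problems found in a .data file and the files it points to."""
    with open(data_path) as f:
        opts = parse_data_file(f.read())
    problems = []
    for key in ("train", "valid", "names"):
        path = opts.get(key)
        if path is None or not os.path.isfile(path):
            problems.append("%s: missing file %r" % (key, path))
    backup = opts.get("backup")
    if backup is None or not os.path.isdir(backup):
        problems.append("backup: directory %r does not exist" % backup)
    names_path = opts.get("names")
    if names_path and os.path.isfile(names_path):
        with open(names_path) as f:
            # Count non-empty lines only; each one is a class name.
            names = [n.strip() for n in f if n.strip()]
        if int(opts.get("classes", -1)) != len(names):
            problems.append("classes=%s but %s lists %d names"
                            % (opts.get("classes"), names_path, len(names)))
    return problems
```

For example, `print(check_data_file("cfg/voc.data"))` prints an empty list when the paths exist and the class count matches the names file.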

cfg/yolov3-voc.cfg

Change it according to the comments below.

Notes:

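filters = (classes + 5) * 3, so in this case filters = (4 + 5) * 3 = 27. Each [yolo] layer uses 3 anchors (the three indices in its mask), and each anchor predicts 4 box coordinates, 1 objectness score and one score per class. Only the [convolutional] layer directly before each of the three [yolo] layers needs this value.

If your GPU memory is small, set random=0 to turn off multi-scale training; otherwise set random=1.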
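The filters arithmetic above, together with the matching edits to the three output layers, can be sketched in Python. This is a hypothetical helper, not part of darknet. It assumes the stock yolov3-voc.cfg layout, where the [convolutional] section directly before each [yolo] section is the one whose filters must change. It rewrites `classes=` in every [yolo] section and `filters=` in that preceding convolutional section, derives the anchor count from each `mask` line, drops any inline comment on the lines it rewrites, and leaves `random` untouched.

```python
import re


def yolo_filters(num_classes, anchors_per_scale=3):
    """Filters of the conv layer feeding a [yolo] layer: (classes + 5) * anchors."""
    # Per anchor: 4 box coordinates + 1 objectness score + one score per class.
    return (num_classes + 5) * anchors_per_scale


def patch_yolov3_cfg(cfg_text, num_classes):
    """Set classes= in each [yolo] section and filters= in the conv section before it."""
    lines = cfg_text.splitlines()
    # (header, line index) for every "[section]" header, plus an end sentinel.
    headers = [(l.strip(), i) for i, l in enumerate(lines) if l.strip().startswith("[")]
    headers.append(("<end>", len(lines)))

    def set_key(start, end, key, value):
        # Overwrite "key=..." lines in lines[start:end]; any inline comment is dropped.
        pattern = re.compile(r"\s*%s\s*=" % re.escape(key))
        for j in range(start, end):
            if pattern.match(lines[j]):
                lines[j] = "%s=%d" % (key, value)

    for k in range(len(headers) - 1):
        name, start = headers[k]
        end = headers[k + 1][1]
        if name != "[yolo]":
            continue
        # "mask = 6,7,8" means this scale uses 3 anchors; fall back to 3 if absent.
        mask = [l for l in lines[start:end] if l.strip().startswith("mask")]
        n_anchors = len(mask[0].split("=", 1)[1].split(",")) if mask else 3
        set_key(start, end, "classes", num_classes)
        if k > 0 and headers[k - 1][0] == "[convolutional]":
            set_key(headers[k - 1][1], start, "filters",
                    yolo_filters(num_classes, n_anchors))
    return "\n".join(lines) + "\n"
```

Keep a copy of the original cfg, then read cfg/yolov3-voc.cfg into a string, pass it through `patch_yolov3_cfg(text, 4)` and write the result back. Other [convolutional] sections, such as the ones with filters=256, are left alone because they are not directly followed by a [yolo] section.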

[net]

# Testing

# batch=1

# subdivisions=1

# Training

batch=64

subdivisions=8

......

[convolutional]

size=1

stride=1

pad=1

filters=27 #filters = (classes+ 5)* 3

activation=linear

[yolo]

mask = 6,7,8

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

classes=4

num=9

jitter=.3

ignore_thresh = .5

truth_thresh = 1

random=0 #If the GPU memory is very small, set random=0 to turn off multi-scale training, else set random=1.

......

[convolutional]

size=1

stride=1

pad=1

filters=27 #filters = (classes+ 5)* 3

activation=linear

[yolo]

mask = 3,4,5

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

classes=4 #originally 20

num=9

jitter=.3

ignore_thresh = .5

truth_thresh = 1

random=0 #originally 1

......

[convolutional]

size=1

stride=1

pad=1

filters=27 #filters = (classes+ 5)* 3

activation=linear

[yolo]

mask = 0,1,2

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

classes=4

num=9

jitter=.3

ignore_thresh = .5

truth_thresh = 1

random=0 #originally 1

Step 4. Download the pre-trained weights

wget https://pjreddie.com/media/files/darknet53.conv.74

Step 5. Train and test

Train

./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv.74

Test

./darknet detector test cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc.backup data/test/7.jpg #test picture

./darknet detector demo cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc.backup #test on video

For batch testing of pictures, see blog 1 and blog 2.
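To run detection on a whole folder of pictures, one simple option is to call darknet once per image. This is a minimal sketch with hypothetical function names, assuming your darknet build accepts the `-out` option of `detector test` (in pjreddie's detector.c it sets the output file name and darknet appends `.jpg` itself; check your own copy), and assuming the default paths used above. Each call reloads the weights, so it is slow for large folders, but every prediction ends up in its own file.

```python
import os
import subprocess

IMAGE_EXTS = (".jpg", ".jpeg", ".png")


def build_test_commands(image_dir, out_dir,
                        data="cfg/voc.data",
                        cfg="cfg/yolov3-voc.cfg",
                        weights="backup/yolov3-voc.backup"):
    """Build one 'darknet detector test' command per image in image_dir."""
    commands = []
    for name in sorted(os.listdir(image_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() not in IMAGE_EXTS:
            continue  # ignore non-image files
        # Assumption: -out takes a name without extension; darknet adds ".jpg".
        out_stem = os.path.join(out_dir, stem + "_pred")
        commands.append(["./darknet", "detector", "test", data, cfg, weights,
                         os.path.join(image_dir, name), "-out", out_stem])
    return commands


def run_all(commands):
    """Run each command in turn, stopping on the first non-zero exit status."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For example, from the darknet directory, create a results folder first and then call `run_all(build_test_commands("data/test", "results"))`.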