faceswap-GAN: adversarial_loss (the adversarial loss)

1. faceswap-GAN: adversarial_loss (the adversarial loss)

2. adversarial_loss is the adversarial loss. It returns two terms: the generator loss and the discriminator loss.

    # Assumed imports (Keras on the TF 1.x backend). calc_loss is a helper
    # defined elsewhere in the project.
    import keras.backend as K
    from keras.layers import Lambda, concatenate
    from tensorflow.contrib.distributions import Beta

    def adversarial_loss(netD, real, fake_abgr, distorted, gan_training="mixup_LSGAN", **weights):
        # Split the generator output into the alpha mask (channel 0) and the BGR image (channels 1:).
        alpha = Lambda(lambda x: x[:, :, :, :1])(fake_abgr)
        fake_bgr = Lambda(lambda x: x[:, :, :, 1:])(fake_abgr)
        # Masked output: blend the generated face with the distorted input using the mask.
        fake = alpha * fake_bgr + (1 - alpha) * distorted

        if gan_training == "mixup_LSGAN":
            dist = Beta(0.2, 0.2)
            lam = dist.sample()
            # Mix real and masked fake; every discriminator input is paired with `distorted`.
            mixup = lam * concatenate([real, distorted]) + (1 - lam) * concatenate([fake, distorted])
            pred_fake = netD(concatenate([fake, distorted]))
            pred_mixup = netD(mixup)
            # D target is lam: the same mix of label 1 (real) and label 0 (fake).
            loss_D = calc_loss(pred_mixup, lam * K.ones_like(pred_mixup), "l2")
            # G wants D to output 1 (real) on the masked fake.
            loss_G = weights['w_D'] * calc_loss(pred_fake, K.ones_like(pred_fake), "l2")
            # Same two terms again for the unmasked output fake_bgr.
            mixup2 = lam * concatenate([real, distorted]) + (1 - lam) * concatenate([fake_bgr, distorted])
            pred_fake_bgr = netD(concatenate([fake_bgr, distorted]))
            pred_mixup2 = netD(mixup2)
            loss_D += calc_loss(pred_mixup2, lam * K.ones_like(pred_mixup2), "l2")
            loss_G += weights['w_D'] * calc_loss(pred_fake_bgr, K.ones_like(pred_fake_bgr), "l2")
        elif gan_training == "relativistic_avg_LSGAN":
            real_pred = netD(concatenate([real, distorted]))
            fake_pred = netD(concatenate([fake, distorted]))  # masked output
            # Plain least-squares terms on the masked output.
            loss_D = K.mean(K.square(real_pred - K.ones_like(fake_pred))) / 2  # D: real -> 1
            loss_D += K.mean(K.square(fake_pred - K.zeros_like(fake_pred))) / 2  # D: fake -> 0
            loss_G = weights['w_D'] * K.mean(K.square(fake_pred - K.ones_like(fake_pred)))  # G: fake -> 1
            # Relativistic average terms on the unmasked output: each prediction is
            # compared with the batch mean of the opposite class.
            fake_pred2 = netD(concatenate([fake_bgr, distorted]))
            loss_D += K.mean(K.square(real_pred - K.mean(fake_pred2, axis=0) - K.ones_like(fake_pred2))) / 2
            loss_D += K.mean(K.square(fake_pred2 - K.mean(real_pred, axis=0) - K.zeros_like(fake_pred2))) / 2
            # G uses the same terms with the targets swapped.
            loss_G += weights['w_D'] * K.mean(K.square(real_pred - K.mean(fake_pred2, axis=0) - K.zeros_like(fake_pred2))) / 2
            loss_G += weights['w_D'] * K.mean(K.square(fake_pred2 - K.mean(real_pred, axis=0) - K.ones_like(fake_pred2))) / 2
        else:
            # The original string lacked the f prefix, so {gan_training} was never filled in.
            raise ValueError(f"Received an unknown GAN training method: {gan_training}")
        return loss_D, loss_G

3. LSGAN (least-squares GAN)

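A GAN (generative adversarial network) is mainly used for image generation, style transfer, and similar tasks. It consists of a generator network and a discriminator network, tied together by an objective (loss) function that sets them against each other. GAN variants differ mainly in that loss function.

A GAN is like police chasing thieves. It is a game: the police keep trying to tell thieves from ordinary people, and the thieves keep trying to pass as ordinary people without being noticed. When the game reaches a Nash equilibrium, the thieves behave exactly like ordinary people and the police can no longer tell whether a "thief" is a thief. At that point, can the "thief" still be called a thief?

In practice a Nash equilibrium of this game is hard to find directly, so we instead optimize the objective (loss) function with gradient-based methods.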

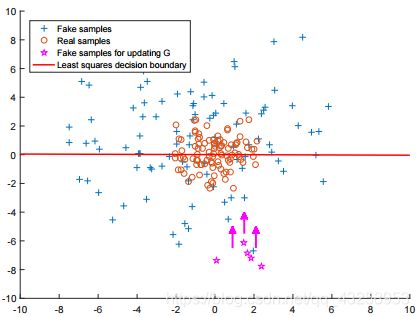

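In the original GAN, both the D network and the G network are updated with a simple cross-entropy loss. The least-squares GAN (LSGAN) updates them with a least-squares loss instead.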
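For reference, the least-squares objectives are usually written as below. This assumes the common label choice of 1 for real and 0 for fake, which matches the K.ones_like / K.zeros_like targets in adversarial_loss. Here x is a real sample and G(z) is a generated one.

```latex
\begin{aligned}
\min_D V(D) &= \tfrac{1}{2}\,\mathbb{E}_{x \sim p_{\text{data}}}\big[(D(x) - 1)^2\big]
             + \tfrac{1}{2}\,\mathbb{E}_{z \sim p_z}\big[D(G(z))^2\big] \\
\min_G V(G) &= \tfrac{1}{2}\,\mathbb{E}_{z \sim p_z}\big[(D(G(z)) - 1)^2\big]
\end{aligned}
```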

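Using a least-squares loss has two benefits. 1. It penalizes fake samples that lie far from the real data more heavily, which pulls the generated images closer to the real data (and makes them sharper). 2. Because such outliers still receive a large penalty, and therefore a useful gradient, it eases the under-training (instability) problem of the original GAN.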
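The outlier argument can be checked numerically. The sketch below is only an illustration and is not part of faceswap-GAN. It compares the gradient the generator would receive from a sigmoid cross-entropy loss with the one from a least-squares loss, as a function of the discriminator's raw score s for a fake sample. The function names are made up for this example.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def grad_cross_entropy(s):
    """d/ds of the generator loss -log(sigmoid(s)), where s is D's raw score."""
    return -(1.0 - sigmoid(s))

def grad_least_squares(s, target=1.0):
    """d/ds of the generator loss 0.5 * (s - target)**2."""
    return s - target

# Raw discriminator scores for four fake samples; larger means "looks more real".
scores = np.array([0.0, 1.0, 5.0, 10.0])
ce = grad_cross_entropy(scores)
ls = grad_least_squares(scores)

for s, g_ce, g_ls in zip(scores, ce, ls):
    print(f"score={s:5.1f}  cross-entropy grad={g_ce:+.5f}  least-squares grad={g_ls:+.5f}")
```

For a fake sample scored far on the "real" side (s = 10), the cross-entropy gradient is practically zero, while the least-squares gradient is still large and pulls the score back toward the target 1.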

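The drawback is just as clear: LSGAN's heavy penalty on outliers may reduce the diversity of the generated samples, which can end up as mere imitations of real samples with small modifications.

That said, LSGAN is a good fit for face swapping, where the change is mostly subtle anyway.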
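To connect this back to adversarial_loss, here is a minimal NumPy sketch of what its mixup_LSGAN branch computes for the masked output. It is an illustration under assumptions, not the project's code: toy_discriminator is a hypothetical stand-in for netD, l2_loss assumes that calc_loss(..., "l2") is a mean squared error, the tensors are random, and the w_D weight is left out.

```python
import numpy as np

rng = np.random.default_rng(0)

def l2_loss(pred, target):
    """Mean squared error (assumed equivalent of calc_loss(..., "l2"))."""
    return np.mean((pred - target) ** 2)

def toy_discriminator(x):
    """Hypothetical stand-in for netD: one score in (0, 1) per sample."""
    score = 1.0 / (1.0 + np.exp(-x.mean(axis=(1, 2, 3))))
    return score[:, None]

batch, h, w = 4, 8, 8
real = rng.uniform(-1, 1, (batch, h, w, 3))       # target face (BGR)
distorted = rng.uniform(-1, 1, (batch, h, w, 3))  # warped input face (BGR)
fake_abgr = rng.uniform(0, 1, (batch, h, w, 4))   # generator output: alpha + BGR

# Split the generator output into mask and image, then composite:
# where alpha is 1 the generated pixel is used, where it is 0 the input pixel is kept.
alpha = fake_abgr[..., :1]
fake_bgr = fake_abgr[..., 1:]
fake = alpha * fake_bgr + (1 - alpha) * distorted

# Mixup coefficient; Beta(0.2, 0.2) puts most of its mass near 0 and 1.
lam = rng.beta(0.2, 0.2)

# The discriminator sees each image concatenated with the distorted input (6 channels).
real_in = np.concatenate([real, distorted], axis=-1)
fake_in = np.concatenate([fake, distorted], axis=-1)
mixup = lam * real_in + (1 - lam) * fake_in

pred_mixup = toy_discriminator(mixup)
pred_fake = toy_discriminator(fake_in)

# D regresses toward lam, the mixed label; G pushes D's score on the fake toward 1.
loss_D = l2_loss(pred_mixup, lam * np.ones_like(pred_mixup))
loss_G = l2_loss(pred_fake, np.ones_like(pred_fake))
print(f"lam={lam:.4f}  loss_D={loss_D:.4f}  loss_G={loss_G:.4f}")
```

The function repeats the same two terms with fake_bgr in place of fake, so the discriminator also judges the generated image before masking.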