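Installing Ambari-2.4.1 and HDP-2.5.0 on CentOS 7 from a Local Repository

Preface

In most cases we install software on Linux online, for example with yum on CentOS or apt-get on Ubuntu. Sometimes, however, we need to install software where there is no network or the network is slow: a company cluster may well have no internet access, and some repositories are hosted abroad and download very slowly. A local repository solves this problem fairly well. This does not mean every piece of software should be turned into a local repository; this article only localizes the repositories needed to install Ambari and HDP.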

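1. Download the tarballs

First download the tarballs containing the required software to the local machine. Taking CentOS 7, Ambari 2.4.1 and HDP 2.5.0.0 as an example:

Ambari-2.4.1.0 tarball address: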

http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.4.1.0/ambari-2.4.1.0-centos7.tar.gz

HDP-2.5.0.0 tarball address:

http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.5.0.0/HDP-2.5.0.0-centos7-rpm.tar.gz

HDP-UTILS tarball address:

http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/centos7/HDP-UTILS-1.1.0.21-centos7.tar.gz

For other versions, see the following pages for the download addresses:

Ambari:

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/ambari_repositories.html

HDP and HDP-UTILS:

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/hdp_stack_repositories.html

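2. Set up the local repository

Before setting up the local repository, some preparation is needed:

- Choose a machine to act as the mirror server for the local repository. It must be reachable from the machines in the cluster and run a supported operating system.

- The mirror server has a package manager, for example yum on CentOS, apt-get on Ubuntu, or zypper on SLES.

2.1 Create an HTTP server

step 1 Install an HTTP server (for example Apache httpd) on the mirror server.

httpd can be installed as follows:

[root@master ~]# yum install httpd

Alternatively, download and install the binary package from: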

http://httpd.apache.org/download.cgi

After installation, the /var/www/ directory exists.

step 2 Start the web server.

step 3 Make sure the firewall allows requests from the nodes in the cluster.
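Steps 2 and 3 are given without commands. On CentOS 7, which uses systemd and firewalld by default, they might look like the sketch below. The `run` helper, the `DRY_RUN` variable and the function name `start_mirror_httpd` are illustrative names introduced here, not part of any tool. The sketch assumes firewalld is the active firewall; adapt it if iptables is managed directly.

```shell
#!/bin/sh
# Print the command instead of running it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Enable httpd at boot, start it now, then open the HTTP service in firewalld.
start_mirror_httpd() {
    run systemctl enable httpd &&
    run systemctl start httpd &&
    run firewall-cmd --permanent --add-service=http &&
    run firewall-cmd --reload
}
```

Run `start_mirror_httpd` as root on the mirror server; `DRY_RUN=1 start_mirror_httpd` only prints the four commands so they can be reviewed first.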

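2.2 Copy and extract the tarballs

Copy the tarballs downloaded earlier to the mirror server and extract them into the corresponding directories.

For the Ambari repo, extract into /var/www/html.

For HDP (and HDP-UTILS), extract into /var/www/html/hdp/.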

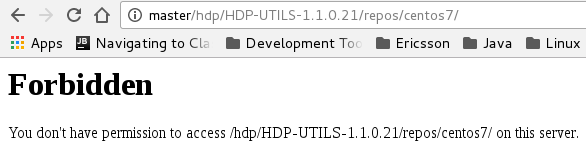
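The copy-and-extract step can be sketched as a small helper. `extract_repo_tarball` is a name made up for this example, and the tarball file names are the ones from section 1, assumed to be in the current directory.

```shell
#!/bin/sh
# Extract one repository tarball into a directory under the web root,
# creating the directory first if needed (hypothetical helper).
extract_repo_tarball() {
    tarball=$1
    dest=$2
    mkdir -p "$dest" && tar -xzf "$tarball" -C "$dest"
}

# On the mirror server, as root:
# extract_repo_tarball ambari-2.4.1.0-centos7.tar.gz /var/www/html
# extract_repo_tarball HDP-2.5.0.0-centos7-rpm.tar.gz /var/www/html/hdp
# extract_repo_tarball HDP-UTILS-1.1.0.21-centos7.tar.gz /var/www/html/hdp
```

Creating the target directory inside the helper avoids the common failure where /var/www/html/hdp does not exist yet when the HDP tarball is extracted.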

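2.3 Confirm the extracted directories are served

After extraction, confirm in a browser that the repositories are accessible at the following addresses:

Ambari Base URL:

http://<web.server>/Ambari-2.4.1.0/<OS>

HDP Base URL:

http://<web.server>/hdp/HDP/<OS>

HDP-UTILS Base URL:

http://<web.server>/hdp/HDP-UTILS-<version>/repos/<OS>

where <web.server> is the host name of the mirror server and <OS> is the operating system directory, for example centos7.

So my three addresses are: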

http://master/AMBARI-2.4.1.0/centos7/

http://master/hdp/HDP/centos7/

http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/

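If these addresses cannot be opened (for example with an HTTP 403 Forbidden error, as in Exception 2 below), the following command, run on the mirror server, can resolve the problem: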

[root@master centos7]# setenforce 0
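The same check can be made from the command line. A yum repository serves its metadata at repodata/repomd.xml under the base URL (the yum errors quoted in the exceptions at the end of this article request exactly that path), so fetching that file is a reasonable test. `repomd_url` and `check_repo` are illustrative names, and curl is assumed to be installed.

```shell
#!/bin/sh
# Build the metadata URL for a yum base URL, tolerating a trailing slash.
repomd_url() {
    echo "${1%/}/repodata/repomd.xml"
}

# Succeeds only if the web server answers the metadata request without an
# HTTP error (-f makes curl fail on 4xx/5xx responses such as 403).
check_repo() {
    curl -sfI "$(repomd_url "$1")" > /dev/null
}

# Example, using the addresses from this article:
# check_repo http://master/hdp/HDP/centos7/ && echo "HDP repo OK"
# check_repo http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/ && echo "HDP-UTILS repo OK"
```

Running the check from a cluster node rather than from the mirror server itself also exercises the firewall rule from section 2.1.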

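3. Install Ambari Server

Installing Ambari takes the following steps:

- Download the Ambari repository

- Set up the Ambari server

- Start the Ambari server

3.1 Configure the Ambari repository

step 1 Log in as root to the host on which the Ambari server is to be installed.

step 2 Prepare the Ambari repository configuration file.

The template content of ambari.repo is: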

#VERSION_NUMBER=2.4.1.0-22

[Updates-ambari-2.4.1.0]

name=ambari-2.4.1.0 - Updates

baseurl=INSERT-BASE-URL

gpgcheck=1

gpgkey=http://public-repo-1.hortonworks.com/ambari/centos7/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

In my case, ambari.repo was edited to the following:

#VERSION_NUMBER=2.4.1.0-22

[Updates-ambari-2.4.1.0]

name=ambari-2.4.1.0 - Updates

baseurl=http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22/

gpgcheck=1

gpgkey=http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

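priority=1

GPG checking can be disabled by setting gpgcheck=0; if it stays enabled, gpgkey can instead be pointed at the local repository. INSERT-BASE-URL is the URL of the local Ambari mirror set up earlier. In my case the mirror is on the CentOS 7 machine master, so my INSERT-BASE-URL is http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22/ and my gpgkey is http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins, as in the file above.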

Once ambari.repo has been edited, place it in /etc/yum.repos.d/.
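The file can also be generated from the base URL, so that the same snippet works on the server and on every agent host. This is only a sketch: `render_ambari_repo` is a name invented here, and the gpgkey location simply follows the layout of my example file above.

```shell
#!/bin/sh
# Print an ambari.repo that points at a local mirror (hypothetical helper).
# $1 is the base URL, e.g. http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22
render_ambari_repo() {
    base=${1%/}
    cat <<EOF
#VERSION_NUMBER=2.4.1.0-22
[Updates-ambari-2.4.1.0]
name=ambari-2.4.1.0 - Updates
baseurl=$base/
gpgcheck=1
gpgkey=$base/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
EOF
}

# As root:
# render_ambari_repo http://master/AMBARI-2.4.1.0/centos7/2.4.1.0-22 > /etc/yum.repos.d/ambari.repo
```

Printing to standard output instead of writing the file directly makes it easy to inspect the result before it lands in /etc/yum.repos.d/.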

With a network connection, the Ambari repository file can instead be downloaded directly and then modified:

wget -nv http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.4.1.0/ambari.repo -O /etc/yum.repos.d/ambari.repo

vi /etc/yum.repos.d/ambari.repo

The modifications are the same as above.

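3.2 Install Ambari server

yum install ambari-server

3.3 Set up Ambari server

Before starting the Ambari server, it must be set up with the following command:

ambari-server setup

The same command can be used later to change the JDK directory: during setup you are asked to choose a JDK; select Custom JDK and enter the new JDK directory.

3.4 Start Ambari server

Start command: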

ambari-server start

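Check the server status:

ambari-server status

Stop the server:

ambari-server stop

When the Ambari server starts, Ambari runs a database consistency check to detect problems. If it finds any, the server start is aborted and the console prints:

DB configs consistency check failed.

More detailed information is written to the following log file:

/var/log/ambari-server/ambari-server-check-database.log

In that case, the server can be force-started, skipping this check: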

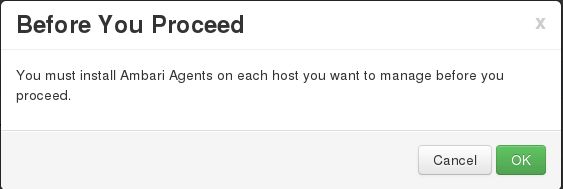

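ambari-server start --skip-database-check

4. Install Ambari Agent

Installing the Ambari agent takes two steps:

- Download the Ambari repo

- Install the Ambari agent

4.1 Download the Ambari repo

step 1 Log in as root to the host on which the Ambari agent is to be installed. (This is the same as step 1 of installing the Ambari server.)

step 2 Download the Ambari repository to the host. (This is the same as step 2 of installing the Ambari server: same command, same Ambari repo.)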

wget -nv http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.4.1.0/ambari.repo -O /etc/yum.repos.d/ambari.repo

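Note: without a network connection, the command above cannot run. Since this article is about installing Ambari from a local repository, we can edit /etc/yum.repos.d/ambari.repo directly instead; the content is the same as described earlier, see section 3.1 (configuring the Ambari repository).

4.2 Install Ambari agent (same as installing the Ambari server, with agent in place of server)

yum install ambari-agent

4.3 Set the Ambari server host name

Edit the ambari-agent configuration file and set the host name of the machine where the Ambari server was installed: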

vi /etc/ambari-agent/conf/ambari-agent.ini

[server]

hostname=<your.ambari.server.hostname>

url_port=8440

secured_url_port=8441

4.4 Start ambari agent

ambari-agent start

Related commands:

ambari-agent status # check whether the agent is running

ambari-agent stop # stop the agent
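On a cluster with many agents, the host name edit from section 4.3 can be scripted rather than done in vi. A minimal sketch, assuming the `hostname=` key appears only in the `[server]` section as in the excerpt above; `set_agent_server_host` is an illustrative name.

```shell
#!/bin/sh
# Rewrite the hostname= line of an ambari-agent.ini.
# $1 is the Ambari server host name, $2 the path to the ini file.
set_agent_server_host() {
    # Write to a temporary file, then copy back so the original file
    # keeps its owner and permissions.
    sed "s/^hostname=.*/hostname=$1/" "$2" > "$2.tmp" &&
    cat "$2.tmp" > "$2" &&
    rm -f "$2.tmp"
}

# As root on each agent host, before starting the agent:
# set_agent_server_host master /etc/ambari-agent/conf/ambari-agent.ini
```

The pattern is anchored at the start of the line, so other keys that merely contain the word hostname are left untouched.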

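5. Install HDP

Open a browser and use the Ambari install wizard to install, configure and deploy your cluster. The steps are:

- Log in to Apache Ambari

- Name the cluster

- Select version

- Install options

- Confirm hosts

- Choose services

- Assign Masters

- Assign Slaves and Clients

- Customize services

- Review

- Install, start and test

- Complete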

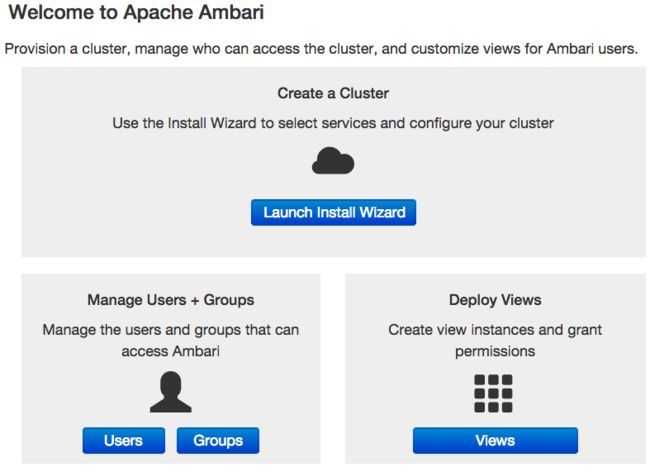

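5.1 Log in to Apache Ambari

After starting the Ambari server, open Ambari Web in a web browser. Enter the following in the address bar:

http://{your.ambari.server}:8080

where {your.ambari.server} is the host name of the machine running your Ambari server. For example, my Ambari server's host name is master, so I enter:

http://master:8080

Once the page loads, log in with the default user name and password, admin/admin; the password can be changed later.

For a new cluster, the Ambari install wizard displays a welcome page.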

5.2 Launch the Ambari install wizard

5.3 Name the cluster

Enter the name of the cluster in the text box.

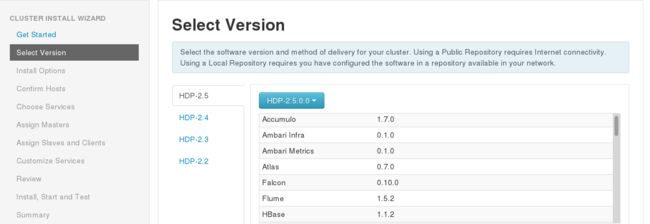

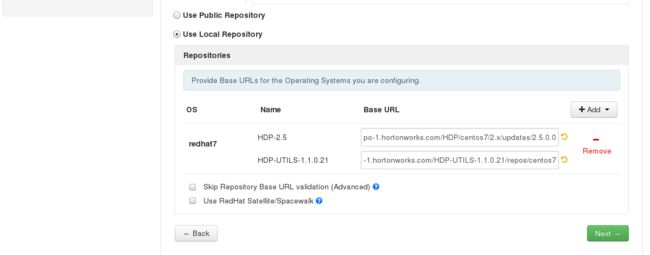

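5.4 Select version

Select "Use Local Repository" here to install from the local repository, then find your operating system. Mine is CentOS 7, which corresponds to the redhat7 entry; remove all the other entries. If you are not sure which OS entry matches your system, see: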

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/select_version.html

Then fill in the corresponding URLs, which are the local repository addresses set up earlier.

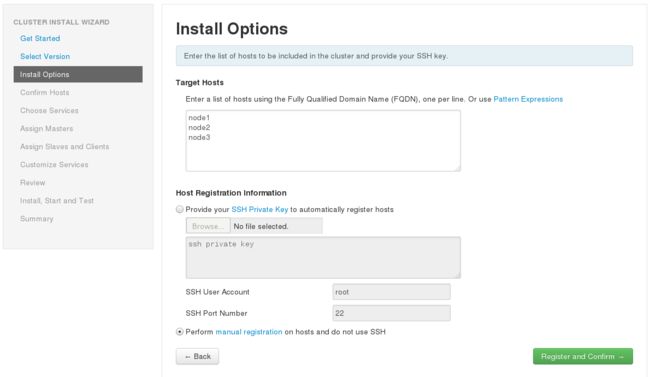

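5.5 Install options

In "Target Hosts", enter the host names, one per line. Each must be a fully qualified domain name (FQDN).

Under "Host Registration Information" I used the second option, that is, registering without SSH. Note that this option requires ambari-agent to be installed and running on every host beforehand.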

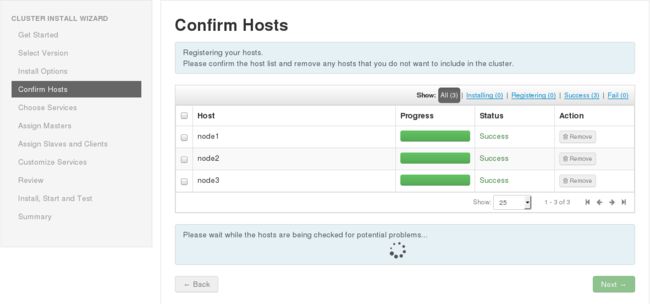

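5.6 Confirm hosts

Wait for validation. This step mainly checks the environment of each host; if it reports problems, fix them as indicated. They typically concern the JDK, directories, the firewall and the like. See the official documentation: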

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/prepare_the_environment.html

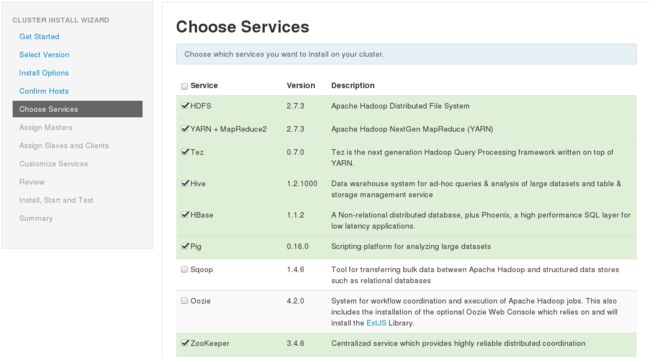

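5.7 Choose services

Choose the services you need. Services here means the individual components, such as HBase, Hive and Spark.

Services not selected now can still be added later, so choose as you see fit.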

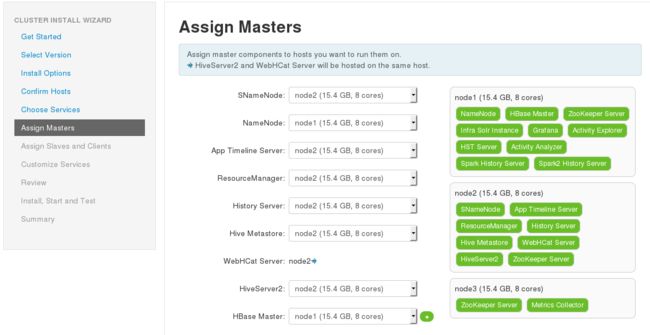

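5.8 Assign Masters

This step chooses where the master component of each service is installed; for example, when installing HDFS, which node its master (the NameNode) should run on, and so on.

5.8 Assign slaves and clients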

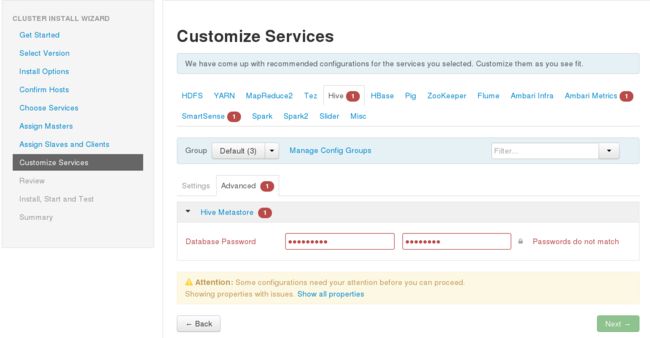

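5.9 Customize services

This step configures the services being installed. Many settings can keep their defaults, but some must be entered by hand, such as the password of the Hive database: Hive stores its metadata in a relational database, so installing Hive also installs a relational database automatically, and the password asked for here is the password of that database. The red numbers mark the settings that still require manual input. Then go to the next step.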

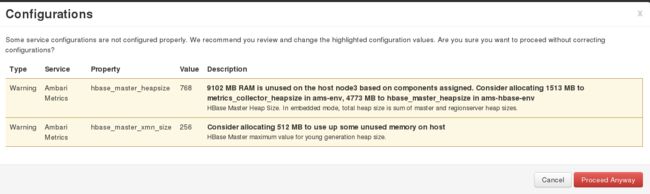

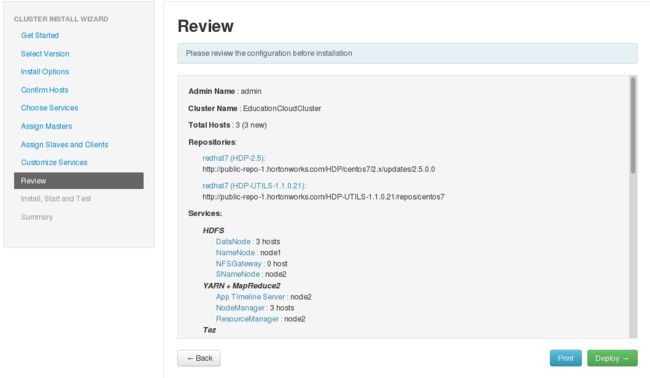

5.10 Review

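5.11 Install, start and test

This step installs the services on each host and starts them. Many problems can occur here; usually you fix the reported error and then retry. The problems I ran into are listed at the end of this article for reference; most of them are repository problems.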

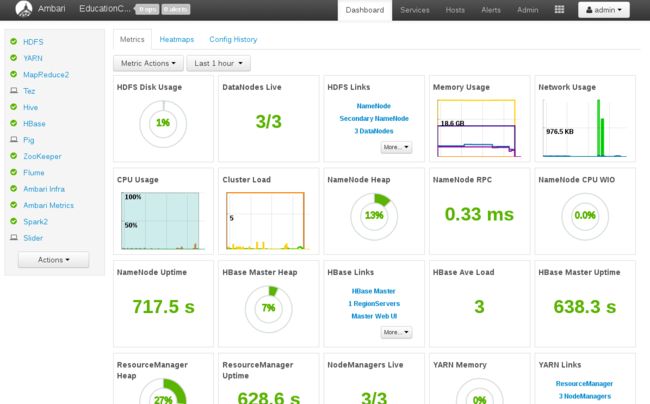

After success, the dashboard is displayed:

If a service turns out to have problems, you can delete that service and install it again.

Exceptions

Exception 1

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-INSTALL/scripts/hook.py", line 37, in <module>

BeforeInstallHook().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-INSTALL/scripts/hook.py", line 34, in hook

install_packages()

File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-INSTALL/scripts/shared_initialization.py", line 37, in install_packages

retry_count=params.agent_stack_retry_count)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 54, in action_install

self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 49, in install_package

self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 83, in checked_call_with_retries

return self._call_with_retries(cmd, is_checked=True, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 91, in _call_with_retries

code, out = func(cmd, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of '/usr/bin/yum -d 0 -e 0 -y install hdp-select' returned 1. One of the configured repositories failed (HDP-2.5),

and yum doesn't have enough cached data to continue. At this point the only

safe thing yum can do is fail. There are a few ways to work "fix" this:

1. Contact the upstream for the repository and get them to fix the problem.

2. Reconfigure the baseurl/etc. for the repository, to point to a working

upstream. This is most often useful if you are using a newer

distribution release than is supported by the repository (and the

packages for the previous distribution release still work).

3. Disable the repository, so yum won't use it by default. Yum will then

just ignore the repository until you permanently enable it again or use

--enablerepo for temporary usage:

yum-config-manager --disable HDP-2.5

4. Configure the failing repository to be skipped, if it is unavailable.

Note that yum will try to contact the repo. when it runs most commands,

so will have to try and fail each time (and thus. yum will be be much

slower). If it is a very temporary problem though, this is often a nice

compromise:

yum-config-manager --save --setopt=HDP-2.5.skip_if_unavailable=true

failure: repodata/repomd.xml from HDP-2.5: [Errno 256] No more mirrors to try.

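http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.5.0.0/repodata/repomd.xml: [Errno 14] curl#6 - "Could not resolve host: public-repo-1.hortonworks.com; Name or service not known"

Solution: switch the repository to the local one. See the repository instructions above for editing ambari.repo, and make sure the HDP base URLs entered in the wizard (section 5.4) point to the local mirror rather than public-repo-1.hortonworks.com.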
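To find which repository files still point at the public servers, it can help to list every baseurl that yum will use. A small sketch; `list_baseurls` is a made-up name, and /etc/yum.repos.d is the standard yum configuration directory.

```shell
#!/bin/sh
# Print "file:baseurl=..." for every baseurl line in a yum repo directory.
# $1 defaults to /etc/yum.repos.d.
list_baseurls() {
    grep -H '^baseurl=' "${1:-/etc/yum.repos.d}"/*.repo
}

# Any line still mentioning public-repo-1.hortonworks.com needs to be
# switched to the local mirror:
# list_baseurls | grep public-repo-1.hortonworks.com
```

On a correctly configured host the HDP and HDP-UTILS entries should show the mirror addresses from section 2.3.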

Error downloading packages:

hadoop_2_5_0_0_1245-2.7.3.2.5.0.0-1245.el6.x86_64: [Errno 256] No more mirrors to try.

zookeeper_2_5_0_0_1245-3.4.6.2.5.0.0-1245.el6.noarch: [Errno 256] No more mirrors to try.

spark_2_5_0_0_1245-yarn-shuffle-1.6.2.2.5.0.0-1245.el6.noarch: [Errno 256] No more mirrors to try.

spark2_2_5_0_0_1245-yarn-shuffle-2.0.0.2.5.0.0-1245.el6.noarch: [Errno 256] No more mirrors to try.

mailcap-2.1.41-2.el7.noarch: [Errno 256] No more mirrors to try.

Solution: this is also a repository problem. Clear the yum cache and update:

yum clean all

yum update

Exception 2

Failed to execute command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm; Exit code: 1; stdout: Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

No package smartsense-hst available.

; stderr: http://master/hdp/HDP/centos7/repodata/repomd.xml: [Errno 14] HTTP Error 403 - Forbidden

Trying other mirror.

To address this issue please refer to the below knowledge base article

https://access.redhat.com/solutions/69319

If above article doesn't help to resolve this issue please create a bug on https://bugs.centos.org/

http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/repodata/repomd.xml: [Errno 14] HTTP Error 403 - Forbidden

Trying other mirror.

Error: Nothing to do

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

stdout: /var/lib/ambari-agent/data/output-8245.txt

2016-11-14 14:20:22,975 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-11-14 14:20:22,975 - Group['spark'] {}

2016-11-14 14:20:22,995 - Group['hadoop'] {}

2016-11-14 14:20:22,995 - Group['users'] {}

2016-11-14 14:20:22,995 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:22,995 - Adding user User['hive']

2016-11-14 14:20:23,631 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:23,632 - Adding user User['zookeeper']

2016-11-14 14:20:24,248 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:24,249 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:24,249 - Adding user User['ams']

2016-11-14 14:20:24,949 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2016-11-14 14:20:24,949 - Adding user User['tez']

2016-11-14 14:20:25,566 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:25,566 - Adding user User['spark']

2016-11-14 14:20:26,200 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2016-11-14 14:20:26,200 - Adding user User['ambari-qa']

2016-11-14 14:20:26,827 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:26,827 - Adding user User['flume']

2016-11-14 14:20:27,533 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:27,534 - Adding user User['hdfs']

2016-11-14 14:20:28,260 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:28,260 - Adding user User['yarn']

2016-11-14 14:20:28,927 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:28,927 - Adding user User['mapred']

2016-11-14 14:20:29,553 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:29,554 - Adding user User['hbase']

2016-11-14 14:20:30,170 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2016-11-14 14:20:30,170 - Adding user User['hcat']

2016-11-14 14:20:31,087 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-11-14 14:20:31,130 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2016-11-14 14:20:31,135 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2016-11-14 14:20:31,135 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2016-11-14 14:20:31,136 - Changing owner for /tmp/hbase-hbase from 1013 to hbase

2016-11-14 14:20:31,136 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-11-14 14:20:31,137 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2016-11-14 14:20:31,140 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2016-11-14 14:20:31,140 - Group['hdfs'] {}

2016-11-14 14:20:31,141 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}

2016-11-14 14:20:31,141 - Modifying user hdfs

2016-11-14 14:20:32,157 - FS Type:

2016-11-14 14:20:32,158 - Directory['/etc/hadoop'] {'mode': 0755}

2016-11-14 14:20:32,158 - Creating directory Directory['/etc/hadoop'] since it doesn't exist.

2016-11-14 14:20:32,159 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2016-11-14 14:20:32,160 - Changing owner for /var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir from 1013 to hdfs

2016-11-14 14:20:32,184 - Initializing 2 repositories

2016-11-14 14:20:32,185 - Repository['HDP-2.5'] {'base_url': 'http://master/hdp/HDP/centos7/', 'action': ['create'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP', 'mirror_list': None}

2016-11-14 14:20:32,250 - File['/etc/yum.repos.d/HDP.repo'] {'content': '[HDP-2.5]\nname=HDP-2.5\nbaseurl=http://master/hdp/HDP/centos7/\n\npath=/\nenabled=1\ngpgcheck=0'}

2016-11-14 14:20:32,251 - Writing File['/etc/yum.repos.d/HDP.repo'] because contents don't match

2016-11-14 14:20:32,251 - Repository['HDP-UTILS-1.1.0.21'] {'base_url': 'http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/', 'action': ['create'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP-UTILS', 'mirror_list': None}

2016-11-14 14:20:32,254 - File['/etc/yum.repos.d/HDP-UTILS.repo'] {'content': '[HDP-UTILS-1.1.0.21]\nname=HDP-UTILS-1.1.0.21\nbaseurl=http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/\n\npath=/\nenabled=1\ngpgcheck=0'}

2016-11-14 14:20:32,254 - Writing File['/etc/yum.repos.d/HDP-UTILS.repo'] because contents don't match

2016-11-14 14:20:32,254 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2016-11-14 14:20:33,429 - Skipping installation of existing package unzip

2016-11-14 14:20:33,429 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2016-11-14 14:20:33,438 - Skipping installation of existing package curl

2016-11-14 14:20:33,438 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2016-11-14 14:20:33,445 - Skipping installation of existing package hdp-select

installing using command: {sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Exit code: 1

Std Out: Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

No package smartsense-hst available.

Std Err: http://master/hdp/HDP/centos7/repodata/repomd.xml: [Errno 14] HTTP Error 403 - Forbidden

Trying other mirror.

To address this issue please refer to the below knowledge base article

https://access.redhat.com/solutions/69319

If above article doesn't help to resolve this issue please create a bug on https://bugs.centos.org/

http://master/hdp/HDP-UTILS-1.1.0.21/repos/centos7/repodata/repomd.xml: [Errno 14] HTTP Error 403 - Forbidden

Trying other mirror.

Error: Nothing to do

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Command failed after 1 tries

Solution: run the following command on the machine that serves the mirror:

[root@master centos7]# setenforce 0

Reference:

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/disable_selinux_and_packagekit_and_check_the_umask_value.html
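Note that `setenforce 0` only switches SELinux to permissive mode until the next reboot. To keep the setting, the SELINUX line in /etc/selinux/config has to be changed as well. A sketch, with `set_selinux_mode` as an illustrative name; whether to use permissive or disabled is a decision for your environment.

```shell
#!/bin/sh
# Set the SELINUX= line of an SELinux config file.
# $1 is the mode (permissive or disabled); $2 is the file,
# which defaults to /etc/selinux/config.
set_selinux_mode() {
    file=${2:-/etc/selinux/config}
    # Copy the result back into place so the file keeps its
    # owner, permissions and security context.
    sed "s/^SELINUX=.*/SELINUX=$1/" "$file" > "$file.tmp" &&
    cat "$file.tmp" > "$file" &&
    rm -f "$file.tmp"
}

# As root on the mirror server:
# setenforce 0
# set_selinux_mode permissive
```

The pattern matches only the SELINUX= line, so the SELINUXTYPE= line in the same file is left as it is.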

Exception 3

Failed to execute command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm; Exit code: 1; stdout: Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

No package smartsense-hst available.

; stderr: Error: Nothing to do

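error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Cause: the smartsense package is shipped in the Ambari repository, so check that the Ambari repo is working. If you are using a local repository, check its base URL.

Exception 4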

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_client.py", line 82, in <module>

HbaseClient().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_client.py", line 37, in install

self.configure(env)

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_client.py", line 42, in configure

hbase(name='client')

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase.py", line 120, in hbase

group=params.user_group

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/providers/xml_config.py", line 66, in action_create

encoding = self.resource.encoding

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 120, in action_create

raise Fail("Applying %s failed, parent directory %s doesn't exist" % (self.resource, dirname))

resource_management.core.exceptions.Fail: Applying File['/usr/hdp/current/hadoop-client/conf/hdfs-site.xml'] failed, parent directory /usr/hdp/current/hadoop-client/conf doesn't exist

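Cause: probably a previous installation attempt failed partway and left files on the file system, which breaks subsequent retries.

Solution: run the following command

yum -y erase hdp-select

Reference: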

https://community.hortonworks.com/questions/6470/usrhdpcurrenthadoop-clientconf-doesnt-exist-error.html

Exception 5

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/mysql_server.py", line 64, in <module>

MysqlServer().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/mysql_server.py", line 33, in install

self.install_packages(env)

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 567, in install_packages

retry_count=agent_stack_retry_count)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 54, in action_install

self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 49, in install_package

self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 83, in checked_call_with_retries

return self._call_with_retries(cmd, is_checked=True, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 91, in _call_with_retries

code, out = func(cmd, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of '/usr/bin/yum -d 0 -e 0 -y install mysql-community-server' returned 1. One of the configured repositories failed (MySQL Connectors Community),

and yum doesn't have enough cached data to continue. At this point the only

safe thing yum can do is fail. There are a few ways to work "fix" this:

1. Contact the upstream for the repository and get them to fix the problem.

2. Reconfigure the baseurl/etc. for the repository, to point to a working

upstream. This is most often useful if you are using a newer

distribution release than is supported by the repository (and the

packages for the previous distribution release still work).

3. Disable the repository, so yum won't use it by default. Yum will then

just ignore the repository until you permanently enable it again or use

--enablerepo for temporary usage:

yum-config-manager --disable mysql-connectors-community

4. Configure the failing repository to be skipped, if it is unavailable.

Note that yum will try to contact the repo. when it runs most commands,

so will have to try and fail each time (and thus. yum will be be much

slower). If it is a very temporary problem though, this is often a nice

compromise:

yum-config-manager --save --setopt=mysql-connectors-community.skip_if_unavailable=true

failure: repodata/repomd.xml from mysql-connectors-community: [Errno 256] No more mirrors to try.

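http://repo.mysql.com/yum/mysql-connectors-community/el/7/x86_64/repodata/repomd.xml: [Errno 14] curl#6 - "Could not resolve host: repo.mysql.com; Name or service not known"

Cause: the last two lines show that yum failed to fetch repodata/repomd.xml for the mysql-connectors-community repository because repo.mysql.com could not be resolved. In my case the newly added repositories had apparently not been fully recognized yet, and rebuilding the yum cache resolved the problem:

[root@node2 Shell]# yum makecache

If this still does not solve the problem, try installing MySQL community server and client manually. Install the client first, then the server. The download addresses are: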

http://repo.mysql.com/yum/mysql-5.6-community/el/7/x86_64/mysql-community-server-5.6.34-2.el7.x86_64.rpm

http://repo.mysql.com/yum/mysql-5.6-community/el/7/x86_64/mysql-community-client-5.6.34-2.el7.x86_64.rpm

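Open question

The official guide describes using a local repository, so why does the installation still need to download MySQL from the official MySQL repository? Shouldn't these MySQL rpm packages be bundled with Ambari?

Exception 6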

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 174, in <module>

DataNode().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 49, in install

self.install_packages(env)

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 567, in install_packages

retry_count=agent_stack_retry_count)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 54, in action_install

self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 49, in install_package

self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 83, in checked_call_with_retries

return self._call_with_retries(cmd, is_checked=True, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 91, in _call_with_retries

code, out = func(cmd, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of '/usr/bin/yum -d 0 -e 0 -y install hadoop_2_5_0_0_1245' returned 1. No Presto metadata available for base

Error downloading packages:

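mailcap-2.1.41-2.el7.noarch: [Errno 256] No more mirrors to try.

Cause: "No more mirrors to try" clearly points to a repository problem. Since the HDP repositories have already been made local and should be fine, refresh the yum metadata with the following commands:

yum clean all

yum update

Exception 7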

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/FLUME/1.4.0.2.0/package/scripts/flume_check.py", line 49, in <module>

FlumeServiceCheck().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/FLUME/1.4.0.2.0/package/scripts/flume_check.py", line 46, in service_check

try_sleep = 20)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'env JAVA_HOME=/usr/java/jdk1.8.0_111 /usr/hdp/current/flume-server/bin/flume-ng version' returned 127. env: /usr/hdp/current/flume-server/bin/flume-ng: No such file or directory

Exception 8

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_regionserver.py", line 166, in <module>

HbaseRegionServer().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_regionserver.py", line 92, in start

hbase_service('regionserver', action='start')

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_service.py", line 41, in hbase_service

user = params.hbase_user

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of '/usr/hdp/current/hbase-regionserver/bin/hbase-daemon.sh --config /usr/hdp/current/hbase-regionserver/conf start regionserver' returned 127. -bash: /usr/hdp/current/hbase-regionserver/bin/hbase-daemon.sh: No such file or directory

Exception 9

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_client.py", line 82, in <module>

HbaseClient().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HBASE/0.96.0.2.0/package/scripts/hbase_client.py", line 36, in install

self.install_packages(env)

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 567, in install_packages

retry_count=agent_stack_retry_count)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 54, in action_install

self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 49, in install_package

self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 83, in checked_call_with_retries

return self._call_with_retries(cmd, is_checked=True, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 91, in _call_with_retries

code, out = func(cmd, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of '/usr/bin/yum -d 0 -e 0 -y install hbase_2_5_0_0_1245' returned 1. Error unpacking rpm package hbase_2_5_0_0_1245-1.1.2.2.5.0.0-1245.el6.noarch

error: unpacking of archive failed on file /usr/hdp/2.5.0.0-1245/hbase/conf: cpio: rename failed - Is a directory

Solution: delete the existing conf directory on the affected node so that the package can be unpacked:

[root@node2 hbase-client]# cd /usr/hdp/2.5.0.0-1245/hbase/

[root@node2 hbase]# rm -rf conf/
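A slightly more cautious variant of the step above, as a minimal sketch rather than an official procedure: instead of deleting the directory outright, it moves it aside so its contents can still be inspected afterwards. The function name `move_stale_dir` and the `.bak.<timestamp>` suffix are assumptions made for illustration and are not part of Ambari or HDP. The sketch relies on the general rpm behaviour that "cpio: rename failed - Is a directory" is reported when a real directory occupies a path where the package wants to place a non-directory entry.

```shell
#!/usr/bin/env bash
# Sketch: move a leftover real directory out of the way so that rpm can
# unpack its own entry at that path. Name and backup suffix are illustrative.
move_stale_dir() {
    # Strip a trailing slash so that the symlink test looks at the link itself.
    local target="${1%/}"

    # A symlink is left alone: only a real directory blocks the unpack step.
    if [ -L "$target" ]; then
        echo "skip: $target is a symlink"
        return 0
    fi

    if [ -d "$target" ]; then
        local backup
        backup="${target}.bak.$(date +%s)"
        # Returns the exit status of mv if the move fails.
        mv "$target" "$backup" && echo "moved: $target -> $backup"
        return $?
    fi

    echo "skip: $target is not a directory"
}

# Usage on the affected node (path and package name taken from the error above):
#   move_stale_dir /usr/hdp/2.5.0.0-1245/hbase/conf
#   yum -y install hbase_2_5_0_0_1245
```

After the directory has been moved, retrying the failed install step from the Ambari UI (or running the `yum` command shown in the error message by hand) should let the package unpack. The backup can be removed once the service starts correctly.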

The installation procedure in this article is based mainly on:

http://docs.hortonworks.com/HDPDocuments/Ambari-2.4.1.0/bk_ambari-installation/content/ch_Getting_Ready.html