Python Crawlers ~ Prison Oriented Programming.

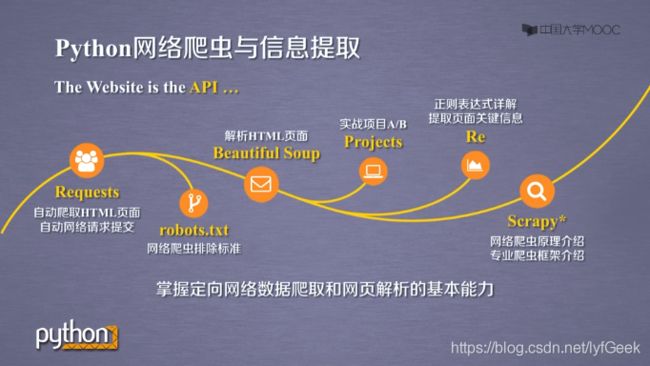

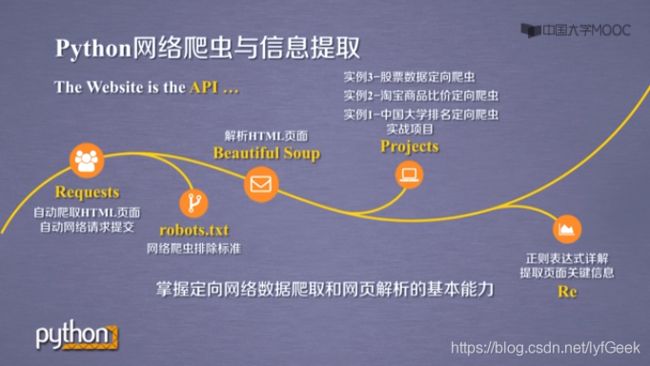

Python Web Crawling and Information Extraction.

文章目录

-

- Python 网络爬虫与信息提取。

-

- 工具。

- Requests 库。

-

-

- 安装。

- 使用。

- Requests 库主要方法。

-

- requests.request()

- GET() 方法。

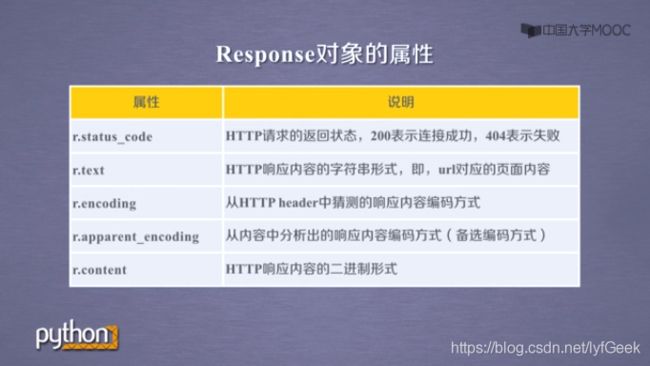

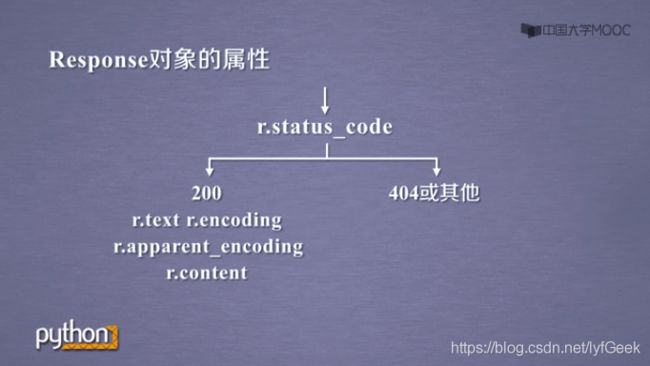

- Requests 库两个重要对象。

-

- Requewst 对象包含向服务器请求的资源。

- Response 对象返回包含爬虫返回的内容。

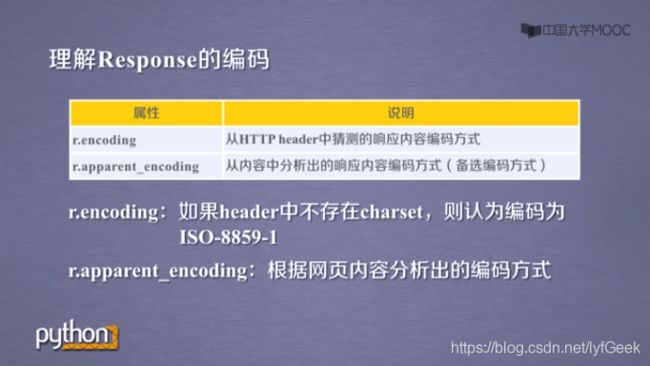

- 编码。

-

- r.encoding

- r.apparent_encoding

- 爬取网页的通用代码框架。

-

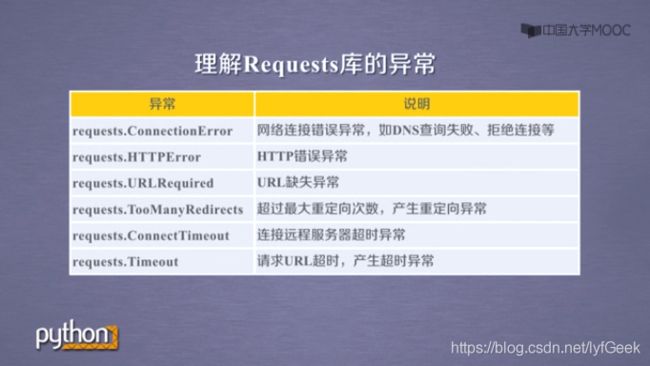

- 异常。

- Requests 库很重要。

- Response 对象。r.raise_for_status()。

- 通用代码。

-

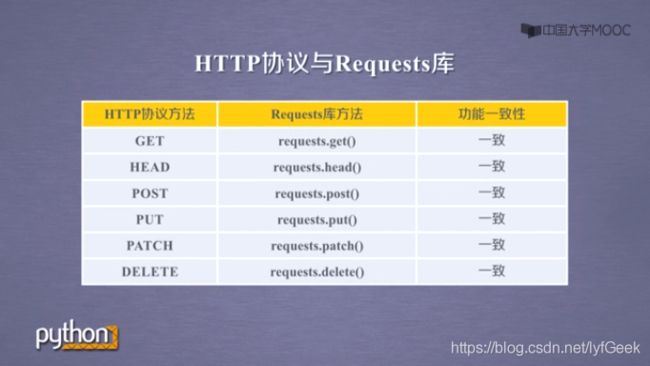

- HTTP 协议及 Requests 库方法。

-

-

- HTTP, Hypertext Transfer Protocol,超文本传输协议。

-

- HTTP 协议对资源的操作。

- PATCH 和 PUT 的区别。

- HTTP 协议与 Requests 库。

-

- Requests 库的 post() 方法。

- Requests 库的 put() 方法。

- Requests 库主要方法解析。13 个。

-

- def request(method, url, **kwargs):

- requests.get(url, params=None, **kwargs)

- requests.head(url, **kwargs)

- requests.post(url, data=None, json=None, **kwargs)

- requests.put(url, data=None, **kwargs)

- requests.patch(url, data=None, **kwargs)

- requests.delete(url, **kwargs)

-

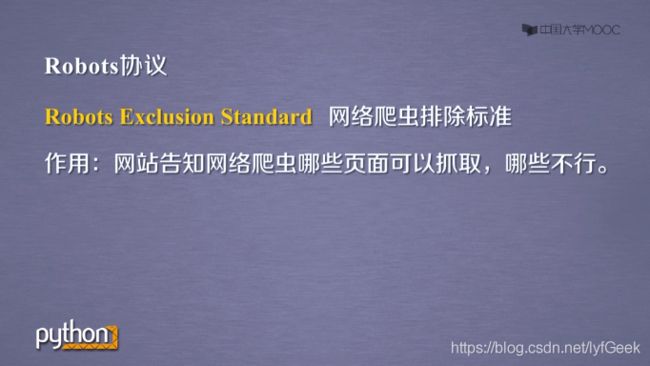

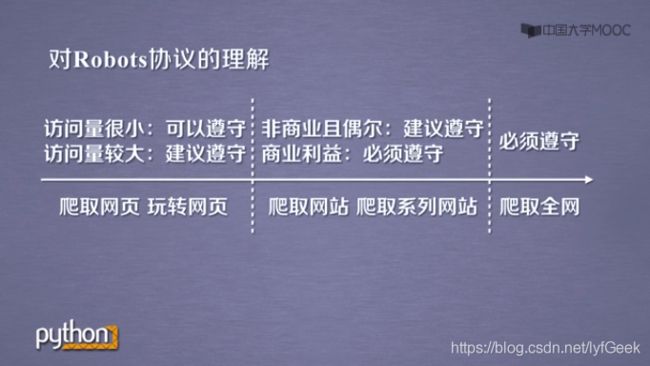

- robots.txt

-

-

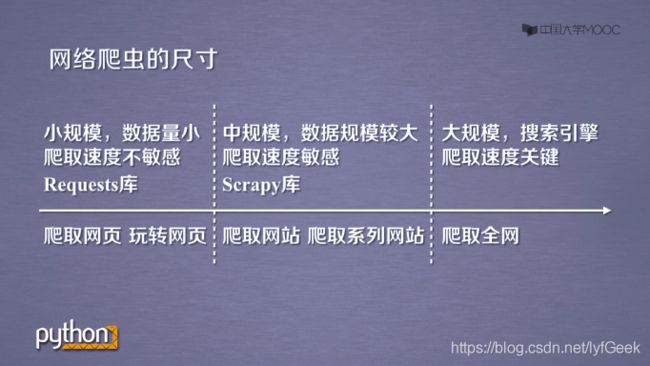

- 网络爬虫引发的问题。

- 对网络爬虫采取限制。

-

- 来源审查:判断 User-Agent 进行限制。

- 发布公告:Robots 协议。

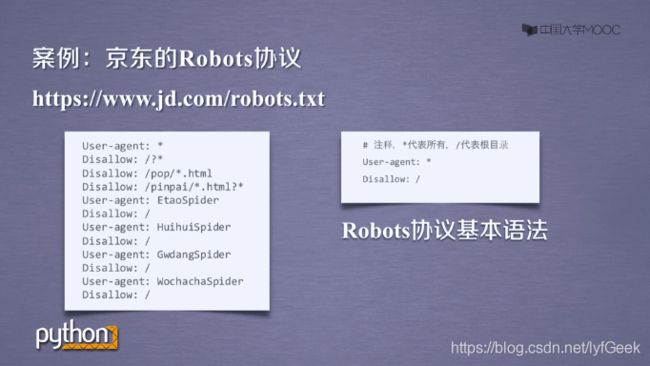

- Robots 协议。

-

- https://www.jd.com/robots.txt

- Robots 协议的遵守方式。

-

- Robots 协议的使用。

-

- 实例~京东。

- 实例~亚马逊。

- 实例~百度、360。

- 实例~网络图片的爬取和存储。

- 实例~IP 地址归属地的自动查询。

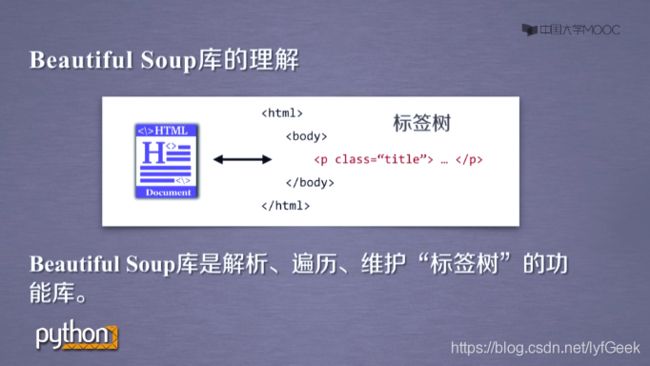

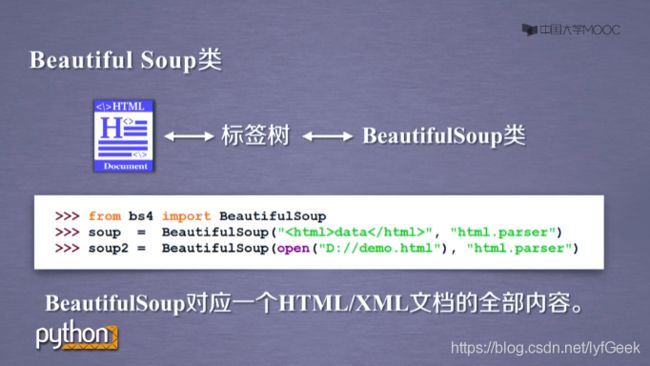

- Beautiful Soup。

-

-

- Beautiful Soup 库的安装。

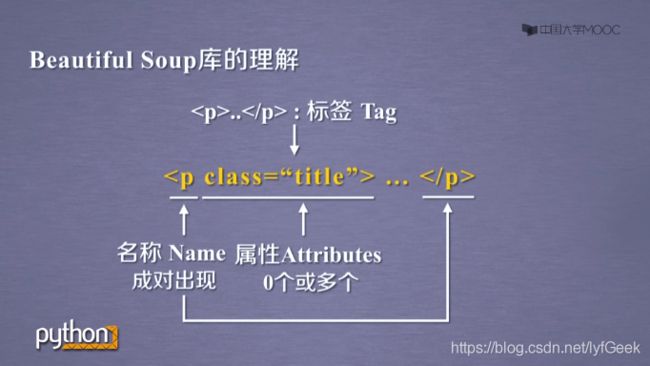

- Beautiful Soup 库的基本元素。

- Beautiful Soup 库的引用。

- Beautiful Soup 库解析器。

- Beautiful Soup 类的基本元素。

- 小结。

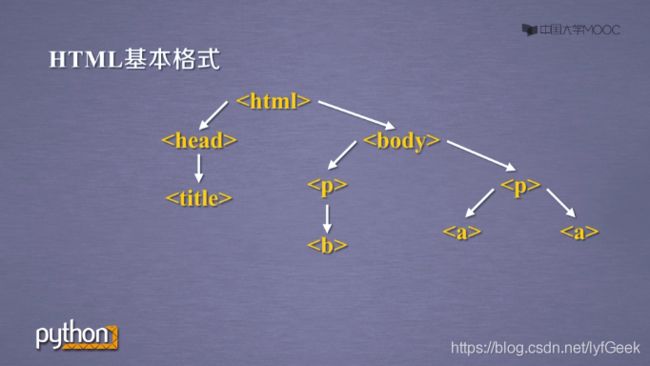

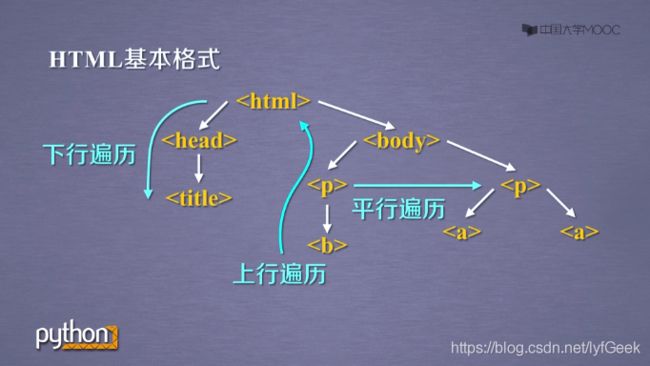

- 基于 bs4 库的 HTML 内容遍历方法。

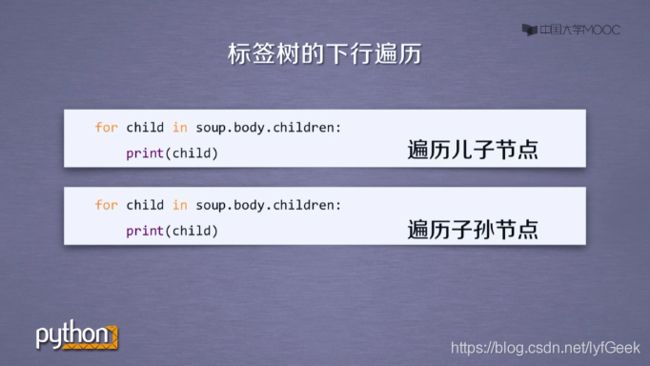

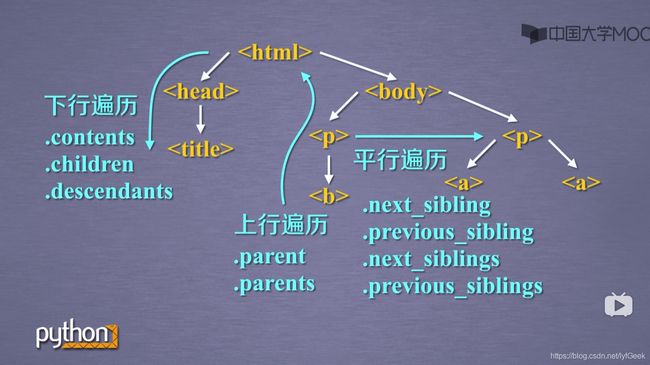

- 遍历方式。

-

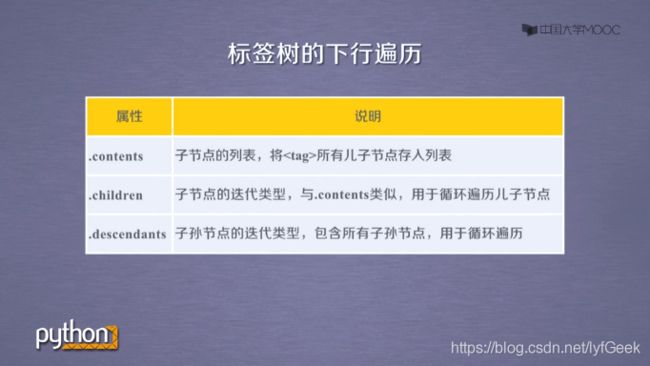

- 标签树的下行遍历。

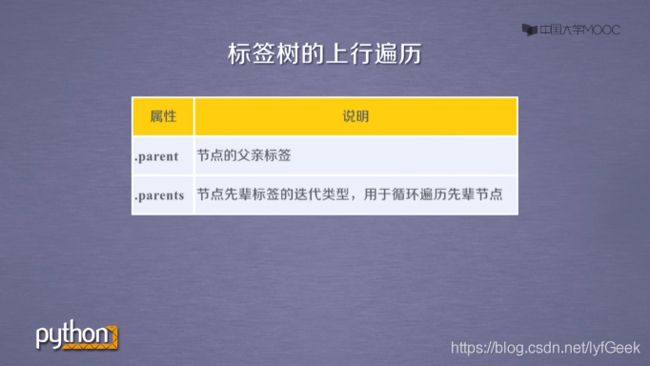

- 标签树的上行遍历。

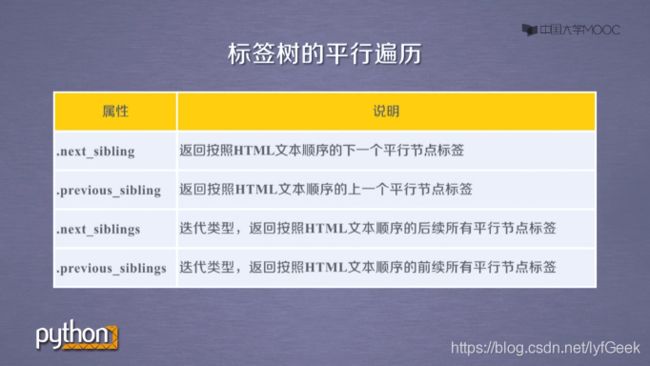

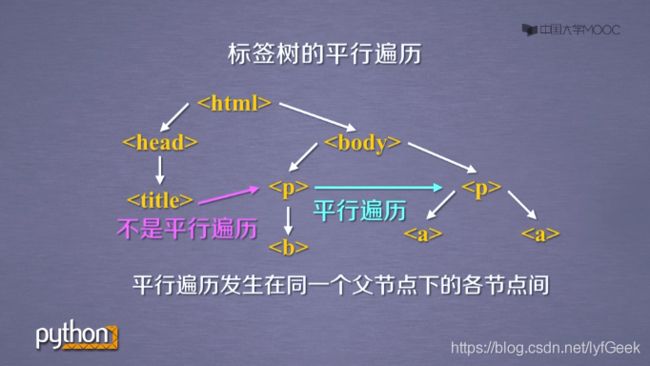

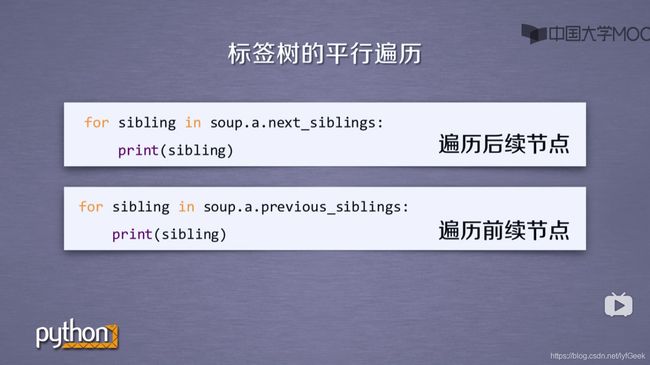

- 标签树的平行遍历。

- 小结。

- 基于 bs4 库的 HTML 格式输出。

-

- 编码。Python3 默认使用 `UTF-8`。

-

- 信息提取。

-

-

- 信息标记的三种形式。

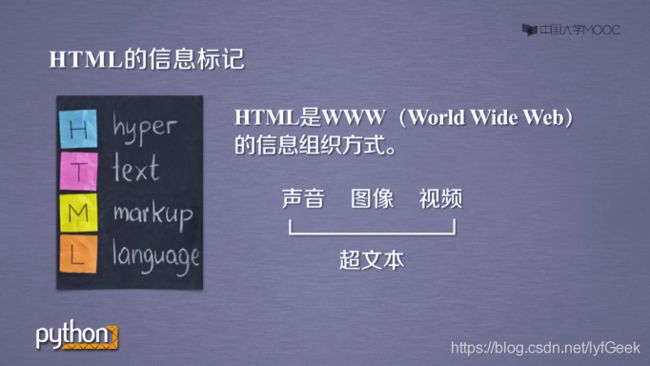

- HTML 的信息标记。

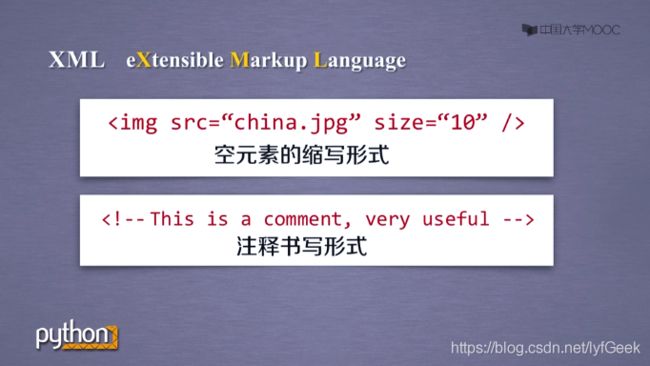

- XML。

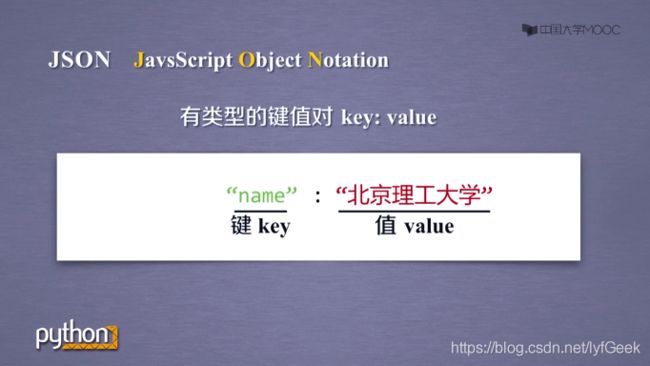

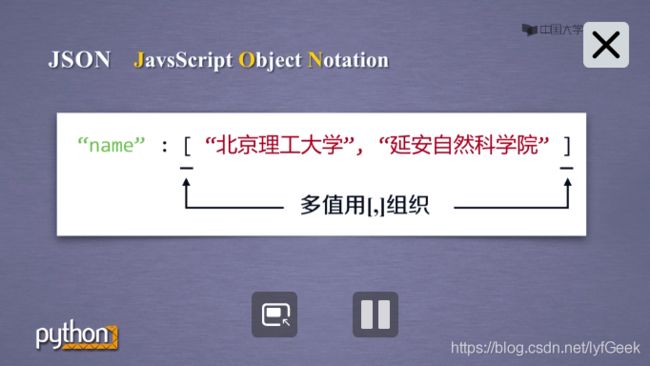

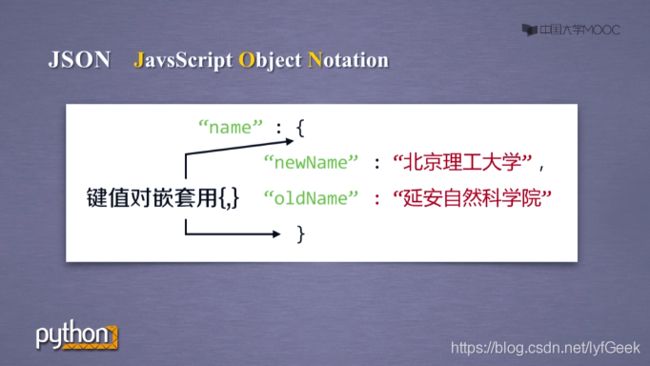

- json。

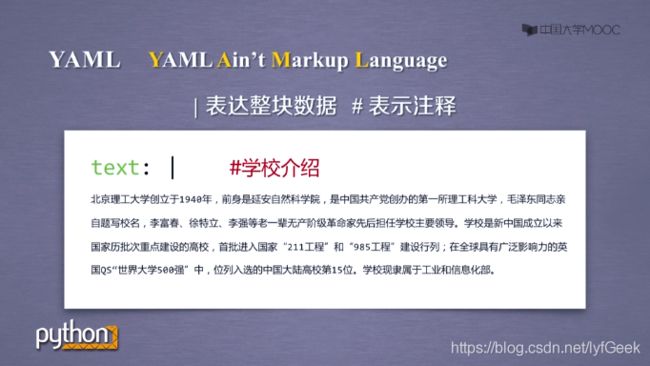

- yaml。

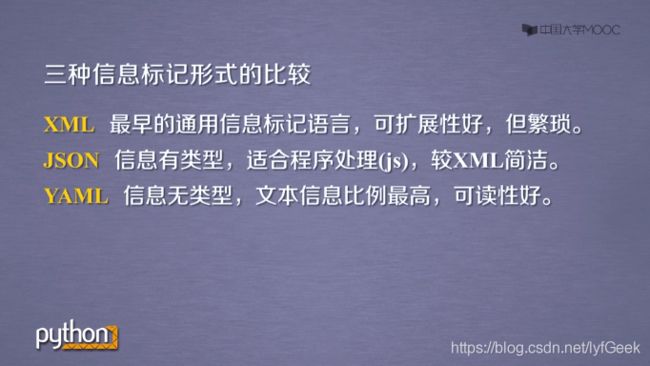

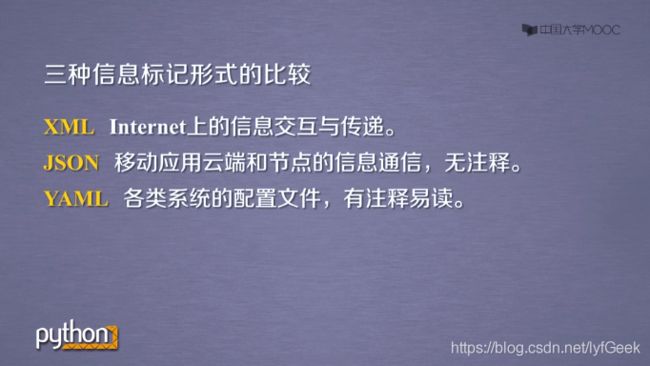

- 三种信息标记形式的比较。

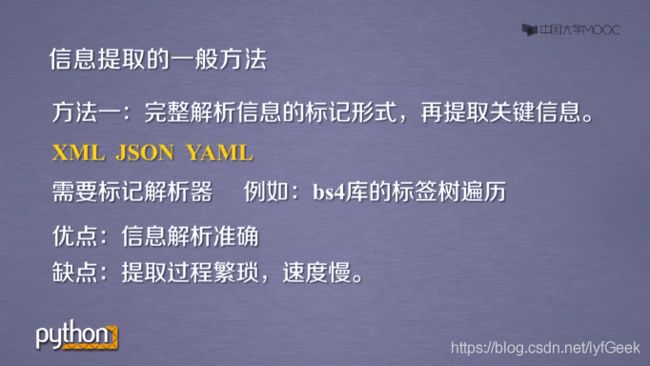

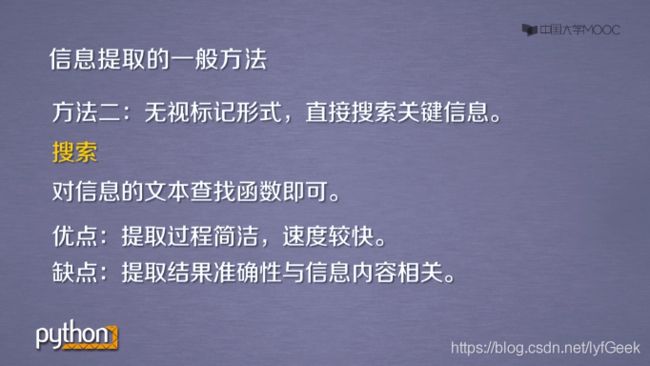

- 信息提取的一般方法。

-

- 完整解析信息的标记形式,再提取关键信息。

- 无视标记形式,直接搜索关键信息。

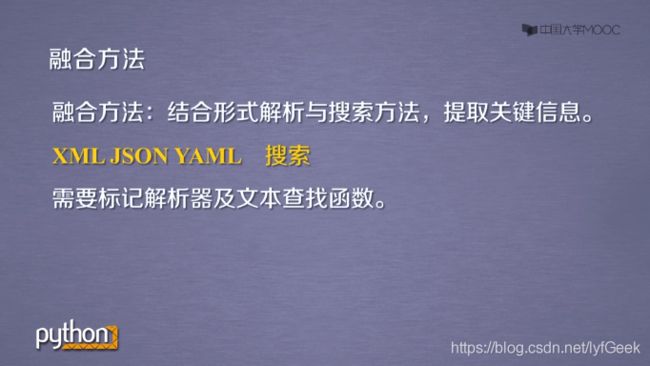

- 融合方法。

- 实例。

- bs4.BeautifulSoup 的方法。

- def find_all(self, name=None, attrs={}, recursive=True, text=None, limit=None, **kwargs):

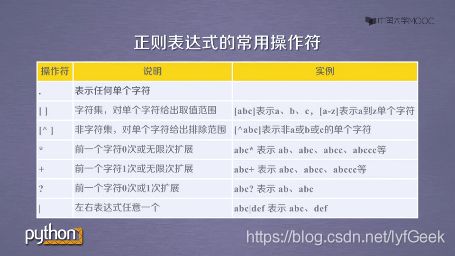

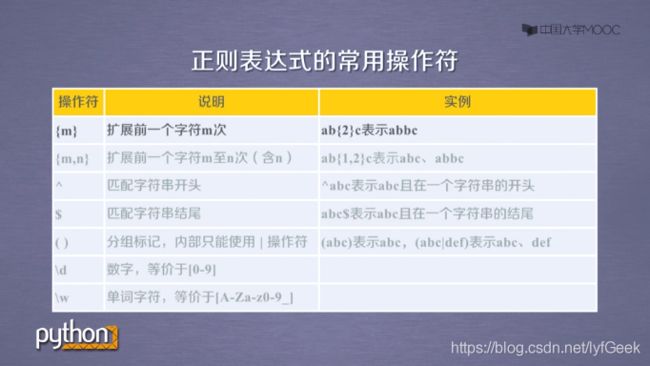

- 正则表达式库。

-

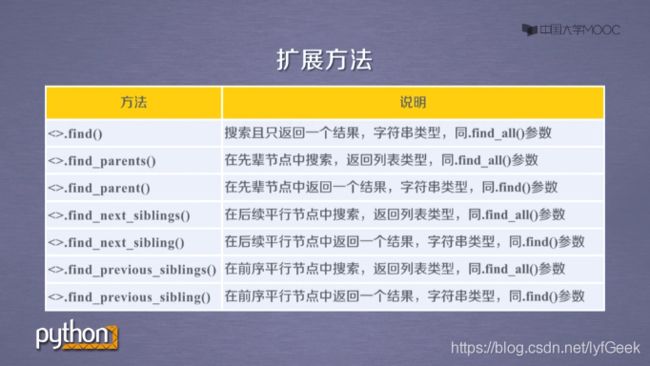

- 扩展方法。

-

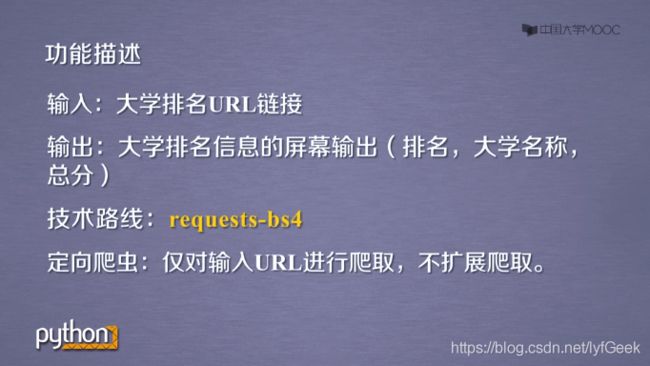

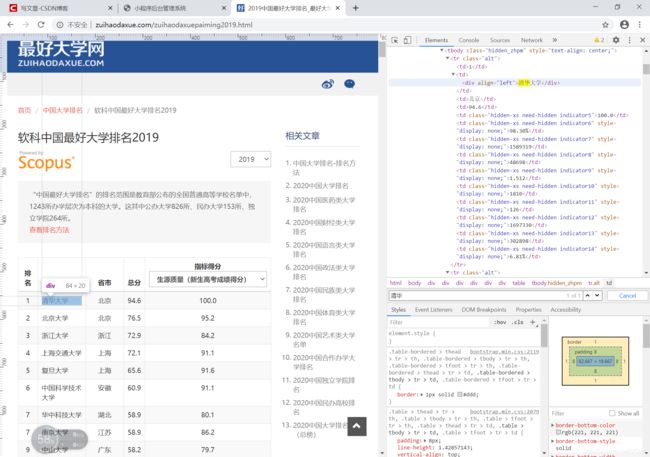

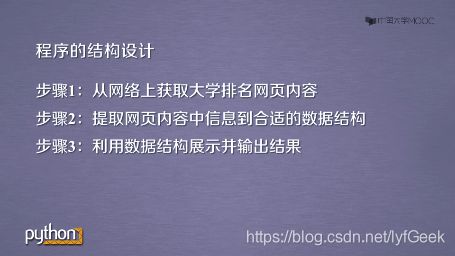

- 实例~中国大学排名定向爬虫。

- Projects

- 正则表达式 Re~一行胜千言。

-

-

- 正则表达式语法。

- 正则实例。

- 匹配 ip 地址的正则分析。

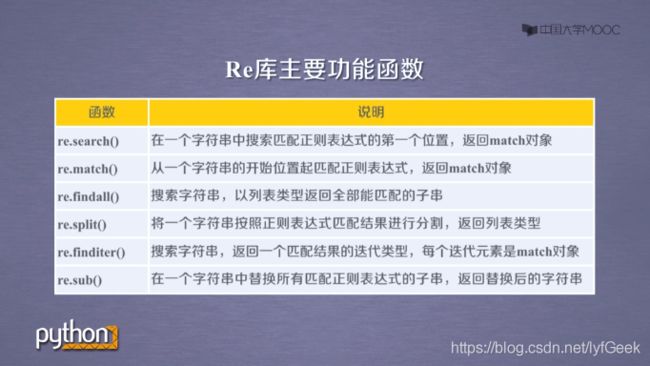

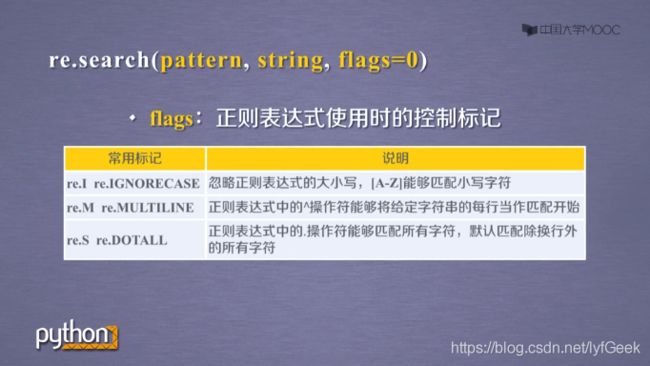

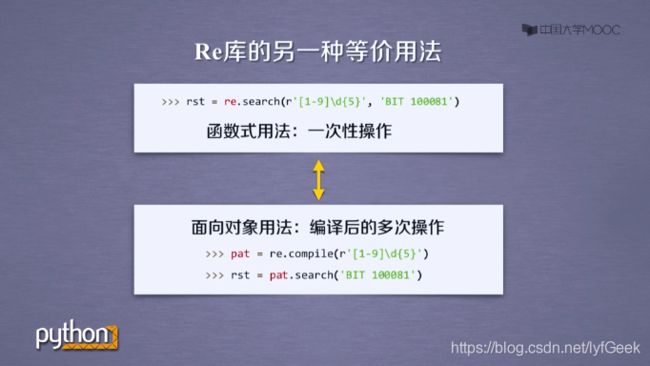

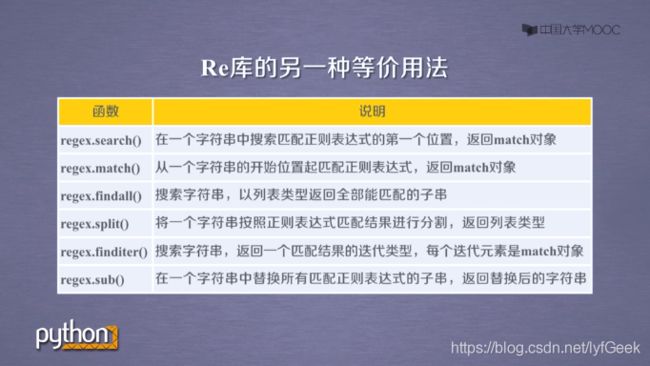

- re 库。

-

- raw string 类型(原生字符串类型)。

- re 编译。

- re Match 对象。

- Match 对象属性。

- Match 对象方法。

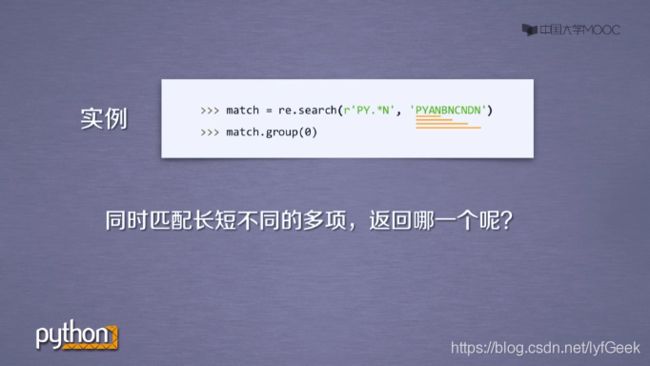

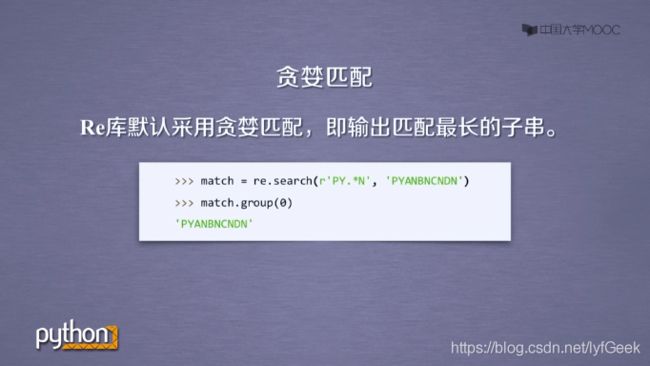

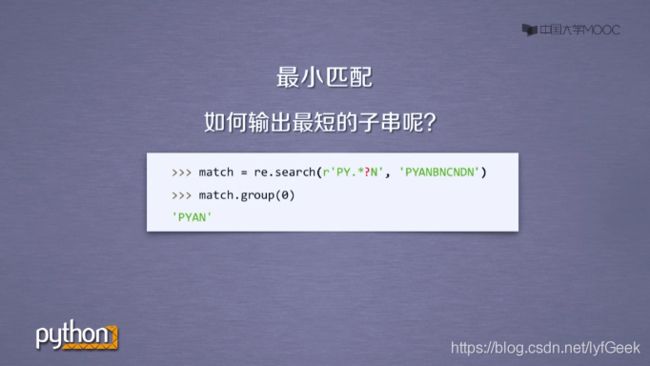

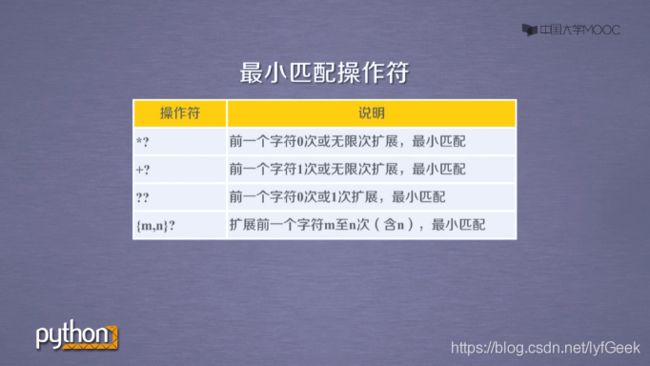

- re 库的贪婪匹配的最小匹配。

-

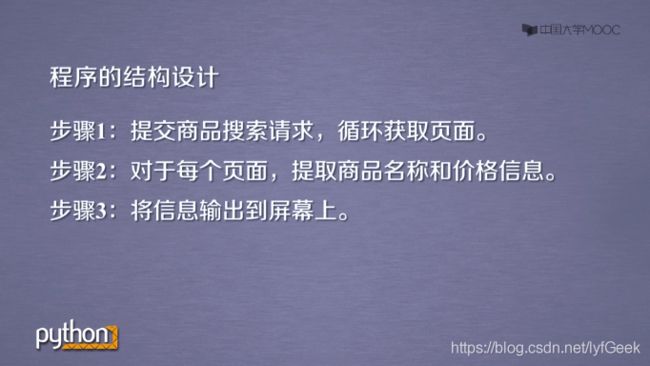

- 淘宝商品比价定向爬虫。

- 股票数据定向爬虫。

-

-

- 优化程序速度。

-

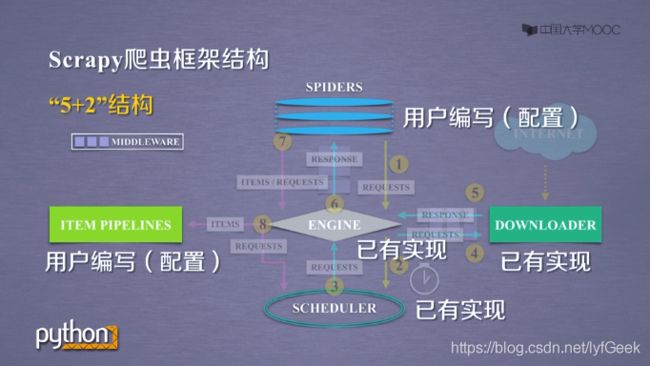

- Scrapy

-

-

- 安装。

- Requests vs. Scrapy。

- Scrapy 命令行。

- 常用命令。

- 实例。

- yield 关键字。

- scrapy 步骤。

- scrapy.Request 类。

- scrapy.Response 类。

- Item 类。

- CSS Selector。

-

- 实例。

-

-

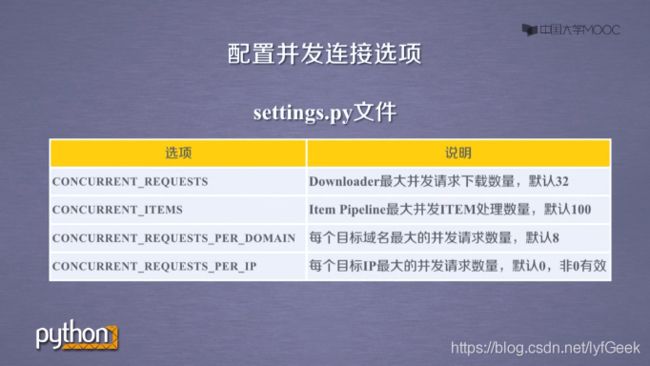

- 优化、配置。

-

The Website is the API…

Goal: master the basics of targeted web crawling and web page parsing.

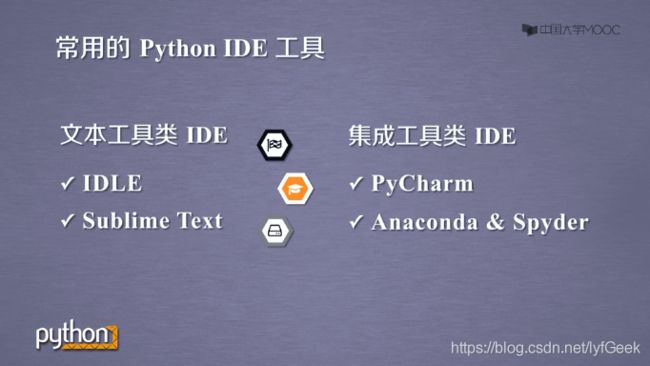

Tools.

IDLE

Sublime Text

PyCharm

Anaconda & Spyder

Wing

Visual Studio & PTVS

Eclipse + PyDev

PyCharm

- Scientific computing and data analysis.

Canopy (commercial).

Anaconda (free and open source).

The Requests library.

http://2.python-requests.org/zh_CN/latest/

Installation.

pip install requests

C:\Users\geek>pip install requests

Collecting requests

Using cached requests-2.23.0-py2.py3-none-any.whl (58 kB)

Collecting urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1

Using cached urllib3-1.25.9-py2.py3-none-any.whl (126 kB)

Collecting chardet<4,>=3.0.2

Using cached chardet-3.0.4-py2.py3-none-any.whl (133 kB)

Collecting idna<3,>=2.5

Using cached idna-2.9-py2.py3-none-any.whl (58 kB)

Collecting certifi>=2017.4.17

Using cached certifi-2020.4.5.1-py2.py3-none-any.whl (157 kB)

Installing collected packages: urllib3, chardet, idna, certifi, requests

Successfully installed certifi-2020.4.5.1 chardet-3.0.4 idna-2.9 requests-2.23.0 urllib3-1.25.9

WARNING: You are using pip version 20.0.2; however, version 20.1.1 is available.

You should consider upgrading via the 'f:\program files (x86)\python37-32\python.exe -m pip install --upgrade pip' command.

Usage.

>>> import requests

>>> r = requests.get('https://www.baidu.com/')

>>> r.status_code

200

>>> r.encoding = 'utf-8'

>>> r.text

'\r\n 百度一下,你就知道 \r\n'

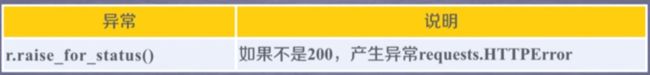

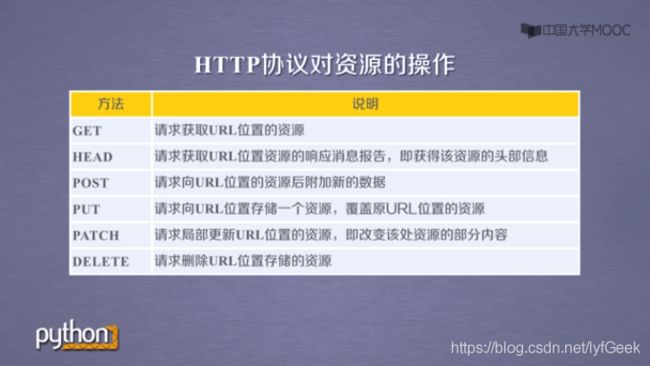

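Main methods of the Requests library.

| Method | Description |
|---|---|
| requests.request() | Constructs a request; the base method that underlies all the methods below. |
| requests.get() | The main method for fetching an HTML page; corresponds to HTTP GET. |
| requests.head() | Fetches the header information of an HTML page; corresponds to HTTP HEAD. |
| requests.post() | Submits a POST request to an HTML page; corresponds to HTTP POST. |
| requests.put() | Submits a PUT request to an HTML page; corresponds to HTTP PUT. |
| requests.patch() | Submits a partial-update request to an HTML page; corresponds to HTTP PATCH. |
| requests.delete() | Submits a delete request to an HTML page; corresponds to HTTP DELETE. |

requests.request()

The six other methods are all wrappers around requests.request(). For example, requests.get() ends with:

return request('get', url, params=params, **kwargs)

- Full parameters of requests.get().

url: the URL of the page to fetch.

params: extra parameters appended to the URL, as a dict or bytes. Optional.

**kwargs: 12 parameters that control the request.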

>>> import requests

>>> r = requests.get("http://www.baidu.com")

>>> r.status_code

200

>>> r.encoding

'ISO-8859-1'

>>> r.encoding = 'utf-8'

>>> r.text

... (content omitted) ...

>>> type(r)

<class 'requests.models.Response'>

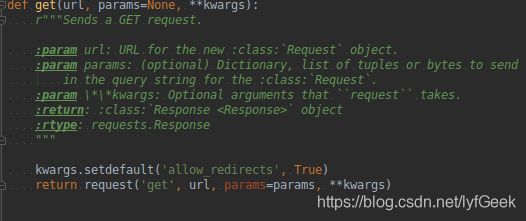

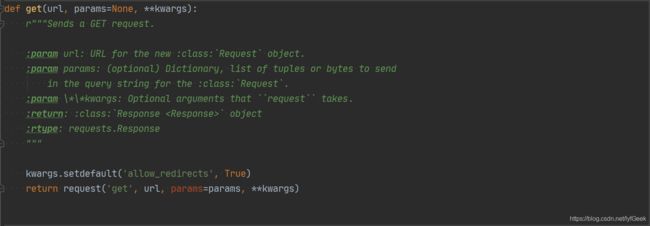

The get() method.

def get(url, params=None, **kwargs):

r"""Sends a GET request.

:param url: URL for the new :class:`Request` object.

:param params: (optional) Dictionary, list of tuples or bytes to send

in the query string for the :class:`Request`.

:param \*\*kwargs: Optional arguments that ``request`` takes.

:return: :class:`Response <Response>` object

:rtype: requests.Response

"""

kwargs.setdefault('allow_redirects', True)

return request('get', url, params=params, **kwargs)

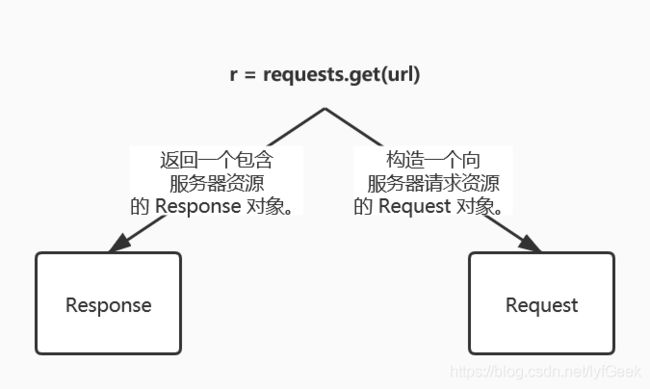

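Two important objects in the Requests library.

- The Request object holds the resource being requested from the server.

- The Response object holds the content returned to the crawler.

Encoding.

r.encoding

Guessed from the charset field of the HTTP header. If the header contains no charset, the encoding is assumed to be ISO-8859-1, which cannot decode Chinese text.

r.apparent_encoding

Inferred from the response body rather than from the header, so it is usually more accurate.

- Typical fetch workflow.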

>>> import requests

>>> r = requests.get('https://www.baidu.com/')

>>> r.encoding

'ISO-8859-1'

>>> r.apparent_encoding

'utf-8'

>>> r.text

'\r\n ç\x99¾åº¦ä¸\x80ä¸\x8bï¼\x8cä½\xa0å°±ç\x9f¥é\x81\x93 å\x85³äº\x8eç\x99¾åº¦ About Baidu

©2017 Baidu 使ç\x94¨ç\x99¾åº¦å\x89\x8då¿\x85读 æ\x84\x8fè§\x81å\x8f\x8dé¦\x88 京ICPè¯\x81030173å\x8f·

\r\n'

>>> r.encoding = r.apparent_encoding

>>> r.text

'\r\n 百度一下,你就知道 \r\n'

>>>
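The garbled text in the session above can be reproduced offline. The following is a minimal sketch using only built-in string methods; the sample string is taken from the Baidu page title, and the variable names are illustrative assumptions rather than anything defined by Requests.

```python
# The same bytes decoded with two different encodings.
original = '百度一下,你就知道'
raw_bytes = original.encode('utf-8')       # what the server actually sends

# Decoding UTF-8 bytes as ISO-8859-1 never fails, but yields mojibake.
garbled = raw_bytes.decode('iso-8859-1')

# Re-encoding with the wrong codec and decoding with the right one undoes it,
# which is in effect what setting r.encoding = r.apparent_encoding achieves.
repaired = garbled.encode('iso-8859-1').decode('utf-8')

print(repr(garbled))
print(repaired)
```

Because ISO-8859-1 maps every byte to exactly one character, the wrong decoding loses no information, which is why the text can be recovered afterwards.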

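A general code framework for fetching web pages.

Network connections are unreliable, so exception handling matters.

Exceptions.

The exceptions raised by the Requests library are important here.

The Response object: r.raise_for_status() raises an HTTPError when the status code signals an error.

General code.

import requests


def getHtmlText(url):
    """Fetch url and return the decoded page text, or an error message."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()  # Raises HTTPError for 4xx/5xx status codes.
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        return 'An exception occurred.'


if __name__ == '__main__':
    url = "https://www.baidu.com/"
    print(getHtmlText(url))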

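The HTTP protocol and Requests library methods.

HTTP, the Hypertext Transfer Protocol.

HTTP is a stateless application-layer protocol based on a request/response model.

HTTP generally uses URLs to identify and locate network resources.

URL format.

http://host[:port][path]

- host: a valid Internet host name or IP address.

- port: the port number; the default is 80.

- path: the path of the requested resource.

Understanding HTTP URLs.

A URL is an Internet path for accessing a resource over HTTP; each URL corresponds to one data resource.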
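The host, port, and path parts of a URL can be inspected with the standard library. This is a small sketch; the example.com URLs are placeholders chosen for illustration, not addresses from the course.

```python
from urllib.parse import urlsplit

# Split a URL of the form http://host[:port][path] into its parts.
parts = urlsplit('http://www.example.com:8080/photos/picture.jpg')
host = parts.hostname
port = parts.port
path = parts.path

# When the URL carries no explicit port, .port is None; HTTP then uses 80.
default_port = urlsplit('http://www.example.com/picture.jpg').port or 80

print(host, port, path, default_port)
```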

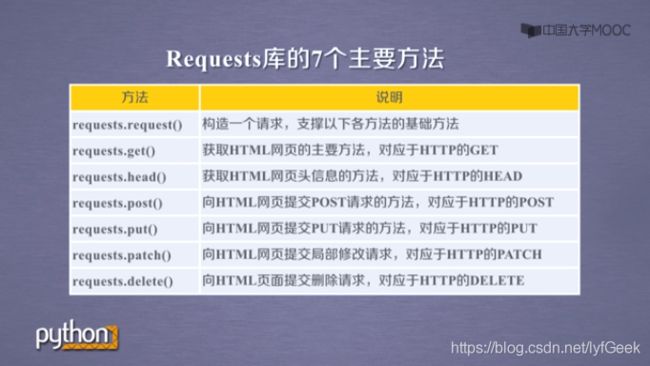

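HTTP operations on resources.

The difference between PATCH and PUT.

Suppose the URL holds a UserInfo record with 20 fields, including UserID and UserName.

Requirement: the user changes UserName and nothing else.

- With PATCH, only the partial update for UserName is submitted to the URL.

- With PUT, all 20 fields must be submitted to the URL; any field left out is deleted.

PATCH therefore saves network bandwidth.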
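The difference can be modelled with plain dictionaries. The sketch below only imitates the semantics described above on the server side; `apply_put`, `apply_patch`, and the three-field record are hypothetical names and data invented for illustration, and no HTTP request is sent.

```python
def apply_put(stored, submitted):
    """PUT replaces the whole resource: fields not submitted disappear."""
    return dict(submitted)


def apply_patch(stored, submitted):
    """PATCH updates only the submitted fields and keeps the rest."""
    merged = dict(stored)
    merged.update(submitted)
    return merged


user_info = {'UserID': 1, 'UserName': 'old', 'Email': 'a@example.com'}

after_patch = apply_patch(user_info, {'UserName': 'new'})
after_put = apply_put(user_info, {'UserName': 'new'})

print(after_patch)
print(after_put)
```

With PUT, keeping the other fields requires sending every one of them again, which is where the extra bandwidth goes.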

HTTP and the Requests library.

>>> import requests

>>> r = requests.head('http://www.baidu.com')

>>> r.headers

{

'Connection': 'keep-alive', 'Server': 'bfe/1.0.8.18', 'Last-Modified': 'Mon, 13 Jun 2016 02:50:08 GMT', 'Cache-Control': 'private, no-cache, no-store, proxy-revalidate, no-transform', 'Content-Encoding': 'gzip', 'Content-Type': 'text/html', 'Date': 'Sun, 15 Mar 2020 05:27:57 GMT', 'Pragma': 'no-cache'}

>>> r.text

''

>>>

# r.text is empty, because a HEAD request returns only the headers.

The post() method of the Requests library.

- POSTing a dict to a URL: it is automatically encoded as a form.

>>> payload = {'key1': 'value1', 'key2': 'value2'}

>>> r = requests.post('http://httpbin.org/post', data = payload)

>>> print(r.text)

{

"args": {

},

"data": "",

"files": {

},

"form": {

"key1": "value1",

"key2": "value2"

},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Content-Length": "23",

"Content-Type": "application/x-www-form-urlencoded",

"Host": "httpbin.org",

"User-Agent": "python-requests/2.22.0",

"X-Amzn-Trace-Id": "Root=1-5ed67f9c-eeb3629d002259d0b874d010"

},

"json": null,

"origin": "183.94.69.1",

"url": "http://httpbin.org/post"

}

>>>
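The `Content-Length` of 23 and the `application/x-www-form-urlencoded` content type in the response above follow from how a dict is form-encoded. The sketch below reproduces that encoding with the standard library's `urlencode`; it assumes Requests encodes a `data` dict the same way and does not call Requests itself.

```python
from urllib.parse import urlencode

payload = {'key1': 'value1', 'key2': 'value2'}

# Form encoding joins key=value pairs with '&'.
body = urlencode(payload)
body_length = len(body.encode('utf-8'))

print(body)
print(body_length)
```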

- POSTing a string to a URL: it is automatically encoded as data.

>>> r = requests.post('http://httpbin.org/post', data = 'geek')

>>> print(r.text)

{

"args": {

},

"data": "geek",

"files": {

},

"form": {

},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Content-Length": "4",

"Host": "httpbin.org",

"User-Agent": "python-requests/2.22.0",

"X-Amzn-Trace-Id": "Root=1-5ed68024-fa648c08009393a8e83b747c"

},

"json": null,

"origin": "183.94.69.1",

"url": "http://httpbin.org/post"

}

>>>

The put() method of the Requests library.

>>> payload = {'key1': 'value1', 'key2': 'value2'}

>>> r = requests.put('http://httpbin.org/put', data = payload)

>>> print(r.text)

{

"args": {

},

"data": "",

"files": {

},

"form": {

"key1": "value1",

"key2": "value2"

},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Content-Length": "23",

"Content-Type": "application/x-www-form-urlencoded",

"Host": "httpbin.org",

"User-Agent": "python-requests/2.12.4",

"X-Amzn-Trace-Id": "Root=1-5e6dc16b-6bf16d909e60eba2379976b6"

},

"json": null,

"origin": "111.47.210.49",

"url": "http://httpbin.org/put"

}

>>>

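Main methods of the Requests library in detail (13 parameters).

def request(method, url, **kwargs):

method: the request method, one of 7 such as get / put / post.

url: the URL of the page to fetch.

**kwargs: parameters that control the request, 13 in total.

- method: the request method.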

request('get', url, params=params, **kwargs)

request('options', url, **kwargs)

request('head', url, **kwargs)

request('post', url, data=data, json=json, **kwargs)

request('put', url, data=data, **kwargs)

request('patch', url, data=data, **kwargs)

request('delete', url, **kwargs)

- **kwargs: parameters that control the request, 13 in total.

Parameters collected by ** are optional.

def request(method, url, **kwargs):

"""Constructs and sends a :class:`Request <Request>`.

:param method: method for the new :class:`Request` object: ``GET``, ``OPTIONS``, ``HEAD``, ``POST``, ``PUT``, ``PATCH``, or ``DELETE``.

:param url: URL for the new :class:`Request` object.

:param params: (optional) Dictionary, list of tuples or bytes to send

in the query string for the :class:`Request`.

:param data: (optional) Dictionary, list of tuples, bytes, or file-like

object to send in the body of the :class:`Request`.

:param json: (optional) A JSON serializable Python object to send in the body of the :class:`Request`.

:param headers: (optional) Dictionary of HTTP Headers to send with the :class:`Request`.

:param cookies: (optional) Dict or CookieJar object to send with the :class:`Request`.

:param files: (optional) Dictionary of ``'name': file-like-objects`` (or ``{'name': file-tuple}``) for multipart encoding upload.

``file-tuple`` can be a 2-tuple ``('filename', fileobj)``, 3-tuple ``('filename', fileobj, 'content_type')``

or a 4-tuple ``('filename', fileobj, 'content_type', custom_headers)``, where ``'content-type'`` is a string

defining the content type of the given file and ``custom_headers`` a dict-like object containing additional headers

to add for the file.

:param auth: (optional) Auth tuple to enable Basic/Digest/Custom HTTP Auth.

:param timeout: (optional) How many seconds to wait for the server to send data

before giving up, as a float, or a :ref:`(connect timeout, read

timeout) <timeouts>` tuple.

:type timeout: float or tuple

:param allow_redirects: (optional) Boolean. Enable/disable GET/OPTIONS/POST/PUT/PATCH/DELETE/HEAD redirection. Defaults to ``True``.

:type allow_redirects: bool

:param proxies: (optional) Dictionary mapping protocol to the URL of the proxy.

:param verify: (optional) Either a boolean, in which case it controls whether we verify

the server's TLS certificate, or a string, in which case it must be a path

to a CA bundle to use. Defaults to ``True``.

:param stream: (optional) if ``False``, the response content will be immediately downloaded.

:param cert: (optional) if String, path to ssl client cert file (.pem). If Tuple, ('cert', 'key') pair.

:return: :class:`Response <Response>` object

:rtype: requests.Response

Usage::

>>> import requests

>>> req = requests.request('GET', 'https://httpbin.org/get')

>>> req

<Response [200]>

"""

# By using the 'with' statement we are sure the session is closed, thus we

# avoid leaving sockets open which can trigger a ResourceWarning in some

# cases, and look like a memory leak in others.

with sessions.Session() as session:

return session.request(method=method, url=url, **kwargs)

params: a dict or byte sequence, appended to the URL as query parameters.

>>> kv = {'key1': 'value1', 'key2': 'value2'}

>>> r = requests.request('GET', 'http://python123.io/ws', params=kv)

>>> print(r.url)

https://python123.io/ws?key1=value1&key2=value2

data: a dict, byte sequence, or file object, sent as the body of the Request.

>>> kv = {'key1': 'value1', 'key2': 'value2'}

>>> r = requests.request('POST', 'http://python123.io/ws', data=kv)

>>> body = '主体内容'

>>> r = requests.request('POST', 'http://python123.io/ws', data=body.encode('utf-8'))

json: data in JSON format, sent as the body of the Request.

>>> kv = {'key1': 'value1'}

>>> r = requests.request('POST', 'http://python123.io/ws', json=kv)

headers: a dict of custom HTTP headers.

>>> hd = {'user-agent': 'Chrome/10'}

>>> r = requests.request('POST', 'http://python123.io/ws', headers=hd)

cookies: a dict or CookieJar; the cookies sent with the Request.

auth: a tuple, enabling HTTP authentication.

files: a dict, used to upload files.

>>> fs = {'file': open('data.xls', 'rb')}

>>> r = requests.request('POST', 'http://python123.io/ws', files=fs)

timeout: the timeout, in seconds.

>>> r = requests.request('GET', 'http://www.baidu.com', timeout=10)

proxies: a dict that sets proxy servers for the request; login credentials can be included. (This hides the user's original IP address and guards against the crawler being traced back.)

>>> pxs = {'http': 'http://user:pass@10.10.10.1:1234',

...        'https': 'https://10.10.10.1:4321'}

>>> r = requests.request('GET', 'http://www.baidu.com', proxies=pxs)

allow_redirects: True / False, default True; switches redirect following on or off.

stream: True / False, default False; when False, the response body is downloaded immediately.

verify: True / False, default True; switches SSL certificate verification on or off.

cert: path to a local SSL certificate.

requests.get(url, params=None, **kwargs)

requests.head(url, **kwargs)

requests.post(url, data=None, json=None, **kwargs)

requests.put(url, data=None, **kwargs)

requests.patch(url, data=None, **kwargs)

requests.delete(url, **kwargs)

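robots.txt

The Robots Exclusion Standard for web crawlers.

Problems caused by web crawlers.

- Legal risk.

Data on a server has an owner.

Profiting from crawled data carries legal risk.

- Privacy leaks.

A crawler may be able to get past simple access controls and obtain protected data, leaking personal privacy.

Restricting web crawlers.

- Source checking: restrict by User-Agent.

Check the User-Agent field of incoming HTTP headers and respond only to browsers and friendly crawlers.

- Public notice: the Robots protocol.

Tell all crawlers the site's crawling policy and ask them to follow it.

The Robots protocol.

The robots.txt file sits in the site's root directory.

http://www.baidu.com/robots.txt

http://news.sina.com.cn/robots.txt

http://www.qq.com/robots.txt

http://news.qq.com/robots.txt

http://www.moe.edu.cn/robots.txt (no robots.txt).

https://www.jd.com/robots.txt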

User-agent: *

Disallow: /?*

Disallow: /pop/*.html

Disallow: /pinpai/*.html?*

User-agent: EtaoSpider

Disallow: /

User-agent: HuihuiSpider

Disallow: /

User-agent: GwdangSpider

Disallow: /

User-agent: WochachaSpider

Disallow: /

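How to comply with the Robots protocol.

Using the Robots protocol.

Web crawlers: identify robots.txt automatically or manually, then crawl the content.

- Binding force: the Robots protocol is advisory rather than binding; a crawler may ignore it, but doing so carries legal risk.

Human-like access need not consult the Robots protocol (for example, small programs).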
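Automatic recognition of robots.txt can be sketched with the standard library's `urllib.robotparser`. The rules below are an excerpt of the JD file quoted above, pasted in as a string so that nothing is fetched; `MyCrawler` is a hypothetical crawler name. Note that, as far as this module goes, `*` inside a path is not expanded as a wildcard, so the sketch only checks rules that do not depend on it.

```python
from urllib.robotparser import RobotFileParser

# Excerpt of https://www.jd.com/robots.txt as quoted in this article.
JD_ROBOTS = """\
User-agent: *
Disallow: /?*
Disallow: /pop/*.html
Disallow: /pinpai/*.html?*
User-agent: EtaoSpider
Disallow: /
"""

parser = RobotFileParser()
parser.parse(JD_ROBOTS.splitlines())

item_url = 'https://item.jd.com/100012749270.html'

# A generic crawler falls under the 'User-agent: *' rules.
generic_allowed = parser.can_fetch('MyCrawler', item_url)
# EtaoSpider is disallowed from the whole site.
etao_allowed = parser.can_fetch('EtaoSpider', item_url)

print(generic_allowed, etao_allowed)
```

In a real crawler, `set_url()` followed by `read()` would load the live file instead of a pasted string.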

Example: JD.

https://item.jd.com/100012749270.html

geek@ubuntu:~$ python3

Python 3.8.2 (default, Apr 27 2020, 15:53:34)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> r = requests.get('https://item.jd.com/100012749270.html')

>>> r.status_code

200

>>> r.encoding

'UTF-8'

# The encoding can be parsed from the header.

>>> import requests

>>> r = requests.get('https://item.jd.com/100012749270.html')

>>> r.status_code

200

>>> r.encoding

'gbk'

# The encoding can be parsed from the header.

>>> r.text[:1000]

'\n\n\n \n \n 【小米Ruby】小米 (MI)Ruby 15.6英寸 网课 学习轻薄笔记本电脑(英特尔酷睿i5-8250U 8G 512G SSD 2G GDDR5独显 FHD 全键盘 Office Win10) 深空灰 电脑【行情 报价 价格 评测】-京东 \n \n \n \n \n \n \n \n \n ...'

The same request wrapped in the general code framework:

import requests


def getHtmlText(url):
    """Fetch url and return the decoded page text, or an error message."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()  # Raises HTTPError for 4xx/5xx status codes.
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        return 'An exception occurred.'


if __name__ == '__main__':
    url = "https://item.jd.com/100004364088.html"
    print(getHtmlText(url))

Example: Amazon.

https://www.amazon.cn/dp/B07K1SMRGS/ref=lp_1454005071_1_3?s=pc&ie=UTF8&qid=1592061720&sr=1-3

>>> r = requests.get('https://www.amazon.cn/dp/B07K1SMRGS/ref=lp_1454005071_1_3?s=pc&ie=UTF8&qid=1592061720&sr=1-3')

>>> r.status_code

503

Python faithfully tells the server that the request was made by Python's Requests library.

>>> r.headers

{

'Server': 'Server', 'Date': 'Sat, 13 Jun 2020 15:22:59 GMT', 'Content-Type': 'text/html', 'Content-Length': '2424', 'Connection': 'keep-alive', 'Vary': 'Content-Type,Accept-Encoding,X-Amzn-CDN-Cache,X-Amzn-AX-Treatment,User-Agent', 'Content-Encoding': 'gzip', 'x-amz-rid': 'W3MN17256ZJS4Z80FT44'}

>>> r.request.headers

{

'User-Agent': 'python-requests/2.22.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}

>>> r.request.headers

{

'Connection': 'keep-alive', 'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'User-Agent': 'python-requests/2.12.4'}

>>> r.encoding

'ISO-8859-1'

>>> r.encoding = r.apparent_encoding

>>> r.text

'\n\n\n\n\n\n\n\n\nAmazon CAPTCHA \n\n\n\n\n\n\n\n\n\n\n\n \n\n'

>>>

Change the User-Agent.

>>> kv = {'user-agent': 'Mozilla/5.0'}

>>> url = 'https://www.amazon.cn/dp/B07K1SMRGS/ref=lp_1454005071_1_3?s=pc&ie=UTF8&qid=1592061720&sr=1-3'

>>> r = requests.get(url, headers = kv)

>>> r.status_code

200

>>> r.request.headers

{

'user-agent': 'Mozilla/5.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}

Example: Baidu and 360.

- Baidu search interface.

https://www.baidu.com/s?wd=keyword

>>> import requests

>>> kv = {'wd': 'Python'}

>>> r = requests.get('http://www.baidu.com/s', params = kv)

>>> r.status_code

200

>>> r.request.url

'https://wappass.baidu.com/static/captcha/tuxing.html?&ak=c27bbc89afca0463650ac9bde68ebe06&backurl=https%3A%2F%2Fwww.baidu.com%2Fs%3Fwd%3DPython&logid=7724667852627971382&signature=c3ac95f01cbdb0bb8de7cee9cf6cedba&timestamp=1592062402'

>>>

>>> len(r.text)

1519

- 360 search interface.

https://www.so.com/s?q=keyword

Example: fetching and saving an image from the web.

- Format of an image link.

http://www.example.com/picture.jpg

- National Geographic Chinese site.

http://www.ngchina.com.cn/

Photo of the day.

http://www.ngchina.com.cn/photography/photo_of_the_day/6245.html

http://image.ngchina.com.cn/2020/0310/20200310021330655.jpg

>>> import requests

>>> path = './marry.jpg'

>>> url = 'http://www.ngchina.com.cn/photography/photo_of_the_day/6245.html'

# Note: this URL is the HTML page. To save the picture itself, use the image URL listed above.

>>> r = requests.get(url)

>>> r.status_code

200

>>> with open(path, 'wb') as f:

...     f.write(r.content)

...

26666

>>>

- Complete code.

import os

import requests

root = r'/home/geek/geek/crawler_demo/'
url = 'http://image.ngchina.com.cn/2020/0310/20200310021330655.jpg'
path = root + url.split('/')[-1]  # Use the last URL segment as the file name.

try:
    os.makedirs(root, exist_ok=True)
    if not os.path.exists(path):
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        # The with block closes the file, so no explicit f.close() is needed.
        with open(path, 'wb') as f:
            f.write(r.content)  # r.content is the response body as bytes.
        print('File saved.')
    else:
        print('File already exists.')
except Exception as e:
    print(e)
    print('Fetch failed.')

Result.

geek@geek-PC:~/geek/crawler_demo$ ls

marry.jpg

geek@geek-PC:~/geek/crawler_demo$ ls

20200310021330655.jpg marry.jpg

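Example: automatic lookup of an IP address's location.

First, an IP address database is needed.

www.ip138.com: IP lookup (finds the geographic location of an IP address).

Interface.

http://www.ip138.com/iplookup.asp?ip={IP address}&action=2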
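Before sending a request, the query URL can be built and the address validated offline. This is a sketch using the standard library only; `build_lookup_url` is a hypothetical helper, and the base URL and the `ip` and `action` parameters are the ones from the interface shown above.

```python
import ipaddress
from urllib.parse import urlencode

BASE_URL = 'http://www.ip138.com/iplookup.asp'


def build_lookup_url(ip_text):
    """Return the lookup URL for ip_text; raise ValueError if it is not an IP."""
    ip = ipaddress.ip_address(ip_text)  # Validates IPv4 and IPv6 literals.
    return BASE_URL + '?' + urlencode({'ip': str(ip), 'action': 2})


lookup_url = build_lookup_url('111.47.210.37')
print(lookup_url)
```

Rejecting malformed input early avoids spending a request on an address the service cannot resolve.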

import requests

url = 'https://www.ip138.com/iplookup.asp?ip=69.30.201.82&action=2'

# Browser-like request headers; every key and value must be a string.
headers = {
    'Host': 'www.ip138.com',
    'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:77.0) Gecko/20100101 Firefox/77.0',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Language': 'en-US,en;q=0.5',
    'Accept-Encoding': 'gzip, deflate, br',
    'Connection': 'keep-alive',
    'Referer': 'https://www.ip138.com/iplookup.asp?ip=69.30.201.82&action=2',
    'Upgrade-Insecure-Requests': '1',
}

r = requests.get(url, headers=headers)

Code.

import requests

url = 'http://www.ip138.com/iplookup.asp?ip=111.47.210.37&action=2'
kv = {'user-agent': 'Mozilla/5.0'}

try:
    r = requests.get(url, headers=kv, timeout=30)
    r.raise_for_status()
    r.encoding = r.apparent_encoding
    print(r.text[:1000])
except requests.RequestException:
    print('Fetch failed.')

#!/usr/bin/python

# -*- coding: utf-8 -*-

import httplib2

from urllib.parse import urlencode  # Python 3

# from urllib import urlencode  # Python 2

params = urlencode({'ip': '9.8.8.8', 'datatype': 'jsonp', 'callback': 'find'})

url = 'http://api.ip138.com/query/?' + params

headers = {"token": "8594766483a2d65d76804906dd1a1c6a"}  # The token is an example.

http = httplib2.Http()

response, content = http.request(url,'GET',headers=headers)

print(content.decode("utf-8"))

Beautiful Soup.

Parses HTML pages.

Documentation.

https://beautifulsoup.readthedocs.io/zh_CN/v4.4.0/

Installing the Beautiful Soup library.

geek@geek-PC:~$ pip install beautifulsoup4

Test page.

https://python123.io/ws/demo.html

页面源码。

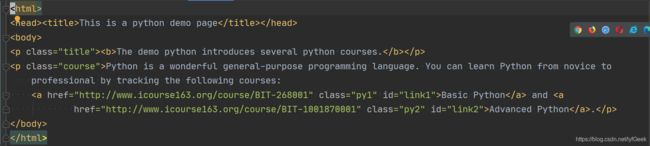

<html><head><title>This is a python demo pagetitle>head>

<body>

<p class="title"><b>The demo python introduces several python courses.b>p>

<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a href="http://www.icourse163.org/course/BIT-268001" class="py1" id="link1">Basic Pythona> and <a href="http://www.icourse163.org/course/BIT-1001870001" class="py2" id="link2">Advanced Pythona>.p>

body>html>

- Requests 获取源码。

geek@ubuntu:~$ python3

Python 3.8.2 (default, Apr 27 2020, 15:53:34)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> r = requests.get('https://python123.io/ws/demo.html')

>>> demo = r.text

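>>> demo

'<html><head><title>This is a python demo page</title></head>\r\n<body>\r\n<p class="title"><b>The demo python introduces several python courses.</b></p>\r\n<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:\r\n<a href="http://www.icourse163.org/course/BIT-268001" class="py1" id="link1">Basic Python</a> and <a href="http://www.icourse163.org/course/BIT-1001870001" class="py2" id="link2">Advanced Python</a>.</p>\r\n</body></html>'

>>> from bs4 import BeautifulSoup

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> soup.prettify()

'<html>\n <head>\n  <title>\n   This is a python demo page\n  </title>\n </head>\n <body>\n  <p class="title">\n   <b>\n    The demo python introduces several python courses.\n   </b>\n  </p>\n  <p class="course">\n   Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:\n   <a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">\n    Basic Python\n   </a>\n   and\n   <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">\n    Advanced Python\n   </a>\n   .\n  </p>\n </body>\n</html>'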

>>> print(soup.prettify())

<html>

<head>

<title>

This is a python demo page

</title>

</head>

<body>

<p class="title">

<b>

The demo python introduces several python courses.

</b>

</p>

<p class="course">

Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">

Basic Python

</a>

and

<a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">

Advanced Python

</a>

.

</p>

</body>

</html>

>>>

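Basic elements of the Beautiful Soup library.

Beautiful Soup is a library for parsing, traversing, and maintaining the "tag tree".

Importing the Beautiful Soup library.

The Beautiful Soup library is also known as beautifulsoup4 or bs4.

from bs4 import BeautifulSoup

import bs4

In use, the two import forms can be treated as equivalent.

Parsers for the Beautiful Soup library.

Basic elements of the BeautifulSoup class.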

>>> from bs4 import BeautifulSoup

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> soup.title

<title>This is a python demo page</title>

>>> tag = soup.a

>>> tag

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>

>>> soup.a.name

'a'

>>> soup.a.parent.name

'p'

>>> soup.a.parent

<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a> and <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>.</p>

>>>

- Tag attributes, returned as a dict.

>>> tag = soup.a

>>> tag.attrs

{

'href': 'http://www.icourse163.org/course/BIT-268001', 'class': ['py1'], 'id': 'link1'}

>>> tag.attrs['class']

['py1']

>>> tag.attrs['href']

'http://www.icourse163.org/course/BIT-268001'

>>> type(tag.attrs)

<class 'dict'>

>>> type(tag)

<class 'bs4.element.Tag'>

>>>

- Tag content: NavigableString.

.string

>>> soup.a

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>

>>> soup.a.string

'Basic Python'

>>> soup.p

<p class="title"><b>The demo python introduces several python courses.</b></p>

>>> soup.p.string

'The demo python introduces several python courses.'

>>> type(soup.p.string)

<class 'bs4.element.NavigableString'>

>>>

- Comment.

>>> newsoup = BeautifulSoup('<b><!-- This is a comment.--></b><p>This is not a comment.</p>', 'html.parser')

>>> newsoup.b.string

' This is a comment.'

>>> type(newsoup.b.string)

<class 'bs4.element.Comment'>

>>> newsoup.p.string

'This is not a comment.'

>>> type(newsoup.p.string)

<class 'bs4.element.NavigableString'>

>>>

Summary.

Traversing HTML content with the bs4 library.

- Basic HTML structure.

Traversal directions.

Downward traversal of the tag tree.

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> soup.head

<head><title>This is a python demo page</title></head>

>>> soup.head.contents

[<title>This is a python demo page</title>]

>>> soup.body.contents

['\n', <p class="title"><b>The demo python introduces several python courses.</b></p>, '\n', <p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a> and <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>.</p>, '\n']

>>> len(soup.body.contents)

5

# A list; each '\n' counts as one item.

>>> soup.body.contents[1]

<p class="title"><b>The demo python introduces several python courses.</b></p>

Upward traversal of the tag tree.

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> soup.title.parent

<head><title>This is a python demo page</title></head>

>>> soup.html.parent

<html><head><title>This is a python demo page</title></head>

<body>

<p class="title"><b>The demo python introduces several python courses.</b></p>

<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a> and <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>.</p>

</body></html>

>>> soup.parent

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> for parent in soup.a.parents:

...     if parent is None:

...         print(parent)

...     else:

...         print(parent.name)

...

p

body

html

[document]

Sideways traversal of the tag tree.

Summary.

Formatted HTML output with the bs4 library.

How to display HTML content in a more readable way.

>>> import requests

>>> r = requests.get("http://python123.io/ws/demo.html")

>>> demo = r.text

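>>> demo

'<html><head><title>This is a python demo page</title></head>\r\n<body>\r\n<p class="title"><b>The demo python introduces several python courses.</b></p>\r\n<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:\r\n<a href="http://www.icourse163.org/course/BIT-268001" class="py1" id="link1">Basic Python</a> and <a href="http://www.icourse163.org/course/BIT-1001870001" class="py2" id="link2">Advanced Python</a>.</p>\r\n</body></html>'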

>>> from bs4 import BeautifulSoup

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> soup

<html><head><title>This is a python demo page</title></head>

<body>

<p class="title"><b>The demo python introduces several python courses.</b></p>

<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a> and <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>.</p>

</body></html>

>>> soup.prettify()

'<html>\n <head>\n  <title>\n   This is a python demo page\n  </title>\n </head>\n <body>\n  <p class="title">\n   <b>\n    The demo python introduces several python courses.\n   </b>\n  </p>\n  <p class="course">\n   Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:\n   <a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">\n    Basic Python\n   </a>\n   and\n   <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">\n    Advanced Python\n   </a>\n   .\n  </p>\n </body>\n</html>'

# prettify() adds a \n after each tag and each piece of text.

>>> print(soup.prettify())

<html>
 <head>
  <title>
   This is a python demo page
  </title>
 </head>
 <body>
  <p class="title">
   <b>
    The demo python introduces several python courses.
   </b>
  </p>
  <p class="course">
   Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:
   <a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">
    Basic Python
   </a>
   and
   <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">
    Advanced Python
   </a>
   .
  </p>
 </body>
</html>

>>>

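Encoding. Python 3 uses UTF-8 by default.

Information extraction.

Three forms of information markup.

- Marked-up information forms an organizational structure, which adds a dimension to the information.

- Marked-up information can be used for communication, storage, or display.

- The markup structure is as valuable as the information itself.

- Marked-up information is easier for programs to understand and use.

Information markup in HTML.

HTML organizes different types of information with predefined <>…</> tags.

XML.

eXtensible Markup Language.

XML is a general-purpose format for expressing information, with a tag syntax similar to HTML.

JSON.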

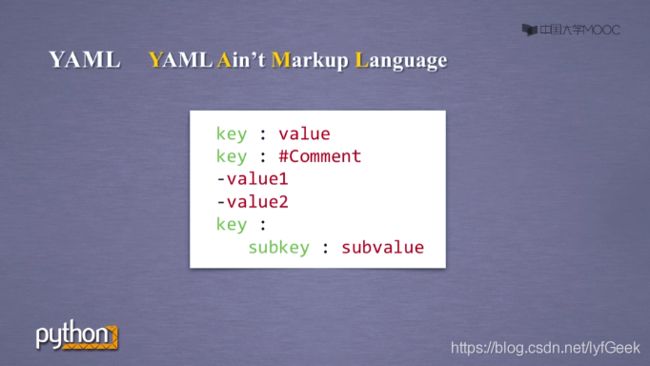

YAML.

Comparison of the three markup forms.
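To make the comparison concrete, the sketch below writes one small record as JSON and as XML using only the standard library. The record itself (a course title and link id borrowed from the demo page) and the `course` root element are illustrative assumptions; YAML is left out because it needs a third-party package.

```python
import json
import xml.etree.ElementTree as ET

# One record, two markup forms.
record = {'title': 'Basic Python', 'id': 'link1'}

# JSON: typed key-value pairs.
as_json = json.dumps(record)

# XML: one element per field under a root element.
root = ET.Element('course')
for key, value in record.items():
    ET.SubElement(root, key).text = value
as_xml = ET.tostring(root, encoding='unicode')

# Both forms can be parsed back into the same dict.
from_json = json.loads(as_json)
from_xml = {child.tag: child.text for child in ET.fromstring(as_xml)}

print(as_json)
print(as_xml)
```

The XML form repeats each field name in an opening and a closing tag, which is why it tends to be the more verbose of the two.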

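General methods of information extraction.

Parse the markup form completely, then extract the key information.

Ignore the markup form and search directly for the key information.

Combined method.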
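The first two approaches can be compared on a fragment of the demo page. This is a sketch with the standard library only: `html.parser` stands in for a full parse and a regular expression for the direct search; `LinkCollector` is a hypothetical helper class, and neither half is how Beautiful Soup works internally.

```python
import re
from html.parser import HTMLParser

DEMO = (
    '<p class="course">courses: '
    '<a href="http://www.icourse163.org/course/BIT-268001" class="py1" id="link1">Basic Python</a> and '
    '<a href="http://www.icourse163.org/course/BIT-1001870001" class="py2" id="link2">Advanced Python</a>.</p>'
)


class LinkCollector(HTMLParser):
    """Approach 1: parse the markup, then pick href out of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            for name, value in attrs:
                if name == 'href':
                    self.links.append(value)


collector = LinkCollector()
collector.feed(DEMO)
parsed_links = collector.links

# Approach 2: ignore the structure and search the raw text directly.
searched_links = re.findall(r'href="([^"]+)"', DEMO)

print(parsed_links)
print(searched_links)
```

The direct search is shorter but would also match an `href` on any other tag; the combined method narrows the search with the parsed structure first.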

Example.

Extract all URL links from the HTML.

geek@ubuntu:~$ python3

Python 3.8.2 (default, Apr 27 2020, 15:53:34)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> from bs4 import BeautifulSoup

>>> r = requests.get('https://python123.io/ws/demo.html')

>>> demo = r.text

>>> soup = BeautifulSoup(demo, 'html.parser')

>>> for link in soup.find_all('a'):

...     print(link.get('href'))

...

http://www.icourse163.org/course/BIT-268001

http://www.icourse163.org/course/BIT-1001870001

Methods of bs4.BeautifulSoup.

>>> type(soup)

<class 'bs4.BeautifulSoup'>

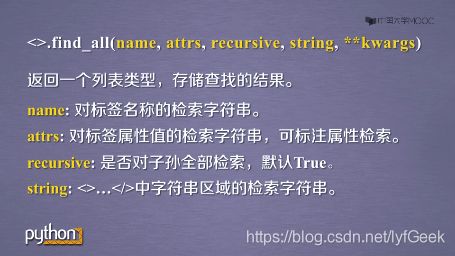

def find_all(self, name=None, attrs={}, recursive=True, text=None, limit=None, **kwargs):

Returns a list containing the search results.

>>> soup.find_all('a')

[<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>, <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>]

>>> soup.find_all(['a', 'b'])

[<b>The demo python introduces several python courses.</b>, <a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>, <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>]

>>> for tag in soup.find_all(True):

...     print(tag.name)

...

html

head

title

body

p

b

p

a

a

Using the regular expression library.

>>> import re

>>> for tag in soup.find_all(re.compile('b')):

...     print(tag.name)

...

body

b
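The output above includes `body` as well as `b`, which suggests the pattern is applied as a search anywhere in the tag name rather than as a whole-name match. The sketch below reproduces that with `re` alone on the list of tag names printed earlier; that `find_all` uses `search()` internally is an assumption inferred from this output.

```python
import re

# Tag names of the demo page, in document order.
tag_names = ['html', 'head', 'title', 'body', 'p', 'b', 'p', 'a', 'a']

pattern = re.compile('b')

# search() succeeds if 'b' occurs anywhere in the name.
matched = [name for name in tag_names if pattern.search(name)]

# fullmatch() succeeds only if the whole name is 'b'.
exact = [name for name in tag_names if pattern.fullmatch(name)]

print(matched)
print(exact)
```

To match only the `b` tag with a pattern, anchoring it as `re.compile('^b$')` gives the same result as the whole-name match here.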

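- name.

A search string matched against tag names.

- attrs.

A search string matched against tag attribute values; a specific attribute can be named.

<p> tags with the attribute value "course".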

>>> soup.find_all('p', 'course')

[<p class="course">Python is a wonderful general-purpose programming language. You can learn Python from novice to professional by tracking the following courses:

<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a> and <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>.</p>]

Tags whose id is link1.

>>> soup.find_all(id='link1')

[<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>]

>>> soup.find_all(id='link')

[]

With a regular expression, partial matches are found.

>>> import re

>>> soup.find_all(id=re.compile('link'))

[<a class="py1" href="http://www.icourse163.org/course/BIT-268001" id="link1">Basic Python</a>, <a class="py2" href="http://www.icourse163.org/course/BIT-1001870001" id="link2">Advanced Python</a>]

- recursive.

Whether to search all descendants. Defaults to True.

- string.

A search string matched against the string content between <>…</> tags.

>>> soup.find_all(string = 'Basic Python')

['Basic Python']

>>> import re

>>> soup.find_all(string = re.compile('python'))

['This is a python demo page', 'The demo python introduces several python courses.']

Extended methods.

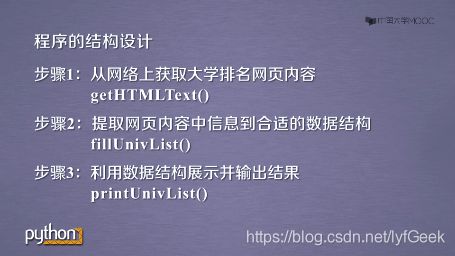

Example: a focused crawler for Chinese university rankings.

http://www.zuihaodaxue.com/zuihaodaxuepaiming2019.html

- Program analysis.

Use a two-dimensional list.

import requests

from bs4 import BeautifulSoup

import bs4

def getHTMLText(url):

    try:

        r = requests.get(url, timeout=30)

        r.raise_for_status()

        r.encoding = r.apparent_encoding

        return r.text

    except:

        return ''

def fillUnivList(ulist, html):

    soup = BeautifulSoup(html, 'html.parser')

    for tr in soup.find('tbody').children:

        if isinstance(tr, bs4.element.Tag):

            tds = tr('td')

            ulist.append([tds[0].string, tds[1].string, tds[2].string])

def printUnivList(ulist, num):

    print('{:^10}\t{:^6}\t{:^10}'.format('排名', '学校名称', '总分'))

    for i in range(num):

        u = ulist[i]

        print('{:^10}\t{:^6}\t{:^10}'.format(u[0], u[1], u[2]))

    print('Sum' + str(num))

def main():

    unifo = []

    url = 'http://www.zuihaodaxue.com/zuihaodaxuepaiming2019.html'

    html = getHTMLText(url)

    fillUnivList(unifo, html)

    printUnivList(unifo, 20)

main()

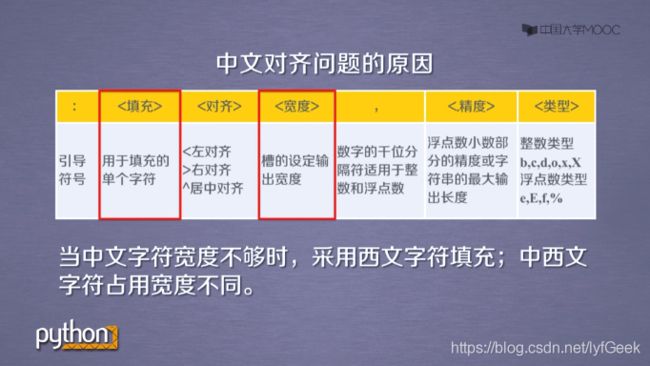

def printUnivList(ulist, num):

    # print('{:^10}\t{:^6}\t{:^10}'.format('排名', '学校名称', '总分'))

    tplt = '{0:^10}\t{1:{3}^10}\t{2:^10}'

    # {3} takes the fourth argument of tplt.format() (index 3) as the fill character.

    # chr(12288) is the full-width space, so Chinese names stay aligned.

    print(tplt.format('排名', '学校名称', '总分', chr(12288)))

    for i in range(num):

        u = ulist[i]

        print(tplt.format(u[0], u[1], u[2], chr(12288)))

    print('Sum' + str(num))
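The fill trick above can be checked in isolation. The following is a minimal sketch, assuming a made-up row ('1', '清华大学', '94.6') rather than real ranking data; it shows that the nested {3} field pads the name column with full-width spaces up to a width of 10.

```python
# Minimal sketch of the full-width fill technique; the row values are illustrative only.
FULLWIDTH_SPACE = chr(12288)  # U+3000, the full-width (ideographic) space

tplt = '{0:^10}\t{1:{3}^10}\t{2:^10}'

# The fourth argument (index 3) is substituted into the format spec as the fill character.
row = tplt.format('1', '清华大学', '94.6', FULLWIDTH_SPACE)

# The middle column is the second tab-separated field.
name_field = row.split('\t')[1]

print(repr(name_field))
print(len(name_field))
```

Because the padding characters are as wide as the Chinese characters themselves, columns line up in a terminal that uses a CJK-aware monospace font.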

Projects

Hands-on projects A/B.

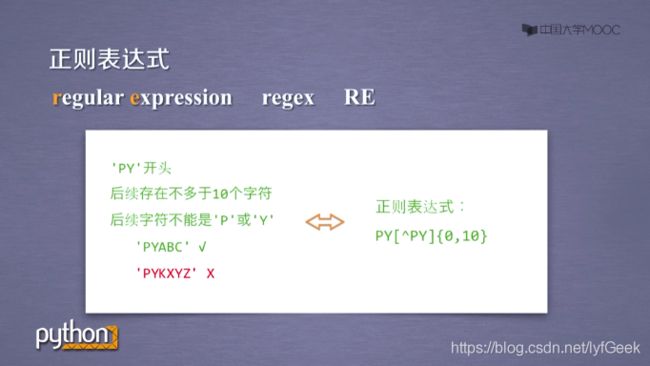

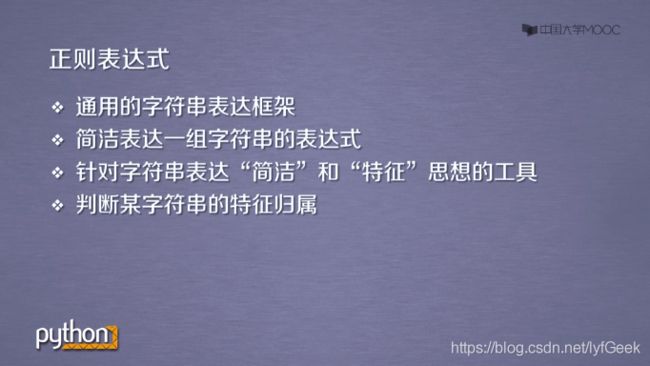

Regular expressions (Re): one line is worth a thousand words.

Regular expressions in detail.

Extracting key information from pages.

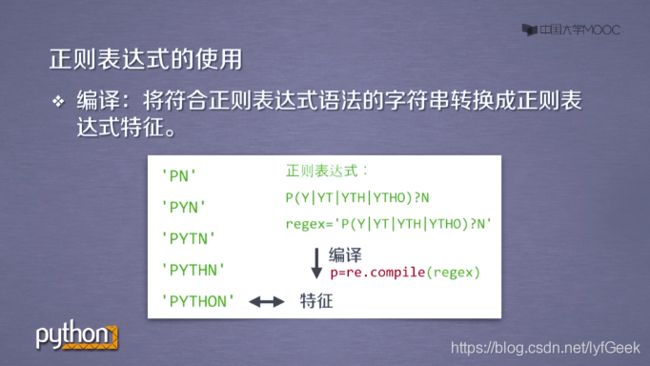

Key point: before it is compiled (compile), a regular expression is just a string.

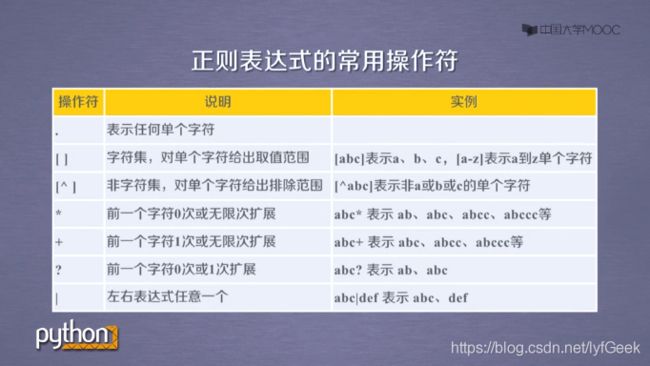

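Regular expression syntax.

Regular expression examples.

- P(Y|YT|YTH|YTHO)?N

'PN', 'PYN', 'PYTN', 'PYTHN', 'PYTHON'

- PYTHON+

'PYTHON', 'PYTHONN', 'PYTHONNN', …

- PY[TH]ON

'PYTON', 'PYHON'

- PY[^TH]?ON

'PYON', 'PYaON', 'PYbON', 'PYcON', …

- PY{0,3}N

'PN', 'PYN', 'PYYN', 'PYYYN'

- ^[A-Za-z]+$

A string made up only of the 26 letters.

- ^[A-Za-z0-9]+$

A string made up only of the 26 letters and digits.

- ^-?\d+$

A string in integer form.

- ^[0-9]*[1-9][0-9]*$

A string in positive-integer form.

- [1-9]\d{5}

A postal code in mainland China, 6 digits.

- [\u4e00-\u9fa5]

Matches Chinese characters.

- \d{3}-\d{8}|\d{4}-\d{7}

A domestic phone number, e.g. 010-68913536.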
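The example patterns can be verified mechanically. This is a small sketch, assuming re.fullmatch as the checking tool (the notes themselves do not use it); the bounded repetition is written in Python's {0,3} form, which is the syntax the re module accepts.

```python
import re

# Each pattern is paired with sample strings it is expected to match in full.
cases = {
    r'P(Y|YT|YTH|YTHO)?N': ['PN', 'PYN', 'PYTN', 'PYTHN', 'PYTHON'],
    r'PYTHON+': ['PYTHON', 'PYTHONN', 'PYTHONNN'],
    r'PY[TH]ON': ['PYTON', 'PYHON'],
    r'PY[^TH]?ON': ['PYON', 'PYaON', 'PYbON'],
    r'PY{0,3}N': ['PN', 'PYN', 'PYYN', 'PYYYN'],
}

# fullmatch succeeds only when the whole string matches the pattern.
results = {
    pattern: all(re.fullmatch(pattern, s) for s in samples)
    for pattern, samples in cases.items()
}

for pattern, ok in results.items():
    print(pattern, ok)

# A character class matches exactly one character, so 'PYTHON' is rejected here.
print(re.fullmatch(r'PY[TH]ON', 'PYTHON'))
```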

Analysis of a regular expression that matches an IP address.

An IP address has 4 segments, each in the range 0~255.

\d+\.\d+\.\d+\.\d+

\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}

0-99: [1-9]?\d

100-199: 1\d{2}

200-249: 2[0-4]\d

250-255: 25[0-5]

(([1-9]?\d|1\d{2}|2[0-4]\d|25[0-5])\.){3}([1-9]?\d|1\d{2}|2[0-4]\d|25[0-5])
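The segment-by-segment analysis can be assembled into a checker. This is a sketch under two assumptions: the dots are escaped as \. so they match only a literal dot, and the helper name is_ipv4 is hypothetical, chosen for this illustration.

```python
import re

# One segment: 0-99, 100-199, 200-249 or 250-255.
OCTET = r'([1-9]?\d|1\d{2}|2[0-4]\d|25[0-5])'

# Three "segment + dot" groups followed by a final segment.
IPV4 = re.compile(r'(' + OCTET + r'\.){3}' + OCTET)

def is_ipv4(text):
    """Return True when the whole string is a dotted IPv4 address (hypothetical helper)."""
    return IPV4.fullmatch(text) is not None

print(is_ipv4('192.168.0.1'))
print(is_ipv4('256.1.1.1'))
print(is_ipv4('1.2.3'))
```

Using fullmatch matters: with search, a string such as '256.1.1.1' would still contain a matching substring.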

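The re library.

Part of the Python standard library.

The raw string type.

The re library uses raw strings to write regular expressions: r'text'.

e.g. r'[1-9]\d{5}'

A raw string is a string in which the backslash is not treated as an escape character.

An ordinary string also works, but it is more cumbersome because every backslash must be doubled.

e.g. '[1-9]\\d{5}'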
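A quick check makes the difference concrete. The sketch below only uses the standard library and shows that the raw form and the doubled-backslash form are the same string, so either can be handed to re.

```python
import re

raw = r'[1-9]\d{5}'       # raw string: the backslash is kept as written
plain = '[1-9]\\d{5}'     # ordinary string: the backslash has to be doubled

same = raw == plain
print(same)

# In a raw string, \n is two characters (a backslash and 'n'), not a newline.
raw_newline_length = len(r'\n')
print(raw_newline_length)

# Both spellings behave identically as patterns.
found = re.search(plain, 'BIT 100081').group(0)
print(found)
```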

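def search(pattern, string, flags=0):

    """Scan through string looking for a match to the pattern, returning

    a Match object, or None if no match was found."""

    return _compile(pattern, flags).search(string)

Searches a string for the first position where the regular expression matches and returns a match object.

- pattern. The regular expression, as a string or a raw string.

- string. The string to be matched.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> match = re.search(r'[1-9]\d{5}', 'BIT 100081')

>>> if match:

...     print(match.group(0))

...

100081

>>>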

def match(pattern, string, flags=0):

    """Try to apply the pattern at the start of the string, returning

    a Match object, or None if no match was found."""

    return _compile(pattern, flags).match(string)

Matches the regular expression starting from the beginning of a string and returns a match object, or None when the start of the string does not match.

- pattern. The regular expression, as a string or a raw string.

- string. The string to be matched.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> match = re.match(r'[1-9]\d{5}', 'BIT 100081')

>>> if match:

...     match.group(0)

...

>>> match.group(0)

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

AttributeError: 'NoneType' object has no attribute 'group'

>>>

>>> match = re.match(r'[1-9]\d{5}', '100081 BIT')

>>> if match:

...     print(match.group(0))

...

100081

def findall(pattern, string, flags=0):

    """Return a list of all non-overlapping matches in the string.

    If one or more capturing groups are present in the pattern, return

    a list of groups; this will be a list of tuples if the pattern

    has more than one group.

    Empty matches are included in the result."""

    return _compile(pattern, flags).findall(string)

Searches a string and returns all matching substrings as a list.

- pattern. The regular expression, as a string or a raw string.

- string. The string to be matched.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> ls = re.findall(r'[1-9]\d{5}', 'BIT100081 TSU100084')

>>> ls

['100081', '100084']

def split(pattern, string, maxsplit=0, flags=0):

    """Split the source string by the occurrences of the pattern,

    returning a list containing the resulting substrings. If

    capturing parentheses are used in pattern, then the text of all

    groups in the pattern are also returned as part of the resulting

    list. If maxsplit is nonzero, at most maxsplit splits occur,

    and the remainder of the string is returned as the final element

    of the list."""

    return _compile(pattern, flags).split(string, maxsplit)

Splits a string at every match of the regular expression and returns a list.

- pattern. The regular expression, as a string or a raw string.

- string. The string to be matched.

- maxsplit. The maximum number of splits; the remainder is returned as the last element.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> re.split(r'[1-9]\d{5}', 'BIT100081 TSU100084')

['BIT', ' TSU', '']

>>> re.split(r'[1-9]\d{5}', 'BIT100081 TSU100084', maxsplit=1)

['BIT', ' TSU100084']

def finditer(pattern, string, flags=0):

    """Return an iterator over all non-overlapping matches in the

    string. For each match, the iterator returns a Match object.

    Empty matches are included in the result."""

    return _compile(pattern, flags).finditer(string)

Searches a string and returns an iterator over the matches; each element is a match object.

- pattern. The regular expression, as a string or a raw string.

- string. The string to be matched.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> for m in re.finditer(r'[1-9]\d{5}', 'BIT100081 TSU100084'):

...     if m:

...         print(m.group(0))

...

100081

100084

def sub(pattern, repl, string, count=0, flags=0):

    """Return the string obtained by replacing the leftmost

    non-overlapping occurrences of the pattern in string by the

    replacement repl. repl can be either a string or a callable;

    if a string, backslash escapes in it are processed. If it is

    a callable, it's passed the Match object and must return

    a replacement string to be used."""

    return _compile(pattern, flags).sub(repl, string, count)

Replaces every substring that matches the regular expression and returns the resulting string.

- pattern. The regular expression, as a string or a raw string.

- repl. The string that replaces each match.

- string. The string to be matched.

- count. The maximum number of replacements.

- flags. Control flags applied when the regular expression is used.

>>> import re

>>> re.sub(r'[1-9]\d{5}', ':zipcode', 'BIT100081 TSU100084')

'BIT:zipcode TSU:zipcode'
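The count parameter and the callable form of repl mentioned in the docstring are easy to miss. Here is a minimal sketch of both; the mask function is a hypothetical helper written for this illustration.

```python
import re

text = 'BIT100081 TSU100084'

# count=1 replaces only the leftmost match.
first_only = re.sub(r'[1-9]\d{5}', ':zipcode', text, count=1)
print(first_only)

def mask(m):
    """Receive the Match object and return the replacement text (hypothetical helper)."""
    code = m.group(0)
    return code[:2] + '****'

# When repl is callable, it is invoked once per match.
masked = re.sub(r'[1-9]\d{5}', mask, text)
print(masked)
```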

Compiling with re.

def compile(pattern, flags=0):

    "Compile a regular expression pattern, returning a Pattern object."

    return _compile(pattern, flags)

Compiles the string form of a regular expression into a regular expression object.

regex = re.compile(r'[1-9]\d{5}')

The re Match object.

>>> import re

>>> match = re.search(r'[1-9]\d{5}', 'BIT 100081')

>>> if match:

...     print(match.group(0))

...

100081

>>> type(match)

<class 're.Match'>

Match object attributes.

Match object methods.

>>> import re

>>> m = re.search(r'[1-9]\d{5}', 'BIT100081 TSU100084')

>>> m.string

'BIT100081 TSU100084'

>>> m.re

re.compile('[1-9]\\d{5}')

>>> m.pos

0

>>> m.endpos

19

>>> m.group(0)

'100081'

>>> m.start()

3

>>> m.end()

9

>>> m.span()

(3, 9)
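The same attributes and methods also work with a compiled pattern and with capturing groups. The sketch below assumes a two-group pattern (three capital letters followed by a postal code) invented for this illustration.

```python
import re

# Compile once, reuse many times. Group 1 is the prefix, group 2 the postal code.
pattern = re.compile(r'([A-Z]{3})([1-9]\d{5})')

m = pattern.search('BIT100081 TSU100084')

whole = m.group(0)     # the entire match
prefix = m.group(1)    # first capturing group
zipcode = m.group(2)   # second capturing group
zip_span = m.span(2)   # (start, end) of the second group
print(whole, prefix, zipcode, zip_span)

# With more than one group, findall returns a list of tuples.
all_pairs = pattern.findall('BIT100081 TSU100084')
print(all_pairs)
```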

Greedy matching and minimal matching in the re library.
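By default the re library matches greedily, returning the longest possible substring; adding ? after a quantifier switches it to minimal matching. A short sketch, using the sample string 'PYANBNCNDN' as an assumed input:

```python
import re

text = 'PYANBNCNDN'

# Greedy: .* grabs as much as possible, so the match runs to the last N.
greedy = re.search(r'PY.*N', text).group(0)

# Minimal: .*? grabs as little as possible, so the match stops at the first N.
minimal = re.search(r'PY.*?N', text).group(0)

print(greedy)
print(minimal)
```

The same ? suffix applies to the other quantifiers: +?, ?? and {m,n}?.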

A focused crawler for comparing Taobao product prices.

Search URL.

https://s.taobao.com/search?q=手机&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306

Second page.

https://s.taobao.com/search?q=%E6%89%8B%E6%9C%BA&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306&bcoffset=3&ntoffset=3&p4ppushleft=1%2C48&s=44

Third page.

https://s.taobao.com/search?q=%E6%89%8B%E6%9C%BA&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.2017.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20170306&bcoffset=0&ntoffset=6&p4ppushleft=1%2C48&s=88

- https://s.taobao.com/robots.txt

User-agent: *

Disallow: /

import re

import requests

def getHTMLText(url):

    try:

        r = requests.get(url, timeout=30)

        r.raise_for_status()

        r.encoding = r.apparent_encoding

        return r.text

    except:

        return ''

def parsePage(ilt, html):

    try:

        # Each match looks like "view_price":"1999.00" or "raw_title":"...".

        plt = re.findall(r'\"view_price\":\"[\d.]*\"', html)

        tlt = re.findall(r'\"raw_title\":\".*?\"', html)

        for i in range(len(plt)):

            # eval() strips the surrounding double quotes from the value.

            price = eval(plt[i].split(':')[1])

            title = eval(tlt[i].split(':')[1])

            ilt.append([price, title])

    except:

        print('')

def printGoodsList(ilt):

    tplt = '{:4}\t{:8}\t{:16}'

    print(tplt.format('序号', '价格', '商品名称'))

    count = 0

    for g in ilt:

        count = count + 1

        print(tplt.format(count, g[0], g[1]))

def main():

    goods = '手机'

    depth = 2

    start_url = 'https://s.taobao.com/search?q=' + goods

    infoList = []

    for i in range(depth):

        try:

            # Each result page holds 44 items, so page i starts at s = 44 * i.

            url = start_url + '&s=' + str(44 * i)

            html = getHTMLText(url)

            parsePage(infoList, html)

        except:

            continue

    printGoodsList(infoList)

if __name__ == '__main__':

    main()
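The eval() calls in the parsing step only strip the quotes around each value, and running eval on scraped text is risky. One possible alternative, sketched here with capturing groups, avoids eval entirely. The sample string and the helper name parse_items are assumptions for illustration, not real Taobao output.

```python
import re

# Hypothetical page fragment shaped like the fields the crawler looks for.
sample = ('{"raw_title":"Phone A","view_price":"1999.00"},'
          '{"raw_title":"Phone B","view_price":"2599.50"}')

def parse_items(html):
    """Return [price, title] pairs using capturing groups instead of eval (hypothetical helper)."""
    prices = re.findall(r'"view_price":"([\d.]*)"', html)
    titles = re.findall(r'"raw_title":"(.*?)"', html)
    # zip stops at the shorter list, so a missing title cannot raise IndexError.
    return [[price, title] for price, title in zip(prices, titles)]

items = parse_items(sample)
print(items)
```

With a capturing group, findall returns only the text inside the parentheses, so no quote stripping is needed afterwards.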

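A focused crawler for stock data.

- Sina Finance stocks. https://finance.sina.com.cn/stock/

Stock information is loaded through JavaScript, so it is not a good fit for this crawler for now.

- Baidu Gupiaotong. https://gupiao.baidu.com/stock/(gg)

- East Money.

https://www.eastmoney.com/

https://quote.eastmoney.com/center/gridlist.html#hs_a_board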

import re

import traceback

import requests

from bs4 import BeautifulSoup

def getHTMLText(url):

    try:

        r = requests.get(url, timeout=30)

        r.raise_for_status()

        r.encoding = r.apparent_encoding

        return r.text

    except:

        return ''

def getStockList(lst, stockURL):

    html = getHTMLText(stockURL)

    soup = BeautifulSoup(html, 'html.parser')

    a = soup.find_all('a')

    for i in a:

        try:

            # attrs is a dict, so it is indexed rather than called.

            href = i.attrs['href']

            lst.append(re.findall(r'[s][hz]\d{6}', href)[0])

        except:

            continue

    return ''

def getStockInfo(lst, stockURL, fpath):

    for stock in lst:

        # The page for one stock is <base URL><stock code>.html.

        url = stockURL + stock + '.html'

        html = getHTMLText(url)

        try:

            if html == '':

                continue

            infoDict = {}

            soup = BeautifulSoup(html, 'html.parser')

            stockInfo = soup.find('div', attrs={'class': 'stock-bets'})

            keyList = stockInfo.find_all('dt')

            valueList = stockInfo.find_all('dd')

            for i in range(len(keyList)):

                key = keyList[i].text

                val = valueList[i].text

                infoDict[key] = val

            with open(fpath, 'a', encoding='utf-8') as f:

                f.write(str(infoDict) + '\n')

        except:

            traceback.print_exc()

            continue

    return ''

def main():

    stock_list_url = 'https://quote.eastmoney.com/center/gridlist.html#hs_a_board'

    stock_info_url = 'https://gupiao.baidu.com/stock/'

    output_file = './BaiduStockInfo.txt'

    slist = []

    getStockList(slist, stock_list_url)

    getStockInfo(slist, stock_info_url, output_file)

if __name__ == '__main__':

    main()

Optimizing the program's speed.

def getHTMLText(url, code='utf-8'):

    try:

        r = requests.get(url, timeout=30)

        r.raise_for_status()

        r.encoding = code

        # r.encoding = r.apparent_encoding

        # r.encoding is read directly from the response headers.

        # r.apparent_encoding analyzes the text content, which is slower.

        return r.text

    except:

        return ''

def getStockList(lst, stockURL):

    html = getHTMLText(stockURL, 'GB2312')

    soup = BeautifulSoup(html, 'html.parser')

    a = soup.find_all('a')

    for i in a:

        try:

            # attrs is a dict, so it is indexed rather than called.

            href = i.attrs['href']

            lst.append(re.findall(r'[s][hz]\d{6}', href)[0])

        except:

            continue

    return ''

Progress bar effect.

def getStockInfo(lst, stockURL, fpath):

    count = 0  # Number of stocks processed so far, for the progress display.

    for stock in lst:

        url = stockURL + stock + '.html'

        html = getHTMLText(url)

        try:

            if html == '':

                continue

            infoDict = {}

            soup = BeautifulSoup(html, 'html.parser')

            stockInfo = soup.find('div', attrs={'class': 'stock-bets'})

            keyList = stockInfo.find_all('dt')

            valueList = stockInfo.find_all('dd')

            for i in range(len(keyList)):

                key = keyList[i].text

                val = valueList[i].text

                infoDict[key] = val

            with open(fpath, 'a', encoding='utf-8') as f:

                f.write(str(infoDict) + '\n')

            count = count + 1

            # \r moves the cursor back to the start of the current line.

            print('\r当前进度:{:.2f}%'.format(count * 100 / len(lst)), end='')

        except:

            count = count + 1

            # \r moves the cursor back to the start of the current line.

            print('\r当前进度:{:.2f}%'.format(count * 100 / len(lst)), end='')

            traceback.print_exc()

            continue

    return ''
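The progress display relies only on '\r' and end=''. A stripped-down sketch of the same idea, with no network access, might look like this; progress_line and run are hypothetical names, and the task list is a stand-in for the stock list.

```python
def progress_line(done, total):
    """Build one progress line; the leading \\r returns the cursor to the line start."""
    return '\r' + 'Progress: {:.2f}%'.format(done * 100 / total)

def run(tasks):
    """Pretend to process each task, overwriting the same terminal line every time."""
    for count, _task in enumerate(tasks, start=1):
        # end='' suppresses the newline so the next print overwrites this one.
        print(progress_line(count, len(tasks)), end='', flush=True)
    print()  # Finish with a newline so later output starts on a fresh line.

run(['sh600000', 'sh600004', 'sz000001', 'sz000002'])
```

flush=True is added here because some terminals buffer output that has no trailing newline.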

Scrapy

Installation.

geek@ubuntu:~$ pip3 install scrapy

Collecting scrapy

Downloading Scrapy-2.1.0-py2.py3-none-any.whl (239 kB)

|████████████████████████████████| 239 kB 367 kB/s

Collecting cssselect>=0.9.1

Downloading cssselect-1.1.0-py2.py3-none-any.whl (16 kB)

Collecting Twisted>=17.9.0

Downloading Twisted-20.3.0.tar.bz2 (3.1 MB)

|████████████████████████████████| 3.1 MB 5.3 MB/s

Requirement already satisfied: cryptography>=2.0 in /usr/lib/python3/dist-packages (from scrapy) (2.8)

Collecting pyOpenSSL>=16.2.0

Downloading pyOpenSSL-19.1.0-py2.py3-none-any.whl (53 kB)

|████████████████████████████████| 53 kB 2.9 MB/s

Collecting w3lib>=1.17.0

Downloading w3lib-1.22.0-py2.py3-none-any.whl (20 kB)

Collecting lxml>=3.5.0

Downloading lxml-4.5.1-cp38-cp38-manylinux1_x86_64.whl (5.4 MB)

|████████████████████████████████| 5.4 MB 8.2 MB/s

Collecting service-identity>=16.0.0

Downloading service_identity-18.1.0-py2.py3-none-any.whl (11 kB)

Collecting PyDispatcher>=2.0.5

Downloading PyDispatcher-2.0.5.tar.gz (34 kB)

Collecting zope.interface>=4.1.3

Downloading zope.interface-5.1.0-cp38-cp38-manylinux2010_x86_64.whl (243 kB)

|████████████████████████████████| 243 kB 5.1 MB/s

Collecting protego>=0.1.15

Downloading Protego-0.1.16.tar.gz (3.2 MB)

|████████████████████████████████| 3.2 MB 7.4 MB/s

Collecting queuelib>=1.4.2

Downloading queuelib-1.5.0-py2.py3-none-any.whl (13 kB)

Collecting parsel>=1.5.0

Downloading parsel-1.6.0-py2.py3-none-any.whl (13 kB)

Collecting Automat>=0.3.0

Downloading Automat-20.2.0-py2.py3-none-any.whl (31 kB)

Collecting PyHamcrest!=1.10.0,>=1.9.0

Downloading PyHamcrest-2.0.2-py3-none-any.whl (52 kB)

|████████████████████████████████| 52 kB 3.4 MB/s

Collecting attrs>=19.2.0

Downloading attrs-19.3.0-py2.py3-none-any.whl (39 kB)

Collecting constantly>=15.1

Downloading constantly-15.1.0-py2.py3-none-any.whl (7.9 kB)

Collecting hyperlink>=17.1.1

Downloading hyperlink-19.0.0-py2.py3-none-any.whl (38 kB)

Collecting incremental>=16.10.1

Using cached incremental-17.5.0-py2.py3-none-any.whl (16 kB)

Requirement already satisfied: six>=1.5.2 in /usr/lib/python3/dist-packages (from pyOpenSSL>=16.2.0->scrapy) (1.14.0)

Collecting pyasn1

Downloading pyasn1-0.4.8-py2.py3-none-any.whl (77 kB)

|████████████████████████████████| 77 kB 7.1 MB/s

Collecting pyasn1-modules

Downloading pyasn1_modules-0.2.8-py2.py3-none-any.whl (155 kB)

|████████████████████████████████| 155 kB 5.8 MB/s

Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from zope.interface>=4.1.3->scrapy) (45.2.0)

Requirement already satisfied: idna>=2.5 in /usr/lib/python3/dist-packages (from hyperlink>=17.1.1->Twisted>=17.9.0->scrapy) (2.8)

Building wheels for collected packages: Twisted, PyDispatcher, protego

Building wheel for Twisted (setup.py) ... done

Created wheel for Twisted: filename=Twisted-20.3.0-cp38-cp38-linux_x86_64.whl size=3085348 sha256=fbd3e6b381fbf18cd9ece9dab6a9bae4be99c4e31e951c8c0279aea9038d5bcc

Stored in directory: /home/geek/.cache/pip/wheels/f2/36/1b/99fe6d339e1559e421556c69ad7bc8c869145e86a756c403f4

Building wheel for PyDispatcher (setup.py) ... done

Created wheel for PyDispatcher: filename=PyDispatcher-2.0.5-py3-none-any.whl size=11515 sha256=4bc491a529e781cfee92188ad6afd97eeb723c5842c4d358bf617158db062eec

Stored in directory: /home/geek/.cache/pip/wheels/d1/d7/61/11b5b370ee487d38b5408ecb7e0257db9107fa622412cbe2ff

Building wheel for protego (setup.py) ... done

Created wheel for protego: filename=Protego-0.1.16-py3-none-any.whl size=7765 sha256=3796572c76e5dfadb9f26c53ba170131e3155076aa07b092a49732e561133c72

Stored in directory: /home/geek/.cache/pip/wheels/91/64/36/bd0d11306cb22a78c7f53d603c7eb74ebb6c211703bc40b686

Successfully built Twisted PyDispatcher protego

Installing collected packages: cssselect, attrs, Automat, PyHamcrest, constantly, hyperlink, incremental, zope.interface, Twisted, pyOpenSSL, w3lib, lxml, pyasn1, pyasn1-modules, service-identity, PyDispatcher, protego, queuelib, parsel, scrapy

Successfully installed Automat-20.2.0 PyDispatcher-2.0.5 PyHamcrest-2.0.2 Twisted-20.3.0 attrs-19.3.0 constantly-15.1.0 cssselect-1.1.0 hyperlink-19.0.0 incremental-17.5.0 lxml-4.5.1 parsel-1.6.0 protego-0.1.16 pyOpenSSL-19.1.0 pyasn1-0.4.8 pyasn1-modules-0.2.8 queuelib-1.5.0 scrapy-2.1.0 service-identity-18.1.0 w3lib-1.22.0 zope.interface-5.1.0

geek@ubuntu:~$

geek@ubuntu:~$ scrapy -h

Scrapy 2.1.0 - no active project

Usage:

scrapy <command> [options] [args]

Available commands:

bench Run quick benchmark test

fetch Fetch a URL using the Scrapy downloader

genspider Generate new spider using pre-defined templates

runspider Run a self-contained spider (without creating a project)

settings Get settings values

shell Interactive scraping console

startproject Create new project

version Print Scrapy version

view Open URL in browser, as seen by Scrapy

[ more ] More commands available when run from project directory

Use "scrapy <command> -h" to see more info about a command

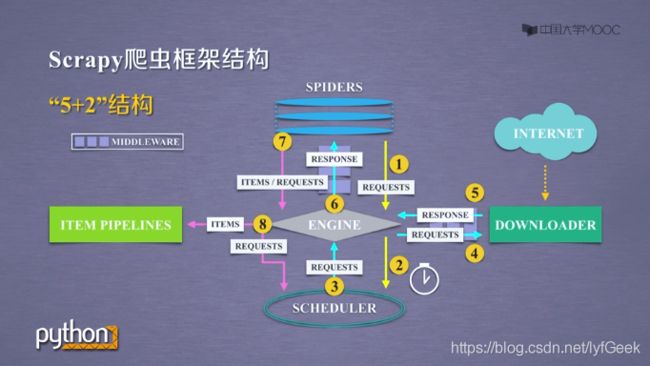

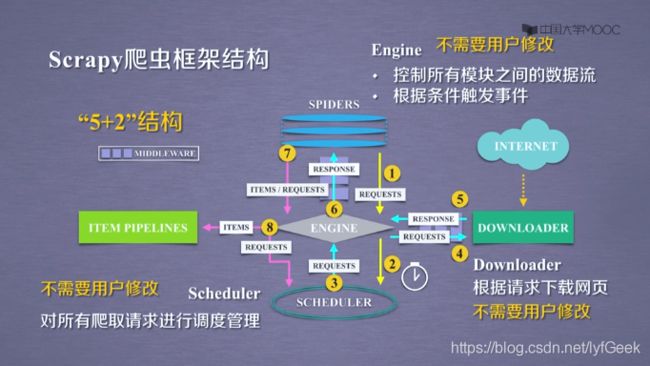

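Scrapy is not a function library but a crawler framework.

A crawler framework is a software structure together with a set of functional components for implementing crawlers.

A crawler framework is a semi-finished product that helps users build professional web crawlers.

- Downloader Middleware.

Purpose: user-configurable control over the traffic among the Engine, Scheduler and Downloader.

Functions: modify, drop, or add requests or responses.

Users can write configuration code.

- Spider.

Parses the responses (Response) returned by the Downloader.

Produces scraped items.

Produces additional crawl requests.

- Item Pipelines.

Processes the scraped items produced by the Spider in pipeline fashion.

Made up of a sequence of operations, like an assembly line; each operation is an Item Pipeline type.

Possible operations: cleaning, validating and de-duplicating the HTML data in scraped items, and storing the data in a database.

- Spider Middleware.

Purpose: reprocessing of requests and scraped items.

Functions: modify, drop, or add requests or scraped items.

Users can write configuration code.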

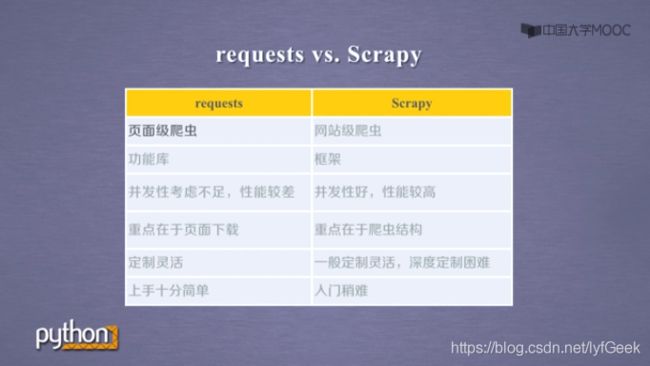

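Requests vs. Scrapy.

- Similarities.

Both can request and crawl pages; they are the two main technical routes for Python crawlers.

Both are highly usable, well documented, and easy to get started with.

Neither handles JavaScript, form submission, or CAPTCHAs out of the box (both can be extended).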

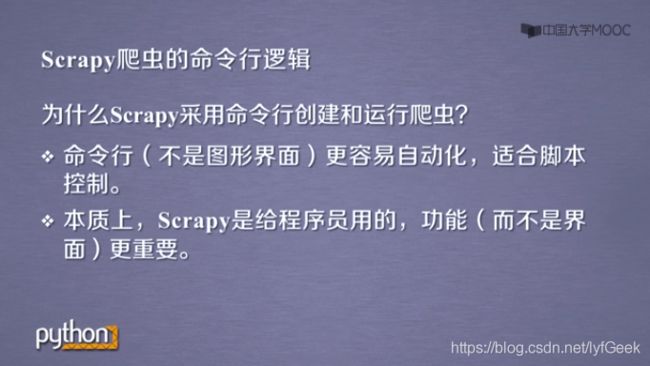

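The Scrapy command line.

Scrapy is a professional crawler framework designed for continuous operation, and it is operated through the Scrapy command line.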

geek@ubuntu:~$ scrapy

Scrapy 2.1.0 - no active project

Usage:

scrapy <command> [options] [args]

Available commands:

bench Run quick benchmark test

fetch Fetch a URL using the Scrapy downloader

genspider Generate new spider using pre-defined templates

runspider Run a self-contained spider (without creating a project)

settings Get settings values

shell Interactive scraping console

startproject Create new project

version Print Scrapy version

view Open URL in browser, as seen by Scrapy

[ more ] More commands available when run from project directory

Use "scrapy <command> -h" to see more info about a command

geek@ubuntu:~$ scrapy shell

2020-06-16 12:52:02 [scrapy.utils.log] INFO: Scrapy 2.1.0 started (bot: scrapybot)

2020-06-16 12:52:02 [scrapy.utils.log] INFO: Versions: lxml 4.5.1.0, libxml2 2.9.10, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.2 (default, Apr 27 2020, 15:53:34) - [GCC 9.3.0], pyOpenSSL 19.1.0 (OpenSSL 1.1.1f 31 Mar 2020), cryptography 2.8, Platform Linux-5.4.0-37-generic-x86_64-with-glibc2.29

2020-06-16 12:52:02 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor

2020-06-16 12:52:02 [scrapy.crawler] INFO: Overridden settings:

{

'DUPEFILTER_CLASS': 'scrapy.dupefilters.BaseDupeFilter',

'LOGSTATS_INTERVAL': 0}

2020-06-16 12:52:02 [scrapy.extensions.telnet] INFO: Telnet Password: e2ea5129402318e1

2020-06-16 12:52:02 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.memusage.MemoryUsage']

2020-06-16 12:52:02 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-06-16 12:52:02 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-06-16 12:52:02 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2020-06-16 12:52:02 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-06-16 12:52:03 [asyncio] DEBUG: Using selector: EpollSelector

[s] Available Scrapy objects:

[s] scrapy scrapy module (contains scrapy.Request, scrapy.Selector, etc)

[s] crawler <scrapy.crawler.Crawler object at 0x7f1f2f949100>

[s] item {

}

[s] settings <scrapy.settings.Settings object at 0x7f1f2f946e20>

[s] Useful shortcuts:

[s] fetch(url[, redirect=True]) Fetch URL and update local objects (by default, redirects are followed)

[s] fetch(req) Fetch a scrapy.Request and update local objects

[s] shelp() Shell help (print this help)

[s] view(response) View response in a browser

2020-06-16 12:52:03 [asyncio] DEBUG: Using selector: EpollSelector

In [1]:

Common commands.

Example.

geek@ubuntu:~/geek/py/scrapy_geek$ scrapy startproject python123demo

New Scrapy project 'python123demo', using template directory '/home/geek/.local/lib/python3.8/site-packages/scrapy/templates/project', created in:

/home/geek/geek/py/scrapy_geek/python123demo

You can start your first spider with:

cd python123demo

scrapy genspider example example.com

geek@ubuntu:~/geek/py/scrapy_geek$ ls

python123demo

geek@ubuntu:~/geek/py/scrapy_geek$ cd python123demo/

geek@ubuntu:~/geek/py/scrapy_geek/python123demo$ ls

python123demo scrapy.cfg

geek@ubuntu:~/geek/py/scrapy_geek/python123demo$ cd python123demo/

geek@ubuntu:~/geek/py/scrapy_geek/python123demo/python123demo$ ls

__init__.py middlewares.py __pycache__ spiders

items.py pipelines.py settings.py

geek@ubuntu:~/geek/py/scrapy_geek/python123demo/python123demo$ ll

total 32

drwxrwxr-x 4 geek geek 4096 Jun 16 12:57 ./

drwxrwxr-x 3 geek geek 4096 Jun 16 12:57 ../

-rw-rw-r-- 1 geek geek 0 Jun 16 11:41 __init__.py

-rw-rw-r-- 1 geek geek 294 Jun 16 12:57 items.py

-rw-rw-r-- 1 geek geek 3595 Jun 16 12:57 middlewares.py

-rw-rw-r-- 1 geek geek 287 Jun 16 12:57 pipelines.py

drwxrwxr-x 2 geek geek 4096 Jun 16 11:41 __pycache__/

-rw-rw-r-- 1 geek geek 3159 Jun 16 12:57 settings.py

drwxrwxr-x 3 geek geek 4096 Jun 16 11:41 spiders/

geek@ubuntu:~/geek/py/scrapy_geek$ tree

locales-launch: Data of en_US locale not found, generating, please wait...

.

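└── python123demo # Project root directory.

    ├── python123demo # User-defined Python code for the Scrapy framework.

    │   ├── __init__.py # Initialization script.

    │   ├── items.py # Items code template (to be subclassed).

    │   ├── middlewares.py # Middlewares code template (to be subclassed).

    │   ├── pipelines.py # Pipelines code template (to be subclassed).

    │   ├── __pycache__

    │   ├── settings.py # Configuration file for the Scrapy crawler.

    │   └── spiders # Directory of Spiders code templates (to be subclassed).

    │       ├── __init__.py # Initialization file, no changes needed.

    │       └── __pycache__ # Cache directory, no changes needed.

    └── scrapy.cfg # Deployment configuration file for the Scrapy crawler.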

5 directories, 7 files

geek@ubuntu:~/geek/py/scrapy_geek$ cd python123demo/

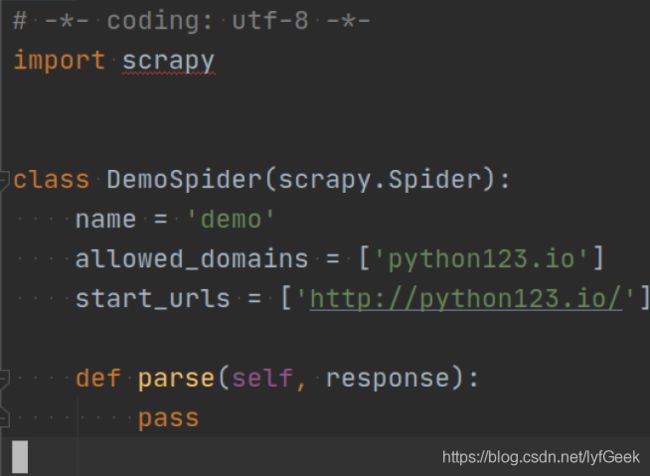

geek@ubuntu:~/geek/py/scrapy_geek/python123demo$ scrapy genspider demo python123.io

Created spider 'demo' using template 'basic' in module:

python123demo.spiders.demo

Modify the code.

# -*- coding: utf-8 -*-

import scrapy

class DemoSpider(scrapy.Spider):

    name = 'demo'

    # allowed_domains = ['python123.io']

    start_urls = ['http://python123.io/ws/demo.html']

    def parse(self, response):

        # Save the response body under the last path component of the URL.

        fname = response.url.split('/')[-1]

        with open(fname, 'wb') as f:

            f.write(response.body)

        self.log('Saved file %s.' % fname)

- Run.

geek@ubuntu:~/geek/py/scrapy_geek/python123demo$ ls

demo.py python123demo scrapy.cfg

geek@ubuntu:~/geek/py/scrapy_geek/python123demo$ scrapy crawl demo

2020-06-16 16:26:27 [scrapy.utils.log] INFO: Scrapy 2.1.0 started (bot: python123demo)

2020-06-16 16:26:27 [scrapy.utils.log] INFO: Versions: lxml 4.5.1.0, libxml2 2.9.10, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.2 (default, Apr 27 2020, 15:53:34) - [GCC 9.3.0], pyOpenSSL 19.1.0 (OpenSSL 1.1.1f 31 Mar 2020), cryptography 2.8, Platform Linux-5.4.0-37-generic-x86_64-with-glibc2.29

2020-06-16 16:26:27 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor

2020-06-16 16:26:27 [scrapy.crawler] INFO: Overridden settings:

{

'BOT_NAME': 'python123demo',

'NEWSPIDER_MODULE': 'python123demo.spiders',

'ROBOTSTXT_OBEY': True,

'SPIDER_MODULES': ['python123demo.spiders']}

2020-06-16 16:26:27 [scrapy.extensions.telnet] INFO: Telnet Password: 27d56211f5500ca4

2020-06-16 16:26:27 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.memusage.MemoryUsage',

'scrapy.extensions.logstats.LogStats']

2020-06-16 16:26:27 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-06-16 16:26:27 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-06-16 16:26:27 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2020-06-16 16:26:27 [scrapy.core.engine] INFO: Spider opened

2020-06-16 16:26:27 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2020-06-16 16:26:27 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-06-16 16:26:28 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (301) to <GET https://python123.io/robots.txt> from <GET http://python123.io/robots.txt>

2020-06-16 16:26:28 [scrapy.core.engine] DEBUG: Crawled (404) <GET https://python123.io/robots.txt> (referer: None)

2020-06-16 16:26:28 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (301) to <GET https://python123.io/> from <GET http://python123.io/>

2020-06-16 16:26:28 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://python123.io/> (referer: None)

2020-06-16 16:26:28 [scrapy.core.engine] INFO: Closing spider (finished)

2020-06-16 16:26:28 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{

'downloader/request_bytes': 868,

'downloader/request_count': 4,

'downloader/request_method_count/GET': 4,

'downloader/response_bytes': 56895,

'downloader/response_count': 4,

'downloader/response_status_count/200': 1,

'downloader/response_status_count/301': 2,

'downloader/response_status_count/404': 1,

'elapsed_time_seconds': 0.516382,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2020, 6, 16, 8, 26, 28, 440746),

'log_count/DEBUG': 4,

'log_count/INFO': 10,

'memusage/max': 51879936,

'memusage/startup': 51879936,

'response_received_count': 2,

'robotstxt/request_count': 1,

'robotstxt/response_count': 1,

'robotstxt/response_status_count/404': 1,

'scheduler/dequeued': 2,

'scheduler/dequeued/memory': 2,

'scheduler/enqueued': 2,

'scheduler/enqueued/memory': 2,

'start_time': datetime.datetime(2020, 6, 16, 8, 26, 27, 924364)}

2020-06-16 16:26:28 [scrapy.core.engine] INFO: Spider closed (finished)

- Full version of the code.

# -*- coding: utf-8 -*-

import scrapy

class DemoSpider(scrapy.Spider):

    name = 'demo'

    def start_requests(self):

        urls = [

            'http://python123.io/ws/demo.html'

        ]

        for url in urls:

            yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):

        # Save the response body under the last path component of the URL.

        fname = response.url.split('/')[-1]

        with open(fname, 'wb') as f:

            f.write(response.body)

        self.log('Saved file %s.' % fname)

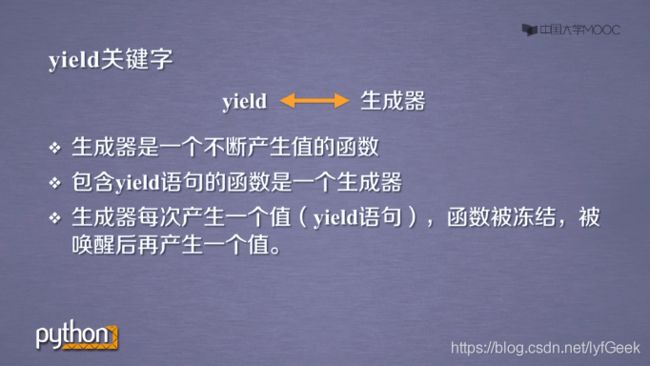

The yield keyword.

- Ordinary version.

Stores all the data in memory at once.

def square(n):

    ls = [i ** 2 for i in range(n)]

    return ls

- Generator version.

Produces one value at a time.

def gen(n):

    for i in range(n):

        yield i ** 2

def main():

    for i in gen(5):

        print(i, ' ', end='')

if __name__ == '__main__':

    main()
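The memory difference between the two versions can be observed directly. This is a rough sketch using sys.getsizeof, which reports only the size of the container object itself; the value 1000 is an arbitrary choice for illustration.

```python
import sys

def square(n):
    # Ordinary version: builds the whole list before returning.
    return [i ** 2 for i in range(n)]

def gen(n):
    # Generator version: produces one value each time it is resumed.
    for i in range(n):
        yield i ** 2

ls = square(1000)
g = gen(1000)

list_bytes = sys.getsizeof(ls)  # grows with the number of elements
gen_bytes = sys.getsizeof(g)    # stays small regardless of n
print(list_bytes, gen_bytes)

# Values are computed lazily, only when requested.
first_three = [next(g) for _ in range(3)]
total = sum(gen(5))
print(first_three, total)
```

This is why Scrapy spiders yield requests and items: the framework can pull them one at a time instead of waiting for a complete list.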

Scrapy steps.

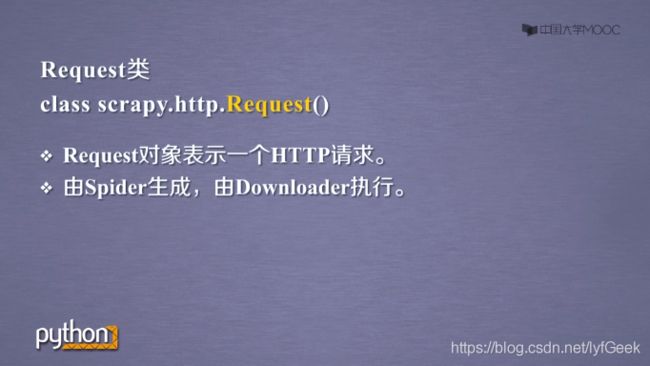

The scrapy.Request class.

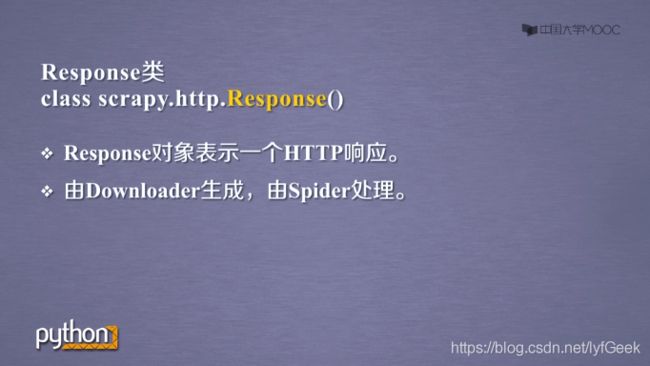

The scrapy.Response class.

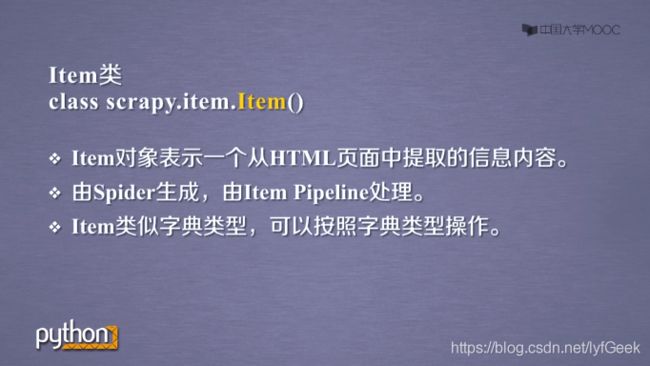

The Item class.

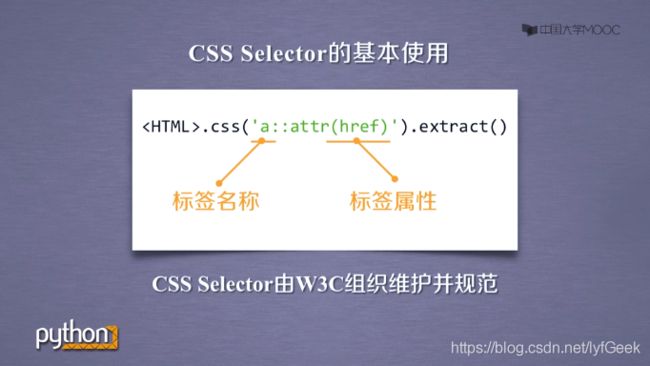

CSS Selector.

Example.

- Create the project and the Spider template.

geek@ubuntu:~/geek/py$ mkdir stock_geek

geek@ubuntu:~/geek/py$ cd stock_geek/

geek@ubuntu:~/geek/py/stock_geek$ ls

geek@ubuntu:~/geek/py/stock_geek$ scrapy startproject BaiduStocks

New Scrapy project 'BaiduStocks', using template directory '/home/geek/.local/lib/python3.8/site-packages/scrapy/templates/project', created in:

/home/geek/geek/py/stock_geek/BaiduStocks

You can start your first spider with:

cd BaiduStocks

scrapy genspider example example.com

geek@ubuntu:~/geek/py/stock_geek$ ls

BaiduStocks

geek@ubuntu:~/geek/py/stock_geek$ cd BaiduStocks/

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks$ ls

BaiduStocks scrapy.cfg

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks$ scrapy genspider stocks baidu.com

Created spider 'stocks' using template 'basic' in module:

BaiduStocks.spiders.stocks

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks$

- Modify the spiders/stocks.py file.

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks$ ls

BaiduStocks scrapy.cfg

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks$ cd BaiduStocks/

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks$ ls

__init__.py middlewares.py __pycache__ spiders

items.py pipelines.py settings.py

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks$ cd spiders/

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks/spiders$ ls

__init__.py __pycache__ stocks.py

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks/spiders$ cp stocks.py stocks.py.bak

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks/spiders$ vim stocks.py

# -*- coding: utf-8 -*-

import scrapy

class StocksSpider(scrapy.Spider):

    name = 'stocks'

    allowed_domains = ['baidu.com']

    start_urls = ['http://baidu.com/']

    def parse(self, response):

        pass

↓ ↓ ↓

# -*- coding: utf-8 -*-

import re

import scrapy

class StocksSpider(scrapy.Spider):

    name = 'stocks'

    # allowed_domains = ['baidu.com']

    start_urls = ['http://quote.eastmoney.com/stocklist.html']

    def parse(self, response):

        for href in response.css('a::attr(href)').extract():

            try:

                stock = re.findall(r'[s][hz]\d{6}', href)[0]

                url = 'https://gupiao.baidu.com/stock/' + stock + '.html'

                yield scrapy.Request(url, callback=self.parse_stock)

            except IndexError:

                # This link contains no stock code; skip it.

                continue

    def parse_stock(self, response):

        infoDict = {}

        stockInfo = response.css('.stock-bets')

        name = stockInfo.css('.bets-name').extract()[0]

        keyList = stockInfo.css('dt').extract()

        valueList = stockInfo.css('dd').extract()

        for i in range(len(keyList)):

            # Drop the leading '>' and the trailing '</dt>'.

            key = re.findall(r'>.*', keyList[i])[0][1:-5]

            try:

                # Drop the trailing '</dd>'.

                val = re.findall(r'\d+\.?.*', valueList[i])[0][0:-5]

            except:

                val = '--'

            infoDict[key] = val

        infoDict.update({

            '股票名称:': re.findall(r'\s.*\(', name)[0].split()[0] + re.findall(r'\>.*\<', name)[0][1:-1]})

        yield infoDict
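The slicing in parse_stock is easier to follow on a concrete fragment. The sketch below applies the same regular expressions to hypothetical dt and dd strings (the real page markup is not shown in these notes, so these inputs are assumptions); extract_key and extract_value are illustrative helper names.

```python
import re

# Hypothetical fragments shaped like the output of .css('dt').extract() and .css('dd').extract().
dt_html = '<dt>Open</dt>'
dd_html = '<dd>12.34</dd>'
dd_empty = '<dd>--</dd>'

def extract_key(dt):
    # r'>.*' matches from the first '>' to the end: '>Open</dt>'.
    # [1:-5] drops the leading '>' and the 5-character closing tag '</dt>'.
    return re.findall(r'>.*', dt)[0][1:-5]

def extract_value(dd):
    # r'\d+\.?.*' matches from the first digit to the end: '12.34</dd>'.
    found = re.findall(r'\d+\.?.*', dd)
    # Fall back to '--' when the cell holds no number.
    return found[0][0:-5] if found else '--'

key = extract_key(dt_html)
val = extract_value(dd_html)
missing = extract_value(dd_empty)
print(key, val, missing)
```

The fixed offsets work only because '</dt>' and '</dd>' are both five characters long; nested markup inside a cell would need a different approach.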

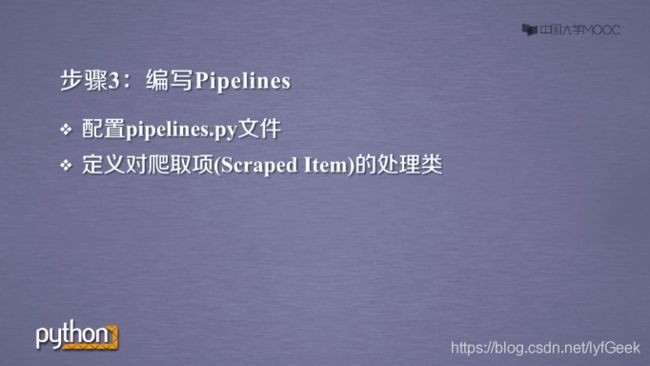

- Write the Pipelines.

- Configure the pipelines.py file.

- Define a class that processes each scraped item (Scraped Item).

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks/spiders$ cd ..

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks$ ls

__init__.py middlewares.py __pycache__ spiders

items.py pipelines.py settings.py

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks$ vim pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

class BaidustocksPipeline(object):

    def process_item(self, item, spider):

        return item

↓ ↓ ↓

The BaidustocksInfoPipeline class.

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

class BaidustocksPipeline:

    def process_item(self, item, spider):

        return item

class BaidustocksInfoPipeline(object):

    def open_spider(self, spider):

        # Called once when the spider starts: open the output file.

        self.f = open('BaiduStockInfo.txt', 'w')

    def close_spider(self, spider):

        # Called once when the spider finishes: close the output file.

        self.f.close()

    def process_item(self, item, spider):

        # Called for every scraped item: write it out as one line.

        try:

            line = str(dict(item)) + '\n'

            self.f.write(line)

        except:

            pass

        return item

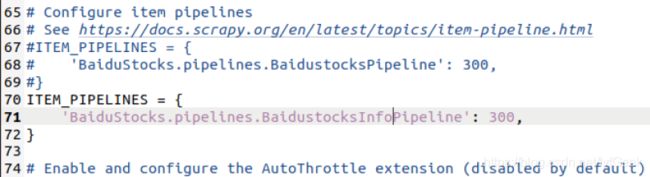

- Configure the ITEM_PIPELINES option.

geek@ubuntu:~/geek/py/stock_geek/BaiduStocks/BaiduStocks$ vim settings.py
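The notes do not show the edited setting itself. A plausible entry in settings.py, assuming the project and class names used above (BaiduStocks, pipelines.py, BaidustocksInfoPipeline), would be:

```python
# settings.py (fragment): enable the custom pipeline.
# The key is the import path of the pipeline class; the value is its order,
# conventionally an integer from 0 to 1000, with lower numbers running first.
ITEM_PIPELINES = {
    'BaiduStocks.pipelines.BaidustocksInfoPipeline': 300,
}
```

The value 300 is the number used in the generated template's commented-out example; any value in the allowed range works when only one pipeline is enabled.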

- Start the crawl.

scrapy crawl stocks