Deep learning experiment log: autoencoder + classifier

Deep learning course notes

- Autoencoder-classifier network log
- 1. Train the autoencoder by using unlabeled data
- Training 1 (fail)
- Training 2 (fail)
- Training 3 (fail)
- Training 4 (fail)
- Training 5
- Training 5-1
- 2. Train the network by using the new training data set
- 3. Combine the two networks
- 4. Test the network with the testing set
- Training 1

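Autoencoder-classifier network log

In autumn 2019 I took a deep learning course offered by my university. This post records some of the difficulties I ran into during the final project (part of the pictures are from Dr. Zhang Yi). The overall approach is as follows: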


1. Train the autoencoder by using unlabeled data

Read and prepare the data. Train the autoencoder on the unlabeled data (the unlabeled set). After training, remove the layers that come after the sparse representation layer.
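Removing the layers after the sparse representation layer amounts to keeping only the weights that lead up to it. The snippet below is a minimal sketch of that step, assuming the trained weights live in a cell array `w` where `w{l}` maps layer `l` to layer `l+1`, as in the code fragments in this post. The toy layer sizes and the names `code_layer` and `w_enc` are hypothetical.

```matlab
% Hypothetical toy autoencoder with layer sizes 6 -> 4 -> 2 -> 4 -> 6.
sizes = [6 4 2 4 6];
L = numel(sizes);
w = cell(1, L-1);
for l = 1:L-1
    w{l} = randn(sizes(l+1), sizes(l)) * 0.01;   % stand-in for trained weights
end

code_layer = 3;                % index of the sparse representation layer (assumed)
w_enc = w(1:code_layer-1);     % keep only the weights that lead up to that layer

% w_enc now maps a 6-dimensional input to a 2-dimensional code.
disp(size(w_enc{end}));
```

Everything after `w{code_layer-1}` is the decoder, which is only needed while training the autoencoder.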

- Number of samples: 7800
- Content: cat and dog pictures
- Size of a single sample: 300*400


Training 1 (fail)

- max_iter = 600
- mini_batch = 40
- alpha = 0.01
- data: 80x80x200

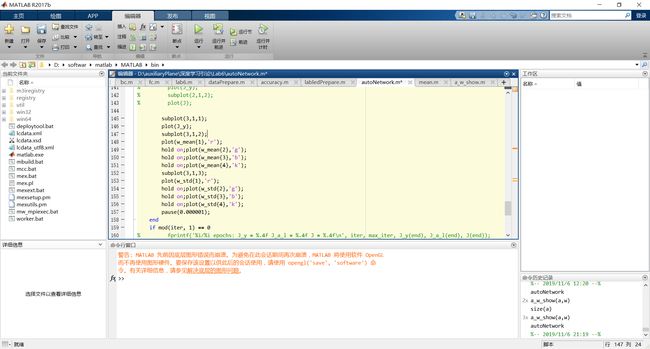

    % Network structure
    layer_size = [input_size 1000
                  0 2000
                  0 500
                  0 2000
                  0 input_size];

    % Activation functions
    fs = {[], relu, relu, relu, relu, relu, relu, relu};
    dfs = {[], drelu, drelu, drelu, drelu, drelu, drelu, drelu};

    % Weight initialization
    w{l} = (rand(layer_size(l+1, 2), sum(layer_size(l, :)))*2-1) * 0.01;

    % Cost function
    J_y = [J_y 1/2/mini_batch*sum((a{L}(:)-y(:)).^2)];
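To make the cost expression concrete, here is a small worked example on made-up numbers. It only illustrates that the expression is half the squared error, averaged over the mini-batch. The variable `aL` stands in for `a{L}`, and all values are hypothetical.

```matlab
mini_batch = 4;
y  = [1 0 1 0;                        % toy targets, one column per sample
      0 1 0 1];
aL = [0.9 0.2 0.8 0.1;                % toy network outputs
      0.1 0.7 0.3 0.6];

% Same expression as in the training code above.
J = 1/2/mini_batch * sum((aL(:) - y(:)).^2);

% Equivalent form: half squared error per sample, then the batch mean.
J_check = mean(0.5 * sum((aL - y).^2, 1));

fprintf('J = %.5f, J_check = %.5f\n', J, J_check);
```

For an autoencoder the target `y` would normally be the input batch itself, so the cost measures reconstruction error.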


The results are not encouraging.

Training 2 (fail)

- max_iter = 800
- mini_batch = 40
- alpha = 0.2
- data: 80x80x200

    % Network structure
    layer_size = [input_size 1000
                  0 2000
                  0 500
                  0 2000
                  0 input_size];

    % Activation functions
    fs = {[], sigm, sigm, sigm, sigm, sigm, sigm, sigm};
    dfs = {[], dsigm, dsigm, dsigm, dsigm, dsigm, dsigm, dsigm};

    % Weight initialization
    w{l} = (randn(layer_size(l+1, 2), sum(layer_size(l, :)))*2-1) * sqrt(6/( layer_size(l+1, 2) + sum(layer_size(l,:)) ));

    % Cost function
    J_y = [J_y 1/2/mini_batch*sum((a{L}(:)-y(:)).^2)];
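The factor `sqrt(6/(n_in + n_out))` is the bound used by Glorot (Xavier) uniform initialization, which pairs it with `rand`, not `randn`. Note that `randn(...)*2-1` has mean -1 and standard deviation 2, whereas `rand(...)*2-1` is uniform on (-1, 1). The sketch below shows the two standard Glorot variants for comparison only. It is not the code used in this run, and `n_in`, `n_out` and their values are hypothetical.

```matlab
n_in  = 300;                             % fan-in (hypothetical size)
n_out = 200;                             % fan-out (hypothetical size)
r = sqrt(6 / (n_in + n_out));            % Glorot uniform bound

% rand is uniform on (0,1), so rand*2-1 is uniform on (-1,1).
w_uniform = (rand(n_out, n_in) * 2 - 1) * r;

% Gaussian counterpart with the same variance, 2/(n_in + n_out).
w_normal = randn(n_out, n_in) * sqrt(2 / (n_in + n_out));

fprintf('max |w_uniform| = %.4f (bound %.4f)\n', max(abs(w_uniform(:))), r);
fprintf('std of w_normal = %.4f\n', std(w_normal(:)));
```

Both variants are zero-mean, which keeps sigmoid units near the steep part of the curve at the start of training.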

Training 3 (fail)

- max_iter = 600
- mini_batch = 40
- alpha = 0.2
- data: 80x80x200
- layer_size: input+1000(0); 2000; 1000; 500; 1000; 2000; output
- w: (2*randn()-1)*sqrt(6/(n{l}+n{l+1}))
- activation: sigmoid
- J: 0.5/mini_batch*Σ(a{L}-y).^2

Training 4 (fail)

- max_iter = 600
- mini_batch = 50
- alpha = 0.3
- data: 80x80x200
- layer_size: input+1000(0); 2000; 500; 30; 500; 2000; output
- w: randn()*sqrt(6/(n{l}+n{l+1}))
- activation: sigmoid
- J: 0.5/mini_batch*Σ(a{L}-y).^2

Hmm, let's run another 600 iterations (a few of the a and w plots above are missing).

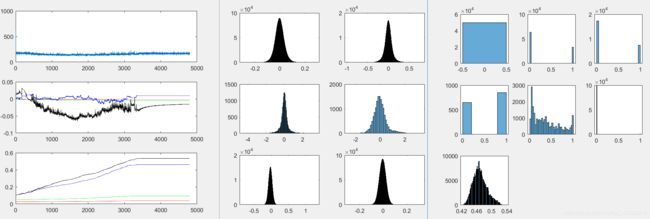

Training 5

- max_iter = 900
- mini_batch = 40
- alpha = 0.3
- data: 80x80x1000
- layer_size: input+1000(0); 2000; 500; 2000; output
- w: randn()*sqrt(6/(n{l}+n{l+1}))
- activation: sigmoid
- J: 0.5/mini_batch*Σ(a{L}-y).^2


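Training 5-1

Continued from train5-1…

- max_iter = 900
- beta = 0.1

Hmm, this run starts from the weights obtained in Training 5 and continues with sparsity optimization. beta = 0.1 is probably still the better choice.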
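The post does not say which sparsity term `beta` weights. A common choice for sparse autoencoders is a KL-divergence penalty on the mean activation of each hidden unit, and the sketch below assumes that form. The target activation `rho`, the toy activations `a_hidden` and the other names are hypothetical, so treat this as one possible reading, not as the code that produced the results above.

```matlab
% ASSUMPTION: KL-divergence sparsity penalty; the post only gives beta = 0.1.
beta = 0.1;                      % sparsity weight, taken from the record above
rho  = 0.05;                     % target mean activation (hypothetical value)

a_hidden = rand(30, 40);         % toy sigmoid activations: 30 units x 40 samples
rho_hat  = mean(a_hidden, 2);    % mean activation of each hidden unit

% Penalty added to the reconstruction cost.
kl = rho .* log(rho ./ rho_hat) + (1 - rho) .* log((1 - rho) ./ (1 - rho_hat));
J_sparse = beta * sum(kl);

% Extra term added to the hidden-layer error signal before multiplying by f'(z).
delta_extra = beta * (-rho ./ rho_hat + (1 - rho) ./ (1 - rho_hat));

fprintf('sparsity penalty: %.4f\n', J_sparse);
```

A larger `beta` pushes the mean activations harder towards `rho` at the expense of reconstruction quality.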

2. Train the network by using the new training data set

Pass the labeled data set (the training set) through the encoder to form a new data set at the sparse representation layer. Pair this encoded training set with its labels to form the training data for the supervised network, then train that network on it.
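Forming the encoded training set is a forward pass through the encoder only. The following is a minimal sketch under simplifying assumptions: plain fully connected layers with sigmoid activations and no bias terms. The names `w_enc`, `x_train`, `y_train` and `x_encoded` and all sizes are hypothetical.

```matlab
sigm = @(z) 1 ./ (1 + exp(-z));          % sigmoid, as used in the later runs

% Hypothetical stand-ins: encoder weights for 6 -> 4 -> 2 and 5 labeled samples.
w_enc   = {randn(4, 6) * 0.1, randn(2, 4) * 0.1};
x_train = rand(6, 5);                    % one column per labeled sample
y_train = [1 0 1 0 1;                    % one-hot labels (cat / dog)
           0 1 0 1 0];

% Forward pass through the encoder only.
a = x_train;
for l = 1:numel(w_enc)
    a = sigm(w_enc{l} * a);
end
x_encoded = a;                           % new inputs for the supervised network

% The new training set is the pair (x_encoded, y_train).
disp(size(x_encoded));
```

The encoder weights stay fixed during this step; only the supervised network is trained on `x_encoded`.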

3. Combine the two networks

4. Test the network with the testing set

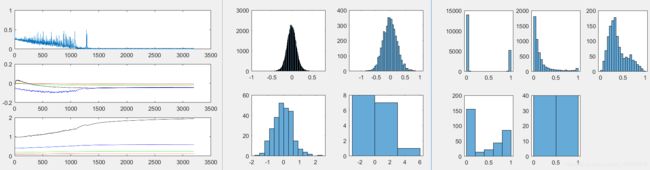

Training 1

- max_iter = 400
- mini_batch = 40
- alpha = 0.05
- data: 500x320
- layer_size: input(500)+0; 126; 32; 8; 2
- w: randn()*sqrt(6/(n{l}+n{l+1}))
- activation: sigmoid
- J: 0.5/mini_batch*Σ(a{L}-y).^2
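Steps 3 and 4 can be sketched as follows: the encoder weights and the classifier weights are concatenated into one network, and the test set is pushed through it to measure accuracy. This is an illustration under simplifying assumptions (sigmoid layers, no bias terms). The weights, data, labels and names such as `w_clf` and `w_all` are hypothetical toy values.

```matlab
sigm = @(z) 1 ./ (1 + exp(-z));

% Hypothetical stand-ins for the two trained networks.
w_enc = {randn(4, 6) * 0.1, randn(2, 4) * 0.1};   % encoder: 6 -> 4 -> 2
w_clf = {randn(3, 2) * 0.1, randn(2, 3) * 0.1};   % classifier: 2 -> 3 -> 2
w_all = [w_enc, w_clf];                           % combined: 6 -> 4 -> 2 -> 3 -> 2

x_test = rand(6, 8);                              % toy test inputs, one column per sample
labels = [1 2 1 2 1 2 1 2];                       % toy class indices

% Forward pass through the combined network.
a = x_test;
for l = 1:numel(w_all)
    a = sigm(w_all{l} * a);
end

[~, pred] = max(a, [], 1);                        % predicted class = largest output
accuracy = mean(pred == labels);
fprintf('test accuracy: %.2f\n', accuracy);
```

With random weights the accuracy is meaningless; the point is only how the two weight lists fit together.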
