Building a Single-Master K8s v1.18.6 Cluster on Hyper-V (Including the Dashboard)

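I. Configure the basic environment:

Environment:

- Windows 10 with Hyper-V virtualization;

- Three virtual machines (CentOS Linux release 7.8): one master node and two worker nodes. The master has 2 CPUs / 4 GB RAM / 3 NICs; each worker node has 2 CPUs / 2 GB RAM / 3 NICs;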

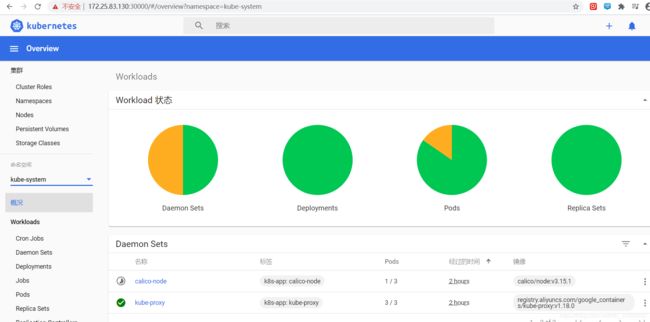

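1. Hyper-V network configuration: create 3 virtual switches;

IP addresses on the network bridged through the Hyper-V Default Switch cannot be fixed. If that network were used as the node management network, its NIC could only obtain an address via DHCP, and the address may change, causing unnecessary trouble in later tests. It is therefore recommended to add a separate internal management network on which static IPs can be set, while keeping the Default Switch so the node VMs can reach the Internet;

The switch names above can be chosen freely.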


2. Install the CentOS virtual machines

Three VMs: k8s-node01, k8s-node02, k8s-node03;

Operating system version: CentOS Linux release 7.8.2003 (Core)

3. Configure 3 NICs on each VM

eth0: node management network, connectivity between the nodes and the Hyper-V host, and external access for Pods;

eth1: private internal network between the nodes;

eth2: Internet access for the node VMs, used to download software and images;

IP configuration:

k8s-node01: eth0: 172.25.83.130, eth1: 10.0.0.1, eth2: DHCP

k8s-node02: eth0: 172.25.83.133, eth1: 10.0.0.2, eth2: DHCP

k8s-node03: eth0: 172.25.83.131, eth1: 10.0.0.3, eth2: DHCP

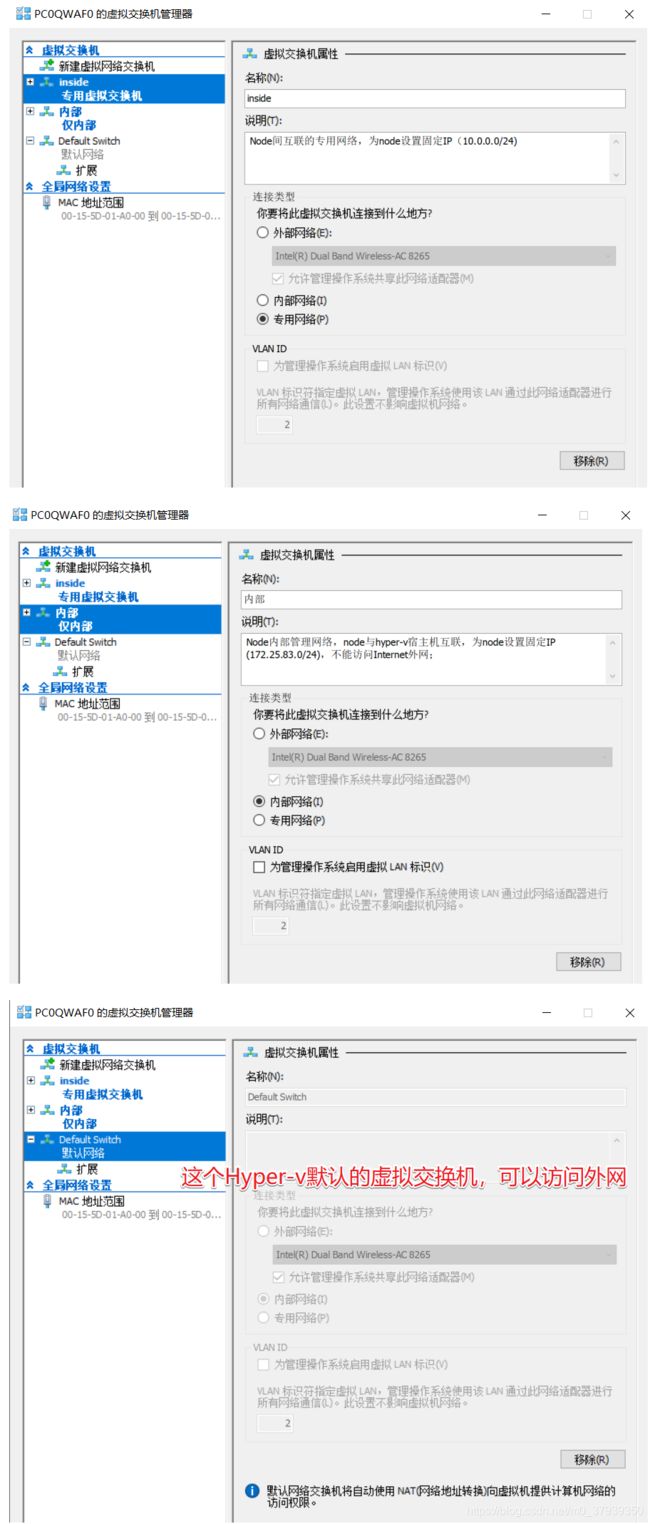

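Note: with multiple NICs, if a VM cannot reach the Internet, check its routing table. The first (default) route must go through eth2. The simplest way to guarantee this is to leave the gateway unset when assigning static IPs to eth0 and eth1, so that the default route comes from eth2. The routing table is shown below:

NIC configuration screenshots for the 3 VMs: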
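As a text counterpart to the no-gateway advice above, here is a minimal sketch that writes a CentOS 7 network-scripts file for a statically addressed NIC. The function name `write_static_ifcfg`, the `/24` prefix used in the usage lines and the extra `DEFROUTE=no` setting are assumptions for illustration, not values from the original setup. The important part is that no `GATEWAY=` line is written, so the default route keeps coming from eth2 (DHCP).

```shell
# Hypothetical helper: write ifcfg-<device> for a static NIC *without* a gateway,
# so the default route is left to the DHCP-configured NIC (eth2).
# Usage: write_static_ifcfg <dir> <device> <ip> <prefix>
write_static_ifcfg() {
  local dir=$1 dev=$2 ip=$3 prefix=$4
  cat > "${dir}/ifcfg-${dev}" <<EOF
TYPE=Ethernet
DEVICE=${dev}
NAME=${dev}
ONBOOT=yes
BOOTPROTO=static
IPADDR=${ip}
PREFIX=${prefix}
# No GATEWAY= line on purpose; DEFROUTE=no also keeps this NIC off the default route
DEFROUTE=no
EOF
}
```

On k8s-node01 this would be called as `write_static_ifcfg /etc/sysconfig/network-scripts eth0 172.25.83.130 24` and `write_static_ifcfg /etc/sysconfig/network-scripts eth1 10.0.0.1 24`, followed by `systemctl restart network`. `ip route` should then show the `default via ...` line on eth2.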

4. Disable the firewall on every node

systemctl stop firewalld

systemctl disable firewalld

5. Disable SELinux on every node:

cat /etc/selinux/config

sed -i 's/enforcing/disabled/' /etc/selinux/config

cat /etc/selinux/config

setenforce 0 #switch to permissive mode immediately; the config file change takes effect after a reboot
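The sed command above replaces every occurrence of `enforcing` in the file, including the explanatory comment lines in the stock /etc/selinux/config. A narrower variant that only rewrites the active `SELINUX=` setting is sketched below. The function name and its file-path parameter are illustrative.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
# Only the active "SELINUX=" line is changed; comments and SELINUXTYPE= are left alone.
disable_selinux_config() {
  local conf=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

Called without arguments it edits /etc/selinux/config. Running it twice is harmless because the line is simply rewritten to the same value.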

6. Disable swap on every node

swapoff -a #temporary, until the next reboot

cat /etc/fstab

sed -ri 's/.*swap.*/#&/' /etc/fstab #permanent

cat /etc/fstab
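`sed -ri 's/.*swap.*/#&/'` prefixes `#` to every line that contains "swap". Running it twice produces `##`, and it also hits lines that merely mention swap. The sketch below is more targeted: it comments out only active entries whose filesystem type (third field) is `swap`. The function name is illustrative.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-format file.
# Lines that are already comments, or whose 3rd field is not "swap", are printed unchanged.
comment_swap_entries() {
  local fstab=${1:-/etc/fstab} tmp
  tmp=$(mktemp)
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # "cat >" keeps the original file's owner and permissions
  rm -f "$tmp"
}
```

Because already-commented lines are skipped, the function is safe to run repeatedly.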

7. Set the hostname of each node (run the matching command on that node)

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

hostnamectl set-hostname k8s-node03

8. Add the hostname-to-IP mappings on every node

vim /etc/hosts

172.25.83.130 k8s-node01

172.25.83.133 k8s-node02

172.25.83.131 k8s-node03
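Editing /etc/hosts by hand on three nodes is easy to get wrong, and appending blindly creates duplicates when a step is repeated. Here is a minimal idempotent sketch. The function name `add_host_entry` is illustrative.

```shell
# Hypothetical helper: append "IP NAME" to a hosts-format file unless NAME is already mapped.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 if NAME already appears as a hostname/alias on a non-comment line
  if ! awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Example for this cluster: `add_host_entry /etc/hosts 172.25.83.130 k8s-node01`, and likewise for k8s-node02 (172.25.83.133) and k8s-node03 (172.25.83.131).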

9. On every node, pass bridged traffic to the iptables chains:

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

EOF

sysctl --system #apply the rules above
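The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, and kubeadm's preflight checks also expect `net.ipv4.ip_forward` to be 1. The sketch below writes both the sysctl file and a modules-load.d entry so the module is loaded at boot. The function name and its `root` parameter (pass `/` on a real node) are illustrative.

```shell
# Hypothetical helper: write the kernel settings kubeadm expects under a given root dir.
# On a real node call it with "/" and then run:  modprobe br_netfilter && sysctl --system
write_k8s_kernel_conf() {
  local root=${1:-/}
  mkdir -p "${root}/etc/modules-load.d" "${root}/etc/sysctl.d"
  # Load br_netfilter at every boot; the net.bridge.* keys do not exist without it
  echo br_netfilter > "${root}/etc/modules-load.d/k8s.conf"
  cat > "${root}/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```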

10. Synchronize the system time on every node

yum install -y ntpdate

ntpdate time.windows.com

date

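11. Create a Hyper-V checkpoint for each VM to save its current state, so you can roll back to it if something goes wrong later.

II. Configure the container environment (run on every node)

1. Remove any existing Docker packages so that every node starts clean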

yum remove docker \
docker-client \
docker-client-latest \
docker-common \
docker-latest \
docker-latest-logrotate \
docker-logrotate \
docker-engine

2. Install Docker's prerequisites

yum install -y yum-utils device-mapper-persistent-data lvm2

3. Configure the Docker yum repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

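#The default repository is hosted overseas and downloads slowly; the Alibaba Cloud mirror is recommended for installing Docker

4. Refresh the yum package index

yum makecache fast

5. Install Docker CE and the Docker CLI

yum install -y docker-ce docker-ce-cli containerd.io

6. Start Docker

systemctl start docker

7. Check the Docker version (to confirm the installation succeeded)

docker version

8. Images are pulled from Docker Hub by default, which is slow from mainland China; configuring your own Alibaba Cloud registry accelerator is recommended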

mkdir -p /etc/docker

vim /etc/docker/daemon.json

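{
  "registry-mirrors": ["https://qt4cct09.mirror.aliyuncs.com"]
}

(The mirror address above is the author's own; create your own accelerator address in the Alibaba Cloud console. JSON does not support comments, so do not put a # comment inside daemon.json, otherwise Docker fails to start.)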

systemctl daemon-reload

systemctl restart docker

Run docker info and check that the mirror address appears under Registry Mirrors

9. Enable Docker at boot
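kubeadm 1.18's preflight checks warn when Docker uses the `cgroupfs` cgroup driver and recommend `systemd`. The sketch below writes daemon.json with both the registry mirror and the systemd driver. The function name is illustrative, and the mirror URL you pass must be your own accelerator address. Apply it before `kubeadm init`/`kubeadm join`: changing the driver on a node that has already joined would leave the kubelet configured for the old driver.

```shell
# Hypothetical helper: write a Docker daemon.json with a registry mirror and the
# systemd cgroup driver. JSON has no comment syntax, so no comments go into the file.
write_daemon_json() {
  local file=$1 mirror=$2
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<EOF
{
  "registry-mirrors": ["${mirror}"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Typical use: `write_daemon_json /etc/docker/daemon.json https://<your-id>.mirror.aliyuncs.com`, then `systemctl daemon-reload && systemctl restart docker`. `docker info` should then report `Cgroup Driver: systemd`.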

systemctl enable docker

III. Install the basic K8s tools (run on every node)

1. Configure the Alibaba Cloud yum repository

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all

yum makecache

2. Install kubelet, kubeadm and kubectl

yum install -y kubelet kubeadm kubectl

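yum install epel-release (run this if the installation above cannot find the packages)

3. Enable kubelet at boot

systemctl enable kubelet

systemctl start kubelet

4. Check the kubelet service status

systemctl status kubelet #(the status shows failed/restarting at this point: kubelet keeps restarting until kubeadm init or kubeadm join generates its configuration)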
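`yum install -y kubelet kubeadm kubectl` installs whatever version is newest in the repository, which may be later than the v1.18.x this guide targets. `yum list kubeadm --showduplicates` lists the available versions, and yum accepts `name-version` package specs, so a small illustrative helper can build a pinned package list:

```shell
# Hypothetical helper: print pinned kubelet/kubeadm/kubectl package specs for yum.
k8s_pkgs() {
  local version=$1
  echo "kubelet-${version} kubeadm-${version} kubectl-${version}"
}
```

Usage: `yum install -y $(k8s_pkgs 1.18.6)` (the word splitting of the unquoted substitution is intentional here).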

IV. Deploy the K8s master (run on the master node only)

1. Initialize the master node

kubeadm init --kubernetes-version=1.18.0 \
--apiserver-advertise-address=172.25.83.130 \
--image-repository registry.aliyuncs.com/google_containers \
--service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16

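The Pod network CIDR is 10.122.0.0/16 and the Service CIDR is 10.10.0.0/16; the API server address is the master's own IP (eth0).

This step is critical: kubeadm pulls the required images from k8s.gcr.io by default, which is not reachable from mainland China, so --image-repository must point to the Alibaba Cloud image repository.

2. After initialization completes, set up kubectl as the output instructs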
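The same `kubeadm init` flags can also be kept in a configuration file, which is easier to review and reuse. Here is a sketch using the `kubeadm.k8s.io/v1beta2` config API that kubeadm 1.18 reads. The function name and output path are illustrative, and the values are copied from the command above.

```shell
# Hypothetical helper: write a kubeadm config equivalent to the kubeadm init flags above.
write_kubeadm_config() {
  local file=$1
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.83.130   # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0          # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.10.0.0/16       # --service-cidr
  podSubnet: 10.122.0.0/16          # --pod-network-cidr
EOF
}
```

Usage: `write_kubeadm_config kubeadm-config.yaml`, optionally `kubeadm config images pull --config kubeadm-config.yaml` to pre-pull the images, then `kubeadm init --config kubeadm-config.yaml`.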

[root@master01 ~]# mkdir -p $HOME/.kube

[root@master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

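3. Enable kubectl command completion

[root@master01 ~]# source <(kubectl completion bash) #press Tab to complete commands (current shell only)

4. Check nodes and Pods

[root@master01 ~]# kubectl get node #lists the cluster nodes

[root@master01 ~]# kubectl get pod --all-namespaces

Note: the node shows NotReady because no Pod network (CNI) plugin is installed yet; for the same reason the coredns Pods cannot start.

5. Join node02 and node03 to the cluster: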

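When initialization finishes, the master prints the following command. Running it on node02 and node03 joins them to the cluster. The bootstrap token in it is valid for 24 hours by default, so it is best to join the other nodes right after the master has been initialized.

kubeadm join 172.25.83.130:6443 --token c9pnxh.kcmwarwb84osgntg \
--discovery-token-ca-cert-hash sha256:2f4469200b4204cf7999c1c2fd5e8f2b8b003dc439d3b49ad8555d433a9c638c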
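If the join command is lost or the token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh one. The `--discovery-token-ca-cert-hash` value does not expire. It is the SHA-256 of the cluster CA's public key, and it can be recomputed from /etc/kubernetes/pki/ca.crt with the openssl pipeline given in the kubeadm documentation. The pipeline is wrapped below in an illustrative function, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Hypothetical helper: compute the value for --discovery-token-ca-cert-hash
# (hex digest only; prepend "sha256:" when passing it to kubeadm join).
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.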

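Watch the nodes joining the cluster in real time

watch kubectl get pod -n kube-system -o wide

Check the node status

kubectl get nodes

Because the K8s Pod network has not been installed yet, the nodes are shown as NotReady;

6. Install the Calico network

[root@master01 ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

7. Check nodes and Pods

Wait until the Calico Pods are Running before moving on to the next step.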

[root@master01 ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-555fc8cc5c-k8rbk 1/1 Running 0 36s

kube-system calico-node-5km27 1/1 Running 0 36s

kube-system coredns-7ff77c879f-fsj9l 1/1 Running 0 5m22s

kube-system coredns-7ff77c879f-q5ll2 1/1 Running 0 5m22s

kube-system etcd-master01.paas.com 1/1 Running 0 5m32s

kube-system kube-apiserver-master01.paas.com 1/1 Running 0 5m32s

kube-system kube-controller-manager-master01.paas.com 1/1 Running 0 5m32s

kube-system kube-proxy-th472 1/1 Running 0 5m22s

kube-system kube-scheduler-master01.paas.com 1/1 Running 0 5m32s

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01.paas.com Ready master 5m47s v1.18.0
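You can filter the output for Pods that are not yet fully ready instead of checking each row by eye. The sketch below reads `kubectl get pod --all-namespaces --no-headers` output on stdin, with columns NAMESPACE, NAME, READY, STATUS as shown above. The function name is illustrative. Pods of finished Jobs (status `Completed`) would also be reported.

```shell
# Hypothetical helper: print namespace/name of Pods whose READY count is incomplete
# or whose STATUS is not Running.
# Input: output of  kubectl get pod --all-namespaces --no-headers
not_ready_pods() {
  awk '{ split($3, r, "/"); if (r[1] != r[2] || $4 != "Running") print $1 "/" $2 }'
}
```

Usage: `kubectl get pod --all-namespaces --no-headers | not_ready_pods`. Empty output means every listed Pod is Running with all of its containers ready.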

At this point the K8s cluster is up and running.

Next comes the Kubernetes Dashboard graphical UI; third-party dashboards can be installed instead.

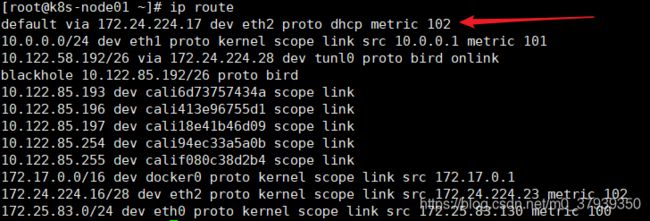

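8. Install kubernetes-dashboard

a. The official Dashboard manifest does not expose the Service through a NodePort, so download the YAML file locally and add a NodePort to the Service

[root@master01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml #download the official Dashboard YAML template

[root@master01 ~]# vim recommended.yaml #modify the Service as follows so the Dashboard can be accessed from outside the cluster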

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line to expose a NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000 # customizable, within the NodePort range (30000-32767 by default)
  selector:
    k8s-app: kubernetes-dashboard
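You can choose the nodePort value, but by default the API server only accepts values in the 30000-32767 range, which is set by its `--service-node-port-range` flag. Here is an illustrative pre-check before editing the YAML. The function name and its optional min/max arguments are assumptions.

```shell
# Hypothetical helper: succeed only if $1 is an integer inside the NodePort range
# (defaults to the API server's default range 30000-32767).
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  # 10# forces base-10 so values with leading zeros are not read as octal
  [[ $port =~ ^[0-9]+$ ]] && (( 10#$port >= min && 10#$port <= max ))
}
```

If the Dashboard was already created from the unmodified manifest, the same Service change can be applied in place instead of re-creating it: `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":30000}]}}'`.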

If downloading the YAML file fails, map raw.githubusercontent.com to an IP address manually:

Edit /etc/hosts with vi and add the following entries:

**# GitHub Start**

52.74.223.119 github.com

192.30.253.119 gist.github.com

54.169.195.247 api.github.com

185.199.111.153 assets-cdn.github.com

151.101.76.133 raw.githubusercontent.com

151.101.108.133 user-images.githubusercontent.com

151.101.76.133 gist.githubusercontent.com

151.101.76.133 cloud.githubusercontent.com

151.101.76.133 camo.githubusercontent.com

151.101.76.133 avatars0.githubusercontent.com

151.101.76.133 avatars1.githubusercontent.com

151.101.76.133 avatars2.githubusercontent.com

151.101.76.133 avatars3.githubusercontent.com

151.101.76.133 avatars4.githubusercontent.com

151.101.76.133 avatars5.githubusercontent.com

151.101.76.133 avatars6.githubusercontent.com

151.101.76.133 avatars7.githubusercontent.com

151.101.76.133 avatars8.githubusercontent.com

**# GitHub End**

b. Create the Dashboard resources:

[root@master01 ~]# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

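#output showing that the resources were created successfully

c. Check the Pods and Services (confirm they are running normally)

[root@master01 ~]# kubectl get pod -n kubernetes-dashboard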

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-dc6947fbf-869kf 1/1 Running 0 37s

kubernetes-dashboard-5d4dc8b976-sdxxt 1/1 Running 0 37s

[root@master01 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.10.58.93 <none> 8000/TCP 44s

kubernetes-dashboard NodePort 10.10.132.66 <none> 443:30000/TCP 44s

[root@master01 ~]#

d. List the secrets (credentials)

kubectl -n kubernetes-dashboard get secret

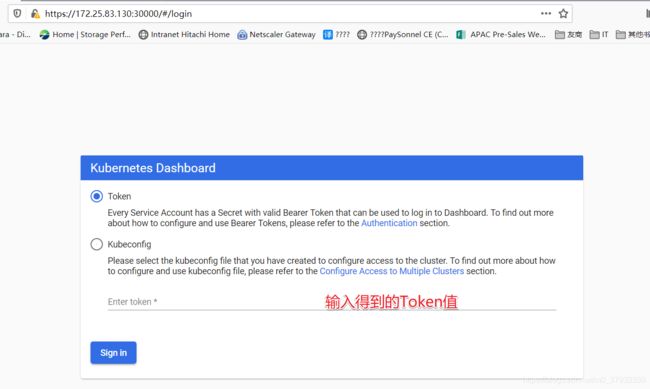

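e. Get the token for logging in to the Dashboard from a browser (replace kubernetes-dashboard-token-9cjt2 with the token secret name listed in the previous step)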

[root@k8s-node01 ~]# kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard-token-9cjt2 | grep token | awk 'NR==3{print $2}'

#below is the token from my Dashboard deployment

eyJhbGciOiJSUzI1NiIsImtpZCI6IjM5XzE5WFZscG5DTlVmWTZMRGo2UE8xdFl4dVNwTkJKUTQzeEZwQWY5UW8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05Y2p0MiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjgzOGJmNWQzLTgzOGItNGY1YS04OGZiLTRiNjVlNjVkNjFmNyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.Oxpu_3fkXj51rsqhWx70wS7rCZC8fDJQLPNJbMl4zyTpRJyPUm0MFSf5DZBNZT9IcCrsIazSxI-EKfCzXWx8Vn9UijE9c6xAe8xxj6ITjDz65VIehN9VXClAWT5UUJ9km4eqNl76yexTq0kzK_uI0XS9qQNLR4wnb2wIdehkHBylcXS-iSwB00ChWGfNx6jm8syvlllH3i3dDsxsC9BxFhnPQ3OQgRcXsJw0US0S08aJSnhmAa8uOhtO4ntd2zD161j91A7IZ9f2Uh7vee5Ph3giMVZGQDj3rnDodZ4_JIouM1i5uzOTj9Cg-loQZHRZYOi3aBWXcaHBJkee2n77jg
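A Dashboard login token is a JWT. Its middle segment is base64url-encoded JSON whose `sub` field names the service account the token belongs to, which is handy for checking that you copied the token of the right account. Here is an illustrative decoder. It requires GNU `base64`, the function name is an assumption, and it does no signature verification.

```shell
# Hypothetical helper: print the decoded payload (2nd segment) of a JWT.
# Only decodes; it does NOT verify the signature.
jwt_payload() {
  local payload
  # base64url uses "-" and "_" where standard base64 uses "+" and "/"
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the "=" padding; restore it so base64 -d accepts the input
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

Usage: `jwt_payload "$TOKEN"; echo`. For a token of the Dashboard service account, the `sub` field should read `system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard`.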

f. Open the Kubernetes Dashboard in a browser at https://<node IP>:30000 (for example https://172.25.83.130:30000):

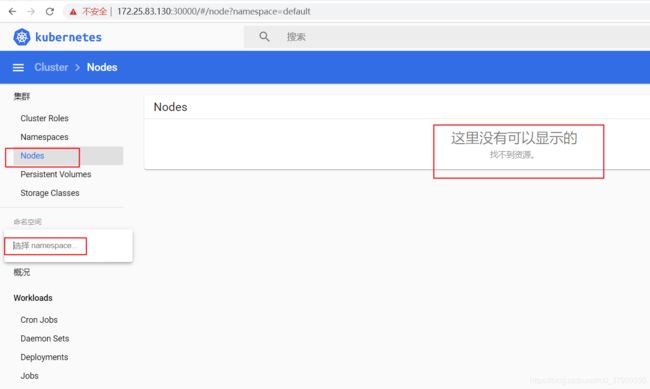

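After logging in, the page looks like the screenshot above. If no namespace can be selected and the page reports that resources cannot be found, it is a permissions problem.

It is caused by insufficient permissions on the Dashboard account. The fix is to bind the Dashboard service account to the cluster-admin role: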

kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --user=system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard

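kubectl delete clusterrolebinding serviceaccount-cluster-admin #if the binding was created incorrectly, delete it with this command and run the command above again

View the logs (the Pod name suffix differs in every deployment; take it from kubectl get pod -n kubernetes-dashboard):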

kubectl logs -f -n kubernetes-dashboard kubernetes-dashboard-5d4dc8b976-8xqgk

g. Verify the cluster status

[root@k8s-node01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node01 Ready master 3h29m v1.18.6

k8s-node02 Ready <none> 57m v1.18.6

k8s-node03 Ready <none> 57m v1.18.6

[root@k8s-node01 ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-578894d4cd-rpcps 1/1 Running 0 3h14m

kube-system calico-node-kjfnn 1/1 Running 0 3h14m

kube-system calico-node-sx4h6 1/1 Running 0 57m

kube-system calico-node-wx9tq 1/1 Running 0 58m

kube-system coredns-7ff77c879f-6mwdf 1/1 Running 0 3h29m

kube-system coredns-7ff77c879f-zf46c 1/1 Running 0 3h29m

kube-system etcd-k8s-node01 1/1 Running 0 3h29m

kube-system kube-apiserver-k8s-node01 1/1 Running 0 3h29m

kube-system kube-controller-manager-k8s-node01 1/1 Running 0 3h29m

kube-system kube-proxy-54wtk 1/1 Running 0 57m

kube-system kube-proxy-xlv5k 1/1 Running 0 3h29m

kube-system kube-proxy-zz9jz 1/1 Running 0 58m

kube-system kube-scheduler-k8s-node01 1/1 Running 0 3h29m

kubernetes-dashboard dashboard-metrics-scraper-dc6947fbf-wsf2b 1/1 Running 0 110m

kubernetes-dashboard kubernetes-dashboard-5d4dc8b976-8xqgk 1/1 Running 0 110m

[root@k8s-node01 ~]# kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.10.0.1 <none> 443/TCP 3h31m

kube-system kube-dns ClusterIP 10.10.0.10 <none> 53/UDP,53/TCP,9153/TCP 3h31m

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.10.224.238 <none> 8000/TCP 111m

kubernetes-dashboard kubernetes-dashboard NodePort 10.10.66.138 <none> 443:30000/TCP 111m

The K8s cluster and the Dashboard are now fully set up. If you spot any mistakes above, please point them out. Thanks.