Python Data Analysis Case 2-2: Python Practice, Scraping Stock Information

Scraping stock data

System: Windows 10

Python: 3.8.5; Anaconda3

I. Create the project

Step 2: Initialize the project in the PyCharm terminal

Initialize a project named Stock_hk_SH directly in the PyCharm terminal:

scrapy startproject Stock_hk_SH

Terminal output:

D:\SW_dvp\python\practice\Stock_HK_SH>scrapy startproject Stock_hk_SH

New Scrapy project 'Stock_hk_SH', using template directory 'C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\templates\project', created in:

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH

You can start your first spider with:

cd Stock_hk_SH

scrapy genspider example example.com

Step 3: Edit the settings.py configuration file:

# Obey robots.txt rules (set to False here, so robots.txt is not obeyed)
ROBOTSTXT_OBEY = False

# Download delay, in seconds
DOWNLOAD_DELAY = 0.5

Step 4: Generate the initial spider file:

Target URL: https://sc.hkexnews.hk/TuniS/www.hkexnews.hk/sdw/search/mutualmarket_c.aspx?t=sh&t=sh
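Before generating the spider, it can help to look at what the target URL actually contains. The following is a minimal sketch using only the standard library; the helper name `describe_url` is made up for this illustration. It splits the URL into its host (the value later passed to `scrapy genspider` and `allowed_domains`) and its query parameters.

```python
from urllib.parse import urlsplit, parse_qs

TARGET_URL = (
    "https://sc.hkexnews.hk/TuniS/www.hkexnews.hk/sdw/search/"
    "mutualmarket_c.aspx?t=sh&t=sh"
)


def describe_url(url):
    """Return the host and the parsed query parameters of a URL.

    Hypothetical helper, not part of Scrapy.
    """
    parts = urlsplit(url)
    return parts.netloc, parse_qs(parts.query)


host, params = describe_url(TARGET_URL)
print(host)    # sc.hkexnews.hk
print(params)  # {'t': ['sh', 'sh']}
```

The output shows that the parameter `t` appears twice with the same value, so the second `&t=sh` looks redundant; whether the server needs it has not been checked here.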

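Go to the spiders directory and run the generate command in the terminal (genspider takes the spider name followed by the domain):

scrapy genspider Stock_hk_SH_spider sc.hkexnews.hk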

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH>cd Stock_hk_SH

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH>cd spiders

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH\spiders>

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH\spiders>scrapy genspider Stock_hk_SH_spider sc.hkexnews.hk

Created spider 'Stock_hk_SH_spider' using template 'basic' in module:

Stock_hk_SH.spiders.Stock_hk_SH_spider

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH\spiders>dir

 Volume in drive D is 新加卷
 Volume Serial Number is BE92-2BF3

 Directory of D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH\spiders

2020/08/07  21:55    <DIR>          .
2020/08/07  21:55    <DIR>          ..
2020/08/07  21:55               222 Stock_hk_SH_spider.py
2020/08/06  22:58               161 __init__.py
2020/08/07  21:55    <DIR>          __pycache__
               2 File(s)            383 bytes
               3 Dir(s)  439,820,099,584 bytes free

D:\SW_dvp\python\practice\Stock_HK_SH\Stock_hk_SH\Stock_hk_SH\spiders>

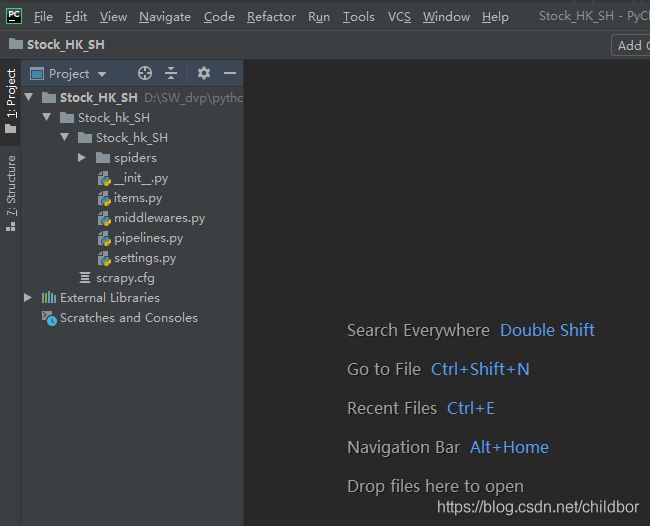

The new spider file now appears under the spiders directory in PyCharm.

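Step 5: Edit the data model file items.py according to the objects to be scraped, defining one field per attribute (in the reference tutorial these were serial number, name, description, rating, and so on).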

Before:

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class StockHkShItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    pass

After:

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class StockHkShItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # Stock code
    Stock_Code = scrapy.Field()
    # Stock name
    Stock_Name = scrapy.Field()
    # Number of shares held
    Stock_Sharehold = scrapy.Field()
    # Holding as a percentage of tradable shares
    Stock_percent = scrapy.Field()
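To see what one populated record with these four fields might look like without running Scrapy, here is a small standard-library sketch. `StockRecord` is a hypothetical dataclass that mirrors the field names of `StockHkShItem`; it is not the class used in this project. As far as I know, Scrapy 2.2 and later also accept dataclass objects as items, but that is an assumption to verify against the installed version. The sample values come from the log excerpt shown later in this post, assuming the code, name, and holding lines there belong to the same row; the percentage for that row is not visible in the excerpt, so it is left unset.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class StockRecord:
    """Hypothetical dataclass counterpart of StockHkShItem."""
    Stock_Code: str
    Stock_Name: str
    Stock_Sharehold: str
    # Not shown for this row in the log excerpt, so it defaults to None
    Stock_percent: Optional[str] = None


record = StockRecord(
    Stock_Code="91877",
    Stock_Name="正泰电器",
    Stock_Sharehold="136,861,112",
)
print(asdict(record))
```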

Step 6: Edit the spider file Stock_hk_SH_spider.py:

Before:

import scrapy


class StockHkShSpiderSpider(scrapy.Spider):
    name = 'Stock_hk_SH_spider'
    allowed_domains = ['sc.hkexnews.hk']
    start_urls = ['http://sc.hkexnews.hk/']

    def parse(self, response):
        pass

After:

import scrapy


class StockHkShSpiderSpider(scrapy.Spider):
    # Name of the spider
    name = 'Stock_hk_SH_spider'
    # Domains the spider is allowed to crawl
    allowed_domains = ['sc.hkexnews.hk']
    # Start URLs, handed to the scheduler
    start_urls = ['https://sc.hkexnews.hk/TuniS/www.hkexnews.hk/sdw/search/mutualmarket_c.aspx?t=sz']

    def parse(self, response):
        # Print the raw page to confirm that the request works
        print(response.text)

Step 7: Start the Scrapy project:

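Open a terminal and, from the spiders directory, run: scrapy crawl Stock_hk_SH_spider

This is a quick check that the spider can fetch anything at all (that is, that the URL is correct). If page data comes back, it works. In this case it did.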

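Step 8: Set the USER_AGENT request header

Open the page in a browser, press F12 to open the developer tools, switch to the Network tab, refresh the page, then find "mutualmarket_c.aspx?t=sh&t=sh" and click it:

In the request headers, find the User-Agent entry and copy it: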

user-agent:

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36

Open settings.py in PyCharm CE and set USER_AGENT:

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'

Open a terminal and, from the spiders directory, run the command again: scrapy crawl Stock_hk_SH_spider

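If the returned log contains a large block of HTML, the run succeeded. An excerpt of the page text (labels translated from the Chinese page):

Percentage of total A shares listed and traded on the Shanghai Stock Exchange:

2.50%

Stock code:

91877

Stock name:

正泰电器

Shareholding in CCASS:

136,861,112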
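The holding and percentage values arrive as formatted strings (thousands separators, a trailing percent sign), so they need converting before any numeric analysis. A minimal sketch of that conversion follows; the function names `parse_share_count` and `parse_percent` are made up for this illustration and are not part of the project.

```python
def parse_share_count(text):
    """Convert a string such as '136,861,112' to an int."""
    return int(text.strip().replace(",", ""))


def parse_percent(text):
    """Convert a string such as '2.50%' to a float (2.5 means 2.5 percent)."""
    return float(text.strip().rstrip("%"))


print(parse_share_count("136,861,112"))  # 136861112
print(parse_percent("2.50%"))            # 2.5
```

Keeping the raw strings in the item and converting in a later step is one option; converting inside the spider is another. Which is better depends on how the data will be stored.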

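Step 9: So far the spider has been run from the terminal. For convenience, set it up to run from within PyCharm CE.

First create a launcher file, for example main.py, with the following content:

from scrapy import cmdline

# Run the spider; this prints the unfiltered page content
cmdline.execute('scrapy crawl Stock_hk_SH_spider'.split())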

Right-click the file and run it. The run fails with an error:

D:\SW_dvp\python\envs\Anaconda3\Stock_HK_SH\python.exe D:/SW_dvp/python/practice/Stock_HK_SH/Stock_hk_SH/Stock_hk_SH/main.py

Traceback (most recent call last):
  File "D:/SW_dvp/python/practice/Stock_HK_SH/Stock_hk_SH/Stock_hk_SH/main.py", line 3, in <module>
    from scrapy import cmdline
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\cmdline.py", line 9, in <module>
    from scrapy.crawler import CrawlerProcess
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\crawler.py", line 18, in <module>
    from scrapy.core.engine import ExecutionEngine
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\core\engine.py", line 14, in <module>
    from scrapy.core.scraper import Scraper
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\core\scraper.py", line 16, in <module>
    from scrapy.utils.log import failure_to_exc_info, logformatter_adapter
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\utils\log.py", line 12, in <module>
    from scrapy.utils.versions import scrapy_components_versions
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\utils\versions.py", line 12, in <module>
    from scrapy.utils.ssl import get_openssl_version
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\scrapy\utils\ssl.py", line 1, in <module>
    import OpenSSL
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\OpenSSL\__init__.py", line 8, in <module>
    from OpenSSL import crypto, SSL
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\OpenSSL\crypto.py", line 15, in <module>
    from OpenSSL._util import (
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\OpenSSL\_util.py", line 6, in <module>
    from cryptography.hazmat.bindings.openssl.binding import Binding
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\cryptography\hazmat\bindings\openssl\binding.py", line 184, in <module>
    Binding.init_static_locks()
  File "C:\Users\Rechard\AppData\Roaming\Python\Python38\site-packages\cryptography\hazmat\bindings\openssl\binding.py", line 142, in init_static_locks
    __import__("_ssl")
ImportError: DLL load failed while importing _ssl: The specified module could not be found.

Process finished with exit code 1

The error is raised while importing cmdline:

ImportError: DLL load failed while importing _ssl: The specified module could not be found.

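My guess is that this module is not installed in the Anaconda environment (the red box marks the current environment), whereas the earlier project used the plain Python installation (the blue box marks the earlier one).

A Baidu search suggests that Scrapy is not installed in the Anaconda environment. That is a little odd, because typing scrapy in the PyCharm terminal prints its version, but the package list under Project Interpreter indeed does not include it.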
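One way to narrow down this kind of mismatch is to ask the running interpreter where it lives and whether it can import the modules named in the traceback. The sketch below is only a diagnostic aid; `try_import` and `environment_report` are hypothetical helper names. Running it once from the PyCharm run configuration and once from the terminal, then comparing the interpreter paths, should show whether the two are using different environments.

```python
import importlib
import sys


def try_import(name):
    """Attempt to import a module and report 'ok' or the failure message."""
    try:
        importlib.import_module(name)
    except ImportError as exc:  # also covers ModuleNotFoundError
        return f"failed: {exc}"
    return "ok"


def environment_report(modules=("_ssl", "ssl", "scrapy")):
    """Collect the interpreter path and the import status of each module."""
    report = {"interpreter": sys.executable}
    for name in modules:
        report[name] = try_import(name)
    return report


for key, value in environment_report().items():
    print(f"{key}: {value}")
```

This only reports what the current interpreter sees; it does not explain why a DLL fails to load.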

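So I reinstalled it.

After the installation there are many more packages, too many to fit on one page.

Right-click and run again: the same error comes back.

Next, try restarting PyCharm.

Now it works (a fragment of the log):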

Shareholding in CCASS:

4,120,172

Percentage of total A shares listed and traded on the Shanghai Stock Exchange:

0.65%
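The log fragment alternates between a label line ending in a colon and a value line. Assuming the page text has already been reduced to lines of that shape, the pairs can be rebuilt with a few lines of plain Python. The function `pair_labels` and the shortened sample labels are illustrative only; both ASCII and full-width colons are accepted because the Chinese page uses the latter.

```python
def pair_labels(lines):
    """Pair each label line (ending in a colon) with the value line after it."""
    pairs = []
    label = None
    for raw in lines:
        text = raw.strip()
        if not text:
            continue  # skip blank lines between entries
        if text.endswith((":", ":")):
            label = text[:-1].strip()
        elif label is not None:
            pairs.append((label, text))
            label = None
    return pairs


sample = [
    "Shareholding in CCASS:",
    "",
    "4,120,172",
    "",
    "Percentage of A shares:",
    "0.65%",
]
print(pair_labels(sample))
# [('Shareholding in CCASS', '4,120,172'), ('Percentage of A shares', '0.65%')]
```

Selecting the table cells directly in the spider would be more robust than post-processing text; this sketch only shows how the printed output is organized.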

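Step 10: Next, go into the spider file (douban_spider.py in the reference tutorial) for further configuration:

The reference tutorial scrapes movie information from Douban, and following it as written runs fine.

Here I want to scrape information from the stock page instead, so I still need to work out how to set up the extraction.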

# -*- coding: utf-8 -*-

import scrapy


class DoubanSpiderSpider(scrapy.Spider):
    # Name of the spider
    name = 'douban_spider'
    # Domains the spider is allowed to crawl
    allowed_domains = ['movie.douban.com']
    # Start URLs, handed to the scheduler
    start_urls = ['http://movie.douban.com/top250']

    def parse(self, response):
        movie_list = response.xpath("//div[@class='article']//ol[@class='grid_view']/li")
        for i_item in movie_list:
            print(i_item)

Here, response.xpath("//div[@class='article']//ol[@class='grid_view']/li") uses XPath, a query language for XML and HTML documents. The expression inside the parentheses is derived from the element structure of the target page: it selects every li element that is a direct child of an ol with class grid_view, where that ol sits anywhere inside a div with class article.
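The same XPath expression can be tried on a small hand-written document to see what it matches. The sketch below uses the standard library's `xml.etree.ElementTree`, which supports only a limited subset of XPath, rather than Scrapy's selector; the leading `.` is needed there to make the path relative to the root element. The sample markup is invented for this illustration and is not the real Douban page.

```python
import xml.etree.ElementTree as ET

# Invented sample markup, well-formed so that ElementTree can parse it
SAMPLE = """
<html><body>
  <div class="article">
    <ol class="grid_view">
      <li>first</li>
      <li>second</li>
    </ol>
  </div>
  <ol class="other"><li>ignored</li></ol>
</body></html>
"""

root = ET.fromstring(SAMPLE.strip())
# li children of ol.grid_view, anywhere below div.article
items = root.findall(".//div[@class='article']//ol[@class='grid_view']/li")
titles = [li.text for li in items]
print(titles)  # ['first', 'second']
```

The li inside the ol with class other is not returned, because it matches neither the div condition nor the ol condition. Real pages are often not well-formed XML, which is one reason to rely on Scrapy's own selectors in the spider.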


Since my target is the stock page rather than Douban, the next task is to work out the corresponding extraction rules for it.