Using tf.layers.dense

Start with Example 3.

Contents

- Example 1

- Example 2

- Example 3

- Common parameters

Official documentation:

https://www.tensorflow.org/api_docs/python/tf/layers/dense

Example 1

import tensorflow as tf

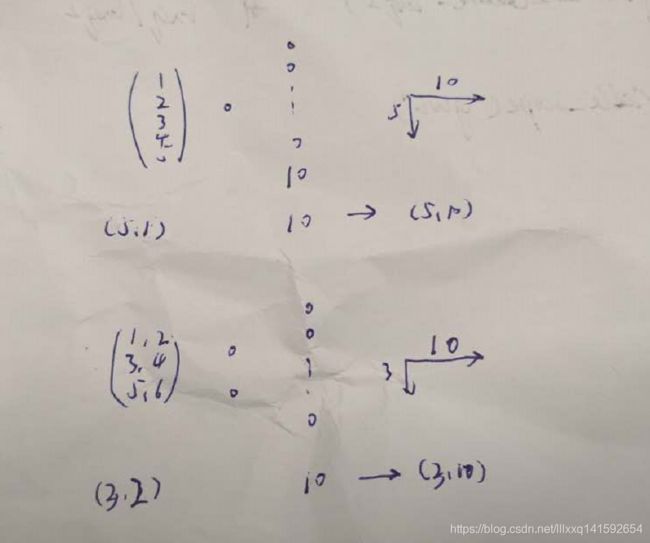

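input = tf.ones([5, 1]) # A [5, 1] matrix: 5 samples with 1 feature each. tf.layers.dense reads the input size from the last dimension of this shape, so it does not have to be passed explicitly.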

output = tf.layers.dense(input, 10)

print(output.get_shape()) # (5, 10)

input = tf.ones([3, 2])

output = tf.layers.dense(input, 10)

print(output.get_shape()) # (3, 10)

input = tf.ones([1, 7, 20])

output = tf.layers.dense(input, 10)

print(output.get_shape()) # (1, 7, 10)

input = tf.ones([1, 7, 11, 20])

output = tf.layers.dense(input, 10)

print(output.get_shape()) # (1, 7, 11, 10)

Reference:

https://blog.csdn.net/yangfengling1023/article/details/81774580

Output of the code above:

(5, 10)

(3, 10)

(1, 7, 10)

(1, 7, 11, 10)
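The shapes above follow from the fact that a dense layer only transforms the last axis of its input and leaves every leading axis alone. The following is a minimal pure-Python sketch of that behaviour, not TensorFlow's implementation. The helper names `dense_last_axis`, `ones`, and `shape_of` are made up for illustration, and the all-ones kernel and zero bias are arbitrary choices.

```python
def dense_last_axis(x, kernel, bias=None, activation=None):
    """Apply activation(x . kernel + bias) along the last axis of a nested list."""
    # Recurse through the leading axes until x is a flat vector of numbers.
    if isinstance(x[0], list):
        return [dense_last_axis(row, kernel, bias, activation) for row in x]
    units = len(kernel[0])
    # Vector-matrix product: one output value per column of the kernel.
    out = [sum(x[i] * kernel[i][j] for i in range(len(x))) for j in range(units)]
    if bias is not None:
        out = [o + b for o, b in zip(out, bias)]
    if activation is not None:
        out = [activation(o) for o in out]
    return out


def ones(shape):
    """Nested list filled with 1.0 (a stand-in for tf.ones)."""
    if len(shape) == 1:
        return [1.0] * shape[0]
    return [ones(shape[1:]) for _ in range(shape[0])]


def shape_of(x):
    """Shape of a rectangular nested list."""
    shape = []
    while isinstance(x, list):
        shape.append(len(x))
        x = x[0]
    return tuple(shape)


units = 10
for in_shape in [(5, 1), (3, 2), (1, 7, 20), (1, 7, 11, 20)]:
    kernel = ones((in_shape[-1], units))  # kernel shape: (last input dim, units)
    bias = [0.0] * units                  # bias shape: (units,)
    y = dense_last_axis(ones(in_shape), kernel, bias)
    print(in_shape, "->", shape_of(y))
# (5, 1) -> (5, 10)
# (3, 2) -> (3, 10)
# (1, 7, 20) -> (1, 7, 10)
# (1, 7, 11, 20) -> (1, 7, 11, 10)
```

Only the size of the last axis has to match the kernel, which is why the same call works for inputs of rank 2, 3, and 4.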

Example 2

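Code sketch (TensorFlow 1.x): a hand-written layer next to its tf.layers.dense counterpart

def add_layer(inputs, in_size, out_size, activation_function=None):

    # Weights are drawn from a standard normal distribution; biases start at 0.1.

    Weights = tf.Variable(tf.random_normal([in_size, out_size]))

    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)

    Wx_plus_b = tf.matmul(inputs, Weights) + biases

    if activation_function is None:

        outputs = Wx_plus_b

    else:

        outputs = activation_function(Wx_plus_b)

    return outputs

# Assumption: xs is not defined in the original; a [None, 1] float placeholder matches in_size=1.

xs = tf.placeholder(tf.float32, [None, 1])

# The next two lines build the same kind of layer, relu(xs * W + b).

# Only the initial values differ: add_layer uses random-normal weights and biases of 0.1,

# while tf.layers.dense defaults to Glorot-uniform weights and zero biases.

l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)

l1 = tf.layers.dense(xs, 10, activation=tf.nn.relu)

# Same again for the linear output layer (no activation).

prediction = add_layer(l1, 10, 1, activation_function=None)

prediction = tf.layers.dense(l1, 1, activation=None)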

Example 3

Common parameters

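tf.layers.dense(

    inputs,  # input tensor

    units,  # int or long: dimensionality of the output space

    activation=None,  # activation function; None means linear (no activation)

    use_bias=True,  # whether the layer adds a bias vector

    kernel_initializer=None,  # None falls back to the default initializer of tf.get_variable

    bias_initializer=tf.zeros_initializer(),  # biases start at zero

    kernel_regularizer=None,

    bias_regularizer=None,

    activity_regularizer=None,

    kernel_constraint=None,

    bias_constraint=None,

    trainable=True,  # whether the variables are trainable

    name=None,  # name of the layer

    reuse=None  # whether to reuse the weights of an earlier layer with the same name

)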

Returns: a Tensor with the same shape as inputs except for the last dimension, whose size equals units (the second positional argument of tf.layers.dense).
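As a rough illustration of the return-shape rule, and of how `units` and `use_bias` determine the variables the layer creates, here is a small sketch in plain Python. `dense_shapes` is a hypothetical helper, not part of TensorFlow. It assumes the usual dense-layer layout: a kernel of shape (last input dimension, units) and, when `use_bias` is true, a bias of shape (units,).

```python
def dense_shapes(input_shape, units, use_bias=True):
    """Return the kernel, bias, and output shapes plus the parameter count."""
    in_dim = input_shape[-1]                      # only the last axis is transformed
    kernel_shape = (in_dim, units)
    bias_shape = (units,) if use_bias else None   # no bias variable when use_bias=False
    output_shape = tuple(input_shape[:-1]) + (units,)
    n_params = in_dim * units + (units if use_bias else 0)
    return {
        "kernel": kernel_shape,
        "bias": bias_shape,
        "output": output_shape,
        "params": n_params,
    }


info = dense_shapes((1, 7, 11, 20), units=10)
print(info["kernel"])   # (20, 10)
print(info["bias"])     # (10,)
print(info["output"])   # (1, 7, 11, 10)
print(info["params"])   # 210

print(dense_shapes((1, 7, 11, 20), units=10, use_bias=False)["params"])  # 200
```

The parameter count depends only on the last input dimension and `units`, so adding leading axes to the input does not change the number of weights.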