Spark RDD Operations and Common Operators (Part 2)

The official documentation on RDD operations: http://spark.apache.org/docs/latest/rdd-programming-guide.html#rdd-operations

I. RDD Operations

RDDs support two types of operations: transformations, which create a new dataset from an existing one, and actions, which return a value to the driver program after running a computation on the dataset. For example, map is a transformation that passes each dataset element through a function and returns a new RDD representing the results. On the other hand, reduce is an action that aggregates all the elements of the RDD using some function and returns the final result to the driver program (although there is also a parallel reduceByKey that returns a distributed dataset).

All transformations in Spark are lazy, in that they do not compute their results right away. Instead, they just remember the transformations applied to some base dataset (e.g. a file). The transformations are only computed when an action requires a result to be returned to the driver program. This design enables Spark to run more efficiently. For example, we can realize that a dataset created through map will be used in a reduce and return only the result of the reduce to the driver, rather than the larger mapped dataset.
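A toy plain-Python model can make the lazy behaviour concrete. The class `LazyDataset` below is hypothetical and is not how Spark is implemented: `map` and `filter` only record a step and return a new dataset, and nothing runs until an action (`collect` or `reduce`) is called. Because `reduce` consumes a generator, the mapped values are never materialized as a full list, which mirrors the point about returning only the reduced result to the driver.

```python
import functools


class LazyDataset:
    """Toy model: transformations are recorded, actions execute them (not Spark's real code)."""

    def __init__(self, source, steps=()):
        self.source = source        # base data, standing in for e.g. a file
        self.steps = tuple(steps)   # recorded (kind, func) transformations

    # Transformations: return a new dataset and compute nothing.
    def map(self, func):
        return LazyDataset(self.source, self.steps + (("map", func),))

    def filter(self, pred):
        return LazyDataset(self.source, self.steps + (("filter", pred),))

    # Internal: stream each element through the recorded steps, one at a time.
    def _run(self):
        for x in self.source:
            keep = True
            for kind, func in self.steps:
                if kind == "map":
                    x = func(x)
                elif not func(x):
                    keep = False
                    break
            if keep:
                yield x

    # Actions: trigger the computation and return a value.
    def collect(self):
        return list(self._run())

    def reduce(self, func):
        return functools.reduce(func, self._run())


seen = []


def double(x):
    seen.append(x)      # records when map actually runs
    return x * 2


pipeline = LazyDataset([1, 2, 3, 4, 5]).map(double).filter(lambda x: x > 5)
print(seen)                                  # []: building the pipeline ran nothing
print(pipeline.reduce(lambda a, b: a + b))   # 24 (6 + 8 + 10)
print(seen)                                  # [1, 2, 3, 4, 5]
```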

By default, each transformed RDD may be recomputed each time you run an action on it. However, you may also persist an RDD in memory using the persist (or cache) method, in which case Spark will keep the elements around on the cluster for much faster access the next time you query it. There is also support for persisting RDDs on disk, or replicated across multiple nodes.
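Recomputation versus caching can be modelled the same way. In the hypothetical `ToyRDD` below (plain Python, not Spark's implementation), each action reruns the compute function unless `cache()` was called, in which case the first action stores the elements and later actions reuse them. As in Spark, `cache()` itself computes nothing; in real PySpark the calls are `rdd.cache()` or `rdd.persist(...)` with a `StorageLevel` such as `StorageLevel.MEMORY_AND_DISK`.

```python
class ToyRDD:
    """Toy model of recomputation versus caching (not Spark's real implementation)."""

    def __init__(self, compute):
        self._compute = compute     # rebuilds the data from scratch (the "lineage")
        self._use_cache = False
        self._cached = None
        self.computations = 0       # how many times the data was actually computed

    def cache(self):
        self._use_cache = True      # only marks the dataset; nothing is computed here
        return self

    def _data(self):
        if self._cached is not None:
            return self._cached
        self.computations += 1
        data = self._compute()
        if self._use_cache:
            self._cached = data     # the first action fills the cache
        return data

    # Two actions that both need the data.
    def count(self):
        return len(self._data())

    def sum(self):
        return sum(self._data())    # built-in sum


def expensive():
    return [x * x for x in range(1, 6)]   # stands in for reading and transforming a file


plain = ToyRDD(expensive)
plain.count()
plain.sum()
print(plain.computations)    # 2: the data was recomputed for each action

cached = ToyRDD(expensive).cache()
cached.count()
cached.sum()
print(cached.computations)   # 1: the second action reused the cached elements
```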

In summary:

RDDs support two types of operations:

1)transformations: create a new RDD from an existing one.

2)actions: run a computation on the dataset and return a value to the driver program.

II. Common Operators

(1)Transformation operators

1)map:

map(func): applies func to every element of the RDD and returns a new RDD containing the results.

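```python
# map operator
# Assumes sc is an existing SparkContext (e.g. the one the pyspark shell provides)
def mymap():
    data = [1, 2, 3, 4, 5]
    rdd1 = sc.parallelize(data)
    rdd2 = rdd1.map(lambda x: x * 2)
    print(rdd2.collect())

# Output: [2, 4, 6, 8, 10]
```

2)filter: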

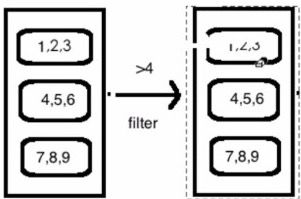

filter(func): keeps the elements for which func returns true and returns them as a new distributed dataset.

```python
def myfilter():
    data = [1, 2, 3, 4, 5]
    rdd1 = sc.parallelize(data)
    mapRdd = rdd1.map(lambda x: x * 2)
    filterRdd = mapRdd.filter(lambda x: x > 5)
    print(filterRdd.collect())
    # Shorter, chained form:
    # rdd = rdd1.map(lambda x: x * 2).filter(lambda x: x > 5)
    # print(rdd.collect())

# Output: [6, 8, 10]
```

3)flatMap:

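flatMap(func): similar to map, but each input item can be mapped to zero or more output items, so func should return a sequence (for example a list) rather than a single item; these sequences are flattened into the new RDD.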
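The difference between map and flatMap is easiest to see on the same input. The helpers `model_map` and `model_flat_map` are hypothetical plain-Python stand-ins (no Spark needed) for what the two operators produce on the lines used in this section's flatMap example: in PySpark, `rdd.map(lambda line: line.split(" "))` would likewise yield one list per line, while `flatMap` yields the individual words.

```python
def model_map(data, func):
    # map: exactly one output element per input element
    return [func(x) for x in data]


def model_flat_map(data, func):
    # flatMap: func returns an iterable per element; the iterables are concatenated
    return [y for x in data for y in func(x)]


def split_words(line):
    return line.split(" ")


lines = ['hello spark', 'hello world', 'hello world']

print(model_map(lines, split_words))
# [['hello', 'spark'], ['hello', 'world'], ['hello', 'world']]: 3 elements, each a list
print(model_flat_map(lines, split_words))
# ['hello', 'spark', 'hello', 'world', 'hello', 'world']: 6 words
print(model_flat_map(['', 'hi there'], str.split))
# ['hi', 'there']: the empty line produces zero output items
```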

```python
def myflatMap():
    data = ['hello spark', 'hello world', 'hello world']
    rdd1 = sc.parallelize(data)
    rdd2 = rdd1.flatMap(lambda line: line.split(" "))
    print(rdd2.collect())

# Output: ['hello', 'spark', 'hello', 'world', 'hello', 'world']
```

4)groupByKey:

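Gathers all values that share the same key into one group, producing (key, iterable of values) pairs; this requires shuffling data between partitions.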

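```python
def mygroupByKey():
    data = ['hello spark', 'hello world', 'hello world']
    rdd1 = sc.parallelize(data)
    # split each line into words, then turn each word into a (word, 1) pair
    rdd2 = rdd1.flatMap(lambda line: line.split(" ")).map(lambda x: (x, 1))
    groupRdd = rdd2.groupByKey()
    # the grouped values are iterables, so convert them to lists for printing
    print(groupRdd.map(lambda x: {x[0]: list(x[1])}).collect())

# Output (key order may vary): [{'world': [1, 1]}, {'hello': [1, 1, 1]}, {'spark': [1]}]
```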
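Conceptually, groupByKey collects every value of a key into one sequence; in PySpark each group arrives as an iterable (a `ResultIterable`), which is why the example converts it with `list`. The plain-Python model below uses a hypothetical helper and a dict, ignoring partitions and the shuffle, and shows that summing each group gives the word count.

```python
from collections import defaultdict


def model_group_by_key(pairs):
    # Collect all values that share a key, keeping them in arrival order.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


words = 'hello spark hello world hello world'.split()
pairs = [(w, 1) for w in words]
grouped = model_group_by_key(pairs)
print(grouped)                                   # {'hello': [1, 1, 1], 'spark': [1], 'world': [1, 1]}

# Summing each group gives the same counts that reduceByKey computes directly.
print({k: sum(v) for k, v in grouped.items()})   # {'hello': 3, 'spark': 1, 'world': 2}
```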

5)reduceByKey:

reduceByKey(func): merges the values that share a key using func. For example, `rdd2.reduceByKey(lambda x, y: x + y)` adds up the values of each key.

```python
def myReduceByKey():
    data = ['hello spark', 'hello world', 'hello world']
    rdd1 = sc.parallelize(data)
    rdd2 = rdd1.flatMap(lambda line: line.split(" ")).map(lambda x: (x, 1))
    # add up the 1s for each word
    reduceByKeyRdd = rdd2.reduceByKey(lambda x, y: x + y)
    print(reduceByKeyRdd.collect())

# Output (key order may vary): [('world', 2), ('hello', 3), ('spark', 1)]
```
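reduceByKey gives the same counts as grouping and then summing, but Spark first merges the values of each key locally inside every partition and only shuffles those partial results, whereas groupByKey has to ship every individual value. The plain-Python sketch below models that two-step process; the split into two partitions is an assumption made for illustration, and the helper names are hypothetical.

```python
def combine_partition(partition, func):
    # Step 1 (map side): merge values per key inside one partition.
    local = {}
    for key, value in partition:
        local[key] = func(local[key], value) if key in local else value
    return local


def model_reduce_by_key(partitions, func):
    # Step 2 (reduce side): merge the per-partition partial results for each key.
    merged = {}
    shuffled_records = 0
    for partition in partitions:
        for key, value in combine_partition(partition, func).items():
            shuffled_records += 1   # one partial result per key per partition is sent
            merged[key] = func(merged[key], value) if key in merged else value
    return merged, shuffled_records


# The (word, 1) pairs from the example, split into two hypothetical partitions.
partitions = [
    [('hello', 1), ('spark', 1), ('hello', 1)],
    [('world', 1), ('hello', 1), ('world', 1)],
]
counts, sent = model_reduce_by_key(partitions, lambda x, y: x + y)
print(counts)   # {'hello': 3, 'spark': 1, 'world': 2}
print(sent)     # 4 partial sums shuffled; groupByKey would still ship all 6 values
```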

6)union:

Returns the union of two RDDs; duplicate elements are kept.

```python
def myunion():
    a = sc.parallelize([1, 2, 3])
    b = sc.parallelize([3, 4, 5, 2, 1, 5])
    print(a.union(b).collect())

# Output: [1, 2, 3, 3, 4, 5, 2, 1, 5]
```

7)distinct:

Removes duplicate elements.

```python
def mydistinct():
    a = sc.parallelize([1, 2, 3])
    b = sc.parallelize([3, 4, 2])
    print(a.union(b).distinct().collect())

# Output (order may vary): [4, 1, 2, 3]
```
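distinct has to find equal elements that may sit in different partitions, so it needs a shuffle. It can be expressed with the pair operators from this section: map each element to (x, None), reduceByKey to collapse duplicates, then keep the keys; PySpark's own distinct in rdd.py is written essentially this way. The plain-Python model below (hypothetical helper, no Spark) follows the same three steps, with a dict standing in for the shuffle. It keeps first-seen order, whereas Spark's result order depends on hash partitioning, which is why the output above is [4, 1, 2, 3].

```python
def model_distinct(data):
    # Step 1: map each element to a (key, None) pair.
    pairs = [(x, None) for x in data]
    # Step 2: "reduce by key": equal keys collapse into one entry,
    # keeping the first value (like reduceByKey(lambda x, _: x)).
    reduced = {}
    for key, value in pairs:
        reduced.setdefault(key, value)
    # Step 3: keep only the keys.
    return list(reduced)


print(model_distinct([1, 2, 3] + [3, 4, 2]))   # [1, 2, 3, 4]

# The same steps in PySpark, assuming an existing RDD named rdd:
# rdd.map(lambda x: (x, None)).reduceByKey(lambda x, _: x).map(lambda x: x[0])
```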

8)join:

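Joins two RDDs of (key, value) pairs on their keys. Besides the inner join (join), there are outer joins: leftOuterJoin, rightOuterJoin and fullOuterJoin.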
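The four joins differ only in which keys survive and where None is filled in. Below is a plain-Python model over lists of (key, value) pairs; `model_join` and its `how` parameter are hypothetical (the RDD API uses separate methods instead), there is no Spark involved, and the order of the result is not significant. It uses the same data as this section's join example.

```python
def model_join(left, right, how="inner"):
    """Pair up two lists of (key, value) pairs the way pair-RDD joins do."""
    left_keys = {k for k, _ in left}
    right_keys = {k for k, _ in right}
    # Inner part: every combination of values for keys present on both sides.
    result = [(k, (lv, rv)) for k, lv in left for rk, rv in right if k == rk]
    if how in ("left", "full"):
        # keys only in left get None for the right-hand value
        result += [(k, (lv, None)) for k, lv in left if k not in right_keys]
    if how in ("right", "full"):
        # keys only in right get None for the left-hand value
        result += [(k, (None, rv)) for k, rv in right if k not in left_keys]
    return result


a = [("A", 'a1'), ("C", 'c1'), ("D", 'd1'), ("F", 'f1'), ('H', 'h1')]
b = [("A", 'a2'), ("C", 'c2'), ("C", 'c3'), ("E", 'e1')]
for how in ("inner", "left", "right", "full"):
    print(how, sorted(model_join(a, b, how)))
```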

```python
def myjoin():
    a = sc.parallelize([("A", 'a1'), ("C", 'c1'), ("D", 'd1'), ("F", 'f1'), ('H', 'h1')])
    b = sc.parallelize([("A", 'a2'), ("C", 'c2'), ("C", 'c3'), ("E", 'e1')])
    # inner join: only keys present in both RDDs
    print(a.join(b).collect())
    # left outer join: every key of a; missing values from b become None
    print(a.leftOuterJoin(b).collect())
    # right outer join: every key of b; missing values from a become None
    print(a.rightOuterJoin(b).collect())
    # full outer join: every key of either RDD
    print(a.fullOuterJoin(b).collect())

# Output (the order of the pairs may vary):
# [('A', ('a1', 'a2')), ('C', ('c1', 'c2')), ('C', ('c1', 'c3'))]
# [('A', ('a1', 'a2')), ('F', ('f1', None)), ('H', ('h1', None)), ('C', ('c1', 'c2')), ('C', ('c1', 'c3')), ('D', ('d1', None))]
# [('A', ('a1', 'a2')), ('C', ('c1', 'c2')), ('C', ('c1', 'c3')), ('E', (None, 'e1'))]
# [('A', ('a1', 'a2')), ('F', ('f1', None)), ('H', ('h1', None)), ('C', ('c1', 'c2')), ('C', ('c1', 'c3')), ('D', ('d1', None)), ('E', (None, 'e1'))]
```

(2)Action operators

Common actions include collect, count, take, reduce, saveAsTextFile and foreach; see rdd.py in the PySpark source for the full list.
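reduce combines the elements inside each partition first and then combines the per-partition results, so how the calls are grouped depends on how the data is partitioned. That is why the Spark documentation asks for a function that is commutative and associative. The plain-Python sketch below uses a hypothetical helper and hypothetical partition splits (no Spark) to show that addition gives the same answer either way, while subtraction does not.

```python
import functools
import operator


def model_rdd_reduce(partitions, func):
    # Reduce each non-empty partition locally, then reduce the partial results.
    partials = [functools.reduce(func, part) for part in partitions if part]
    return functools.reduce(func, partials)


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
one_partition = [data]
two_partitions = [data[:5], data[5:]]

print(model_rdd_reduce(one_partition, operator.add))    # 55
print(model_rdd_reduce(two_partitions, operator.add))   # 55: addition is associative
print(model_rdd_reduce(one_partition, operator.sub))    # -53
print(model_rdd_reduce(two_partitions, operator.sub))   # 15: depends on the partitioning
```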

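```python
def myaction():
    data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    rdd = sc.parallelize(data)
    print(rdd.sum())                       # sum of all elements
    print(rdd.take(5))                     # first 5 elements
    print(rdd.min())                       # smallest element
    print(rdd.max())                       # largest element
    print(rdd.top(2))                      # 2 largest elements, in descending order
    print(rdd.reduce(lambda x, y: x + y))  # aggregate the elements with a function
    # foreach applies the function on the executors and returns nothing to the driver;
    # in local mode the printed lines appear in the console, and their order may vary
    rdd.foreach(lambda x: print(x))

# Output:
# 55
# [1, 2, 3, 4, 5]
# 1
# 10
# [10, 9]
# 55
# 1
# 2
# 3
# 4
# 5
# 6
# 7
# 8
# 9
# 10
```

Related posts: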

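Hadoop and PySpark: Installing and Running the Environment (Part 1)

Spark RDD Operations and Common Operators (Part 2)

PySpark: A Hands-on Case Study Using Operators (Part 3)

Spark Run Modes and Deployment (Part 4)

Spark Core Explained (Part 5)

PySpark: Spark Core Tuning (Part 6)

PySpark: Using Spark SQL (Part 7)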