Kernel Interrupt Analysis, Part 6: softirq

Abstract

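The kernel currently offers three main interrupt-handling mechanisms: the top half, the bottom half (softirq, tasklet, workqueue), and the threaded irq handler. The top half needs no introduction. The threaded irq handler is listed separately from the bottom half because the two differ in the following ways:

1. How they are scheduled

A threaded irq handler is scheduled by the system scheduler. A bottom half is scheduled by the top half (it can also be scheduled through ksoftirqd).

2. Execution context

A threaded irq handler runs in process context.

Bottom half: softirq and tasklet run in interrupt context; workqueue runs in process context.

What they have in common is that they defer part, or most, of an interrupt's work.

A side note: a system call is handled as an exception. It is implemented with a software interrupt (not a softirq) and runs in process context.

This post analyzes the softirq part of the bottom half.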

softirq

软中断类型

379 /* PLEASE, avoid to allocate new softirqs, if you need not _really_ high

380 frequency threaded job scheduling. For almost all the purposes

381 tasklets are more than enough. F.e. all serial device BHs et

382 al. should be converted to tasklets, not to softirqs.

383 */

384

385 enum

386 {

387 HI_SOFTIRQ=0,

388 TIMER_SOFTIRQ,

389 NET_TX_SOFTIRQ,

390 NET_RX_SOFTIRQ,

391 BLOCK_SOFTIRQ,

392 BLOCK_IOPOLL_SOFTIRQ,

393 TASKLET_SOFTIRQ,

394 SCHED_SOFTIRQ,

395 HRTIMER_SOFTIRQ,

396 RCU_SOFTIRQ, /* Preferable RCU should always be the last softirq */

397

398 NR_SOFTIRQS

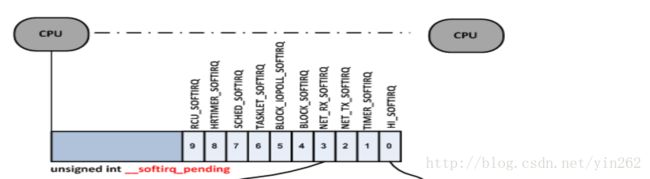

399 };系统已经定义好10种软中断类型,优先级依次降低,并且提示开发人员avoid to allocate new softirqs,开发最常使用的是HI_SOFTIRQ和TASKLET_SOFTIRQ。

Softirq vector table

Like the static linear vector table used for hardware interrupts, softirqs have a vector table of their own. Its size is, of course, 10 (NR_SOFTIRQS).

Each element holds the handler function for one softirq.

    static struct softirq_action softirq_vec[NR_SOFTIRQS] __cacheline_aligned_in_smp;

    /* softirq mask and active fields moved to irq_cpustat_t in
     * asm/hardirq.h to get better cache usage.  KAO
     */

    struct softirq_action
    {
        void (*action)(struct softirq_action *);
    };
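To make the table concrete, here is a minimal user-space sketch (not kernel code) of an array of handler slots indexed by an enum. All names starting with `model_` or `MODEL_` are hypothetical and exist only for this illustration; the only thing taken from the kernel is the shape, one function pointer per softirq number.

```c
#include <stddef.h>

/* Hypothetical, shortened stand-in for the kernel's softirq enum. */
enum { MODEL_HI = 0, MODEL_TIMER, MODEL_TASKLET, MODEL_NR };

/* Same shape as struct softirq_action: a single handler pointer. */
struct model_action {
	void (*action)(struct model_action *);
};

/* One slot per softirq number, like softirq_vec[NR_SOFTIRQS]. */
static struct model_action model_softirq_vec[MODEL_NR];

static int model_tasklet_runs;	/* counts calls to the demo handler */

/* Demo handler; a real one would do the deferred work. */
static void model_tasklet_action(struct model_action *a)
{
	(void)a;
	model_tasklet_runs++;
}

/* Filling a slot only stores the pointer. */
static void model_register(int nr, void (*action)(struct model_action *))
{
	model_softirq_vec[nr].action = action;
}

/* Look the slot up by number and call it; returns 1 if a handler ran. */
static int model_dispatch(int nr)
{
	struct model_action *h = &model_softirq_vec[nr];

	if (h->action == NULL)
		return 0;
	h->action(h);
	return 1;
}
```

Calling `model_register(MODEL_TASKLET, model_tasklet_action)` and then `model_dispatch(MODEL_TASKLET)` runs the handler once; dispatching an empty slot does nothing.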

Softirq initialization

Each softirq type calls open_softirq during initialization to install its handler function. Taking TASKLET_SOFTIRQ as an example:

    void __init softirq_init(void)
    {
        int cpu;

        for_each_possible_cpu(cpu) {
            per_cpu(tasklet_vec, cpu).tail =
                &per_cpu(tasklet_vec, cpu).head;
            per_cpu(tasklet_hi_vec, cpu).tail =
                &per_cpu(tasklet_hi_vec, cpu).head;
        }

        open_softirq(TASKLET_SOFTIRQ, tasklet_action);
        open_softirq(HI_SOFTIRQ, tasklet_hi_action);
    }

    void open_softirq(int nr, void (*action)(struct softirq_action *))
    {
        softirq_vec[nr].action = action;
    }

Raising softirqs

API

The API for raising a softirq is raise_softirq_irqoff, as used here by __tasklet_schedule:

    void __tasklet_schedule(struct tasklet_struct *t)
    {
        unsigned long flags;

        local_irq_save(flags);
        t->next = NULL;
        *__this_cpu_read(tasklet_vec.tail) = t;
        __this_cpu_write(tasklet_vec.tail, &(t->next));
        raise_softirq_irqoff(TASKLET_SOFTIRQ);
        local_irq_restore(flags);
    }
    EXPORT_SYMBOL(__tasklet_schedule);

One detail: local CPU interrupts must be disabled before raise_softirq_irqoff is called. Does that mean interrupts stay disabled for the whole time a softirq executes? Of course not, as the analysis below shows.
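The two lines that touch tasklet_vec.tail are a tail-pointer append on a singly linked list. The sketch below reproduces that pattern in plain user-space C so it can be tried in isolation. The `model_` types and functions are hypothetical stand-ins, not the kernel's definitions, and the sketch ignores the per-CPU and interrupt-disabling aspects.

```c
#include <stddef.h>

/* Hypothetical stand-in for struct tasklet_struct: only the link matters. */
struct model_tasklet {
	struct model_tasklet *next;
	int id;
};

/* Head pointer plus a pointer to the last 'next' field (or to head). */
struct model_tasklet_head {
	struct model_tasklet *head;
	struct model_tasklet **tail;
};

/* Empty list: tail points at head, the same setup softirq_init() performs. */
static void model_list_init(struct model_tasklet_head *l)
{
	l->head = NULL;
	l->tail = &l->head;
}

/* O(1) append with no special case for the empty list. */
static void model_list_append(struct model_tasklet_head *l,
			      struct model_tasklet *t)
{
	t->next = NULL;
	*l->tail = t;		/* link after the current last element */
	l->tail = &t->next;	/* the new element's 'next' is the new tail slot */
}
```

Because tail always points at the slot where the next element must be written, the first append writes through it into head and later appends write into the previous element's next field; no branch is needed.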

raise_softirq_irqoff

    /*
     * This function must run with irqs disabled!
     */
    inline void raise_softirq_irqoff(unsigned int nr)
    {
        __raise_softirq_irqoff(nr);                 /* (1) */

        /*
         * If we're in an interrupt or softirq, we're done
         * (this also catches softirq-disabled code). We will
         * actually run the softirq once we return from
         * the irq or softirq.
         *
         * Otherwise we wake up ksoftirqd to make sure we
         * schedule the softirq soon.
         */
        if (!in_interrupt())                        /* (2) */
            wakeup_softirqd();
    }

1. __raise_softirq_irqoff

It calls or_softirq_pending, which sets the bit for this softirq in irq_stat.__softirq_pending. For irq_stat.__softirq_pending, see Appendix: __softirq_pending.

    #define or_softirq_pending(x) this_cpu_or(irq_stat.__softirq_pending, (x))

2. in_interrupt checks whether we are currently in interrupt context. The exact logic is described in Appendix: in_interrupt.

If we are in interrupt context, nothing more is done, because the pending softirq will be processed once the current interrupt finishes.

Otherwise, the ksoftirqd thread is woken to process the softirq.

So nothing is actually run here. The softirq is only marked pending and waits to be processed later, either on interrupt exit or by explicitly waking ksoftirqd. The earlier suspicion, that local CPU interrupts stay disabled while a softirq executes, therefore does not hold.
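The "mark pending now, run later" behaviour can be sketched in a few lines of user-space C. This is only a model: `model_pending` stands in for irq_stat.__softirq_pending, `model_in_interrupt` for the result of in_interrupt(), and `model_wakeups` merely counts how often ksoftirqd would have been woken. All of these names are made up for the example.

```c
#include <stdbool.h>
#include <stdint.h>

static uint32_t model_pending;		/* stands in for __softirq_pending */
static bool model_in_interrupt;		/* stands in for in_interrupt() */
static int model_wakeups;		/* simulated ksoftirqd wakeups */

/* Like __raise_softirq_irqoff(): only set the bit, run nothing. */
static void model_raise_bit(unsigned int nr)
{
	model_pending |= UINT32_C(1) << nr;
}

/* Like raise_softirq_irqoff(): mark pending, then decide who runs it. */
static void model_raise(unsigned int nr)
{
	model_raise_bit(nr);
	if (!model_in_interrupt)
		model_wakeups++;	/* process context: hand over to the thread */
	/* interrupt context: the interrupt exit path will notice the bit */
}
```

Raising from simulated interrupt context only changes the bitmask; raising from simulated process context also records a wakeup.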

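When pending softirqs are run

As the figure shows, the kernel runs pending softirqs mainly in the following situations:

- irq_exit: on interrupt exit, the kernel checks whether any softirq is pending

- local_bh_enable

- netif_rx_ni

Every path ends up in __do_softirq. Some call __do_softirq directly, others reach it by waking ksoftirqd.

Besides these three, other references and blogs also mention:

4. On SMP, after an inter-processor interrupt has been handled

5. On systems that use the APIC, after a local interrupt has been handled

This post analyzes the first two situations.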

irq_exit

The most common call path is do_IRQ calling irq_exit as it leaves the interrupt.

    /*
     * Exit an interrupt context. Process softirqs if needed and possible:
     */
    void irq_exit(void)
    {
    #ifndef __ARCH_IRQ_EXIT_IRQS_DISABLED
        local_irq_disable();
    #else
        WARN_ON_ONCE(!irqs_disabled());
    #endif

        account_irq_exit_time(current);
        preempt_count_sub(HARDIRQ_OFFSET);                  /* (1) */
        if (!in_interrupt() && local_softirq_pending())     /* (2) */
            invoke_softirq();

        tick_irq_exit();
        rcu_irq_exit();
        trace_hardirq_exit(); /* must be last! */
    }

1. Subtract the hardirq count from the preempt count; the hardware interrupt itself has already been handled.

2. If we are no longer in interrupt context and a softirq is pending, call invoke_softirq.

    static inline void invoke_softirq(void)
    {
        if (!force_irqthreads) {
    #ifdef CONFIG_HAVE_IRQ_EXIT_ON_IRQ_STACK
            /*
             * We can safely execute softirq on the current stack if
             * it is the irq stack, because it should be near empty
             * at this stage.
             */
            __do_softirq();
    #else
            /*
             * Otherwise, irq_exit() is called on the task stack that can
             * be potentially deep already. So call softirq in its own stack
             * to prevent from any overrun.
             */
            do_softirq_own_stack();
    #endif
        } else {
            wakeup_softirqd();
        }
    }

When forced interrupt threading is enabled (force_irqthreads, which is set by the threadirqs boot parameter on kernels built with CONFIG_IRQ_FORCED_THREADING), the ksoftirqd thread is woken here. Otherwise the pending softirqs are run directly on interrupt exit. The ksoftirqd path is analyzed next.
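The decision taken by irq_exit and invoke_softirq can be condensed into one pure function. The sketch below is a user-space model, not kernel code. The mask values assume the preempt_count layout of 3.x-era kernels (softirq count in bits 8-15, hardirq count in bits 16-19, NMI in bit 20), and every `model_`/`MODEL_` name is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed preempt_count layout; see the lead-in above. */
#define MODEL_SOFTIRQ_MASK	0x0000ff00u
#define MODEL_HARDIRQ_MASK	0x000f0000u
#define MODEL_NMI_MASK		0x00100000u
#define MODEL_HARDIRQ_OFFSET	0x00010000u

enum model_exit_action { MODEL_NOTHING, MODEL_RUN_INLINE, MODEL_WAKE_THREAD };

/*
 * Decide what an irq_exit()-like path would do.
 * count:   preempt count while still inside the hardirq handler
 * pending: softirq pending bitmask
 * forced:  stands in for force_irqthreads
 */
static enum model_exit_action model_irq_exit(uint32_t count, uint32_t pending,
					     bool forced)
{
	uint32_t irq_mask = MODEL_SOFTIRQ_MASK | MODEL_HARDIRQ_MASK |
			    MODEL_NMI_MASK;

	count -= MODEL_HARDIRQ_OFFSET;	/* leave this hardirq level */

	if ((count & irq_mask) != 0 || pending == 0)
		return MODEL_NOTHING;	/* still nested, or nothing to do */

	return forced ? MODEL_WAKE_THREAD : MODEL_RUN_INLINE;
}
```

The nested cases are the interesting ones: if the hardirq interrupted a softirq (softirq bits set) or another hardirq (count still non-zero after the subtraction), nothing is run at this level and the outer context takes care of the pending bits.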

ksoftirqd

The kernel defines one ksoftirqd thread per CPU to process softirqs.

    DEFINE_PER_CPU(struct task_struct *, ksoftirqd);

    static struct smp_hotplug_thread softirq_threads = {
        .store              = &ksoftirqd,
        .thread_should_run  = ksoftirqd_should_run,
        .thread_fn          = run_ksoftirqd,
        .thread_comm        = "ksoftirqd/%u",
    };

Once woken, the thread calls run_ksoftirqd.

run_ksoftirqd

    static void run_ksoftirqd(unsigned int cpu)
    {
        local_irq_disable();                        /* (1) */
        if (local_softirq_pending()) {              /* (2) */
            /*
             * We can safely run softirq on inline stack, as we are not deep
             * in the task stack here.
             */
            __do_softirq();
            local_irq_enable();                     /* (3) */
            cond_resched();

            preempt_disable();
            rcu_note_context_switch(cpu);
            preempt_enable();

            return;
        }
        local_irq_enable();
    }

1. On entry, interrupts are disabled; this is paired with the enable at (3). It is tempting to conclude that all interrupts on this CPU stay masked while softirqs are processed. They do not: __do_softirq enables and disables interrupts itself.

2. If a softirq is pending, call __do_softirq.

__do_softirq

    asmlinkage void __do_softirq(void)
    {
        unsigned long end = jiffies + MAX_SOFTIRQ_TIME;
        unsigned long old_flags = current->flags;
        int max_restart = MAX_SOFTIRQ_RESTART;
        struct softirq_action *h;
        bool in_hardirq;
        __u32 pending;
        int softirq_bit;
        int cpu;

        /*
         * Mask out PF_MEMALLOC s current task context is borrowed for the
         * softirq. A softirq handled such as network RX might set PF_MEMALLOC
         * again if the socket is related to swap
         */
        current->flags &= ~PF_MEMALLOC;                     /* (1) */

        pending = local_softirq_pending();                  /* (2) */
        account_irq_enter_time(current);                    /* (3) */

        __local_bh_disable_ip(_RET_IP_, SOFTIRQ_OFFSET);    /* (4) */
        in_hardirq = lockdep_softirq_start();

        cpu = smp_processor_id();                           /* (5) */
    restart:
        /* Reset the pending bitmask before enabling irqs */
        set_softirq_pending(0);                             /* (6) */

        local_irq_enable();                                 /* (7) */

        h = softirq_vec;                                    /* (8) */

        while ((softirq_bit = ffs(pending))) {              /* (9) */
            unsigned int vec_nr;
            int prev_count;

            h += softirq_bit - 1;                           /* (9.1) */

            vec_nr = h - softirq_vec;
            prev_count = preempt_count();                   /* (9.2) */

            kstat_incr_softirqs_this_cpu(vec_nr);

            trace_softirq_entry(vec_nr);
            h->action(h);                                   /* (9.3) */
            trace_softirq_exit(vec_nr);
            if (unlikely(prev_count != preempt_count())) {  /* (9.4) */
                pr_err("huh, entered softirq %u %s %p with preempt_count %08x, exited with %08x?\n",
                       vec_nr, softirq_to_name[vec_nr], h->action,
                       prev_count, preempt_count());
                preempt_count_set(prev_count);
            }
            rcu_bh_qs(cpu);
            h++;                                            /* (9.5) */
            pending >>= softirq_bit;                        /* (9.6) */
        }

        local_irq_disable();                                /* (10) */

        pending = local_softirq_pending();                  /* (11) */
        if (pending) {
            if (time_before(jiffies, end) && !need_resched() &&   /* (11.1) */
                --max_restart)
                goto restart;

            wakeup_softirqd();                              /* (11.2) */
        }

        lockdep_softirq_end(in_hardirq);
        account_irq_exit_time(current);
        __local_bh_enable(SOFTIRQ_OFFSET);                  /* (12) */
        WARN_ON_ONCE(in_interrupt());
        tsk_restore_flags(current, old_flags, PF_MEMALLOC);
    }

1. Why clear PF_MEMALLOC here? The softirq borrows the context of whatever task happens to be current. With the flag cleared, memory allocated by the softirq is subject to the buddy system's normal limits.

2. Fetch all currently pending softirqs.

3. Record the time at which softirq processing starts, for time accounting.

4. Disable softirqs on the current CPU, so that softirq processing is not re-entered while __do_softirq runs; newly raised softirqs are only marked pending (my understanding).

The "disable" in __local_bh_disable_ip simply adds SOFTIRQ_OFFSET to the preempt count. Needless to say, scheduling is not allowed from this point on. Remember the in_interrupt check seen earlier? If in_interrupt is true, another softirq may be in the middle of being processed.

5. Get the current CPU number.

6. Clear all pending softirqs in irq_stat.__softirq_pending.

7. Enable interrupts on the current CPU. Hardware interrupts are therefore disabled only in the early part of softirq processing; the main part can be interrupted by a hardirq.

8. Get softirq_vec, the softirq vector table.

9. Find the first softirq in the pending set. Softirqs are therefore executed in priority order. As step 4 shows, however, a running softirq cannot be interrupted by a newly raised one: a softirq can interrupt neither another softirq nor a hardirq.

9.1 Get the corresponding softirq vector and its number vec_nr.

9.2 Read the preempt count.

9.3 Call the handler function of the softirq vector.

9.4 The preempt count differs before and after the handler ran.

We know that the preempt count is a per-CPU variable:

    DECLARE_PER_CPU(int, __preempt_count);

A change means that a softirq, a hardirq, or an NMI occurred on this CPU, or that the softirq handler triggered a schedule. Softirqs were disabled earlier, and if a hardirq had occurred the CPU would have been interrupted and gone through the hardirq path, so the most likely cause is that the softirq handler scheduled, for example by sleeping (again, my understanding). The kernel prints an error message to alert the user.

9.4 A hardirq occurred; we know that a hardirq has higher priority than ...

9.5 h moves on to the next softirq vector. The next iteration does not recompute h from scratch: 9.1 advances h relative to its current position, so this increment steps past the vector that was just handled and keeps h in line with the shifted pending mask from 9.6.

9.6 Shift out the bits handled so far, so that the next iteration finds the next pending softirq bit.

10. Interrupts on the current CPU are disabled here because wakeup_softirqd may be called below.

11. Step 9 walked through and executed every softirq in __softirq_pending; borrowing a figure from the web, the layout is as follows.

While the softirq handlers run, new softirqs may be raised. They do not interrupt the handler that is currently running, but their pending bits are set, for example through the __raise_softirq_irqoff -> or_softirq_pending path analyzed earlier.

The kernel then handles these newly raised softirqs. If softirq processing has not run out of time, no other task is waiting to be scheduled, and steps 6-9 have not yet been repeated 10 times in a row, it jumps back to restart. Otherwise the kernel considers softirqs to be arriving too frequently: continuing would put a heavy load on the system, starve other tasks, and hurt overall performance. The remaining softirqs are therefore deferred until the next time ksoftirqd is scheduled.
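The interplay of 9.1, 9.5 and 9.6, and the restart condition in 11.1, are easier to follow in a standalone model. The sketch below is user-space C with hypothetical `model_` names. It uses a hand-written find-first-set helper instead of the kernel's ffs, a plain `<` comparison instead of time_before (so it ignores jiffies wraparound), and assumes a restart budget of 10 as described above.

```c
#include <stdint.h>

#define MODEL_NR 10	/* number of softirq vectors in this model */

static int model_order[MODEL_NR];	/* vector numbers in the order they ran */
static int model_order_len;

/* 1-based position of the lowest set bit, 0 if none (same contract as ffs). */
static int model_ffs(uint32_t x)
{
	int pos = 1;

	if (x == 0)
		return 0;
	while ((x & 1u) == 0) {
		x >>= 1;
		pos++;
	}
	return pos;
}

/* Walk a snapshot of the pending mask the way the loop in step 9 does. */
static void model_run_pending(uint32_t pending)
{
	int h = 0;	/* index into the vector table, plays the role of 'h' */
	int bit;

	pending &= (UINT32_C(1) << MODEL_NR) - 1;	/* only valid vectors */
	model_order_len = 0;
	while ((bit = model_ffs(pending)) != 0) {
		h += bit - 1;				/* 9.1: skip clear bits */
		model_order[model_order_len++] = h;	/* 9.3: "run" vector h */
		h++;					/* 9.5: step past it */
		pending >>= bit;			/* 9.6: drop handled bits */
	}
}

/* 11.1: restart only while time, scheduler and budget all allow it. */
static int model_should_restart(unsigned long now, unsigned long end,
				int need_resched, int *max_restart)
{
	return now < end && !need_resched && --(*max_restart) != 0;
}
```

With bits 0, 3, 6 and 9 pending, the walk visits vectors 0, 3, 6, 9 in that order, lowest number first. Without the `h++` in 9.5, h would lag one position behind the shifted mask after the first hit. Starting from a budget of 10, `model_should_restart` allows 9 restarts, which gives 10 passes in total.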

local_bh_enable

As the name says, it enables the bottom half. Most callers of local_bh_enable turn out to be in networking code and drivers. Since I know little about networking, only the path from local_bh_enable to softirq is analyzed here.

    static inline void local_bh_enable(void)
    {
        __local_bh_enable_ip(_THIS_IP_, SOFTIRQ_DISABLE_OFFSET);
    }

    void __local_bh_enable_ip(unsigned long ip, unsigned int cnt)
    {
        WARN_ON_ONCE(in_irq() || irqs_disabled());          /* (1) */
    #ifdef CONFIG_TRACE_IRQFLAGS
        local_irq_disable();
    #endif
        /*
         * Are softirqs going to be turned on now:
         */
        if (softirq_count() == SOFTIRQ_DISABLE_OFFSET)
            trace_softirqs_on(ip);
        /*
         * Keep preemption disabled until we are done with
         * softirq processing:
         */
        preempt_count_sub(cnt - 1);                         /* (2) */

        if (unlikely(!in_interrupt() && local_softirq_pending())) {
            /*
             * Run softirq if any pending. And do it in its own stack
             * as we may be calling this deep in a task call stack already.
             */
            do_softirq();
        }

        preempt_count_dec();                                /* (3) */
    #ifdef CONFIG_TRACE_IRQFLAGS
        local_irq_enable();
    #endif
        preempt_check_resched();
    }
    EXPORT_SYMBOL(__local_bh_enable_ip);

- A sanity check comes first. in_irq tests whether we are in hardirq context; clearly, if a hardirq is being handled, it takes precedence. The check also tests whether interrupts are disabled.

- If we are not in any interrupt context and a softirq is pending, call do_softirq.
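The two-stage subtraction at (2) and (3) is easy to misread, so here is a small user-space model of the counting. It assumes SOFTIRQ_OFFSET is 0x100 and SOFTIRQ_DISABLE_OFFSET is twice that, as on 3.x-era kernels, and it assumes local_bh_disable adds the same amount that local_bh_enable removes. The `model_` names are hypothetical, and "running" the softirqs is reduced to clearing a bitmask and bumping a counter.

```c
#include <stdint.h>

#define MODEL_SOFTIRQ_OFFSET		0x100u
#define MODEL_SOFTIRQ_DISABLE_OFFSET	(2 * MODEL_SOFTIRQ_OFFSET)
/* Softirq, hardirq and NMI fields together; what in_interrupt() looks at. */
#define MODEL_IRQ_MASK			0x001fff00u

static uint32_t model_count;		/* stands in for preempt_count() */
static uint32_t model_pending;		/* pending softirq bits */
static int model_softirq_runs;		/* how often pending work was run */

static void model_bh_disable(void)
{
	model_count += MODEL_SOFTIRQ_DISABLE_OFFSET;
}

static void model_bh_enable(void)
{
	/* (2): drop all but one unit, so preemption stays off for now. */
	model_count -= MODEL_SOFTIRQ_DISABLE_OFFSET - 1;

	/* Only the outermost enable sees the interrupt fields clear. */
	if ((model_count & MODEL_IRQ_MASK) == 0 && model_pending != 0) {
		model_pending = 0;
		model_softirq_runs++;
	}

	model_count -= 1;	/* (3): drop the last unit */
}
```

With two nested disables and a pending bit set, the inner enable leaves the softirq field non-zero and runs nothing; only the outer enable runs the pending work, and it does so while the count is still 1, which is what keeps preemption disabled during processing.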

do_softirq

    asmlinkage void do_softirq(void)
    {
        __u32 pending;
        unsigned long flags;

        if (in_interrupt())                     /* (1) */
            return;

        local_irq_save(flags);                  /* (2) */

        pending = local_softirq_pending();      /* (3) */

        if (pending)
            do_softirq_own_stack();             /* (4) */

        local_irq_restore(flags);               /* (5) */
    }

1. Check in_interrupt once more.

2. Disable interrupts on the local CPU.

3. Get the pending softirq bits.

4. Call do_softirq_own_stack.

5. Restore interrupts on the local CPU.

do_softirq_own_stack

    void do_softirq_own_stack(void)
    {
        struct thread_info *curctx;
        union irq_ctx *irqctx;
        u32 *isp;

        curctx = current_thread_info();
        irqctx = __this_cpu_read(softirq_ctx);
        irqctx->tinfo.task = curctx->task;
        irqctx->tinfo.previous_esp = current_stack_pointer;

        /* build the stack frame on the softirq stack */
        isp = (u32 *) ((char *)irqctx + sizeof(*irqctx));

        call_on_stack(__do_softirq, isp);
    }

This simply saves the current task's context and switches to the softirq stack. As analyzed earlier, each CPU has its own hardirq stack and its own softirq stack.

It looked odd at first that run_ksoftirqd performs no such stack switch. The comment inside run_ksoftirqd gives the reason: the ksoftirqd thread is not deep in its task stack at that point, so running the softirqs inline on that stack is safe.

Appendix

__softirq_pending

Each CPU defines an irq_stat variable:

    DEFINE_PER_CPU_SHARED_ALIGNED(irq_cpustat_t, irq_stat);

Its type is irq_cpustat_t, in which __softirq_pending records which softirqs are pending and waiting to be processed.

    typedef struct {
        unsigned int __softirq_pending;
        unsigned int __nmi_count;       /* arch dependent */
    #ifdef CONFIG_X86_LOCAL_APIC
        unsigned int apic_timer_irqs;   /* arch dependent */
        unsigned int irq_spurious_count;
        unsigned int icr_read_retry_count;
    #endif
    #ifdef CONFIG_HAVE_KVM
        unsigned int kvm_posted_intr_ipis;
    #endif
        unsigned int x86_platform_ipis; /* arch dependent */
        unsigned int apic_perf_irqs;
        unsigned int apic_irq_work_irqs;
    #ifdef CONFIG_SMP
        unsigned int irq_resched_count;
        unsigned int irq_call_count;
        /*
         * irq_tlb_count is double-counted in irq_call_count, so it must be
         * subtracted from irq_call_count when displaying irq_call_count
         */
        unsigned int irq_tlb_count;
    #endif
    #ifdef CONFIG_X86_THERMAL_VECTOR
        unsigned int irq_thermal_count;
    #endif
    #ifdef CONFIG_X86_MCE_THRESHOLD
        unsigned int irq_threshold_count;
    #endif
    } ____cacheline_aligned irq_cpustat_t;

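in_interrupt

Interrupt context necessarily covers every kind of interrupt: HARDIRQ, SOFTIRQ, and NMI (whether NMI is available depends on the architecture; x86 has it).

In "x86 Kernel Interrupt Analysis, Part 3: Interrupt Handling Flow" we analyzed how preempt_count is laid out. Deciding whether we are in interrupt context comes down to checking whether the corresponding bits of preempt_count are set.

    #define in_interrupt()  (irq_count())

    #define irq_count()     (preempt_count() & (HARDIRQ_MASK | SOFTIRQ_MASK \
                             | NMI_MASK))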
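As a quick way to experiment with these masks, the following user-space sketch applies them to a few sample counts. The field layout (preempt depth in bits 0-7, softirq in bits 8-15, hardirq in bits 16-19, NMI in bit 20) is an assumption matching 3.x-era kernels, and the `model_` helpers are hypothetical stand-ins for the macros above that take the count as a parameter.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed field layout of preempt_count; see the lead-in above. */
#define MODEL_PREEMPT_MASK	0x000000ffu	/* preempt_disable() depth */
#define MODEL_SOFTIRQ_MASK	0x0000ff00u
#define MODEL_HARDIRQ_MASK	0x000f0000u
#define MODEL_NMI_MASK		0x00100000u

/* Like in_irq(): true only while a hardirq is being handled. */
static bool model_in_irq(uint32_t count)
{
	return (count & MODEL_HARDIRQ_MASK) != 0;
}

/* Like in_interrupt(): hardirq, softirq (or bh disabled), or NMI. */
static bool model_in_interrupt(uint32_t count)
{
	return (count & (MODEL_HARDIRQ_MASK | MODEL_SOFTIRQ_MASK |
			 MODEL_NMI_MASK)) != 0;
}
```

Note that a count with only the preempt field set, for example 0x1 after a single preempt_disable, is not interrupt context, while 0x100 is: the softirq field is non-zero both while a softirq runs and while bottom halves are merely disabled.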

    EXPORT_PER_CPU_SYMBOL(irq_stat);

    void __raise_softirq_irqoff(unsigned int nr)
    {
        trace_softirq_raise(nr);
        or_softirq_pending(1UL << nr);
    }