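Chapter 7. Hadoop MapReduce Framework Principles (Shuffle Mechanism)

I. Introduction

1. Overview

- The data processing that takes place after the map method and before the reduce method is called Shuffle.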
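To make the definition concrete, here is a toy, in-memory sketch of what Shuffle does to map output before reduce() runs: partition by key hash, sort the keys, and group the values of equal keys. It is plain Java (9+, for `List.of`/`Map.entry`), not Hadoop code. The class name `ShuffleSketch` is hypothetical, and the real Shuffle also spills to disk, merges spill files and copies data across the network.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy illustration of Shuffle: partition -> sort -> group (hypothetical class, not Hadoop code)
final class ShuffleSketch {

    // Returns one sorted map per ReduceTask; each entry is "one reduce() call": a key plus all its values
    static List<TreeMap<String, List<Integer>>> shuffle(List<Map.Entry<String, Integer>> mapOutput,
                                                        int numReduceTasks) {
        List<TreeMap<String, List<Integer>>> partitions = new ArrayList<>();
        for (int i = 0; i < numReduceTasks; i++) {
            partitions.add(new TreeMap<>()); // TreeMap keeps keys sorted
        }
        for (Map.Entry<String, Integer> kv : mapOutput) {
            // Same formula as Hadoop's default HashPartitioner
            int p = (kv.getKey().hashCode() & Integer.MAX_VALUE) % numReduceTasks;
            // Group values of equal keys together
            partitions.get(p).computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> mapOutput = List.of(
                Map.entry("hadoop", 1), Map.entry("spark", 1), Map.entry("hadoop", 1));
        List<TreeMap<String, List<Integer>>> parts = shuffle(mapOutput, 2);
        for (int i = 0; i < parts.size(); i++) {
            System.out.println("partition " + i + ": " + parts.get(i));
        }
    }
}
```

Each TreeMap entry stands for one reduce() call: a key together with every value collected for it.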

2. Shuffle diagram

II. Partition

1. Default partitioning

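- Motivation

- Requirement: write the statistics to different files according to some condition (partitioning). For example, write the results to different files according to the province where each phone number is registered.

- Default Partitioner: the partition number is the key's hashCode modulo the number of ReduceTasks, so the user has no control over which partition a particular key lands in.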

```java
public class HashPartitioner<K, V> extends Partitioner<K, V> {

    /** Use {@link Object#hashCode()} to partition. */
    public int getPartition(K key, V value, int numReduceTasks) {
        // "& Integer.MAX_VALUE" clears the sign bit, so the result always lies in [0, numReduceTasks)
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }
}
```

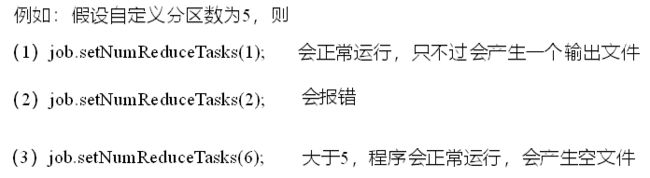
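The `& Integer.MAX_VALUE` in HashPartitioner is easy to gloss over. The plain-Java sketch below (class and method names are hypothetical) compares it with two naive alternatives, using `Integer` keys because `Integer.hashCode()` is simply the int value. A negative hash code makes plain `%` negative, and `Math.abs` does not help for `Integer.MIN_VALUE`, while clearing the sign bit always yields a valid partition number.

```java
// Compares HashPartitioner's masking trick with two naive alternatives (hypothetical demo class)
final class PartitionMaskDemo {

    // Same formula as HashPartitioner.getPartition
    static int masked(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Naive: a negative hash code gives a negative remainder in Java
    static int plainModulo(Object key, int numReduceTasks) {
        return key.hashCode() % numReduceTasks;
    }

    // Naive: Math.abs(Integer.MIN_VALUE) is still Integer.MIN_VALUE (negative)
    static int absModulo(Object key, int numReduceTasks) {
        return Math.abs(key.hashCode()) % numReduceTasks;
    }

    public static void main(String[] args) {
        Object[] keys = {-7, Integer.MIN_VALUE}; // Integer.hashCode() returns the int value itself
        for (Object key : keys) {
            System.out.printf("%d -> masked=%d, plain=%d, abs=%d%n",
                    key, masked(key, 5), plainModulo(key, 5), absModulo(key, 5));
        }
    }
}
```

With five ReduceTasks, the key -7 maps to partition 1 with masking but to -2 with plain `%`; `Integer.MIN_VALUE` maps to 0 with masking but to -3 with `Math.abs`.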

2. Custom Partitioner

3. Custom partitioner implementation

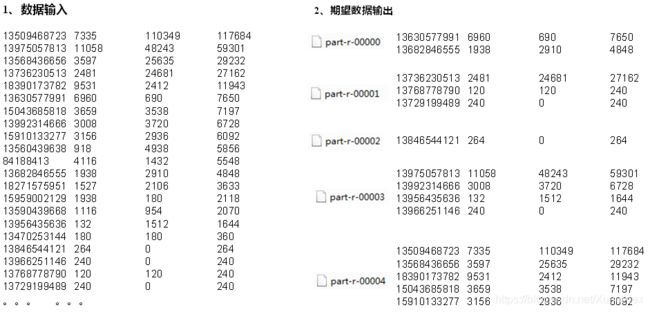

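- Requirement: write the statistics to different files according to the province where each phone number is registered (partitioning)

- Custom partitioner code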

```java
// Same package as FlowBean and FlowsumDriver, which uses this class without an import
package com.lj.flowsum;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

public class ProvincePartitioner extends Partitioner<Text, FlowBean> {

    @Override
    public int getPartition(Text key, FlowBean value, int numPartitions) {
        // 1 Get the first three digits of the phone number
        String preNum = key.toString().substring(0, 3);

        // 2 Decide which province (partition) the number belongs to
        int partition;
        if ("136".equals(preNum)) {
            partition = 0;
        } else if ("137".equals(preNum)) {
            partition = 1;
        } else if ("138".equals(preNum)) {
            partition = 2;
        } else if ("139".equals(preNum)) {
            partition = 3;
        } else {
            partition = 4; // every other prefix
        }
        return partition;
    }
}
```
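The same prefix logic can also be written as a lookup table, which is easier to extend when more prefixes are added. This is a Hadoop-free sketch for illustration (Java 9+ for `Map.of`): `PrefixPartitionSketch`, `partitionFor` and the handling of numbers shorter than three characters are assumptions, not part of the original partitioner, which would throw on such input because of `substring(0, 3)`.

```java
import java.util.Map;

// Hypothetical table-driven version of the prefix -> partition mapping above
final class PrefixPartitionSketch {

    // Copied from the if/else chain: prefix -> partition number
    private static final Map<String, Integer> PREFIX_TO_PARTITION =
            Map.of("136", 0, "137", 1, "138", 2, "139", 3);
    private static final int OTHER_PARTITION = 4;

    static int partitionFor(String phone) {
        if (phone == null || phone.length() < 3) {
            return OTHER_PARTITION; // assumption: malformed numbers go to the catch-all partition
        }
        return PREFIX_TO_PARTITION.getOrDefault(phone.substring(0, 3), OTHER_PARTITION);
    }

    public static void main(String[] args) {
        System.out.println(partitionFor("13560436666")); // prefix 135 is not listed -> 4
        System.out.println(partitionFor("13612345678")); // illustrative number -> 0
    }
}
```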

- In the driver, register the custom partitioner and set the number of ReduceTasks

```java
package com.lj.flowsum;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowsumDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Local test paths; adjust them to your own machine
        args = new String[] {"F:/HadoopTest/MapReduce/input/phone_data.txt", "F:/HadoopTest/MapReduce/output/"};

        Configuration conf = new Configuration();

        // 1 Get the Job object
        Job job = Job.getInstance(conf);

        // 2 Set the jar path
        job.setJarByClass(FlowsumDriver.class);

        // 3 Associate the Mapper and Reducer
        job.setMapperClass(FlowCountMapper.class);
        job.setReducerClass(FlowCountReducer.class);

        // 4 Set the key/value types of the Mapper output
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);

        // 5 Set the key/value types of the final output
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // 8 Register the custom partitioner
        job.setPartitionerClass(ProvincePartitioner.class);

        // 9 Set the matching number of ReduceTasks (ProvincePartitioner returns 0 to 4)
        job.setNumReduceTasks(5);

        // 6 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 7 Submit the job
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```

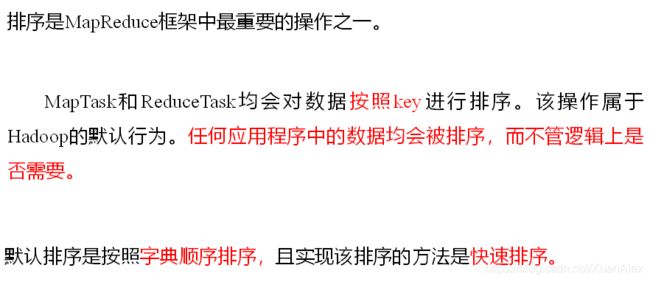

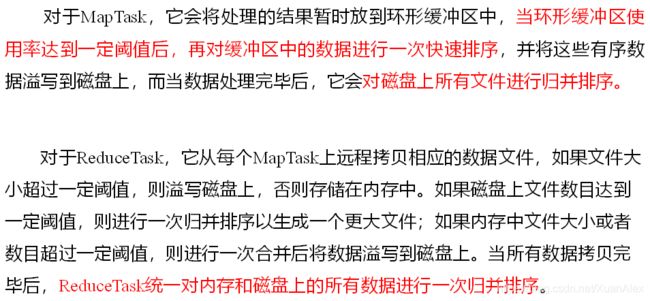
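Because ProvincePartitioner returns partition numbers 0 to 4, the job needs five ReduceTasks to get one output file per partition. The sketch below encodes that rule in plain Java; the class and method are hypothetical. It reflects my understanding of Hadoop's map task: as far as I know, it rejects an out-of-range partition number with an "Illegal partition" error and, when only one ReduceTask is configured, skips the custom partitioner and sends every record to a single file. Treat those two behaviours as assumptions to verify against your Hadoop version.

```java
// Hypothetical check: which output file would a given partition number end up in?
final class ReduceTaskCheck {

    static String outputFile(int partition, int numReduceTasks) {
        if (numReduceTasks == 1) {
            // Assumed behaviour: with a single ReduceTask the custom partitioner is bypassed
            return "part-r-00000";
        }
        if (partition < 0 || partition >= numReduceTasks) {
            // Assumed behaviour: Hadoop fails the map task for an out-of-range partition
            throw new IllegalArgumentException(
                    "partition " + partition + " is out of range for " + numReduceTasks + " reduce tasks");
        }
        return String.format("part-r-%05d", partition);
    }

    public static void main(String[] args) {
        System.out.println(outputFile(4, 5)); // part-r-00004
        System.out.println(outputFile(4, 1)); // part-r-00000
        try {
            outputFile(4, 3); // too few ReduceTasks for partition 4
        } catch (IllegalArgumentException e) {
            System.out.println("error: " + e.getMessage());
        }
    }
}
```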

III. WritableComparable sorting

1. Sorting overview

2. Sorting categories

3. Custom sorting with WritableComparable

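- How it works

- When a bean object is transmitted as the key, it must implement the WritableComparable interface and override compareTo; the framework then sorts the keys using that method.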

```java
@Override
public int compareTo(FlowBean o) {
    int result;
    // Sort by total flow in descending order
    if (sumFlow > o.getSumFlow()) {
        result = -1;
    } else if (sumFlow < o.getSumFlow()) {
        result = 1;
    } else {
        result = 0;
    }
    return result;
}
```
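The if/else chain is equivalent to `Long.compare` with its arguments swapped, which is shorter and returns 0 on ties. Below is a Hadoop-free sketch: `FlowTotal` is a hypothetical stand-in that keeps only the total flow and is sorted with `Collections.sort`, which calls `compareTo` much as the framework's default key comparator does for a WritableComparable key. The sample totals are illustrative (2070 is 1116 + 954 from the sample line used later).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for FlowBean's ordering: only the total flow matters, largest first
final class FlowTotal implements Comparable<FlowTotal> {
    final long sumFlow;

    FlowTotal(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    @Override
    public int compareTo(FlowTotal o) {
        // Arguments swapped on purpose: same result as the -1/1/0 chain, i.e. descending order
        return Long.compare(o.sumFlow, this.sumFlow);
    }

    @Override
    public String toString() {
        return Long.toString(sumFlow);
    }

    public static void main(String[] args) {
        List<FlowTotal> totals = new ArrayList<>(List.of(
                new FlowTotal(2070), new FlowTotal(11000), new FlowTotal(2070), new FlowTotal(180)));
        Collections.sort(totals);   // uses compareTo
        System.out.println(totals); // [11000, 2070, 2070, 180]
    }
}
```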

4. WritableComparable sorting in practice (total sort)

- Write the FlowBean class

```java
package com.lj.flowsum;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class FlowBean implements WritableComparable<FlowBean> {

    private long upFlow;   // upstream flow
    private long downFlow; // downstream flow
    private long sumFlow;  // total flow

    // No-arg constructor, required so that Hadoop can create instances via reflection
    public FlowBean() {
        super();
    }

    public FlowBean(long upFlow, long downFlow) {
        super();
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        sumFlow = upFlow + downFlow;
    }

    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeLong(upFlow);
        dataOutput.writeLong(downFlow);
        dataOutput.writeLong(sumFlow);
    }

    @Override
    public void readFields(DataInput dataInput) throws IOException {
        // Must read the fields in the same order in which write() serialized them
        upFlow = dataInput.readLong();
        downFlow = dataInput.readLong();
        sumFlow = dataInput.readLong();
    }

    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    public long getSumFlow() {
        return sumFlow;
    }

    public void setSumFlow(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    public void set(long upFlow2, long downFlow2) {
        upFlow = upFlow2;
        downFlow = downFlow2;
        sumFlow = upFlow2 + downFlow2;
    }

    @Override
    public String toString() {
        return upFlow + "\t" + downFlow + "\t" + sumFlow;
    }

    @Override
    public int compareTo(FlowBean flowBean) {
        int result;
        // Sort by total flow in descending order
        if (sumFlow > flowBean.getSumFlow()) {
            result = -1;
        } else if (sumFlow < flowBean.getSumFlow()) {
            result = 1;
        } else {
            result = 0;
        }
        return result;
    }
}
```
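The comment in readFields (read in the same order as write) can be checked without a cluster. This sketch uses only java.io: `FlowRecord` is a hypothetical copy of FlowBean's three fields and its write/readFields pair, and `roundTrip` serializes an object into a byte array and reads it back into a fresh instance created through the no-arg constructor, the same create-then-readFields pattern Hadoop uses for Writables.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical Hadoop-free copy of FlowBean's serialization logic
final class FlowRecord {
    long upFlow;
    long downFlow;
    long sumFlow;

    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    void readFields(DataInput in) throws IOException {
        // Same order as write(); swapping two lines here would silently swap the values
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // Serialize into a byte array, then deserialize into a new object
    static FlowRecord roundTrip(FlowRecord src) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            src.write(out);
        }
        FlowRecord copy = new FlowRecord(); // no-arg constructor, then readFields
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            copy.readFields(in);
        }
        return copy;
    }

    public static void main(String[] args) throws IOException {
        FlowRecord r = new FlowRecord();
        r.upFlow = 1116;
        r.downFlow = 954;
        r.sumFlow = r.upFlow + r.downFlow;
        FlowRecord copy = roundTrip(r);
        System.out.println(copy.upFlow + "\t" + copy.downFlow + "\t" + copy.sumFlow); // 1116	954	2070
    }
}
```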

- Write the Mapper class

```java
package com.lj.flowsum;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class FlowCountMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

    private Text v = new Text();
    private FlowBean bean = new FlowBean();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Sample input line (tab-separated): 7 13560436666 120.196.100.99 1116 954 200

        // 1 Read one line
        String line = value.toString();

        // 2 Split on \t
        String[] fields = line.split("\t");

        // 3 Populate the output objects
        v.set(fields[1]); // phone number
        long upFlow = Long.parseLong(fields[fields.length - 3]);
        long downFlow = Long.parseLong(fields[fields.length - 2]);
        bean.set(upFlow, downFlow);

        // 4 Emit FlowBean as the key so that records are sorted by total flow
        context.write(bean, v);
    }
}
```
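The mapper takes the phone number from `fields[1]` and counts the two flow columns from the end of the line. A plain-Java sketch of just that parsing step follows; class and method names are hypothetical. Counting from the end presumably keeps the parser working when some lines carry extra columns in the middle; that is an assumption about the input file, not something stated above.

```java
// Hypothetical plain-Java version of the mapper's parsing step
final class PhoneLineParser {

    // Returns {upFlow, downFlow}, taken as the 3rd- and 2nd-to-last columns
    static long[] parseFlows(String line) {
        String[] fields = line.split("\t");
        long upFlow = Long.parseLong(fields[fields.length - 3]);
        long downFlow = Long.parseLong(fields[fields.length - 2]);
        return new long[] {upFlow, downFlow};
    }

    // The phone number is always the second column
    static String parsePhone(String line) {
        return line.split("\t")[1];
    }

    public static void main(String[] args) {
        // Sample line from the mapper comment, assuming tab separators
        String line = "7\t13560436666\t120.196.100.99\t1116\t954\t200";
        long[] flows = parseFlows(line);
        System.out.println(parsePhone(line) + " up=" + flows[0] + " down=" + flows[1]);
    }
}
```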

- Write the Reducer class

```java
package com.lj.flowsum;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class FlowCountReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

    @Override
    protected void reduce(FlowBean key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        // Loop over all values: phones with the same total flow share one key,
        // so writing only once would drop records
        for (Text text : values) {
            context.write(text, key);
        }
    }
}
```
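Why the reducer loops instead of writing once: two phones with the same total flow produce keys that compareTo treats as equal, so they arrive together in a single reduce() call. The plain-Java simulation below (hypothetical names, Java 9+) groups by total in descending order with a TreeMap and writes every phone in each group, as the loop does.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical simulation of equal keys being grouped into one reduce() call
final class EqualKeyGrouping {

    static List<String> simulate(List<Map.Entry<String, Long>> phoneTotals) {
        // Keys sorted by total flow, largest first; equal totals share one entry
        TreeMap<Long, List<String>> groups = new TreeMap<>(Comparator.reverseOrder());
        for (Map.Entry<String, Long> e : phoneTotals) {
            groups.computeIfAbsent(e.getValue(), k -> new ArrayList<>()).add(e.getKey());
        }
        List<String> output = new ArrayList<>();
        for (Map.Entry<Long, List<String>> group : groups.entrySet()) { // one "reduce() call" per key
            for (String phone : group.getValue()) {                    // the loop in FlowCountReducer
                output.add(phone + "\t" + group.getKey());
            }
        }
        return output;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Long>> totals = List.of(
                Map.entry("phoneA", 2070L), Map.entry("phoneB", 9000L), Map.entry("phoneC", 2070L));
        simulate(totals).forEach(System.out::println);
    }
}
```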

- Write the Driver class

```java
package com.lj.flowsum;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowsumDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Local test paths; adjust them to your own machine
        args = new String[] {"F:/HadoopTest/MapReduce/input/phone_data.txt", "F:/HadoopTest/MapReduce/output/"};

        Configuration conf = new Configuration();

        // 1 Get the Job object
        Job job = Job.getInstance(conf);

        // 2 Set the jar path
        job.setJarByClass(FlowsumDriver.class);

        // 3 Associate the Mapper and Reducer
        job.setMapperClass(FlowCountMapper.class);
        job.setReducerClass(FlowCountReducer.class);

        // 4 Mapper output types: FlowBean is now the key, so records are sorted by total flow
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(Text.class);

        // 5 Set the key/value types of the final output
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // No setNumReduceTasks call: with the default single ReduceTask,
        // all records land in one globally sorted output file (total sort)

        // 6 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 7 Submit the job
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```

5. WritableComparable sorting in practice (sorting within partitions)

- Add the ProvincePartitioner class

```java
package com.lj.flowsum;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

public class ProvincePartitioner extends Partitioner<FlowBean, Text> {

    @Override
    public int getPartition(FlowBean key, Text value, int numPartitions) {
        // In this job the key is the flow information (FlowBean) and the value is the phone number,
        // so the prefix must be taken from the value, not from the key
        String prePhoneNum = value.toString().substring(0, 3);

        int partition = 4; // every other prefix
        if ("136".equals(prePhoneNum)) {
            partition = 0;
        } else if ("137".equals(prePhoneNum)) {
            partition = 1;
        } else if ("138".equals(prePhoneNum)) {
            partition = 2;
        } else if ("139".equals(prePhoneNum)) {
            partition = 3;
        }
        return partition;
    }
}
```

- Register the partitioner class in the driver class

```java
// Load the custom partitioner class
job.setPartitionerClass(ProvincePartitioner.class);

// Set the number of ReduceTasks
job.setNumReduceTasks(5);
```
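The output of this job is sorted only inside each output file. The sketch below reproduces that in plain Java: records are routed by phone prefix as ProvincePartitioner does, then each partition is sorted by total flow in descending order. `PartitionedSortSketch`, its nested `Flow` class and the sample numbers are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical simulation of "sort within partitions"
final class PartitionedSortSketch {

    static final class Flow {
        final String phone;
        final long sumFlow;

        Flow(String phone, long sumFlow) {
            this.phone = phone;
            this.sumFlow = sumFlow;
        }
    }

    // Same prefix rules as ProvincePartitioner
    static int partitionOf(String phone) {
        switch (phone.substring(0, 3)) {
            case "136": return 0;
            case "137": return 1;
            case "138": return 2;
            case "139": return 3;
            default: return 4;
        }
    }

    static List<List<Flow>> sortWithinPartitions(List<Flow> records, int numReduceTasks) {
        if (numReduceTasks < 5) {
            throw new IllegalArgumentException("partitions 0-4 need at least 5 reduce tasks");
        }
        List<List<Flow>> partitions = new ArrayList<>();
        for (int i = 0; i < numReduceTasks; i++) {
            partitions.add(new ArrayList<>());
        }
        for (Flow f : records) {
            partitions.get(partitionOf(f.phone)).add(f); // route by prefix
        }
        for (List<Flow> p : partitions) {
            p.sort(Comparator.comparingLong((Flow f) -> f.sumFlow).reversed()); // descending, per partition only
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<Flow> records = List.of(
                new Flow("13560436666", 2070), new Flow("13600000001", 500),
                new Flow("13700000002", 800), new Flow("13600000003", 9000));
        List<List<Flow>> partitions = sortWithinPartitions(records, 5);
        for (int i = 0; i < partitions.size(); i++) {
            StringBuilder line = new StringBuilder("partition " + i + ":");
            for (Flow f : partitions.get(i)) {
                line.append(' ').append(f.phone).append('=').append(f.sumFlow);
            }
            System.out.println(line);
        }
    }
}
```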

IV. Combiner

1. Overview

2. Steps to implement a custom Combiner

- Define a custom Combiner that extends Reducer and override the reduce method

```java
package com.lj.wordcount;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {

    private IntWritable v = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;

        // 1 Sum the counts
        for (IntWritable value : values) {
            sum += value.get();
        }
        v.set(sum);

        // 2 Emit
        context.write(key, v);
    }
}
```

- Register it in the Job driver class

```java
job.setCombinerClass(WordCountCombiner.class);
```
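A Combiner is a reducer that runs on each MapTask's output before that output is sent across the network. The plain-Java sketch below (hypothetical names; the input words are illustrative) counts how many (word, count) records leave the map side with and without local aggregation, and confirms the final totals are the same.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of a Combiner's effect on map output volume
final class CombinerSketch {

    // Local aggregation of one MapTask's output (what WordCountCombiner does)
    static Map<String, Integer> combine(List<String> wordsFromOneMapTask) {
        Map<String, Integer> partial = new LinkedHashMap<>();
        for (String w : wordsFromOneMapTask) {
            partial.merge(w, 1, Integer::sum);
        }
        return partial;
    }

    // Reduce side: add up the partial sums coming from every MapTask
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new LinkedHashMap<>();
        for (Map<String, Integer> p : partials) {
            p.forEach((w, c) -> total.merge(w, c, Integer::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        List<List<String>> mapTasks = List.of(
                List.of("hadoop", "hadoop", "spark", "hadoop"),
                List.of("spark", "spark", "hadoop"));
        List<Map<String, Integer>> partials = new ArrayList<>();
        int before = 0;
        int after = 0;
        for (List<String> task : mapTasks) {
            before += task.size();           // one (word, 1) record per word without a combiner
            Map<String, Integer> p = combine(task);
            after += p.size();               // one record per distinct word with a combiner
            partials.add(p);
        }
        System.out.println("records sent: " + before + " -> " + after); // 7 -> 4
        System.out.println("totals: " + reduce(partials));               // {hadoop=4, spark=3}
    }
}
```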

3. Combiner in practice

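- Requirement: during counting, aggregate the output of each MapTask locally to reduce the amount of data sent over the network, i.e. use the Combiner feature.

- (1) Input data

A fairly large amount of input data.

- (2) Expected output

The Combine stage receives many input records and, after merging them, emits far fewer records.

- Requirement analysis

- Code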

- Add a WordCountCombiner class that extends Reducer (identical to the class shown in "2. Steps to implement a custom Combiner")


- Option 1: specify WordCountCombiner as the Combiner in the WordCountDriver class

```java
// Enable a combiner and specify which class implements its logic
job.setCombinerClass(WordCountCombiner.class);
```

- Option 2: specify WordCountReducer as the Combiner in the WordCountDriver class

```java
// Enable a combiner and specify which class implements its logic
job.setCombinerClass(WordCountReducer.class);
```
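Option 2 works for word count because addition gives the same result whether it is applied once or in stages (partial sums per MapTask, then a sum of those). The short sketch below contrasts this with an average, where averaging per-MapTask averages changes the answer; `avg` is a hypothetical example of reducer logic that could not simply be reused as a Combiner. The numbers are illustrative.

```java
// Hypothetical demo: staged summation is safe, staged averaging is not
final class CombinerSafety {

    static int sum(int... xs) {
        int s = 0;
        for (int x : xs) {
            s += x;
        }
        return s;
    }

    static double avg(double... xs) {
        double s = 0;
        for (double x : xs) {
            s += x;
        }
        return s / xs.length;
    }

    public static void main(String[] args) {
        // MapTask 1 emits 3, 5, 7; MapTask 2 emits 2, 4
        int direct = sum(3, 5, 7, 2, 4);
        int staged = sum(sum(3, 5, 7), sum(2, 4));
        System.out.println("sum: " + direct + " vs " + staged); // 21 vs 21

        double directAvg = avg(3, 5, 7, 2, 4);
        double stagedAvg = avg(avg(3, 5, 7), avg(2, 4));
        System.out.println("avg: " + directAvg + " vs " + stagedAvg); // 4.2 vs 4.0
    }
}
```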

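V. GroupingComparator grouping (auxiliary sort)

1. Overview

- Group the data in the Reduce phase by one or more fields

- Steps for grouping:

- (1) Define a class that extends WritableComparator

- (2) Override the compare() method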

```java
@Override
public int compare(WritableComparable a, WritableComparable b) {
    int result = 0;
    // Business comparison logic: return 0 when a and b belong to the same group
    return result;
}
```

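- (3) Create a constructor that passes the class of the compared objects to the parent class

```java
protected OrderGroupingComparator() {
    // true: the parent creates OrderBean instances, which it needs to deserialize keys before calling compare()
    super(OrderBean.class, true);
}
```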

2. Grouping example implementation

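- Requirement: given the following order data, find the most expensive item in each order

- Expected output

1 222.8

2 722.4

3 232.8

- Requirement analysis

- (1) Use "order id + transaction amount" as the key. All order records read in the Map phase are then sorted by id in ascending order and, for equal ids, by amount in descending order before being sent to Reduce.

- (2) On the Reduce side, use a GroupingComparator to gather the key-value pairs with the same order id into one group; the first record of each group is the most expensive item of that order.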
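The two analysis steps can be simulated in plain Java: sort by order id ascending and price descending, then start a new group whenever the order id changes and keep the first record of each group. `TopItemPerOrder` and the sample items are hypothetical (the original order table is not reproduced in the text); the sample is chosen so that the result matches the expected output above. Requires Java 9+ for `List.of`.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical simulation of secondary sort + grouping
final class TopItemPerOrder {

    static final class Item {
        final int orderId;
        final double price;

        Item(int orderId, double price) {
            this.orderId = orderId;
            this.price = price;
        }

        @Override
        public String toString() {
            return orderId + "\t" + price;
        }
    }

    static List<Item> mostExpensivePerOrder(List<Item> items) {
        List<Item> sorted = new ArrayList<>(items);
        // Step (1): order id ascending, then price descending
        sorted.sort(Comparator.comparingInt((Item i) -> i.orderId)
                .thenComparing(Comparator.comparingDouble((Item i) -> i.price).reversed()));
        List<Item> result = new ArrayList<>();
        Item groupHead = null;
        for (Item item : sorted) {
            // Step (2): same order id means same group; keep only the first item of each group
            if (groupHead == null || groupHead.orderId != item.orderId) {
                groupHead = item;
                result.add(item);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Item> items = List.of(
                new Item(1, 222.8), new Item(2, 722.4), new Item(1, 33.8),
                new Item(3, 232.8), new Item(3, 33.8), new Item(2, 522.8), new Item(2, 122.4));
        mostExpensivePerOrder(items).forEach(System.out::println);
    }
}
```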

- Code implementation

- Define the OrderBean class that holds the order information

```java
package com.lj.order;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class OrderBean implements WritableComparable<OrderBean> {

    private int order_id;
    private double price;

    public OrderBean() {
        super();
    }

    public OrderBean(int order_id, double price) {
        super();
        this.order_id = order_id;
        this.price = price;
    }

    // Secondary sort: order id ascending, then price descending
    @Override
    public int compareTo(OrderBean orderBean) {
        int result = Integer.compare(order_id, orderBean.getOrder_id());
        if (result == 0) {
            // Arguments swapped for descending price; returns 0 when prices are equal
            result = Double.compare(orderBean.getPrice(), price);
        }
        return result;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(order_id);
        out.writeDouble(price);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        order_id = in.readInt();
        price = in.readDouble();
    }

    public int getOrder_id() {
        return order_id;
    }

    public void setOrder_id(int order_id) {
        this.order_id = order_id;
    }

    public double getPrice() {
        return price;
    }

    public void setPrice(double price) {
        this.price = price;
    }

    @Override
    public String toString() {
        return order_id + "\t" + price;
    }
}
```
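A price tie-break written as `price > other ? -1 : 1` never returns 0, so for two records with the same order id and price, `a.compareTo(b)` and `b.compareTo(a)` both return 1. That violates Comparable's requirement that sgn(a.compareTo(b)) == -sgn(b.compareTo(a)), which sorting code may rely on. The hypothetical helpers below contrast it with `Double.compare`, which sorts descending when its arguments are swapped and returns 0 on ties.

```java
// Hypothetical demo of a consistent versus an inconsistent descending tie-break
final class TieBreakDemo {

    // Never returns 0, even for equal prices
    static int ternary(double a, double b) {
        return a > b ? -1 : 1;
    }

    // Descending order, returns 0 on ties
    static int symmetric(double a, double b) {
        return Double.compare(b, a);
    }

    public static void main(String[] args) {
        double p = 33.8;
        System.out.println("ternary(p, p)   = " + ternary(p, p));   // 1 in both directions
        System.out.println("symmetric(p, p) = " + symmetric(p, p)); // 0
    }
}
```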

- Write the OrderMapper class

```java
package com.lj.order;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class OrderMapper extends Mapper<LongWritable, Text, OrderBean, NullWritable> {

    private OrderBean k = new OrderBean();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // 1 Read one line
        String line = value.toString();

        // 2 Split on \t
        String[] fields = line.split("\t");

        // 3 Populate the key: fields[0] is the order id, fields[2] the price
        k.setOrder_id(Integer.parseInt(fields[0]));
        k.setPrice(Double.parseDouble(fields[2]));

        // 4 Emit
        context.write(k, NullWritable.get());
    }
}
```

- Write the OrderGroupingComparator class

```java
package com.lj.order;

import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

public class OrderGroupingComparator extends WritableComparator {

    protected OrderGroupingComparator() {
        // true: let the parent create OrderBean instances for deserializing keys
        super(OrderBean.class, true);
    }

    // Keys with the same order id belong to the same group (return 0), whatever their price
    @Override
    public int compare(WritableComparable a, WritableComparable b) {
        OrderBean aBean = (OrderBean) a;
        OrderBean bBean = (OrderBean) b;

        int result;
        if (aBean.getOrder_id() > bBean.getOrder_id()) {
            result = 1;
        } else if (aBean.getOrder_id() < bBean.getOrder_id()) {
            result = -1;
        } else {
            result = 0;
        }
        return result;
    }
}
```

- Write the OrderReducer class

```java
package com.lj.order;

import java.io.IOException;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

public class OrderReducer extends Reducer<OrderBean, NullWritable, OrderBean, NullWritable> {

    @Override
    protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // Thanks to the grouping comparator, one call covers a whole order;
        // the key is the group's first record, i.e. the most expensive item
        context.write(key, NullWritable.get());
    }
}
```

- Write the OrderDriver class

```java
package com.lj.order;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class OrderDriver {

    public static void main(String[] args) throws Exception {
        // Set the input and output paths to match the actual paths on your own machine
        args = new String[] {"F:/HadoopTest/MapReduce/input/", "F:/HadoopTest/MapReduce/output"};

        // 1 Get the configuration and the Job object
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // 2 Set the jar loading path
        job.setJarByClass(OrderDriver.class);

        // 3 Load the Mapper and Reducer classes
        job.setMapperClass(OrderMapper.class);
        job.setReducerClass(OrderReducer.class);

        // 4 Set the key/value types of the Mapper output
        job.setMapOutputKeyClass(OrderBean.class);
        job.setMapOutputValueClass(NullWritable.class);

        // 5 Set the key/value types of the final output
        job.setOutputKeyClass(OrderBean.class);
        job.setOutputValueClass(NullWritable.class);

        // 6 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 7 Set the grouping comparator for the Reduce side
        job.setGroupingComparatorClass(OrderGroupingComparator.class);

        // 8 Submit
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```

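A review of earlier material to reinforce the fundamentals!

Study source: Atguigu (尚硅谷) big data video course

A little progress every day; maybe one day you too will become that insignificant!!!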