LDA主题模型练习1

1.本文针对LDA主题模型进行学习和练习,核心摘要如下:

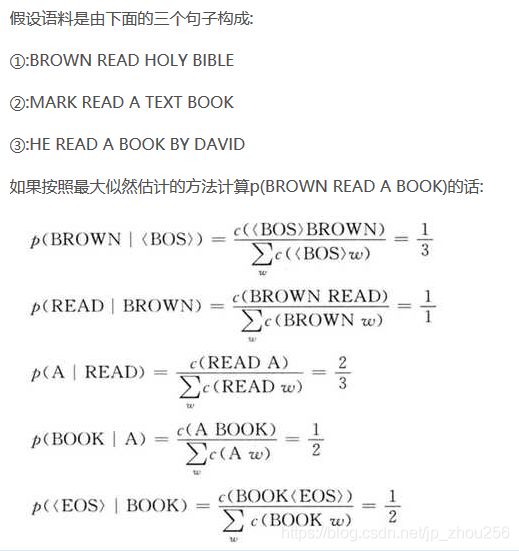

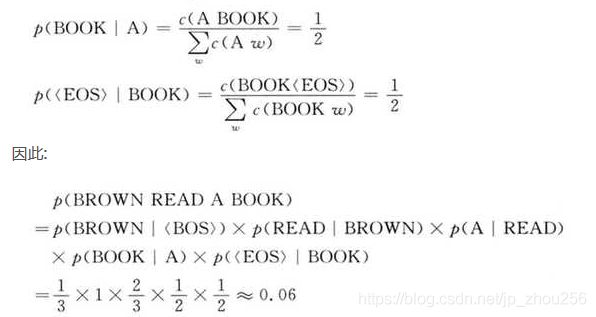

2.NLP中的共现对应条件概率(独立时最特殊),最大似然估计计算字符的共现例子:

2.NLP中的共现对应条件概率(独立时最特殊),最大似然估计计算字符的共现例子:

3.LDA主题模型代码实例

#-*-coding:utf8-*-

import jieba

dir1='E:/ssssszzz/lda/'

def stopwordslist(filepath):

stopwords = [line.strip() for line in open(filepath, 'r',encoding="UTF-8").readlines()]

#readlines是行的list

return stopwords

def seg_sentence(sentence):

sentence_seged = jieba.cut(sentence.strip())

stopwords = stopwordslist(dir1+'stopWords/stopwords.txt')

outstr = ''

for word in sentence_seged:

#jieba对每一行进行分词,并将英文字母大写转为小写

word = word.lower()

#不是停用词,不是特殊符号就加入到str字符串中,并以空格分隔开。

if word not in stopwords:

if word != '\t':

outstr += word

outstr += " "

return outstr

inputs = open(dir1+'input/copurs.txt','r+',encoding="UTF-8")

#读入文本语料,中文强制添加encoding='utf-8'才不会出现下面的gbk报错。

#UnicodeDecodeError: 'gbk' codec can't decode byte 0xbe in position 44: illegal multibyte sequence

content1= inputs.readlines()

inputs.close()

outputs = open(dir1+'input/copurs_out.txt', 'w',encoding="UTF-8") #写入jieba分词的结果

for line in content1:

line_seg = seg_sentence(line)

outputs.write(line_seg + '\n')

outputs.close()

import codecs

from gensim import corpora

from gensim.models import LdaModel

from gensim import models

from gensim.corpora import Dictionary

te = []

fp = codecs.open(dir1+'input/copurs_out.txt','r',encoding="UTF-8")

for line in fp:

line = line.split()

te.append([ w for w in line ])

print(len(te))

dictionary = corpora.Dictionary(te)

corpus = [ dictionary.doc2bow(text) for text in te ]

#语料扔到TF-IDF中计算

tfidf = models.TfidfModel(corpus)

corpus_tfidf = tfidf[corpus]

#########Run the LDA model for XX topics ###############################

lda = LdaModel(corpus=corpus, id2word=dictionary, num_topics=50,passes=2000)

doc_topic = [a for a in lda[corpus]]

####### write the topics in file topics_result.txt ####################

topics_r = lda.print_topics(num_topics = 50, num_words = 10)

topic_name = codecs.open(dir1+'output/topics_result.txt','w',encoding="UTF-8")

for v in topics_r:

topic_name.write(str(v)+'\n')

###################### write the class results to file #########################

###################### each document belongs to which topic ######################

fp2 = codecs.open(dir1+'output/documents_result.txt','w',encoding="UTF-8")

for t in doc_topic:

c = []

c.append([a[1] for a in t])

m = max(c[0])

for i in range(0, len(t)):

if m in t[i]:

#print(t[i])

fp2.write(str(t[i][0]) + ' ' + str(t[i][1]) + '\n')

break

################################ OVER ############################################

fp2.close()

#########Run the LDA model for XX topics ###############################

lda = LdaModel(corpus=corpus, id2word=dictionary, num_topics=50,passes=2000)

doc_topic = [a for a in lda[corpus]]

运行效果:然而不知道是单篇文章没用,还是哪里没弄对,不知道LDA为什么并没有给出具体的主体,也没有实现对多文档的归类功能?

1>.documents_result.txt

41 0.8911111111111114

22 0.988604651162792

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

41 0.6289422620757548

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

43 0.9711764705882348

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

28 0.9772093023255799

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

41 0.7800000000000002

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

8 0.9591666666666674

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

13 0.8775000000000017

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

13 0.9608000000000005

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

14 0.7695384615384631

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

30 0.9387500000000005

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

9 0.9683870967741941

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

38 0.8366666666666672

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

49 0.981153846153846

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

14 0.9777272727272702

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

31 0.9920325203247484

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

26 0.7549999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

35 0.9821818181818174

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

21 0.9833898305084731

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

27 0.9711764705882333

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

21 0.984444444444443

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

41 0.7525000000000003

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

8 0.986756756756759

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

19 0.9920967741935494

0 0.01999999999999999

0 0.01999999999999999

0 0.01999999999999999

45 0.9836666666666665

0 0.01999999999999999

15 0.5099999999999999

2>.topics_result.txt

(0, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(1, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(2, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(3, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(4, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(5, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(6, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(7, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(8, '0.086*"创新" + 0.057*"标准" + 0.048*"技术" + 0.038*"银行" + 0.038*"市场" + 0.038*"间" + 0.038*"体系" + 0.038*"金融" + 0.029*"科技" + 0.029*"标准化"')

(9, '0.077*"技术" + 0.052*"产品" + 0.052*"未来" + 0.052*"世界" + 0.026*"很大" + 0.026*"带来" + 0.026*"随之而来" + 0.026*"新" + 0.026*"颠覆性" + 0.026*"充分运用"')

(10, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(11, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(12, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(13, '0.093*"世界" + 0.074*"科技" + 0.074*"金融" + 0.056*"技术" + 0.056*"支撑" + 0.056*"标准化" + 0.056*"未来" + 0.056*"元素" + 0.037*"工作" + 0.037*"一是"')

(14, '0.098*"标准" + 0.049*"工作组" + 0.049*"技术标准" + 0.039*"银行" + 0.039*"市场" + 0.039*"间" + 0.030*"体系" + 0.020*"区块" + 0.020*"人工智能" + 0.020*"包括"')

(15, '0.101*"途径" + 0.002*"业务" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"sc9" + 0.002*"wg1" + 0.002*"此项工作" + 0.002*"金标委" + 0.002*"提升"')

(16, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(17, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(18, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(19, '0.061*"市场" + 0.046*"银行" + 0.030*"间" + 0.030*"建设" + 0.023*"技术" + 0.023*"金融" + 0.023*"中国" + 0.023*"中国外汇交易中心" + 0.023*"基础设施" + 0.023*"平台"')

(20, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(21, '0.047*"金融" + 0.047*"标准" + 0.047*"国际标准" + 0.031*"市场" + 0.031*"科技" + 0.031*"间" + 0.031*"银行" + 0.023*"发展" + 0.023*"我国" + 0.023*"中国外汇交易中心"')

(22, '0.065*"金融" + 0.065*"市场" + 0.054*"间" + 0.043*"科技" + 0.032*"银行" + 0.032*"发展" + 0.021*"影响" + 0.021*"颠覆性" + 0.021*"交易量" + 0.021*"中"')

(23, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(24, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(25, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(26, '0.084*"三是" + 0.084*"国际标准" + 0.084*"兼容" + 0.002*"核心成员" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"各项" + 0.002*"业务" + 0.002*"大部分"')

(27, '0.072*"fix" + 0.048*"相关" + 0.048*"外" + 0.048*"组织" + 0.048*"工作组" + 0.048*"国际标准" + 0.024*"exchange" + 0.024*"参与" + 0.024*"iso" + 0.024*"互联互通"')

(28, '0.057*"新" + 0.057*"活动" + 0.038*"世界" + 0.038*"物" + 0.038*"机" + 0.038*"融合" + 0.019*"链" + 0.019*"区块" + 0.019*"中" + 0.019*"未来"')

(29, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(30, '0.125*"货币" + 0.042*"世界" + 0.042*"金融交易" + 0.042*"数字" + 0.042*"介质" + 0.042*"思考" + 0.042*"构建" + 0.042*"如果说" + 0.042*"实物" + 0.042*"转换"')

(31, '0.069*"间" + 0.054*"银行" + 0.054*"市场" + 0.046*"标准" + 0.038*"生态圈" + 0.031*"机构" + 0.015*"机制" + 0.015*"服务" + 0.015*"开发" + 0.015*"深度"')

(32, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(33, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(34, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(35, '0.095*"标准" + 0.048*"iso" + 0.048*"语义" + 0.048*"各项" + 0.032*"统一" + 0.032*"组织" + 0.032*"建立" + 0.032*"工作组" + 0.016*"开放" + 0.016*"门户"')

(36, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(37, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(38, '0.072*"二是" + 0.072*"建立" + 0.072*"体系" + 0.072*"生态圈" + 0.072*"标准" + 0.001*"sc9" + 0.001*"模型" + 0.001*"fix" + 0.001*"核心成员" + 0.001*"semantic"')

(39, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(40, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(41, '0.114*"银行" + 0.114*"科技" + 0.113*"间" + 0.112*"市场" + 0.112*"金融" + 0.088*"标准化" + 0.059*"建设" + 0.030*"\ufeff" + 0.001*"此项工作" + 0.001*"核心成员"')

(42, '0.002*"sc9" + 0.002*"此项工作" + 0.002*"semantic" + 0.002*"模型" + 0.002*"fix" + 0.002*"核心成员" + 0.002*"各项" + 0.002*"提升" + 0.002*"大部分" + 0.002*"web"')

(43, '0.048*"世界" + 0.048*"外汇交易" + 0.048*"做" + 0.048*"程序" + 0.048*"交易" + 0.024*"半神" + 0.024*"另一端" + 0.024*"构筑" + 0.024*"很难" + 0.024*"创造"')

4.节选出来《银行间市场文章》中的20个关键词

import jieba

import jieba.analyse as janali #这里必须导入

#sentence = '全国港澳研究会会长徐泽在会上发言指出,学习系列重要讲话要深刻领会 主席关于香港回归后的宪制基础和宪制秩序的论述,这是过去20年特别是中共十八大以来"一国两制"在香港实践取得成功的根本经验。首先,要在夯实 香港的宪制基础、巩固香港的宪制秩序上着力。只有牢牢确立起"一国两制"的宪制秩序,才能保证"一国两制"实践不走样 、不变形。其次,要在完善基本法实施的制度和机制上用功。中央直接行使的权力和特区高度自治权的结合是特区宪制秩 序不可或缺的两个方面,同时必须切实建立以行政长官为核心的行政主导体制。第三,要切实加强香港社会特别是针对公 职人员和青少年的宪法、基本法宣传,牢固树立"一国"意识,坚守"一国"原则。第四,要努力在全社会形成聚焦发展、抵 制泛政治化的氛围和势能,全面准确理解和落实基本法有关经济事务的规定,使香港继续在国家发展中发挥独特作用并由 此让最广大民众获得更实在的利益。'

sentence=''

with open(dir1+'input/copurs.txt','r+',encoding="UTF-8") as fp:

content1=fp.readlines()

for line in content1:

if len(line)==0:

continue

else:

#print(line)

sentence+=line.strip() #将文章做字符串拼接

jieba.analyse.set_stop_words(dir1+'stopWords/stopwords.txt') #导入停用词词典

jieba.load_userdict(dir1+'input/cefts_dict.txt') #导入自定义词典

keywords = janali.extract_tags(sentence, topK=20, withWeight=True, allowPOS=('n','nr','ns'))

效果

('银行间市场', 0.6035416603405825)

('金融科技', 0.27855768938796116)

('标准', 0.2627427868752427)

('标准体系', 0.23213140782330097)

('中国外汇交易中心', 0.172578511944)

('银行间市场技术标准工作组', 0.16249198547631066)

('金融科技标准化', 0.13927884469398058)

('国际标准', 0.1262114562056505)

('创新', 0.12261755526291263)

('生态圈', 0.11933242465533982)

('ISO', 0.11606570391165048)

('建设', 0.11446558441118447)

('技术', 0.10996793425794174)

('金融', 0.10195540484135922)

('标准化', 0.0947095517924272)

('世界', 0.09328703584712622)

('FIX', 0.09285256312932039)

('颠覆性', 0.08509699940038834)

('工作组', 0.08246122214097089)

('发展', 0.07636060204823301)

5.补充例程(LDA代码及数据结构、存储结构解析)

from gensim import corpora, models, similarities

from pprint import pprint

# import logging

# logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

if __name__ == '__main__':

f = open('../data/LDA_test.txt')

stop_list = set('for a of the and to in'.split())

# texts = [line.strip().split() for line in f]

# print(texts)

texts = [[word for word in line.strip().lower().split() if word not in stop_list] for line in f]

print('Text = ')

pprint(texts)

dictionary = corpora.Dictionary(texts) #这是一个字典,直接使用字典的遍历方式查看具体的数据结构和组织方式

type(dictionary) #gensim.corpora.dictionary.Dictionary

for key,value in dictionary.items():

print(key,'===========',value)

V = len(dictionary)

corpus = [dictionary.doc2bow(text) for text in texts]

type(corpus) #list,每个元素是有个tuple对象,tuple中分装了词的ID和频数

corpus_tfidf = models.TfidfModel(corpus)[corpus] #转化所有9篇文章中的每个词汇的词频占比与逆文档率的乘积

#corpus_tfidf = models.TfidfModel(corpus)[corpus[0]] #输出第一篇文档的每个字的词频占比与逆文档率的乘积

type(models.TfidfModel(corpus)) # gensim.models.tfidfmodel.TfidfModel

print(models.TfidfModel(corpus)) #TfidfModel(num_docs=9, num_nnz=51),文档数为9,51个单词

print('TF-IDF:')

for c in corpus_tfidf: #多篇文章时为二维数组,输出是每一篇文章的每个词汇tf-idf的值

print(c)

print('\nLSI Model:')

lsi = models.LsiModel(corpus_tfidf, num_topics=3, id2word=dictionary)

topic_result = [a for a in lsi[corpus_tfidf]]

pprint(topic_result)

print('LSI Topics:')

pprint(lsi.print_topics(num_topics=3, num_words=5))

#9篇文章两两之间的相似度

similarity = similarities.MatrixSimilarity(lsi[corpus_tfidf]) # similarities.Similarity()

print('Similarity:')

pprint(list(similarity))#发现针对第一篇文章为1的,它和第3和4篇文章主题是最相近的

print('\nLDA Model:')

num_topics = 2

lda = models.LdaModel(corpus_tfidf, num_topics=num_topics, id2word=dictionary,

alpha='auto', eta='auto', minimum_probability=0.001)

type(lda)#gensim.models.ldamodel.LdaModel

doc_topic = [doc_t for doc_t in lda[corpus_tfidf]] #9篇文章的主体

doc_topic = [doc_t for doc_t in lda[corpus_tfidf[0]]] #只输出第一篇文章的两个主题

print('Document-Topic:\n')

pprint(doc_topic)

#for doc_topic in lda.get_document_topics(corpus_tfidf[0]): #单篇文章的主题分数

for doc_topic in lda.get_document_topics(corpus_tfidf): #多篇文章

print(doc_topic)

for topic_id in range(num_topics):

print('Topic', topic_id)

# pprint(lda.get_topic_terms(topicid=topic_id))

pprint(lda.show_topic(topic_id))

similarity = similarities.MatrixSimilarity(lda[corpus_tfidf])

print('Similarity:')

pprint(list(similarity))

for top in lda.print_topics(10):

print(top) #输出主题=关键词的线性加权组合序列

hda = models.HdpModel(corpus_tfidf, id2word=dictionary)

topic_result = [a for a in hda[corpus_tfidf]]

print('\n\nUSE WITH CARE--\nHDA Model:')

pprint(topic_result)

print('HDA Topics:')

print(hda.print_topics(num_topics=2, num_words=5))

Text =

[['human', 'machine', 'interface', 'lab', 'abc', 'computer', 'applications'],

['survey', 'user', 'opinion', 'computer', 'system', 'response', 'time'],

['eps', 'user', 'interface', 'management', 'system'],

['system', 'human', 'system', 'engineering', 'testing', 'eps'],

['relation', 'user', 'perceived', 'response', 'time', 'error', 'measurement'],

['generation', 'random', 'binary', 'unordered', 'trees'],

['intersection', 'graph', 'paths', 'trees'],

['graph', 'minors', 'iv', 'widths', 'trees', 'well', 'quasi', 'ordering'],

['graph', 'minors', 'survey']]

17 =========== relation

18 =========== perceived

34 =========== quasi

28 =========== paths

23 =========== random

29 =========== widths

19 =========== measurement

2 =========== human

20 =========== error

27 =========== graph

21 =========== binary

1 =========== applications

8 =========== response

9 =========== user

25 =========== generation

5 =========== computer

16 =========== engineering

14 =========== management

7 =========== opinion

32 =========== ordering

10 =========== survey

26 =========== intersection

0 =========== abc

30 =========== well

15 =========== testing

31 =========== iv

13 =========== eps

24 =========== unordered

4 =========== interface

11 =========== system

22 =========== trees

3 =========== lab

33 =========== minors

12 =========== time

6 =========== machine

TfidfModel(num_docs=9, num_nnz=51)

TF-IDF:

[(0, 0.4301019571350565), (1, 0.4301019571350565), (2, 0.2944198962221451), (3, 0.4301019571350565), (4, 0.2944198962221451), (5, 0.2944198962221451), (6, 0.4301019571350565)]

[(5, 0.3726494271826947), (7, 0.5443832091958983), (8, 0.3726494271826947), (9, 0.27219160459794917), (10, 0.3726494271826947), (11, 0.27219160459794917), (12, 0.3726494271826947)]

[(4, 0.438482464916089), (9, 0.32027755044706185), (11, 0.32027755044706185), (13, 0.438482464916089), (14, 0.6405551008941237)]

[(2, 0.3449874408519962), (11, 0.5039733231394895), (13, 0.3449874408519962), (15, 0.5039733231394895), (16, 0.5039733231394895)]

[(8, 0.30055933182961736), (9, 0.21953536176370683), (12, 0.30055933182961736), (17, 0.43907072352741366), (18, 0.43907072352741366), (19, 0.43907072352741366), (20, 0.43907072352741366)]

[(21, 0.48507125007266594), (22, 0.24253562503633297), (23, 0.48507125007266594), (24, 0.48507125007266594), (25, 0.48507125007266594)]

[(22, 0.31622776601683794), (26, 0.6324555320336759), (27, 0.31622776601683794), (28, 0.6324555320336759)]

[(22, 0.20466057569885868), (27, 0.20466057569885868), (29, 0.40932115139771735), (30, 0.40932115139771735), (31, 0.40932115139771735), (32, 0.40932115139771735), (33, 0.2801947048062438), (34, 0.40932115139771735)]

[(10, 0.6282580468670046), (27, 0.45889394536615247), (33, 0.6282580468670046)]

LSI Model:

[[(0, 0.34057117986841867), (1, -0.20602251622679593), (2, 0.2516322932612177)],

[(0, 0.6933040002171559),

(1, 0.007232758390388435),

(2, -0.42828031011610446)],

[(0, 0.5902607670389721), (1, -0.3526046949085568), (2, 0.30883209258107536)],

[(0, 0.5214901821825134), (1, -0.33887976154055316), (2, 0.4328304015902547)],

[(0, 0.3953319317635442),

(1, -0.059192853366600705),

(2, -0.6817088379096499)],

[(0, 0.036353173528493994), (1, 0.18146550208819023), (2, 0.2040948457195041)],

[(0, 0.14709012328778975), (1, 0.49432948127822346), (2, 0.2520741552399365)],

[(0, 0.21407117317565383), (1, 0.6406456664453949), (2, 0.21254395627262415)],

[(0, 0.40066568318170775), (1, 0.6413108299093997), (2, -0.04311301052997242)]]

LSI Topics:

[(0,

'0.400*"system" + 0.318*"survey" + 0.290*"user" + 0.274*"eps" + '

'0.236*"management"'),

(1,

'0.421*"minors" + 0.420*"graph" + 0.293*"survey" + 0.239*"trees" + '

'0.226*"paths"'),

(2,

'-0.318*"response" + -0.318*"time" + -0.261*"error" + -0.261*"perceived" + '

'-0.261*"relation"')]

Similarity:

[array([ 1. , 0.33056617, 0.989982 , 0.99866086, -0.06638948,

0.20311232, 0.04321319, -0.01678792, -0.0182744 ], dtype=float32),

array([ 0.33056617, 0.99999994, 0.4467398 , 0.28133896, 0.87825346,

-0.271207 , -0.0051405 , 0.10747484, 0.48745936], dtype=float32),

array([ 0.989982 , 0.4467398 , 1. , 0.9822476 , 0.07334356,

0.09873419, -0.02226283, -0.06350847, -0.00516158], dtype=float32),

array([ 0.99866086, 0.28133896, 0.9822476 , 1.0000001 , -0.11496954,

0.21939825, 0.04205609, -0.02510544, -0.04712418], dtype=float32),

array([-0.06638948, 0.87825346, 0.07334356, -0.11496954, 1. ,

-0.62235594, -0.31511295, -0.17545702, 0.2503119 ], dtype=float32),

array([ 0.20311232, -0.271207 , 0.09873419, 0.21939825, -0.62235594,

0.99999994, 0.9262762 , 0.85813475, 0.5853234 ], dtype=float32),

array([ 0.04321319, -0.0051405 , -0.02226283, 0.04205609, -0.31511295,

0.9262762 , 1. , 0.9883332 , 0.83967173], dtype=float32),

array([-0.01678792, 0.10747484, -0.06350847, -0.02510544, -0.17545702,

0.85813475, 0.9883332 , 1. , 0.9088737 ], dtype=float32),

array([-0.0182744 , 0.48745936, -0.00516158, -0.04712418, 0.2503119 ,

0.5853234 , 0.83967173, 0.9088737 , 1. ], dtype=float32)]

LDA Model:

Document-Topic:

[(0, 0.2965872083745731), (1, 0.7034127916254268)]

[(0, 0.2964665697022038), (1, 0.7035334302977961)]

[(0, 0.24696754833255918), (1, 0.7530324516674408)]

[(0, 0.3391022982510855), (1, 0.6608977017489145)]

[(0, 0.5928334226714413), (1, 0.40716657732855865)]

[(0, 0.24102655276664547), (1, 0.7589734472333545)]

[(0, 0.2841667707331107), (1, 0.7158332292668892)]

[(0, 0.34667048063848893), (1, 0.6533295193615112)]

[(0, 0.7336864063998245), (1, 0.2663135936001754)]

[(0, 0.5700078334633175), (1, 0.4299921665366824)]

Topic 0

[('minors', 0.041682411039787835),

('graph', 0.041520911134093876),

('system', 0.038329206254127626),

('survey', 0.03753585153191179),

('eps', 0.0351287056411886),

('human', 0.033430984047230196),

('trees', 0.031461369346122106),

('testing', 0.030907375780524896),

('well', 0.030744358008127703),

('engineering', 0.030595539801796162)]

Topic 1

[('system', 0.040118726033734085),

('survey', 0.03734100599657068),

('user', 0.03678637729732701),

('time', 0.03483279508494677),

('computer', 0.033932015303668),

('interface', 0.033793948617101006),

('response', 0.03378231469734522),

('trees', 0.033445559925836345),

('graph', 0.033062305667871876),

('paths', 0.03165595482730171)]

Similarity:

[array([1. , 0.996622 , 0.9971814 , 0.8418874 , 0.9958073 ,

0.99977267, 0.9960444 , 0.6795992 , 0.865116 ], dtype=float32),

array([0.996622 , 1. , 0.9876513 , 0.79472446, 0.99995595,

0.9981465 , 0.98538244, 0.617058 , 0.821002 ], dtype=float32),

array([0.9971814 , 0.9876513 , 1. , 0.88000363, 0.9861372 ,

0.995355 , 0.99990374, 0.7327231 , 0.90030956], dtype=float32),

array([0.8418874 , 0.79472446, 0.88000363, 1. , 0.78899217,

0.8301899 , 0.88650906, 0.96802676, 0.99900514], dtype=float32),

array([0.9958073 , 0.99995595, 0.9861372 , 0.78899217, 1. ,

0.9975313 , 0.98374 , 0.6096444 , 0.8156069 ], dtype=float32),

array([0.99977267, 0.9981465 , 0.995355 , 0.8301899 , 0.9975313 ,

1. , 0.9939234 , 0.6638038 , 0.85422516], dtype=float32),

array([0.9960444 , 0.98538244, 0.99990374, 0.88650906, 0.98374 ,

0.9939234 , 1. , 0.74209493, 0.90626204], dtype=float32),

array([0.6795992 , 0.617058 , 0.7327231 , 0.96802676, 0.6096444 ,

0.6638038 , 0.74209493, 0.99999994, 0.95587707], dtype=float32),

array([0.865116 , 0.821002 , 0.90030956, 0.99900514, 0.8156069 ,

0.85422516, 0.90626204, 0.95587707, 1. ], dtype=float32)]

(0, '0.042*"minors" + 0.042*"graph" + 0.038*"system" + 0.038*"survey" + 0.035*"eps" + 0.033*"human" + 0.031*"trees" + 0.031*"testing" + 0.031*"well" + 0.031*"engineering"')

(1, '0.040*"system" + 0.037*"survey" + 0.037*"user" + 0.035*"time" + 0.034*"computer" + 0.034*"interface" + 0.034*"response" + 0.033*"trees" + 0.033*"graph" + 0.032*"paths"')

USE WITH CARE--

HDA Model:

[[(0, 0.5565702203077617),

(1, 0.054574104632418734),

(2, 0.2729517480403645),

(3, 0.029618745787742838),

(4, 0.0222580699344652),

(5, 0.01655557651607404),

(6, 0.012229490830839239)],

[(0, 0.5205840667404564),

(1, 0.3231107910741006),

(2, 0.03961346309936006),

(3, 0.029821838447607565),

(4, 0.02240797412838224),

(5, 0.016668006423738994),

(6, 0.012312527505113606)],

[(0, 0.517790262705016),

(1, 0.06191946439382389),

(2, 0.28805465028468186),

(3, 0.03377804590557444),

(4, 0.025396487881103134),

(5, 0.01889150110691897),

(6, 0.013955025456098604),

(7, 0.010465705629518941)],

[(0, 0.7643410301426959),

(1, 0.061048475498996585),

(2, 0.04417331958826799),

(3, 0.03332724492765827),

(4, 0.025048725716184123),

(5, 0.018632957487399803),

(6, 0.013764043119053765),

(7, 0.010322476602212013)],

[(0, 0.0757181237596145),

(1, 0.7679391858106653),

(2, 0.039586714359868345),

(3, 0.029828166513061593),

(4, 0.02242222612483417),

(5, 0.016679291901008995),

(6, 0.012320882327277141)],

[(0, 0.08089166189934743),

(1, 0.7435023552504705),

(2, 0.044403927330132396),

(3, 0.033510143050357315),

(4, 0.025198860042726232),

(5, 0.01874461730245409),

(6, 0.013846529244831806),

(7, 0.010384337848137939)],

[(0, 0.7398447964237507),

(1, 0.06698283248044715),

(2, 0.04899313390216687),

(3, 0.036862205074928295),

(4, 0.02768180787661879),

(5, 0.02059139176174965),

(6, 0.01521071547172867),

(7, 0.011407422425566253)],

[(0, 0.7962703635234022),

(1, 0.05398861469394166),

(2, 0.03795941400773601),

(3, 0.028556923952855452),

(4, 0.021467485379882995),

(5, 0.015968638663872797),

(6, 0.011795927264606435)],

[(0, 0.5331648916261047),

(1, 0.2608024607901832),

(2, 0.05217821699693843),

(3, 0.03934504740893295),

(4, 0.02953790052514554),

(5, 0.02197118958402161),

(6, 0.0162299684418014),

(7, 0.012171820906447469)]]

HDA Topics:

[(0, '0.088*management + 0.087*abc + 0.085*ordering + 0.068*system + 0.053*human'), (1, '0.112*random + 0.105*error + 0.072*eps + 0.062*measurement + 0.058*abc')]

注:LDA_test.txt数据:

Human machine interface for lab abc computer applications

A survey of user opinion of computer system response time

The EPS user interface management system

System and human system engineering testing of EPS

Relation of user perceived response time to error measurement

The generation of random binary unordered trees

The intersection graph of paths in trees

Graph minors IV Widths of trees and well quasi ordering

Graph minors A survey

参考

- https://blog.csdn.net/sinat_34022298/article/details/75943272

- https://blog.csdn.net/weixin_40662229/article/details/80802325

- https://blog.csdn.net/Love_wanling/article/details/72872180

通俗的讲解了LDA主题模型,以及基于先验信息的Beta-Binomial共轭概念