ResNet Part 3: Parameter Tuning and Understanding How the Network Is Built

Continued from the previous post: https://blog.csdn.net/SPESEG/article/details/102963410

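With repetition set to [2,2], the saved .h5 model was 8 MB. Increasing it to [2,2,2] did not help. Results stayed essentially the same, and validation accuracy even dropped by about one percentage point. To be honest, even [2,2] is already shallower than the commonly used ResNet-18 (which uses [2,2,2,2]), the most basic variant and the one most often used for images. With repetition set to [2,1], the network looks like this: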

```
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            (None, 40, 32, 1)    0
__________________________________________________________________________________________________
conv2d_1 (Conv2D)               (None, 20, 16, 64)   3200        input_1[0][0]
__________________________________________________________________________________________________
batch_normalization_1 (BatchNor (None, 20, 16, 64)   256         conv2d_1[0][0]
__________________________________________________________________________________________________
activation_1 (Activation)       (None, 20, 16, 64)   0           batch_normalization_1[0][0]
__________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)  (None, 10, 8, 64)    0           activation_1[0][0]
__________________________________________________________________________________________________
conv2d_2 (Conv2D)               (None, 10, 8, 64)    36928       max_pooling2d_1[0][0]
__________________________________________________________________________________________________
batch_normalization_2 (BatchNor (None, 10, 8, 64)    256         conv2d_2[0][0]
__________________________________________________________________________________________________
activation_2 (Activation)       (None, 10, 8, 64)    0           batch_normalization_2[0][0]
__________________________________________________________________________________________________
conv2d_3 (Conv2D)               (None, 10, 8, 64)    36928       activation_2[0][0]
__________________________________________________________________________________________________
add_1 (Add)                     (None, 10, 8, 64)    0           max_pooling2d_1[0][0]
                                                                 conv2d_3[0][0]
__________________________________________________________________________________________________
batch_normalization_3 (BatchNor (None, 10, 8, 64)    256         add_1[0][0]
__________________________________________________________________________________________________
activation_3 (Activation)       (None, 10, 8, 64)    0           batch_normalization_3[0][0]
__________________________________________________________________________________________________
conv2d_4 (Conv2D)               (None, 10, 8, 64)    36928       activation_3[0][0]
__________________________________________________________________________________________________
batch_normalization_4 (BatchNor (None, 10, 8, 64)    256         conv2d_4[0][0]
__________________________________________________________________________________________________
activation_4 (Activation)       (None, 10, 8, 64)    0           batch_normalization_4[0][0]
__________________________________________________________________________________________________
conv2d_5 (Conv2D)               (None, 10, 8, 64)    36928       activation_4[0][0]
__________________________________________________________________________________________________
add_2 (Add)                     (None, 10, 8, 64)    0           add_1[0][0]
                                                                 conv2d_5[0][0]
__________________________________________________________________________________________________
batch_normalization_5 (BatchNor (None, 10, 8, 64)    256         add_2[0][0]
__________________________________________________________________________________________________
activation_5 (Activation)       (None, 10, 8, 64)    0           batch_normalization_5[0][0]
__________________________________________________________________________________________________
conv2d_6 (Conv2D)               (None, 5, 4, 128)    73856       activation_5[0][0]
__________________________________________________________________________________________________
batch_normalization_6 (BatchNor (None, 5, 4, 128)    512         conv2d_6[0][0]
__________________________________________________________________________________________________
activation_6 (Activation)       (None, 5, 4, 128)    0           batch_normalization_6[0][0]
__________________________________________________________________________________________________
conv2d_8 (Conv2D)               (None, 5, 4, 128)    8320        add_2[0][0]
__________________________________________________________________________________________________
conv2d_7 (Conv2D)               (None, 5, 4, 128)    147584      activation_6[0][0]
__________________________________________________________________________________________________
add_3 (Add)                     (None, 5, 4, 128)    0           conv2d_8[0][0]
                                                                 conv2d_7[0][0]
__________________________________________________________________________________________________
batch_normalization_7 (BatchNor (None, 5, 4, 128)    512         add_3[0][0]
__________________________________________________________________________________________________
activation_7 (Activation)       (None, 5, 4, 128)    0           batch_normalization_7[0][0]
__________________________________________________________________________________________________
average_pooling2d_1 (AveragePoo (None, 1, 1, 128)    0           activation_7[0][0]
__________________________________________________________________________________________________
flatten_1 (Flatten)             (None, 128)          0           average_pooling2d_1[0][0]
__________________________________________________________________________________________________
dense_1 (Dense)                 (None, 2)            258         flatten_1[0][0]
==================================================================================================
Total params: 383,234
Trainable params: 382,082
Non-trainable params: 1,152
```

Clearly, the sum of the numbers in repetition equals the number of Add layers. Here 2 + 1 = 3 (add_1 to add_3).
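As a cross-check on the summary and the Add-count rule, here is a minimal pure-Python sketch that recounts the parameters from the layer pattern visible in the summary above. It assumes this pattern holds for any repetition list:

- A 7×7, 64-filter stem conv with BN.
- One basic block per repetition entry: BN-ReLU, 3×3 conv, BN-ReLU, 3×3 conv. The leading BN is skipped in the very first block.
- Filters double from one stage to the next.
- A 1×1 projection shortcut is used whenever the channel count changes.
- A final BN sits before pooling and the 2-way Dense layer.

The function name resnet_param_count is hypothetical and does not come from the original code.

```python
def resnet_param_count(repetition, in_channels=1, num_classes=2, base_filters=64):
    """Recount (total, trainable, non_trainable, add_layers) for the assumed layer pattern."""
    counts = {"trainable": 0, "non_trainable": 0, "adds": 0}

    def conv(k, c_in, c_out):
        # k x k kernel weights plus one bias per output channel
        counts["trainable"] += k * k * c_in * c_out + c_out

    def bn(c):
        counts["trainable"] += 2 * c      # gamma and beta
        counts["non_trainable"] += 2 * c  # moving mean and moving variance

    # Stem: 7x7 conv (stride 2) -> BN -> ReLU -> max pooling (no parameters)
    conv(7, in_channels, base_filters)
    bn(base_filters)

    channels, filters = base_filters, base_filters
    for stage, reps in enumerate(repetition):
        for block in range(reps):
            if not (stage == 0 and block == 0):
                bn(channels)                # BN -> ReLU on the block input
            conv(3, channels, filters)      # first 3x3 conv (stride 2 in later stages)
            bn(filters)
            conv(3, filters, filters)       # second 3x3 conv
            if channels != filters:
                conv(1, channels, filters)  # 1x1 projection shortcut
            counts["adds"] += 1             # one Add layer per residual block
            channels = filters
        filters *= 2                        # the next stage doubles the filter count

    bn(channels)                                                 # final BN -> ReLU
    counts["trainable"] += channels * num_classes + num_classes  # Dense head
    total = counts["trainable"] + counts["non_trainable"]
    return total, counts["trainable"], counts["non_trainable"], counts["adds"]


print(resnet_param_count([2, 1]))  # -> (383234, 382082, 1152, 3)
```

Under the same assumptions, the function also reproduces the totals of the other configurations in this post, such as [1, 2] and [1]. The Add count it returns is always sum(repetition).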

The model is 4.5 MB and performs the same as [2,2]. Next, let's try [1,2]:

```
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_4 (InputLayer)            (None, 40, 32, 1)    0
__________________________________________________________________________________________________
conv2d_31 (Conv2D)              (None, 20, 16, 64)   3200        input_4[0][0]
__________________________________________________________________________________________________
batch_normalization_28 (BatchNo (None, 20, 16, 64)   256         conv2d_31[0][0]
__________________________________________________________________________________________________
activation_28 (Activation)      (None, 20, 16, 64)   0           batch_normalization_28[0][0]
__________________________________________________________________________________________________
max_pooling2d_4 (MaxPooling2D)  (None, 10, 8, 64)    0           activation_28[0][0]
__________________________________________________________________________________________________
conv2d_32 (Conv2D)              (None, 10, 8, 64)    36928       max_pooling2d_4[0][0]
__________________________________________________________________________________________________
batch_normalization_29 (BatchNo (None, 10, 8, 64)    256         conv2d_32[0][0]
__________________________________________________________________________________________________
activation_29 (Activation)      (None, 10, 8, 64)    0           batch_normalization_29[0][0]
__________________________________________________________________________________________________
conv2d_33 (Conv2D)              (None, 10, 8, 64)    36928       activation_29[0][0]
__________________________________________________________________________________________________
add_13 (Add)                    (None, 10, 8, 64)    0           max_pooling2d_4[0][0]
                                                                 conv2d_33[0][0]
__________________________________________________________________________________________________
batch_normalization_30 (BatchNo (None, 10, 8, 64)    256         add_13[0][0]
__________________________________________________________________________________________________
activation_30 (Activation)      (None, 10, 8, 64)    0           batch_normalization_30[0][0]
__________________________________________________________________________________________________
conv2d_34 (Conv2D)              (None, 5, 4, 128)    73856       activation_30[0][0]
__________________________________________________________________________________________________
batch_normalization_31 (BatchNo (None, 5, 4, 128)    512         conv2d_34[0][0]
__________________________________________________________________________________________________
activation_31 (Activation)      (None, 5, 4, 128)    0           batch_normalization_31[0][0]
__________________________________________________________________________________________________
conv2d_36 (Conv2D)              (None, 5, 4, 128)    8320        add_13[0][0]
__________________________________________________________________________________________________
conv2d_35 (Conv2D)              (None, 5, 4, 128)    147584      activation_31[0][0]
__________________________________________________________________________________________________
add_14 (Add)                    (None, 5, 4, 128)    0           conv2d_36[0][0]
                                                                 conv2d_35[0][0]
__________________________________________________________________________________________________
batch_normalization_32 (BatchNo (None, 5, 4, 128)    512         add_14[0][0]
__________________________________________________________________________________________________
activation_32 (Activation)      (None, 5, 4, 128)    0           batch_normalization_32[0][0]
__________________________________________________________________________________________________
conv2d_37 (Conv2D)              (None, 5, 4, 128)    147584      activation_32[0][0]
__________________________________________________________________________________________________
batch_normalization_33 (BatchNo (None, 5, 4, 128)    512         conv2d_37[0][0]
__________________________________________________________________________________________________
activation_33 (Activation)      (None, 5, 4, 128)    0           batch_normalization_33[0][0]
__________________________________________________________________________________________________
conv2d_38 (Conv2D)              (None, 5, 4, 128)    147584      activation_33[0][0]
__________________________________________________________________________________________________
add_15 (Add)                    (None, 5, 4, 128)    0           add_14[0][0]
                                                                 conv2d_38[0][0]
__________________________________________________________________________________________________
batch_normalization_34 (BatchNo (None, 5, 4, 128)    512         add_15[0][0]
__________________________________________________________________________________________________
activation_34 (Activation)      (None, 5, 4, 128)    0           batch_normalization_34[0][0]
__________________________________________________________________________________________________
average_pooling2d_4 (AveragePoo (None, 1, 1, 128)    0           activation_34[0][0]
__________________________________________________________________________________________________
flatten_4 (Flatten)             (None, 128)          0           average_pooling2d_4[0][0]
__________________________________________________________________________________________________
dense_4 (Dense)                 (None, 2)            258         flatten_4[0][0]
==================================================================================================
Total params: 605,058
Trainable params: 603,650
Non-trainable params: 1,408
```

This model has more parameters than the previous one ([2,1]). The final accuracy is the same, but it actually converged more slowly. Both have three Add layers, so how the layers are distributed across the stages evidently matters.
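With the same number of Add layers, [1,2] still carries about 220K more parameters than [2,1]. The two differ only in where the extra identity block sits: in the 64-filter stage or in the 128-filter stage. A 3×3 conv's weight count grows with the square of the channel count, so the placement matters. Below is a minimal arithmetic sketch. It assumes that an identity block is BN, 3×3 conv, BN, 3×3 conv at constant width, as in the summaries. The helper name identity_block_params is hypothetical.

```python
def identity_block_params(c):
    """Parameters of one identity basic block at width c: 2 x (BN + 3x3 conv)."""
    conv3x3 = 3 * 3 * c * c + c  # kernel weights + bias
    bn = 4 * c                   # gamma, beta, moving mean, moving variance
    return 2 * (conv3x3 + bn)


extra = identity_block_params(128) - identity_block_params(64)
print(identity_block_params(64), identity_block_params(128), extra)  # -> 74368 296192 221824
print(605058 - 383234)  # totals of [1,2] and [2,1] from the summaries -> 221824
```

A block at 128 channels costs about 4× a block at 64 channels, and that accounts for the entire gap between the two totals. The extra parameters in the deeper stage did not bring higher accuracy here.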

Next, let's see how [1,1] does. It has only two Add layers, and the results are still not bad:

```
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_5 (InputLayer)            (None, 40, 32, 1)    0
__________________________________________________________________________________________________
conv2d_39 (Conv2D)              (None, 20, 16, 64)   3200        input_5[0][0]
__________________________________________________________________________________________________
batch_normalization_35 (BatchNo (None, 20, 16, 64)   256         conv2d_39[0][0]
__________________________________________________________________________________________________
activation_35 (Activation)      (None, 20, 16, 64)   0           batch_normalization_35[0][0]
__________________________________________________________________________________________________
max_pooling2d_5 (MaxPooling2D)  (None, 10, 8, 64)    0           activation_35[0][0]
__________________________________________________________________________________________________
conv2d_40 (Conv2D)              (None, 10, 8, 64)    36928       max_pooling2d_5[0][0]
__________________________________________________________________________________________________
batch_normalization_36 (BatchNo (None, 10, 8, 64)    256         conv2d_40[0][0]
__________________________________________________________________________________________________
activation_36 (Activation)      (None, 10, 8, 64)    0           batch_normalization_36[0][0]
__________________________________________________________________________________________________
conv2d_41 (Conv2D)              (None, 10, 8, 64)    36928       activation_36[0][0]
__________________________________________________________________________________________________
add_16 (Add)                    (None, 10, 8, 64)    0           max_pooling2d_5[0][0]
                                                                 conv2d_41[0][0]
__________________________________________________________________________________________________
batch_normalization_37 (BatchNo (None, 10, 8, 64)    256         add_16[0][0]
__________________________________________________________________________________________________
activation_37 (Activation)      (None, 10, 8, 64)    0           batch_normalization_37[0][0]
__________________________________________________________________________________________________
conv2d_42 (Conv2D)              (None, 5, 4, 128)    73856       activation_37[0][0]
__________________________________________________________________________________________________
batch_normalization_38 (BatchNo (None, 5, 4, 128)    512         conv2d_42[0][0]
__________________________________________________________________________________________________
activation_38 (Activation)      (None, 5, 4, 128)    0           batch_normalization_38[0][0]
__________________________________________________________________________________________________
conv2d_44 (Conv2D)              (None, 5, 4, 128)    8320        add_16[0][0]
__________________________________________________________________________________________________
conv2d_43 (Conv2D)              (None, 5, 4, 128)    147584      activation_38[0][0]
__________________________________________________________________________________________________
add_17 (Add)                    (None, 5, 4, 128)    0           conv2d_44[0][0]
                                                                 conv2d_43[0][0]
__________________________________________________________________________________________________
batch_normalization_39 (BatchNo (None, 5, 4, 128)    512         add_17[0][0]
__________________________________________________________________________________________________
activation_39 (Activation)      (None, 5, 4, 128)    0           batch_normalization_39[0][0]
__________________________________________________________________________________________________
average_pooling2d_5 (AveragePoo (None, 1, 1, 128)    0           activation_39[0][0]
__________________________________________________________________________________________________
flatten_5 (Flatten)             (None, 128)          0           average_pooling2d_5[0][0]
__________________________________________________________________________________________________
dense_5 (Dense)                 (None, 2)            258         flatten_5[0][0]
==================================================================================================
Total params: 308,866
Trainable params: 307,970
Non-trainable params: 896
```

This is still training on my machine. Tomorrow I'll try [2] and [1] and see how they do. Signing off for today.
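Dropping the 128-filter stage, as [2] and [1] do, also changes the feature map that reaches the final AveragePooling2D layer. In the summaries that layer pools over the whole map down to 1×1: 5×4 after two stages, 10×8 after one. Below is a small sketch that traces the spatial size. It assumes 'same' padding, so each stride-2 layer produces ceil(n / 2). It also assumes stride 2 in the stem conv, in the max pooling, and in the first conv of every stage after the first. The function name final_feature_map is hypothetical.

```python
import math


def final_feature_map(height, width, num_stages):
    """Trace (height, width) through the stem and the residual stages."""
    def down(n):
        return math.ceil(n / 2)  # 'same' padding with stride 2

    h, w = down(down(height)), down(down(width))  # stem conv, then max pooling
    for _ in range(max(num_stages - 1, 0)):       # each later stage halves again
        h, w = down(h), down(w)
    return h, w


for reps in ([1], [2, 1], [2, 2, 2]):
    print(reps, final_feature_map(40, 32, len(reps)))
```

The traced shapes for [1] and [2,1] match the summaries. The [2,2,2] result (3×2) is only a prediction under the same assumptions, because that summary is not shown in this post.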

The network structure and results for [2] are as follows:

```
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_6 (InputLayer)            (None, 40, 32, 1)    0
__________________________________________________________________________________________________
conv2d_45 (Conv2D)              (None, 20, 16, 64)   3200        input_6[0][0]
__________________________________________________________________________________________________
batch_normalization_40 (BatchNo (None, 20, 16, 64)   256         conv2d_45[0][0]
__________________________________________________________________________________________________
activation_40 (Activation)      (None, 20, 16, 64)   0           batch_normalization_40[0][0]
__________________________________________________________________________________________________
max_pooling2d_6 (MaxPooling2D)  (None, 10, 8, 64)    0           activation_40[0][0]
__________________________________________________________________________________________________
conv2d_46 (Conv2D)              (None, 10, 8, 64)    36928       max_pooling2d_6[0][0]
__________________________________________________________________________________________________
batch_normalization_41 (BatchNo (None, 10, 8, 64)    256         conv2d_46[0][0]
__________________________________________________________________________________________________
activation_41 (Activation)      (None, 10, 8, 64)    0           batch_normalization_41[0][0]
__________________________________________________________________________________________________
conv2d_47 (Conv2D)              (None, 10, 8, 64)    36928       activation_41[0][0]
__________________________________________________________________________________________________
add_18 (Add)                    (None, 10, 8, 64)    0           max_pooling2d_6[0][0]
                                                                 conv2d_47[0][0]
__________________________________________________________________________________________________
batch_normalization_42 (BatchNo (None, 10, 8, 64)    256         add_18[0][0]
__________________________________________________________________________________________________
activation_42 (Activation)      (None, 10, 8, 64)    0           batch_normalization_42[0][0]
__________________________________________________________________________________________________
conv2d_48 (Conv2D)              (None, 10, 8, 64)    36928       activation_42[0][0]
__________________________________________________________________________________________________
batch_normalization_43 (BatchNo (None, 10, 8, 64)    256         conv2d_48[0][0]
__________________________________________________________________________________________________
activation_43 (Activation)      (None, 10, 8, 64)    0           batch_normalization_43[0][0]
__________________________________________________________________________________________________
conv2d_49 (Conv2D)              (None, 10, 8, 64)    36928       activation_43[0][0]
__________________________________________________________________________________________________
add_19 (Add)                    (None, 10, 8, 64)    0           add_18[0][0]
                                                                 conv2d_49[0][0]
__________________________________________________________________________________________________
batch_normalization_44 (BatchNo (None, 10, 8, 64)    256         add_19[0][0]
__________________________________________________________________________________________________
activation_44 (Activation)      (None, 10, 8, 64)    0           batch_normalization_44[0][0]
__________________________________________________________________________________________________
average_pooling2d_6 (AveragePoo (None, 1, 1, 64)     0           activation_44[0][0]
__________________________________________________________________________________________________
flatten_6 (Flatten)             (None, 64)           0           average_pooling2d_6[0][0]
__________________________________________________________________________________________________
dense_6 (Dense)                 (None, 2)            130         flatten_6[0][0]
==================================================================================================
Total params: 152,322
Trainable params: 151,682
Non-trainable params: 640
```

The results are the same, and the model is under 2 MB. Here are the results for [1]:

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_7 (InputLayer) (None, 40, 32, 1) 0

__________________________________________________________________________________________________

conv2d_50 (Conv2D) (None, 20, 16, 64) 3200 input_7[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 20, 16, 64) 256 conv2d_50[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 20, 16, 64) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

max_pooling2d_7 (MaxPooling2D) (None, 10, 8, 64) 0 activation_45[0][0]

__________________________________________________________________________________________________

conv2d_51 (Conv2D) (None, 10, 8, 64) 36928 max_pooling2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 10, 8, 64) 256 conv2d_51[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 10, 8, 64) 0 batch_normalization_46[0][0]

__________________________________________________________________________________________________

conv2d_52 (Conv2D) (None, 10, 8, 64) 36928 activation_46[0][0]

__________________________________________________________________________________________________

add_20 (Add) (None, 10, 8, 64) 0 max_pooling2d_7[0][0]

conv2d_52[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 10, 8, 64) 256 add_20[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 10, 8, 64) 0 batch_normalization_47[0][0]

__________________________________________________________________________________________________

average_pooling2d_7 (AveragePoo (None, 1, 1, 64) 0 activation_47[0][0]

__________________________________________________________________________________________________

flatten_7 (Flatten) (None, 64) 0 average_pooling2d_7[0][0]

__________________________________________________________________________________________________

dense_7 (Dense) (None, 2) 130 flatten_7[0][0]

==================================================================================================

Total params: 77,954

Trainable params: 77,570

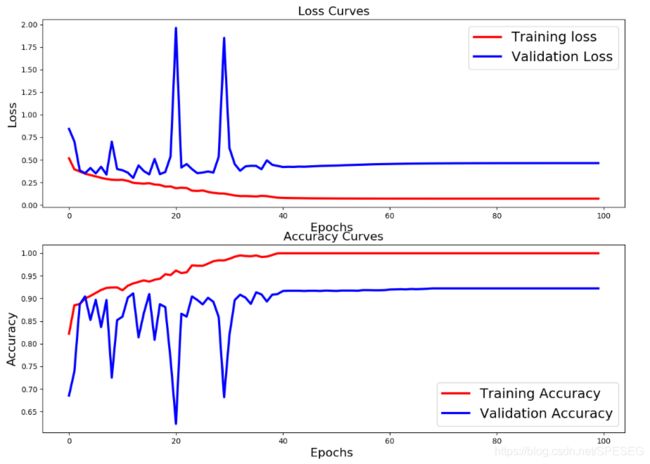

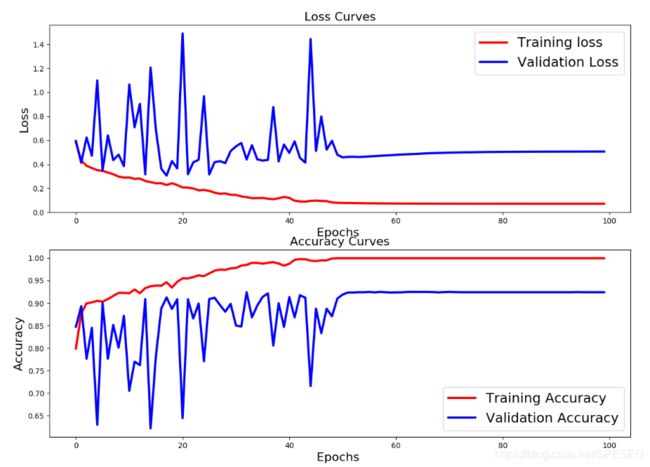

Non-trainable params: 384结果如图,最后验证集92.6%,模型不到1M,这是各方面最好的模型了。
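The reported file sizes track the parameter counts fairly closely if the .h5 file also stores the optimizer state. The post does not say which optimizer was used. The sketch below assumes Adam, saved together with the model (Keras model.save includes the optimizer by default). Under that assumption the file holds float32 weights plus two float32 slot values (first and second moments) per trainable parameter. HDF5 metadata is ignored. The totals come from the summaries above, and the helper name estimated_h5_bytes is hypothetical.

```python
def estimated_h5_bytes(total_params, trainable_params, slots_per_trainable=2):
    """Rough .h5 size: float32 weights plus optimizer slots (assumed Adam: 2 per trainable weight)."""
    bytes_per_float = 4
    return bytes_per_float * (total_params + slots_per_trainable * trainable_params)


models = {
    "[2,1]": (383234, 382082),
    "[2]": (152322, 151682),
    "[1]": (77954, 77570),
}
for name, (total, trainable) in models.items():
    print(name, round(estimated_h5_bytes(total, trainable) / 1e6, 2), "MB")
```

These estimates (about 4.59, 1.82 and 0.93 MB) land close to the 4.5 MB, under-2 MB and under-1 MB sizes reported in this post. If the file is only needed for inference, saving with include_optimizer=False should cut it to roughly a third.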

Taking it to the extreme, with no Add layers at all and just a very simple stack of layers:

```
Layer (type)                 Output Shape              Param #
=================================================================
input_8 (InputLayer)         (None, 40, 32, 1)         0
_________________________________________________________________
conv2d_53 (Conv2D)           (None, 20, 16, 64)        3200
_________________________________________________________________
batch_normalization_48 (Batc (None, 20, 16, 64)        256
_________________________________________________________________
activation_48 (Activation)   (None, 20, 16, 64)        0
_________________________________________________________________
max_pooling2d_8 (MaxPooling2 (None, 10, 8, 64)         0
_________________________________________________________________
batch_normalization_49 (Batc (None, 10, 8, 64)         256
_________________________________________________________________
activation_49 (Activation)   (None, 10, 8, 64)         0
_________________________________________________________________
average_pooling2d_8 (Average (None, 1, 1, 64)          0
_________________________________________________________________
flatten_8 (Flatten)          (None, 64)                0
_________________________________________________________________
dense_8 (Dense)              (None, 2)                 130
=================================================================
Total params: 3,842
Trainable params: 3,586
Non-trainable params: 256
```

It works reasonably well but hits a ceiling, so some similar layers should probably be added back. The model is only 88 KB. Going from 8 MB to 88 KB is roughly a 90× reduction.
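Below is a quick breakdown of where the minimal model's 3,842 parameters go, using the counts from its summary. It also includes the size ratio quoted above, taking 1 MB = 1000 KB. With 1024, the ratio comes out at about 93×.

```python
# Parameter counts copied from the summary of the minimal (no-Add) model
layers = {
    "conv2d_53 (7x7, 1->64)": 3200,
    "batch_normalization_48": 256,
    "batch_normalization_49": 256,
    "dense_8 (64->2)": 130,
}
total = sum(layers.values())
for name, count in layers.items():
    print(f"{name:26s} {count:5d} {count / total:6.1%}")

print("total", total)                                   # -> total 3842
print("size ratio 8 MB / 88 KB ~", round(8000 / 88))    # -> 91
```

The 7×7 stem convolution holds about 83% of the weights. Adding back even one similar 3×3, 64-filter conv with BN would cost 36,928 + 256 parameters, nearly ten times the current model. So any extra layers should be chosen with the size budget in mind.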

If you're going to do it, take it all the way.

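If you have related questions, you are welcome to join the QQ group for discussion (there is no WeChat group).

QQ group: 868373192

Speech Deep Learning Group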