- The relationship and differences between machine learning and deep learning

ℒℴѵℯ心·动ꦿ໊ོ꫞

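Artificial intelligence, learning, deep learning, python

1. Overview of machine learning. Definition: machine learning (ML) is a data-driven approach that uses statistics and computational algorithms to train models, so that computers can learn from data and make predictions or decisions automatically. By analyzing large numbers of data samples, it identifies patterns and regularities and uses them to judge new data. Its core is the training process, through which the model is continually optimized to improve its predictive accuracy. Main types: 1. Supervised learning: in supervised learning, the training dataset contains input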

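- Saving command output from cmd to a txt file

落难Coder

Windows, cmd, window

When training deep learning models locally, we often run our code from the command line, and we naturally want to keep the training results. Copying command-line output into a txt file by hand is tedious, but the Windows command line provides an operation for exactly this. The basic form is: command > output file name or full save path. As a test, I open cmd and ping Baidu: ping www.baidu.com > ./data.txt. Looking at data.txt in the same directory: If you then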
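The same "command > file" redirection can also be done from a Python launcher script. The following is a minimal sketch, not part of the original post: the helper name `run_and_save` and the stand-in command are assumptions chosen so the example runs anywhere without network access.

```python
import os
import subprocess
import sys
import tempfile


def run_and_save(command, output_path):
    """Run a command and write its standard output to output_path (like `command > file`)."""
    with open(output_path, "w", encoding="utf-8") as out:
        # Passing the open file as stdout plays the role of the `>` operator.
        result = subprocess.run(command, stdout=out)
    return result.returncode


# Hypothetical stand-in for a training script: a child Python process that prints one line.
output_file = os.path.join(tempfile.mkdtemp(), "data.txt")
exit_code = run_and_save([sys.executable, "-c", "print('epoch 1 loss 0.5')"], output_file)

with open(output_file, encoding="utf-8") as f:
    saved_text = f.read()
```

Replacing the stand-in list with the real command, for example `["ping", "www.baidu.com"]`, would capture that command's output instead.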

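- Three recommended AI tools that generate a graduation thesis in five minutes with one click! Free tutorial at the end!

小猪包333

Thesis writing, artificial intelligence, AI writing, deep learning, computer vision

In today's academic research and writing, AI paper generators have become an important tool for many researchers and students. These tools not only help users quickly generate high-quality paper content, they also handle content optimization, plagiarism checking, and typesetting. Here are three recommended AI paper generators: 千笔-AIPassPaper, 懒人论文, and AIPaperPass. 千笔-AIPassPaper: 千笔-AIPassPaper is an AI writing assistant based on deep learning and natural language processing, designed to help users quickly generate high-qual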

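- Architectural evolution and latest developments of large AI models

季风泯灭的季节

Large AI model application technology II, artificial intelligence, architecture

With the development of deep learning, large AI models (Large Language Models, LLMs) have made revolutionary progress in natural language processing, computer vision, and other fields. This article examines the architectural evolution of large AI models in detail, from the introduction of the Transformer through GPT, BERT, T5, and other models, and discusses their technical details and central role in modern AI. 1. Introduction to the foundation model: core principles of the Transformer. Background of the Transformer architecture: In Transfo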

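- [Practical application] Deep learning: model performance evaluation metrics

YuanDaima2048

Using deep learning tools, deep learning, artificial intelligence, loss function, performance evaluation, pytorch, python, machine learning

Article index: YuanDaiMa2048 blog article index. Deep learning: model performance evaluation metrics. Contents: classification tasks, regression tasks, ranking tasks, clustering tasks, generation tasks, other. Introduction: in machine learning and deep learning, evaluating model performance is a critical task. Different learning tasks need different metrics to measure how effective a model is. Below is a detailed explanation and summary of common tasks and their corresponding metrics. Classification tasks: a classification task requires the model to assign input data to predefined classes or labels. Commonly used metrics for classification tasks: Accuracy (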
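As a rough illustration of the classification metrics this teaser introduces, the sketch below computes accuracy by hand. The excerpt is cut off after naming accuracy, so precision and recall are included here only as an assumption about which metrics usually accompany it; the function name and sample labels are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
accuracy, precision, recall = classification_metrics(y_true, y_pred)
```

With these sample labels, four of six predictions are correct, and there are two true positives, one false positive, and one false negative.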

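- [Practical application] Deep learning: optimizers

YuanDaima2048

Using deep learning tools, pytorch, deep learning, artificial intelligence, machine learning, python, optimizers

Article index: YuanDaiMa2048 blog article index. Deep learning: optimizers. Contents: 1. Stochastic gradient descent (SGD); 2. Momentum; 3. Adaptive gradient (Adagrad); 4. Adaptive moment estimation (Adam); 5. RMSprop; summary; other. Introduction: in deep learning, an optimizer updates the model's parameters to minimize the loss function. There are many optimization methods; below are several mainstream optimizers with their characteristics, principles, and PyTorch implementations. 1. Stochastic gradient descent (SGD). Principle: stochastic gradient descent works by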
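The excerpt breaks off before stating the SGD principle, so the following is only a sketch of the standard update rule, `parameter = parameter - learning_rate * gradient`, written in plain Python rather than PyTorch. For simplicity it uses the exact gradient of a toy objective instead of a mini-batch estimate; the objective `(w - 3)^2` and all names are assumptions for illustration.

```python
def sgd_step(params, grads, lr):
    """Apply one gradient-descent update to every parameter."""
    return [p - lr * g for p, g in zip(params, grads)]


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3); the minimum is at w = 3.
w = [0.0]
for _ in range(100):
    grad = [2.0 * (w[0] - 3.0)]
    w = sgd_step(w, grad, lr=0.1)

final_w = w[0]
```

Each step shrinks the distance to the minimum by a factor of 0.8, so after 100 steps `final_w` is extremely close to 3.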

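- Generative cartography

Bwywb_3

Deep learning, machine learning, deep learning, generative adversarial networks

Generative cartography is a technique that uses generative algorithms and artificial intelligence to create maps automatically. It combines traditional geographic information system (GIS) technology with modern generative models (such as deep learning and GANs) to generate maps that meet requirements directly from input data. The approach has potential applications in urban planning, virtual environment design, game development, and other fields. Main features: Automated generation: through algorithms and models, the system generates maps automatically from input geographic or spatial data, without a person having to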

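- Andrew Ng deep learning notes (30): regularization explained

极客Array

Regularization. Deep learning models may overfit, that is, show high variance. There are two remedies: one is regularization, the other is gathering more data. More data is a very reliable remedy, but you cannot always obtain enough training data, or acquiring more may be expensive, whereas regularization usually helps avoid overfitting or reduce the network's error. If you suspect your neural network is overfitting the data, that is, it has a high-variance problem, the first thing to try is probably regularization; the other way to address high variance is to gather more data, which is also very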

- 个人学习笔记7-6:动手学深度学习pytorch版-李沐

浪子L

深度学习深度学习笔记计算机视觉python人工智能神经网络pytorch

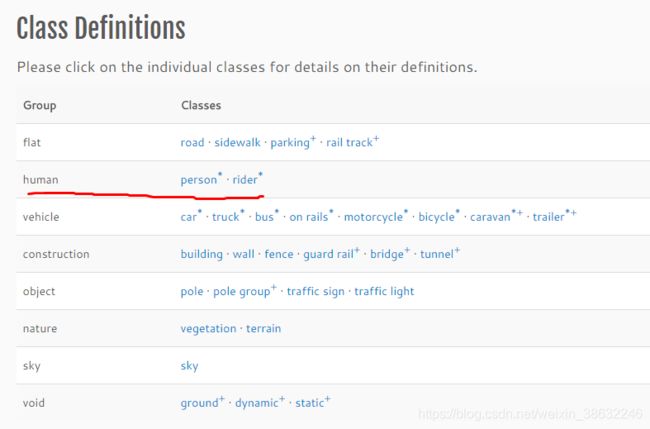

#人工智能##深度学习##语义分割##计算机视觉##神经网络#计算机视觉13.11全卷积网络全卷积网络(fullyconvolutionalnetwork,FCN)采用卷积神经网络实现了从图像像素到像素类别的变换。引入l转置卷积(transposedconvolution)实现的,输出的类别预测与输入图像在像素级别上具有一一对应关系:通道维的输出即该位置对应像素的类别预测。13.11.1构造模型下

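- Deep learning, click-through rate prediction: quick read of research papers, 2024-09-14

sp_fyf_2024

Deep learning, artificial intelligence

Deep learning, click-through rate prediction: quick read of research papers, 2024-09-14. 1. Deep Target Session Interest Network for Click-Through Rate Prediction. H Zhong, J Ma, X Duan, S Gu, J Yao - 2024 International Joint Conference on Neural Networks, 2024. Abstract: this paper proposes a new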

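- Loss functions and backpropagation

Star_.

PyTorch, pytorch, deep learning, python

Loss functions: definition and role. In deep learning, a loss function computes the error between the output values predicted by the model and the true values. 1. The smaller the loss, the better. 2. It measures the gap between the actual output and the target. 3. It provides the basis for updating the output (backpropagation). Common loss functions. Common regression losses include mean squared error (MSE) and mean absolute error (MAE); Huber loss is a loss that combines MSE and MAE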
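The three regression losses named above can be written out in a few lines. This is a plain-Python sketch rather than the PyTorch code the article presumably goes on to show; the `delta` threshold of 1.0 and the sample values are assumptions.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: the average of absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def huber(y_true, y_pred, delta=1.0):
    """Huber loss: quadratic (like MSE) for small errors, linear (like MAE) for large ones."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            total += 0.5 * err * err
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


# Hypothetical targets and predictions; the absolute errors are 0, 0.5, and 2.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.5, 5.0]
losses = (mse(y_true, y_pred), mae(y_true, y_pred), huber(y_true, y_pred))
```

The large error of 2 dominates MSE because it is squared, while Huber treats it linearly, which is why Huber is less sensitive to outliers.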

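- [Deep learning] An OOM problem during training that was very hard to track down

weixin_40293999

Deep learning, deep learning, artificial intelligence

Symptom: has anyone else run into this problem on Ubuntu? During training, ssh stops connecting; the machine still answers ping and has not crashed, but the monitor and mouse on the Ubuntu PC stop responding, and the only option is to reboot. Cause: an OOM of 95 GB! PyTorch exhausted memory, journald then hung, and once journald was killed by the watchdog the system went down. With memory exhaustion on this scale, even if the process is OOM-killed the machine usually stalls for a long time; that is indeed what happens. Can we configure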

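- Cloud services industry news brief, 20180128

Captain7

1. QingCloud: QingCloud has launched the deep learning platform Deep Learning on QingCloud, which includes mainstream deep learning frameworks and data science toolkits. Delivered by one-click deployment through QingCloud AppCenter, it lets algorithm engineers and data scientists quickly build a deep learning development environment and spend more effort on model and algorithm tuning. 2. Tencent Cloud: 1. Tencent Cloud officially released the Tencent Cloud Enterprise (TCE) matrix of dedicated cloud offerings, covering the enterprise edition, the big data edition, AI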

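- Machine learning vs. deep learning

nfgo

Machine learning

Machine learning (ML) and deep learning (DL) are two subfields of artificial intelligence (AI). They have much in common, but also differ significantly in technical implementation and range of application. The following distinguishes the two from several angles. 1. Conceptual level. Machine learning: a technique that lets computers learn and improve automatically from data through algorithms. It relies on manually designed features and mathematical models; common models include decision trees, support vector machines, and linear regression. Deep learning: a subfield of machine learning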

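- Big data graduation project: hadoop + spark + hive, knowledge graph, rental data analysis and visualization dashboard, rental recommendation system, 58同城 rental crawler, housing recommendation system, house price prediction system, computer science graduation project, machine learning, deep learning, artificial intelligence

2401_84572577

Programmer, big data, hadoop, artificial intelligence

After many years in development and teaching myself many programming languages, I know how much good learning resources matter when picking up a new language. Over the years I have collected a lot of solid Python material. I no longer need it myself, but for someone preparing to learn Python on their own it could be a treasure that saves a great deal of time and effort. Stop learning haphazardly online; I have also updated some of these resources recently, and any follower of mine can take this batch. Let me first explain how to use them; you can collect them at the end of the article. (1) Learning roadmaps for every Python direction (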

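- Deep learning 13: using the small language model SmolLM

皮皮冰燃

Deep learning, deep learning

Contents: Appendix; 1 SmolLM overview; 1.1 Introduction to SmolLM; 1.2 Downloading the model; 2 Running; 2.1 Running the model on CPU/GPU/multiple GPUs; 2.2 Using torch.bfloat16; 2.3 Quantized versions through bitsandbytes; 3 Application examples; 4 Problems and solutions; 4.1 attention_mask and pad_token_id errors; 4.2 max_new_tokens=20; 5 References. Appendix. 1 SmolLM overview. 1.1 Introduction to SmolLM: SmolLM is a family of state-of-the-art small language models, available in three siz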

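- Deep learning based crop disease detection

SEU-WYL

Deep learning, dnn, deep learning, artificial intelligence

Deep learning based crop disease detection uses techniques such as convolutional neural networks (CNN), generative adversarial networks (GAN), and Transformers to identify and classify crop diseases automatically, helping agricultural workers manage crops more efficiently and reduce losses. 1. Challenges of crop disease detection. Many kinds of disease: crop diseases are diverse, different diseases look very different on the same crop, and the same disease may show different symptoms at different growth stages. Environmental influence: weather, light, humidity, and other external factors affect how crops appear, which makes disease detec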

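- Deep learning based text-guided image editing

SEU-WYL

Deep learning, dnn, deep learning, artificial intelligence

Deep learning based text-guided image editing is a technique for editing or modifying images through natural-language instructions. It combines recent advances in image generation and natural language processing (NLP), letting users adjust and manipulate image content precisely with descriptive text. 1. Challenges of text-guided image editing. Alignment between text and image: accurately mapping the semantic information in the text to specific regions or elements of the image is a key challenge. It involves aligning and understanding multimodal data. Edi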

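- Deep learning: generative adversarial networks (GAN, Generative Adversarial Network)

Ambition_LAO

Deep learning, generative adversarial networks

A generative adversarial network (GAN) is a deep learning model proposed by Ian Goodfellow et al. in 2014. GANs are mainly used to generate data: two neural networks compete against each other to produce new data that is hard to tell from real data. The following describes GANs in detail, including the concept, purpose, key points, implementation process, code implementation, and applicable scenarios. 1. Concept: a GAN consists of two neural networks, a generator and a discriminator (Discrimina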

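- Deep learning: how to inspect the parameters in a pth file

奥利给少年

Deep learning, artificial intelligence

A .pth file is a PyTorch model weight file; it usually contains the parameters of a trained model. To inspect or use the file, follow these steps. 1. Make sure you have the model definition: you need the code for the model that was used when the .pth file was created, that is, the model's class definition and architecture. 2. Load the model weights: use PyTorch's load_state_dict method to load the weights. Here is how: import torch import torch.nn as nn # define the model structure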

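- ChatGPT empowers Python: how to install the Keras library in Python?

turensu

ChatGpt, python, chatgpt, keras, computer

How to install the Keras library in Python? Keras is a simple, easy-to-use neural network library written by François Chollet. It implements deep learning functionality in the Python programming language and makes it easier to build and experiment with different types of neural networks. If you are a Python developer, you will want to know how to install Keras in your Python project. In this article we show how to install and configure the Keras library. Step 1: install Python. To use the Keras library, you ne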

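- How to understand the deep learning training process

奋斗的草莓熊

Deep learning, artificial intelligence, python, scikit-learn, virtualenv, numpy, pandas

Contents: 1. What does training do? 2. When training from a pretrained model, what in the pretrained model actually changes? 1. What does training do? Taking yolov5 as an example, the goal of training is to feed a set of cat and dog images into the neural network and obtain an output model that can later be used directly to tell which is a cat and which is a dog. 2. When training from a pretrained model, what in the pretrained model actually changes? Hyperparameters: these are the parameters defined in the model structure, for example: convolution kernel size (kernel_size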

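- Keras deep learning framework: getting started and hands-on guide

司莹嫣Maude

Keras deep learning framework: getting started and hands-on guide. keras (keras-team/keras): a Python-based deep learning library that does not use a database. Suited to developing and implementing deep learning tasks, especially where a Python deep learning library is needed. Characteristics: deep learning library, Python, no database. Project address: https://gitcode.com/gh_mirrors/ke/keras 1. Project introduction. About Keras: Keras is a high-level neural network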

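- Deep learning driven license plate recognition: technical evolution and future challenges

逼子歌

Deep learning, license plate recognition, neural networks, character recognition, YOLO, convolutional neural networks

1. Introduction. 1.1 Research background: intelligent transportation systems are increasingly important in today's society, and license plate recognition, as a key component, plays a vital role. The technology is widely used in traffic management, parking management, security surveillance, and other fields. In traffic management, it supports vehicle identification, traffic violation monitoring, and traffic flow statistics, improving efficiency and accuracy. In parking management, it enables automatic vehicle identification and billing, raising the level of management and service. In security surveillance, it can be used to track suspects and criminal activity. The arrival of deep learning has brought major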

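- Five minutes a day with deep learning in PyTorch: the model parameter optimizer torch.optim

幻风_huanfeng

Deep learning framework pytorch, deep learning, pytorch, artificial intelligence, neural networks, machine learning, optimization algorithms

Focus of this article: in machine learning and deep learning, we adjust parameters so that the loss function is minimized (or maximized), and an optimization algorithm is a strategy for updating model parameters. PyTorch defines the optimizer package optim; we can use it to call ready-made optimization algorithms and pass them the neural network's parameters in order to optimize the model. This article is step 6 of the learning path (optimizers); see the linked PyTorch learning roadmap. Stochastic gradient descent: in deep learning and machine learning, gradient descent is the most commonly used parameter update method; its formula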

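- What is AIGC? Which free tools are there?

chent_某位

AIGC

AIGC (AI Generated Content) refers to the automatic generation of all kinds of digital content with artificial intelligence. AIGC lets machines produce text, images, audio, video, and other content that meets human needs from input information or data, greatly improving the efficiency of content creation. Background and origin of AIGC: with the rapid development of deep learning and natural language processing, AI is no longer limited to simple tasks such as classification, prediction, and data analysis, and has acquired the ability to generate content. Generative AI models, such as O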

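- Transformer architecture: principles and hands-on code examples explained

AI架构设计之禅

Big data, AI, artificial intelligence, Python, introduction, hands-on, computational science, neural computing, deep learning, neural networks, big data, artificial intelligence, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA

Transformer architecture: principles and hands-on code examples explained. Keywords: Transformer, self-attention, encoder-decoder, pretraining, fine-tuning, NLP, machine translation. Author: 禅与计算机程序设计艺术 / Zen and the Art of Computer Programming. 1. Background. 1.1 Origin of the problem: the field of natural language processing (NLP) has passed through three stages, from rule-driven to statistics-driven to deep-learning-driven.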

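- How to learn large AI models effectively?

Python程序员罗宾

Learning, artificial intelligence, language models, natural language processing, architecture

Learning large AI models is a systematic process that draws on several disciplines. Here are some suggestions to help you learn them more effectively. Foundations: Mathematics: study linear algebra, probability, statistics, and calculus, which form the mathematical basis for understanding machine learning algorithms. Programming: master at least one programming language such as Python, since most AI models are implemented in Python. Theory: Machine learning basics: understand basic concepts such as supervised learning, unsupervised learning, and reinforcement learning. Deep learning: learn the basic structures of neural networks, such as conv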

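- [Deep learning] [OnnxRuntime] [Python] A detailed tutorial on model conversion, environment setup, and model deployment

牙牙要健康

Deep learning, onnx, onnxruntime, deep learning, python, artificial intelligence

[Deep learning] [OnnxRuntime] [Python] A detailed tutorial on model conversion, environment setup, and model deployment. Note: the author drew selectively on many experts' blog posts and verified the steps personally; these notes are shared so that everyone can learn and discuss together. Contents: [Deep learning] [OnnxRuntime] [Python] A detailed tutorial on model conversion, environment setup, and model deployment; Preface; Model conversion: pytorch to onnx; Setting up dependencies on Windows; Calling an onnx model with onnxruntime; ONNXRuntime inference cor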

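- Deep learning based multimodal information retrieval

SEU-WYL

Deep learning, dnn, deep learning, artificial intelligence

Deep learning based multimodal information retrieval (MMIR) uses deep learning to retrieve information relevant to a user's query intent from datasets that contain several modalities (such as text, images, video, and audio). The approach handles single-modality data and can also build associations across modalities, meeting user needs more accurately. 1. Challenges of multimodal information retrieval. Heterogeneous data representation: multimodal data usually has different features and representations (such as word embeddings for text and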

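- Introduction to design patterns

tntxia

Design patterns

Design patterns originate in the early work of the architect Christopher Alexander (http://en.wikipedia.org/wiki/Christopher_Alexander). He regularly published writings that summarized his experience in solving design problems and how that knowledge related to patterns in cities and buildings. One day it occurred to Alexander that reusing these patterns could make certain design constructions achieve the best results we hope for.

Alexander collaborated with Sara Ishikawa and Murray Silverstein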

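- Using advanced Android components (1)

百合不是茶

android, RatingBar, Spinner

1. Auto-complete text box (AutoCompleteTextView)

AutoCompleteTextView derives from EditText and is in fact also a text edit box, but it has one more feature than an ordinary one: after the user types a character, the auto-complete box shows a drop-down menu to choose from, and once the user picks an item, AutoCompleteTextView fills the text box with that choice.

Using AutoCompleteTex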

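- [Networking and communications] The router market has great untapped potential

comsci

Networking

If domestic electronics and computer equipment manufacturers feel that the mobile phone market is already somewhat saturated, they could consider entering the switch and router market.

The technology and knowledge in this area are currently in an open state, which favors entry by all kinds of small electronics companies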

- A simple self-written shell script for Redis memory statistics

商人shang

Linux shell, statistics, Redis memory

#!/bin/bash

address="192.168.150.128:6666,192.168.150.128:6666"

hosts=(${address//,/ })

sfile="staticts.log"

for hostitem in ${hosts[@]}

do

ipport=(${hostitem

- Singleton pattern (eager vs. lazy)

oloz

Singleton pattern

package 单例模式;

/*

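* Use case: guarantee that only one instance of an object exists in the whole application

* The "lazy" variant of the singleton pattern

* */

public class Singleton {

//01 Make the constructor private so that outside code cannot obtain an instance with new Singleton()

private Singleton(){};

//02 Declare the single instance of the class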

priva

- JSON support in Spring MVC

杨白白

json, springmvc

1. Spring MVC uses the jackson library to process JSON, so the jackson package must be added first

2. Parsing JSON-formatted input in Spring MVC: use @RequestBody to mark the input

@RequestMapping("helloJson")

public @ResponseBody

JsonTest helloJson() {

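- Android: playing, scanning, and adding local audio files

小桔子

I have had almost nothing to do lately, so I kept tinkering with my small project. I wanted to add a simple music player to it, about the size of the one on the Huawei P6 home screen. Anyone who has used the 天天動聽 or QQ Music players knows that songs can be added by scanning local storage. I do not know how they implement it; I think it should scan all files on the device, filter out the audio files, and instantiate each file as an entity that records the file name, path, artist, type, size, and other information. As for the specific algorithm,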

- Common Oracle commands

aichenglong

oracle, dba, common commands

1 Create a temporary tablespace

create temporary tablespace user_temp

tempfile 'D:\oracle\oradata\Oracle9i\user_temp.dbf'

size 50m

autoextend on

next 50m maxsize 20480m

extent management local

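- 25 Eclipse plugins

AILIKES

eclipse, plugins

Plugins that improve code quality. 1. FindBugs: FindBugs helps you find bugs in Java code; it is free software under the GNU Lesser General Public License. 2. Checkstyle: the Checkstyle plugin integrates into the Eclipse IDE and ensures that Java code follows standard coding style. 3. EclEmma: EclEmma is a free tool under the Eclipse Public License; it provides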

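- Preventing duplicate form submission with a Spring MVC interceptor plus annotations

baalwolf

spring mvc

Principle: when the new-record page is opened, a random token is stored in the Session. On save the token is verified and, if it passes, deleted. When save is clicked again, the token no longer exists in the server-side Session, so verification fails.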
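The token principle is independent of Spring, so it can be sketched in a few lines of Python. This is only an illustration of the issue, verify, and delete cycle described above, not the interceptor and annotation code the article goes on to show; `TokenStore` and the dictionary standing in for the server-side Session are hypothetical.

```python
import secrets


class TokenStore:
    """Keeps one pending form token per session, mimicking a server-side Session."""

    def __init__(self):
        self._session = {}

    def issue(self, session_id):
        """Called when the form page is rendered: store and return a random token."""
        token = secrets.token_hex(16)
        self._session[session_id] = token
        return token

    def consume(self, session_id, token):
        """Called on save: accept the token once, then delete it so a repeat fails."""
        expected = self._session.get(session_id)
        if expected is None or not secrets.compare_digest(expected, token):
            return False
        del self._session[session_id]
        return True


store = TokenStore()
form_token = store.issue("session-1")
first_submit = store.consume("session-1", form_token)   # accepted
second_submit = store.consume("session-1", form_token)  # rejected: token already deleted
```

Deleting the token on the first successful check is what turns a double click into a rejected request.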

1. Create the annotation:


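- Understanding closures in "Professional JavaScript for Web Developers (3rd Edition)"

bijian1013

JavaScript

"A closure is a function that has access to variables in another function's scope." -- "Professional JavaScript for Web Developers (3rd Edition)"

Consider the following code: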

<script type="text/javascript">

function outer() {

var i = 10;

return f

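- Methods of the AngularJS Module class

bijian1013

JavaScript, AngularJS, Module

The Module class in AngularJS defines how an application starts, and it can also define the pieces of the application declaratively. Let us look at how it does this.

1. Where is the main method?

If you come from Java or Python, you may well wonder where the main method is in AngularJS. This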

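- [Maven study notes 7] Maven plugins and goals

bit1129

maven, plugins

Plugins and goals

Maven is, in essence, a plugin execution framework: the execution logic of every Maven goal is carried out by a plugin. A plugin can have one or several goals. For example, maven-compiler-plugin contains compile and testCompile, that is, it provides two goals, compiling the source code and compiling the test source code

Using plugins and goals lets us intervene in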

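- [Hadoop 8] Yarn resource scheduling policies

bit1129

hadoop

1. Hadoop's three scheduling policies

Hadoop provides three job scheduling policies:

FIFO Scheduler

Fair Scheduler

Capacity Scheduler

These three scheduling algorithms were already introduced in Hadoop MR1 and were improved and refined in Yarn. The Fair and Capacity Schedulers are used for resource scheduling shared among multiple users

2. Scheduling for resources shared among multiple users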

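- Speeding up static file access in Nginx with Linux memory

ronin47

Nginx is an excellent web server for static resources. If it is still not fast enough for you, you can map the files on disk into memory to reduce disk IO under high concurrency.

First, a few assumptions. The site path configured in nginx.conf is /home/wwwroot/res, and the original storage path of the site's files is /opt/web/res

The shell script is very simple: copy the resource files into memory, then point the site's static file link at the in-memory copy. Details follow: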

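- About shaders in Unity3D

brotherlamp

unity, unity resources, unity tutorials, unity videos, unity self-study

First, an explanation of shaders in Unity3D. Shaders in Unity are written in a language called ShaderLab, which is somewhat similar to Microsoft's FX files or NVIDIA's CgFX. Vertex shaders and pixel shaders in the traditional sense are still written in standard Cg/HLSL. So a Shader in the Unity documentation always means code written in ShaderLab. Next, let us look at the 60-odd S that ship with Unity3D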

- CopyOnWriteArrayList vs ArrayList

bylijinnan

java

package com.ljn.base;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import java.util.concurrent.CopyOnWriteArrayList;

/**

* Summary:

* 1. ArrayList is not thread-safe, CopyO

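- The difference between the stack and the heap in memory

chicony

Memory

1. Memory allocation:

Heap: generally allocated and freed by the programmer; if the programmer does not free it, the OS may reclaim it when the program ends. Note that this is not the same thing as the heap data structure; the allocation scheme resembles a linked list. Keywords that may be involved: new, malloc, delete, free, and so on.

Stack: allocated and freed automatically by the compiler; it holds function parameter values, local variable values, and so on. It operates much like the data structure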

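- Answering a reader's question about Scala

chenchao051

scala, map

I was going to reply directly by private message, but that turned out to be inconvenient, so here is a brief answer. The question: "I greatly admire how concise Scala is, but I also find it rather obscure. For example, in Map("a" -> List(11,111)).flatMap(_._2), could you explain what that last function does, and is real development code really this concise? Thanks."

To answer one point first: in actual use, Scala is without doubt this simple.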
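For readers who do not know Scala, the expression in the question can be mirrored in Python. This sketch is an analogy rather than the author's answer: `_._2` selects the value of each key/value pair, and `flatMap` concatenates the resulting lists into one flat collection. The second map with two keys is an added assumption to make the flattening visible.

```python
from itertools import chain

# Python counterpart of Map("a" -> List(11, 111)).flatMap(_._2)
scala_like_map = {"a": [11, 111]}
flattened = list(chain.from_iterable(scala_like_map.values()))

# With several keys, the per-key lists are joined end to end.
two_keys = {"a": [11, 111], "b": [22]}
flattened_two = list(chain.from_iterable(two_keys.values()))
```

In the single-key case the result is simply the one list; flattening matters once the map holds more than one entry.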

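- MySQL: taking the first few records of each group

daizj

mysql, grouping, maximum, minimum, three records per group

1. Taking the top N records of each group. For example, take the 3 largest records in each group. 1. With a subquery: SELECT * FROM tableName a WHERE 3> (SELECT COUNT(*) FROM tableName b WHERE b.id=a.id AND b.cnt>a. cnt) ORDER BY a.id,a.account DE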
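The correlated subquery keeps a row when fewer than three rows in the same group have a larger `cnt`. The sketch below runs that idea against an in-memory SQLite database as a stand-in for MySQL; the table name `scores`, the sample rows, and ordering by `cnt` rather than the truncated `a.account` column are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (id INTEGER, cnt INTEGER)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?)",
    [(1, 10), (1, 20), (1, 30), (1, 40), (2, 5), (2, 15)],
)

# Keep a row only if fewer than 3 rows in its group (same id) have a larger cnt.
TOP_N_SQL = """
SELECT a.id, a.cnt
FROM scores a
WHERE 3 > (SELECT COUNT(*) FROM scores b WHERE b.id = a.id AND b.cnt > a.cnt)
ORDER BY a.id, a.cnt DESC
"""
top_rows = conn.execute(TOP_N_SQL).fetchall()
conn.close()
```

Group 1 has four rows, so its smallest value is dropped; group 2 has only two rows, so both survive.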

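- HTTP in depth, made simple: HTTP requests

dcj3sjt126com

http

HTTP (HyperText Transfer Protocol) is a set of rules by which computers communicate over a network. Computer experts designed HTTP so that HTTP clients (such as web browsers) can request information and services from HTTP servers (web servers). The current version of the protocol is 1.1. HTTP is a stateless protocol; stateless means that the web browser and the web server do not need to establish a persistent connection, which means that when a client sends a request to the server, and then the We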

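- Comparing ways to check whether a MySQL record exists

dcj3sjt126com

mysql

When writing data to a database, we often need to check first whether the record to insert already exists, and then decide whether to write it.

Here I summarize the common ways of checking whether a record exists:

SQL statement: select count ( * ) from tablename;

Then read the value of count(*) to decide whether the record exists. This approach wastes some performance: we only want to know whether the record exists, so there is no need to count everything.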
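One commonly used alternative to counting is to ask for at most one matching row, so the database can stop at the first hit. The article's own recommendation is not visible in this excerpt, so the sketch below is an assumption; it uses an in-memory SQLite database as a stand-in for MySQL, and the `users` table and `record_exists` helper are hypothetical.

```python
import sqlite3


def record_exists(conn, name):
    """Return True if at least one row matches, without counting all matches."""
    row = conn.execute(
        "SELECT 1 FROM users WHERE name = ? LIMIT 1", (name,)
    ).fetchone()
    return row is not None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

alice_found = record_exists(conn, "alice")
carol_found = record_exists(conn, "carol")
conn.close()
```

The query returns either one row or none, so the caller only checks whether `fetchone()` produced a result.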

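- Some notes on HTML and XML

e200702084

html, xml

Thanks to http://www.w3school.com.cn for the material

Every component of an HTML document is a node.

Nodes

According to the DOM, every component of an HTML document is a node.

The DOM specifies that:

The entire document is a document node

Every HTML tag is an element node

The text contained in an HTML element is a text node

Every HTML attribute is an attribute node

Comments are comment nodes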
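The five node kinds listed above can be observed with Python's standard `xml.dom.minidom`. This is a small sketch under one assumption: the sample markup is parsed as XML, which is enough to show the node types even though a browser's HTML DOM is built by a different parser.

```python
from xml.dom import Node, minidom

# A tiny well-formed fragment: one element with an attribute, some text, and a comment.
doc = minidom.parseString('<p id="intro">hello<!-- a note --></p>')

element = doc.documentElement            # the <p> element
text = element.firstChild                # the text "hello"
comment = element.lastChild              # the comment
attribute = element.getAttributeNode("id")

node_types = {
    "document": doc.nodeType == Node.DOCUMENT_NODE,
    "element": element.nodeType == Node.ELEMENT_NODE,
    "text": text.nodeType == Node.TEXT_NODE,
    "attribute": attribute.nodeType == Node.ATTRIBUTE_NODE,
    "comment": comment.nodeType == Node.COMMENT_NODE,
}
```

Every entry in `node_types` evaluates to `True`, matching the five rules in the list one by one.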

Node hierarchy

- jQuery pagination plugin

genaiwei

jquery, Web front end, pagination plugin

// jQuery pagination control
// Create a closure
(function($) {
// Plugin definition
$.fn.pageTool = function(options) {
var totalPa

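- MyBatis and iBatis compared: introduction and study

Josh_Persistence

mybatis, ibatis, differences, connections

1. Why use iBatis/MyBatis

For Java EE developers, iBatis is a thoroughly familiar persistence framework. Before one-stop object/relational mapping (O/R Mapping) solutions such as Hibernate and JPA became popular, iBatis was essentially the only choice for a persistence framework. Even today, with persistence frameworks appearing one after another, iBatis, thanks to being easy to learn and use,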

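- How to choose sensibly among the integer types in C?

秋风扫落叶

c, data types

If you need large values (greater than 32767 or less than -32767), use long. Otherwise, if space matters a great deal (for example with large arrays or many structures), use short. Failing that, use int. If well-defined overflow behavior is important and negative values are not needed, or if you want to avoid sign-extension problems when manipulating bits and bytes, use the corresponding unsigned type. But beware of mixing signed and unsigned values in expressions.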

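- Maven problems

zhb8015

maven, problems

Problem 1:

In Eclipse, a newly created maven project cannot add src/main/java

When creating a maven web project in Eclipse, after selecting the maven_archetype_web archetype, the only Source Folder by default is src/main/resources.

Following the maven directory layout, add src/main/ja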

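- (2) androidpn-server tomcat version source code analysis: push message handling

spjich

java, androidpn, push

The article "(1) androidpn-server tomcat version source code analysis: project startup" described the whole startup process of the push server and identified the entry point for messages, the XmppIoHandler class. Today I continue with the core code that follows, in three main parts: connection creation, message sending, and connection closing.

First, part of the XmppIoHandler code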

/**

* Invoked from an I/O proc

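- Using the FormData type in JS to solve the problem that files cannot be serialized by the serialize method when submitting a form with ajax

中华好儿孙

JavaScript, Ajax, Web, file upload, FormData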

var formData = new FormData($("#inputFileForm")[0]);

$.ajax({

type:'post',

url:webRoot+"/electronicContractUrl/webapp/uploadfile",

data:formData,

async: false,

ca

- Common jdbcType data types in MyBatis

ysj5125094

mybatis, mapper, jdbcType

The jdbcType

types that MyBatis includes

BIT FLOAT CHAR