3D Shader: Normal Mapping

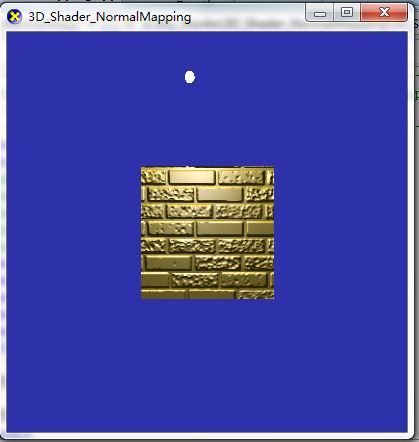

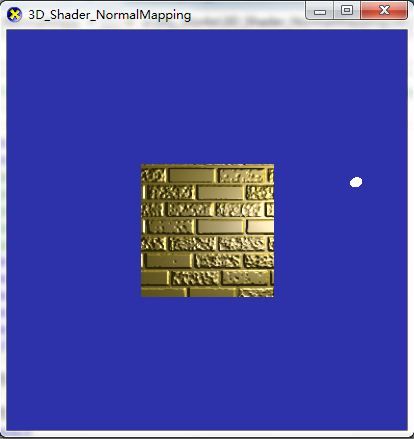

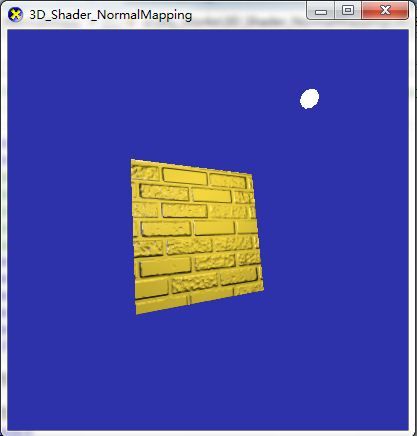

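There are several techniques for implementing bump mapping, and normal mapping is one of them. Here it is implemented in the object's own coordinate space (object space). As usual, the screenshots come first (shown above).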

Now for how it works.

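A normal map can be generated from a height map. At each point of the height map, take the neighbor above it and the neighbor to its right and subtract the point's own height from theirs; this gives two vectors along the surface, (1, 0, h_right - h) and (0, 1, h_up - h). Their cross product is the normal at that point, which is then normalized.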
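A minimal C++ sketch of this construction, for illustration only: `HeightMapToNormals` is a hypothetical helper, heights are assumed to be stored row by row with "up" meaning y + 1, and the borders are assumed to wrap because the brick texture is tiled with Wrap addressing. The `toRight x toUp` order is what makes a flat height map produce (0, 0, 1).

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

// Returns v scaled to unit length (v must not be the zero vector).
static Vec3 Normalize3(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Standard 3D cross product a x b.
static Vec3 Cross3(Vec3 a, Vec3 b) {
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

// Hypothetical helper: converts a width x height height map (row-major) into
// unit normals. "Up" is assumed to be y + 1; borders wrap like a tiled texture.
std::vector<Vec3> HeightMapToNormals(const std::vector<float>& heights, int width, int height) {
    std::vector<Vec3> normals(heights.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float h      = heights[y * width + x];
            float hRight = heights[y * width + (x + 1) % width];
            float hUp    = heights[((y + 1) % height) * width + x];
            // Two vectors along the surface: one step right, one step up.
            Vec3 toRight = { 1.0f, 0.0f, hRight - h };
            Vec3 toUp    = { 0.0f, 1.0f, hUp - h };
            // toRight x toUp = (-(hRight - h), -(hUp - h), 1), then normalized.
            normals[y * width + x] = Normalize3(Cross3(toRight, toUp));
        }
    }
    return normals;
}
```

Reversing the cross-product order flips every normal, so the order has to match the handedness the shader expects.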

This unit normal is then used to compute the lighting.

In this demo, the lighting is computed in object space.
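To make the lighting concrete, here is a CPU sketch of what the pixel shader computes per fragment, with every vector already in object space. The ambient term, the diffuse/specular colors and the exponent 8 mirror `g_Ambient`, `g_LMd`, `g_LMs` and `pow(specular, 8)` in the effect file; `ShadeBlinnPhong` and the small vector helpers are hypothetical names for this illustration, and the cube-map lookups are replaced by plain normalization.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3  Add3(Vec3 a, Vec3 b) { return { a.x + b.x, a.y + b.y, a.z + b.z }; }
static Vec3  Sub3(Vec3 a, Vec3 b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }
static float Dot3(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3  Normalize3(Vec3 v) {
    float len = std::sqrt(Dot3(v, v));
    return { v.x / len, v.y / len, v.z / len };
}
static float Saturate(float v) { return std::min(1.0f, std::max(0.0f, v)); }

// Hypothetical CPU mirror of the pixel shader's lighting. All inputs are in
// object space; `normal` must already be unit length (expanded from the map).
// The half-angle vector is undefined if the light and eye directions are opposite.
Vec3 ShadeBlinnPhong(Vec3 normal, Vec3 position, Vec3 lightPos, Vec3 eyePos,
                     float ambient, Vec3 lmd, Vec3 lms) {
    Vec3 toLight   = Normalize3(Sub3(lightPos, position));
    Vec3 toEye     = Normalize3(Sub3(eyePos, position));
    Vec3 halfAngle = Normalize3(Add3(toLight, toEye));
    float diffuse  = Saturate(Dot3(normal, toLight));
    float specular = std::pow(Saturate(Dot3(normal, halfAngle)), 8.0f);
    // Same combination as g_LMd * (g_Ambient + diffuse) + g_LMs * pow(specular, 8)
    return { lmd.x * (ambient + diffuse) + lms.x * specular,
             lmd.y * (ambient + diffuse) + lms.y * specular,
             lmd.z * (ambient + diffuse) + lms.z * specular };
}
```

The result is left unclamped, just like the shader's return value; an 8-bit render target clamps it to [0, 1] when it is written.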

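One caveat: some components of the computed unit normal can be negative, so each vector lies in the range (-1,-1,-1) to (1,1,1). A texture such as the 8-bit RGB map used here can only store values in [0, 1], so the whole range is compressed to (0,0,0) to (1,1,1) with x → 0.5 + 0.5 * x. Whenever the value is used it must be expanded again with x → (x - 0.5) * 2.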
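The compression and expansion can be sketched as follows, assuming 8-bit channels as in the RGB8 data used here. `PackComponent` and `UnpackComponent` are hypothetical names; the division by 255 stands in for what the sampler does when it converts an 8-bit channel to [0, 1].

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Range-compress one normal component from [-1, 1] to [0, 1], then quantize
// it to an 8-bit channel value.
std::uint8_t PackComponent(float x) {
    float c = 0.5f + 0.5f * x;                          // x -> 0.5 + 0.5 * x
    return static_cast<std::uint8_t>(std::lround(c * 255.0f));
}

// Expand an 8-bit channel back to [-1, 1]. Dividing by 255 models what the
// sampler returns for an 8-bit channel; the last step is the shader's expand().
float UnpackComponent(std::uint8_t b) {
    float c = b / 255.0f;
    return (c - 0.5f) * 2.0f;                           // x -> (x - 0.5) * 2
}
```

Because of the 8-bit rounding, 0 is stored as 128 and comes back as roughly 0.0039, so an expanded normal is only approximately unit length; for a lighting term this error is usually small.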

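In addition, the fragment (pixel) shader needs, for every fragment, the unit vector pointing toward the light and the unit half-angle vector. We can compute the light vector and the half-angle vector in the vertex shader and normalize them before passing them on; however, linear interpolation across the triangle shortens unit vectors, so they have to be renormalized per fragment anyway.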

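Instead of normalizing in the pixel shader, we use a normalization cube map, which can be somewhat faster than normalizing directly. Its input is a vector and its output is the unit vector in that direction. Because the texture stores range-compressed unit vectors, the fetched value must be expanded before use.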
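A normalization cube map can be generated offline. The sketch below shows one way to produce data in the layout that the loading code expects for `normcm_image.h` (six 32x32 RGB8 faces in +X, -X, +Y, -Y, +Z, -Z order, rows stored top to bottom). It assumes the cube-map face orientation table from the OpenGL specification and assumes Direct3D 9 reads faces in the same layout; `CubeTexelDirection` and `BuildNormalizationCubeMap` are hypothetical names, and this is not necessarily how the original data file was produced.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Direction through the centre of texel (i, j) of cube face `face`
// (0..5 = +X, -X, +Y, -Y, +Z, -Z). i is the column, j the row (row 0 first
// in memory). Assumption: OpenGL's face table (sc, tc per major axis).
Vec3 CubeTexelDirection(int face, int i, int j, int size) {
    float sc = 2.0f * (i + 0.5f) / size - 1.0f;
    float tc = 2.0f * (j + 0.5f) / size - 1.0f;
    switch (face) {
        case 0:  return {  1.0f,  -tc,   -sc };
        case 1:  return { -1.0f,  -tc,    sc };
        case 2:  return {    sc,  1.0f,   tc };
        case 3:  return {    sc, -1.0f,  -tc };
        case 4:  return {    sc,  -tc,  1.0f };
        default: return {   -sc,  -tc, -1.0f };
    }
}

// Hypothetical generator: for every texel, normalize its direction and store
// it range-compressed (0.5 + 0.5 * n) as three 8-bit channels.
std::vector<std::uint8_t> BuildNormalizationCubeMap(int size) {
    std::vector<std::uint8_t> data;
    data.reserve(static_cast<std::size_t>(6) * size * size * 3);
    for (int face = 0; face < 6; ++face)
        for (int j = 0; j < size; ++j)
            for (int i = 0; i < size; ++i) {
                Vec3 d = CubeTexelDirection(face, i, j, size);
                float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
                float n[3] = { d.x / len, d.y / len, d.z / len };
                for (float c : n)
                    data.push_back(static_cast<std::uint8_t>(std::lround((0.5f + 0.5f * c) * 255.0f)));
            }
    return data;
}
```

In the pixel shader, `texCUBE` then only selects a texel by direction, so the vector passed in does not need to be unit length.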

That is roughly the whole idea. The source code follows:

/*------------------------------------------------------------

NormalMapping.cpp -- normal mapping demo

(c) Seamanj.2013/8/1

------------------------------------------------------------*/

#include "DXUT.h"

#include "resource.h"

//phase1 : add wall quadrilateral

//phase2 : add camera

//phase3 : add normal mapping shader

//phase4 : add light sphere

//phase5 : add animation

#define phase1 1

#define phase2 1

#define phase3 1

#define phase4 1

#define phase5 1

#if phase1

// Vertex Buffer

LPDIRECT3DVERTEXBUFFER9 g_pVB = NULL;

// Index Buffer

LPDIRECT3DINDEXBUFFER9 g_pIB = NULL;

#endif

#if phase2

#include "DXUTcamera.h"

CModelViewerCamera g_Camera;

#endif

#if phase3

#include "SDKmisc.h" // needed for loading files (DXUTFindDXSDKMediaFileCch)

ID3DXEffect* g_pEffect = NULL; // D3DX effect interface

static const unsigned char

myBrickNormalMapImage[3*(128*128+64*64+32*32+16*16+8*8+4*4+2*2+1*1)] = {

/* RGB8 image data for a mipmapped 128x128 normal map for a brick pattern */

#include "brick_image.h"

};

static const unsigned char

myNormalizeVectorCubeMapImage[6*3*32*32] = {

/* RGB8 image data for a normalization vector cube map with 32x32 faces */

#include "normcm_image.h"

};

static PDIRECT3DTEXTURE9 g_pMyBrickNormalMapTex = NULL;

static PDIRECT3DCUBETEXTURE9 g_pMyNormalizeVectorCubeMapTex = NULL;

D3DXHANDLE g_hTech = 0;

D3DXHANDLE g_hWorldViewProj = NULL; // Handle for world+view+proj matrix in effect

D3DXHANDLE g_hWorldInv = NULL;

D3DXHANDLE g_hAmbient = NULL;

D3DXHANDLE g_hLightMaterialDiffuse = NULL;

D3DXHANDLE g_hLightMaterialSpecular = NULL;

D3DXHANDLE g_hLightPosition = NULL;

D3DXHANDLE g_hEyePosition = NULL;

D3DXHANDLE g_hBrickNormal2DTex = NULL;

D3DXHANDLE g_hNormalizeVectorCubeTex = NULL;

#endif

#if phase4

ID3DXMesh* g_pLightSphereMesh = 0;

#endif

#if phase5

static float g_fLightAngle = 4.0f;

static bool g_bAnimation = false;

#endif

//--------------------------------------------------------------------------------------

// Rejects any D3D9 devices that aren't acceptable to the app by returning false

//--------------------------------------------------------------------------------------

bool CALLBACK IsD3D9DeviceAcceptable( D3DCAPS9* pCaps, D3DFORMAT AdapterFormat, D3DFORMAT BackBufferFormat,

bool bWindowed, void* pUserContext )

{

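// The effect compiles vs_2_0/ps_2_0 shaders, so reject devices without pixel shader 2.0 support

if( pCaps->PixelShaderVersion < D3DPS_VERSION( 2, 0 ) )

return false;

// Typically want to skip back buffer formats that don't support alpha blending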

IDirect3D9* pD3D = DXUTGetD3D9Object();

if( FAILED( pD3D->CheckDeviceFormat( pCaps->AdapterOrdinal, pCaps->DeviceType,

AdapterFormat, D3DUSAGE_QUERY_POSTPIXELSHADER_BLENDING,

D3DRTYPE_TEXTURE, BackBufferFormat ) ) )

return false;

return true;

}

//--------------------------------------------------------------------------------------

// Before a device is created, modify the device settings as needed

//--------------------------------------------------------------------------------------

bool CALLBACK ModifyDeviceSettings( DXUTDeviceSettings* pDeviceSettings, void* pUserContext )

{

#if phase2

pDeviceSettings->d3d9.pp.PresentationInterval = D3DPRESENT_INTERVAL_IMMEDIATE;

#endif

return true;

}

#if phase3

static HRESULT initTextures( IDirect3DDevice9* pd3dDevice )

{

unsigned int size, level;

int face;

const unsigned char *image;

D3DLOCKED_RECT lockedRect;

// Create the normal map texture (full mip chain, 128x128 down to 1x1)

if( FAILED( pd3dDevice->CreateTexture( 128, 128, 0, 0, D3DFMT_X8R8G8B8,

D3DPOOL_MANAGED, &g_pMyBrickNormalMapTex, NULL ) ) )

{

return E_FAIL;

}

for ( size = 128, level = 0, image = myBrickNormalMapImage;

size > 0;

image += 3 * size * size, size /= 2, level++)

{

if( FAILED(g_pMyBrickNormalMapTex->LockRect( level, &lockedRect, 0, 0 ) ) )

{

return E_FAIL;

}

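// Rows of the locked surface start lockedRect.Pitch bytes apart, which may be more than size * 4

BYTE *row = (BYTE*) lockedRect.pBits;

for( unsigned int y = 0; y < size; y++, row += lockedRect.Pitch )

{

DWORD *texel = (DWORD*) row;

const unsigned char *src = image + 3 * size * y;

for( unsigned int x = 0; x < size; x++, src += 3 )

{

// Pack RGB8 into X8R8G8B8

*texel++ = src[0] << 16 | src[1] << 8 | src[2];

}

}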

g_pMyBrickNormalMapTex->UnlockRect(level);

}

// Create the normalization cube map

if( FAILED( pd3dDevice->CreateCubeTexture(32, 1, 0, D3DFMT_X8R8G8B8,

D3DPOOL_MANAGED, &g_pMyNormalizeVectorCubeMapTex, NULL) ) )

return E_FAIL;

const int bytesPerFace = 32 * 32 * 3;

for( face = D3DCUBEMAP_FACE_POSITIVE_X, image = myNormalizeVectorCubeMapImage;

face <= D3DCUBEMAP_FACE_NEGATIVE_Z;

face += 1, image += bytesPerFace)

{

if( FAILED( g_pMyNormalizeVectorCubeMapTex->LockRect

((D3DCUBEMAP_FACES)face, 0, &lockedRect, 0/*lock entire surface*/, 0)))

return E_FAIL;

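// Rows of the locked face start lockedRect.Pitch bytes apart

BYTE *row = (BYTE*) lockedRect.pBits;

for( int y = 0; y < 32; y++, row += lockedRect.Pitch )

{

DWORD *texel = (DWORD*) row;

const unsigned char *src = image + 32 * 3 * y;

for( int x = 0; x < 32; x++, src += 3 )

{

*texel++ = src[0] << 16 | src[1] << 8 | src[2];

}

}

// Unlock each face inside the loop; once the loop ends, face is past D3DCUBEMAP_FACE_NEGATIVE_Z

g_pMyNormalizeVectorCubeMapTex->UnlockRect( (D3DCUBEMAP_FACES)face, 0 );

}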

return S_OK;

}

#endif

//--------------------------------------------------------------------------------------

// Create any D3D9 resources that will live through a device reset (D3DPOOL_MANAGED)

// and aren't tied to the back buffer size

//--------------------------------------------------------------------------------------

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

#if phase4

if( FAILED( D3DXCreateSphere( pd3dDevice, 0.4f, 12, 12, &g_pLightSphereMesh, NULL ) ) )

return E_FAIL;

#endif

#if phase2

// Setup the camera's view parameters

D3DXVECTOR3 vecEye( 0.0f, 0.0f, -20.0f );

D3DXVECTOR3 vecAt ( 0.0f, 0.0f, 0.0f );

g_Camera.SetViewParams( &vecEye, &vecAt );

FLOAT fObjectRadius=1;

// The three camera zoom parameters (default, minimum and maximum radius)

//g_Camera.SetRadius( fObjectRadius * 3.0f, fObjectRadius * 0.5f, fObjectRadius * 10.0f );

g_Camera.SetEnablePositionMovement( true );

#endif

#if phase3

HRESULT hr;

if( FAILED( initTextures( pd3dDevice ) ) )

return E_FAIL;

// Create the effect

WCHAR str[MAX_PATH];

// Read the D3DX effect file

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH, L"NormalMapping.fx" ) );

// Create the effect

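LPD3DXBUFFER pErrorBuff = NULL;

hr = D3DXCreateEffectFromFile(

pd3dDevice, // associated device

str, // effect filename

NULL, // no preprocessor definitions

NULL, // no ID3DXInclude interface

D3DXSHADER_DEBUG, // compile flags

NULL, // don't share parameters

&g_pEffect, // return effect

&pErrorBuff // return error messages

);

// Show compiler errors/warnings in the debugger output, then free the buffer

if( pErrorBuff )

{

OutputDebugStringA( (LPCSTR)pErrorBuff->GetBufferPointer() );

SAFE_RELEASE( pErrorBuff );

}

if( FAILED( hr ) )

return hr;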

// Get handle

g_hTech = g_pEffect->GetTechniqueByName("myTechnique");

g_hWorldViewProj = g_pEffect->GetParameterByName(0, "g_mWorldViewProj");

g_hWorldInv = g_pEffect->GetParameterByName(0, "g_mWorldInv");

g_hAmbient = g_pEffect->GetParameterByName( 0, "g_Ambient");

g_hLightMaterialDiffuse = g_pEffect->GetParameterByName( 0, "g_LMd");

g_hLightMaterialSpecular = g_pEffect->GetParameterByName( 0, "g_LMs");

g_hLightPosition = g_pEffect->GetParameterByName( 0, "g_lightPosition" );

g_hEyePosition = g_pEffect->GetParameterByName( 0, "g_eyePosition" );

g_hBrickNormal2DTex = g_pEffect->GetParameterByName(0, "g_txBrickNormal2D");

g_hNormalizeVectorCubeTex = g_pEffect->GetParameterByName(0, "g_txNormalizeVectorCube");

#endif

return S_OK;

}

#if phase1

struct MyVertexFormat

{

FLOAT x, y, z;

FLOAT u, v;

};

#define FVF_VERTEX (D3DFVF_XYZ | D3DFVF_TEX1)

static HRESULT initVertexIndexBuffer(IDirect3DDevice9* pd3dDevice)

{

// Create and initialize vertex buffer

static const MyVertexFormat Vertices[] =

{

{ -7.0f, -7.0f, 1.0f, 0.0f, 0.0f },

{ -7.0f, 7.0f, 1.0f, 0.0f, 1.0f },

{ 7.0f, 7.0f, 1.0f, 1.0f, 1.0f },

{ 7.0f, -7.0f, 1.0f, 1.0f, 0.0f }

};

if (FAILED(pd3dDevice->CreateVertexBuffer(sizeof(Vertices),

D3DUSAGE_WRITEONLY, FVF_VERTEX,

D3DPOOL_DEFAULT,

&g_pVB, NULL))) {

return E_FAIL;

}

void* pVertices;

if (FAILED(g_pVB->Lock(0, 0, /* map entire buffer */

&pVertices, 0))) {

return E_FAIL;

}

memcpy(pVertices, Vertices, sizeof(Vertices));

g_pVB->Unlock();

// Create and initialize index buffer

static const WORD Indices[] =

{

0, 1, 2,

0, 2, 3

};

if (FAILED(pd3dDevice->CreateIndexBuffer(sizeof(Indices),

D3DUSAGE_WRITEONLY,

D3DFMT_INDEX16,

D3DPOOL_DEFAULT,

&g_pIB, NULL))) {

return E_FAIL;

}

void* pIndices;

if (FAILED(g_pIB->Lock(0, 0, /* map entire buffer */

&pIndices, 0))) {

return E_FAIL;

}

memcpy(pIndices, Indices, sizeof(Indices));

g_pIB->Unlock();

return S_OK;

}

#endif

//--------------------------------------------------------------------------------------

// Create any D3D9 resources that won't live through a device reset (D3DPOOL_DEFAULT)

// or that are tied to the back buffer size

//--------------------------------------------------------------------------------------

HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

#if phase3

HRESULT hr;

if( g_pEffect )

V_RETURN( g_pEffect->OnResetDevice() );

#endif

#if phase2

pd3dDevice->SetRenderState( D3DRS_CULLMODE, D3DCULL_NONE );

//Setup the camera's projection parameters

float fAspectRatio = pBackBufferSurfaceDesc->Width / ( FLOAT )pBackBufferSurfaceDesc->Height;

g_Camera.SetProjParams( D3DX_PI / 2, fAspectRatio, 0.1f, 5000.0f );

g_Camera.SetWindow( pBackBufferSurfaceDesc->Width, pBackBufferSurfaceDesc->Height );

g_Camera.SetButtonMasks( MOUSE_LEFT_BUTTON, MOUSE_WHEEL, MOUSE_RIGHT_BUTTON );

#endif

#if phase1

return initVertexIndexBuffer(pd3dDevice);

#else

return S_OK;

#endif

}

static const double my2Pi = 2.0 * 3.14159265358979323846;

//--------------------------------------------------------------------------------------

// Handle updates to the scene. This is called regardless of which D3D API is used

//--------------------------------------------------------------------------------------

void CALLBACK OnFrameMove( double fTime, float fElapsedTime, void* pUserContext )

{

#if phase2

g_Camera.FrameMove( fElapsedTime );

#endif

#if phase5

if( g_bAnimation )

{

g_fLightAngle += 0.00008;

if( g_fLightAngle > my2Pi)

{

g_fLightAngle -= my2Pi;

}

}

#endif

}

//--------------------------------------------------------------------------------------

// Render the scene using the D3D9 device

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )

{

HRESULT hr;

#if phase3

D3DXVECTOR3 lightPosition(12.5 * sin(g_fLightAngle), 12.5 * cos(g_fLightAngle), -4);

#endif

// Clear the render target and the zbuffer

V( pd3dDevice->Clear( 0, NULL, D3DCLEAR_TARGET | D3DCLEAR_ZBUFFER, D3DCOLOR_ARGB( 0, 45, 50, 170 ), 1.0f, 0 ) );

// Render the scene

if( SUCCEEDED( pd3dDevice->BeginScene() ) )

{

#if phase3

UINT iPass, cPasses;

D3DXMATRIXA16 mWorldViewProjection, mWorld, mWorldInv;

V( g_pEffect->SetTechnique( g_hTech ) );

// Set every effect parameter before Begin/BeginPass: values changed inside a pass

// are not sent to the device unless ID3DXEffect::CommitChanges is called before drawing

// set WorldInv matrix

mWorld = *g_Camera.GetWorldMatrix();

D3DXMatrixInverse( &mWorldInv, NULL, &mWorld );

V( g_pEffect->SetMatrix( g_hWorldInv, &mWorldInv ) );

// set WorldViewProjection matrix

mWorldViewProjection = *g_Camera.GetWorldMatrix() * *g_Camera.GetViewMatrix() *

*g_Camera.GetProjMatrix();

V( g_pEffect->SetMatrix( g_hWorldViewProj, &mWorldViewProjection) );

// set textures

V( g_pEffect->SetTexture( g_hBrickNormal2DTex, g_pMyBrickNormalMapTex ) );

V( g_pEffect->SetTexture( g_hNormalizeVectorCubeTex, g_pMyNormalizeVectorCubeMapTex ) );

// set g_Ambient

V( g_pEffect->SetFloat( g_hAmbient, 0.2f ) );

// set light position (world space; the vertex shader converts it to object space)

V( g_pEffect->SetFloatArray( g_hLightPosition, lightPosition, 3 ) );

// set eye position (world space)

V( g_pEffect->SetFloatArray( g_hEyePosition, (const FLOAT *)g_Camera.GetEyePt(), 3 ) );

float LMd[3] = {0.8f, 0.7f, 0.2f};

V( g_pEffect->SetFloatArray( g_hLightMaterialDiffuse, LMd, 3 ) );

float LMs[3] = {0.5f, 0.5f, 0.8f};

V( g_pEffect->SetFloatArray( g_hLightMaterialSpecular, LMs, 3 ) );

V( g_pEffect->Begin( &cPasses, 0 ) );

for( iPass = 0; iPass < cPasses ; iPass++ )

{

V( g_pEffect->BeginPass( iPass ) );

#if phase2 && !phase3

// Set world matrix

D3DXMATRIX world = *g_Camera.GetWorldMatrix() ; // Note: the teapot always stays in front of the camera, stationary relative to it

pd3dDevice->SetTransform(D3DTS_WORLD, &world) ;

D3DXMATRIX view = *g_Camera.GetViewMatrix() ;

pd3dDevice->SetTransform(D3DTS_VIEW, &view) ;

// Set projection matrix

D3DXMATRIX proj = *g_Camera.GetProjMatrix() ;

pd3dDevice->SetTransform(D3DTS_PROJECTION, &proj) ;

#endif

#if phase1

pd3dDevice->SetStreamSource(0, g_pVB, 0, sizeof(MyVertexFormat));

pd3dDevice->SetIndices(g_pIB);

pd3dDevice->SetFVF(FVF_VERTEX);

pd3dDevice->DrawIndexedPrimitive(D3DPT_TRIANGLELIST, 0, 0, 4, 0, 2);

#endif

// EndPass must be called for every BeginPass, even when phase1 is disabled

V( g_pEffect->EndPass() );

}

V( g_pEffect->End() );

#endif

#if phase4

// D3DMATERIAL9 mtrlYellow;

// mtrlYellow.Ambient = D3DXCOLOR( D3DCOLOR_XRGB(0, 0, 0) );

// mtrlYellow.Diffuse = D3DXCOLOR( D3DCOLOR_XRGB(0, 0, 0) );

// mtrlYellow.Emissive = D3DXCOLOR( D3DCOLOR_XRGB(0, 0, 0) );

// mtrlYellow.Power = 2.0f;

// mtrlYellow.Specular = D3DXCOLOR( D3DCOLOR_XRGB(0, 0, 0) );

// pd3dDevice->SetMaterial( &mtrlYellow );

pd3dDevice->SetRenderState(D3DRS_LIGHTING, false);

// Set world matrix

D3DXMATRIX M;

D3DXMatrixIdentity( &M ); // M = identity matrix

D3DXMatrixTranslation(&M, lightPosition.x, lightPosition.y, lightPosition.z);

pd3dDevice->SetTransform(D3DTS_WORLD, &M) ;

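// Here the triangle behaves more like an object fixed in world coordinates (such as a wall): pressing W moves it relative to the camera, unlike the teapot, which always stays in front of the camera and is stationary relative to it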

// Set view matrix

D3DXMATRIX view = *g_Camera.GetViewMatrix() ;

pd3dDevice->SetTransform(D3DTS_VIEW, &view) ;

// Set projection matrix

D3DXMATRIX proj = *g_Camera.GetProjMatrix() ;

pd3dDevice->SetTransform(D3DTS_PROJECTION, &proj) ;

g_pLightSphereMesh->DrawSubset(0);

#endif

V( pd3dDevice->EndScene() );

}

}

//--------------------------------------------------------------------------------------

// Handle messages to the application

//--------------------------------------------------------------------------------------

LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam,

bool* pbNoFurtherProcessing, void* pUserContext )

{

#if phase2

g_Camera.HandleMessages( hWnd, uMsg, wParam, lParam );

#endif

return 0;

}

//--------------------------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9ResetDevice callback

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9LostDevice( void* pUserContext )

{

#if phase1

SAFE_RELEASE(g_pVB);

SAFE_RELEASE(g_pIB);

#endif

#if phase3

if( g_pEffect )

g_pEffect->OnLostDevice();

#endif

}

//--------------------------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9CreateDevice callback

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )

{

#if phase3

SAFE_RELEASE(g_pEffect);

SAFE_RELEASE(g_pMyBrickNormalMapTex);

SAFE_RELEASE(g_pMyNormalizeVectorCubeMapTex);

#endif

#if phase4

SAFE_RELEASE(g_pLightSphereMesh);

#endif

}

#if phase5

void CALLBACK OnKeyboardProc(UINT character, bool is_key_down, bool is_alt_down, void* user_context)

{

if(is_key_down)

{

switch(character)

{

case VK_SPACE:

g_bAnimation = !g_bAnimation;

break;

}

}

}

#endif

//--------------------------------------------------------------------------------------

// Initialize everything and go into a render loop

//--------------------------------------------------------------------------------------

INT WINAPI wWinMain( HINSTANCE, HINSTANCE, LPWSTR, int )

{

// Enable run-time memory check for debug builds.

#if defined(DEBUG) | defined(_DEBUG)

_CrtSetDbgFlag( _CRTDBG_ALLOC_MEM_DF | _CRTDBG_LEAK_CHECK_DF );

#endif

// Set the callback functions

DXUTSetCallbackD3D9DeviceAcceptable( IsD3D9DeviceAcceptable );

DXUTSetCallbackD3D9DeviceCreated( OnD3D9CreateDevice );

DXUTSetCallbackD3D9DeviceReset( OnD3D9ResetDevice );

DXUTSetCallbackD3D9FrameRender( OnD3D9FrameRender );

DXUTSetCallbackD3D9DeviceLost( OnD3D9LostDevice );

DXUTSetCallbackD3D9DeviceDestroyed( OnD3D9DestroyDevice );

DXUTSetCallbackDeviceChanging( ModifyDeviceSettings );

DXUTSetCallbackMsgProc( MsgProc );

DXUTSetCallbackFrameMove( OnFrameMove );

#if phase5

DXUTSetCallbackKeyboard( OnKeyboardProc );

#endif

// TODO: Perform any application-level initialization here

// Initialize DXUT and create the desired Win32 window and Direct3D device for the application

DXUTInit( true, true ); // Parse the command line and show msgboxes

DXUTSetHotkeyHandling( true, true, true ); // handle the default hotkeys

DXUTSetCursorSettings( true, true ); // Show the cursor and clip it when in full screen

DXUTCreateWindow( L"3D_Shader_NormalMapping" );

DXUTCreateDevice( true, 400, 400 );

// Start the render loop

DXUTMainLoop();

// TODO: Perform any application-level cleanup here

return DXUTGetExitCode();

}
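When texel data is copied into a locked Direct3D surface, consecutive rows start `D3DLOCKED_RECT::Pitch` bytes apart, and that pitch can be larger than width * 4. Below is a standalone sketch of a pitch-aware RGB8 to X8R8G8B8 copy, kept independent of Direct3D so it can be tested on its own; `CopyRgb8ToX8R8G8B8` is a hypothetical helper, and the destination is assumed to be 4-byte aligned with a pitch that is a multiple of 4.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: copies a tightly packed RGB8 image into a 32-bit
// X8R8G8B8 destination whose rows start `pitchBytes` apart
// (pitchBytes >= width * 4 and a multiple of 4).
void CopyRgb8ToX8R8G8B8(std::uint8_t* dst, int pitchBytes,
                        const std::uint8_t* src, int width, int height) {
    for (int y = 0; y < height; ++y) {
        // Row y of the destination starts at y * pitch, not y * width * 4.
        std::uint32_t* row = reinterpret_cast<std::uint32_t*>(dst + y * pitchBytes);
        const std::uint8_t* s = src + y * width * 3;
        for (int x = 0; x < width; ++x, s += 3) {
            // X8R8G8B8: red in bits 16-23, green in 8-15, blue in 0-7; X left 0.
            row[x] = (std::uint32_t(s[0]) << 16) | (std::uint32_t(s[1]) << 8) | std::uint32_t(s[2]);
        }
    }
}
```

Padding bytes at the end of each destination row are left untouched, which is what a driver-padded surface expects.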

/*--------------------------------------------------------------------------

NormalMapping.fx -- normal mapping shader

(c) Seamanj.2013/8/1

--------------------------------------------------------------------------*/

//--------------------------------------------------------------------------------------

// Global variables

//--------------------------------------------------------------------------------------

float3 g_lightPosition; // World space (set by the application); converted to object space in the vertex shader

float3 g_eyePosition; // World space (set from g_Camera.GetEyePt())

float4x4 g_mWorldViewProj;

float4x4 g_mWorldInv;

//--------------------------------------------------------------------------------------

// Vertex shader output structure

//--------------------------------------------------------------------------------------

struct VS_Output {

float4 oPosition : POSITION;

float2 oTexCoord : TEXCOORD0;

float3 lightDirection : TEXCOORD1;

float3 halfAngle : TEXCOORD2;

};

//--------------------------------------------------------------------------------------

// Vertex shader

//--------------------------------------------------------------------------------------

VS_Output myVertexEntry(float4 position : POSITION,float2 texCoord : TEXCOORD0)

{

VS_Output OUT;

OUT.oPosition = mul ( position, g_mWorldViewProj);

OUT.oTexCoord = texCoord;

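// Transform the light position into object space

float3 tempLightPosition = mul( float4(g_lightPosition, 1), g_mWorldInv).xyz;

OUT.lightDirection = tempLightPosition - position.xyz;

//OUT.lightDirection = g_lightPosition - position.xyz;

// The eye position is also given in world space, so transform it into object space too

float3 tempEyePosition = mul( float4(g_eyePosition, 1), g_mWorldInv).xyz;

float3 eyeDirection = tempEyePosition - position.xyz;

// The outer normalize is optional: the normalization cube map renormalizes per fragment

OUT.halfAngle = normalize( normalize(OUT.lightDirection) + normalize(eyeDirection) );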

return OUT;

}

float3 expand( float3 v )

{

return ( v - 0.5 ) * 2;

}

//--------------------------------------------------------------------------------------

// Global variables used by Pixel shader

//--------------------------------------------------------------------------------------

float g_Ambient;

float4 g_LMd;

float4 g_LMs;

texture g_txBrickNormal2D;

texture g_txNormalizeVectorCube;

//-----------------------------------------------------------------------------

// Sampler

//-----------------------------------------------------------------------------

sampler2D g_samBrickNormal2D =

sampler_state

{

Texture = <g_txBrickNormal2D>;

MinFilter = Linear;

MagFilter = Linear;

MipFilter = Linear;

AddressU = Wrap;

AddressV = Wrap;

};

samplerCUBE g_samNormalizeVectorCube1 =

sampler_state

{

Texture = <g_txNormalizeVectorCube>;

MinFilter = Linear;

MagFilter = Linear;

MipFilter = Linear;

AddressU = Clamp;

AddressV = Clamp;

};

samplerCUBE g_samNormalizeVectorCube2 =

sampler_state

{

Texture = <g_txNormalizeVectorCube>;

MinFilter = Linear;

MagFilter = Linear;

MipFilter = Linear;

AddressU = Clamp;

AddressV = Clamp;

};

//--------------------------------------------------------------------------------------

// Pixel shader

//--------------------------------------------------------------------------------------

float4 myPixelEntry(float2 normalMapTexCoord : TEXCOORD0,

float3 lightDirection : TEXCOORD1,

float3 halfAngle : TEXCOORD2

) : COLOR

{

float3 normalTex = tex2D(g_samBrickNormal2D, normalMapTexCoord).xyz;

float3 normal = expand( normalTex );

normal.z = -normal.z; // The normals in this map are given in OpenGL's coordinate convention; converting to Direct3D reverses the Z axis

float3 normLightDirTex = texCUBE( g_samNormalizeVectorCube1, lightDirection ).xyz;

float3 normLightDir = expand( normLightDirTex );

float3 normHalfAngleTex = texCUBE ( g_samNormalizeVectorCube2, halfAngle ).xyz;

float3 normHalfAngle = expand( normHalfAngleTex );

float diffuse = saturate( dot( normal, normLightDir ) );

float specular = saturate( dot( normal, normHalfAngle ) );

return g_LMd * (g_Ambient + diffuse ) + g_LMs * pow( specular, 8 );

}

//--------------------------------------------------------------------------------------

// Renders scene to render target

//--------------------------------------------------------------------------------------

technique myTechnique

{

pass P0

{

VertexShader = compile vs_2_0 myVertexEntry();

PixelShader = compile ps_2_0 myPixelEntry();

}

}
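In the vertex shader, world-space points are brought into object space with `mul(float4(p, 1), g_mWorldInv)`, using the row-vector convention of D3DX and HLSL `mul(v, M)`. The same transform applies to any world-space point, such as the camera position from `g_Camera.GetEyePt()`. As a CPU sketch, and assuming the world matrix is rigid (rotation plus translation; this is an assumption, not something stated about `CModelViewerCamera`), the inverse can be applied without a general matrix inverse. `RigidTransform`, `ObjectToWorld` and `WorldToObject` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Rigid transform in the row-vector convention: world = object * R + t,
// where row k of R is the image of object axis k. Assumes R is orthonormal.
struct RigidTransform { Vec3 r[3]; Vec3 t; };

static float Dot3(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// world = p.x * r[0] + p.y * r[1] + p.z * r[2] + t
Vec3 ObjectToWorld(const RigidTransform& m, Vec3 p) {
    return { p.x * m.r[0].x + p.y * m.r[1].x + p.z * m.r[2].x + m.t.x,
             p.x * m.r[0].y + p.y * m.r[1].y + p.z * m.r[2].y + m.t.y,
             p.x * m.r[0].z + p.y * m.r[1].z + p.z * m.r[2].z + m.t.z };
}

// object = (world - t) * R^T, because R^-1 = R^T for an orthonormal R;
// component k is therefore the dot product of (world - t) with row k of R.
Vec3 WorldToObject(const RigidTransform& m, Vec3 p) {
    Vec3 d = { p.x - m.t.x, p.y - m.t.y, p.z - m.t.z };
    return { Dot3(d, m.r[0]), Dot3(d, m.r[1]), Dot3(d, m.r[2]) };
}
```

For a non-rigid world matrix (for example one with non-uniform scale), a full inverse such as the one `D3DXMatrixInverse` computes on the CPU would be needed instead.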