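- Hierarchical Radiation Across Global Latitudes in the Early AI Era

龙胥伯

artificial intelligence

Drawing on the latest research and industry developments, the "hierarchical radiation" phenomenon of the AI era can be broken down into six dimensions and analyzed systematically in terms of technical evolution, industry practice, and social impact. I. Tiered leaps in technical capability. Model efficiency revolution: the R1-Zero model developed by DeepSeek uses a dynamic architecture design to raise sample utilization by more than 40% and substantially shorten the training cycle. This breakthrough moves AI from the lab toward large-scale application and is giving rise to new ecosystems in fields such as intelligent manufacturing and biomedicine. The training pipeline of large language models (pre-training → multi-task learning → reinforcement learning…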

- PyTorch 深度学习实战(12):Actor-Critic 算法与策略优化

进取星辰

PyTorch深度学习实战深度学习pytorch算法

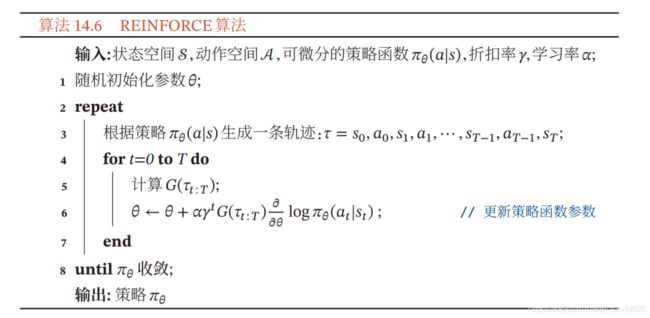

在上一篇文章中,我们介绍了强化学习的基本概念,并使用深度Q网络(DQN)解决了CartPole问题。本文将深入探讨Actor-Critic算法,这是一种结合了策略梯度(PolicyGradient)和值函数(ValueFunction)的强化学习方法。我们将使用PyTorch实现Actor-Critic算法,并应用于经典的CartPole问题。一、Actor-Critic算法基础Actor-Cri

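- PyTorch Deep Learning in Practice (17): The Asynchronous Advantage Actor-Critic (A3C) Algorithm and Parallel Training

进取星辰

PyTorch Deep Learning in Practice, deep learning, pytorch, algorithms

In the previous article we examined the Soft Actor-Critic (SAC) algorithm and its strengths in balancing exploration and exploitation. This article introduces an important milestone in reinforcement learning, the Asynchronous Advantage Actor-Critic (A3C) algorithm, and shows how to implement parallel training in PyTorch to speed up learning. I. How A3C works. A3C was proposed by DeepMind in 2016; multiple asynchronous, parallel agents (workers) interact with the environment…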

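- A Full-Scenario Deployment Plan for DeepSeek in Smart Logistics Management

猴的哥儿

notes, big data, transportation and logistics, python, data warehouse, microservices

I. Core pain points in smart logistics and the DeepSeek solution matrix

| Logistics stage | Industry pain point | DeepSeek technical approach | Value gain |
| --- | --- | --- | --- |
| Warehouse management | Inventory forecast error rate > 30% | Multimodal spatiotemporal forecasting model | Inventory turnover ↑ 40% |
| Transport scheduling | Vehicle empty-run rate of 35% | Reinforcement-learning dynamic dispatch engine | Transport cost ↓ 25% |
| Route planning | Response delay to sudden road conditions > 30 minutes | Real-time semantic understanding of road conditions + adaptive planning | On-time delivery rate ↑ 18% |
| Anomaly detection | 50% of anomalies are discovered manually | Anomaly pattern recognition with multi-sensor fusion | Anomaly detection speed ↑ 6× |
| Customer service | 50% of inquiries require human… | | |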

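- Exploring DeepSeek: A Practical Guide to Next-Generation AI That Front-End Developers Shouldn't Miss

formerlyai

artificial intelligence, front end

Introduction: why has DeepSeek become the focus of the tech community? Recently the Chinese AI model DeepSeek has quickly become a hot topic among developers thanks to its low-cost training, high-performance output, and open-source strategy. As a multimodal technology matrix covering language, code, and vision, DeepSeek not only achieves capabilities comparable to ChatGPT but also, through architecture innovations driven by reinforcement learning, addresses the cost and efficiency bottlenecks of putting large models into production. For front-end developers, DeepSeek's API access and private deployment options give intelligent application development…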

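- [sklearn 02] Supervised Learning, Unsupervised Learning, and Reinforcement Learning

@金色海岸

sklearn study, artificial intelligence

Supervised, unsupervised, and reinforcement learning. Machine learning is usually divided into three categories: unsupervised learning, supervised learning, and reinforcement learning. First, unsupervised learning starts from the data itself, automatically looking for patterns and analyzing the structure of the data; common unsupervised tasks include clustering, dimensionality reduction, density estimation, and association analysis. Second, supervised learning trains a model on labeled data and predicts the labels of unseen data. There are two kinds of supervised learning; when the predicted…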

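- [AI Fundamentals 2] A Summary of Machine Learning and Deep Learning

roman_日积跬步-终至千里

AI exercises, artificial intelligence, machine learning, deep learning

Contents: I. Key AI technologies. II. Machine learning basics: 1. Supervised, unsupervised, and semi-supervised learning; 2. Loss functions: four loss functions; 3. Generalization and cross-validation; 4. Overfitting and underfitting; 5. Regularization; 6. Support vector machines. III. Deep learning basics: 1. Concepts and principles; 2. Learning modes; 3. Training methods for multilayer neural networks. I. Key AI technologies (columns: field; basic principles and logic). Machine learning: machine learning is data-driven; it studies how to find patterns in observed data and use those patterns to predict future data. By learning mode, machine learning can be divided into supervised, unsupervised, reinforcement learning…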

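- From Overfitting to Reinforcement Learning: A Complete Walkthrough of Core Machine Learning Concepts

吴师兄大模型

Machine Learning from Zero to Mastery, machine learning, artificial intelligence, overfitting, reinforcement learning, python, LLM, scikit-learn

LangChain series index: 01 - Getting Hands-On with LangChain: A Complete Guide from Model Calls to Prompt Templates and Output Parsing; 02 - Getting Hands-On with the LangChain Memory Module: Four Memory Types Explained, with Full Coverage of Use Cases; 03 - Mastering LangChain: A Practical Guide from Building Core Chains to Dynamic Task Routing; 04 - Getting Hands-On with LangChain: From Document Loading to Building an Efficient Q&A System; 05 - Getting Hands-On with LangChain: Three Efficient Methods for In-Depth Evaluation of Q&A Systems (example generation, manual…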

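- Building a Next-Generation Manus-Style General-Purpose AI Agent on DeepSeek R1: A Technical Walkthrough

zhangjiaofa

DeepSeek R1 & AI, artificial intelligence, large models, DeepSeek, Manus, agents, AI

Contents: 1. Technical background and goals. 1.1 Trends in large-model reasoning capability. 1.2 Core features of DeepSeek R1: Mixture-of-Experts (MoE) architecture optimization; how Group Relative Policy Optimization (GRPO) works; a multi-stage reinforcement learning training paradigm. 1.3 Design philosophy of the Manus agent framework: multi-agent collaboration; secure execution sandbox design. 2. System architecture design. 2.1 Overall architecture topology: layered module interaction; data-flow and control-flow design. 2.2 Core component implementation: planning module (GRPO integration); tiered storage architecture for the memory system; tool calling…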

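- Reinforcement Learning: Temporal Difference (TD) (SARSA and Q-Learning) (the "If You Can't Follow This, I Lose" Column): A Hands-On Introduction to Reinforcement Learning (6)

wxchyy

reinforcement learning, algorithms

Contents: Preface; Recap; I. The SARSA algorithm; II. The Q-Learning algorithm; III. Summary. Preface: In the previous two installments we covered dynamic programming and Monte Carlo methods, but each has a drawback when estimating the state-value function. Dynamic programming has to back values up recursively from the bottom, while Monte Carlo has to wait for an episode to end before it can produce an estimate. Is there a more direct method, one where the agent updates its estimate after every action and keeps improving its policy as it goes? There is. That is the algorithm covered in this installment, temporal-difference learning (TimeDi…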
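
The excerpt names SARSA and Q-Learning but is cut off before showing either. As a minimal sketch of the standard tabular update rules (not the article's own code), the two differ only in how they bootstrap from the next state. The function names, the `alpha` and `gamma` defaults, and the toy Q-values are assumptions made for illustration, and terminal-state handling is omitted.

```python
from collections import defaultdict


def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.5, gamma=0.9):
    """On-policy TD: bootstrap from the action the agent will actually take next."""
    td_target = r + gamma * q[(s_next, a_next)]
    q[(s, a)] += alpha * (td_target - q[(s, a)])


def q_learning_update(q, s, a, r, s_next, actions, alpha=0.5, gamma=0.9):
    """Off-policy TD: bootstrap from the best action available in the next state."""
    td_target = r + gamma * max(q[(s_next, b)] for b in actions)
    q[(s, a)] += alpha * (td_target - q[(s, a)])


# Toy Q-tables (assumed values): in state "s1", action 1 looks better than action 0.
actions = [0, 1]
q_sarsa = defaultdict(float, {("s1", 0): 1.0, ("s1", 1): 2.0})
q_qlearn = defaultdict(float, {("s1", 0): 1.0, ("s1", 1): 2.0})

# Same transition for both: in "s0" take action 0, receive reward 1.0, land in "s1".
# SARSA assumes the behavior policy then picks action 0; Q-Learning takes the max.
sarsa_update(q_sarsa, "s0", 0, 1.0, "s1", 0)
q_learning_update(q_qlearn, "s0", 0, 1.0, "s1", actions)
```

Both updates happen after a single step, which is the "estimate as you act" property the preface asks for; neither waits for the episode to finish.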

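- Integrating Large Language Models with Reinforcement Learning: A New Paradigm Toward Artificial General Intelligence, with an Experimental Platform Built on a Basic Reproduction

(initial)

large-model explainers, artificial intelligence, reinforcement learning

1. Introduction. Breakthroughs by large language models (LLMs) in natural language processing have demonstrated strong knowledge storage, reasoning, and generation capabilities, opening new possibilities for AI. Reinforcement learning (RL), a method for learning optimal policies through interaction with an environment, plays an important role in training agents. This article explores the deep integration of LLMs and RL, analyzes how LLMs empower RL, and explains what this integration means for progress toward artificial general intelligence (AGI). To better understand the potential of this integration, we build on "LargeLanguageModela…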

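- Reinforcement Learning, Chapter 2: The Bellman Equation

Rsbs

algorithms, machine learning, probability theory

Reinforcement Learning, Chapter 2: The Bellman Equation. Contents: deriving the Bellman equation; expanding further; the matrix form of the Bellman equation; solving for state values; the relationship between the action-value function and the state-value function. Deriving the Bellman equation:

$$
\begin{aligned}
V_\pi(s) &= \mathbb{E}[G_t \mid S_t = s] \\
&= \mathbb{E}[r_{t+1} + (\gamma r_{t+2} + \dots) \mid S_t = s] \\
&= \mathbb{E}[r_{t+1} + \gamma G_{t+1} \mid S_t = s] \\
&= \sum_{a \in A} \pi(s,a) \sum_{s' \in S} P^{a}_{s \to s'} \cdot \left( R^{a}_{s \to s'} + \gamma\, \mathbb{E}[G_{t+1} \mid S_{t+1} = s'] \right) \\
&= \sum_{a \in A} \pi(s,a) \sum_{s' \in S} P^{a}_{s \to s'} \cdot (R \ldots
\end{aligned}
$$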
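
The outline above lists "solving for state values" and the matrix form of the equation, but the excerpt ends before reaching them. A minimal sketch, assuming the policy has already been folded into a transition matrix `P` and an expected-reward vector `r` (the standard form v = r + γPv): the state values can be found by repeatedly applying the right-hand side until it stops changing. The two-state numbers below are invented for illustration, and `evaluate_policy` is a hypothetical helper name.

```python
def evaluate_policy(P, r, gamma, tol=1e-10, max_iter=10_000):
    """Solve v = r + gamma * P v by fixed-point iteration.

    P[i][j] is the probability of moving from state i to state j under the policy,
    r[i] is the expected immediate reward in state i.
    """
    n = len(r)
    v = [0.0] * n
    for _ in range(max_iter):
        new_v = [
            r[i] + gamma * sum(P[i][j] * v[j] for j in range(n))
            for i in range(n)
        ]
        # Stop once the largest change between sweeps is below the tolerance.
        if max(abs(a - b) for a, b in zip(new_v, v)) < tol:
            return new_v
        v = new_v
    return v


# Assumed toy chain: state 0 stays or moves to state 1 with equal probability
# and earns reward 1; state 1 is absorbing with reward 0.
P = [[0.5, 0.5],
     [0.0, 1.0]]
r = [1.0, 0.0]
values = evaluate_policy(P, r, gamma=0.9)
```

For this chain the equations can be checked by hand: v(1) = 0.9·v(1) gives v(1) = 0, and v(0) = 1 + 0.9·0.5·v(0) gives v(0) = 1/0.55.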

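- [Open-Source Code Walkthrough] R1-Searcher: An AI Retrieval System That Uses Reinforcement Learning (RL) to Incentivize Search Capability in Large Language Models (LLMs)

accurater

artificial intelligence, deep learning, R1-Searcher

A report on R1-Searcher. Chapter 1: Introduction: the technical evolution of AI retrieval systems and where R1-Searcher innovates. 1.1 The paradigm shift in information retrieval. Amid the explosive growth of data in the digital era, information retrieval systems are undergoing a fundamental shift from traditional keyword matching to retrieval driven by semantic understanding. According to IDC statistics, global data volume exceeded 120 ZB in 2023, with unstructured data accounting for more than 80% of it. This change in the form of data poses three core challenges for retrieval systems. Semantic disambiguation: how to accurately understand "A…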

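- PyTorch Deep Learning in Practice (13): The Proximal Policy Optimization (PPO) Algorithm

进取星辰

PyTorch Deep Learning in Practice, deep learning, pytorch, algorithms

In the previous article we introduced the Actor-Critic algorithm and used it to solve the CartPole problem. This article takes a closer look at Proximal Policy Optimization (PPO), a more stable and more efficient policy optimization method. We implement PPO in PyTorch and apply it to the classic CartPole problem. I. PPO basics. PPO is a reinforcement learning algorithm proposed by OpenAI to address the training instability of policy gradient methods. PPO, through…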

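- Led by Academicians, Anchored by IEEE Fellows: Experts from Tsinghua, Shanghai Jiao Tong, Fudan, Tongji, and More Gather at the 2025 Global Machine Learning Technology Conference

CSDN资讯

machine learning, artificial intelligence

As Manus broke into the mainstream, OpenManus and OWL were quickly open-sourced and OpenAI released agent development tools; the global AI ecosystem is going through a new agent revolution. How do large models learn collaboratively? How do they evolve on their own? How can new reinforcement learning techniques empower agents? Focused on these questions, the "2025 Global Machine Learning Technology Conference", jointly organized by CSDN and Boolan, will be held in Shanghai on April 18-19. The conference brings together academicians, researchers from 10 universities, and hands-on technical experts from nearly 30 leading technology companies, making up more than 50…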

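- Reasoning LLMs: Technical Analysis and a Panorama of Future Trends

时光旅人01号

deep learning, artificial intelligence, python, pytorch, neural networks

1. Definition of reasoning LLMs. Reasoning LLMs are large language models optimized specifically for complex multi-step reasoning tasks. Their core characteristics: Output format: they show the complete logical chain (for example formula derivations and multi-stage analysis). Task focus: they excel at deep-logic tasks such as mathematical proofs, programming challenges, and multimodal puzzles. Training methods: they combine reinforcement learning, chain of thought (CoT), test-time compute scaling, and related techniques. 2. Landscape of mainstream reasoning LLMs. 2.1 International frontier models. OpenAI o1 series: an internally generated "chain of thought" mechanism; math/code ability…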

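- Understanding Reinforcement Learning in One Article: From Basics to Applications

LHTZ

algorithms, time-series databases, big data, database architecture, dynamic programming

What is reinforcement learning? Reinforcement learning is a learning method in artificial intelligence. Put simply, an agent (a robot or a computer program, for instance) repeatedly tries out different actions in an environment. After each action the environment returns a reward or a penalty signal, and the agent adjusts its behavior based on that signal, with the goal of collecting more reward in the future. It is like training a puppy: when the puppy does the right thing (sitting, say) it gets a treat (reward), and when it gets it wrong there is no treat (penalty). Over time the puppy learns what earns more treats, that is, it learns the optimal behavior…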
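
The puppy analogy above maps directly onto a tiny trial-and-error loop. The following is a sketch under stated assumptions rather than anything from the article: two actions ("bark" and "sit"), a treat worth 1.0 only for "sit", an epsilon-greedy agent, and a running-average estimate of each action's reward. The names `train_puppy` and `eps`, the step count, and the seed are all illustrative choices.

```python
import random


def train_puppy(steps=2000, eps=0.1, seed=0):
    """Epsilon-greedy agent learning which action earns the treat."""
    rng = random.Random(seed)
    actions = ["bark", "sit"]
    counts = [0, 0]          # how often each action was tried
    estimates = [0.0, 0.0]   # running average reward per action

    for _ in range(steps):
        if rng.random() < eps:
            # Explore: occasionally try a random action.
            choice = rng.randrange(len(actions))
        else:
            # Exploit: pick the action currently believed to be best.
            choice = estimates.index(max(estimates))

        # Environment feedback: a treat for sitting, nothing otherwise.
        reward = 1.0 if actions[choice] == "sit" else 0.0

        # Adjust behavior: incremental average of observed rewards.
        counts[choice] += 1
        estimates[choice] += (reward - estimates[choice]) / counts[choice]

    return actions, counts, estimates


actions, counts, estimates = train_puppy()
```

The agent starts out favoring "bark" only because ties go to the first action; once exploration stumbles on "sit" and it pays off, the greedy choice switches and stays there.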

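- Enterprise-Grade Local Deployment of QwQ-32B with XInference and Open-WebUI

大势下的牛马

building a local GPT, RAG knowledge base, artificial intelligence, QwQ-32B

QwQ-32B is a reasoning model from Alibaba's Qwen team. It has 32 billion parameters, is based on the Transformer architecture, and was trained with large-scale reinforcement learning. It performs well on complex problem-solving tasks such as mathematical reasoning and programming, with performance comparable to DeepSeek-R1, which has 671 billion parameters. QwQ-32B does well on multiple benchmarks; on AIME24, for example, its math problem-solving score reaches 79.5, surpassing OpenAI's o1-mini. On LiveBench, …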

- LLM Weekly(2025.03.03-03.09)

UnknownBody

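LLM Daily, LLM Weekly, language models, artificial intelligence

Web news. QwQ-32B: embracing the power of reinforcement learning. Researchers have released QwQ-32B, a 32-billion-parameter model that uses reinforcement learning to improve its reasoning. Despite having fewer parameters, QwQ-32B performs comparably to much larger models by integrating agent-like reasoning and feedback mechanisms. The model is available on Hugging Face. AI pioneers Andrew Barto and Richard Sutton, for their pioneering… in reinforcement learning…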

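- Chebykan wx Article Reading

やっはろ

deep learning

Literature screening: [1] Neural networks: a comprehensive foundation [2] Approximation by superpositions of a sigmoidal function [3] Multilayer feedforward networks are universal approximators [5] Attention is all you need [6] Deep residual learning for image recognition [7] Visualizing the loss landscape of neural nets [8] Dental mold point cloud completion via data augmentation and hybrid RL-GAN [9] Reinforcement learning: a survey [10] Interpretable machine learning for science with PySR and SymbolicRegression.jl [11] Z. Liu, Y. Wang, S. Vaidya, F…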

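- Solving Practical Optimization Problems with Physics-Informed Neural Networks (PINNs): A Comprehensive Analysis and Walkthrough

青橘MATLAB学习

deep learning network design, artificial intelligence, deep learning, physics-informed neural networks, reinforcement learning

Abstract: This article systematically introduces novel applications of physics-informed neural networks (PINNs) to practical optimization problems. By deeply integrating physical laws into neural networks, PINNs show clear advantages in complex tasks such as pendulum inversion control, minimum-time path planning, and spacecraft gravity-assist trajectory design. Experiments show that, compared with traditional numerical methods and with reinforcement learning (RL) and genetic algorithms (GA), PINNs achieve breakthrough improvements in convergence speed, solution stability, and physical fidelity. Keywords: physics-informed neural networks; optimization tasks; deep learning; reinforcement learning; spacecraft trajectories. I. …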

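- django allauth: Customizing the Login Page

waterHBO

django, python, database, sqlite, notes, experience sharing

Background and purpose: why was I writing about reinforcement learning a few days ago and about django today? Simple: client requirements > personal interest. Where the problem comes from: the default allauth login page is not attractive, so here are notes on a few issues. 1. Sign-up page (SignUp): add fields such as phone number and postal code. 2. Signing in with Google: this step is actually simple too. xxxxxxxx Be sure to change the key information; do not expose it to others. xxxxxxxx # How to use Google Login. 1. create superuser. (m…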

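- A Complete Guide to the Classification of Machine Learning Algorithms in AI

power-辰南

artificial intelligence, machine learning, algorithms, python

Contents: I. Introduction. II. Overview of machine learning algorithm categories. (1) By learning mode: 1. Supervised learning; 2. Unsupervised learning; 3. Reinforcement learning. (2) By task type: 1. Classification algorithms; 2. Regression algorithms; 3. Clustering algorithms; 4. Dimensionality reduction algorithms; 5. Generative algorithms. (3) By model structure: 1. Linear models; 2. Nonlinear models; 3. Tree-based models; 4. Neural-network-based models…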

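- How Should We Define a World Model, and Which of Sora, Genie, and JEPA Is One? (1)

周博洋K

distributed systems, artificial intelligence, deep learning, natural language processing, machine learning

Before getting to that question, let's look at what a world model is and how it is defined. First, where did world models come from? The world model is not a new concept in ML. It long predates the Transformer, because it originated in reinforcement learning (RL), where it was proposed in the 1990s by Jürgen Schmidhuber's lab. In 2018 Ha and Schmidhuber published a paper on using RNNs as world models, which effectively redefined the idea. More recently, along with Sora, G…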

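- Translation and Reading Notes on "Natural Actor-Critic"

songyuc

notes

"Natural Actor-Critic". Abstract: This paper proposes a novel reinforcement learning architecture, the Natural Actor-Critic. The actor's updates are based on stochastic estimates of the policy gradient obtained with Amari's natural gradient approach, while the critic obtains both the natural policy gradient and additional parameters of a value function simultaneously by linear regression. The paper shows that actor improvements using natural policy gradients are particularly appealing because these gradients are independent of the coordinate frame of the chosen policy representation and, compared with regular policy…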

- LLM Weekly(2025.02.17-02.23)

UnknownBody

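LLM Daily, LLM Weekly, artificial intelligence, natural language processing

This article is part of the LLM series and covers LLM-related news, articles, and GitHub resources for the week of 2025.02.17-02.23. Web news. Grok 3 Beta: the age of reasoning agents. xAI released Grok 3 Beta, which offers superior reasoning through reinforcement learning, scaled-up compute, and multimodal understanding. Grok 3 and Grok 3 mini scored highly on academic benchmarks, with Grok 3 reaching 93.3% on AIME'25. Grok 3's reasoning is accessible via the "Think" button, …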

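- The Three Great Schools of Machine Learning, Plainly Told: Supervised, Unsupervised, and Reinforcement Learning

安意诚Matrix

machine learning notes, machine learning, artificial intelligence

Using the martial-arts world (jianghu) as a metaphor, the article systematically presents the three paradigms of machine learning. Supervised learning (the Shaolin school) builds accurate models from labeled data and excels at prediction tasks such as image classification. Unsupervised learning (the Xiaoyao school) discovers hidden patterns through the self-organization of data and shines in settings such as generative adversarial networks (GANs). Reinforcement learning (the Ming Cult) optimizes policies through interaction with a dynamic environment, powering breakthrough applications such as AlphaGo and autonomous driving. The article blends technical depth with jianghu flavor, explaining the "inner techniques" of core algorithms such as CNN, PCA, and Q-learning (mathematical formulas and code implementations…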

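- Building a LangGraph Agent with DeepSeek

乔巴先生24

artificial intelligence, python, human-computer interaction

With the release of DeepSeek R1, it is hard not to focus on this model, which can overtake several top-tier large models. The main reason is that it uses reinforcement learning at scale in the post-training stage, greatly improving its reasoning ability with very little labeled data. On tasks such as math, code, and natural-language reasoning, its performance is on par with the official release of OpenAI o1. To get a better sense of its performance, this article tries using it to build an agent. Installation: !pip install -q openai langchain langgraph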

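- When Deep Learning Meets Zen: Reinterpreting the DQN Algorithm Through Eastern Wisdom

带上一无所知的我

Self-Cultivation of an Agent: A Guide to Reinforcement Learning, deep learning, algorithms, artificial intelligence, DQN

When Deep Learning Meets Zen: Reinterpreting the DQN Algorithm Through Eastern Wisdom. "Good code is like a landscape painting: it needs both fine, detailed brushwork and expressive blank space." (a developer who had an epiphany at the terminal). DQN combines the Q-Learning algorithm with deep neural networks: it approximates the Q-value function with a neural network, which addresses the "curse of dimensionality" that traditional Q-Learning faces in high-dimensional state spaces. Introduction: where code meets Zen. Late one night, debugging code at 3 a.m., I suddenly realized that the process of reinforcement learning is strikingly similar to Buddhist practice. The agent explores the environment…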

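- Just Now: Musk Decides to Make Grok-3, "the Smartest AI on Earth", Free!

源代码杀手

AI tech news, artificial intelligence, python

Grok-3 overview and key features. Grok-3 is an advanced AI model developed by xAI and released on February 19, 2025. It is designed to improve reasoning, computational capability, and adaptability, and is particularly suited to math, science, and programming problems. As the latest model in xAI's series, Grok-3 continues the company's commitment to building powerful and safe AI systems and advances the application of AI across many fields. Grok-3's core strength lies in its large-scale reinforcement learning (RL) optimization, which lets it reason in depth for anywhere from a few seconds to a few minutes, as complex tasks require. The included D…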

- Matrix Inversion (Java) by Elementary Row Operations

qiuwanchi

matrix inversion (Java)

package gaodai.matrix;

import gaodai.determinant.DeterminantCalculation;

import java.util.ArrayList;

import java.util.List;

import java.util.Scanner;

/**

* Matrix inversion (elementary row operations)

* @author 邱万迟

*

- JDK timer

antlove

java, jdk, schedule, code, timer

1. java.util.Timer.schedule(TimerTask task, long delay): runs the task after the given delay (in milliseconds)

2. java.util.Timer.schedule(TimerTask task, Date time): runs the task at the specified time

3. java.util.Timer.schedule(TimerTask task, long delay, long period

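- JVM Tuning Summary: -Xms -Xmx -Xmn -Xss

coder_xpf

jvm, application servers

Heap size settings. The maximum heap size in the JVM is limited in three ways: by the data model of the operating system (32-bit or 64-bit), by the system's available virtual memory, and by the system's available physical memory. On 32-bit systems the limit is generally 1.5 GB to 2 GB; 64-bit operating systems impose no such limit on memory. In my tests on Windows Server 2003 with 3.5 GB of physical memory and JDK 5.0, the maximum I could set was 1478m.

Typical settings: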

java -Xmx

- Connecting to a Database with JDBC

Array_06

jdbc

package Util;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class JDBCUtil {

//完

- Unsupported major.minor version 51.0 (JDK version mismatch)

oloz

java

java.lang.UnsupportedClassVersionError: cn/support/cache/CacheType : Unsupported major.minor version 51.0 (unable to load class cn.support.cache.CacheType)

at org.apache.catalina.loader.WebappClassL

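- Processing One List with Multiple Threads

362217990

multithreading, thread, list, collections

Yesterday I posted a question: start 5 threads to process the contents of one List, then concatenate the results from the 5 threads. Since I was short on time, I wrote a demo myself; I hope it is a useful reference for beginners.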

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.CountDownLatch;

public c

- Simple Database Access from JSP

香水浓

sql, mysql, jsp

Learning to use JavaBeans. The code is rough; this is only here to leave a record.

public class DBHelper {

private String driverName;

private String url;

private String user;

private String password;

private Connection connection;

privat

- Adding Bar Charts, Pie Charts, and Other Charts with Components in Flex 4

AdyZhang

Flex

1. Adding the simplest possible bar chart


<?xml version="1.0"

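- Android 5.0: ProgressBar Cannot Be Displayed in Front of a Button

aijuans

android

On versions below SDK 21, a ProgressBar can be displayed in front of a button, positioned in the middle of it. After switching to Android 5.0, however, the drawing order changed: the button is in front and the ProgressBar is behind it. My XML layout file is as follows: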

<RelativeLa

- SQL for Summary Queries

baalwolf

sql

select list.listname, list.createtime,listcount from dream_list as list , (select listid,count(listid) as listcount from dream_list_user group by listid order by count(

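- The Difference Between the Linux du and df Commands

BigBird2012

linux

1. How the two differ

du (disk usage) works by walking the files, computing the size of each one, and adding the sizes up. The only files du can see are those that currently exist and have not been deleted. The size it reports is the sum of the sizes of all the files it considers to exist right now.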
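
A minimal Python sketch of the distinction, not the commands themselves. `du_like` walks a directory tree and sums file sizes, as described above. `df_like` instead asks the filesystem for its own totals through `shutil.disk_usage`; that half is an assumption added for contrast, since the excerpt is cut off before it describes df. Both function names are hypothetical, and `du_like` sums apparent file sizes rather than the allocated disk blocks that the real `du` reports by default.

```python
import os
import shutil
import tempfile


def du_like(path):
    """Sum the sizes of all regular files under path (the du approach)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):  # skip symlinks so targets are not double counted
                total += os.path.getsize(full)
    return total


def df_like(path):
    """Ask the filesystem holding path for its totals (the df approach)."""
    usage = shutil.disk_usage(path)
    return usage.total, usage.used, usage.free


# Demo on a throwaway directory containing 1000 + 500 bytes of data.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "a.bin"), "wb") as f:
        f.write(b"x" * 1000)
    os.mkdir(os.path.join(tmp, "sub"))
    with open(os.path.join(tmp, "sub", "b.bin"), "wb") as f:
        f.write(b"y" * 500)
    demo_du = du_like(tmp)
    demo_df = df_like(tmp)
```

Because `du_like` only counts files it can reach by walking the tree, a file that has been deleted but is still held open by a process contributes nothing to its total, while the filesystem-level numbers still include it.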

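- $apply in AngularJS: To Use It or Not?

bijian1013

JavaScript, AngularJS, $apply

In AngularJS development, when should you call $scope.$apply() and when should you not? Below we explain this thoroughly.

But first, let's reduce $apply to a simplified form.

scope.$apply is like a lazy worker. It has to follow…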

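- [ZooKeeper Study Notes 10] ZooKeeper Source Code Analysis: Data Serialization and Deserialization in ClientCnxn

bit1129

zookeeper

ClientCnxn is the main class through which the ZooKeeper client communicates with the ZooKeeper server and handles event notifications. It contains two inner classes: 1. SendThread and 2. EventThread. SendThread handles data communication between client and server, including the transmission of event information; EventThread mainly calls back the Watchers registered on the client to deliver notifications.

The ClientCnxn constructor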

- [Java Commands 1] jmap

bit1129

Java commands

Usage of the jmap command:

[hadoop@hadoop sbin]$ jmap

Usage:

jmap [option] <pid>

(to connect to running process)

jmap [option] <executable <core>

(to connect to a

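- Apache Server Security Hardening in Practice

ronin47

This article is reposted from IBM.

Introduction to the Apache service

A web server, also called a WWW server or HTTP server, is one of the most common and most heavily used kinds of server on the Internet. It provides users with services such as web browsing and forum access.

Because users who access information through a web browser no longer need to care about technical details, and because the interface is very friendly, the Web gained… as soon as it was introduced on the Internet…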

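- Unity 3D: How to Set the Position at Instantiation?

brotherlamp

unity tutorials, unity, unity resources, unity videos, unity self-study

Q: In Unity 3D, how do I set the position at instantiation?

A: You can specify the position of the instantiated object at the moment you instantiate it, via position:

Instantiate (original : Object, position : Vector3, rotation : Quaternion) : Object

That way you no longer need to use Transform.Position.

If you omit the second parameter (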

- "Refactoring: Improving the Design of Existing Code", Chapter 8: Duplicate Observed Data

bylijinnan

java, refactoring

import java.awt.Color;

import java.awt.Container;

import java.awt.FlowLayout;

import java.awt.Label;

import java.awt.TextField;

import java.awt.event.FocusAdapter;

import java.awt.event.FocusE

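- Struts 2: Changing the Location of struts.xml

chiangfai

struts.xml

By default Struts 2 reads its configuration file from the classes directory. To change the location, for example to put the file under WEB-INF, the path should be written as ../struts.xml (not /WEB-INF/struts.xml).

Modify web.xml as follows: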

<filter>

<filter-name>struts2</filter-name>

<filter-class>

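- A Small Optimization When Using Redis as a Cache

chenchao051

redis, hadoop, pipeline

Recently a job on our cluster needed to hit the cache very frequently within a short period, more than 700 million times. My cache is built on Redis, and that is where the problem came from.

First, what is stored in Redis is plain key-value pairs, with no use of hashes or other structures. That means the job has to access Redis more than 700 million times, which is inefficient and leads to many redi…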

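- Exporting MySQL Data Without the Header Row

daizj

mysql, data export, remove first line, remove header

When you want to export some data from the database to a file and drop the header on the first line, you can add the -N option.

For example, exporting data with the following command: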

mysql -uuserName -ppasswd -hhost -Pport -Ddatabase -e " select * from tableName" > exportResult.txt

gives this result:

studentid

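- PHPExcel: A Simple Starter Example for Exporting an Excel Sheet

dcj3sjt126com

PHPExcel, phpexcel

First download the PHPExcel class files and put them in the class directory, then create an index.php file with the following contents: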

<?php

error_reporting(E_ALL);

ini_set('display_errors', TRUE);

ini_set('display_startup_errors', TRUE);

if (PHP_SAPI == 'cli')

die('

- Love Quotes

dcj3sjt126com

quotes

1) I love you not because of who you are, but because of who I am when I am with you. 2) No man or woman is worth your tears, and the one who is, won't

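- [Repost] Activity in Detail: A Translation of the Activity Documentation

e200702084

android, UI, sqlite, configuration management, network applications

An activity is usually presented to the user as a full-screen window, but you can also use an activity as a floating window (with a theme that has windowIsFloating set) or embed it inside another activity (using ActivityGroup). When the user leaves the activity, you can do the corresponding work in onPause(). More importantly, any changes made by the user should be committed at this point (often to a ContentPro…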

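- Installing the MongoDB Service on Windows 7

geeksun

mongodb

1. Download the Windows build of MongoDB: mongodb-win32-x86_64-2008plus-ssl-3.0.4.zip. The Linux build can be downloaded from the same place. Download URL: http://www.mongodb.org/downloads

2. Extract MongoDB to D:\server\mongodb, then under D:\server\mongodb create d…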

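- JavaScript Magic Methods: __defineGetter__ and __defineSetter__

hongtoushizi

js

Reposted from: http://www.blackglory.me/javascript-magic-method-definegetter-definesetter/

In a JavaScript class, you can use __defineGetter__ and __defineSetter__ to control the get and set behavior of member variables.

For example, in a Book class we automatically wrap the book's name in title marks: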

function Book(name){

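- A Malformed Date Format Can Prevent 304 Responses When Going Through nginx proxy cache

jinnianshilongnian

cache

Yesterday, while consolidating the nginx configuration of several systems, I hit a case where 304 responses could not be returned when nginx cache was in use. The problematic response headers: Content-Type:text/html; charset=gb2312 Date:Mon, 05 Jan 2015 01:58:05 GMT Expires:Mon , 05 Jan 15 02:03:00 GMT Last-Modified:Mon, 05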

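- Data Source Architectural Patterns: Row Data Gateway

home198979

PHP, architecture, Row Data Gateway

Note: if you don't follow this, please don't downvote. This article is not aimed at Java; Java enthusiasts can skip it.

I. Concept

Row Data Gateway: an object that acts as a gateway to a single record in a data source, with one instance per row.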
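
To make the definition concrete before the article's own PHP version, here is a minimal sketch in Python rather than PHP, using an in-memory SQLite database. The `orders` table, its columns, and the method names are assumptions made for illustration; only the class name `OrderGateway` echoes the article. Each instance wraps exactly one row and knows how to load and save itself.

```python
import sqlite3


class OrderGateway:
    """Row Data Gateway: one instance per row of the (assumed) orders table."""

    def __init__(self, conn, order_id, customer, amount):
        self.conn = conn
        self.order_id = order_id
        self.customer = customer
        self.amount = amount

    @classmethod
    def insert(cls, conn, customer, amount):
        """Create a new row and return the gateway object for it."""
        cur = conn.execute(
            "INSERT INTO orders (customer, amount) VALUES (?, ?)",
            (customer, amount),
        )
        conn.commit()
        return cls(conn, cur.lastrowid, customer, amount)

    @classmethod
    def find(cls, conn, order_id):
        """Load the row with this id, or return None if it does not exist."""
        row = conn.execute(
            "SELECT id, customer, amount FROM orders WHERE id = ?",
            (order_id,),
        ).fetchone()
        return cls(conn, *row) if row else None

    def update(self):
        """Write this object's current field values back to its row."""
        self.conn.execute(
            "UPDATE orders SET customer = ?, amount = ? WHERE id = ?",
            (self.customer, self.amount, self.order_id),
        )
        self.conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
order = OrderGateway.insert(conn, "alice", 10.0)
order.amount = 12.5
order.update()
reloaded = OrderGateway.find(conn, order.order_id)
missing = OrderGateway.find(conn, 999)
```

The gateway contains only data access, no business rules; in the pattern as usually described, the lookup logic often lives in a separate finder class instead of a class method.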

II. A simple Row Data Gateway implementation

To make it easier to understand, let's start with a simple implementation:

<?php

/**

* Row Data Gateway class

*/

class OrderGateway {

/*定义元数

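- The Purpose and Contents of Each Linux Directory

pda158

linux, scripts

1) The root directory "/". The root directory sits at the top of the directory tree and is written as a slash (/). It is analogous to "C:\" on Windows and contains every directory and file in the Fedora operating system. 2) /bin. The /bin directory, also called the binary directory, holds the binary images of important Linux commands used by both system administrators and ordinary users. Its contents include various executables as well as symbolic links to some executables. Common commands include cp, d…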

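- Building OpenJDK 7 on Ubuntu 12.04

ol_beta

HotSpot, jvm, jdk, OpenJDK

Getting the source

From the OpenJDK code repository (relatively slow)

Install Mercurial. Mercurial is a version control tool. sudo apt-get install mercurial

Add the following to $HOME/.hgrc, creating the file if it does not exist: [extensions] forest=/home/lichengwu/hgforest-crew/forest.py fe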

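- Converting Database Fields into the Fields Needed for Design Documents

vipbooks

design patterns, work, regular expressions

Haha, after such a long business trip I'm finally back. It feels great to be home!

Once PowerDesigner produces the physical database model, the number of fields that have to be changed in the design document is beyond counting. Copying the fields from PowerDesigner into the design document one by one would be a very painful task.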