Machine Learning: BIRCH Clustering and Spectral Clustering

1. BIRCH Clustering

BIRCH stands for Balanced Iterative Reducing and Clustering Using Hierarchies. The algorithm clusters quickly by building a tree structure, similar to a balanced B+ tree, that is usually called the Clustering Feature Tree (CF Tree). Each node of this tree consists of several Clustering Features (CFs).
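As a minimal sketch of what a clustering feature holds: in the original BIRCH formulation each CF is the triple (N, LS, SS), that is, the sample count, the per-dimension linear sum, and the sum of squared norms. The class below is illustrative only (the name `ClusteringFeature` and its methods are hypothetical, not scikit-learn API). It shows that two CFs merge by simple addition, and that the centroid and radius can be recovered without revisiting the samples.

```python
import numpy as np


class ClusteringFeature:
    """Hypothetical CF summary (N, LS, SS) of a group of samples."""

    def __init__(self, points):
        points = np.atleast_2d(np.asarray(points, dtype=float))
        self.n = points.shape[0]              # N: number of samples
        self.ls = points.sum(axis=0)          # LS: linear sum per dimension
        self.ss = float((points ** 2).sum())  # SS: sum of squared norms

    def merge(self, other):
        # CFs are additive: merging never needs the raw samples.
        merged = ClusteringFeature.__new__(ClusteringFeature)
        merged.n = self.n + other.n
        merged.ls = self.ls + other.ls
        merged.ss = self.ss + other.ss
        return merged

    @property
    def centroid(self):
        return self.ls / self.n

    @property
    def radius(self):
        # Root-mean-square distance to the centroid: sqrt(SS/N - ||LS/N||^2)
        sq = self.ss / self.n - float(self.centroid @ self.centroid)
        return float(np.sqrt(max(sq, 0.0)))


a = ClusteringFeature([[0.0, 0.0], [2.0, 0.0]])
b = ClusteringFeature([[1.0, 3.0]])
ab = a.merge(b)
print(ab.n, ab.centroid, ab.radius)
```

Because only these three quantities are stored per entry, the tree can absorb a new sample by updating sums rather than keeping the sample itself.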

In general, BIRCH suits datasets with many samples and a relatively large number of clusters, and its parameters need to be tuned.

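The main advantages of BIRCH are:

- Low memory use: the samples themselves stay on disk, and the CF Tree stores only the CF nodes and their pointers.

- Fast clustering: a single scan of the training set is enough to build the CF Tree, and inserting, deleting, or updating entries in the tree is quick.

- It can identify noise points, and it can also serve as a preliminary clustering step that preprocesses the dataset.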
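The single-scan property can be sketched with scikit-learn's `Birch.partial_fit`, which updates the CF Tree one batch at a time. The chunking with `np.array_split` and the two-blob dataset are assumptions made for illustration; they stand in for data streamed from disk.

```python
import numpy as np
from sklearn.cluster import Birch
from sklearn.datasets import make_blobs

# Two well-separated blobs, used only as stand-in data.
x, _ = make_blobs(n_samples=1000, centers=[[-2, -2], [2, 2]],
                  cluster_std=0.3, random_state=2)

# n_clusters=None skips the final global clustering step,
# so the labels are simply the leaf subclusters of the CF Tree.
birch = Birch(n_clusters=None, threshold=0.5, branching_factor=50)

# Feed the data in chunks, as if reading it batch by batch.
for chunk in np.array_split(x, 10):
    birch.partial_fit(chunk)

n_subclusters = len(birch.subcluster_centers_)
labels = birch.predict(x)
print(n_subclusters, labels.shape)
```

Each sample is seen once, and only the subcluster summaries remain in memory after the loop.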

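The main disadvantages of BIRCH are:

- Because the CF Tree limits the number of CFs per node, the resulting clusters may differ from the true class distribution.

- It clusters high-dimensional data poorly.

- It clusters poorly when the clusters are not roughly hyperspherical, that is, not convex.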
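The last limitation can be illustrated with a rough comparison, assuming `make_moons` as a non-convex dataset and the adjusted Rand index as the agreement measure (neither appears in the original text). BIRCH runs with its default threshold and branching factor on both datasets, so the numbers are indicative rather than tuned.

```python
from sklearn.cluster import Birch
from sklearn.datasets import make_blobs, make_moons
from sklearn.metrics import adjusted_rand_score

# Convex case: two compact, well-separated blobs.
x_blobs, y_blobs = make_blobs(n_samples=500, centers=[[-3, -3], [3, 3]],
                              cluster_std=0.5, random_state=0)
# Non-convex case: two interleaved half-moons.
x_moons, y_moons = make_moons(n_samples=500, noise=0.05, random_state=0)


def birch_ari(x, y_true):
    """Cluster x into two groups with BIRCH and compare to the true labels."""
    y_pred = Birch(n_clusters=2).fit_predict(x)
    return adjusted_rand_score(y_true, y_pred)


ari_blobs = birch_ari(x_blobs, y_blobs)
ari_moons = birch_ari(x_moons, y_moons)
print('ARI on blobs:', ari_blobs)
print('ARI on moons:', ari_moons)
```

An adjusted Rand index near 1 means the clustering matches the true labels; the moons score is expected to be clearly lower than the blobs score.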

# BIRCH聚类,使用默认参数

from sklearn.datasets.samples_generator import make_blobs

from sklearn.cluster import Birch

from sklearn.metrics import calinski_harabaz_score

import matplotlib.pyplot as plt

# 随机生成数据

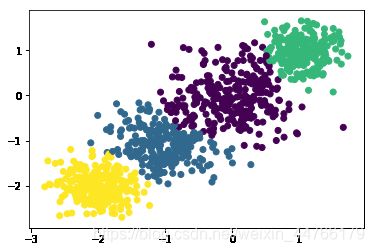

x, y = make_blobs(n_samples=1000, centers=[[-2, -2], [-1, -1], [0, 0], [1, 1]], cluster_std=[0.3, 0.4, 0.5, 0.3], random_state=2)

# BIRCH聚类

birch = Birch(n_clusters=None, threshold=0.5, branching_factor=50).fit(x)

# 预测

y_pred = birch.predict(x)

# 可视化

plt.scatter(x[:, 0], x[:, 1], c=y_pred)

plt.show()

# 使用calinski_harabaz_score作为模型评估标准

print(calinski_harabaz_score(x, y_pred))
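To make the evaluation metric less of a black box, here is a minimal sketch that computes the Calinski-Harabasz index by hand, as the ratio of between-cluster dispersion to within-cluster dispersion, each divided by its degrees of freedom. The helper name `ch_score` is hypothetical. The result is compared with `calinski_harabasz_score`, which is how recent scikit-learn releases spell the function.

```python
import numpy as np
from sklearn.cluster import Birch
from sklearn.datasets import make_blobs
from sklearn.metrics import calinski_harabasz_score


def ch_score(x, labels):
    """Hand-rolled Calinski-Harabasz index (illustrative helper)."""
    unique_labels = np.unique(labels)
    n, k = len(x), len(unique_labels)
    overall_mean = x.mean(axis=0)
    between = 0.0  # dispersion of cluster centers around the overall mean
    within = 0.0   # dispersion of samples around their own cluster center
    for label in unique_labels:
        members = x[labels == label]
        center = members.mean(axis=0)
        between += len(members) * np.sum((center - overall_mean) ** 2)
        within += np.sum((members - center) ** 2)
    return (between / (k - 1)) / (within / (n - k))


x, _ = make_blobs(n_samples=1000,
                  centers=[[-2, -2], [-1, -1], [0, 0], [1, 1]],
                  cluster_std=[0.3, 0.4, 0.5, 0.3], random_state=2)
labels = Birch(n_clusters=4).fit_predict(x)

manual = ch_score(x, labels)
reference = calinski_harabasz_score(x, labels)
print(manual, reference)
```

A higher score means tighter clusters that sit farther apart, which is why the tuning code that follows keeps the parameter combination with the largest value.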

# BIRCH clustering with parameter tuning
from sklearn.datasets import make_blobs
from sklearn.cluster import Birch
from sklearn.metrics import calinski_harabasz_score
import matplotlib.pyplot as plt

# Generate random data
x, y = make_blobs(n_samples=1000, centers=[[-2, -2], [-1, -1], [0, 0], [1, 1]], cluster_std=[0.3, 0.4, 0.5, 0.3], random_state=2)

# Candidate numbers of clusters
n_clusters = [2, 3, 4, 5]
# Candidate radius thresholds for each CF in a leaf node
thresholds = [0.3, 0.4, 0.5, 0.6]
# Candidate maximum numbers of CFs per node of the CF Tree
branching_factors = [30, 40, 50, 60]

# Search the grid for the best parameter combination
def get_best_params():
    max_chs = 0
    best_n = best_t = best_b = None
    for n in n_clusters:
        for t in thresholds:
            for b in branching_factors:
                birch = Birch(n_clusters=n, threshold=t, branching_factor=b).fit(x)
                y_pred = birch.predict(x)
                chs = calinski_harabasz_score(x, y_pred)  # Calinski-Harabasz score as the evaluation metric
                if chs > max_chs:
                    max_chs = chs
                    best_n = n
                    best_t = t
                    best_b = b
    return best_n, best_t, best_b, max_chs

best_n, best_t, best_b, max_chs = get_best_params()
print('best n_cluster: ', best_n)
print('best threshold: ', best_t)
print('best branching_factor: ', best_b)
print('max Calinski-Harabasz score: ', max_chs)

# Refit with the best parameters and visualize
birch = Birch(n_clusters=best_n, threshold=best_t, branching_factor=best_b).fit(x)
y_pred = birch.predict(x)
plt.scatter(x[:, 0], x[:, 1], c=y_pred)
plt.show()

Output:

best n_cluster: 4

best threshold: 0.6

best branching_factor: 30

max Calinski-Harabasz score: 3111.9410181233275

2. Spectral Clustering

# Spectral clustering
from sklearn.datasets import make_blobs
from sklearn.cluster import SpectralClustering
from sklearn.metrics import calinski_harabasz_score

# Generate random data
x, y = make_blobs(n_samples=500, n_features=6, centers=5, cluster_std=[0.8, 0.5, 0.8, 0.5, 0.6], random_state=2)

# Candidate parameters
n_clusters = [3, 4, 5, 6]
gamma = [0.01, 0.1, 1, 10]

# Search the grid for the best parameter combination
def get_best_params():
    max_chs = 0
    best_n = best_gamma = None
    for n in n_clusters:
        for g in gamma:
            y_pred = SpectralClustering(n_clusters=n, gamma=g).fit_predict(x)
            chs = calinski_harabasz_score(x, y_pred)
            if chs > max_chs:
                max_chs = chs
                best_n = n
                best_gamma = g
    return best_n, best_gamma, max_chs

# Unpack the score into max_chs so that the print below reports this run's value
best_n, best_gamma, max_chs = get_best_params()
print('best clusters: ', best_n)
print('best gamma: ', best_gamma)
print('max chs: ', max_chs)

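best clusters: 5

best gamma: 0.01

max chs: 3111.9410181233275

Note that this max chs value matches the BIRCH score above digit for digit, although the data and the algorithm differ. It was most likely printed from the max_chs variable left over from the BIRCH run, so it should not be read as the spectral clustering score.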
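The gamma values searched above are, with scikit-learn's default `affinity='rbf'`, the coefficient of the RBF kernel exp(-gamma * ||a - b||^2) that turns distances into pairwise similarities. As a rough sketch of what that parameter does, the snippet below builds the affinity matrix explicitly with `rbf_kernel` and hands it to `SpectralClustering` through `affinity='precomputed'`. The smaller dataset, the single `cluster_std`, and `random_state=0` are assumptions chosen to keep the example quick.

```python
import numpy as np
from sklearn.cluster import SpectralClustering
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel

x, _ = make_blobs(n_samples=200, n_features=6, centers=5,
                  cluster_std=0.6, random_state=2)

gamma = 0.01
# Pairwise similarities: exp(-gamma * squared Euclidean distance)
affinity = rbf_kernel(x, gamma=gamma)

# Cluster directly on the precomputed similarity matrix.
model = SpectralClustering(n_clusters=5, affinity='precomputed',
                           random_state=0)
labels = model.fit_predict(affinity)
print(affinity.shape, np.unique(labels).size)
```

A larger gamma makes the similarity fall off faster with distance, so only very close points stay connected; a smaller gamma keeps more distant points linked.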