MathorCup University Mathematical Modeling Challenge, Big Data Competition, Track A: Mobile Communication Base Station Traffic Prediction Baseline

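Contents

- Preface
- I. Brief Analysis
- II. The Code
  - 1. Importing Libraries
  - 2. Reading the Data
  - 3. Data Processing
  - 4. Model Training and Prediction
  - 5. Writing the Result File
- Summary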

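Preface

This article presents a baseline for Track A (Mobile Communication Base Station Traffic Prediction) of the Big Data Competition in the 2020 MathorCup University Mathematical Modeling Challenge. For the detailed problem statement and the dataset, please see the link.

The whole program is written in Python. Its steps are file reading, data processing, feature construction, model training and prediction, and saving the output file. Readers can build on it to improve the model.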

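I. Brief Analysis

The training data is about 9 GB and its column names are in Chinese, so some care is needed when reading it with pandas. The training data also contains duplicate rows; the program simply drops them and keeps one copy of each. The date field comes in two formats, for example "2018/3/26" and "2018-4-09", so both have to be handled; the baseline uses a single generic rule that covers both.

Note that the baseline treats the two traffic volumes as plain functions of time and ignores their time-series nature, which leaves a lot of room for improvement. If you do model the temporal structure, however, you cannot reuse the shuffled cross-validation in this program as-is: the model would be trained on rows that come after the validation rows, which leaks future information. You would also need to fill in missing values.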
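A minimal sketch of a leakage-free validation split for the time-series variant: instead of shuffling rows across folds, hold out the most recent days so that every validation row comes after every training row. It assumes a datetime column, here called TS, built beforehand from DATE and HOUR; the helper name time_holdout_split and the 7-day window are illustrative choices, not part of the baseline.

```python
import pandas as pd


def time_holdout_split(df: pd.DataFrame, time_col: str, val_days: int):
    """Split df so that every validation row is later than every training row.

    Hypothetical helper: `time_col` must contain datetimes; rows in the last
    `val_days` days before the latest timestamp form the validation set.
    """
    cutoff = df[time_col].max() - pd.Timedelta(days=val_days)
    trn = df[df[time_col] <= cutoff]
    val = df[df[time_col] > cutoff]
    return trn, val


# Toy data: 20 consecutive days with made-up traffic values.
toy = pd.DataFrame({
    "TS": pd.date_range("2018-03-01", periods=20, freq="D"),
    "LABEL1": range(20),
})
trn, val = time_holdout_split(toy, "TS", val_days=7)
print(len(trn), len(val))  # 13 7
```

Because the cutoff depends on time only, the same split applies to every cell at once.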

II. The Code

1. Importing Libraries

import pandas as pd
import numpy as np
from tqdm import tqdm
import os
import lightgbm as lgb
import warnings
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import StratifiedKFold, KFold
import gc
import time

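2. Reading the Data

I renamed the training and test files, which is not strictly necessary. The files must be read with gbk encoding, otherwise the Chinese text cannot be decoded. When porting the code, adjust the file paths to your own setup.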

train = pd.read_csv('D:/mathorup/traindata.csv', encoding="gbk")
test1 = pd.read_csv('D:/mathorup/test1.csv', encoding="gbk")  # short-term test set
test2 = pd.read_csv('D:/mathorup/test2.csv', encoding="gbk")  # long-term test set
train.info()
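Since the training file is around 9 GB, loading it in one go may exhaust memory on an ordinary machine. One possible sketch, assuming the duplicates mentioned earlier are exact row copies: read the GBK file in chunks via the chunksize argument of pd.read_csv, drop duplicates inside each chunk, and call drop_duplicates once more after concatenation because copies can land in different chunks. The helper name read_gbk_csv_in_chunks and the default chunk size are hypothetical.

```python
import os
import tempfile

import pandas as pd


def read_gbk_csv_in_chunks(path: str, chunksize: int = 1_000_000) -> pd.DataFrame:
    """Read a large GBK-encoded CSV chunk by chunk, de-duplicating as it goes.

    Hypothetical helper: per-chunk de-duplication keeps memory down early,
    and the final drop_duplicates catches copies split across chunks.
    """
    parts = []
    for chunk in pd.read_csv(path, encoding="gbk", chunksize=chunksize):
        parts.append(chunk.drop_duplicates())
    return pd.concat(parts, ignore_index=True).drop_duplicates(ignore_index=True)


# Demo on a tiny made-up file with Chinese headers, written in GBK.
tmp_path = os.path.join(tempfile.mkdtemp(), "demo.csv")
demo = pd.DataFrame({"日期": ["2018/3/1", "2018/3/1", "2018/3/2"],
                     "上行业务量GB": [0.5, 0.5, 0.7]})
demo.to_csv(tmp_path, index=False, encoding="gbk")
df = read_gbk_csv_in_chunks(tmp_path, chunksize=2)
print(len(df))  # 2
```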

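To make later processing easier, I renamed the columns with English names:

# date, hour, cell ID, uplink traffic (LABEL1), downlink traffic (LABEL2)
new_col = ['DATE', 'HOUR', 'NAME', 'LABEL1', 'LABEL2']
train.columns = new_col
test1.columns = new_col
# the long-term test set has no hour column
new_col2 = ['DATE', 'NAME', 'LABEL1', 'LABEL2']
test2.columns = new_col2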

Print the first rows for inspection:

train.head()
test1.head()
test2.head()

3. Data Processing

Drop duplicate rows from the training data, keeping the first occurrence:

train = train.drop_duplicates(keep='first')

Drop rows that contain missing values:

train = train.dropna()

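Extract the hour, day and month:

# Hour: the part of the time string before the first colon
train['HOUR'] = train['HOUR'].apply(lambda x: int(x.split(':')[0]))
# Day: "2018/3/26" -> "26"; "2018-4-09" contains no "/", so its last two characters "09" are used
train['DAY'] = train['DATE'].apply(lambda x: int(x.split('/')[-1][-2:]))
# Month: the 6th character; only correct for single-digit months (1-9)
train['MON'] = train['DATE'].apply(lambda x: int(x[5]))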

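Apply the same processing to test set 1:

test1['HOUR'] = test1['HOUR'].apply(lambda x: int(x.split(':')[0]))
# No [-2:] slice here: this only works if every test1 date uses the "/" format
test1['DAY'] = test1['DATE'].apply(lambda x: int(x.split('/')[-1]))
test1['MON'] = test1['DATE'].apply(lambda x: int(x[5]))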
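The slicing rules above depend on the exact string layout: x[5] reads a single character, so it only works for single-digit months, and the [-2:] slice on a dash-formatted date assumes a zero-padded day ("2018-4-9" would give -9). A more robust sketch, offered as an alternative rather than the baseline's method, replaces "-" with "/" and lets pd.to_datetime parse one explicit format; the helper name parse_dates is hypothetical.

```python
import pandas as pd


def parse_dates(dates: pd.Series) -> pd.DataFrame:
    """Turn '2018/3/26'- or '2018-4-09'-style strings into MON and DAY columns.

    Hypothetical helper: replacing '-' with '/' lets one explicit format
    handle both spellings, with or without zero padding.
    """
    parsed = pd.to_datetime(dates.str.replace("-", "/", regex=False),
                            format="%Y/%m/%d")
    return pd.DataFrame({"MON": parsed.dt.month, "DAY": parsed.dt.day})


out = parse_dates(pd.Series(["2018/3/26", "2018-4-09", "2018/10/1"]))
print(out)
```

The returned frame keeps the index of the input series, so parse_dates(train['DATE']) could replace the DAY and MON lambdas for both train and test1.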

输出观察:

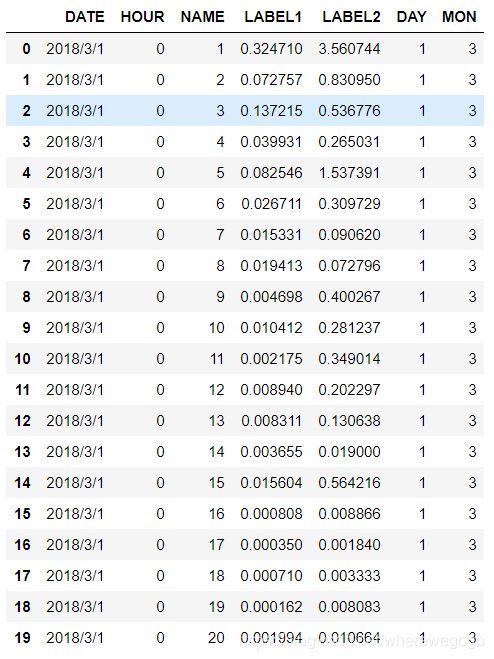

train.head(20)

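4. Model Training and Prediction

I use a LightGBM (LGB) model whose parameters have not been tuned, so some parameter tuning is worth trying. Training and prediction use 5-fold cross-validation repeated with two random seeds:

Uplink traffic prediction: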

used_feat = ['HOUR', 'DAY', 'NAME', 'MON']
train_x = train[used_feat]
train_y = train['LABEL1']
test_x = test1[used_feat]
print(train_x.shape, test_x.shape)
# -----------------------------------------------
scores = []
params = {
    'learning_rate': 0.1,
    'boosting_type': 'gbdt',
    'objective': 'regression',
    'metric': 'rmse',
    'min_child_samples': 46,
    'min_child_weight': 0.01,
    'feature_fraction': 0.8,
    'bagging_fraction': 0.8,
    'bagging_freq': 2,
    'num_leaves': 16,
    'max_depth': 5,
    'n_jobs': -1,
    'seed': 2019,  # fixed; the seeds below only change the fold split
    'verbosity': -1,
}
oof_train = np.zeros(len(train_x))
preds = np.zeros(len(test_x))
folds = 5
seeds = [2048, 1997]
for seed in seeds:
    kfold = KFold(n_splits=folds, shuffle=True, random_state=seed)
    for fold, (trn_idx, val_idx) in enumerate(kfold.split(train_x, train_y)):
        print('fold ', fold + 1)
        x_trn, y_trn = train_x.iloc[trn_idx], train_y.iloc[trn_idx]
        x_val, y_val = train_x.iloc[val_idx], train_y.iloc[val_idx]
        train_set = lgb.Dataset(x_trn, y_trn)
        val_set = lgb.Dataset(x_val, y_val)
        # LightGBM 4.x removed the early_stopping_rounds / verbose_eval
        # arguments of lgb.train; these callbacks replace them
        model = lgb.train(params, train_set, num_boost_round=5000,
                          valid_sets=[train_set, val_set],
                          callbacks=[lgb.early_stopping(25), lgb.log_evaluation(50)])
        # each row is validated once per seed: average over the seeds
        oof_train[val_idx] += model.predict(x_val) / len(seeds)
        # every fold model predicts the test set: average over folds and seeds
        preds += model.predict(test_x) / folds / len(seeds)
        del x_trn, y_trn, x_val, y_val, model, train_set, val_set
        gc.collect()
# mean_squared_error returns the MSE; take the square root to report the RMSE
rmse = np.sqrt(mean_squared_error(train_y, oof_train))
print('-' * 120)
print('rmse ', round(rmse, 5))
test1['LABEL1'] = preds

Downlink traffic prediction:

train_x = train[used_feat]
train_y = train['LABEL2']
test_x = test1[used_feat]
print(train_x.shape, test_x.shape)
# -----------------------------------------------
scores = []
params = {
    'learning_rate': 0.1,
    'boosting_type': 'gbdt',
    'objective': 'regression',
    'metric': 'rmse',
    'min_child_samples': 46,
    'min_child_weight': 0.01,
    'feature_fraction': 0.8,
    'bagging_fraction': 0.8,
    'bagging_freq': 2,
    'num_leaves': 16,
    'max_depth': 5,
    'n_jobs': -1,
    'seed': 2019,  # fixed; the seeds below only change the fold split
    'verbosity': -1,
}
oof_train = np.zeros(len(train_x))
preds = np.zeros(len(test_x))
folds = 5
seeds = [2048, 1997]
for seed in seeds:
    kfold = KFold(n_splits=folds, shuffle=True, random_state=seed)
    for fold, (trn_idx, val_idx) in enumerate(kfold.split(train_x, train_y)):
        print('fold ', fold + 1)
        x_trn, y_trn = train_x.iloc[trn_idx], train_y.iloc[trn_idx]
        x_val, y_val = train_x.iloc[val_idx], train_y.iloc[val_idx]
        train_set = lgb.Dataset(x_trn, y_trn)
        val_set = lgb.Dataset(x_val, y_val)
        # LightGBM 4.x removed the early_stopping_rounds / verbose_eval
        # arguments of lgb.train; these callbacks replace them
        model = lgb.train(params, train_set, num_boost_round=5000,
                          valid_sets=[train_set, val_set],
                          callbacks=[lgb.early_stopping(25), lgb.log_evaluation(50)])
        # each row is validated once per seed: average over the seeds
        oof_train[val_idx] += model.predict(x_val) / len(seeds)
        # every fold model predicts the test set: average over folds and seeds
        preds += model.predict(test_x) / folds / len(seeds)
        del x_trn, y_trn, x_val, y_val, model, train_set, val_set
        gc.collect()
# mean_squared_error returns the MSE; take the square root to report the RMSE
rmse = np.sqrt(mean_squared_error(train_y, oof_train))
print('-' * 120)
print('rmse ', round(rmse, 5))
test1['LABEL2'] = preds
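The uplink and downlink blocks repeat the same seed-averaged K-fold loop, and its averaging rule is worth spelling out: each training row lands in exactly one validation fold per seed, so its out-of-fold (OOF) prediction is divided by len(seeds), while all folds × len(seeds) models predict the test set, so test predictions are divided by both. The sketch below isolates that logic in a hypothetical helper, seed_averaged_kfold, that accepts any fit function. It is a simplified stand-in, not the baseline's code: it shuffles with NumPy instead of sklearn's KFold (so the folds differ from the baseline's), and a toy mean model replaces LightGBM.

```python
from typing import Callable, Sequence, Tuple

import numpy as np

# fit(X_trn, y_trn) -> predict(X_new); a stand-in for training a LightGBM model.
Fit = Callable[[np.ndarray, np.ndarray], Callable[[np.ndarray], np.ndarray]]


def seed_averaged_kfold(fit: Fit, X: np.ndarray, y: np.ndarray, X_test: np.ndarray,
                        folds: int = 5, seeds: Sequence[int] = (2048, 1997)
                        ) -> Tuple[np.ndarray, np.ndarray, float]:
    """Return OOF predictions, averaged test predictions and the OOF RMSE."""
    n = len(X)
    oof = np.zeros(n)
    preds = np.zeros(len(X_test))
    for seed in seeds:
        rng = np.random.default_rng(seed)
        # Shuffle the row indices and cut them into `folds` near-equal parts.
        for val_idx in np.array_split(rng.permutation(n), folds):
            trn_mask = np.ones(n, dtype=bool)
            trn_mask[val_idx] = False
            predict = fit(X[trn_mask], y[trn_mask])
            # One validation fold per row per seed -> divide by len(seeds).
            oof[val_idx] += predict(X[val_idx]) / len(seeds)
            # folds * len(seeds) models predict the test set -> divide by both.
            preds += predict(X_test) / folds / len(seeds)
    rmse = float(np.sqrt(np.mean((y - oof) ** 2)))
    return oof, preds, rmse


def fit_mean(X_trn: np.ndarray, y_trn: np.ndarray) -> Callable[[np.ndarray], np.ndarray]:
    """Toy model: always predicts the mean target of its training fold."""
    mean = float(y_trn.mean())
    return lambda X_new: np.full(len(X_new), mean)


X = np.arange(10, dtype=float).reshape(-1, 1)
y = np.full(10, 3.0)
oof, preds, rmse = seed_averaged_kfold(fit_mean, X, y, X_test=X[:4])
print(rmse, preds)
```

To use it with LightGBM, fit would wrap lgb.train on the training fold and return model.predict, so the LABEL1 and LABEL2 runs would differ only in y. Early stopping would additionally need the validation fold passed to fit, which this simplified signature omits.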

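5. Writing the Result File

Because we changed the original data considerably (renamed columns, added features), the submitted file has to be converted back to the original file's format: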

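# re-read the original file so the submission keeps its exact layout
test11 = pd.read_csv('D:/mathorup/test1.csv', encoding="gbk")  # short-term test set
# fill the original Chinese columns: uplink / downlink traffic in GB
test11['上行业务量GB'] = test1['LABEL1']
test11['下行业务量GB'] = test1['LABEL2']
test11.to_csv('短期验证选择的小区数据集.csv', index=False)
test11.head()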
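One optional post-processing step, not part of the original baseline: a regression model can output slightly negative values, whereas traffic volumes cannot be negative, so the predictions could be clipped at zero before the file is written. A minimal sketch with a hypothetical helper clip_traffic, applied to the two output columns used above:

```python
import pandas as pd


def clip_traffic(df: pd.DataFrame, cols) -> pd.DataFrame:
    """Return a copy of df in which negative values in `cols` are set to 0."""
    out = df.copy()
    out[cols] = out[cols].clip(lower=0)
    return out


# Made-up predictions containing negative values.
demo = pd.DataFrame({"上行业务量GB": [-0.1, 0.5], "下行业务量GB": [1.2, -3.0]})
print(clip_traffic(demo, ["上行业务量GB", "下行业务量GB"]))
```

In the baseline this would be a call such as test11 = clip_traffic(test11, ['上行业务量GB', '下行业务量GB']) just before to_csv.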

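Summary

This article only presents a simple method that gets the whole pipeline working end to end. There is still plenty of room for improvement, for example modeling the time-series structure, analyzing correlations in the data, or switching to neural network models such as LSTM.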