JD.com Comment Scraping: An Intermediate Web-Scraping Tutorial

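Table of Contents

- Scraping JD.com comments
  - 1. First attempt at scraping JD comments
  - 2. Static vs. dynamic websites
    - 1). Static websites
    - 2). Dynamic websites
  - 3. Analyzing the comment request
    - 1) Find the JS/XHR request that loads the comment data
    - 2) Search tips
  - 4. JSON
    - 1) JSON format
    - 2) JSON-formatted strings
    - 3) XML
  - 5. Calling a weather API
  - 6. Scraping JD comments
  - 7. Scraping Bilibili anime titles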

Scraping JD.com comments


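1. First attempt at scraping JD comments

- The code below was my first attempt at scraping the comments.

- The result was disappointing: the HTML that requests received differs from what the developer tools show, so the XPath query returns no comments.

- So read on.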

```python
# JD product page scraper (first attempt)
import os

import requests
from lxml import etree

url = 'https://item.jd.com/100009077475.html'

headers = {
    # Without a User-Agent, JD returns a short HTML page whose JS redirects to the login page
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36'
}

resp = requests.get(url, headers=headers)
html = resp.text
# print(html)

# Save the HTML locally so it can be compared with what the developer tools show
file_path = os.path.join(os.path.dirname(os.path.abspath(__file__)), 'jd_product_page_tmp.html')
with open(file_path, mode='w', encoding='utf-8') as f:
    f.write(html)

dom = etree.HTML(html)
comment_pattern = '//div[@class="comment-item"]//p[@class="comment-con"]/text()'
comments = dom.xpath(comment_pattern)

# Prints nothing: the comments are not in this HTML (see the next section)
for i in comments:
    print(i)
```
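Before reaching for other tools, it is worth confirming why the XPath comes back empty. A minimal check, sketched with only the standard library's html.parser: count how many elements in the raw HTML carry the comment-item class used in the XPath above. If the count is 0 even though the browser shows comments, they are being filled in later by JavaScript. The helper names and the two sample HTML strings are hypothetical, made up for illustration.

```python
from html.parser import HTMLParser


class ClassCounter(HTMLParser):
    """Counts start tags whose class attribute contains a given class name."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.count = 0

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            # class="a b c" may hold several space-separated classes
            if name == "class" and value and self.target_class in value.split():
                self.count += 1


def count_class(html, class_name):
    """Return how many elements in the raw HTML carry class_name."""
    parser = ClassCounter(class_name)
    parser.feed(html)
    parser.close()
    return parser.count


# Hypothetical raw responses: one server-rendered, one with an empty placeholder div
server_rendered = '<div class="comment-item"><p class="comment-con">Good</p></div>'
js_rendered = '<div id="comment"></div><script src="comment.js"></script>'

print(count_class(server_rendered, "comment-item"))  # 1
print(count_class(js_rendered, "comment-item"))      # 0, so comments arrive via JS
```

In the scraper above, count_class(html, "comment-item") on the saved page would tell you immediately whether the XPath ever had a chance.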

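2. Static vs. dynamic websites

1). Static websites

- Almost every backend language's web frameworks work this way: Java SSH (Struts, Spring, Hibernate), PHP, Python Flask and Django.

- After receiving a request, the server-side web program fetches data from the database, splices it into a prepared HTML template skeleton to form a complete web page, and sends that HTML back; the browser parses it and the user sees the result.

- This technique is very popular. Broadly speaking, such a site counts as static; strictly speaking, it is dynamic.

- Drawback: if the page's data changes (for example, you just posted on Weibo or WeChat Moments and someone has already commented), you won't know until you refresh. You have to refresh manually and often: skip it and you may miss something, keep doing it and it gets tiring.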

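```python
# Pseudocode: server-side rendering in a web framework view
def product_detail(pid):
    # Fetch this product's comments from the database (db is hypothetical)
    comments = db.query("SELECT * FROM comment WHERE pid = ?", pid)
    # Splice the data into an HTML template skeleton
    html = f"""<html><body>
    <div class="comment-item">{comments}</div>
    </body></html>"""
    return HttpResponse(html)
```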
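To make the static-site flow concrete, here is a runnable sketch of the same idea without a web framework: an in-memory SQLite table stands in for the database, and an f-string stands in for the HTML template. The table layout, the sample comments, and the function names are assumptions for illustration; a real Flask or Django view would return the HTML through the framework's response object.

```python
import sqlite3
from html import escape


def make_db():
    """Build a throwaway in-memory database with a few sample comments."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE comment (pid INTEGER, content TEXT)")
    conn.executemany(
        "INSERT INTO comment (pid, content) VALUES (?, ?)",
        [(101010997, "Fast delivery"), (101010997, "Works <well>"), (42, "Other product")],
    )
    return conn


def product_detail(conn, pid):
    """Server-side rendering: query the data, then splice it into an HTML template."""
    rows = conn.execute(
        "SELECT content FROM comment WHERE pid = ? ORDER BY rowid", (pid,)
    ).fetchall()
    # Escape user-written text before inserting it into HTML
    items = "".join(f'<p class="comment-con">{escape(content)}</p>' for (content,) in rows)
    return f'<html><body><div class="comment-item">{items}</div></body></html>'


conn = make_db()
page = product_detail(conn, 101010997)
print(page)  # the complete page, comments included, in a single response
```

Because the comments are already inside this one response, a plain requests.get plus XPath is enough for sites built this way.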

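2). Dynamic websites

- The first request receives HTML source from the backend that contains only the shared parts of the page, such as menus and navigation, plus JS.

- The browser takes this first response, parses and runs the JS; the JS fires follow-up asynchronous requests to the backend, receives pure data, and renders it into some div, so the user finally sees the complete page.

- Pros: faster transfers, faster first-screen loading, partial page updates, and a better experience. Cons: writing the JS adds development cost.

- Typical uses: comments, Weibo/WeChat Moments notifications, and telling a user that a username is already taken before the registration form is submitted.

- Tech stack: JS XHR, jQuery Ajax, Node.js, Vue, axios.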

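```python
# Pseudocode: page skeleton and data API served separately
def product_detail():
    # Only the skeleton: menu, navigation, an empty <div> and <script> tags
    html = """<html><nav>menu</nav><div id="comments"></div><script src="comments.js"></script></html>"""
    return HttpResponse(html)

def get_comment_by_pid(pid):
    # Called asynchronously by the page's JS (e.g. a POST request); returns pure data
    comments = db.query("SELECT * FROM comment WHERE pid = ?", pid)  # e.g. pid=101010997
    return JsonResponse(comments)
```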
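The dynamic version splits the work in two: the page skeleton and a data API. The sketch below simulates both ends in plain Python, including the step the browser's JS would perform (parsing the JSON and filling the empty div). The dictionary acting as a database, the element id comments, and all function names are hypothetical. For a scraper the key point is that get_comment_by_pid's output already contains every comment, so there is no need to render the page at all.

```python
import json
from html import escape

# Hypothetical in-memory "database": product id -> list of comments
COMMENTS = {
    101010997: [{"id": 1, "content": "Fast delivery"}, {"id": 2, "content": "Works well"}],
}


def product_detail():
    """First response: only the page skeleton, with an empty placeholder div."""
    return '<html><body><nav>menu</nav><div id="comments"></div></body></html>'


def get_comment_by_pid(pid):
    """Data API: returns pure data as a JSON string, no HTML."""
    return json.dumps({"pid": pid, "comments": COMMENTS.get(pid, [])})


def render_comments(skeleton, api_response):
    """What the page's JS does in the browser: parse the data, fill the div."""
    data = json.loads(api_response)
    items = "".join(f"<p>{escape(c['content'])}</p>" for c in data["comments"])
    return skeleton.replace('<div id="comments"></div>', f'<div id="comments">{items}</div>')


skeleton = product_detail()
api_response = get_comment_by_pid(101010997)
print(api_response)                              # what a scraper should request
print(render_comments(skeleton, api_response))   # what the user finally sees
```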

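3. Analyzing the comment request

1) Find the JS/XHR request that loads the comment data

- We don't care how the site's JS is written or how it renders the data into the page. We only need to find the HTTP request and replay it with Python.

2) Search tips:

- In the developer tools' Network panel, filter for XHR and JS requests.

- Look for POST requests, since POST carries its parameters in the request body where they are less visible.

- Look for promising names such as comment, api, get_info, get_list.

- The response is pure data rather than HTML.

- Try to trigger the page's asynchronous JS data request (for example by switching to the next page of comments): first clear the existing request list, then watch whether new requests appear in the developer tools.

- Give the Network panel focus and press Ctrl+F to open its hidden search box, which searches for a keyword across all requests, request headers, and response bodies.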
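Once a candidate request is found, a quick way to tell "pure data" from an HTML page is to look at the Content-Type header and, failing that, simply try to parse the body as JSON. The heuristic below is a sketch of my own, not a rule any site guarantees; note that a JSONP response (see section 6) will fail the JSON parse until its wrapper is stripped.

```python
import json


def looks_like_data(content_type, body):
    """Heuristic: is this response pure data (JSON) rather than an HTML page?"""
    content_type = (content_type or "").lower()
    if "json" in content_type:
        return True
    if "html" in content_type:
        return False
    # Missing or generic Content-Type (e.g. text/plain): try parsing the body as JSON
    try:
        json.loads(body)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False
    return True


print(looks_like_data("application/json;charset=utf-8", '{"comments": []}'))  # True
print(looks_like_data("text/html; charset=utf-8", "<html></html>"))          # False
print(looks_like_data(None, '{"comments": []}'))                             # True
```

With requests, the call would be looks_like_data(resp.headers.get('Content-Type'), resp.text).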

4.JSON

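1) JSON format

- Why use JSON or XML instead of Python's list and dict ([] and {})?

- Because data is exchanged between different computers running programs written in Java, PHP, Python, C, C++, Go, and so on.

- Take key-value pairs: Python has {'name': 'Xiaoming'}, a browser URL has name=Xiaoming, Java has a Map, and JS has an object {}.

- The structures are similar, but each language's interpreter or runtime implements them differently, so the same data has a different binary representation in each. Turning {'name': 'Xiaoming'} into binary is a matter of encoding.

- Since every computer runs different languages and services, an "intermediate format" is needed: Python built-in object ⇄ intermediate format ⇄ the server's own language format (e.g. Java).

- Hence the unified formats: XML and JSON, both of which are plain strings.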
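To make the "intermediate format" idea concrete: the sender serializes its object into JSON text, encodes the text into bytes for the network, and the receiver, whatever its language, decodes the bytes and parses them into its own native structure. A minimal sketch with Python playing both roles (the sample dict is made up):

```python
import json

# Sender side: Python dict -> JSON text -> UTF-8 bytes on the wire
person = {"name": "Xiaoming", "age": 13, "parent": ["father", "mother"]}
json_text = json.dumps(person)
wire_bytes = json_text.encode("utf-8")
print(type(json_text), json_text)
print(type(wire_bytes), wire_bytes)

# Receiver side (Java, Go, JS... perform the same two steps):
# bytes -> text -> the language's own native structure
received = json.loads(wire_bytes.decode("utf-8"))
print(received == person)  # True
```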

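2) JSON-formatted strings

- JSON syntax: {} holds key-value pairs (properties and their values), [] holds an array (an ordered group of values), and the basic types include integers and strings. Strings must use double quotes.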
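The double-quote rule is the most common stumbling block, because a Python dict printed with print() uses single quotes and is therefore not valid JSON. A small sketch that checks this with json.loads (is_valid_json is a hypothetical helper name):

```python
import json


def is_valid_json(text):
    """Return True if text parses as JSON, False otherwise."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError as err:
        print("invalid JSON:", err.msg)
        return False


good = '{"name": "Xiaoming", "age": 13, "parent": []}'
bad = "{'name': 'Xiaoming', 'age': 13}"  # Python-dict style, single quotes

print(is_valid_json(good))  # True
print(is_valid_json(bad))   # False: JSON keys and strings need double quotes
```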

```python
import json

xiaoming_json_str = """
{
    "name": "Xiaoming",
    "age": 13,
    "parent": [
        {
            "name": "Xiaoming's father",
            "age": 43
        },
        {
            "name": "Xiaoming's mother",
            "age": 43
        }
    ]
}
"""

# Printed to the console, a string and a dict look alike; use type() to tell them apart
# print(type(xiaoming_json_str), xiaoming_json_str)

# JSON string -> Python built-in data structure
xiaoming_dict = json.loads(xiaoming_json_str)
print(type(xiaoming_dict), xiaoming_dict)
print(xiaoming_dict['name'])
for parent in xiaoming_dict['parent']:
    print(parent['name'])

# Python built-in data structure -> JSON string
students = [
    {'name': 'Xiaoming', 'age': 13, 'gender': 'male'},
    {'name': 'Xiao 2', 'age': 13, 'gender': 'male'},
    {'name': 'Xiao 1', 'age': 13, 'gender': 'male'},
]
students_json_str = json.dumps(students)
print(type(students_json_str), students_json_str)
```
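One detail worth knowing when the data contains Chinese: by default json.dumps escapes every non-ASCII character as a \uXXXX sequence, which is still valid JSON but hard to read. Passing ensure_ascii=False keeps the characters as they are, and indent pretty-prints nested structures. A short sketch (the sample list is made up):

```python
import json

students = [{"name": "小明", "age": 13}]

# Default ensure_ascii=True: non-ASCII characters become \uXXXX escapes
escaped = json.dumps(students)
# ensure_ascii=False keeps the Chinese readable; indent=2 pretty-prints nested data
readable = json.dumps(students, ensure_ascii=False, indent=2)

print(escaped)
print(readable)
# Both strings decode to the same Python data
print(json.loads(escaped) == json.loads(readable) == students)  # True
```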

3) XML

- It used to be very popular but is somewhat cumbersome, and it is used less and less today.

```xml
<students>
    <stu>
        <name>Xiaoming</name>
        <age>13</age>
    </stu>
    <stu>
        <name>Xiaohong</name>
        <age>11</age>
    </stu>
</students>
```

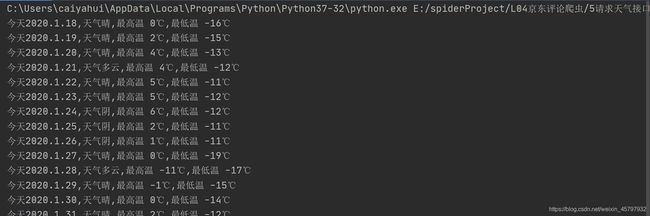
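For comparison, parsing XML takes a little more work than a single json.loads call: the tree has to be walked and every value converted from text by hand. A sketch using the standard library's xml.etree.ElementTree, reusing the structure of the XML example (students, stu, name, age):

```python
import xml.etree.ElementTree as ET

xml_text = """
<students>
    <stu><name>Xiaoming</name><age>13</age></stu>
    <stu><name>Xiaohong</name><age>11</age></stu>
</students>
"""

root = ET.fromstring(xml_text.strip())
# Every value arrives as text, so numbers must be converted explicitly
students = [
    {"name": stu.findtext("name"), "age": int(stu.findtext("age"))}
    for stu in root.findall("stu")
]
print(students)
```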

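5. Calling a weather API

- Front-end/back-end separation is the popular development approach nowadays.

- The backend receives requests and responds with pure data (this is called an "API"); the front-end JS sends the requests.

- Interfaces (backend programs provided by others) are either legitimate or illegitimate to use.

- Legitimate: Alibaba Cloud's various AI APIs, Taobao product APIs, GitHub.

- Illegitimate: **** APIs.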

```python
import json

import requests

url = 'http://t.weather.itboy.net/api/weather/city/101100201'
resp = requests.get(url)
resp.raise_for_status()  # stop early on a non-2xx status code
weather_str = resp.text
weather_obj = json.loads(weather_str)
# print(type(weather_obj), weather_obj)

# The JSON structure is complex. To see how to extract data:
# copy the response from the browser or console into a temporary .json file in PyCharm,
# format it with Code > Reformat Code, then follow the JSON hierarchy level by level
weather_data = weather_obj['data']
day_weather_list = weather_data['forecast']
for day in day_weather_list:
    # print(day)
    date = day['date']  # day of the month
    high = day['high']
    low = day['low']
    weather_type = day['type']  # renamed to avoid shadowing the built-in type()
    print(f'Day {date}: weather {weather_type}, {high}, {low}')
```

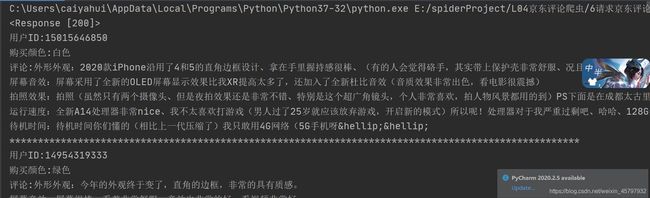
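Copying the response into PyCharm works, but for a quick look at a deeply nested response it can also help to list every key path programmatically and pick the one you need. The recursive helper below is a hypothetical sketch; the sample dict is made up and only mirrors the fields the weather code reads (data, forecast, date, high, low, type). For lists it inspects only the first element, assuming the items share one shape.

```python
def json_paths(obj, prefix=""):
    """List every key path in parsed JSON, e.g. data.forecast[0].high."""
    paths = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else key
            paths.append(path)
            paths.extend(json_paths(value, path))
    elif isinstance(obj, list) and obj:
        # Arrays usually hold same-shaped items, so inspect only the first one
        path = f"{prefix}[0]"
        paths.append(path)
        paths.extend(json_paths(obj[0], path))
    return paths


# Made-up sample shaped like the fields the weather code reads
sample = {"data": {"forecast": [{"date": "20", "high": "H", "low": "L", "type": "T"}]}}
for p in json_paths(sample):
    print(p)
```

Against the real response, for p in json_paths(weather_obj): print(p) shows where each field lives.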

6. Scraping JD comments

This is the main event.

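```python
import json
import time

import requests

base_url = 'https://club.jd.com/comment/productPageComments.action'

headers = {
    # Without a User-Agent, JD returns a short HTML page whose JS redirects to the login page
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36',
    'Referer': 'https://item.jd.com/'
}

# Tip: copy these from the request's query parameters in the Network panel, then adjust them.
# The editor's column-editing mode (Alt+Shift+mouse drag) speeds this up.
params = {
    # 'callback': 'fetchJSON_comment98',  # JSONP callback; omitted so the API returns plain JSON
    'productId': 100009077475,  # product id
    'score': 0,
    'sortType': 5,
    'page': 1,  # page number n
    'pageSize': 10,
    'isShadowSku': 0,
    'rid': 0,
    'fold': 0
}

for i in range(1, 11):
    params['page'] = i
    resp = requests.get(base_url, headers=headers, params=params)
    resp.raise_for_status()
    # With the callback parameter the response is JSONP (a cross-domain technique),
    # which must be converted to JSON before json.loads() can parse it. Options:
    # 1) strip the fixed-length wrapper with Python string methods;
    # 2) (recommended) extract the JSON from the JSONP with a regular expression;
    # 3) here, removing the callback parameter makes the API return plain JSON directly.
    comment_json = resp.text
    comment_obj = json.loads(comment_json)
    comment_data = comment_obj['comments']
    for comment in comment_data:
        comment_id = comment['id']  # renamed to avoid shadowing the built-in id()
        content = comment['content']
        creation_time = comment['creationTime']
        product_color = comment['productColor']
        print(f'ID: {comment_id}\nColor purchased: {product_color}\nTime: {creation_time}\nComment: {content}')
        print('*' * 100)
    time.sleep(1)  # pause between pages to avoid hammering the server
```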

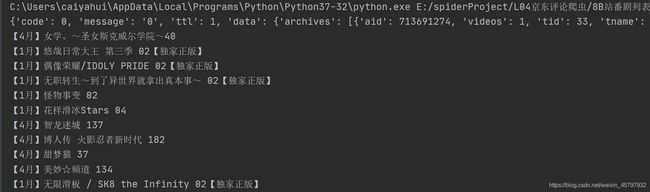
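Option 2 from the comments above, extracting the JSON from a JSONP response with a regular expression, can be sketched as follows. The pattern assumes the usual callbackName( ... ); shape; the callback name fetchJSON_comment98 comes from the commented-out parameter above, while the sample payload is made up. If the text has no wrapper, it is parsed as plain JSON.

```python
import json
import re

# callback name, "(", JSON payload, ")", optional ";"
JSONP_PATTERN = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.S)


def jsonp_to_obj(text):
    """Strip a JSONP wrapper like callback({...}); and parse the JSON inside."""
    match = JSONP_PATTERN.match(text)
    # No wrapper found: assume the text is already plain JSON
    payload = match.group(1) if match else text
    return json.loads(payload)


sample = 'fetchJSON_comment98({"comments": [{"id": 1, "content": "Good"}]});'
print(jsonp_to_obj(sample)["comments"][0]["content"])  # Good
print(jsonp_to_obj('{"comments": []}'))                # plain JSON passes through
```

With the callback parameter re-enabled, comment_obj = jsonp_to_obj(resp.text) would replace the json.loads call in the loop.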

7. Scraping Bilibili anime titles

```python
import json

import requests

base_url = 'https://api.bilibili.com/x/web-interface/newlist'

headers = {
    # Faking the User-Agent is enough for this request, though in earlier tests the Cookie field was also required
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36',
    'Referer': 'https://www.bilibili.com/'  # important
}

params = {
    'rid': 33,
    'type': 0,
    'pn': 1,   # page number
    'ps': 20,  # page size
    'jsonp': 'jsonp',
    # 'callback': 'jsonCallback_bili_310141257890750756'  # omitted so the response is plain JSON
}

for i in range(1, 11):
    params['pn'] = i
    resp = requests.get(base_url, headers=headers, params=params)
    resp.raise_for_status()
    view_obj = json.loads(resp.text)
    # print(view_obj)
    view_data = view_obj['data']
    view_archives = view_data['archives']
    for view in view_archives:
        print(view['title'])
```
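The loop above indexes view_obj['data']['archives'] directly, so a single error response would stop it with a KeyError. A more defensive extraction step could look like the sketch below. It assumes, without having checked every response, that Bilibili reports errors through a non-zero top-level code field; the helper name and the sample pages are made up.

```python
def extract_titles(resp_obj):
    """Pull video titles out of one parsed page of the newlist response.

    Assumes the layout used above: data -> archives -> [{"title": ...}, ...].
    Assumes a non-zero "code" field signals an error; returns [] instead of raising.
    """
    if resp_obj.get("code", 0) != 0:
        return []
    data = resp_obj.get("data") or {}
    archives = data.get("archives") or []
    return [item["title"] for item in archives if "title" in item]


# Made-up sample pages, not real API output
ok_page = {"code": 0, "data": {"archives": [{"title": "Episode 1"}, {"title": "Episode 2"}]}}
error_page = {"code": -400, "message": "request error"}

print(extract_titles(ok_page))     # ['Episode 1', 'Episode 2']
print(extract_titles(error_page))  # []
```

Inside the page loop, titles = extract_titles(view_obj) followed by if not titles: break stops cleanly once a page comes back empty or with an error.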