基于NumPy实现ID3决策树算法

ID3决策树算法

决策树 (decision tree) 是一类常见的机器学习方法,它基于树结构来进行决策,这恰是人类在面临决策问题时一种很自然的处理机制。著名的决策树学习算法包括ID3、C4.5、CART等,ID3决策树以信息增益 (information gain) 为准则来选择划分属性,C4.5决策树以增益率 (gain ratio) 为准则来选择划分属性,而CART决策树使用基尼指数 (Gini index) 来选择划分属性。下面主要介绍ID3决策树算法。

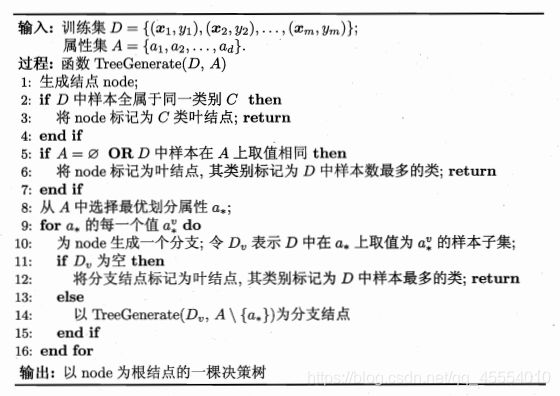

决策树学习的目的是为了产生一棵泛化能力强的决策树,其基本流程遵循“分而治之” (divide-and-conquer) 策略,算法流程如下:

可以看出,决策树学习的关键是如何选择最优划分属性。一般地,随着划分过程不断进行,决策树的分支结点所包含的样本尽可能属于同一类别,即结点的纯度 (purity) 越来越高。信息熵 (information entropy) 是度量样本集合纯度最常用的一种指标。假定当前样本集合 D D D中第 k k k类样本所占的比例为 p k ( k = 1 , 2 , . . . , ∣ Y ∣ ) p_k(k=1,2,...,|\mathcal{Y}|) pk(k=1,2,...,∣Y∣),则 D D D的信息熵定义为 E n t ( D ) = − Σ k = 1 ∣ Y ∣ p k l o g 2 p k . Ent(D)=-\Sigma^{|\mathcal{Y}|}_{k=1}p_klog_2p_k. Ent(D)=−Σk=1∣Y∣pklog2pk.约定:若 p = 0 p=0 p=0,则 p l o g 2 p = 0 plog_2p=0 plog2p=0。 E n t ( D ) Ent(D) Ent(D)的最小值为 0 0 0,最大值为 l o g 2 ∣ Y ∣ log_2|\mathcal{Y}| log2∣Y∣。 E n t ( D ) Ent(D) Ent(D)的值越小,则 D D D的纯度越高。均匀分布的概率分布具有较高的信息熵,接近确定性分布的具有较低的信息熵。

假定离散属性 a a a有 V V V个可能的取值 { a 1 , a 2 , . . . a V } \{a^1,a^2,...a^V\} { a1,a2,...aV},若使用 a a a来对样本集 D D D进行划分,则会产生 V V V个分支结点,其中第 v v v个分支结点包含了 D D D中所有在属性 a a a上取值为 a v a^v av的样本,记为 D v D^v Dv。用属性 a a a对样本集 D D D进行划分所获得的信息增益为 G a i n ( D , a ) = E n t ( D ) − Σ v = 1 V ∣ D v ∣ ∣ D ∣ E n t ( D v ) . Gain(D,a)=Ent(D)-\Sigma^{V}_{v=1}\frac{|D^v|}{|D|}Ent(D^v). Gain(D,a)=Ent(D)−Σv=1V∣D∣∣Dv∣Ent(Dv).一般地,信息增益越大,则意味着使用属性 a a a来进行划分所获得的纯度提升越大。ID3算法就是以信息增益为准则来选择划分属性。

ID3算法的实现

young myope no reduced no lenses

young myope no normal soft

young myope yes reduced no lenses

young myope yes normal hard

young hyper no reduced no lenses

young hyper no normal soft

young hyper yes reduced no lenses

young hyper yes normal hard

pre myope no reduced no lenses

pre myope no normal soft

pre myope yes reduced no lenses

pre myope yes normal hard

pre hyper no reduced no lenses

pre hyper no normal soft

pre hyper yes reduced no lenses

pre hyper yes normal no lenses

presbyopic myope no reduced no lenses

presbyopic myope no normal no lenses

presbyopic myope yes reduced no lenses

presbyopic myope yes normal hard

presbyopic hyper no reduced no lenses

presbyopic hyper no normal soft

presbyopic hyper yes reduced no lenses

presbyopic hyper yes normal no lenses

MyDecisionTree_ID3.py

"""

MyDecisionTree.py - 基于NumPy实现ID3决策树算法

ID3决策树学习算法以信息增益为准则来选择划分属性。

已知一个随机变量的信息后使得另一个随机变量的不确定性减小的程度叫做信息增益,

即信息增益越大,使用该属性来进行划分所获得的纯度提升越大。

"""

from collections import namedtuple

import operator

import uuid

import numpy as np

class ID3DecisionTree():

def __init__(self):

self.tree = {

}

self.dataset = []

self.labels = []

def InformationEntropy(self, dataset):

"""

计算信息熵

:param dataset: 样本集合

:return: 信息熵

"""

labelCounts = {

}

for featureVector in dataset:

currentLabel = featureVector[-1] # 从数据集中得到类别标签

if currentLabel not in labelCounts.keys():

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

ent = 0.0

for key in labelCounts:

prob = float(labelCounts[key]) / len(dataset)

ent -= prob * np.log2(prob)

return ent

def splitDataset(self, dataset, axis, value):

"""

按照给定特征划分数据集

:param dataset: 待划分的数据集

:param axis: 划分数据集的特征

:param value: 特征的返回值

:return: 划分后的数据集

"""

retDataset = []

for featureVector in dataset:

if featureVector[axis] == value:

reducedFeatureVector = featureVector[:axis]

reducedFeatureVector.extend(featureVector[axis + 1:])

retDataset.append(reducedFeatureVector)

return retDataset

def chooseBestFeatureToSplit(self, dataset):

"""

选择最好的数据集划分方式

:param dataset: 待划分的数据集

:return: 最好的划分数据集的特征

"""

numFeatures = len(dataset[0]) - 1 # 计算特征向量维数,其中最后一列用于类别标签,因此要减去

baseEntropy = self.InformationEntropy(dataset)

bestInfoGain = 0.0

bestFeature = -1

for i in range(numFeatures): # 遍历数据集各列,计算最优特征轴

featureList = [example[i] for example in dataset]

uniqueValues = set(featureList)

newEntropy = 0.0

for value in uniqueValues: # 按列和唯一值计算信息熵

subDataset = self.splitDataset(dataset, i, value) # 按指定列i和唯一值分隔数据集

prob = len(subDataset) / float(len(dataset))

newEntropy += prob * self.InformationEntropy(subDataset)

infoGain = baseEntropy - newEntropy

if infoGain > bestInfoGain:

bestInfoGain = infoGain # 用当前信息增益值替代之前的最优增益值

bestFeature = i # 重置最优特征为当前列

return bestFeature

def majorityCount(self, classList):

"""

如果数据集已经处理了所有属性但类标签仍不唯一,采用多数表决的方法决定叶子结点的分类

:param classList: 分类列表

:return: 叶子结点的分类

"""

classCount = {

}

for vote in classList:

if vote not in classCount.keys():

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.iteritems(), key=operator.itemgetter(1), reverse=True)

return sortedClassCount[0][0]

def createTree(self, dataset, labels):

"""

构造ID3决策树

:param dataset: 数据集

:param labels: 特征

:return: 字典形式的ID3决策树

"""

classList = [example[-1] for example in dataset]

# 递归返回情形1,classList只有一种决策标签,停止划分,返回这个决策标签

if classList.count(classList[0]) == len(classList):

return classList[0]

# 递归返回情形2,数据集的第一个决策标签只有一个,停止划分,返回这个决策标签

if len(dataset[0]) == 1:

return self.majorityCount(classList)

bestFeature = self.chooseBestFeatureToSplit(dataset)

bestFeatureLabel = labels[bestFeature]

tree = {

bestFeatureLabel: {

}}

del labels[bestFeature]

featureValues = [example[bestFeature] for example in dataset]

uniqueValues = set(featureValues)

# 决策树递归生长,将删除后的特征类别集建立子类别集,按最优特征列和值划分数据集,构建子树

for value in uniqueValues:

subLabels = labels[:]

tree[bestFeatureLabel][value] = self.createTree(self.splitDataset(dataset, bestFeature, value), subLabels)

return tree

def getNodesAndEdges(self, tree=None, root_node=None):

"""

递归获取树的结点和边

:param tree: 要进行可视化的决策树

:param root_node: 树的根结点

:return: 树的结点集和边集

"""

Node = namedtuple('Node', ['id', 'label'])

Edge = namedtuple('Edge', ['start', 'end', 'label'])

if tree is None:

tree = self.tree

if type(tree) is not dict:

return [], []

nodes, edges = [], []

if root_node is None:

label = list(tree.keys())[0]

root_node = Node._make([uuid.uuid4(), label])

nodes.append(root_node)

for edge_label, sub_tree in tree[root_node.label].items():

node_label = list(sub_tree.keys())[0] if type(sub_tree) is dict else sub_tree

sub_node = Node._make([uuid.uuid4(), node_label])

nodes.append(sub_node)

edge = Edge._make([root_node, sub_node, edge_label])

edges.append(edge)

sub_nodes, sub_edges = self.getNodesAndEdges(sub_tree, root_node=sub_node)

nodes.extend(sub_nodes)

edges.extend(sub_edges)

return nodes, edges

def getDotFileContent(self, tree):

"""

生成*.dot文件,以便可视化决策树

:param tree: 待可视化的决策树

:return: *.dot文件的文本

"""

content = 'digraph decision_tree {\n'

nodes, edges = self.getNodesAndEdges(tree)

for node in nodes:

content += ' "{}" [label="{}"];\n'.format(node.id, node.label)

for edge in edges:

start, label, end = edge.start, edge.label, edge.end

content += ' "{}" -> "{}" [label="{}"];\n'.format(start.id, end.id, label)

content += '}'

return content

决策树可视化部分参考链接:决策树的构建设计并用Graphviz实现决策树的可视化

ID3Demo.py

from MyDecisionTree_ID3 import *

# 加载数据集

fr = open('./lenses.txt')

lenses = [inst.strip().split('\t') for inst in fr.readlines()]

fr.close()

# 生成ID3决策树

dt = ID3DecisionTree() # 实例化ID3DecisionTree对象

lensesLabels = ['age', 'prescript', 'astigmatic', 'tearRate']

lensesTree = dt.createTree(lenses, lensesLabels)

print(lensesTree) # 以字典形式打印决策树

# 生成*.dot文件,实现决策树可视化

with open('./lenses.dot', 'w') as f:

dot = dt.getDotFileContent(lensesTree)

f.write(dot)

运行ID3Demo.py得到的字典形式的决策树

{'tearRate': {'reduced': 'no lenses', 'normal': {'astigmatic': {'yes': {'prescript': {'hyper': {'age': {'presbyopic': 'no lenses', 'pre': 'no lenses', 'young': 'hard'}}, 'myope': 'hard'}}, 'no': {'age': {'presbyopic': {'prescript': {'hyper': 'soft', 'myope': 'no lenses'}}, 'pre': 'soft', 'young': 'soft'}}}}}}

运行ID3Demo.py后产生lenses.dot

digraph decision_tree {

"26716d35-5dd0-4480-86e1-2e8cef0dd410" [label="tearRate"];

"3ad6100c-c014-41bf-bacd-b0b28ebda080" [label="no lenses"];

"7e784d36-e580-4b1c-abbc-9f3653b70264" [label="astigmatic"];

"0c5d1e53-6443-48b1-91ed-dfa20528c509" [label="prescript"];

"e6947147-dfac-42bc-a01f-ef97ef917aae" [label="age"];

"c51b8cbf-4182-41cf-aa3d-d0ae7116f107" [label="hard"];

"11ac6e3e-738a-46fc-b1b0-29bd0ae22d96" [label="no lenses"];

"c1a13702-804f-40be-92f1-5116e041c1e4" [label="no lenses"];

"b737ac17-92c9-40ab-a3af-74fb78d591ae" [label="hard"];

"a40fd94c-81a9-4ba2-98c5-eea817a313fe" [label="age"];

"8cd5e511-f598-4d79-9077-0199a559c94e" [label="soft"];

"6a4e5934-4c70-48e6-be01-7fb21e6c47ec" [label="soft"];

"51c8fa4c-48bb-4d12-b761-9afb4787b4b8" [label="prescript"];

"51049906-8158-4b73-9dc5-055c3d097d99" [label="soft"];

"4c7d93fe-ae8a-4caf-bf36-097ef5f8464a" [label="no lenses"];

"26716d35-5dd0-4480-86e1-2e8cef0dd410" -> "3ad6100c-c014-41bf-bacd-b0b28ebda080" [label="reduced"];

"26716d35-5dd0-4480-86e1-2e8cef0dd410" -> "7e784d36-e580-4b1c-abbc-9f3653b70264" [label="normal"];

"7e784d36-e580-4b1c-abbc-9f3653b70264" -> "0c5d1e53-6443-48b1-91ed-dfa20528c509" [label="yes"];

"0c5d1e53-6443-48b1-91ed-dfa20528c509" -> "e6947147-dfac-42bc-a01f-ef97ef917aae" [label="hyper"];

"e6947147-dfac-42bc-a01f-ef97ef917aae" -> "c51b8cbf-4182-41cf-aa3d-d0ae7116f107" [label="young"];

"e6947147-dfac-42bc-a01f-ef97ef917aae" -> "11ac6e3e-738a-46fc-b1b0-29bd0ae22d96" [label="pre"];

"e6947147-dfac-42bc-a01f-ef97ef917aae" -> "c1a13702-804f-40be-92f1-5116e041c1e4" [label="presbyopic"];

"0c5d1e53-6443-48b1-91ed-dfa20528c509" -> "b737ac17-92c9-40ab-a3af-74fb78d591ae" [label="myope"];

"7e784d36-e580-4b1c-abbc-9f3653b70264" -> "a40fd94c-81a9-4ba2-98c5-eea817a313fe" [label="no"];

"a40fd94c-81a9-4ba2-98c5-eea817a313fe" -> "8cd5e511-f598-4d79-9077-0199a559c94e" [label="young"];

"a40fd94c-81a9-4ba2-98c5-eea817a313fe" -> "6a4e5934-4c70-48e6-be01-7fb21e6c47ec" [label="pre"];

"a40fd94c-81a9-4ba2-98c5-eea817a313fe" -> "51c8fa4c-48bb-4d12-b761-9afb4787b4b8" [label="presbyopic"];

"51c8fa4c-48bb-4d12-b761-9afb4787b4b8" -> "51049906-8158-4b73-9dc5-055c3d097d99" [label="hyper"];

"51c8fa4c-48bb-4d12-b761-9afb4787b4b8" -> "4c7d93fe-ae8a-4caf-bf36-097ef5f8464a" [label="myope"];

}

安装graphviz并且配置好环境变量后,打开cmd,输入命令dot -Tpdf lenses.dot -o lenses.pdf或dot -Tpng lenses.dot -o lenses.png将lenses.dot文件转换为pdf文件或png文件