Mask R-CNN training on a custom dataset: log and notes (Win7 64-bit, TensorFlow 1.10, anaconda3.5.10, CUDA 9.0)

The May Day holiday finally gave me some time, so I took out the mrcnn (Mask R-CNN) setup I built a while ago and trained it.

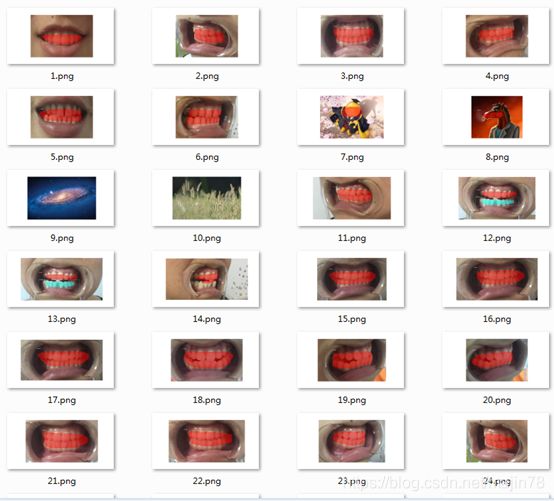

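Dataset: a handful of small images originally meant for testing, just to build a demo. I won't train on this dataset later; it is only used to get the code running end to end.

Trained as a two-class problem, the results are genuinely good. With 3 targets there were some false detections. This is only an early test, so treat it as a reference when deciding whether to get into this.

Setting up the framework still takes time. If you don't care about the process, the test result images are at the end for reference.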

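Preliminaries

Setting up the training environment

Environment as in the title: Win7 64-bit, TensorFlow 1.10, anaconda3.5.10, CUDA 9.0.

The GPU is the GTX 960M in a laptop. It nominally has 2 GB of memory, but in practice about 1.6 to 1.92 GB is usable.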

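```
nvcc -V
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2017 NVIDIA Corporation
Built on Fri_Sep__1_21:08:32_Central_Daylight_Time_2017
Cuda compilation tools, release 9.0, V9.0.176
```

For the mrcnn environment I followed https://github.com/matterport/Mask_RCNN. I had already installed most of the environment earlier, so I won't go through every step. Download the repository and its samples: if balloon.py runs successfully, the environment is ready.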

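Dataset annotation

I annotate the images with labelme. If an image contains several objects of the same class, I give them the same label, because I don't want to distinguish between them. Early on I annotated with several classes, and taking just a single class at training time later does not cause errors. If instances do need to be distinguished, label them label_1, label_2: for example, two kittens become cat_1 and cat_2. Here each image has only one target. I first test recognizing that one target, and once training works, I adjust, add classes and retrain.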
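If instances are labeled cat_1, cat_2 and so on, the loader has to map them back to one class name. The following is a minimal sketch, assuming the <class>_<number> naming convention described above. base_class is a hypothetical helper for illustration and is not part of labelme or Mask R-CNN. The training script further down uses a simpler substring test (labels[i].find("t") != -1) instead.

```python
import re


def base_class(label):
    """Map an instance label such as 'cat_1' back to its class name 'cat'.

    Labels without a trailing _<number> suffix are returned unchanged.
    Hypothetical helper for illustration; not part of labelme or Mask R-CNN.
    """
    match = re.fullmatch(r"(.+)_(\d+)", label)
    return match.group(1) if match else label


labels = ["cat_1", "cat_2", "dog"]
print([base_class(name) for name in labels])  # ['cat', 'cat', 'dog']
```

The regex only strips a purely numeric suffix, so labelme's own "_background_" entry is left untouched.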

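This step is critical. Looking back, I first picked just 15 images for training. Only when I started training did I discover that mrcnn has requirements on the image format, so the time spent annotating those 15 images was wasted. In the second round I trained with only 5 images, tuning parameters and hunting for problems. That was another loop that nearly drove me mad. After four or five rounds of this back and forth, these were the main problems I hit: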

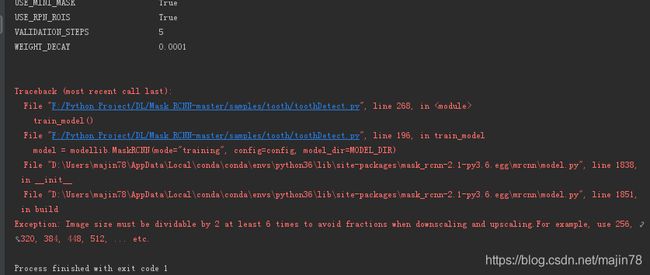

Error 1: Image size must be dividable by 2

Exception: Image size must be dividable by 2 at least 6 times to avoid fractions when downscaling and upscaling.For example, use 256, 320, 384, 448, 512, ... etc.

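Here h and w must be divisible by 2 at least six times, i.e. they must be multiples of 2^6 = 64. They do not have to be powers of 2; 320 and 384 in the message above are not.

So I simply resized the images to 896×1408, since both sides are multiples of 64. Early on, the error came mainly from images that were too small, so their sides were not long enough. Later I did not scale up to the maximum resolution, mainly because the GPU ran out of memory.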
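The exception comes from a check that each image side can be halved six times without a remainder. That is the same as requiring a multiple of 64: for example, 896 = 14 × 64 and 1408 = 22 × 64. The sketch below mirrors that condition so sizes can be checked before training. The function names are my own and are not taken from the Mask R-CNN code.

```python
def is_valid_dim(n, times=6):
    """True if n can be halved `times` times without a remainder (n % 2**times == 0)."""
    return n % (2 ** times) == 0


def round_to_multiple_of_64(n):
    """Round n down to the nearest multiple of 64 (but never below 64)."""
    return max(64, (n // 64) * 64)


for dim in (256, 320, 896, 1408, 900):
    print(dim, is_valid_dim(dim), round_to_multiple_of_64(dim))
```

Rounding down rather than up keeps the image, and therefore GPU memory use, from growing.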

Error 2: Error when checking input

ValueError: Error when checking input: expected input_image_meta to have shape (16,) but got array with shape (14,)

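When training Mask-RCNN on your own dataset, you need to specify the image height and width!

For the early training runs the images need to be normalized to one size first. After some trial and error I settled on roughly 896×1408. Training at that size takes a bit longer, about half an hour.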
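The numbers in this error can also be traced with some arithmetic. The matterport code builds image_meta in compose_image_meta(). Based on that layout, the vector holds:

- the image ID (1 value)
- the original image shape (3)
- the resized image shape (3)
- the window (4)
- the scale (1)
- one active-class flag per class

Its length is therefore 12 + number of classes. Expected (16,) but got (14,) would mean the model was built with NUM_CLASSES = 4, while the dataset registered only 2 classes (background + 1). So besides the image size, it is worth checking that NUM_CLASSES matches the add_class calls. The helper below is my own illustration of that count, not a library function.

```python
def image_meta_length(num_classes):
    """Length of image_meta as laid out by matterport's compose_image_meta():
    image_id (1) + original image shape (3) + resized image shape (3)
    + window (4) + scale (1) + active_class_ids (num_classes)."""
    return 1 + 3 + 3 + 4 + 1 + num_classes


print(image_meta_length(4))  # 16: what a NUM_CLASSES = 1 + 3 config expects
print(image_meta_length(2))  # 14: background + 1 class
```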

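Here is a way to train with a specified width and height.

Images have to be processed to the specified aspect ratio before they can be used for training, and every image in the training set must have the same height and width.

If the training images differ in height or width, training fails, which is very inconvenient.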

https://blog.csdn.net/yql_617540298/article/details/81782685
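When images are resized to a fixed size such as 896×1408, the label masks must be resized with nearest-neighbour interpolation. Bilinear interpolation would blend label values, for example turning a 0/2 boundary into spurious 1s. The photos themselves can use any interpolation. With Pillow, Image.resize(size, Image.NEAREST) does this for the masks. The function below is a NumPy-only sketch of the same idea; resize_nearest is a hypothetical name, not the method from the linked post.

```python
import numpy as np


def resize_nearest(label, out_h, out_w):
    """Nearest-neighbour resize of a 2-D label array.

    Every output pixel copies exactly one input pixel, so label values
    (0 = background, 1, 2, ...) are never blended into new values.
    """
    in_h, in_w = label.shape
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return label[rows[:, None], cols[None, :]]


label = np.array([[0, 1],
                  [2, 0]], dtype=np.uint8)
big = resize_nearest(label, 4, 4)
print(big)
```

Resizing an image and its mask by the same factor keeps them aligned pixel for pixel.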

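Data processing

1) JSON conversion

labelme saves every annotation as an xxx.json file, which has to be converted with labelme_json_to_dataset.exe. The resulting label images are then converted to 8-bit, which makes sense because cv2 works with 8-bit images.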
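For many JSON files, the conversion can also be scripted from Python. This sketch does the same job as a cmd for /r loop, assuming the labelme_json_to_dataset command from labelme is on PATH. find_json_files and convert_all are hypothetical helpers. The sketch defaults to a dry run that only prints the commands.

```python
import os
import subprocess
import tempfile


def find_json_files(root):
    """Recursively collect *.json files under root, like `for /r %i in (*.json)`."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(".json"):
                found.append(os.path.join(dirpath, name))
    return sorted(found)


def convert_all(root, dry_run=True):
    """Build (and optionally run) one labelme_json_to_dataset call per json file.

    Each command is an argument list, so paths containing spaces need no quoting.
    """
    commands = [["labelme_json_to_dataset", path] for path in find_json_files(root)]
    for cmd in commands:
        if dry_run:
            print(cmd)
        else:
            subprocess.run(cmd, check=True)  # stop on the first failed conversion
    return commands


# Dry-run demo on a throwaway folder whose name contains a space
with tempfile.TemporaryDirectory() as tmp:
    root = os.path.join(tmp, "tooth data")
    os.makedirs(os.path.join(root, "sub"))
    for rel in ("a.json", os.path.join("sub", "b.json"), "c.jpg"):
        open(os.path.join(root, rel), "w").close()
    demo_commands = convert_all(root)
```

Passing a list to subprocess.run sidesteps the quoting problem with spaces entirely, because no shell parses the path.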

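After the conversion, the label images look completely black when opened. If you want to see the labels, change "img = Image.fromarray(np.uint8(np.array(img)))" to "img = Image.fromarray(np.uint8(np.array(img)) * 20)". That output does not meet Mask R-CNN's requirements, though, so only use it to take a look. For the actual run the values must not be multiplied! (Quoted from another post.)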
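The label image looks black because its pixel values are the label indices 0, 1, 2, ... on a 0 to 255 scale. Multiplying by 20 just stretches them into a visible range. A small caveat: in np.uint8(...) * 20 the product stays uint8, so any label of 13 or more wraps around. The sketch below widens the dtype and clips instead. preview_labels is a hypothetical helper, for viewing only.

```python
import numpy as np


def preview_labels(label, factor=20):
    """Scale label values so they are visible when viewed as an image.

    For viewing only: the mask used for training must keep the raw values.
    Computed in a wider dtype and clipped, so large values do not wrap around.
    """
    scaled = label.astype(np.int32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


label = np.array([[0, 1, 2, 3]], dtype=np.uint8)
preview = preview_labels(label)
print(preview)
```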

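To keep spaces in folder names from breaking argument parsing, wrap %i in straight double quotes (this form is for the cmd prompt; inside a .bat file write %%i instead):

```
for /r %i in (*.json) do labelme_json_to_dataset.exe "%i"
```

2) Data storage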

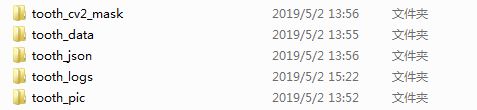

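data holds the raw data. Everything the JSON conversion produces ends up in the current folder and has to be moved into the corresponding folders for training.

The key folders are pic (the original images), mask (the converted masks, which look like black templates) and json (the folders produced by the conversion).

I wrote a script to handle this: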

```python
import os
import shutil

import numpy as np
from PIL import Image

src_dir = r'F:\Python Project\DL\Mask_RCNN-master\tooth_data'
mask_dest_dir = r'F:\Python Project\DL\Mask_RCNN-master\tooth_cv2_mask'
json_dest_dir = r'F:\Python Project\DL\Mask_RCNN-master\tooth_json'
pic_dest_dir = r'F:\Python Project\DL\Mask_RCNN-master\tooth_pic'


def img_16to8():
    """Convert each xxx_json/label.png to 8-bit and save it as tooth_cv2_mask/xxx.png."""
    os.makedirs(mask_dest_dir, exist_ok=True)
    for child_dir in os.listdir(src_dir):
        if child_dir.endswith('_json'):
            print("child_dir:", child_dir)
            new_name = child_dir.split('_json')[0] + '.png'
            old_mask = os.path.join(src_dir, child_dir, 'label.png')
            img = Image.open(old_mask)
            # Keep the raw label values (0 = background, 1, 2, ...); do not scale them
            img = Image.fromarray(np.uint8(np.array(img)))
            img.save(os.path.join(mask_dest_dir, new_name))


def copy8bit_to_pic():
    """Copy the original xxx.jpg belonging to every xxx.json into tooth_pic."""
    os.makedirs(pic_dest_dir, exist_ok=True)
    for child_dir in os.listdir(src_dir):
        if child_dir.endswith('.json'):
            new_name = child_dir.split('.json')[0] + '.jpg'
            img = Image.open(os.path.join(src_dir, new_name))
            new_pic = os.path.join(pic_dest_dir, new_name)
            print("copy pic:", new_pic)
            img.save(new_pic)


def copy_json_to_json():
    """Copy every xxx_json folder produced by labelme_json_to_dataset into tooth_json."""
    os.makedirs(json_dest_dir, exist_ok=True)
    for child_dir in os.listdir(src_dir):
        if child_dir.endswith('_json'):
            old_json = os.path.join(src_dir, child_dir)
            new_json = os.path.join(json_dest_dir, child_dir)
            print("copy json:", new_json)
            # copytree raises an error if new_json already exists (e.g. on a re-run)
            shutil.copytree(old_json, new_json)


if __name__ == "__main__":
    img_16to8()
    copy8bit_to_pic()
    copy_json_to_json()
```
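Before training, it can save a failed run to confirm that every image has a matching mask and labelme folder. The sketch below assumes the layout the script above produces: pic/xxx.jpg, mask/xxx.png and json/xxx_json/info.yaml. check_dataset is a hypothetical helper, not part of the original workflow.

```python
import os
import tempfile


def check_dataset(pic_dir, mask_dir, json_dir):
    """Return (stem, problem) pairs for images whose mask or info.yaml is missing."""
    problems = []
    for name in sorted(os.listdir(pic_dir)):
        stem = os.path.splitext(name)[0]
        if not os.path.isfile(os.path.join(mask_dir, stem + ".png")):
            problems.append((stem, "missing mask"))
        if not os.path.isfile(os.path.join(json_dir, stem + "_json", "info.yaml")):
            problems.append((stem, "missing info.yaml"))
    return problems


# Demo: image "a" is complete, image "b" has neither mask nor labelme folder
with tempfile.TemporaryDirectory() as root:
    pic, mask, js = (os.path.join(root, d) for d in ("pic", "mask", "json"))
    for d in (pic, mask, os.path.join(js, "a_json")):
        os.makedirs(d, exist_ok=True)
    for path in (os.path.join(pic, "a.jpg"), os.path.join(pic, "b.jpg"),
                 os.path.join(mask, "a.png"), os.path.join(js, "a_json", "info.yaml")):
        open(path, "w").close()
    report = check_dataset(pic, mask, js)
    print(report)
```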

Once preprocessing is done, move on to training.

Training

The code is adapted from https://blog.csdn.net/qq_36810544/article/details/83582397

```python
#!/usr/bin/env python
# coding: utf-8
import os
import sys
import random
import math
import re
import time

import numpy as np
import cv2
import matplotlib
import matplotlib.pyplot as plt
import yaml
from PIL import Image

# Root directory of the project
ROOT_DIR = os.path.abspath("../../")
sys.path.append(ROOT_DIR)  # To find local version of the library

from mrcnn.config import Config
from mrcnn import utils
from mrcnn import model as modellib

# Directory to save logs and trained models
MODEL_DIR = os.path.join(ROOT_DIR, "tooth_logs")

iter_num = 0

# Local path to trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")
# Download COCO trained weights from Releases if needed
if not os.path.exists(COCO_MODEL_PATH):
    print("check ROOT_DIR:", ROOT_DIR)
    utils.download_trained_weights(COCO_MODEL_PATH)


class ShapesConfig(Config):
    """Configuration for training on the tooth dataset.
    Derives from the base Config class and overrides values specific
    to this dataset.
    """
    # Give the configuration a recognizable name
    NAME = "shapes"

    # Train on 1 GPU with 1 image per GPU, so batch size is 1 (GPUs * images/GPU).
    # This keeps memory use within the ~2 GB of the GTX 960M.
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

    # Backbone network architecture
    # Supported values are: resnet50, resnet101.
    # You can also provide a callable that should have the signature
    # of model.resnet_graph. If you do so, you need to supply a callable
    # to COMPUTE_BACKBONE_SHAPE as well
    BACKBONE = "resnet50"

    # Number of classes (including background): background + 3 classes (t, t_1, t_2).
    # When adding classes, also update add_class() in load_shapes()
    # and class_names in predict().
    NUM_CLASSES = 1 + 3

    # Use small images for faster training. Set the limits of the small side
    # the large side, and that determines the image shape.
    IMAGE_MIN_DIM = 128
    IMAGE_MAX_DIM = 128

    # Anchor side lengths in pixels (6x the values used in the shapes sample)
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)

    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 20

    # Use a small epoch since the data is simple (GPU usage was too high otherwise)
    STEPS_PER_EPOCH = 10

    # Use small validation steps since the epoch is small (GPU usage was too high otherwise)
    VALIDATION_STEPS = 5


class DrugDataset(utils.Dataset):
    # Number of instances in the image: the highest label value in label.png
    def get_obj_index(self, image):
        n = np.max(image)
        return n

    # Parse the info.yaml produced by labelme to get the label name of each mask layer
    def from_yaml_get_class(self, image_id):
        info = self.image_info[image_id]
        with open(info['yaml_path']) as f:
            # safe_load: plain yaml.load() without a Loader fails on PyYAML >= 6
            temp = yaml.safe_load(f.read())
            labels = temp['label_names']
            del labels[0]  # drop "_background_"
        return labels

    # Re-implemented draw_mask: layer `index` is 1 wherever the label image equals index + 1
    def draw_mask(self, num_obj, mask, image, image_id):
        info = self.image_info[image_id]
        for index in range(num_obj):
            for i in range(info['width']):
                for j in range(info['height']):
                    at_pixel = image.getpixel((i, j))
                    if at_pixel == index + 1:
                        mask[j, i, index] = 1
        return mask

    # Re-implemented load_shapes: registers our own classes and adds
    # path, mask_path and yaml_path to self.image_info
    def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):
        """Register `count` images with their masks and labelme info.yaml files."""
        # Add classes
        self.add_class("shapes", 1, "t")  # t, t_1, t_2
        self.add_class("shapes", 2, "t_1")
        self.add_class("shapes", 3, "t_2")
        for i in range(count):
            # File stem; the image width and height are read from img.png below
            filestr = os.path.splitext(imglist[i])[0]
            mask_path = os.path.join(mask_floder, filestr + ".png")
            json_dir = os.path.join(dataset_root_path, "tooth_json", filestr + "_json")
            yaml_path = os.path.join(json_dir, "info.yaml")
            img_path = os.path.join(json_dir, "img.png")
            print(img_path)
            cv_img = cv2.imread(img_path)
            self.add_image("shapes", image_id=i, path=os.path.join(img_floder, imglist[i]),
                           width=cv_img.shape[1], height=cv_img.shape[0],
                           mask_path=mask_path, yaml_path=yaml_path)

    # Re-implemented load_mask
    def load_mask(self, image_id):
        """Generate instance masks and class IDs for the given image ID."""
        global iter_num
        print("image_id", image_id)
        info = self.image_info[image_id]
        count = 1  # number of objects
        img = Image.open(info['mask_path'])
        num_obj = self.get_obj_index(img)
        mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
        mask = self.draw_mask(num_obj, mask, img, image_id)
        # Occlusion handling kept from the shapes sample; with count = 1 the loop does not run
        occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
        for i in range(count - 2, -1, -1):
            mask[:, :, i] = mask[:, :, i] * occlusion
            occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
        labels = self.from_yaml_get_class(image_id)
        labels_form = []
        for i in range(len(labels)):
            # Every label containing "t" (t, t_1, t_2) is mapped to class "t"
            if labels[i].find("t") != -1:
                labels_form.append("t")
        class_ids = np.array([self.class_names.index(s) for s in labels_form])
        return mask, class_ids.astype(np.int32)


def get_ax(rows=1, cols=1, size=8):
    """Return a Matplotlib Axes array to be used in
    all visualizations in the notebook. Provide a
    central point to control graph sizes.

    Change the default size attribute to control the size
    of rendered images
    """
    _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
    return ax


def train_model():
    # Basic settings
    dataset_root_path = r"F:\Python Project\DL\Mask_RCNN-master"
    img_floder = os.path.join(dataset_root_path, "tooth_pic")
    mask_floder = os.path.join(dataset_root_path, "tooth_cv2_mask")
    imglist = os.listdir(img_floder)
    count = len(imglist)

    # Prepare the train and val datasets (both use the same images here)
    dataset_train = DrugDataset()
    dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
    dataset_train.prepare()

    dataset_val = DrugDataset()
    dataset_val.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
    dataset_val.prepare()

    # Create model in training mode
    config = ShapesConfig()
    config.display()
    model = modellib.MaskRCNN(mode="training", config=config, model_dir=MODEL_DIR)

    # Which weights to start with? Use "coco" for the first run; once a
    # trained model exists, switch to "last" to continue from it.
    init_with = "coco"  # imagenet, coco, or last
    if init_with == "imagenet":
        model.load_weights(model.get_imagenet_weights(), by_name=True)
    elif init_with == "coco":
        # Load weights trained on MS COCO, but skip layers that
        # are different due to the different number of classes
        # See README for instructions to download the COCO weights
        model.load_weights(COCO_MODEL_PATH, by_name=True,
                           exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                    "mrcnn_bbox", "mrcnn_mask"])
    elif init_with == "last":
        # Load the last model you trained and continue training
        checkpoint_file = model.find_last()
        model.load_weights(checkpoint_file, by_name=True)

    # Train the head branches
    # Passing layers="heads" freezes all layers except the head
    # layers. You can also pass a regular expression to select
    # which layers to train by name pattern.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE,
                epochs=10,
                layers='heads')

    # Fine tune all layers
    # Passing layers="all" trains all layers. You can also
    # pass a regular expression to select which layers to
    # train by name pattern.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE / 10,
                epochs=30,
                layers="all")


class TongueConfig(ShapesConfig):
    # Run inference on one image at a time
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1


def predict():
    import skimage.io
    from mrcnn import visualize

    # Create model in inference mode
    config = TongueConfig()
    config.display()
    model = modellib.MaskRCNN(mode="inference", config=config, model_dir=MODEL_DIR)
    model_path = model.find_last()

    # Load trained weights
    assert model_path != "", "Provide path to trained weights"
    print("Loading weights from ", model_path)
    model.load_weights(model_path, by_name=True)

    # Same classes, in the same order, as the add_class() calls in load_shapes()
    class_names = ['BG', 't', 't_1', 't_2']
    # class_names = ['BG', 't']
    pre_dir = r'F:\Python Project\DL\Mask_RCNN-master\samples\tooth'
    for child_dir in os.listdir(pre_dir):
        if child_dir.lower().endswith('.jpg'):
            file_name = os.path.join(pre_dir, child_dir)
            image = skimage.io.imread(file_name)
            # Run detection
            results = model.detect([image], verbose=1)
            # Visualize results
            r = results[0]
            visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                                        class_names, r['scores'])
            print(file_name, ':', r['scores'])


if __name__ == "__main__":
    train_model()
    predict()
```
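In the script above, draw_mask calls getpixel once per pixel and per object. At 896×1408 that is about 1.26 million calls per object per image, which is slow. The sketch below computes the same (H, W, N) mask stack with one NumPy comparison and checks it against a loop that mirrors draw_mask. It works on np.array(img) rather than the PIL image, and it assumes the mask image has the same height and width recorded in image_info. label_image_to_masks and draw_mask_loop are hypothetical names, and the sketch has not been tested inside the mrcnn training loop.

```python
import numpy as np


def label_image_to_masks(label):
    """Vectorised equivalent of draw_mask(): layer k is 1 where label == k + 1.

    label: 2-D array of label values from label.png (0 = background).
    Returns an (H, W, N) uint8 array with N = label.max(), as load_mask() builds.
    """
    num_obj = int(label.max())
    values = np.arange(1, num_obj + 1)  # 1, 2, ..., N
    return (label[:, :, None] == values[None, None, :]).astype(np.uint8)


def draw_mask_loop(label):
    """Slow per-pixel version mirroring the loops in draw_mask(), for comparison."""
    h, w = label.shape
    num_obj = int(label.max())
    mask = np.zeros((h, w, num_obj), dtype=np.uint8)
    for index in range(num_obj):
        for i in range(w):
            for j in range(h):
                if label[j, i] == index + 1:
                    mask[j, i, index] = 1
    return mask


label = np.array([[0, 1, 1],
                  [2, 2, 0]], dtype=np.uint8)
fast = label_image_to_masks(label)
print(fast.shape)  # (2, 3, 2)
print(np.array_equal(fast, draw_mask_loop(label)))  # True
```

Under that assumption, load_mask could build the stack with mask = label_image_to_masks(np.array(img)) instead of calling draw_mask.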

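The main problem I ran into was insufficient GPU memory.

Error 3: GPU out of memory (OOM)

Parameter tweak 1

```python
IMAGE_MIN_DIM = 128
IMAGE_MAX_DIM = 128
```

Choose values divisible by 64, and keep them as small as possible so the GPU does not run out of memory.

Parameter tweak 2

BACKBONE = "resnet50": this is the backbone network used for transfer learning, either resnet101 or resnet50. If your computer is not very powerful, resnet50 is recommended: the network is smaller and trains faster.

Suggested changes: https://github.com/matterport/Mask_RCNN/wiki

The default is resnet101, and the backbone choice has a big impact. These are the parameters I finally settled on: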

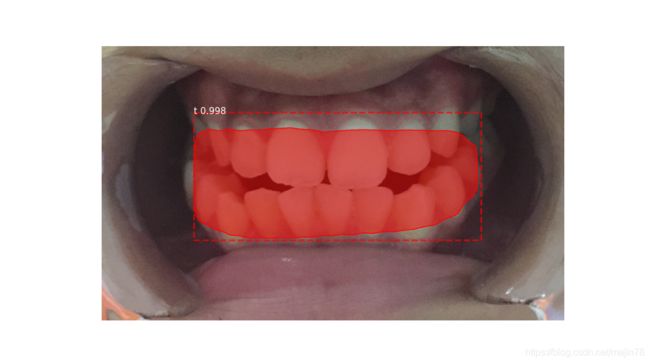

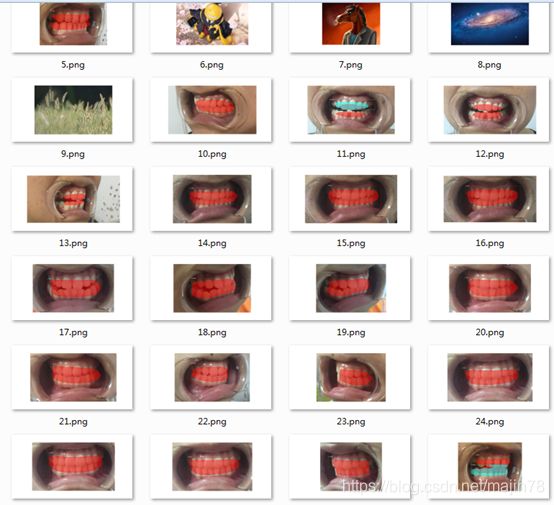

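Test results

After switching the training model, training completed in about half an hour. The first round of training is shown below; as long as there are no errors, you're fine. Testing midway with a not-yet-fully-trained checkpoint still produces a result image, which is quite handy.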

```
mrcnn_class_loss: 0.0385 - mrcnn_bbox_loss: 0.0051 - mrcnn_mask_loss: 0.1063
image_id 0
image_id 1
image_id 2
image_id 1
image_id 0
image_id 1
10/10 [==============================] - 7s 725ms/step - loss: 0.1597 - rpn_class_loss: 0.0019 - rpn_bbox_loss: 0.0033 - mrcnn_class_loss: 0.0368 - mrcnn_bbox_loss: 0.0055 - mrcnn_mask_loss: 0.1122 - val_loss: 0.1445 - val_rpn_class_loss: 0.0020 - val_rpn_bbox_loss: 0.0026 - val_mrcnn_class_loss: 0.0270 - val_mrcnn_bbox_loss: 0.0083 - val_mrcnn_mask_loss: 0.1046
```

Here are the result images. They are a bit creepy, so skip them if that is not your thing.

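I used about 18 training images.

The left side shows the two-class results and the right side the 3-class results. On the left, none of the 4 random images had a detection. On the right, two images had detections in the tooth region.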
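When comparing runs like these over epochs, it helps to pull the numbers out of the training log quoted above rather than reading the progress bar by eye. The sketch below uses a regular expression on one Keras progress line; parse_keras_log is my own helper, not part of Keras or Mask R-CNN. In the logged line, the total loss 0.1597 equals the sum of the five component losses. mrcnn_mask_loss (0.1122) is by far the largest of them.

```python
import re


def parse_keras_log(line):
    """Extract `name: value` pairs from a Keras progress-bar line into a dict."""
    return {name: float(value)
            for name, value in re.findall(r"(\w+): ([0-9]+\.[0-9]+)", line)}


line = ("10/10 [==============================] - 7s 725ms/step - loss: 0.1597 "
        "- rpn_class_loss: 0.0019 - rpn_bbox_loss: 0.0033 - mrcnn_class_loss: 0.0368 "
        "- mrcnn_bbox_loss: 0.0055 - mrcnn_mask_loss: 0.1122 - val_loss: 0.1445 "
        "- val_rpn_class_loss: 0.0020 - val_rpn_bbox_loss: 0.0026 "
        "- val_mrcnn_class_loss: 0.0270 - val_mrcnn_bbox_loss: 0.0083 "
        "- val_mrcnn_mask_loss: 0.1046")
metrics = parse_keras_log(line)
parts = ["rpn_class_loss", "rpn_bbox_loss", "mrcnn_class_loss",
         "mrcnn_bbox_loss", "mrcnn_mask_loss"]
print(round(sum(metrics[p] for p in parts), 4), metrics["loss"])
```

Applied to every epoch line, the same dict can be appended to a list and plotted to see which loss term is still falling.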

That's it for the walkthrough.

Next I'll build the real training set.

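Some blogs I referred to:

https://github.com/matterport/Mask_RCNN

https://blog.csdn.net/qq_15969343/article/details/80167215 The matterport mask_rcnn series: a primer

References covering the complete process:

https://blog.csdn.net/qq_15969343/article/details/80893844 Mask_RCNN: training on your own data (a traffic vehicle model)

https://blog.csdn.net/qq_15969343/article/details/80388311 Mask_RCNN: predicting with your own trained model (portraits)

https://blog.csdn.net/l297969586/article/details/79140840/ Mask RCNN: training on your own dataset (object detection from a camera)

https://blog.csdn.net/lovebyz/article/details/80138261 Mask R-CNN: training on your own dataset, pitfalls and fixes

https://blog.csdn.net/qq_29462849/article/details/81037343 mask rcnn: training on your own dataset (a concise process, good end-to-end reference)

https://blog.csdn.net/Xiongchao99/article/details/79106588 Mask R-CNN + tensorflow/keras: configuration, code walkthrough and a demo of training on your own dataset (a fairly detailed complete process)

https://blog.csdn.net/u012746060/article/details/82143285 Step-by-step MASK_RCNN training on your own dataset under Windows 10, building the dataset with labelme, and the major pitfalls (fairly complete)

Improvement details:

https://blog.csdn.net/m0_37957160/article/details/82757780 Fixing GPU memory overflow when training Mask RCNN (did not solve it for me; in the end I changed the training model)

https://blog.csdn.net/yql_617540298/article/details/81123147?utm_source=blogxgwz5 Mask_RCNN: batch testing and saving the results to a folder

https://blog.csdn.net/yql_617540298/article/details/81780850 Mask-RCNN: computing mAP on validation results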