论文阅读 A simple yet effective baseline for 3d human pose estimation

A simple yet effective baseline for 3d human pose estimation

一个简单有效的3d人体姿态估计基准

Abstract

继深层卷积网络的成功之后,用于3D人体姿势估计的最新方法已集中于在给定原始图像像素的情况下预测3D联合位置的深层端到端系统。

尽管它们具有出色的性能,但通常很难理解其剩余错误是由于有限的2D姿势(视觉)理解还是由于未能将2D姿势映射到3维位置而引起的。

为了理解这些错误源,我们着手建立一个给定2d关节位置可预测3d位置的系统。令我们惊讶的是,我们发现,使用当前的技术,将真实的2d联合位置“提升”到3d空间是可以以非常低的错误率解决的任务:相对简单的深度前馈网络在性能上要比目前最好的结果好30%在Human3.6M(最大的公开3d姿态估计基准)上。此外,在现有的最新2D探测器的输出上训练我们的系统(即使用图像作为输入)会产生最新的结果——其中包括一系列已端对端训练的系统专为此任务。我们的结果表明,现代深度3d姿态估计系统的很大一部分误差源于其视觉分析,并提出了进一步推进3d人体姿势估计技术水平的方向。

1. Introduction

在本文中,我们将集中讨论此空间推理问题的一个特殊实例:从单个图像进行3d人为估计。

探索将3d姿态估计解耦到充分研究2d姿态估计问题的能力,和2d联合检测的3d姿态估计,重点是后者。

将姿势估计分为这两个问题,可以利用现有的2d姿势估计系统,该系统已经为上述因素提供了不变性。此外,我们可以使用在受控环境中捕获的大量3D Mocap数据来训练2D至3D问题的数据渴望算法,同时使用可处理大量数据的低维表示形式。

我们对这个问题的主要贡献是神经网络的设计和分析,该神经网络的性能略优于最新系统

代码:https://github.com/una-dinosauria/3d-pose-baseline

2. Previous work

·······

3. Solution methodology

我们的目标是在给定二维输入的情况下估计3维空间中的人体关节位置。形式上,我们的输入是一系列2d点 x ∈ R 2 n x∈\R^{2n} x∈R2n,而我们的输出是3d空间 y ∈ R 3 n y∈\R^{3n} y∈R3n 中的一系列点。我们旨在学习一个函数 f ∗ : R 2 n → R 3 n f ^∗:\R^{2n}→\R^{3n} f∗:R2n→R3n,该函数可将 N N N个姿势的数据集上的预测误差最小化:

loss函数: f ∗ = min f ∑ i = 1 N L ( f ( x i ) − y i ) f^∗= \displaystyle\min_f\sum_{i=1}^{N}L(f(x_i)−y_i) f∗=fmini=1∑NL(f(xi)−yi)

参数解释:

x i x_i xi: 实际上,可以在已知摄像机参数下或使用2d关节检测器将 x i x_i xi 作为ground truth 2d关节位置获得。通常会相对于其根关节预测相对于固定全局空间的3d位置,从而产生较小尺寸的输出(?)。

f ∗ f^* f∗:深度神经网络系统

3.1. Our approach – network design

基于一个简单、深入、多层的神经网络,该网络具有批处理标准化、丢弃、relu激活以及残差连接。未指定的是两个额外的线性层:一个直接应用于输入,使其维度增加到1024;另一个应用于最终预测之前,产生大小为3n的输出。在我们的大多数实验中,我们使用了2个残差块,这意味着我们总共有6个线性层,我们的模型包含了400万到500万个可训练参数。

- 2d/3d positions:将2d和3d点坐标用为输入和输出,尽管二维检测所携带的信息较少,但它们的维数较低,使其非常有吸引力。例如,在训练网络时,可以轻松地将整个Human3.6M数据集存储在GPU中,这减少了总体训练时间,并且极大地允许我们加快对网络设计和训练超参数的搜索。

- Linear-RELU layers:由于我们将低维点作为输入和输出进行处理,因此可以使用更简单且计算开销较小的线性层。 RELU是在深度神经网络中添加非线性的标准选择。

- Residual connections:我们发现残差连接最近被提出来作为一种很深的卷积神经网络的训练技术,它改善了泛化性能并减少了训练时间。(当网络很深的时候,可能会发生梯度消失,导数接近于0,参数无法更新,残差网络可以使导数在1附近浮动)在我们的案例中,它们帮助我们将误差减少了大约10%。

- Batch normalization and dropout:尽管具有上述三个组件的简单网络在ground truth 2d位置上训练时在2d到3d姿态估计上获得了良好的性能,但我们发现,在2d检测器的输出上进行训练或在2d ground truth并在嘈杂的二维观测中进行测试,其效果不佳。在这两种情况下,批处理规范化和丢弃改善了我们系统的性能,同时导致训练和测试时间略有增加。

- Max-norm constraint:我们还对每层的权重施加了约束,以使它们的最大范数小于或等于1。结合批归一化,我们发现当训练和测试示例之间的分布不同时,这可以稳定训练并提高通用化。(???)

代码

"""Simple model to regress 3d human poses from 2d joint locations"""

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

from tensorflow.python.ops import variable_scope as vs

import os

import numpy as np

from six.moves import xrange # pylint: disable=redefined-builtin

import tensorflow as tf

import data_utils

import cameras as cam

def kaiming(shape, dtype, partition_info=None):

"""Kaiming initialization as described in https://arxiv.org/pdf/1502.01852.pdf

Args

shape: dimensions of the tf array to initialize /tf数组初始化的尺寸

dtype: data type of the array /数组的数据类型

partition_info: (Optional) info about how the variable is partitioned. /(可选)关于变量如何分区的信息

See https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/init_ops.py#L26

Needed to be used as an initializer. /需要作为初始化器使用

Returns

Tensorflow array with initial weights /具有初始权重的Tensorflow数组

"""

"""

truncated_normal函数:从截断的正态分布输出随机值。

生成的值遵循一定的均值和标准差的正态分布,但对其幅度大于均值2个标准差的值进行丢弃和重新挑选。

"""

return(tf.truncated_normal(shape, dtype=dtype)*tf.sqrt(2/float(shape[0])))

class LinearModel(object):

""" A simple Linear+RELU model """

def __init__(self,

linear_size,

num_layers,

residual,

batch_norm,

max_norm,

batch_size,

learning_rate,

summaries_dir,

predict_14=False,

dtype=tf.float32):

"""Creates the linear + relu model

Args

linear_size: integer. number of units in each layer of the model /整数。模型每一层的单元数

num_layers: integer. number of bilinear blocks in the model /整数。模型中双线性块的数目

residual: boolean. Whether to add residual connections /布尔。是否添加剩余连接

batch_norm: boolean. Whether to use batch normalization /布尔。是否使用批处理标准化

max_norm: boolean. Whether to clip weights to a norm of 1 /布尔。是否将权重修剪为1

batch_size: integer. The size of the batches used during training /整数。训练期间使用的批次的大小

learning_rate: float. Learning rate to start with /浮点数。学习率

summaries_dir: String. Directory where to log progress /字符串。记录进程的目录

predict_14: boolean. Whether to predict 14 instead of 17 joints /布尔。是否预测14个关节而不是17个关节

dtype: the data type to use to store internal variables /用于存储内部变量的数据类型

"""

# There are in total 17 joints in H3.6M and 16 in MPII (and therefore in stacked

# hourglass detections). We settled with 16 joints in 2d just to make models

# compatible (e.g. you can train on ground truth 2d and test on SH detections).

# 在H3.6M内共有17个关节,在MPII内有16个关节(因此在堆叠沙漏检测中)。我们在2d中使用了16个关节,只是为了使模型兼容

# (例如,你可以在2d的地面真相训练和SH探测测试)。

# This does not seem to have an effect on prediction performance.

# 这似乎对预测性能没有影响

self.HUMAN_2D_SIZE = 16 * 2

# In 3d all the predictions are zero-centered around the root (hip) joint, so

# we actually predict only 16 joints. The error is still computed over 17 joints,

# because if one uses, e.g. Procrustes alignment, there is still error in the

# hip to account for!

# There is also an option to predict only 14 joints, which makes our results

# directly comparable to those in https://arxiv.org/pdf/1611.09010.pdf

# 在3d中,所有的预测都是以根关节(髋关节)为中心的

# 所以我们实际上只能预测16个关节。误差仍然是计算超过17个关节

# 因为如果一个人使用,例如Procrustes对齐,仍然有误差在髋关节的解释!

# 还有一种预测只有14个关节的方法

# 这使得我们的结果可以直接与https://arxiv.org/pdf/1611.09010.pdf中的结果进行比较

self.HUMAN_3D_SIZE = 14 * 3 if predict_14 else 16 * 3

self.input_size = self.HUMAN_2D_SIZE

self.output_size = self.HUMAN_3D_SIZE

self.isTraining = tf.placeholder(tf.bool, name="isTrainingflag")

self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

# Summary writers for train and test runs /编写训练和测试的摘要(总结)

self.train_writer = tf.summary.FileWriter( os.path.join(summaries_dir, 'train' ))

self.test_writer = tf.summary.FileWriter( os.path.join(summaries_dir, 'test' ))

self.linear_size = linear_size

self.batch_size = batch_size

self.learning_rate = tf.Variable( float(learning_rate), trainable=False, dtype=dtype, name="learning_rate")

self.global_step = tf.Variable(0, trainable=False, name="global_step")

decay_steps = 100000 # empirical

decay_rate = 0.96 # empirical

self.learning_rate = tf.train.exponential_decay(self.learning_rate, self.global_step, decay_steps, decay_rate)

# === Transform the inputs === /对输入数据进行转换

with vs.variable_scope("inputs"): # inputs的变量作用域

# in=2d poses, out=3d poses /输入2d姿态,输出3d姿态

enc_in = tf.placeholder(dtype, shape=[None, self.input_size], name="enc_in")

dec_out = tf.placeholder(dtype, shape=[None, self.output_size], name="dec_out")

self.encoder_inputs = enc_in

self.decoder_outputs = dec_out

# === Create the linear + relu combos === /创建 线性层+ relu激活函数 组合

with vs.variable_scope( "linear_model" ):

# === First layer, brings dimensionality up to linear_size === /第一层,将维度提升到linear_size

w1 = tf.get_variable( name="w1", initializer=kaiming, shape=[self.HUMAN_2D_SIZE, linear_size], dtype=dtype )

# w1有HUMAN_2D_SIZE,linear_size列,改变张量大小

b1 = tf.get_variable( name="b1", initializer=kaiming, shape=[linear_size], dtype=dtype )

w1 = tf.clip_by_norm(w1,1) if max_norm else w1 # max_norm: 是否将权重修剪为1

y3 = tf.matmul( enc_in, w1 ) + b1 # y = x * w + b

if batch_norm: # 是否使用批处理标准化

y3 = tf.layers.batch_normalization(y3,training=self.isTraining, name="batch_normalization") # 对y3实行 (x - u)/std的归一化操作

y3 = tf.nn.relu( y3 ) # 激活函数

y3 = tf.nn.dropout( y3, self.dropout_keep_prob )

# === Create multiple bi-linear layers === /创建多个双线性层

for idx in range( num_layers ): # 重复创建num_layers个线性层

y3 = self.two_linear( y3, linear_size, residual, self.dropout_keep_prob, max_norm, batch_norm, dtype, idx )

# === Last linear layer has HUMAN_3D_SIZE in output === /最后一个线性层的输出是HUMAN_3D_SIZE

w4 = tf.get_variable( name="w4", initializer=kaiming, shape=[linear_size, self.HUMAN_3D_SIZE], dtype=dtype )

b4 = tf.get_variable( name="b4", initializer=kaiming, shape=[self.HUMAN_3D_SIZE], dtype=dtype )

w4 = tf.clip_by_norm(w4,1) if max_norm else w4

y = tf.matmul(y3, w4) + b4 # y是最终的三维姿态输出结果

# === End linear model === /线性模型结束

# Store the outputs here /存储输出

self.outputs = y

self.loss = tf.reduce_mean(tf.square(y - dec_out)) # 定义损失函数: (y'-y)^2。(reduce_mean: 不算均值)

self.loss_summary = tf.summary.scalar('loss/loss', self.loss)

# To keep track of the loss in mm /用毫米记录损失值

self.err_mm = tf.placeholder( tf.float32, name="error_mm" )

self.err_mm_summary = tf.summary.scalar( "loss/error_mm", self.err_mm )

# Gradients and update operation for training the model. /训练模型的梯度和更新操作

opt = tf.train.AdamOptimizer( self.learning_rate ) # 优化器,梯度下降,设置学习率

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS) # 收集参数,参数更新

with tf.control_dependencies(update_ops):

# Update all the trainable parameters /更新所有可训练参数

gradients = opt.compute_gradients(self.loss)

self.gradients = [[] if i==None else i for i in gradients]

self.updates = opt.apply_gradients(gradients, global_step=self.global_step)

# Keep track of the learning rate /记录学习率

self.learning_rate_summary = tf.summary.scalar('learning_rate/learning_rate', self.learning_rate)

# To save the model /保存模型

self.saver = tf.train.Saver( tf.global_variables(), max_to_keep=10 )

def two_linear( self, xin, linear_size, residual, dropout_keep_prob, max_norm, batch_norm, dtype, idx ):

"""

Make a bi-linear block with optional residual connection

Args

xin: the batch that enters the block

linear_size: integer. The size of the linear units

residual: boolean. Whether to add a residual connection

dropout_keep_prob: float [0,1]. Probability of dropping something out

max_norm: boolean. Whether to clip weights to 1-norm

batch_norm: boolean. Whether to do batch normalization

dtype: type of the weigths. Usually tf.float32

idx: integer. Number of layer (for naming/scoping)

Returns

y: the batch after it leaves the block

"""

with vs.variable_scope( "two_linear_"+str(idx) ) as scope: # two_linear+第index层,变量名作用域

input_size = int(xin.get_shape()[1])

# Linear 1

w2 = tf.get_variable( name="w2_"+str(idx), initializer=kaiming, shape=[input_size, linear_size], dtype=dtype)

b2 = tf.get_variable( name="b2_"+str(idx), initializer=kaiming, shape=[linear_size], dtype=dtype)

w2 = tf.clip_by_norm(w2,1) if max_norm else w2

y = tf.matmul(xin, w2) + b2

if batch_norm:

y = tf.layers.batch_normalization(y,training=self.isTraining,name="batch_normalization1"+str(idx))

y = tf.nn.relu( y )

y = tf.nn.dropout( y, dropout_keep_prob )

# Linear 2

w3 = tf.get_variable( name="w3_"+str(idx), initializer=kaiming, shape=[linear_size, linear_size], dtype=dtype)

b3 = tf.get_variable( name="b3_"+str(idx), initializer=kaiming, shape=[linear_size], dtype=dtype)

w3 = tf.clip_by_norm(w3,1) if max_norm else w3

y = tf.matmul(y, w3) + b3

if batch_norm:

y = tf.layers.batch_normalization(y,training=self.isTraining,name="batch_normalization2"+str(idx))

y = tf.nn.relu( y )

y = tf.nn.dropout( y, dropout_keep_prob )

# Residual every 2 blocks

y = (xin + y) if residual else y

return y

def step(self, session, encoder_inputs, decoder_outputs, dropout_keep_prob, isTraining=True):

"""Run a step of the model feeding the given inputs. /运行模型的一个步骤,提供给定的输入。

Args

session: tensorflow session to use /使用tensorflow

encoder_inputs: list of numpy vectors to feed as encoder inputs /作为编码器输入提供的numpy向量的列表

decoder_outputs: list of numpy vectors that are the expected decoder outputs /预期解码器输出的numpy向量的列表

dropout_keep_prob: (0,1] dropout keep probability /(0,1]保持退出概率

isTraining: whether to do the backward step or only forward / 做反向传播还是只做前向传播

Returns

if isTraining is True, a 4-tuple /如果做反向传播,返回一个四元组

loss: the computed loss of this batch /这一批次的损失值

loss_summary: tf summary of this batch loss, to log on tensorboard /此批次损失值的tf摘要记录

learning_rate_summary: tf summary of learnign rate to log on tensorboard /此批次的学习率记录

outputs: predicted 3d poses /预测的3d姿势

if isTraining is False, a 3-tuple /如果只做前馈,返回一个三元组(就没有学习率了,因为不需要反馈更新)

(loss, loss_summary, outputs) same as above

"""

input_feed = {

self.encoder_inputs: encoder_inputs,

self.decoder_outputs: decoder_outputs,

self.isTraining: isTraining,

self.dropout_keep_prob: dropout_keep_prob}

# Output feed: depends on whether we do a backward step or not. /取决于是否做反馈

if isTraining:

output_feed = [self.updates, # Update Op that does SGD /更新执行梯度下降的操作

self.loss,

self.loss_summary,

self.learning_rate_summary,

self.outputs]

outputs = session.run( output_feed, input_feed )

return outputs[1], outputs[2], outputs[3], outputs[4]

else:

output_feed = [self.loss, # Loss for this batch. /这批次的损失

self.loss_summary,

self.outputs]

outputs = session.run(output_feed, input_feed)

return outputs[0], outputs[1], outputs[2] # No gradient norm

def get_all_batches( self, data_x, data_y, camera_frame, training=True ):

"""

Obtain a list of all the batches, randomly permutted /获取随机排列的所有批次的列表

Args

data_x: dictionary with 2d inputs /带有2d输入的字典

data_y: dictionary with 3d expected outputs /具有3d预期输出的字典

camera_frame: whether the 3d data is in camera coordinates /3d数据是否为相机坐标

training: True if this is a training batch. False otherwise. /是否是训练批次

Returns

encoder_inputs: list of 2d batches /2d批次的列表

decoder_outputs: list of 3d batches /3d批次的列表

"""

# Figure out how many frames we have /算出我们有多少帧

n = 0

for key2d in data_x.keys():

n2d, _ = data_x[ key2d ].shape

n = n + n2d

encoder_inputs = np.zeros((n, self.input_size), dtype=float)

decoder_outputs = np.zeros((n, self.output_size), dtype=float)

# Put all the data into big arrays /将所有数据放入大数组中

idx = 0

for key2d in data_x.keys():

(subj, b, fname) = key2d

# keys should be the same if 3d is in camera coordinates /如果3d是在摄像机坐标中,键应该是相同的???????

key3d = key2d if (camera_frame) else (subj, b, '{0}.h5'.format(fname.split('.')[0]))

key3d = (subj, b, fname[:-3]) if fname.endswith('-sh') and camera_frame else key3d

n2d, _ = data_x[ key2d ].shape

encoder_inputs[idx:idx+n2d, :] = data_x[ key2d ]

decoder_outputs[idx:idx+n2d, :] = data_y[ key3d ]

idx = idx + n2d

if training:

# Randomly permute everything /随机排列一切

idx = np.random.permutation( n ) # 生成长度为n的随机排列,返回一个索引

encoder_inputs = encoder_inputs[idx, :]

decoder_outputs = decoder_outputs[idx, :]

# Make the number of examples a multiple of the batch size /将示例数量设置为批大小的倍数

n_extra = n % self.batch_size

if n_extra > 0: # Otherwise examples are already a multiple of batch size /取模结果大于0,则说明不是批处理大小的倍数。否则,示例已经是批大小的倍数

encoder_inputs = encoder_inputs[:-n_extra, :] # 减去多余的数量

decoder_outputs = decoder_outputs[:-n_extra, :]

n_batches = n // self.batch_size # 做整数除法,计算有多少批次

encoder_inputs = np.split( encoder_inputs, n_batches ) # 进行切割

decoder_outputs = np.split( decoder_outputs, n_batches )

return encoder_inputs, decoder_outputs

PyTorch版的代码更加简洁:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

from __future__ import absolute_import

from __future__ import print_function

import torch.nn as nn

def weight_init(m): # 权重初始化

if isinstance(m, nn.Linear): # 判断和线性层类型是否相同

nn.init.kaiming_normal_(m.weight) # kaiming正态分布初始化权重:https://blog.csdn.net/VictoriaW/article/details/73166752

class Linear(nn.Module): # 一个单元

def __init__(self, linear_size, p_dropout=0.5):

super(Linear, self).__init__()

self.l_size = linear_size # 线性层大小

self.relu = nn.ReLU(inplace=True) # 激活函数

self.dropout = nn.Dropout(p_dropout) # 丢弃比例

self.w1 = nn.Linear(self.l_size, self.l_size) # 矩阵大小[l_size, l_size]

self.batch_norm1 = nn.BatchNorm1d(self.l_size) # 批处理正则化大小

self.w2 = nn.Linear(self.l_size, self.l_size)

self.batch_norm2 = nn.BatchNorm1d(self.l_size)

def forward(self, x):

y = self.w1(x)

y = self.batch_norm1(y)

y = self.relu(y)

y = self.dropout(y)

y = self.w2(y)

y = self.batch_norm2(y)

y = self.relu(y)

y = self.dropout(y)

out = x + y

return out

class LinearModel(nn.Module): # 训练模型

def __init__(self,

linear_size=1024,

num_stage=2,

p_dropout=0.5):

super(LinearModel, self).__init__()

self.linear_size = linear_size # 输入1024

self.p_dropout = p_dropout # 丢弃0.5比例的参数

self.num_stage = num_stage # 两个重复单元

# 2d joints

self.input_size = 16 * 2 # 2d关节点做输入的大小

# 3d joints

self.output_size = 16 * 3 # 3d关节点做输出

# process input to linear size /将输入大小通过一个线性层变为1024维度

self.w1 = nn.Linear(self.input_size, self.linear_size)

self.batch_norm1 = nn.BatchNorm1d(self.linear_size) # 批处理正则化

self.linear_stages = []

for l in range(num_stage):

self.linear_stages.append(Linear(self.linear_size, self.p_dropout)) # 向列表里加入个数为num_stage = 2的Linear类型的的实例

self.linear_stages = nn.ModuleList(self.linear_stages) # 变为模型列表

# post processing / 后面的过程

self.w2 = nn.Linear(self.linear_size, self.output_size) # 将线性层的大小1024变为输出关节点维度的大小

self.relu = nn.ReLU(inplace=True)

self.dropout = nn.Dropout(self.p_dropout)

def forward(self, x):

# pre-processing /预处理数据

y = self.w1(x) # 维度变为1024

y = self.batch_norm1(y) # 批处理正则化

y = self.relu(y) # 激活函数

y = self.dropout(y) # 丢弃0.5比例的参数

# linear layers /线性层

for i in range(self.num_stage): # 两个相同的模型单元

y = self.linear_stages[i](y) # 依次训练

y = self.w2(y) # 经过一个线性层改变输出维度,变为3d关节点的维度大小

return y

3.2. Data preprocessing

归一化处理:

我们通过减去平均值并除以标准偏差,将标准归一化应用于2d输入和3d输出。由于我们无法预测3d预测的全局位置,因此我们将髋关节周围的3d姿势定为零中心。

- Camera coordinates: 在我们看来,期望算法推断任意坐标空间中的三维关节位置是不现实的,因为任意坐标空间的平移或旋转都不会导致输入数据的变化。全局坐标系的自然选择是摄像机坐标系,因为这使得不同摄像机之间的2d到3d问题相似,隐式地为每个摄像机提供更多的训练数据,并防止对特定全局坐标系的过度拟合。在任意的全局坐标系下推导三维位姿的一个直接影响是不能回归到每个子节点的全局方向,从而导致所有关节产生较大的误差。注意,这个坐标系的定义是任意的,并不意味着我们在我们的测试中使用了位姿的groundtruth。

- 2d detections: 我们的2d探测结果来自于最新的在MPII数据集上预训练的Newell的堆叠沙漏网络。与先前的工作一样,我们使用H3.6M提供的bounding boxes来估计图像中的人的中心。我们剪裁一个440x440大小的图像。

我们还在Human3.6M数据集上微调了堆叠沙漏网络(在MPII上预训练过),这样使得2d关节点坐标检测更加精确,进而减少3d姿态估计的误差。因为GPU的内存限制,我们将原来的最小batch szie从6改成3。除此之外,我们对堆叠沙漏网络都使用默认的参数。我们设置学习率为 2.5 × 1 0 − 4 2.5 \times 10^{-4} 2.5×10−4,迭代训练40 000次。 - Training details: 我们使用Adam训练我们的网络200 epochs,开始的学习率是0.001并且指数递减,最小的batch size 是64。初始时,我们的线性层的权值使用Kaiming初始化。我们用tensorflow实现,初一个前向和反向传播过程花费大约5ms,在Titan Xp GPU只要2ms。也就是说,与最新的实时地2d探测模块一起,我们的网络可以达到实时的效果。在整个Human3.6M的数据集上训练一个epoch需要大概2分钟,这使我们可以训练一些架构的变种和超参数。

4. Experimental evaluation

Datasets and protocols: 使用Human3.6M的1,5,6,7,8来训练,用9,11来评估。

4.1. Quantitative results

我们的方法,基于从2d关节位置的直接回归,自然依赖于2d位姿检测器输出的质量,并在使用ground-truth 2d关节位置时获得最佳性能。

加了不同程度的高斯噪声,在不同方法下结果的对比:

表一

我们的方法在所有噪声水平上都大大优于距离矩阵方法,在对ground truth 2d投影进行训练时,误差达到峰值37.10 mm。这比我们所知的地面真相2d关节的最佳结果要好43%。此外,请注意,这个结果也比Pavlakos等人报告的51.9mm好30%左右。我们所知Human 3.6M的最佳结果——然而,他们的结果没有使用ground truth 2d位置,这使得这种比较不公平。

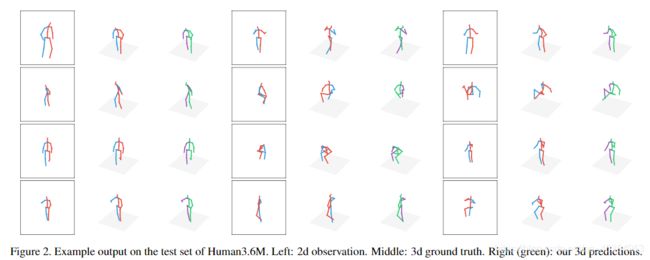

4.2. Qualitative results

最后,我们在图2中的Human3.6M上和在图3中的MPII测试集的“野外”图像中显示了一些定性结果。例如,我们的系统无法从失败的探测器输出中恢复,并且很难处理与H3.6M中的任何示例(例如,人倒置)不同的姿势。最后,在野外,大多数人的图像没有完整的身体,但在一定程度上被裁剪了。我们的系统经过全面的姿势训练,目前无法处理此类情况。