基于HRNet-Segmentation的遥感图像语义分割

HRNet网络结构

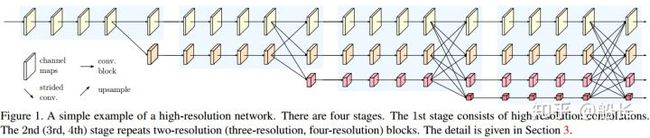

HRNet的设计思路延续了一路保持较大分辨率特征图的方法,在网络前进的过程中,都**保持较大的特征图**,但是在网路前进过程中,也会**平行地**做一些下采样缩小特征图,如此**迭代**下去。最后生成**多组有不同分辨率的特征图**,**再融合**这些特征图做Segmentation map的预测。

### 主干网络结构

上图是HRNet简单地示意图,生成多种不同分辨率的特征。这里需要注意的细节是,它在网络的前,中,后三段都做了特征融合,而不是仅仅在最后的特征图上做融合。别的好像也没什么了,结构和思路都比较简单,没有[前面的RefineNet](https://github.com/Captain1986/CaptainBlackboard/blob/master/D%230041-用RefineNet做分割/D%230041.md)那么复杂,就不多做介绍了。

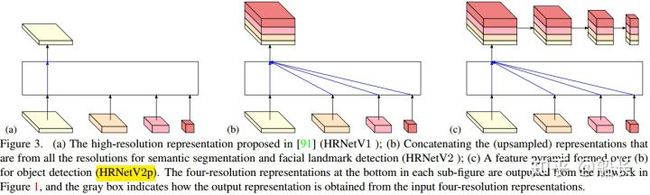

### 多分辨率融合Multi-resolution Fusion

HRNet作为主干网络提取了特征,这些特征有不同的分辨率,需要根据不同的任务来选择融合的方式。

如果做语义分割或者人脸特征点定位,那么就是如上图b中所示,把不同分辨率的特征通过upsample操作后得到一致的大分辨率特征图,然后concate起来做融合。整体思路很简单,不在阐述:

HRNet网络结构keras代码:源码:https://github.com/niecongchong/HRNet-keras-semantic-segmentation 或者 https://github.com/soyan1999/segmentation_hrnet_keras

import keras.backend as K

from keras.models import Model

from keras.layers import Input, Conv2D, BatchNormalization, Activation

from keras.layers import UpSampling2D, add, concatenate

from loss import *

from keras.optimizers import Adam,Nadam

def conv3x3(x, out_filters, strides=(1, 1)):

x = Conv2D(out_filters, 3, padding='same', strides=strides, use_bias=False, kernel_initializer='he_normal')(x)

return x

def basic_Block(input, out_filters, strides=(1, 1), with_conv_shortcut=False):

x = conv3x3(input, out_filters, strides)

x = BatchNormalization(axis=3)(x)

x = Activation('relu')(x)

x = conv3x3(x, out_filters)

x = BatchNormalization(axis=3)(x)

if with_conv_shortcut:

residual = Conv2D(out_filters, 1, strides=strides, use_bias=False, kernel_initializer='he_normal')(input)

residual = BatchNormalization(axis=3)(residual)

x = add([x, residual])

else:

x = add([x, input])

x = Activation('relu')(x)

return x

def bottleneck_Block(input, out_filters, strides=(1, 1), with_conv_shortcut=False):

expansion = 4

de_filters = int(out_filters / expansion)

x = Conv2D(de_filters, 1, use_bias=False, kernel_initializer='he_normal')(input)

x = BatchNormalization(axis=3)(x)

x = Activation('relu')(x)

x = Conv2D(de_filters, 3, strides=strides, padding='same', use_bias=False, kernel_initializer='he_normal')(x)

x = BatchNormalization(axis=3)(x)

x = Activation('relu')(x)

x = Conv2D(out_filters, 1, use_bias=False, kernel_initializer='he_normal')(x)

x = BatchNormalization(axis=3)(x)

if with_conv_shortcut:

residual = Conv2D(out_filters, 1, strides=strides, use_bias=False, kernel_initializer='he_normal')(input)

residual = BatchNormalization(axis=3)(residual)

x = add([x, residual])

else:

x = add([x, input])

x = Activation('relu')(x)

return x

def stem_net(input):

x = Conv2D(64, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(input)

x = BatchNormalization(axis=3)(x)

x = Activation('relu')(x)

# x = Conv2D(64, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x)

# x = BatchNormalization(axis=3)(x)

# x = Activation('relu')(x)

x = bottleneck_Block(x, 256, with_conv_shortcut=True)

x = bottleneck_Block(x, 256, with_conv_shortcut=False)

x = bottleneck_Block(x, 256, with_conv_shortcut=False)

x = bottleneck_Block(x, 256, with_conv_shortcut=False)

return x

def transition_layer1(x, out_filters_list=[32, 64]):

x0 = Conv2D(out_filters_list[0], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x)

x0 = BatchNormalization(axis=3)(x0)

x0 = Activation('relu')(x0)

x1 = Conv2D(out_filters_list[1], 3, strides=(2, 2),

padding='same', use_bias=False, kernel_initializer='he_normal')(x)

x1 = BatchNormalization(axis=3)(x1)

x1 = Activation('relu')(x1)

return [x0, x1]

def make_branch1_0(x, out_filters=32):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch1_1(x, out_filters=64):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def fuse_layer1(x):

x0_0 = x[0]

x0_1 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[1])

x0_1 = BatchNormalization(axis=3)(x0_1)

x0_1 = UpSampling2D(size=(2, 2))(x0_1)

x0 = add([x0_0, x0_1])

x1_0 = Conv2D(64, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x[0])

x1_0 = BatchNormalization(axis=3)(x1_0)

x1_1 = x[1]

x1 = add([x1_0, x1_1])

return [x0, x1]

def transition_layer2(x, out_filters_list=[32, 64, 128]):

x0 = Conv2D(out_filters_list[0], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x[0])

x0 = BatchNormalization(axis=3)(x0)

x0 = Activation('relu')(x0)

x1 = Conv2D(out_filters_list[1], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x[1])

x1 = BatchNormalization(axis=3)(x1)

x1 = Activation('relu')(x1)

x2 = Conv2D(out_filters_list[2], 3, strides=(2, 2),

padding='same', use_bias=False, kernel_initializer='he_normal')(x[1])

x2 = BatchNormalization(axis=3)(x2)

x2 = Activation('relu')(x2)

return [x0, x1, x2]

def make_branch2_0(x, out_filters=32):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch2_1(x, out_filters=64):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch2_2(x, out_filters=128):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def fuse_layer2(x):

x0_0 = x[0]

x0_1 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[1])

x0_1 = BatchNormalization(axis=3)(x0_1)

x0_1 = UpSampling2D(size=(2, 2))(x0_1)

x0_2 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[2])

x0_2 = BatchNormalization(axis=3)(x0_2)

x0_2 = UpSampling2D(size=(4, 4))(x0_2)

x0 = add([x0_0, x0_1, x0_2])

x1_0 = Conv2D(64, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x[0])

x1_0 = BatchNormalization(axis=3)(x1_0)

x1_1 = x[1]

x1_2 = Conv2D(64, 1, use_bias=False, kernel_initializer='he_normal')(x[2])

x1_2 = BatchNormalization(axis=3)(x1_2)

x1_2 = UpSampling2D(size=(2, 2))(x1_2)

x1 = add([x1_0, x1_1, x1_2])

x2_0 = Conv2D(32, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x[0])

x2_0 = BatchNormalization(axis=3)(x2_0)

x2_0 = Activation('relu')(x2_0)

x2_0 = Conv2D(128, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x2_0)

x2_0 = BatchNormalization(axis=3)(x2_0)

x2_1 = Conv2D(128, 3, strides=(2, 2), padding='same', use_bias=False, kernel_initializer='he_normal')(x[1])

x2_1 = BatchNormalization(axis=3)(x2_1)

x2_2 = x[2]

x2 = add([x2_0, x2_1, x2_2])

return [x0, x1, x2]

def transition_layer3(x, out_filters_list=[32, 64, 128, 256]):

x0 = Conv2D(out_filters_list[0], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x[0])

x0 = BatchNormalization(axis=3)(x0)

x0 = Activation('relu')(x0)

x1 = Conv2D(out_filters_list[1], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x[1])

x1 = BatchNormalization(axis=3)(x1)

x1 = Activation('relu')(x1)

x2 = Conv2D(out_filters_list[2], 3, padding='same', use_bias=False, kernel_initializer='he_normal')(x[2])

x2 = BatchNormalization(axis=3)(x2)

x2 = Activation('relu')(x2)

x3 = Conv2D(out_filters_list[3], 3, strides=(2, 2),

padding='same', use_bias=False, kernel_initializer='he_normal')(x[2])

x3 = BatchNormalization(axis=3)(x3)

x3 = Activation('relu')(x3)

return [x0, x1, x2, x3]

def make_branch3_0(x, out_filters=32):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch3_1(x, out_filters=64):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch3_2(x, out_filters=128):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def make_branch3_3(x, out_filters=256):

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

x = basic_Block(x, out_filters, with_conv_shortcut=False)

return x

def fuse_layer3(x):

x0_0 = x[0]

x0_1 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[1])

x0_1 = BatchNormalization(axis=3)(x0_1)

x0_1 = UpSampling2D(size=(2, 2))(x0_1)

x0_2 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[2])

x0_2 = BatchNormalization(axis=3)(x0_2)

x0_2 = UpSampling2D(size=(4, 4))(x0_2)

x0_3 = Conv2D(32, 1, use_bias=False, kernel_initializer='he_normal')(x[3])

x0_3 = BatchNormalization(axis=3)(x0_3)

x0_3 = UpSampling2D(size=(8, 8))(x0_3)

x0 = concatenate([x0_0, x0_1, x0_2, x0_3], axis=-1)

return x0

def final_layer(x, classes=1):

x = UpSampling2D(size=(2, 2))(x)

x = Conv2D(classes, 1, use_bias=False, kernel_initializer='he_normal')(x)

x = BatchNormalization(axis=3)(x)

x = Activation('softmax', name='Classification')(x)

return x

def seg_hrnet(height=320, width=320, channel=3, classes=5):

# inputs = Input(batch_shape=(batch_size,) + (height, width, channel))

inputs = Input(shape=(height, width, channel))

x = stem_net(inputs)

x = transition_layer1(x)

x0 = make_branch1_0(x[0])

x1 = make_branch1_1(x[1])

x = fuse_layer1([x0, x1])

x = transition_layer2(x)

x0 = make_branch2_0(x[0])

x1 = make_branch2_1(x[1])

x2 = make_branch2_2(x[2])

x = fuse_layer2([x0, x1, x2])

x = transition_layer3(x)

x0 = make_branch3_0(x[0])

x1 = make_branch3_1(x[1])

x2 = make_branch3_2(x[2])

x3 = make_branch3_3(x[3])

x = fuse_layer3([x0, x1, x2, x3])

out = final_layer(x, classes=classes)

model = Model(inputs=inputs, outputs=out)

adam=Adam(lr=1e-4, beta_1=0.9, beta_2=0.999, epsilon=1e-08)

model.compile(optimizer = Nadam(lr = 1e-4), loss = dices,metrics=['accuracy',precision,fbeta_score,fmeasure,IoU,recall])

return model

# from keras.utils import plot_model

# import os

# os.environ["PATH"] += os.pathsep + 'C:/Program Files (x86)/Graphviz2.38/bin/'

#

# model = seg_hrnet(batch_size=2, height=512, width=512, channel=3, classes=1)

# model.summary()

# plot_model(model, to_file='seg_hrnet.png', show_shapes=True)

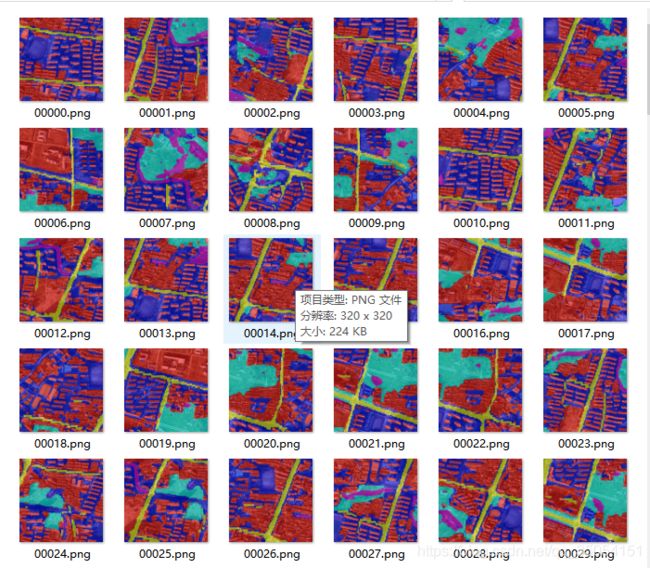

训练数据切分到320*320大小:

预测效果整体正常,只是loss没有继续收敛,如果loss继续收敛下降的话

应该会有不错的效果: