Morvan PyTorch Study Notes 3

- 1. Batch training

- 2. Optimizers

1. Batch training

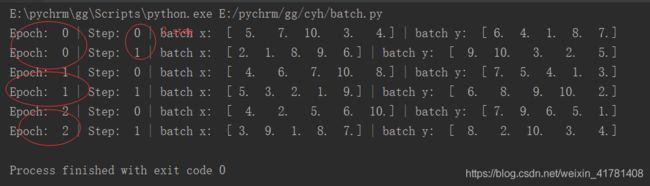

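In our earlier code, all of the data was fed to the neural network at once for training. When the dataset is large, however, this becomes inefficient. That is when batch training is needed: the data is fed to the network in batches, which improves efficiency.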

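For example, with 10 training samples and batch_size=5, each epoch takes 10/5 = 2 steps. The code below uses 15 samples with BATCH_SIZE = 5, so each epoch takes 15/5 = 3 steps.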
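The step count above can be checked with a small calculation. The following is a minimal sketch in plain Python; `steps_per_epoch` is a hypothetical helper name (not a PyTorch function), and it assumes that a final, smaller batch is still used when the sample count is not a multiple of the batch size, which matches the `DataLoader` default of `drop_last=False`.

```python
import math

def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of mini-batches (steps) needed to see every sample once."""
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch still counts as one step.
    return math.ceil(num_samples / batch_size)

print(steps_per_epoch(10, 5))   # 10 samples, batches of 5
print(steps_per_epoch(15, 5))   # 15 samples, batches of 5
print(steps_per_epoch(10, 8))   # last batch holds only 2 samples
```

When the batch size does not divide the sample count evenly, the last step of each epoch simply receives whatever samples are left.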

import torch
import torch.utils.data as Data

torch.manual_seed(1)    # reproducible

BATCH_SIZE = 5

x = torch.linspace(1, 10, 15)    # 15 evenly spaced points from 1 to 10
y = torch.linspace(10, 1, 15)    # 15 evenly spaced points from 10 down to 1

torch_dataset = Data.TensorDataset(x, y)
loader = Data.DataLoader(
    dataset=torch_dataset,      # torch TensorDataset format
    batch_size=BATCH_SIZE,      # mini batch size
    shuffle=True,               # shuffle the data each epoch for training
    # num_workers=2,            # subprocesses for loading data
)

for epoch in range(3):    # train on the entire dataset 3 times
    for step, (batch_x, batch_y) in enumerate(loader):    # for each training step
        # train your data...
        print('Epoch: ', epoch, '| Step: ', step, '| batch x: ',
              batch_x.numpy(), '| batch y: ', batch_y.numpy())

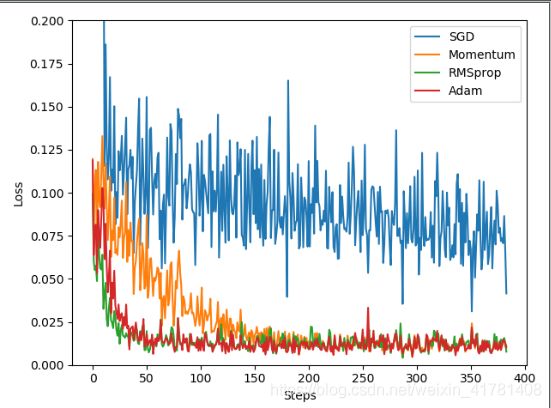
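Conceptually, what the loader does with `shuffle=True` can be sketched in plain Python: shuffle the sample indices, then slice them into consecutive chunks of `batch_size`. This is only an illustration of the idea, not how `DataLoader` is implemented; `iterate_minibatches` and its `seed` parameter are hypothetical names introduced for this example.

```python
import random

def iterate_minibatches(xs, ys, batch_size, shuffle=True, seed=None):
    """Yield (batch_x, batch_y) lists that together cover the data once."""
    indices = list(range(len(xs)))
    if shuffle:
        # Shuffle the indices, not the data, so x and y stay paired.
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        chunk = indices[start:start + batch_size]
        yield [xs[i] for i in chunk], [ys[i] for i in chunk]

xs = list(range(1, 11))        # 1, 2, ..., 10
ys = list(range(10, 0, -1))    # 10, 9, ..., 1

for epoch in range(3):
    batches = iterate_minibatches(xs, ys, batch_size=5, seed=epoch)
    for step, (batch_x, batch_y) in enumerate(batches):
        print('Epoch:', epoch, '| Step:', step,
              '| batch x:', batch_x, '| batch y:', batch_y)
```

Every sample appears exactly once per epoch, and each x stays matched with its y because both are selected through the same index.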

2. Optimizers

Reference: https://blog.csdn.net/weixin_40170902/article/details/80092628

import torch
import torch.utils.data as Data
import torch.nn.functional as F
import matplotlib.pyplot as plt

torch.manual_seed(1)    # reproducible

LR = 0.01
BATCH_SIZE = 32
EPOCH = 12

# fake dataset: a noisy parabola y = x^2 + noise
x = torch.unsqueeze(torch.linspace(-1, 1, 1000), dim=1)    # shape (1000, 1)
print(type(x))
y = x.pow(2) + 0.1*torch.normal(torch.zeros(*x.size()))

# plot dataset
plt.scatter(x.numpy(), y.numpy())
plt.show()

torch_dataset = Data.TensorDataset(x, y)
loader = Data.DataLoader(
    dataset=torch_dataset,
    batch_size=BATCH_SIZE,
    shuffle=True,
)

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.hidden = torch.nn.Linear(1, 20)     # hidden layer
        self.predict = torch.nn.Linear(20, 1)    # output layer

    def forward(self, x):
        x = F.relu(self.hidden(x))    # activation function for hidden layer
        x = self.predict(x)           # linear output
        return x

# one identical network per optimizer, so the comparison is fair
net_SGD = Net()
net_Momentum = Net()
net_RMSprop = Net()
net_Adam = Net()
nets = [net_SGD, net_Momentum, net_RMSprop, net_Adam]

opt_SGD = torch.optim.SGD(net_SGD.parameters(), lr=LR)
opt_Momentum = torch.optim.SGD(net_Momentum.parameters(), lr=LR, momentum=0.8)
opt_RMSprop = torch.optim.RMSprop(net_RMSprop.parameters(), lr=LR, alpha=0.9)
opt_Adam = torch.optim.Adam(net_Adam.parameters(), lr=LR, betas=(0.9, 0.99))
optimizers = [opt_SGD, opt_Momentum, opt_RMSprop, opt_Adam]

loss_func = torch.nn.MSELoss()
losses_his = [[], [], [], []]    # record loss

# training
for epoch in range(EPOCH):
    print('Epoch: ', epoch)
    for step, (batch_x, batch_y) in enumerate(loader):    # for each training step
        # batch_x and batch_y are already tensors; since PyTorch 0.4
        # they no longer need to be wrapped in Variable
        for net, opt, l_his in zip(nets, optimizers, losses_his):
            output = net(batch_x)                # get output for every net
            loss = loss_func(output, batch_y)    # compute loss for every net
            opt.zero_grad()                      # clear gradients for next train
            loss.backward()                      # backpropagation, compute gradients
            opt.step()                           # apply gradients
            l_his.append(loss.item())            # loss recorder

labels = ['SGD', 'Momentum', 'RMSprop', 'Adam']
for i, l_his in enumerate(losses_his):
    plt.plot(l_his, label=labels[i])
plt.legend(loc='best')
plt.xlabel('Steps')
plt.ylabel('Loss')
plt.ylim((0, 0.2))
plt.show()
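To make the difference between the four optimizers more concrete, here is a simplified sketch of their update rules in plain Python, applied to a single parameter `w` on the toy loss f(w) = w**2. It reuses the hyperparameters from the script above (lr=0.01, momentum=0.8, alpha=0.9, betas=(0.9, 0.99)). The toy loss, the function names, and `eps=1e-8` are assumptions made for this illustration. These are the commonly stated forms of the rules; PyTorch's own implementations offer further options (weight decay, dampening, and so on) that are left out here.

```python
import math

LR = 0.01

def grad(w):
    """Gradient of the toy loss f(w) = w**2."""
    return 2.0 * w

def sgd(w, steps):
    # Plain gradient descent: step against the current gradient.
    for _ in range(steps):
        w -= LR * grad(w)
    return w

def momentum(w, steps, mu=0.8):
    # Keep a decaying sum of past gradients and step along it.
    velocity = 0.0
    for _ in range(steps):
        velocity = mu * velocity + grad(w)
        w -= LR * velocity
    return w

def rmsprop(w, steps, alpha=0.9, eps=1e-8):
    # Scale each step by a running average of squared gradients.
    sq_avg = 0.0
    for _ in range(steps):
        g = grad(w)
        sq_avg = alpha * sq_avg + (1 - alpha) * g * g
        w -= LR * g / (math.sqrt(sq_avg) + eps)
    return w

def adam(w, steps, beta1=0.9, beta2=0.99, eps=1e-8):
    # Combine a momentum-like average (m) with RMSprop-like scaling (v),
    # both corrected for their zero initialization.
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        w -= LR * m_hat / (math.sqrt(v_hat) + eps)
    return w

for name, fn in [('SGD', sgd), ('Momentum', momentum),
                 ('RMSprop', rmsprop), ('Adam', adam)]:
    print(name, fn(1.0, 100))    # value of w after 100 steps from w = 1.0
```

All four start at w = 1.0 and move toward the minimum at w = 0. How quickly each one gets there on this toy problem need not match the ranking in the loss plot above, since the network, the data, and the mini-batch noise all differ.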