DeepGlint AI Programming Practice Contest: Adversarial Attack (Detailed Walkthrough, Python)


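Working on this problem, I just wanted to be myself: clearly not that great, yet still this confident! Folks, this solution scores only 40 points. 40 points. 40 points. If you don't like it, please don't flame me.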

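This problem nearly made me pass out. I rewrote it countless times before realizing I could split it into functions. All right, back to business.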

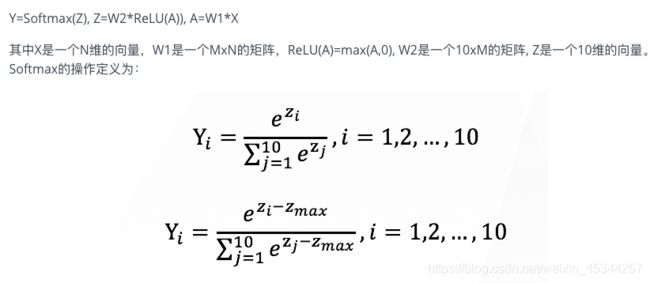

First, let's go through the forward pass:

$Y = AX + B$

The problem drops the bias $B$.

Thank you, problem setter!
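With the bias gone, the forward pass that the `net` function later in this post implements can be written out layer by layer. In the notation below (my own, not the problem's), $x$ is a column vector and $W_1$ ($M \times N$) and $W_2$ ($10 \times M$) are the two weight matrices, matching how the full code reshapes its input. ReLU is applied elementwise.

$$
\begin{aligned}
a &= W_1 x, \\
r &= \mathrm{ReLU}(a) = \max(a, 0), \\
z &= W_2 r, \\
y_k &= \mathrm{softmax}(z)_k = \frac{e^{z_k - \max_j z_j}}{\sum_{i=1}^{10} e^{z_i - \max_j z_j}}, \quad k = 1, \dots, 10.
\end{aligned}
$$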

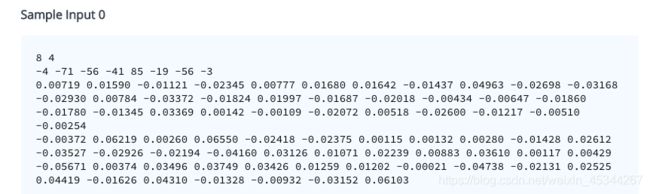

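With the thanks out of the way, let's check the shapes: don't they look like [1, 8], [8, 4], [4, 10]?

They sure do. Still not following? Multiplying [1, 8] by [8, 4] gives [1, 4], and multiplying that by [4, 10] gives [1, 10]. In other words, the network outputs a 10-dimensional vector, which is used for classification.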
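One quick way to sanity-check a shape chain like this is to track (rows, cols) through each multiplication and require the inner dimensions to match. The helper `chain_shape` is hypothetical and only illustrates the arithmetic above.

```python
def chain_shape(shapes):
    """Shape of the product of matrices given as (rows, cols) pairs, left to right."""
    rows, cols = shapes[0]
    for r, c in shapes[1:]:
        # (rows x cols) @ (r x c) is only defined when cols == r
        if cols != r:
            raise ValueError(f"inner dimensions differ: {cols} vs {r}")
        cols = c
    return rows, cols


# Input row [1, 8], first layer [8, 4], second layer [4, 10]
print(chain_shape([(1, 8), (8, 4), (4, 10)]))  # (1, 10)
```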

OK, let's write some functions.

Without further ado, here are the first two:

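```python
import math


def Relu(vector):
    # ReLU: keep positive values, replace everything else with 0
    res = []
    for v in vector:
        res.append(v if v > 0 else 0)
    return res


def softmax(vector):
    # Subtract the maximum before exponentiating to avoid overflow
    res = []
    max_v = max(vector)
    for v in vector:
        res.append(math.exp(v - max_v))
    res_sum = sum(res)
    return [r / res_sum for r in res]
```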
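The `max_v` shift in `softmax` matters. Subtracting the same constant from every score does not change the softmax result. Without the shift, however, `math.exp` overflows on large scores. Below is a small sketch that checks both functions. The lowercase names and the unshifted `naive_softmax` are my own, added for comparison.

```python
import math


def relu(vector):
    # Same rule as Relu above: keep positives, clamp the rest to 0
    return [v if v > 0 else 0 for v in vector]


def softmax(vector):
    # Shift by the max before exponentiating, as in the post's softmax
    max_v = max(vector)
    exps = [math.exp(v - max_v) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


def naive_softmax(vector):
    # Hypothetical unshifted version, for comparison only
    exps = [math.exp(v) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


print(relu([-1.5, 0, 2]))         # [0, 0, 2]
print(softmax([1000.0, 1000.0]))  # [0.5, 0.5]
try:
    naive_softmax([1000.0, 1000.0])
except OverflowError as err:
    # math.exp(1000.0) is far beyond the largest float, so this branch runs
    print("naive softmax overflowed:", err)
```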

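Functions done. What next? `np.reshape()`: calling a library would be such a joy, but no such luck here (cue coughing up blood). We'll have to do it ourselves!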

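Simple and brute force: copy the elements over one by one. That can't go wrong, right? This turns the flat vector into a matrix: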

```python
def V_C(data, rows, cols):
    new_data = []
    for r in range(rows):
        row_data = []
        for c in range(cols):
            row_data.append(data[r * cols + c])
        new_data.append(row_data)
    return new_data
```
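`V_C` fills the matrix row by row, so element (r, c) comes from flat index `r * cols + c`. This is the same row-major order that NumPy's `reshape` uses by default. Below is a sketch that checks `V_C` against a slice-based equivalent. `reshape_by_slices` is a hypothetical name, used only for illustration.

```python
def V_C(data, rows, cols):
    # The post's reshape: element (r, c) comes from flat index r * cols + c
    new_data = []
    for r in range(rows):
        row_data = []
        for c in range(cols):
            row_data.append(data[r * cols + c])
        new_data.append(row_data)
    return new_data


def reshape_by_slices(data, rows, cols):
    # Hypothetical equivalent: cut the flat list into consecutive row slices
    return [data[r * cols:(r + 1) * cols] for r in range(rows)]


flat = [1, 2, 3, 4, 5, 6]
print(V_C(flat, 2, 3))                # [[1, 2, 3], [4, 5, 6]]
print(reshape_by_slices(flat, 2, 3))  # [[1, 2, 3], [4, 5, 6]]
```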

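Now for the most important step. Remember the fully connected layers in Keras and PyTorch? Don't the people who built those wheels seem adorable? Can we be a little adorable too? Channeling Ultraman, yes we can, haha.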

```python
def Full_c(vector, matrix):
    res = []
    for row in matrix:
        tmp = 0
        for i in range(len(vector)):
            tmp += (row[i] * vector[i])
        res.append(tmp)
    return res
```
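Each output unit is the dot product of one weight row with the input, so a matrix with R rows produces R outputs. There is no bias term, matching the problem. Below is a sketch on a tiny hand-picked matrix, alongside a hypothetical `zip`-based variant that computes the same thing.

```python
def Full_c(vector, matrix):
    # The post's fully connected layer (no bias): one dot product per row
    res = []
    for row in matrix:
        tmp = 0
        for i in range(len(vector)):
            tmp += row[i] * vector[i]
        res.append(tmp)
    return res


def dense_no_bias(vector, matrix):
    # Hypothetical compact equivalent using zip
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


W = [[1, 0], [0, 1], [1, 1]]  # 3 output units, 2 inputs
x = [1, 2]
print(Full_c(x, W))         # [1, 2, 3]
print(dense_no_bias(x, W))  # [1, 2, 3]
```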

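At this point, did you notice a serious problem? I keep allocating new lists everywhere; looks like I'm toast. Let's write it anyway, Pikachu.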

Don't you love the `net` function we've written before?

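```python
def net(x):
    # Forward pass: dense -> ReLU -> dense -> softmax
    # (w1 and w2 are global weight matrices, read from input in the full code)
    a = Full_c(x, w1)
    r = Relu(a)
    z = Full_c(r, w2)
    y = softmax(z)
    return y
```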
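`net` reads `w1` and `w2` from globals, which makes it awkward to test in isolation. Below is a minimal sketch of the same pipeline with the weights passed in explicitly. The function name `net_with_weights` and the toy weights are made up for illustration and are not from the problem.

```python
import math


def full_c(vector, matrix):
    # Dot product of each weight row with the input (no bias)
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [v if v > 0 else 0 for v in vector]


def softmax(vector):
    max_v = max(vector)
    exps = [math.exp(v - max_v) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


def net_with_weights(x, w1, w2):
    # Same pipeline as net(), but the weights are parameters, not globals
    return softmax(full_c(relu(full_c(x, w1)), w2))


# Toy weights (made up): N = 2 inputs, M = 2 hidden units, 10 classes
w1 = [[1, 0], [0, 1]]
w2 = [[0, 0] for _ in range(10)]
w2[3] = [1, 1]  # only class 3 reacts to the hidden units
y = net_with_weights([1, 2], w1, w2)
print(len(y), y.index(max(y)))  # 10 3
```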

That completes all the forward-pass functions.

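Next, the problem statement tells us there are two cases to handle:

1. The prediction changes after the modification.
2. The prediction does not change after the modification.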

The first step is to find the maximum of the current prediction and the index of that maximum:

```python
max_val = max(y)
max_idx = y.index(max_val)
```
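A detail worth knowing: if two classes tie for the top probability, `list.index` returns the first position holding that value, so ties resolve toward the smaller class index. The sketch below uses made-up probabilities. It shows that `max` with a `key` function gives the same tie-breaking, because `max` returns the first maximal item it encounters.

```python
y = [0.1, 0.4, 0.4, 0.1]  # made-up probabilities with a tie

max_val = max(y)
max_idx = y.index(max_val)  # index() returns the FIRST position holding max_val

# An equivalent one-liner: max() also keeps the first maximal item it sees
alt_idx = max(range(len(y)), key=y.__getitem__)

print(max_idx, alt_idx)  # 1 1
```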

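Next comes the traversal. For each input position `n`, try every other value `ii` in [-128, 127], rerun the network, and update the records for both cases: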

```python
for n in range(N):
    x_new = copy.deepcopy(x)
    for ii in range(-128, 128):
        if x[n] == ii:
            continue  # skip the original value
        x_new[n] = ii
        y = net(x_new)
        new_max_val = max(y)
        new_max_idx = y.index(new_max_val)
        # Case 1: the top class changed; keep the most confident such change
        if new_max_idx != max_idx and new_max_val > new_max_val_record:
            new_max_val_record = new_max_val
            new_max_idx_record = (n + 1)
            sensitive_ii = ii
        # Case 2: track the change that lowers the original class's probability most
        new_val_of_old_max_idx = y[max_idx]
        if new_val_of_old_max_idx < val_of_old_max_idx:
            val_of_old_max_idx = new_val_of_old_max_idx
            no_change_sensitive_ii = ii
```
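The loop above is a brute-force search. It changes one input position to one candidate value, reruns the network, and keeps one running record per case. Below is a sketch of the same search packaged as a function that takes the predictor as a parameter, so it can be tried on toy models.

All names here are my own: `brute_force_attack`, `toy_net`, `steady_net`, and the `lo`/`hi` parameters. The sketch also differs from the original in two ways. It stores the position for the no-change case as well, which the original loop does not. It also reuses a single working copy of `x` and restores each position afterwards, instead of deep-copying `x` once per position.

```python
import math


def softmax(vector):
    max_v = max(vector)
    exps = [math.exp(v - max_v) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


def brute_force_attack(x, predict, lo=-128, hi=128):
    """Try every single-position change x[n] -> value, value in range(lo, hi).

    Returns (flip, weakest), each a (1-based position, value) pair:
      flip: the change whose new top class differs from the original and has
            the highest top probability, or None if no change flips it
      weakest: the change that drives the original class's probability lowest
    """
    y = predict(x)
    orig_idx = y.index(max(y))
    best_flip_prob, flip = -1.0, None
    lowest_orig_prob, weakest = 2.0, None
    x_new = list(x)  # single working copy, restored after each position
    for n in range(len(x)):
        for value in range(lo, hi):
            if value == x[n]:
                continue
            x_new[n] = value
            y = predict(x_new)
            top = max(y)
            top_idx = y.index(top)
            if top_idx != orig_idx and top > best_flip_prob:
                best_flip_prob, flip = top, (n + 1, value)
            if y[orig_idx] < lowest_orig_prob:
                lowest_orig_prob, weakest = y[orig_idx], (n + 1, value)
        x_new[n] = x[n]  # undo before moving on to the next position
    return flip, weakest


def toy_net(v):
    # Made-up 2-class model: the class scores are simply the two inputs
    return softmax([v[0], v[1]])


def steady_net(v):
    # Made-up 2-class model whose class 0 always wins for inputs in [-3, 3]
    return softmax([10, v[0]])


print(brute_force_attack([1, 0], toy_net, lo=-3, hi=4))  # ((1, -3), (1, -3))
print(brute_force_attack([0], steady_net, lo=-3, hi=4))  # (None, (1, 3))
```

If the post's `net` is passed as `predict` with the default range, `flip` plays the role of `(new_max_idx_record, sensitive_ii)`. The value in `weakest` matches `no_change_sensitive_ii`.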

Once the traversal is done, print the result.

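Here is the complete code for reference. I'd also humbly ask any of you, guys and gals alike, to send me a full-score solution by private message.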

```python
import math
import copy


def V_C(data, rows, cols):
    # Reshape a flat list into a rows x cols matrix (row-major order)
    new_data = []
    for r in range(rows):
        row_data = []
        for c in range(cols):
            row_data.append(data[r * cols + c])
        new_data.append(row_data)
    return new_data


def Full_c(vector, matrix):
    # Fully connected layer without bias: one dot product per matrix row
    res = []
    for row in matrix:
        tmp = 0
        for i in range(len(vector)):
            tmp += (row[i] * vector[i])
        res.append(tmp)
    return res


def Relu(vector):
    res = []
    for v in vector:
        res.append(v if v > 0 else 0)
    return res


def softmax(vector):
    # Subtract the maximum before exponentiating to avoid overflow
    res = []
    max_v = max(vector)
    for v in vector:
        res.append(math.exp(v - max_v))
    res_sum = sum(res)
    return [r / res_sum for r in res]


def net(x):
    # Forward pass; w1 and w2 are the global weight matrices read below
    a = Full_c(x, w1)
    r = Relu(a)
    z = Full_c(r, w2)
    y = softmax(z)
    return y


# Line 1: N (input length) and M (hidden size)
N, M = list(map(int, input().strip().split()))
# Line 2: the N integer inputs
x = list(map(int, input().strip().split()))
# Line 3: first-layer weights, M * N floats, reshaped to M rows x N cols
w1 = list(map(float, input().strip().split()))
w1 = V_C(w1, M, N)
# Line 4: second-layer weights, 10 * M floats, reshaped to 10 rows x M cols
w2 = list(map(float, input().strip().split()))
w2 = V_C(w2, 10, M)

y = net(x)
max_val = max(y)
max_idx = y.index(max_val)

# Records for case 1 (the predicted class changes)
new_max_val_record = -10
new_max_idx_record = -1
sensitive_ii = -129
# Records for case 2 (the predicted class never changes)
val_of_old_max_idx = 10
no_change_sensitive_ii = -129

for n in range(N):
    x_new = copy.deepcopy(x)
    for ii in range(-128, 128):  # every value in [-128, 127]
        if x[n] == ii:
            continue
        x_new[n] = ii
        y = net(x_new)
        new_max_val = max(y)
        new_max_idx = y.index(new_max_val)
        if new_max_idx != max_idx and new_max_val > new_max_val_record:
            new_max_val_record = new_max_val
            new_max_idx_record = (n + 1)
            sensitive_ii = ii
        new_val_of_old_max_idx = y[max_idx]
        if new_val_of_old_max_idx < val_of_old_max_idx:
            val_of_old_max_idx = new_val_of_old_max_idx
            no_change_sensitive_ii = ii

if not (new_max_idx_record == -1 and sensitive_ii == -129):
    print(new_max_idx_record, sensitive_ii)
else:
    print(max_idx, no_change_sensitive_ii)
```
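To test the full program without typing input by hand, the parsing can be pulled into a function that reads from any file-like object. Below is a minimal sketch, assuming the four-line input layout implied by the `input()` calls in the full code. The function `read_case` and the sample numbers are made up for illustration; they are not the contest's sample data.

```python
import io


def read_case(stream):
    """Parse one case laid out as: N M / N ints / M*N floats (W1) / 10*M floats (W2)."""
    n, m = map(int, stream.readline().split())
    x = list(map(int, stream.readline().split()))
    w1_flat = list(map(float, stream.readline().split()))
    w2_flat = list(map(float, stream.readline().split()))
    # Row-major reshape, equivalent to V_C(w1_flat, m, n) and V_C(w2_flat, 10, m)
    w1 = [w1_flat[r * n:(r + 1) * n] for r in range(m)]
    w2 = [w2_flat[r * m:(r + 1) * m] for r in range(10)]
    return n, m, x, w1, w2


# Made-up case: N = 2, M = 1
sample = io.StringIO(
    "2 1\n"
    "3 -4\n"
    "0.5 0.25\n"
    "1 2 3 4 5 6 7 8 9 10\n"
)
n, m, x, w1, w2 = read_case(sample)
print(n, m, x, w1, len(w2))  # 2 1 [3, -4] [[0.5, 0.25]] 10
```

In the real program, the same function could be called as `read_case(sys.stdin)`.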