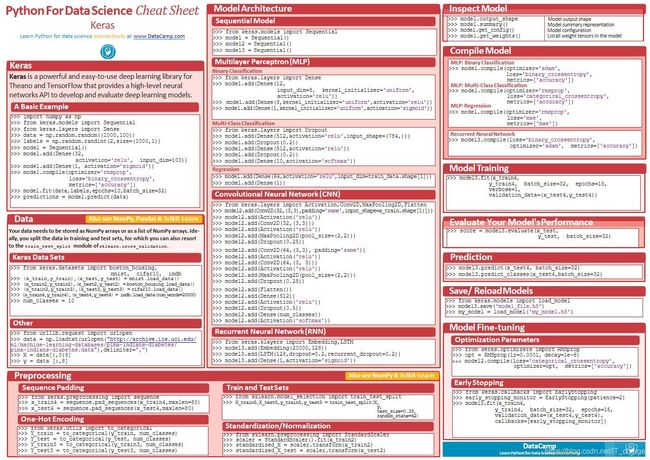

Keras Cheat Sheet (Python For Data Science Cheat Sheet Keras)

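- 1 Keras
  - 1.1 A basic example
- 2 Data
  - 2.1 Keras data sets
- 3 Model architecture
  - 3.1 Sequential model
  - 3.2 Multilayer perceptron (MLP)
    - 3.2.1 Binary classification
    - 3.2.2 Multi-class classification
    - 3.2.3 Regression
  - 3.3 Convolutional neural network (CNN)
  - 3.4 Recurrent neural network (RNN)
- 4 Preprocessing
  - 4.1 Sequence padding
  - 4.2 One-hot encoding
  - 4.3 Train/test split
  - 4.4 Standardization/normalization
- 5 Inspecting the model
  - 5.1 Model output shape
  - 5.2 Model summary
  - 5.3 Model configuration
  - 5.4 Model weights
- 6 Compile the model
  - 6.1 Multilayer perceptron
    - 6.1.1 Binary classification
    - 6.1.2 Multi-class classification
    - 6.1.3 Regression
  - 6.2 Recurrent neural network
- 7 Train the model
- 8 Evaluate the model
- 9 Prediction
- 10 Save/load the model
- 11 Model fine-tuning
  - 11.1 Optimization parameters
  - 11.2 Early stopping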

1 Keras

1.1 A basic example

import numpy as np

from keras.models import Sequential

from keras.layers import Dense

# 1. Load the data set

data = np.random.random((1000, 100)) # create the samples

labels = np.random.randint(2, size=(1000, 1)) # create labels with two classes, 0 and 1

# 2. Build the model

model = Sequential() # create a sequential (layer-by-layer) model

# Add a fully connected layer with 32 units; each input is a 100-dimensional vector (the batch dimension is omitted)

model.add(Dense(32, activation='relu', input_dim=100))

model.add(Dense(1, activation='sigmoid')) # add a fully connected layer with a single unit

# 3. Compile the model

model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

# 4. Train the model

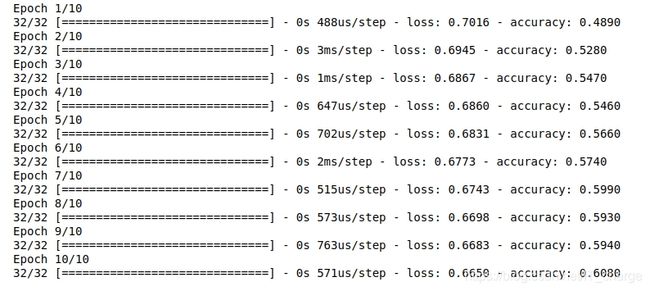

model.fit(data, labels, epochs=10, batch_size=32)

# 5. Make predictions

predictions = model.predict(data)
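To make the shapes in the example above concrete, here is a NumPy-only sketch of the forward pass that the two Dense layers compute. The weights `W1`, `b1`, `W2`, `b2` are hypothetical random values, not anything Keras would learn. The sketch only shows that a batch of shape (1000, 100) is mapped to predictions of shape (1000, 1).

```python
import numpy as np

rng = np.random.default_rng(0)

# Same shapes as the example: 1000 samples with 100 features each.
data = rng.random((1000, 100))

# Hypothetical small random parameters (assumption: Keras would learn these).
W1, b1 = rng.normal(scale=0.1, size=(100, 32)), np.zeros(32)  # Dense(32, input_dim=100)
W2, b2 = rng.normal(scale=0.1, size=(32, 1)), np.zeros(1)     # Dense(1)


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


hidden = relu(data @ W1 + b1)            # shape (1000, 32)
predictions = sigmoid(hidden @ W2 + b2)  # shape (1000, 1)

print(hidden.shape, predictions.shape)
```

The batch dimension (1000) never appears in the layer definitions, which is why `input_dim=100` is enough to describe the input.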

2 Data

2.1 Keras data sets

from keras.datasets import boston_housing, mnist, cifar10, imdb

(x_train1, y_train1), (x_test1, y_test1) = mnist.load_data()

(x_train2, y_train2), (x_test2, y_test2) = boston_housing.load_data()

(x_train3, y_train3), (x_test3, y_test3) = cifar10.load_data()

(x_train4, y_train4), (x_test4, y_test4) = imdb.load_data(num_words=20000)

num_classes = 10

3 Model architecture

3.1 Sequential model

from keras.models import Sequential

model = Sequential()

model2 = Sequential()

model3 = Sequential()

3.2 Multilayer perceptron (MLP)

3.2.1 Binary classification

from keras.layers import Dense

model.add(Dense(12, input_dim=8, kernel_initializer='uniform', activation='relu'))

model.add(Dense(8, kernel_initializer='uniform', activation='relu'))

model.add(Dense(1, kernel_initializer='uniform', activation='sigmoid'))

3.2.2 Multi-class classification

from keras.layers import Dense, Dropout

model.add(Dense(512, activation='relu', input_shape=(784,)))

model.add(Dropout(0.2))

model.add(Dense(512, activation='relu'))

model.add(Dropout(0.2))

model.add(Dense(10, activation='softmax'))

3.2.3 Regression

from keras.layers import Dense

# x_train2 is the Boston housing training data loaded in section 2.1

model.add(Dense(64, activation='relu', input_dim=x_train2.shape[1]))

model.add(Dense(1))

3.3 Convolutional neural network (CNN)

from keras.layers import Activation, Conv2D, MaxPooling2D, Flatten, Dense, Dropout

# model2 is the second Sequential model from section 3.1; x_train3 holds the CIFAR-10 images from section 2.1

model2.add(Conv2D(32, (3, 3), padding='same', input_shape=x_train3.shape[1:]))

model2.add(Activation('relu'))

model2.add(Conv2D(32, (3, 3)))

model2.add(Activation('relu'))

model2.add(MaxPooling2D(pool_size=(2, 2)))

model2.add(Dropout(0.25))

model2.add(Conv2D(64, (3, 3), padding='same'))

model2.add(Activation('relu'))

model2.add(Conv2D(64, (3, 3)))

model2.add(Activation('relu'))

model2.add(MaxPooling2D(pool_size=(2, 2)))

model2.add(Dropout(0.25))

model2.add(Flatten())

model2.add(Dense(512))

model2.add(Activation('relu'))

model2.add(Dropout(0.5))

model2.add(Dense(num_classes))

model2.add(Activation('softmax'))
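The layer stack above changes the feature-map size at each step, and tracking this by hand can be error-prone. The following is a small sketch of the shape arithmetic, assuming 32x32x3 inputs (the CIFAR-10 image size), stride-1 convolutions, and non-overlapping 2x2 pooling. The helper names `conv2d_shape` and `maxpool_shape` are made up for this illustration.

```python
def conv2d_shape(h, w, filters, kernel=3, padding="valid"):
    """Output (height, width, channels) of a stride-1 convolution."""
    if padding == "same":
        return h, w, filters
    return h - kernel + 1, w - kernel + 1, filters


def maxpool_shape(h, w, c, pool=2):
    """Output shape of non-overlapping max pooling (floor division)."""
    return h // pool, w // pool, c


shape = (32, 32, 3)  # assumed CIFAR-10 input shape
shape = conv2d_shape(shape[0], shape[1], 32, padding="same")  # (32, 32, 32)
shape = conv2d_shape(shape[0], shape[1], 32)                  # (30, 30, 32)
shape = maxpool_shape(*shape)                                 # (15, 15, 32)
shape = conv2d_shape(shape[0], shape[1], 64, padding="same")  # (15, 15, 64)
shape = conv2d_shape(shape[0], shape[1], 64)                  # (13, 13, 64)
shape = maxpool_shape(*shape)                                 # (6, 6, 64)

flattened = shape[0] * shape[1] * shape[2]  # input size of Dense(512): 2304
print(shape, flattened)
```

Dropout and Activation layers leave the shape unchanged, so they are omitted from the walk-through.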

3.4 Recurrent neural network (RNN)

from keras.layers import Embedding, LSTM, Dense

# model3 is the third Sequential model from section 3.1

model3.add(Embedding(20000, 128))

model3.add(LSTM(128, dropout=0.2, recurrent_dropout=0.2))

model3.add(Dense(1, activation='sigmoid'))
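An Embedding layer is essentially a lookup table from integer token ids to dense vectors. Here is a NumPy sketch of that lookup using the sizes from the snippet above (20000 tokens, 128 dimensions). The random `table`, the batch size of 4, and the sequence length of 80 are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab_size, embed_dim, maxlen = 20000, 128, 80

# Hypothetical embedding matrix; in Keras these values are trained.
table = rng.normal(size=(vocab_size, embed_dim)).astype("float32")

# A made-up batch of 4 padded sequences of token ids.
token_ids = rng.integers(0, vocab_size, size=(4, maxlen))

# Indexing the table with the id array performs the lookup.
embedded = table[token_ids]  # shape (4, 80, 128)

print(embedded.shape)
```

The LSTM layer then consumes this (batch, time steps, features) tensor and, by default, returns only its final state of shape (batch, 128).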

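4 Preprocessing

4.1 Sequence padding

Pad each sequence to a fixed length (maxlen); the default padding value is 0.0.

from keras.preprocessing import sequence

# Overwrite the IMDB arrays so that later calls using x_train4 / x_test4 receive the padded data

x_train4 = sequence.pad_sequences(x_train4, maxlen=80)

x_test4 = sequence.pad_sequences(x_test4, maxlen=80)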
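To illustrate what padding to `maxlen` does, here is a NumPy sketch of the default behaviour as I understand it: short sequences are padded at the front, and long sequences keep only their last `maxlen` items. The function `pad_pre` is a hypothetical stand-in, not the Keras implementation.

```python
import numpy as np


def pad_pre(sequences, maxlen, value=0):
    """Left-pad (and left-truncate) integer sequences to length maxlen."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for i, seq in enumerate(sequences):
        if len(seq) == 0:
            continue  # an empty sequence stays all padding
        trunc = seq[-maxlen:]          # keep the last maxlen items
        out[i, -len(trunc):] = trunc   # right-align, padding on the left
    return out


padded = pad_pre([[1, 2, 3], [4, 5, 6, 7, 8, 9]], maxlen=5)
print(padded)
```

The first row becomes `[0, 0, 1, 2, 3]` and the second `[5, 6, 7, 8, 9]`, so every row has the same length and the batch can be stored as one array.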

4.2 One-hot encoding

from keras.utils import to_categorical

Y_train = to_categorical(y_train, num_classes)

Y_test = to_categorical(y_test, num_classes)

Y_train3 = to_categorical(y_train3, num_classes)

Y_test3 = to_categorical(y_test3, num_classes)
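One-hot encoding turns each integer class label into a vector with a single 1 at the label's index. The following NumPy sketch shows the idea; `one_hot` is a hypothetical helper written for this illustration, not the Keras function itself.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Encode integer labels as rows with a single 1.0 per row."""
    labels = np.asarray(labels, dtype="int64").ravel()  # also flattens (n, 1) label arrays
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


Y = one_hot([0, 2, 1], num_classes=3)
print(Y)
```

This is the target format that a softmax output layer combined with the `categorical_crossentropy` loss expects.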

4.3 Train/test split

from sklearn.model_selection import train_test_split

# X and y stand for your own feature matrix and label vector

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)
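Conceptually, a random train/test split shuffles the row indices once and cuts them into two groups, so each feature row stays paired with its label. Here is a NumPy sketch of that idea. `shuffle_split` is a hypothetical helper, and it will not reproduce the exact rows scikit-learn selects for a given `random_state`.

```python
import numpy as np


def shuffle_split(X, y, test_size=0.33, seed=42):
    """Shuffle row indices once, then cut them into test and train parts."""
    X, y = np.asarray(X), np.asarray(y)
    n_test = int(np.ceil(test_size * len(X)))
    order = np.random.default_rng(seed).permutation(len(X))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


# Toy data: row i of X is [2i, 2i + 1] and its label is i.
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

X_train, X_test, y_train, y_test = shuffle_split(X, y)
print(X_train.shape, X_test.shape)
```

With 10 samples and `test_size=0.33`, this sketch puts 4 rows in the test set and 6 in the training set.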

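4.4 Standardization/normalization

from sklearn.preprocessing import StandardScaler

# Fit the scaler on the training data only (here the Boston housing features from section 2.1), then apply it to both sets

scaler = StandardScaler().fit(x_train2)

standardized_X = scaler.transform(x_train2)

standardized_X_test = scaler.transform(x_test2)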
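Standardization subtracts the per-feature mean and divides by the per-feature standard deviation, both estimated on the training set and then reused for the test set. Here is a NumPy sketch with made-up toy arrays (`train` and `test` are illustrative, not data from this sheet).

```python
import numpy as np

train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
test = np.array([[2.0, 40.0]])

# Statistics come from the training data only.
mean = train.mean(axis=0)
std = train.std(axis=0)   # population standard deviation (ddof=0)
std[std == 0] = 1.0       # guard against division by zero for constant features

standardized_train = (train - mean) / std
standardized_test = (test - mean) / std   # reuse the training statistics

print(standardized_train)
print(standardized_test)
```

Reusing the training mean and standard deviation on the test set avoids leaking test-set information into preprocessing.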

5 Inspecting the model

5.1 Model output shape

model.output_shape

5.2 Model summary

model.summary()

5.3 Model configuration

model.get_config()

5.4 Model weights

model.get_weights()
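`model.summary()` lists a parameter count per layer. For Dense layers that count can be checked by hand: inputs times units for the weights, plus one bias per unit. Here is a sketch for the binary-classification MLP from section 3.2.1 (8 inputs, then 12, 8 and 1 units); `dense_param_count` is a hypothetical helper.

```python
def dense_param_count(layer_sizes):
    """Parameters per Dense layer: weights (n_in * n_out) plus biases (n_out)."""
    return [n_in * n_out + n_out
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]


per_layer = dense_param_count([8, 12, 8, 1])
total = sum(per_layer)
print(per_layer, total)
```

For this network the sketch yields 108, 104 and 9 parameters per layer, 221 in total.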

6 Compile the model

6.1 Multilayer perceptron

6.1.1 Binary classification

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

6.1.2 Multi-class classification

model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

6.1.3 Regression

model.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])

6.2 Recurrent neural network

model3.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
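The loss and metric names passed to `compile` correspond to simple formulas. Here is a NumPy sketch of the four used above, written from the textbook definitions. The clipping constant `eps` is an assumption to avoid `log(0)`, and the functions are illustrations rather than the Keras implementations.

```python
import numpy as np


def binary_crossentropy(y_true, p, eps=1e-7):
    p = np.clip(p, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))


def categorical_crossentropy(y_onehot, p, eps=1e-7):
    p = np.clip(p, eps, 1 - eps)
    return float(np.mean(-np.sum(y_onehot * np.log(p), axis=1)))


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


def mae(y_true, y_pred):
    return float(np.mean(np.abs(y_true - y_pred)))


bce = binary_crossentropy(np.array([1.0, 0.0]), np.array([0.9, 0.1]))
cce = categorical_crossentropy(np.array([[0.0, 1.0, 0.0]]),
                               np.array([[0.2, 0.7, 0.1]]))
reg_mse = mse(np.array([1.0, 2.0]), np.array([2.0, 4.0]))
reg_mae = mae(np.array([1.0, 2.0]), np.array([2.0, 4.0]))
print(bce, cce, reg_mse, reg_mae)
```

Binary cross-entropy pairs with a single sigmoid output, categorical cross-entropy with a softmax output and one-hot targets, and MSE with an unactivated regression output.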

7 Train the model

model3.fit(x_train4, y_train4, batch_size=32, epochs=15, verbose=1, validation_data=(x_test4, y_test4))

8 Evaluate the model

score = model3.evaluate(x_test4, y_test4, batch_size=32)

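9 Prediction

model3.predict(x_test4, batch_size=32)

# predict_classes() was removed from recent Keras releases; threshold the sigmoid output instead

(model3.predict(x_test4, batch_size=32) > 0.5).astype('int32')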
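`predict` returns probabilities, so class labels have to be derived from them. Here is a NumPy sketch of the two usual conversions, using made-up probability arrays in place of real model output: a 0.5 threshold for a single sigmoid unit, and an argmax over the class axis for a softmax layer.

```python
import numpy as np

# Hypothetical output of a model ending in Dense(1, activation='sigmoid').
sigmoid_out = np.array([[0.12], [0.73], [0.50]])
binary_labels = (sigmoid_out > 0.5).astype("int32").ravel()

# Hypothetical output of a model ending in a 3-class softmax layer.
softmax_out = np.array([[0.1, 0.7, 0.2],
                        [0.6, 0.3, 0.1]])
class_labels = softmax_out.argmax(axis=-1)

print(binary_labels, class_labels)
```

Note that a probability of exactly 0.5 falls into class 0 with the strict `>` comparison used here; the threshold itself is a choice, not a fixed rule.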

10 Save/load the model

from keras.models import load_model

model.save('model_file.h5')

# Load from the same file name that was used when saving

my_model = load_model('model_file.h5')

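11 Model fine-tuning

11.1 Optimization parameters

from keras.optimizers import RMSprop

# Older Keras releases spell the first argument `lr`; newer releases no longer support `decay` (use a learning-rate schedule there)

opt = RMSprop(learning_rate=0.0001, decay=1e-6)

model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['accuracy'])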
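For intuition about what the learning rate controls, here is a NumPy sketch of the textbook RMSprop update: each gradient is divided by a running root-mean-square of recent gradients before the step is taken. The values `rho=0.9` and `eps=1e-7` and the toy loss are assumptions, and details such as where the epsilon is added can differ between Keras versions.

```python
import numpy as np


def rmsprop_step(w, grad, avg_sq, learning_rate=0.0001, rho=0.9, eps=1e-7):
    """One textbook RMSprop update; returns the new weights and running average."""
    avg_sq = rho * avg_sq + (1.0 - rho) * grad ** 2
    w = w - learning_rate * grad / (np.sqrt(avg_sq) + eps)
    return w, avg_sq


w = np.array([1.0, -2.0])
avg_sq = np.zeros_like(w)

for _ in range(3):
    grad = 2.0 * w  # gradient of the toy loss sum(w ** 2)
    w, avg_sq = rmsprop_step(w, grad, avg_sq)

print(w)
```

Because of the normalization, the step size depends mostly on the learning rate and only weakly on the raw gradient magnitude, which is why a small value such as 0.0001 moves the weights slowly.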

11.2 Early stopping

from keras.callbacks import EarlyStopping

# Stop training once the monitored validation loss has not improved for 2 consecutive epochs

early_stopping_monitor = EarlyStopping(patience=2)

model3.fit(x_train4, y_train4, batch_size=32, epochs=15, validation_data=(x_test4, y_test4), callbacks=[early_stopping_monitor])
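The patience logic can be sketched in plain Python: keep the best validation loss seen so far and stop once `patience` epochs in a row fail to improve on it. The function `early_stopping_epoch` and the loss values are made up for illustration; the real callback has further options (for example a minimum improvement threshold) that this sketch ignores.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0   # improvement: reset the counter
        else:
            wait += 1              # no improvement this epoch
            if wait >= patience:
                return epoch
    return None


stop = early_stopping_epoch([0.90, 0.80, 0.85, 0.82, 0.70], patience=2)
print(stop)
```

In this made-up run, training stops at epoch index 3 (the fourth epoch), so the later improvement to 0.70 is never reached; a larger patience trades extra training time for a lower risk of stopping too early.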