行人属性识别,PA100K

1. 前景介绍

最近在调研行人属性识别,有不少研究行人属性识别的论文,阅读多篇论文之后,自己独立写了一个工程,专门做行人属性识别。

工程里面的代码主要参考两篇论文,ResNest对应的论文和Bag of Tricks for Image Classification对应的论文。

主干网络用的是ResNet的最强改进版本:ResNest50,论文详解请看我之前的博客。

训练技巧主要运用了《Bag of Tricks for Image Classification with Convolutional Neural Networks》里面的训练技巧,详细讲解请参考我的专栏:模型训练技巧。

训练数据应用的是行人属性数据集PA100K,关于PA100K的介绍请参考我的博客。

2. 训练部分代码讲解

一共训练10个属性,分别是:性别、年龄、朝向、是否戴帽子、是否戴眼镜、是否有手提包、是否有肩膀包、是否有背包、上衣类型、下衣类型。

for epoch in range(1, args.e + 1):

if epoch > args.warm:

train_scheduler.step(epoch)

#training procedure

net.train()

for batch_index, (images, labels) in enumerate(train_dataloader):

if epoch <= args.warm:

warmup_scheduler.step()

images = images.cuda()

labels = labels.cuda()

optimizer.zero_grad()

predicts = net(images)

loss_gender = cross_entropy(predicts[0], labels[:, 0].long())

loss_age = cross_entropy(predicts[1], labels[:, 1].long())

loss_orientation = cross_entropy(predicts[2], labels[:, 2].long())

loss_hat = cross_entropy(predicts[3], labels[:, 3].long())

loss_glasses = cross_entropy(predicts[4], labels[:, 4].long())

loss_handBag = cross_entropy(predicts[5], labels[:, 5].long())

loss_shoulderBag = cross_entropy(predicts[6], labels[:, 6].long())

loss_backBag = cross_entropy(predicts[7], labels[:, 7].long())

loss_upClothing = cross_entropy(predicts[8], labels[:, 8].long())

loss_downClothing= cross_entropy(predicts[9], labels[:, 9].long())

loss = loss_gender + loss_age + loss_orientation + loss_hat + loss_glasses + loss_handBag + loss_shoulderBag + loss_backBag + loss_upClothing + loss_downClothing

loss.backward()

optimizer.step()

n_iter = (epoch - 1) * len(train_dataloader) + batch_index + 1

if batch_index % 10 == 0:

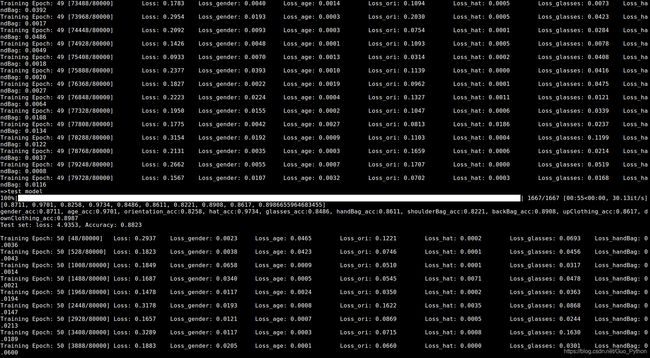

print('Training Epoch: {epoch} [{trained_samples}/{total_samples}]\tLoss: {:0.4f}\tLoss_gender: {:0.4f}\tLoss_age: {:0.4f}\tLoss_ori: {:0.4f}\tLoss_hat: {:0.4f}\tLoss_glasses: {:0.4f}\tLoss_handBag: {:0.4f}\t'.format(

loss.item(),

loss_gender.item(),

loss_age.item(),

loss_orientation.item(),

loss_hat.item(),

loss_glasses.item(),

loss_handBag.item(),

epoch=epoch,

trained_samples=batch_index * args.b + len(images),

total_samples=len(train_dataloader.dataset),

))

#visualization

visualize_lastlayer(writer, net, n_iter)

visualize_train_loss(writer, loss.item(), n_iter)

visualize_learning_rate(writer, optimizer.param_groups[0]['lr'], epoch)

visualize_param_hist(writer, net, epoch)

net.eval()

total_loss = 0

correct = np.zeros(10)

ignore = np.zeros(10)

print("=>test model")

for images, labels in tqdm(test_dataloader):

images = images.cuda()

labels = labels.cuda()

predicts = net(images)

for index in range(10):

_, preds = predicts[index].max(1)

ignore[index] += int((labels[:, index]==-1).sum())

correct[index] += preds.eq(labels[:, index]).sum().float()

loss = cross_entropy(predicts[index], labels[:, index].long())

total_loss += loss.item()

test_loss = total_loss / len(test_dataloader)

all_list = np.array([len(test_dataloader.dataset) for i in range(10)])-ignore

acc_list = correct / all_list

print(acc_list.tolist())训练过程如下:

3. 测试代码讲解

"""

author:guopei

"""

import os

import cv2

import torch

from torch.nn import DataParallel

import numpy as np

from PIL import Image,ImageDraw,ImageFont

import transforms

from models import resnest50

class Person_Attribute(object):

def __init__(self, weights="resnest50.pth"):

self.device = torch.device("cuda")

self.net = resnest50().to(self.device)

self.net = DataParallel(self.net)

self.weights = weights

self.net.load_state_dict(torch.load(self.weights))

TRAIN_MEAN = [0.485, 0.499, 0.432]

TRAIN_STD = [0.232, 0.227, 0.266]

self.transforms = transforms.Compose([

transforms.ToCVImage(),

transforms.Resize((128,256)),

transforms.ToTensor(),

transforms.Normalize(TRAIN_MEAN, TRAIN_STD)

])

def recog(self, img_path):

img = cv2.imread(img_path)

img = self.transforms(img)

img = img.unsqueeze(0)

with torch.no_grad():

self.net.eval()

img_input = img.to(self.device)

outputs = self.net(img_input)

results = []

for output in outputs:

output = torch.softmax(output, 1)

output = np.array(output[0].cpu())

label = np.argmax(output)

score = output[label]

results.append((label, score))

return results

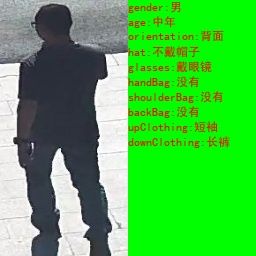

name_dict = {

"gender":['男', '女'],

"age":["老年", "中年", "少年"],

"orientation":['正面', '侧面', '背面'],

"hat":["不戴帽子", "戴帽子"],

"glasses":["不戴眼镜", "戴眼镜"],

"handBag":["没有", "有"],

"shoulderBag":["没有", "有"],

"backBag":["没有", "有"],

"upClothing":["短袖", "长袖"],

"downClothing":["长裤", "短裤", "裙子"]

}

if __name__ == "__main__":

atts = ["gender","age", "orientation", "hat", "glasses",

"handBag", "shoulderBag", "backBag", "upClothing", "downClothing"]

person_attribute = Person_Attribute("./resnest50-50-regular.pth")

img_path = "test.jpg"

results = person_attribute.recog(img_path)

print(results)

img = cv2.imread(img_path)

img = cv2.resize(img, (128,256))

img1 = img*0 +255

img1[:,:,0] *= 255

img1[:,:,2] *= 255

line = ""

labels = [i[0] for i in results]

for att, label in zip(atts, labels):

if label == -1:

continue

line += "%s:%s\n" % (att, name_dict[att][label])

img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2RGB)

img1 = Image.fromarray(img1)

draw = ImageDraw.Draw(img1)

font = ImageFont.truetype("simhei.ttf", 12, encoding="utf-8")

draw.text((0, 0), line, (255, 0, 0), font=font)

img1 = cv2.cvtColor(np.array(img1), cv2.COLOR_RGB2BGR)

img_rst = np.hstack([img, img1])

cv2.imwrite(os.path.basename(img_path), img_rst)测试效果如下:

注:本文所用的训练数据都是公开数据集,如果你需要训练好的模型或者训练数据或者技术交流,请留言或者微信。近期会将代码开源。