Big Data Hands-On No.15: Highly Available Elasticsearch Cluster Deployment (with Elasticsearch head + Kibana)

Chapter 1 Introduction to ELK

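ELK (Elasticsearch, Logstash, Kibana) provides a complete log analysis solution. Elasticsearch is an open-source distributed search engine, Logstash is an open-source log collection tool, and Kibana is an open-source data analysis and visualization tool. Combined, they work like this: Logstash collects system logs and ships them to Elasticsearch, and Kibana uses Elasticsearch's efficient distributed search to visualize the data in a web UI.

For small and medium-sized systems, ELK is a quick way to build a log analysis system. This article covers the highly available deployment of Elasticsearch and Kibana.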

Chapter 2 Cluster Planning

| | elasticsearch140 | elasticsearch141 | elasticsearch142 |
|---|---|---|---|
| elasticsearch | ✔ | ✔ | ✔ |
| elasticsearch head | ✔ | | |
| kibana | ✔ | | |

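I installed Elasticsearch on 3 machines, and any one of them can be elected master. In production, master nodes and data nodes are usually deployed separately, and the number of master-eligible nodes is usually odd.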
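The odd count follows from majority voting: a master can be elected only while a strict majority of the master-eligible nodes can reach each other. The short Python sketch below computes that majority and how many node failures it tolerates; the function names are illustrative and not part of any Elasticsearch API.

```python
def quorum(master_eligible: int) -> int:
    """Smallest strict majority of the master-eligible nodes."""
    return master_eligible // 2 + 1


def tolerated_failures(master_eligible: int) -> int:
    """Master-eligible nodes that can fail while a majority is still reachable."""
    return master_eligible - quorum(master_eligible)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} nodes: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Under this arithmetic a fourth master-eligible node raises the quorum to 3 without tolerating any extra failure, which is why odd counts are preferred. For 3 nodes the quorum is 2, the same value as discovery.zen.minimum_master_nodes in the configuration in 4.3.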

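elasticsearch head is a client tool: the Elasticsearch node it connects to is chosen in its web UI, so head itself needs no high-availability setup as long as Elasticsearch is highly available.

Kibana is likewise a graphical analysis tool. The data still lives in the Elasticsearch cluster, so once Elasticsearch is highly available, Kibana only needs to read from it for display.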

Chapter 3 Downloads

3.1 Node.js

https://nodejs.org/dist/

Note: Elasticsearch head is built on Node.js, so Node.js must be installed first.

3.2 Elasticsearch

https://www.elastic.co/cn/downloads/elasticsearch

3.3 Elasticsearch head

https://github.com/mobz/elasticsearch-head

3.4 Kibana

https://www.elastic.co/cn/downloads/kibana

Note: the Kibana and Elasticsearch versions must be identical.

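Chapter 4 Installing Elasticsearch

4.1 Extract

$ tar -zxvf elasticsearch-7.8.0-linux-x86_64.tar.gz -C /opt/module/

4.2 Create the data and log directories

Create the data and logs directories under the Elasticsearch installation directory: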

$ mkdir data

$ mkdir logs

4.3 Configure Elasticsearch

Edit config/elasticsearch.yml:

# cluster: cluster name

cluster.name: zmboo_es_cluster

# node: name of this node; must be unique within the cluster

node.name: es-140

#paths

path.data: /opt/module/elasticsearch-7.8.0/data

path.logs: /opt/module/elasticsearch-7.8.0/logs

#memory

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

# network: host IP and HTTP port

network.host: 192.168.207.140

http.port: 9200

#discovery

# master-eligible nodes that vote in the very first master election when the cluster is bootstrapped; the names come from node.name above

cluster.initial_master_nodes: ["es-140", "es-141", "es-142"]

# [old, 6.x] host names of the cluster members, used for discovery

#discovery.zen.ping.unicast.hosts: ["elasticsearch140", "elasticsearch141", "elasticsearch142"]

# [new, 7.x] host names of the cluster members, used for discovery; the default transport port is 9300

discovery.seed_hosts: ["elasticsearch140", "elasticsearch141", "elasticsearch142"]

# minimum number of master-eligible nodes needed to elect a master (a 6.x setting; 7.x accepts but ignores it)

discovery.zen.minimum_master_nodes: 2

# CORS, so that browser-based tools such as elasticsearch head can call the REST API

http.cors.enabled: true

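http.cors.allow-origin: "*"

Cluster configuration in Elasticsearch is simple: give every machine the same cluster.name. Nodes find each other through discovery.seed_hosts and join only a cluster whose cluster.name matches their own.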

The logs and data directories can be created manually, or they are created automatically at startup.
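Because the three elasticsearch.yml files differ only in node.name and network.host, they can also be rendered from one template instead of editing each copy by hand. A minimal Python sketch, assuming es-142 lives at 192.168.207.142 (only the .140 and .141 addresses appear in this article); the script just prints each node's file so it can be pasted or redirected into place.

```python
# Settings shared by all three nodes, copied from the es-140 file above.
COMMON = {
    "cluster.name": "zmboo_es_cluster",
    "path.data": "/opt/module/elasticsearch-7.8.0/data",
    "path.logs": "/opt/module/elasticsearch-7.8.0/logs",
    "bootstrap.memory_lock": "false",
    "bootstrap.system_call_filter": "false",
    "http.port": "9200",
    "cluster.initial_master_nodes": '["es-140", "es-141", "es-142"]',
    "discovery.seed_hosts": '["elasticsearch140", "elasticsearch141", "elasticsearch142"]',
    "discovery.zen.minimum_master_nodes": "2",
    "http.cors.enabled": "true",
    "http.cors.allow-origin": '"*"',
}

# node.name -> network.host; the es-142 address is an assumption.
NODES = {
    "es-140": "192.168.207.140",
    "es-141": "192.168.207.141",
    "es-142": "192.168.207.142",
}


def render(node_name: str, host_ip: str) -> str:
    """Return the elasticsearch.yml contents for one node as 'key: value' lines."""
    settings = {**COMMON, "node.name": node_name, "network.host": host_ip}
    return "".join(f"{key}: {value}\n" for key, value in settings.items())


if __name__ == "__main__":
    for name, ip in NODES.items():
        print(f"# ---- {name} ----")
        print(render(name, ip))
```

Keeping the shared settings in one place guarantees that cluster.name is identical on every node, which is the one value that must never differ.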

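4.4 Sync files

Sync the files to the other machines, then adjust node.name and network.host on each (for the sync script, see my earlier article):

$ xsync elasticsearch-7.8.0/

On each machine, edit node.name and network.host in config/elasticsearch.yml. Note: node.name must be unique on every machine, while cluster.name must be the same everywhere.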

# node: name of this node; must be unique within the cluster

node.name: es-141

# network: host IP

network.host: 192.168.207.141

4.5 Start/Stop

4.5.1 Start

$ bin/elasticsearch

4.5.2 Stop

$ kill [process ID]

4.6 Common Elasticsearch installation problems

4.6.1 Error 1

ERROR: bootstrap checks failed

max file descriptors [4096] for elasticsearch process likely too low, increase to at least [65536]

max number of threads [1024] for user [zihao] likely too low, increase to at least [2048]

Edit limits.conf:

$ su root

# vi /etc/security/limits.conf

Add the following:

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

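* hard nproc 4096

These limits apply to new login sessions, so log in again as the user that runs Elasticsearch before restarting it.

4.6.2 Error 2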

max number of threads [1024] for user [zihao] is too low, increase to at least [4096]

Edit 90-nproc.conf:

$ su root

# vi /etc/security/limits.d/90-nproc.conf

Change:

* soft nproc 1024

to:

* soft nproc 4096

4.6.3 Error 3

max virtual memory areas vm.max_map_count [65530] likely too low, increase to at least [262144]

Edit sysctl.conf:

$ su root

# vi /etc/sysctl.conf

Add the following setting:

vm.max_map_count=655360

Then apply it:

# sysctl -p

Note: if Elasticsearch was already running before the change, restart it.
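The three limits behind errors 1 to 3 can be checked in one pass before starting Elasticsearch. A minimal Python sketch for Linux, meant to be run as the user that starts Elasticsearch; the thresholds come from the error messages above (4096 threads from error 2), and the function names are illustrative.

```python
import resource
from pathlib import Path

# Minimums taken from the bootstrap-check messages above.
MIN_NOFILE = 65536
MIN_NPROC = 4096
MIN_MAX_MAP_COUNT = 262144


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the kernel's current vm.max_map_count value."""
    return int(Path(path).read_text().strip())


def too_low(value: int, minimum: int) -> bool:
    # RLIM_INFINITY means "unlimited", which always satisfies the minimum.
    return value != resource.RLIM_INFINITY and value < minimum


def preflight(max_map_path: str = "/proc/sys/vm/max_map_count") -> list:
    """Return a list of problems; an empty list means all three checks pass."""
    problems = []
    soft_nofile, _ = resource.getrlimit(resource.RLIMIT_NOFILE)
    soft_nproc, _ = resource.getrlimit(resource.RLIMIT_NPROC)
    if too_low(soft_nofile, MIN_NOFILE):
        problems.append(f"nofile soft limit {soft_nofile} < {MIN_NOFILE}")
    if too_low(soft_nproc, MIN_NPROC):
        problems.append(f"nproc soft limit {soft_nproc} < {MIN_NPROC}")
    max_map = read_max_map_count(max_map_path)
    if max_map < MIN_MAX_MAP_COUNT:
        problems.append(f"vm.max_map_count {max_map} < {MIN_MAX_MAP_COUNT}")
    return problems
```

Calling preflight() in a fresh login session should return an empty list once limits.conf, 90-nproc.conf and sysctl.conf are in place.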

4.6.4 Error 4

2021-01-17T05:43:54,481[o.e.c.c.JoinHelper ] [es-140] failed to join {es-140}{LinCCy_QT3WE6GA8y72exA}{aY3uc3FmSQ6oL1ELsV-YGQ}{192.168.207.140}{192.168.207.140:9300}{dilmrt}{ml.machine_memory=491331584, xpack.installed=true, transform.node=true, ml.max_open_jobs=20} with JoinRequest{sourceNode={es-140}{LinCCy_QT3WE6GA8y72exA}{aY3uc3FmSQ6oL1ELsV-YGQ}{192.168.207.140}{192.168.207.140:9300}{dilmrt}{ml.machine_memory=491331584, xpack.installed=true, transform.node=true, ml.max_open_jobs=20}, minimumTerm=0, optionalJoin=Optional[Join{term=1, lastAcceptedTerm=0, lastAcceptedVersion=0, sourceNode={es-140}{LinCCy_QT3WE6GA8y72exA}{aY3uc3FmSQ6oL1ELsV-YGQ}{192.168.207.140}{192.168.207.140:9300}{dilmrt}{ml.machine_memory=491331584, xpack.installed=true, transform.node=true, ml.max_open_jobs=20}, targetNode={es-140}{LinCCy_QT3WE6GA8y72exA}{aY3uc3FmSQ6oL1ELsV-YGQ}{192.168.207.140}{192.168.207.140:9300}{dilmrt}{ml.machine_memory=491331584, xpack.installed=true, transform.node=true, ml.max_open_jobs=20}}]} org.elasticsearch.transport.RemoteTransportException: es-140[internal:cluster/coordination/join] Caused by: org.elasticsearch.cluster.coordination.FailedToCommitClusterStateException: node is no longer master for term 1 while handling publication at org.elasticsearch.cluster.coordination.Coordinator.publish(Coordinator.java:1076) ~[elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.cluster.service.MasterService.publish(MasterService.java:268) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.cluster.service.MasterService.runTasks(MasterService.java:250) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.cluster.service.MasterService.access$000(MasterService.java:73) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.cluster.service.MasterService$Batcher.run(MasterService.java:151) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.cluster.service.TaskBatcher.runIfNotProcessed(TaskBatcher.java:150) [elasticsearch-7.8.0.jar:7.8.0] at 
org.elasticsearch.cluster.service.TaskBatcher$BatchedTask.run(TaskBatcher.java:188) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:636) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.runAndClean(PrioritizedEsThreadPoolExecutor.java:252) [elasticsearch-7.8.0.jar:7.8.0] at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.run(PrioritizedEsThreadPoolExecutor.java:215) [elasticsearch-7.8.0.jar:7.8.0] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_221] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_221] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_221]

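When copying the Elasticsearch files to a new machine, do not copy the data and logs directories; delete them on the target. The data directory holds the node's persistent ID and cluster state, so a copied one leaves the new node with the same node ID as the original, and the nodes cannot form the cluster correctly.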
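If the install directory is copied locally before being shipped to a new node, the exclusion can happen at copy time. A Python sketch using shutil.copytree with a custom ignore callback (an alternative to the xsync script, not part of it); copy_es_home is a hypothetical helper name, and it skips only the top-level data and logs directories.

```python
import os
import shutil

EXCLUDED = {"data", "logs"}


def copy_es_home(src: str, dst: str) -> None:
    """Copy an Elasticsearch install directory without its top-level data/ and logs/."""
    src_root = os.path.abspath(src)

    def ignore(directory, names):
        # Filter only at the top level, so nested folders that happen to be
        # named "data" or "logs" are still copied.
        if os.path.abspath(directory) == src_root:
            return [name for name in names if name in EXCLUDED]
        return []

    shutil.copytree(src, dst, ignore=ignore)
```

For example, copy_es_home("/opt/module/elasticsearch-7.8.0", "/tmp/elasticsearch-7.8.0") produces a clean copy; copytree requires that the destination does not exist yet.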

4.7 检查运行情况

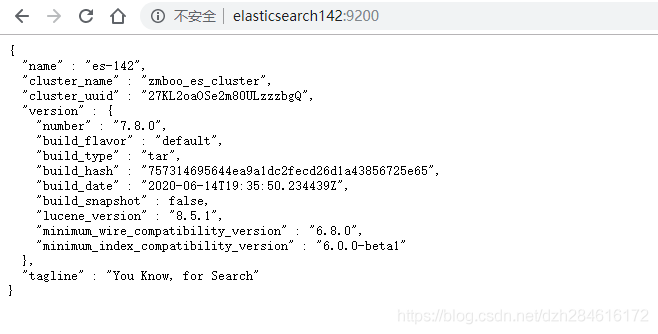

4.7.1 Access each node

Node elasticsearch140

Node elasticsearch141

Node elasticsearch142

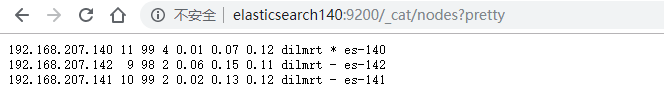

4.7.2 View the nodes

http://elasticsearch140:9200/_cat/nodes?pretty

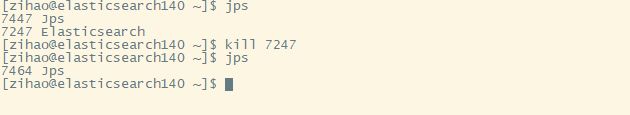
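In the _cat/nodes output, the master column shows * for the elected master and - for the other nodes. A Python sketch that requests only the name and master columns through the h parameter and picks out the master; the default host elasticsearch140 is taken from this setup, and the helper names are illustrative.

```python
from urllib.request import urlopen


def parse_master(cat_output: str) -> str:
    """Return the elected master's name from `_cat/nodes?h=name,master` text output."""
    for line in cat_output.splitlines():
        fields = line.split()
        # Each line is "<name> <master>", where master is "*" only for the elected master.
        if len(fields) == 2 and fields[1] == "*":
            return fields[0]
    raise ValueError("no elected master found in _cat/nodes output")


def find_master(base_url: str = "http://elasticsearch140:9200") -> str:
    """Query one node's _cat/nodes endpoint and return the current master's name."""
    with urlopen(f"{base_url}/_cat/nodes?h=name,master", timeout=5) as resp:
        return parse_master(resp.read().decode("utf-8"))
```

After the failover test in 4.7.3, pointing find_master at elasticsearch141 or elasticsearch142 should report the newly elected master.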

4.7.3 Verify high availability

Kill the Elasticsearch process on elasticsearch140.

First, watch the logs on elasticsearch141 and elasticsearch142:

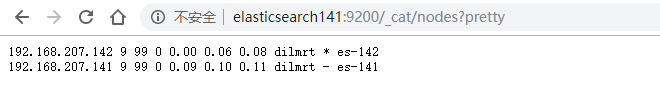

The log shows that es-140 can no longer be reached and that the master has changed to es-142.

Let's confirm on the cluster's node overview page:

It also shows es-142 as the current master node.
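The same check can be scripted, but because es-140 is now down, the script has to try the remaining nodes in turn. A Python sketch that queries _cluster/health on each host until one answers; the fetch parameter exists only so the fallback logic can be exercised without a live cluster, and the helper names are illustrative.

```python
import json
from urllib.request import urlopen

HOSTS = [
    "http://elasticsearch140:9200",
    "http://elasticsearch141:9200",
    "http://elasticsearch142:9200",
]


def fetch_json(url: str) -> dict:
    """GET a URL and decode the JSON body."""
    with urlopen(url, timeout=3) as resp:
        return json.loads(resp.read().decode("utf-8"))


def cluster_health(hosts, fetch=fetch_json):
    """Try each host's _cluster/health in order; return (host, health) from the first that answers."""
    errors = []
    for host in hosts:
        try:
            return host, fetch(f"{host}/_cluster/health")
        except OSError as exc:  # URLError, refused connections and timeouts are all OSError subclasses
            errors.append(f"{host}: {exc}")
    raise RuntimeError("no node answered: " + "; ".join(errors))
```

With es-140 killed, cluster_health(HOSTS) should answer from elasticsearch141, and the returned number_of_nodes should drop to 2.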

The highly available Elasticsearch cluster is now deployed. Next, we install elasticsearch head and Kibana.

Chapter 5 Installing Elasticsearch head

5.1 Install Node.js

5.1.1 Extract

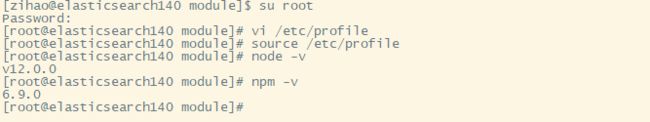

$ tar -zxvf node-v12.0.0-linux-x64.tar.gz -C /opt/module/

5.1.2 Configure the Node.js environment variables

$ su root

# vi /etc/profile

Add the following:

export NODE_HOME=/opt/module/node-v12.0.0-linux-x64

export PATH=$PATH:$NODE_HOME/bin

Then reload the profile:

$ source /etc/profile

Note: run source in the regular user's shell as well, not only as root.

5.1.3 Check the node and npm versions

# node -v

# npm -v
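If node -v fails in a script or a fresh shell, the PATH change has not taken effect there yet. A small Python sketch that locates a command on PATH and parses its version string; parse_version and tool_version are illustrative names.

```python
import shutil
import subprocess


def parse_version(text: str) -> tuple:
    """Turn version output such as 'v12.0.0' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().lstrip("v").split("."))


def tool_version(tool: str):
    """Return the version of a command on PATH as a tuple, or None if it is not found."""
    if shutil.which(tool) is None:
        return None
    out = subprocess.run([tool, "-v"], capture_output=True, text=True, check=True).stdout
    return parse_version(out)


if __name__ == "__main__":
    print("node", tool_version("node") or "not on PATH; re-check NODE_HOME/PATH in /etc/profile")
```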

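5.2 Install the head plugin

Elasticsearch head is a web-based management plugin for Elasticsearch.

Note: the head plugin depends on Node.js, so Node.js must be installed before head.

5.2.1 Extract

$ unzip elasticsearch-head-master.zip -d /opt/module/

5.2.2 Set the npm mirror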

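$ npm config set registry https://registry.npm.taobao.org

Note: the Taobao mirror has since moved to https://registry.npmmirror.com; use that address if the old one no longer works.

5.2.3 Create the directory

Check whether node_modules/grunt exists in the head plugin directory (it does not by default); if it does, delete it first. Then create it with:

$ npm install grunt --save

5.2.4 Install cnpm

Install cnpm, the China-based npm mirror client from the Taobao team; npm's servers are overseas, which can slow down or break installation:

$ npm install -g cnpm --registry=https://registry.npm.taobao.org

5.2.5 Install grunt

$ npm install -g grunt-cli

5.2.6 Edit Gruntfile.js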

Add hostname: '0.0.0.0' to the connect server options:

options: {

hostname: '0.0.0.0',

...

}

Its exact location:

connect: {

server:{

options: {

hostname: '0.0.0.0',

port: 9100,

base: '.',

keepalive: true

}

}

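}

5.2.7 Copy files

Check whether the head root directory contains a base folder.

If not, copy the base folder under _site, with its contents, to base in the head root directory. Do this as the regular user, not root.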

$ mkdir base

$ cd _site/

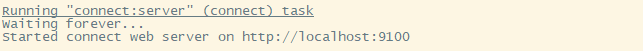

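$ cp base/* ../base/

5.2.8 Start the grunt server

$ grunt server -d

Note: in grunt, -d is short for --debug, not a background switch; to run the server in the background, append & (or use nohup), as in the next command.

Alternatively, run the grunt binary under node_modules/grunt/bin/ in the head directory:

$ elasticsearch-head-master/node_modules/grunt/bin/grunt server &

5.2.9 Common errors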

[zihao@elasticsearch140 elasticsearch-head-master]$ npm install -g grunt -cli

/opt/module/node-v12.0.0-linux-x64/bin/grunt -> /opt/module/node-v12.0.0-linux-x64/lib/node_modules/grunt/bin/grunt

+ grunt@...

added 196 packages from 160 contributors in 24.132s

[zihao@elasticsearch140 elasticsearch-head-master]$ grunt server

>> Local Npm module "grunt-contrib-clean" not found. Is it installed?

>> Local Npm module "grunt-contrib-concat" not found. Is it installed?

>> Local Npm module "grunt-contrib-watch" not found. Is it installed?

>> Local Npm module "grunt-contrib-connect" not found. Is it installed?

>> Local Npm module "grunt-contrib-copy" not found. Is it installed?

>> Local Npm module "grunt-contrib-jasmine" not found. Is it installed?

Warning: Task "connect:server" not found. Use --force to continue.

Aborted due to warnings.

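If startup reports "Local Npm module "grunt-contrib-......" not found. Is it installed?",

install the missing modules one by one:

$ npm install grunt-contrib-...... --registry=https://registry.npm.taobao.org

Alternatively, running npm install in the head directory installs every dependency listed in its package.json at once. After installation, start the server again.

Once it is running, open the following address (I started head on elasticsearch140):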

http://elasticsearch140:9100/
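head runs on port 9100 and calls Elasticsearch on port 9200 from the browser, which is why http.cors.enabled and http.cors.allow-origin were set in 4.3. A Python sketch that sends a request with an Origin header and inspects Access-Control-Allow-Origin in the reply; the origin value assumes head at http://elasticsearch140:9100, and with allow-origin "*" the header is expected to be either * or the echoed origin.

```python
from urllib.request import Request, urlopen


def cors_allows(allow_origin, origin: str) -> bool:
    """True if an Access-Control-Allow-Origin value permits requests from origin."""
    return allow_origin in ("*", origin)


def check_cors(es_url: str = "http://elasticsearch140:9200/",
               origin: str = "http://elasticsearch140:9100") -> bool:
    """Send a GET with an Origin header, as the browser does for head, and check the reply."""
    req = Request(es_url, headers={"Origin": origin})
    with urlopen(req, timeout=5) as resp:
        return cors_allows(resp.headers.get("Access-Control-Allow-Origin"), origin)
```

If check_cors() returns False while Elasticsearch is reachable, head will typically show the cluster as not connected, and the CORS lines in elasticsearch.yml are the first place to look.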

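5.3 Chrome extension

Installing head by hand is rather involved, so here is a simpler option: install the Chrome extension. Search for the ElasticSearch head extension in the Chrome Web Store and install it; after that it is available whenever you open the browser.

The elasticsearch-head plugin is now installed.

5.4 Common elasticsearch head installation problems

5.4.1 Problem 1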

[zihao@elasticsearch140 elasticsearch-head-master]$ npm install -g cnpm --registry=https://registry.npm.taobao.org

npm WARN deprecated ...: this

npm ERR! code ENOTFOUND

npm ERR! errno ENOTFOUND

npm ERR! network request to https://registry.npm.taobao.org/abbrev/download/abbrev-1.1.1.tgz failed, reason: getaddrinfo ENOTFOUND registry.npm.taobao.org

npm ERR! network This is a problem related to network connectivity.

npm ERR! network In most cases you are behind a proxy or have bad network settings.

npm ERR! network

npm ERR! network If you are behind a proxy, please make sure that the

npm ERR! network 'proxy' config is set properly. See: 'npm help config'

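Note: npm install from the overseas registry is very slow, so a domestic mirror is used instead.

Solution:

Use curl to test whether the address in the error message is reachable. If it is not, change the DNS settings: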

$ su root

# vi /etc/resolv.conf

Add Google's public DNS servers:

nameserver 8.8.8.8

nameserver 8.8.4.4
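After editing /etc/resolv.conf, the name-resolution step can be tested on its own, separately from the HTTP download. A Python sketch using socket.getaddrinfo, which goes through the same system resolver that curl and npm use; resolves is an illustrative helper name.

```python
import socket


def resolves(hostname: str, port: int = 443) -> bool:
    """True if the system resolver can turn hostname into at least one address."""
    try:
        return len(socket.getaddrinfo(hostname, port)) > 0
    except socket.gaierror:
        return False
```

For example, calling resolves with the registry host from the error message should return True once the DNS change works; a False result means the problem is still name resolution rather than the npm registry itself.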

Chapter 6 Installing Kibana

6.1 Extract

$ tar -zxvf kibana-7.8.0-linux-x86_64.tar.gz -C /opt/module/

6.2 Edit the configuration file

Edit config/kibana.yml:

# port the service listens on

server.port: 5601

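# address to bind for port 5601. 0.0.0.0 would let any address connect (absent a firewall), which is insecure and should be avoided in production.

server.host: "elasticsearch140"

# URLs of the Elasticsearch nodes that Kibana sends requests to

elasticsearch.hosts: ["http://elasticsearch140:9200", "http://elasticsearch141:9200", "http://elasticsearch142:9200"]

# certificate verification; valid values are full, none and certificate. There is no certificate here, so it is set to none; otherwise startup reports an error.

elasticsearch.ssl.verificationMode: none

6.3 Start Kibana

$ bin/kibana

6.4 Open the web UI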

Visit http://elasticsearch140:5601
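Besides the browser, Kibana's status endpoint gives a quick scripted check. A Python sketch assuming the 7.x response layout of GET /api/status, where the overall state sits under status.overall.state (newer major versions use a different layout); overall_state and kibana_state are illustrative names.

```python
import json
from urllib.request import urlopen


def overall_state(status: dict) -> str:
    """Return the overall state ('green', 'yellow' or 'red') from a 7.x /api/status body."""
    return status["status"]["overall"]["state"]


def kibana_state(base_url: str = "http://elasticsearch140:5601") -> str:
    """Fetch Kibana's /api/status and return its overall state."""
    with urlopen(f"{base_url}/api/status", timeout=10) as resp:
        return overall_state(json.loads(resp.read().decode("utf-8")))
```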

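Kibana is now installed!

To sum up, Elasticsearch, Elasticsearch head and Kibana are all deployed, which lays the groundwork for the real-time computing examples to come. In a later article I will use Flink to tie together what was deployed here into a complete real-time computing case.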