PyTorch Study Notes 2: Training Steps

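Contents

- PyTorch Study Notes 2: Training Steps
- Preface
- 1. Import the packages
- 2. Define the dataset
- 3. Instantiate the dataset class
- 4. Build the network
- 5. Training
- 6. Validate the model
- 7. Save the model
- 8. Load the trained model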

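Preface

The previous post covered implementing a custom dataset for the first time. This post walks through the code for training a network in PyTorch.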

1. Import the packages

import os

import cv2
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torch.utils.data as Data
import torchvision
import torchvision.transforms as transforms
from matplotlib.pyplot import subplot
from PIL import Image

from DLP import DLP  # the network class, defined in the author's own DLP module

2. Define the dataset

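class OpticalSARDataset(Data.Dataset):
    def __init__(self, data_dir, part):
        # Store the data paths (implementation omitted; see the previous post)
        pass

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, index):
        # Loading of image and label is omitted here.
        # The training and validation loops read each batch as data["image"]
        # and data["label"], so a sample is returned as a dict with those keys.
        return {"image": image, "label": label}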

3. Instantiate the dataset class

The code is as follows (example):

# Use the OpticalSARDataset class defined above to create the dataset objects
train_dataset = OpticalSARDataset(data_dir, 'train')  # training set
test_dataset = OpticalSARDataset(data_dir, 'test')  # test set
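The training and validation loops later in this post iterate over `train_iter` and `test_iter`, which are never defined in the code shown. Here is a minimal sketch of how they could be built, assuming they are ordinary `torch.utils.data.DataLoader` objects. `ToyDictDataset`, the tensor shapes, and `batch_size=4` are hypothetical stand-ins for illustration, not the author's settings.

```python
import torch
import torch.utils.data as Data


class ToyDictDataset(Data.Dataset):
    """Hypothetical stand-in for OpticalSARDataset: random tensors, dict samples."""

    def __init__(self, n_samples=10):
        self.images = torch.rand(n_samples, 3, 8, 8)  # assumed C,H,W = 3,8,8
        self.labels = torch.rand(n_samples, 3, 8, 8)  # assumed image-shaped labels

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, index):
        # Dict keys match the data["image"] / data["label"] access in the loops
        return {"image": self.images[index], "label": self.labels[index]}


train_dataset = ToyDictDataset(10)
test_dataset = ToyDictDataset(6)

# Shuffle only the training data; batch_size=4 is an assumed value.
train_iter = Data.DataLoader(train_dataset, batch_size=4, shuffle=True)
test_iter = Data.DataLoader(test_dataset, batch_size=4, shuffle=False)

# The default collate function stacks the dict values key by key.
first_batch = next(iter(train_iter))
print(first_batch["image"].shape, len(train_iter), len(test_iter))
```

With a real dataset, only the two `DataLoader` lines would be needed; the toy class exists so the sketch runs on its own.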

4. Build the network

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = DLP()  # instantiate the network
model.to(device)  # move the parameters to the GPU if one is available
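`DLP` comes from the author's own `DLP` module, and its definition is not shown in this post. To try the remaining steps without it, any `nn.Module` can take its place. The sketch below uses a hypothetical `TinyNet` (a single convolution, purely an assumption and unrelated to the real DLP architecture) to show the instantiate-and-move-to-device pattern.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical placeholder network; not the author's DLP."""

    def __init__(self):
        super().__init__()
        # 3 input channels -> 3 output channels; padding=1 keeps H and W unchanged
        self.conv = nn.Conv2d(3, 3, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv(x)


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TinyNet().to(device)  # Module.to() moves the parameters and returns the module

x = torch.rand(2, 3, 8, 8, device=device)  # inputs must live on the same device
out = model(x)
print(out.shape, next(model.parameters()).device)
```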

5. Training

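epoches = 5
lr = 0.001
criterion = nn.MSELoss().to(device)  # use mean squared error (MSE) as the loss function
# nn.MSELoss accepts tensors of any shape (vectors or matrices), as long as the
# prediction and the target have the same shape; by default it returns the mean of the loss
optimizer = optim.Adam(model.parameters(), lr=lr)  # define the optimizer

for epoch in range(epoches):  # one epoch is one full pass over the training data
    model.train()  # switch to training mode (affects layers such as dropout and batch norm)
    for i, data in enumerate(train_iter):
        imgs = data["image"]
        labels = data["label"]
        images = imgs.to(device)
        labels = labels.to(device)
        output = model(images)  # forward pass through the model
        loss = criterion(output, labels)  # arguments: prediction, ground truth (label)
        # backprop
        optimizer.zero_grad()  # reset the gradients
        loss.backward()  # backpropagate the gradients
        optimizer.step()  # update the parameters using the gradients
    # loss.item() returns the loss as a Python float; this is the last batch of the epoch
    print('Epoch [{}/{}], Loss: {:.4f}'.format(epoch + 1, epoches, loss.item()))
print('Finished Training')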
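Each training step follows the order forward pass, loss, `zero_grad`, `backward`, `step`. As a self-contained illustration, here is the same pattern on synthetic data. The linear model, the data, the learning rate of 0.05, and the iteration count are assumptions chosen so that it runs in a moment; Adam and `nn.MSELoss` match the post.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Synthetic regression problem: y = 2x + 1 (assumed toy data)
x = torch.linspace(-1, 1, 64).unsqueeze(1)
y = 2 * x + 1

model = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr=0.05)

losses = []
model.train()
for epoch in range(200):
    output = model(x)            # forward pass
    loss = criterion(output, y)  # prediction first, target second
    optimizer.zero_grad()        # clear gradients left over from the previous step
    loss.backward()              # compute gradients of the loss
    optimizer.step()             # update the parameters
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

If `zero_grad()` were left out, gradients from earlier steps would accumulate in each parameter's `.grad`, which is why it is called once per step.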

6. Validate the model

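running_loss = 0.0
total = 0
model.eval()  # switch to evaluation mode (affects layers such as dropout and batch norm)
with torch.no_grad():  # no gradients are needed for validation
    for data in test_iter:
        imgs = data["image"]
        labels = data["label"]
        images = imgs.to(device)
        labels = labels.to(device)
        output = model(images)  # forward pass through the model
        loss = criterion(output, labels)  # arguments: prediction, ground truth (label)
        total += labels.size(0)
        # criterion returns the batch mean, so weight it by the batch size
        running_loss += loss.item() * labels.size(0)
averageloss = running_loss / total
print('Loss of the network on the test images: %f' % averageloss)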
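Because `nn.MSELoss` returns the mean over a batch by default (`reduction='mean'`), adding up the per-batch `loss.item()` values and dividing by the number of samples would understate the loss; each batch loss needs to be weighted by its batch size first. Here is a small check, on assumed toy tensors (10 samples split into batches of 4, 4, and 2), that the weighted sum recovers the mean over the whole set:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
pred = torch.rand(10, 3)    # assumed toy predictions
target = torch.rand(10, 3)  # assumed toy targets
criterion = nn.MSELoss()    # default reduction='mean'

running_loss = 0.0
total = 0
for start in range(0, 10, 4):  # batches of size 4, 4, 2
    p, t = pred[start:start + 4], target[start:start + 4]
    batch_size = p.size(0)
    running_loss += criterion(p, t).item() * batch_size  # undo the per-batch mean
    total += batch_size

average_loss = running_loss / total
full_loss = criterion(pred, target).item()  # mean over all samples at once
print(average_loss, full_loss)
```

The two printed values should agree up to floating-point rounding.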

7. Save the model

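PATH = r'E:\pycharmProject\LaRecNet\dlp_net.pth'  # raw string, so the backslashes are not read as escapes
torch.save(model.state_dict(), PATH)  # save only the parameters (state_dict), not the whole model object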

8. Load the trained model

dlp = DLP().to(device)  # instantiate the network
# map_location lets a checkpoint saved on a GPU load on a CPU-only machine
dlp.load_state_dict(torch.load(PATH, map_location=device))
dlp.eval()  # evaluation mode for inference

path = 'E:/pycharmProject/LaRecNet/3.jpg'
img = cv2.imread(path)
print('Type of the image read by OpenCV:', type(img))  # NumPy array, H,W,C in BGR order
imgf = transforms.ToTensor()(img)  # convert the image to a float tensor in [0, 1], shape C,H,W
print(imgf.requires_grad)
imgf.requires_grad_(True)
print('Type after conversion to a tensor:', type(imgf))
print('Size of the input tensor:', imgf.size())  # C,H,W
# add a batch dimension so the input is 1,C,H,W, and move it to the model's device
imgf = transforms.ToTensor()(img).unsqueeze(0).to(device)
print(imgf.shape)  # size of the input tensor
out = dlp(imgf)
print(out.size())
array1 = out[0].detach().cpu().numpy()  # tensor -> NumPy array; dropping the batch dimension leaves C,H,W
maxValue = array1.max()
array1 = array1 * 255 / maxValue  # rescale the data to [0, 255]
mat = np.uint8(array1)  # float32 -> uint8
print('mat_shape:', mat.shape)  # e.g. (3, 982, 814): tensors are stored C,H,W, while image arrays are H,W,C
mat = mat.transpose(1, 2, 0)  # -> (982, 814, 3)
cv2.imshow("feature", mat)
cv2.waitKey()

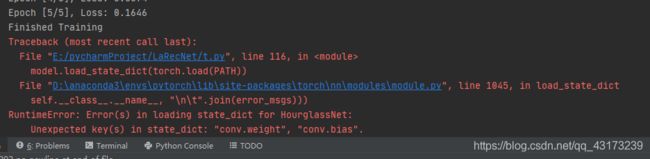
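Converting the network output to a displayable image takes three steps: drop the batch dimension, rescale to [0, 255] as `uint8`, and reorder the axes from C,H,W to H,W,C. Here is a minimal sketch on a random tensor standing in for `out` (the shape 1×3×4×5 is an assumption), without the OpenCV display call:

```python
import numpy as np
import torch

torch.manual_seed(0)
out = torch.rand(1, 3, 4, 5)  # stand-in for the network output, shape (N, C, H, W)

array1 = out[0].detach().cpu().numpy()  # (C, H, W) float32 array on the CPU
array1 = array1 * 255 / array1.max()    # maps the maximum to about 255
mat = array1.astype(np.uint8)           # float32 -> uint8 (fractions are truncated)
mat = mat.transpose(1, 2, 0)            # (C, H, W) -> (H, W, C)

print(mat.shape, mat.dtype, mat.max())
```

This scaling assumes the output is non-negative with a positive maximum. Negative values would not survive the `uint8` cast sensibly, so an output with negative entries would need clipping (for example with `np.clip`) or a min-max rescale first.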

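Note: loading the saved network parameters raised an error for me at this point.

I was not using a GPU, and I resolved it with the help of an online reference.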
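A common cause of errors when loading a checkpoint on a machine without a GPU is that the tensors were saved from CUDA; `torch.load` accepts a `map_location` argument that remaps them to an available device. Whether this was the exact error encountered here is an assumption, since the message is not shown. A minimal round-trip sketch, using a hypothetical one-layer model and a temporary file:

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # hypothetical stand-in for the trained network

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "toy_net.pth")  # hypothetical file name
    torch.save(model.state_dict(), path)          # save the parameters only

    restored = nn.Linear(4, 2)  # must have the same architecture as the saved model
    # map_location places the loaded tensors on the CPU, even if they were saved from a GPU
    state_dict = torch.load(path, map_location=torch.device("cpu"))
    restored.load_state_dict(state_dict)

same = all(torch.equal(a, b) for a, b in zip(model.parameters(), restored.parameters()))
print(same)
```

Passing the `device` object from section 4 as `map_location` works the same way and selects the GPU when one is available.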
