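A Neural Network Prediction Model in C++

Reference: AI基础教程 / 神经网络原理简明教程 (an AI basics tutorial and concise guide to neural network principles, on GitHub)

1. Introduction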

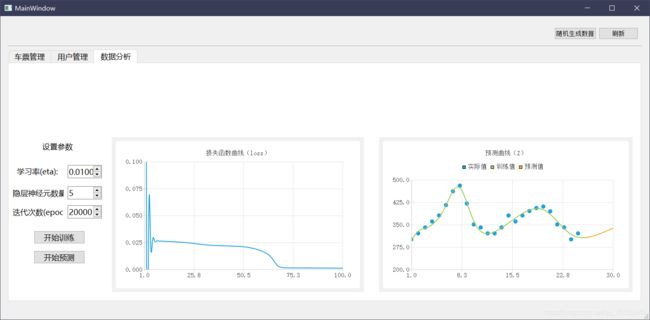

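My C++ course project needed a neural network prediction model. There is plenty of reference code online, but most of it either does not run or does not match my goal, so I wrote a working version from scratch. The complete C++ project is provided below.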

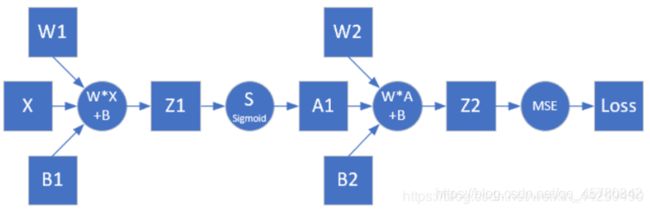

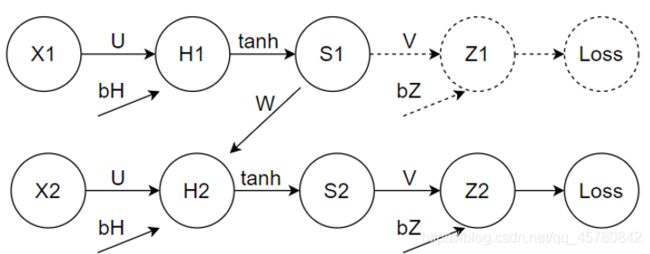

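See the reference link at the top for the underlying theory and the derivation of the formulas. The reference uses Python; because the course project required C++, I rewrote it in C++ following the same approach. The code includes a feedforward neural network and a neural network with time steps (a simple RNN), shown below.

In my experiments the plain feedforward network predicted poorly; the RNN is better suited to time-dependent data.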

Plain feedforward network:
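As a rough illustration, the forward pass of such a network can be sketched as below. This is not the author's Network.cpp (that listing is not reproduced here); it assumes one scalar input, a sigmoid hidden layer, and a linear output, which is what the members `w1`, `b1`, `w2`, `b2`, `z1`, `a1` in Network.h suggest. The free function `feedForward` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Logistic sigmoid activation.
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Forward pass of a 1-input, n-hidden, 1-output network (assumed layout).
// w1, b1: input-to-hidden weights and biases; w2, b2: hidden-to-output.
double feedForward(double x,
                   const std::vector<double>& w1,
                   const std::vector<double>& b1,
                   const std::vector<double>& w2,
                   double b2)
{
    assert(w1.size() == b1.size() && w1.size() == w2.size());
    double output = b2;
    for (std::size_t i = 0; i < w1.size(); ++i) {
        const double z1 = w1[i] * x + b1[i];  // hidden pre-activation
        const double a1 = sigmoid(z1);        // hidden activation
        output += w2[i] * a1;                 // linear output layer
    }
    return output;
}
```

Because the output depends only on the current `x`, this model has no memory of earlier days, which is one plausible reason it fits a time series poorly.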

Simple RNN:
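A two-time-step forward pass of this kind might look like the sketch below. It is an illustration under assumptions, not the author's implementation: the hidden activation is taken to be tanh (Network.h declares `dTanh`), and the recurrent weight `W` is treated as one value per hidden unit because the JSON code later stores `W[i]` for each `i < lenWeight`. The struct `RnnParams` and the functions `forwardStep1` and `forwardStep2` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RnnParams {
    std::vector<double> U;   // input-to-hidden weights
    std::vector<double> W;   // recurrent weights, one per hidden unit (assumption)
    std::vector<double> V;   // hidden-to-output weights
    std::vector<double> bH;  // hidden biases
    double bZ = 0.0;         // output bias
};

// Time step 1: there is no previous state, so s1 = tanh(x1 * U + bH).
std::vector<double> forwardStep1(double x1, const RnnParams& p)
{
    std::vector<double> s1(p.U.size());
    for (std::size_t i = 0; i < s1.size(); ++i)
        s1[i] = std::tanh(x1 * p.U[i] + p.bH[i]);
    return s1;
}

// Time step 2: combines x2 with the previous state s1, then emits the output z2.
double forwardStep2(double x2, const std::vector<double>& s1, const RnnParams& p)
{
    assert(s1.size() == p.U.size());
    double z2 = p.bZ;
    for (std::size_t i = 0; i < s1.size(); ++i) {
        const double h2 = x2 * p.U[i] + s1[i] * p.W[i] + p.bH[i];
        z2 += p.V[i] * std::tanh(h2);
    }
    return z2;
}
```

The state `s1` carries information from the first input into the second step, which is what lets the prediction depend on more than one day.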

2. Goal

Given 25 days of sales data, predict the sales for the next few days.

The data:

X feature (day n):

double x[25] = {1, 2, 3, …, 24, 25}

Y label (sales on day n):

double y[25] = {300, 320, 340, 360, 380, 415, 423, 430, 394, 350, 340, 320, 320, 340, 380, 360, 380, 410, 420, 430, 394, 350, 340, 300, 320};
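Raw values in the hundreds are usually rescaled before training. The sketch below shows one way to do that and to map predictions back. It assumes mean normalization, (v - average) / (max - min), which is what the parameter names `AverageX` and `differMaxMinX` in the Qt code and the `resData(normalData, differMaxMin, avgRes)` declaration suggest; the author's exact formula is not shown in this article. `NormParam` and `restore` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

struct NormParam {
    double average;       // mean of the original data
    double differMaxMin;  // max - min of the original data
};

// Rescales data in place to (v - average) / (max - min) and returns the parameters.
NormParam normalize(std::vector<double>& data)
{
    assert(!data.empty());
    const auto mm = std::minmax_element(data.begin(), data.end());
    NormParam p;
    p.average = std::accumulate(data.begin(), data.end(), 0.0) / data.size();
    p.differMaxMin = *mm.second - *mm.first;
    assert(p.differMaxMin > 0.0);  // constant data cannot be rescaled this way
    for (double& v : data)
        v = (v - p.average) / p.differMaxMin;
    return p;
}

// Maps a normalized value back to the original scale.
double restore(double normalized, const NormParam& p)
{
    return normalized * p.differMaxMin + p.average;
}
```

The returned parameters have to be kept alongside the trained weights, since a prediction made in normalized units is meaningless until it is restored.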

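3. Source code

Create the files and the class as described below; the project runs as-is in VS2019.

Note: an occasional very large loss value during training is a chance occurrence. Run training several times and keep the best result.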
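Repeating the training and keeping the best run can be automated. The helper below is a minimal sketch of that idea only; `bestOfRestarts` and the `trainOnce` callback are hypothetical and are not part of the Network class. The callback is assumed to re-initialise the weights randomly, train, and return the final loss.

```cpp
#include <cassert>
#include <functional>
#include <limits>

// Runs trainOnce() `restarts` times and returns the smallest final loss seen.
// Saving the parameters of the best run is left to the caller.
double bestOfRestarts(const std::function<double()>& trainOnce, int restarts)
{
    assert(restarts > 0);
    double bestLoss = std::numeric_limits<double>::infinity();
    for (int i = 0; i < restarts; ++i) {
        const double loss = trainOnce();
        if (loss < bestLoss)  // a NaN loss compares false, so diverged runs are skipped
            bestLoss = loss;
    }
    return bestLoss;
}
```

In practice the callback would also snapshot the weights whenever it produces a new best loss, so that the best model and not just its score survives.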

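Two models are provided; read the comments carefully.

First make sure your IDE can build and run a C++ project, then create the three files named in the headings below, copy the code into them, and compile and run.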

3.1 main.cpp

#include

3.2 Network.h

#pragma once

class Network
{
private:
    double* w1;            /**< weights w1 */
    double* w2;            /**< weights w2 */
    double* b1;            /**< biases b1 */
    double b2;             /**< bias b2 */
    double* gw1;           /**< gradient of w1 */
    double* gw2;           /**< gradient of w2 */
    double* gb1;           /**< gradient of b1 */
    double gb2;            /**< gradient of b2 */
    double* trainX;        /**< training data */
    double* trainY;
    double output;         /**< output value */
    double* z1;
    double* a1;
    double eta;            /**< learning rate */
    int lenData;           /**< number of data points */
    int lenWeight;         /**< number of weights */
    double* saveZ;
    double* SAOutput;
    double* normalParamX;  /**< normalization parameters, used to restore the data */
    double* normalParamY;

    /* RNN parameters */
    double* W;
    double* U;
    double* V;
    double* bH;
    double bZ;

    double* h1;
    double* s1;
    double x1;
    double gz1;
    double* gh1;
    double* gbh1;
    double* gU1;

    double* h2;
    double* s2;
    double x2;
    double z2;
    double gz2;
    double gbZ;
    double* gh2;
    double* gbh2;
    double* gV2;
    double* gU2;
    double* gW;

public:
    Network(int numOfWeights, int numOfData);
    ~Network();

    void forward(double x);                            /**< forward pass */
    void backward(double x, double y);                 /**< backward pass */
    void readTrainData(double* dataX, double* dataY);  /**< read the data and normalize it */
    void normalize(double* normalParam, double* data);
    void train(int iterTime);
    void update();
    void randomParam();
    void shuffle(double* a, double* b);
    void showResult();

    /*-----------------RNN-----------------*/
    void forward1(double x1, double* U, double* V, double* W, double* bh);
    void forward2(double x2, double* U, double* V, double* W, double* bh, double bz, double* s1);
    void backward2(double y, double* s1);
    void backward1(double y, double* gh2);
    void RNNTrain(int maxEpoch);
    void RNNUpdate();
    void RNNInitParam();
    void RNNShowResult();
    bool RNNCheckLoss(double x1, double x2, double y, int totalIterTime);
    /*--------------end RNN-----------------*/

    double test(double x);
    double loss(double* z, double* y);  /**< loss function */
    double singleLoss(double z, double y);
    double sigmoid(double x);
    double dSigmoid(double x);
    double resData(double normalData, double differMaxMin, double avgRes);  /**< restore normalized data */
    double dTanh(double y);             /**< derivative of tanh */
    bool checkErrorAndLoss(double x, double y, int totalIterTime);
};
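The small numeric helpers declared at the end of the class could be implemented along the following lines. This is a sketch under assumptions: `dSigmoid` is taken to receive the pre-activation input (its parameter is named `x`), `dTanh` the already-computed tanh output (its parameter is named `y`), and the loss is assumed to be a squared error with a factor of 1/2. The versions here are free functions using `std::vector` so they can be compiled on their own.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Derivative of the sigmoid with respect to its input x.
double dSigmoid(double x)
{
    const double s = sigmoid(x);
    return s * (1.0 - s);
}

// Derivative of tanh expressed through its output y = tanh(h).
double dTanh(double y) { return 1.0 - y * y; }

// Squared error of one prediction z against label y (the 1/2 factor is an assumption).
double singleLoss(double z, double y) { return 0.5 * (z - y) * (z - y); }

// Mean of singleLoss over all samples.
double loss(const std::vector<double>& z, const std::vector<double>& y)
{
    assert(!z.empty() && z.size() == y.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < z.size(); ++i)
        sum += singleLoss(z[i], y[i]);
    return sum / z.size();
}
```

Writing `dTanh` in terms of the output avoids recomputing tanh during backpropagation, since the forward pass has already stored it.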

3.3 Network.cpp

#include "Network.h"

#include

End of the VS part.

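4. Closing notes:

1 Tested in VS2019 Community Edition: the project runs and prints its results.

2 Saving the (model) parameters:

I recommend saving them as JSON. The original project was built with Qt, so the Qt source for saving and loading the parameters is given below; it is enough to understand the idea.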

#include

    lenData = obj["lenData"].toInt();
    lenWeight = obj["lenWeight"].toInt();
    trainY[0] = obj["trainY0"].toDouble();

    QJsonArray arr = obj["WeightsBias"].toArray();
    for (int i = 0; i < lenWeight; i++)
    {
        this->U[i] = arr[i].toObject()["U"].toDouble();
        this->V[i] = arr[i].toObject()["V"].toDouble();
        this->W[i] = arr[i].toObject()["W"].toDouble();
        this->bH[i] = arr[i].toObject()["bH"].toDouble();
    }
    this->bZ = arr[0].toObject()["bZ"].toDouble();
    // qDebug() << bZ;

    normalParamX[0] = obj["AverageX"].toDouble();
    normalParamX[1] = obj["differMaxMinX"].toDouble();
    normalParamY[0] = obj["AverageY"].toDouble();
    normalParamY[1] = obj["differMaxMinY"].toDouble();

    file.close();
}

/**
 * @brief Save the trained parameters to a JSON file.
 */
void RNN::saveParam()
{
    // Open the file for writing.
    QFile file("rnn_param.json");
    if (!file.open(QIODevice::WriteOnly)) {
        qDebug() << "File open failed!";
        return;  // nothing can be written without an open file
    }
    qDebug() << "File open successfully!";
    file.resize(0);  // make sure the file starts empty

    QJsonDocument jdoc;
    QJsonObject obj;
    QJsonArray WeightsBias;
    for (int i = 0; i < lenWeight; i++)
    {
        QJsonObject trainParam;  // one array element per hidden unit
        trainParam["U"] = U[i];
        trainParam["V"] = V[i];
        trainParam["W"] = W[i];
        trainParam["bH"] = bH[i];
        trainParam["bZ"] = bZ;
        WeightsBias.append(trainParam);
    }

    obj["WeightsBias"] = WeightsBias;
    obj["AverageX"] = normalParamX[0];
    obj["differMaxMinX"] = normalParamX[1];
    obj["AverageY"] = normalParamY[0];
    obj["differMaxMinY"] = normalParamY[1];
    obj["lenData"] = lenData;
    obj["lenWeight"] = lenWeight;
    obj["trainY0"] = trainY[0];

    jdoc.setObject(obj);
    file.write(jdoc.toJson(QJsonDocument::Indented));  // Indented: pretty-print with newlines and indentation
    file.close();
}
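For a build without Qt, a similar layout could be produced with the standard library alone. The function below is only a sketch: `paramsToJson` is a hypothetical helper, it writes just the `WeightsBias` array and `lenWeight` (a subset of the fields above), and it assumes all values are finite, since NaN or infinity would not be valid JSON.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Serialises per-unit RNN weights as a "WeightsBias" array of objects,
// mirroring the key names used in the Qt code.
std::string paramsToJson(const std::vector<double>& U,
                         const std::vector<double>& V,
                         const std::vector<double>& W,
                         const std::vector<double>& bH,
                         double bZ)
{
    assert(U.size() == V.size() && U.size() == W.size() && U.size() == bH.size());
    std::ostringstream out;
    out.precision(17);  // enough significant digits to round-trip a double
    out << "{\"WeightsBias\":[";
    for (std::size_t i = 0; i < U.size(); ++i) {
        if (i > 0) out << ',';
        out << "{\"U\":" << U[i] << ",\"V\":" << V[i]
            << ",\"W\":" << W[i] << ",\"bH\":" << bH[i]
            << ",\"bZ\":" << bZ << '}';
    }
    out << "],\"lenWeight\":" << U.size() << '}';
    return out.str();
}
```

The returned string can be written to a file with `std::ofstream`; reading it back would need a JSON parser, which the standard library does not provide.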

3 Qt test results

Note: the complete project is open source:

C++ QT Creater 车票信息管理及预测系统 (Ticket Information Management and Prediction System)