Python Web Scraping 2: Scraping Static Web Pages

Getting the response content:

import requests

r = requests.get('http://www.santostang.com/')

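print("Text encoding:", r.encoding)  # text encoding reported by the server

print("Response status code:", r.status_code)  # 200 means success, 4xx a client error, 5xx a server error

print("Response body as a string:", r.text)  # content returned by the server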

In addition, r.content is the response body as bytes; Requests automatically decodes gzip- and deflate-encoded response data. r.json() is the JSON decoder built into Requests.
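The relationship between r.content, r.text, and r.json() can be sketched offline. This is only an illustration, not how Requests works internally: raw_body and encoding are made-up stand-ins for r.content and r.encoding, and the JSON payload is invented for the example.

```python
import json

# Hypothetical stand-ins: raw_body plays the role of r.content (bytes),
# encoding plays the role of r.encoding.
raw_body = '{"title": "电影", "pages": 10}'.encode("utf-8")
encoding = "utf-8"

# r.text is roughly the byte body decoded with the response encoding.
text = raw_body.decode(encoding)

# r.json() is roughly the decoded text parsed as JSON.
data = json.loads(text)

print(type(raw_body).__name__, type(text).__name__, type(data).__name__)
print(data["pages"])
```

If r.encoding is wrong for a page, r.text will show garbled characters while r.content still holds the correct bytes, which is why the byte form is worth knowing about.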

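Customizing Requests:

To request specific data, you often need to add parameters to the URL query string. They follow a question mark and are placed in the URL as key/value pairs: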

import requests

key_dict = {'key1':'value1','key2':'value2'}

r = requests.get('http://httpbin.org/get',params = key_dict)

print("Encoded URL:", r.url)

print("Response body as a string:\n", r.text)

#Encoded URL: https://httpbin.org/get?key1=value1&key2=value2

#Response body as a string:

#{

# "args": {

# "key1": "value1",

# "key2": "value2"

# },

# "headers": {

# "Accept": "*/*",

# "Accept-Encoding": "gzip, deflate",

# "Connection": "close",

# "Host": "httpbin.org",

# "User-Agent": "python-requests/2.19.1"

# },

# "origin": "112.86.150.208",

# "url": "https://httpbin.org/get?key1=value1&key2=value2"

#}
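To see what the params argument does to the URL without sending a request, the same encoding can be reproduced with the standard library. This is a sketch that assumes the values are plain strings; build_url is a hypothetical helper, not part of Requests.

```python
from urllib.parse import urlencode

def build_url(base, params):
    """Append params to base as a URL-encoded query string."""
    return base + "?" + urlencode(params)

key_dict = {'key1': 'value1', 'key2': 'value2'}
url = build_url('http://httpbin.org/get', key_dict)
print(url)

# Spaces and other unsafe characters are escaped in the query string.
print(build_url('http://httpbin.org/get', {'q': 'top 250'}))
```

Letting the library do the encoding avoids hand-building query strings, which tends to break as soon as a value contains a space or an ampersand.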

Customizing request headers:

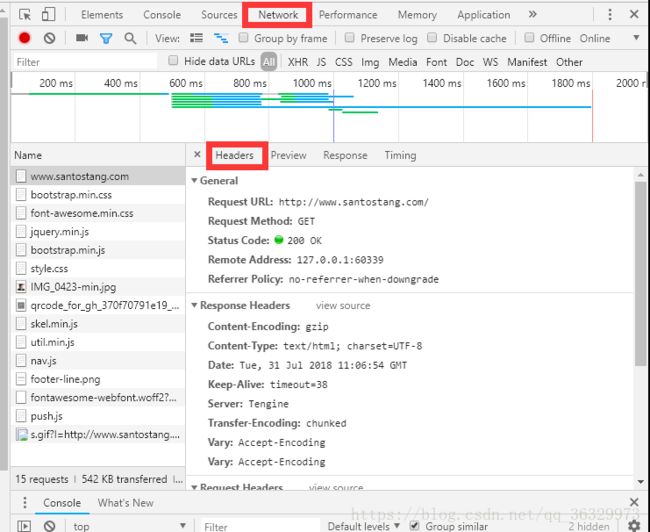

Press F12 -> Network -> Headers

View the request header information:

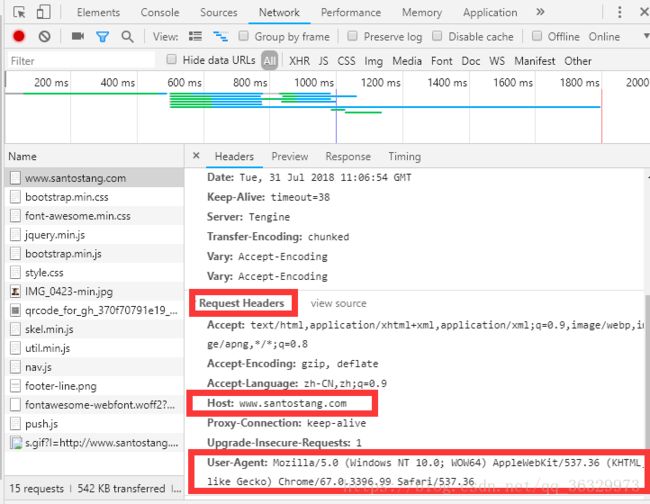

Extract the important information from the request headers and modify the code:

import requests

headers = {

'user-agent':'Mozilla/5.0 (Windows NT 6.1;Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36',

'Host':'www.santostang.com'

}

r = requests.get('http://www.santostang.com/',headers = headers)

print("Response status code:", r.status_code)

Sending a POST request:

Sometimes you also need to send form-encoded data. Logging in, for example, uses POST, because GET would expose the password in the URL.

import requests

key_dict = {'key1':'value1','key2':'value2'}

r = requests.post('http://httpbin.org/post',data = key_dict)

print(r.text)
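To check what a POST request will carry before sending it, the request can be prepared without contacting the server. A minimal sketch using requests.Request and its prepare() method; nothing here goes over the network.

```python
import requests

key_dict = {'key1': 'value1', 'key2': 'value2'}

# Build the request object and prepare it, but do not send it.
prepared = requests.Request('POST', 'http://httpbin.org/post', data=key_dict).prepare()

print(prepared.url)                      # the URL carries no form data
print(prepared.headers['Content-Type'])  # form-encoded content type
print(prepared.body)                     # the data travels in the request body
```

This makes the difference from GET visible: the key/value pairs end up in the body instead of the query string.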

Timeouts:

Use the timeout parameter of Requests to set how many seconds to wait for the server to respond before giving up:

import requests

link = 'http://www.santostang.com/'

r = requests.get(link,timeout = 0.001)
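With a timeout as small as 0.001 seconds the request will almost certainly fail with an exception rather than return a response. One way to deal with that is to catch requests.exceptions.Timeout. The fetch helper below is a hypothetical name for illustration, and returning None on timeout is just one possible design.

```python
import requests

def fetch(link, timeout):
    """Return the response, or None if the request timed out."""
    try:
        return requests.get(link, timeout=timeout)
    except requests.exceptions.Timeout:
        # Covers both connect timeouts and read timeouts.
        return None
```

Calling fetch('http://www.santostang.com/', 0.001) should then give None on a timeout instead of a traceback. Other failures, such as a DNS error, still raise.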

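Scraping practice: Top 250 movie data

Target URL: https://movie.douban.com/top250

1. Analysis:

Each page lists 25 movies. On the second page the URL becomes https://movie.douban.com/top250?start=25, and on the third page start=50, so the start parameter increases by 25 with each page.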
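The pagination rule above can be written down directly. A small sketch that only builds the URLs and sends nothing; the names BASE_URL, PAGE_SIZE, TOTAL, and page_urls are made up for this example.

```python
BASE_URL = 'https://movie.douban.com/top250'
PAGE_SIZE = 25   # movies per page
TOTAL = 250      # movies in the whole list

def page_urls():
    """Return one URL per page, stepping the start parameter by PAGE_SIZE."""
    return [f'{BASE_URL}?start={start}' for start in range(0, TOTAL, PAGE_SIZE)]

urls = page_urls()
print(len(urls))
print(urls[1])
print(urls[-1])
```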

2. Fetch the full page content:

import requests

def get_movies():

    headers = {

        'user-agent': "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36",

        'Host': "movie.douban.com"

    }

    for i in range(0, 10):

        link = 'https://movie.douban.com/top250?start=' + str(i * 25)

        r = requests.get(link, headers=headers, timeout=10)

        print("Page", i + 1, "response status code:", r.status_code)

        print(r.text)

get_movies()

3. Parse and extract:

import requests

import re

def get_movies():

    headers = {

        'user-agent': "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36",

        'Host': "movie.douban.com"

    }

    movie_list = []

    root_pattern = '

    name_pattern = '([\w]*?)'

    for i in range(0, 10):

        link = 'https://movie.douban.com/top250?start=' + str(i * 25)

        r = requests.get(link, headers=headers, timeout=10)

        print("Page", i + 1, "response status code:", r.status_code)

        root_html = re.findall(root_pattern, r.text)

        for html in root_html:

            name = re.findall(name_pattern, html)

            movie_list.append(name)

    print(movie_list)

get_movies()
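The two-step extraction (first cut out each movie block, then pull the name out of the block) can be tried on a small piece of markup without hitting the site. Everything specific here is an assumption: the sample HTML is made up, and the class names "hd" and "title" are a guess at the page structure, not the patterns used above.

```python
import re

# Made-up sample markup. The class names "hd" and "title" are assumptions
# about the page structure, not taken from the original patterns.
sample_html = '''
<div class="hd"><a href="#"><span class="title">Movie A</span><span class="other">alt</span></a></div>
<div class="hd"><a href="#"><span class="title">Movie B</span></a></div>
'''

# Step 1: capture the contents of each movie block.
root_pattern = r'<div class="hd">([\s\S]*?)</div>'
# Step 2: capture the text of the title span inside a block.
name_pattern = r'<span class="title">(.*?)</span>'

def extract_names(html_text):
    """Return the movie names found in html_text as a flat list."""
    names = []
    for block in re.findall(root_pattern, html_text):
        names.extend(re.findall(name_pattern, block))
    return names

movie_list = extract_names(sample_html)
print(movie_list)
```

Using extend instead of append keeps movie_list a flat list of names, since re.findall returns a list for every block.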

My personal homepage: www.unconstraint.cn