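Fine-tuning with hooks in TensorFlow Estimator

- Assign the weights directly after the model is defined in model_fn

- Use hooks

There are two ways to implement fine-tuning:

Assign the weights directly after the model is defined in model_fn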

import tensorflow as tf  # TensorFlow 1.x

def model_fn(features, labels, mode, params):
    # ... build the model here ...

    # Fine-tune: only initialize from the external checkpoint when
    # model_dir does not yet contain a checkpoint of its own.
    if params.checkpoint_path and not tf.train.latest_checkpoint(params.model_dir):
        if tf.gfile.IsDirectory(params.checkpoint_path):
            checkpoint_path = tf.train.latest_checkpoint(params.checkpoint_path)
        else:
            checkpoint_path = params.checkpoint_path

        tf.train.init_from_checkpoint(
            ckpt_dir_or_file=checkpoint_path,
            # e.g. {'OptimizeLoss/': 'OptimizeLoss/'}
            assignment_map={params.checkpoint_scope: params.checkpoint_scope},
        )
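To make the scope mapping more concrete, here is a small pure-Python sketch of how a scope-to-scope assignment_map entry pairs checkpoint variables with graph variables. It is a simplified model, not TensorFlow's implementation: resolve_assignment_map and all variable names are hypothetical, both scopes are assumed to end with '/', and graph variables without a counterpart in the checkpoint are silently skipped, which the real function may treat as an error.

```python
def resolve_assignment_map(checkpoint_names, graph_names, assignment_map):
    """Pair graph variables with checkpoint variables by scope prefix.

    Simplified model: each key is a checkpoint scope and each value is the
    matching scope in the current graph; both are assumed to end with '/'.
    Returns {graph_variable_name: checkpoint_variable_name}.
    """
    checkpoint_names = set(checkpoint_names)
    pairs = {}
    for ckpt_scope, graph_scope in assignment_map.items():
        for name in graph_names:
            if not name.startswith(graph_scope):
                continue
            # Swap the graph scope prefix for the checkpoint scope prefix.
            ckpt_name = ckpt_scope + name[len(graph_scope):]
            if ckpt_name in checkpoint_names:
                pairs[name] = ckpt_name
    return pairs


# Hypothetical variable names, for illustration only.
checkpoint_names = ["OptimizeLoss/w", "OptimizeLoss/b", "Logits/w"]
graph_names = ["OptimizeLoss/w", "OptimizeLoss/b", "NewHead/w"]

pairs = resolve_assignment_map(
    checkpoint_names, graph_names, {"OptimizeLoss/": "OptimizeLoss/"})
print(pairs)
```

Only the variables under the mapped scope are paired; NewHead/w keeps its fresh initialization, which is the point of fine-tuning with a replaced head.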

Use hooks.

Hooks can be passed through the train_monitors argument when defining tf.contrib.learn.Experiment:

# Define the experiment
experiment = tf.contrib.learn.Experiment(
    estimator=estimator,                            # Estimator
    train_input_fn=train_input_fn,                  # First-class function
    eval_input_fn=eval_input_fn,                    # First-class function
    train_steps=params.train_steps,                 # Minibatch steps
    min_eval_frequency=params.eval_min_frequency,   # Eval frequency
    # train_monitors=[],                            # Hooks for training
    # eval_hooks=[eval_input_hook],                 # Hooks for evaluation
    eval_steps=params.eval_steps                    # Use evaluation feeder until it is empty
)

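Hooks can also be passed through the training_chief_hooks argument when constructing tf.estimator.EstimatorSpec.

Personally I think it is better to define them in the estimator, so that the experiment only has to control how the experiment runs (number of training steps, number of evaluation steps, and so on).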

def model_fn(features, labels, mode, params):
    # ....
    return tf.estimator.EstimatorSpec(
        mode=mode,
        predictions=predictions,
        loss=loss,
        train_op=train_op,
        eval_metric_ops=eval_metric_ops,
        # scaffold=get_scaffold(),
        # training_chief_hooks=None
    )

While we are here, a word on what the tf.estimator.EstimatorSpec object does. It describes every aspect of a model, including:

Current mode:

mode: A ModeKeys. Specifies if this is training, evaluation or prediction.

Computation graph:

predictions: Predictions Tensor or dict of Tensor.

loss: Training loss Tensor. Must be either scalar, or with shape [1].

train_op: Op for the training step.

eval_metric_ops: Dict of metric results keyed by name. The values of the dict are the results of calling a metric function, namely a (metric_tensor, update_op) tuple. metric_tensor should be evaluated without any impact on state (typically it is a pure computation based on variables). For example, it should not trigger the update_op or require any input fetching.

Export strategy:

export_outputs: Describes the output signatures to be exported to SavedModel and used during serving. A dict {name: output} where:

name: An arbitrary name for this output.

output: an ExportOutput object such as ClassificationOutput, RegressionOutput, or PredictOutput. Single-headed models only need to specify one entry in this dictionary. Multi-headed models should specify one entry for each head, one of which must be named using signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY.

Chief hooks, for example the checkpoint-saving hook CheckpointSaverHook used during training, model restoring, and so on:

training_chief_hooks: Iterable of tf.train.SessionRunHook objects to run on the chief worker during training.

Worker hooks, for example monitoring hooks used during training such as NanTensorHook and LoggingTensorHook:

training_hooks: Iterable of tf.train.SessionRunHook objects to run on all workers during training.

Initialization and saver:

scaffold: A tf.train.Scaffold object that can be used to set initialization, saver, and more to be used in training.

Evaluation hooks:

evaluation_hooks: Iterable of tf.train.SessionRunHook objects to run during evaluation.

The custom hook looks like this:

import os

import tensorflow as tf  # TensorFlow 1.x

slim = tf.contrib.slim


class RestoreCheckpointHook(tf.train.SessionRunHook):
    """Restores a filtered set of model variables from a checkpoint."""

    def __init__(self,
                 checkpoint_path,
                 exclude_scope_patterns,
                 include_scope_patterns):
        tf.logging.info("Create RestoreCheckpointHook.")
        self.checkpoint_path = checkpoint_path
        # Patterns arrive as comma-separated strings; empty or None means no filter.
        self.exclude_scope_patterns = (
            exclude_scope_patterns.split(',') if exclude_scope_patterns else None)
        self.include_scope_patterns = (
            include_scope_patterns.split(',') if include_scope_patterns else None)

    def begin(self):
        # Ops can still be added to the graph here, so build the saver now.
        print('Before starting the session.')

        # Manual alternative to filter_variables (prefix-based exclusion):
        # exclusions = [scope.strip()
        #               for scope in self.checkpoint_exclude_scopes.split(',')]
        # variables_to_restore = [
        #     var for var in slim.get_model_variables()  # or tf.global_variables()
        #     if not any(var.op.name.startswith(e) for e in exclusions)]
        # Prefix-based inclusion:
        # [var for var in tf.trainable_variables()
        #  if var.op.name.startswith('InceptionResnetV1')]

        variables_to_restore = tf.contrib.framework.filter_variables(
            slim.get_model_variables(),
            include_patterns=self.include_scope_patterns,  # e.g. ['Conv']
            exclude_patterns=self.exclude_scope_patterns,  # e.g. ['biases', 'Logits']
            # If True (default), performs re.search to find matches
            # (i.e. a pattern can match any substring of the variable name).
            # If False, performs re.match (i.e. the regexp must match from
            # the beginning of the variable name).
            reg_search=True,
        )
        self.saver = tf.train.Saver(variables_to_restore)

    def after_create_session(self, session, coord):
        # When this is called, the graph is finalized and
        # ops can no longer be added to the graph.
        print('Session created.')
        tf.logging.info('Fine-tuning from %s', self.checkpoint_path)
        self.saver.restore(session, os.path.expanduser(self.checkpoint_path))
        tf.logging.info('Finished restoring from %s', self.checkpoint_path)

    def before_run(self, run_context):
        # Return tf.train.SessionRunArgs(...) here to fetch extra tensors.
        return None

    def after_run(self, run_context, run_values):
        # run_values.results holds whatever before_run requested;
        # call run_context.request_stop() to end the training loop.
        pass

    def end(self, session):
        # Called once when the session is done.
        pass
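The include/exclude filtering used in begin() can be tried out without TensorFlow. The sketch below applies the same rules to plain name strings: keep a name if it matches any include pattern and no exclude pattern, with reg_search switching between re.search and re.match. filter_names and the variable names are hypothetical stand-ins; this mirrors the documented behavior of filter_variables rather than reproducing its source.

```python
import re


def filter_names(names, include_patterns=None, exclude_patterns=None,
                 reg_search=True):
    """Keep names matching any include pattern and no exclude pattern."""
    match = re.search if reg_search else re.match
    if include_patterns is None:
        kept = list(names)  # no include filter: start from everything
    else:
        kept = [n for n in names
                if any(match(p, n) for p in include_patterns)]
    if exclude_patterns is not None:
        kept = [n for n in kept
                if not any(match(p, n) for p in exclude_patterns)]
    return kept


# Hypothetical variable names, for illustration only.
names = [
    "InceptionResnetV1/Conv2d_1a/weights",
    "InceptionResnetV1/Conv2d_1a/biases",
    "InceptionResnetV1/Logits/weights",
]

restored = filter_names(names, include_patterns=["Conv"],
                        exclude_patterns=["biases", "Logits"])
print(restored)  # ['InceptionResnetV1/Conv2d_1a/weights']

# With reg_search=False the pattern must match at the start of the name.
from_start = filter_names(names, include_patterns=["Conv"], reg_search=False)
print(from_start)  # []
```

Checking the patterns this way before training helps avoid restoring a classifier head by accident, or restoring nothing at all.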

https://blog.csdn.net/andylei777/article/details/79074757

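I recently ran into some problems while training a model and am writing them down for future reference. They came up while using TensorFlow's Estimator interface. The official API documentation is not very detailed, so I searched around and read the source code to learn how it works step by step, and eventually solved the problem. tf.estimator is designed with distributed training in mind and includes many distribution strategies. It takes the following parameters:

1. model_fn: its inputs include features, labels, config, mode, and other parameter settings. The train, eval, and predict phases are distinguished mainly by the mode argument. This function defines how the model runs.

2. model_dir: the directory where the model is stored.

3. config: configuration settings, such as which distribution strategy to use.

4. params: hyperparameter settings.

5. warm_start_from: load from a previously saved checkpoint.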

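Estimator has some clever design choices. For example, saving checkpoints and printing logs are both implemented as hooks, that is, as SessionRunHook subclasses; see https://www.tensorflow.org/api_docs/python/tf/train/SessionRunHook. You can subclass SessionRunHook to run your own operations inside the session. Estimator runs a different training procedure depending on whether a distribution strategy is set, and the distributed case is implemented mainly through tf.train.MonitoredSession.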
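The order in which a hook's callbacks fire can be illustrated with a toy loop that needs no TensorFlow. This is only a sketch of the call sequence described in the SessionRunHook documentation: RecordingHook and run_training are hypothetical stand-ins, and the real session, coordinator, and run contexts are replaced by placeholders.

```python
class RecordingHook:
    """Stand-in for a SessionRunHook that records which callbacks fire."""

    def __init__(self):
        self.calls = []

    def begin(self):
        self.calls.append("begin")

    def after_create_session(self, session, coord):
        self.calls.append("after_create_session")

    def before_run(self, run_context):
        self.calls.append("before_run")

    def after_run(self, run_context, run_values):
        self.calls.append("after_run")

    def end(self, session):
        self.calls.append("end")


def run_training(hooks, num_steps):
    """Toy loop that mimics the order in which hook callbacks are invoked."""
    for hook in hooks:
        hook.begin()  # the graph can still be modified at this point
    session = object()  # placeholder for a real session
    for hook in hooks:
        hook.after_create_session(session, coord=None)
    for _ in range(num_steps):
        for hook in hooks:
            hook.before_run(run_context=None)
        # A real session would execute the training op here.
        for hook in hooks:
            hook.after_run(run_context=None, run_values=None)
    for hook in hooks:
        hook.end(session)


hook = RecordingHook()
run_training([hook], num_steps=2)
print(hook.calls)
```

This ordering is why a restore hook builds its saver in begin(), while ops can still be added, and performs the actual restore in after_create_session(), once a session exists.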

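When training a model, it is common to load the parameters of an earlier model and then either fine-tune it or change the graph structure, for example by adding layers. In my case I changed the optimizer.

Estimator uses CheckpointSaverHook (source in tensorflow/python/training/basic_session_run_hooks.py) to write a checkpoint at regular intervals. So that training can resume where it stopped, the checkpoint also contains the optimizer's own variables. Adam, for example, keeps two variables per parameter, m and v (see my earlier post https://blog.csdn.net/u013453936/article/details/79088291). They are stored in the checkpoint under the optimizer's default name, so m and v become Adam and Adam_1. With Adagrad, the variables are named Adagrad instead.

By default Estimator loads the checkpoint found in model_dir. An optimizer such as SWATS (see https://github.com/summersunshine1/optimize/blob/master/adadelta.py) keeps more than two variables, so even if it is named Adam, the new graph may contain Adam_2 and Adam_3, which the old checkpoint does not have, and loading fails with errors such as "not found Adam_2".

Is there no way to load only part of the old model's parameters into the new model, so that the old model can be modified while its parameters are reused? In earlier fine-tuning work this only took a direct call to tf.train.Saver. The question is how to do the same with Estimator.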

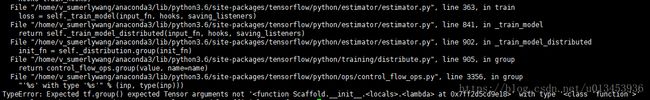
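The mismatch described above can be reproduced on plain name lists. The sketch below compares the variable names a new graph expects with the names an old checkpoint contains; split_restorable and all names are hypothetical, chosen to mimic an optimizer that keeps four slot variables restoring from a checkpoint written with two.

```python
def split_restorable(graph_names, checkpoint_names):
    """Split graph variable names into those found in a checkpoint and those missing."""
    checkpoint_names = set(checkpoint_names)
    found = [n for n in graph_names if n in checkpoint_names]
    missing = [n for n in graph_names if n not in checkpoint_names]
    return found, missing


# Hypothetical names: the old checkpoint was written with two slots per variable.
checkpoint_names = ["dense/kernel", "dense/kernel/Adam", "dense/kernel/Adam_1"]
# The new graph uses an optimizer that keeps four slots per variable.
graph_names = [
    "dense/kernel",
    "dense/kernel/Adam",
    "dense/kernel/Adam_1",
    "dense/kernel/Adam_2",
    "dense/kernel/Adam_3",
]

found, missing = split_restorable(graph_names, checkpoint_names)
print(missing)  # ['dense/kernel/Adam_2', 'dense/kernel/Adam_3']
```

A saver built over every graph variable would request the missing names and fail, whereas a saver restricted to the found names, or to the model weights only, would not.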

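I tried four approaches: two of them do not work in a multi-GPU setup, another triggers a very strange bug, and the last one finally solved the problem. First, the Estimator interface has a warm_start_from parameter, which loads parameters from an old model. Using warm_start_from produces "TypeError: var MUST be one of the following: a Variable, list of Variable or PartitionedVariable, but is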

# Restore everything except variables whose name contains 'Adam'.
var_to_restore = []
for var in tf.trainable_variables():
    if 'Adam' not in var.name:
        var_to_restore.append(var)

checkpoint_state = tf.train.get_checkpoint_state(params["model_dir"])
input_checkpoint = checkpoint_state.model_checkpoint_path
pretrain_saver = tf.train.Saver(var_to_restore)

def init_fun(scaffold, session):
    # Scaffold calls init_fn(scaffold, session) after the init op has run.
    pretrain_saver.restore(session, input_checkpoint)

sca = tf.train.Scaffold(init_fn=init_fun)

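Searching further for how to fine-tune with Estimator, I found that this is a multi-GPU bug that had only just been reported on GitHub, see https://github.com/tensorflow/tensorflow/issues/19958. I was close to despair.

In another issue, https://github.com/tensorflow/tensorflow/issues/10155, I saw mixuala's reply saying that hooks can be used to load model parameters. The hook subclasses SessionRunHook and restores the model after the session has been created. This approach finally solved my problem, and I also posted a reply in https://github.com/tensorflow/tensorflow/issues/19958. It is only a workaround, though, and I really hope TensorFlow fixes the multi-device problem.

Through repeated experimentation I gradually became familiar with Estimator and solved the problem. My one complaint is that the official documentation is terse and lacks detailed usage examples, although TensorFlow's design really is clever.

https://blog.csdn.net/u013453936/article/details/80787271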