Advanced Flume (transactions, internals, custom components, case studies)

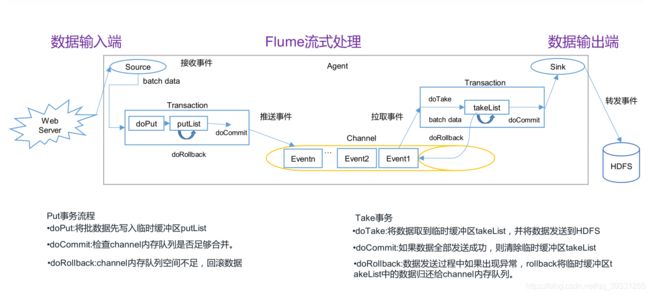

Flume Transactions

Flume Agent Internals

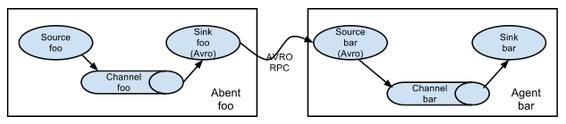

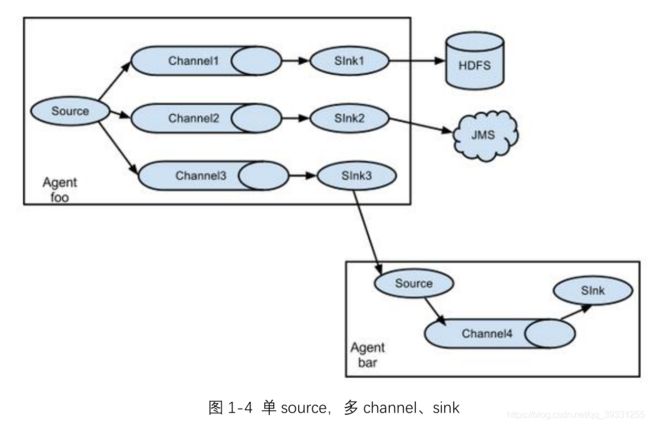

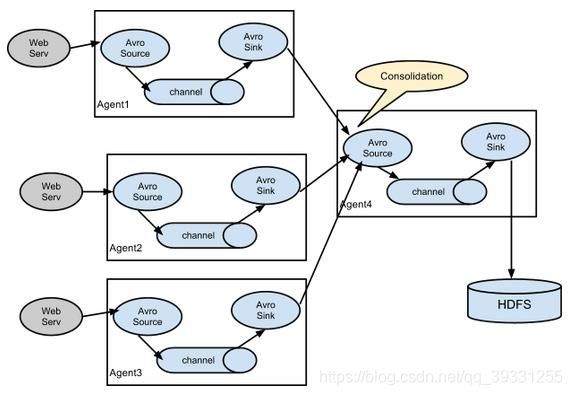

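Flume Topologies

1. Simple chaining

2. Replicating and multiplexing (multiplexing must be used together with an interceptor)

3. Load balancing and failover

Flume can logically group multiple sinks into a sink group. Combined with different SinkProcessors, a sink group provides load balancing and failover.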
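To make the two sink-group behaviours concrete, here is a minimal sketch in plain Java. It does not use the Flume API: the class and method names are hypothetical, and it only illustrates the selection rule (failover picks the live sink with the highest priority value, load balancing rotates through the sinks).

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration only; not Flume's SinkProcessor implementation.
public class SinkGroupSketch {

    // Failover: return the live sink with the highest priority, or null if all sinks are down.
    public static String pickFailover(Map<String, Integer> priorities, Set<String> failed) {
        String best = null;
        for (Map.Entry<String, Integer> e : priorities.entrySet()) {
            if (failed.contains(e.getKey())) {
                continue; // skip sinks that are currently marked as failed
            }
            if (best == null || e.getValue() > priorities.get(best)) {
                best = e.getKey();
            }
        }
        return best;
    }

    // Load balancing (round-robin flavour): rotate through the sinks by call number.
    public static String pickRoundRobin(List<String> sinks, int callNumber) {
        return sinks.get(callNumber % sinks.size());
    }

    public static void main(String[] args) {
        Map<String, Integer> priorities = Map.of("k1", 5, "k2", 10);
        System.out.println(pickFailover(priorities, Set.of()));      // k2 (higher priority)
        System.out.println(pickFailover(priorities, Set.of("k2")));  // k1 (k2 is down)
        System.out.println(pickRoundRobin(List.of("k1", "k2"), 3));  // k2
    }
}
```

With priorities k1 = 5 and k2 = 10, events go to k2 until it fails and then fall back to k1, which matches the failover case later in these notes.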

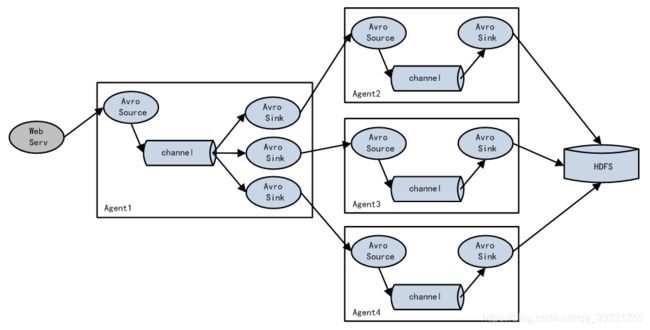

4. Aggregation

Flume Enterprise Development Cases

1. Replicating and multiplexing

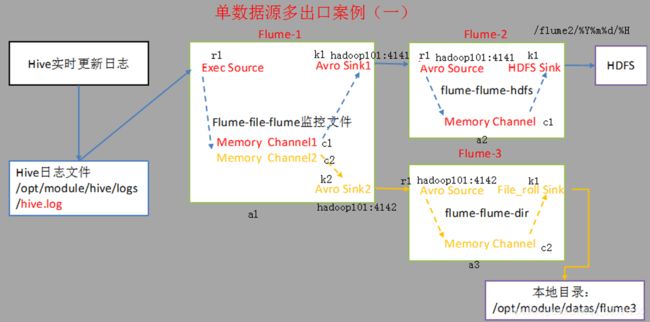

Single source, multiple outputs (replicating)

Create a flume3 folder under /opt/module/datas/

[root@hadoop003 datas]$ mkdir flume3

Create a group1 folder under flume/job

[root@hadoop003 job]$ mkdir group1

Configure the Flume agent that monitors log changes

[root@hadoop003 group1]$ touch flume-file-flume.conf

[root@hadoop003 group1]$ vim flume-file-flume.conf

Add the following content:

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Replicate the data flow to multiple channels

a1.sources.r1.selector.type = replicating

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /opt/module/hive/logs/hive.log

a1.sources.r1.shell = /bin/bash -c

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop003

a1.sinks.k1.port = 4141

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop003

a1.sinks.k2.port = 4142

# Describe the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2

Configure an agent whose source receives the upstream Flume's output and writes it to HDFS

[root@hadoop003 group1]$ touch flume-flume-hdfs.conf

[root@hadoop003 group1]$ vim flume-flume-hdfs.conf

Add the following content:

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop003

a2.sources.r1.port = 4141

# Describe the sink

a2.sinks.k1.type = hdfs

a2.sinks.k1.hdfs.path = hdfs://hadoop003:9000/flume2/%Y%m%d/%H

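# Prefix of the uploaded files

a2.sinks.k1.hdfs.filePrefix = flume2-

# Whether to roll directories by time (round the timestamp down)

a2.sinks.k1.hdfs.round = true

# How many time units pass before a new directory is created

a2.sinks.k1.hdfs.roundValue = 1

# The time unit used for rounding

a2.sinks.k1.hdfs.roundUnit = hour

# Whether to use the local timestamp

a2.sinks.k1.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before flushing to HDFS

a2.sinks.k1.hdfs.batchSize = 100

# File type; compression is supported

a2.sinks.k1.hdfs.fileType = DataStream

# How often (in seconds) to roll a new file

a2.sinks.k1.hdfs.rollInterval = 30

# Roll each file at roughly 128 MB

a2.sinks.k1.hdfs.rollSize = 134217700

# File rolling does not depend on the number of events

a2.sinks.k1.hdfs.rollCount = 0

# Minimum number of block replicas

a2.sinks.k1.hdfs.minBlockReplicas = 1

# Timeout for HDFS operations in milliseconds (default: 10000)

a2.sinks.k1.hdfs.callTimeout = 1000000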

# Describe the channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

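Configure an agent whose source receives the upstream Flume's output and writes it to a local directory.

The local output directory must already exist.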

[root@hadoop003 group1]$ touch flume-flume-dir.conf

[root@hadoop003 group1]$ vim flume-flume-dir.conf

Add the following content:

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c2

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop003

a3.sources.r1.port = 4142

# Describe the sink

a3.sinks.k1.type = file_roll

a3.sinks.k1.sink.directory = /opt/module/datas/flume3

# Describe the channel

a3.channels.c2.type = memory

a3.channels.c2.capacity = 1000

a3.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c2

a3.sinks.k1.channel = c2

Start Hadoop

[root@hadoop003 hadoop-2.7.2]$ sbin/start-dfs.sh

[root@hadoop003 hadoop-2.7.2]$ sbin/start-yarn.sh

Start the agents

Both when starting and when stopping, handle the receiving agents first and the sending agent afterwards

[root@hadoop003 flume]$ bin/flume-ng agent --conf conf/ --name a3 --conf-file job/group1/flume-flume-dir.conf

[root@hadoop003 flume]$ bin/flume-ng agent --conf conf/ --name a2 --conf-file job/group1/flume-flume-hdfs.conf

[root@hadoop003 flume]$ bin/flume-ng agent --conf conf/ --name a1 --conf-file job/group1/flume-file-flume.conf

Start Hive

[root@hadoop003 hive]$ bin/hive

hive (default)>

Check the data on HDFS and in the local directory

2. Load balancing and failover

Failover case

flume-1

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1

a1.sinkgroups = g1

a1.sources.r1.type = netcat

a1.sources.r1.bind = hadoop003

a1.sources.r1.port = 44444

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop003

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop003

a1.sinks.k2.port = 4546

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = failover

a1.sinkgroups.g1.processor.priority.k1 = 5

a1.sinkgroups.g1.processor.priority.k2 = 10

a1.sinkgroups.g1.processor.maxpenalty = 10000

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

flume-2

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop003

a2.sources.r1.port = 4545

# Describe the sink

a2.sinks.k1.type = logger

# Use a channel which buffers events in memory

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

flume-3

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c1

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop003

a3.sources.r1.port = 4546

# Describe the sink

a3.sinks.k1.type = logger

# Use a channel which buffers events in memory

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

Load balancing case (flume-1 configuration)

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1

a1.sinkgroups = g1

a1.sources.r1.type = netcat

a1.sources.r1.bind = hadoop003

a1.sources.r1.port = 44444

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop003

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop003

a1.sinks.k2.port = 4546

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = load_balance

a1.sinkgroups.g1.processor.backoff = true

a1.sinkgroups.g1.processor.selector = random

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

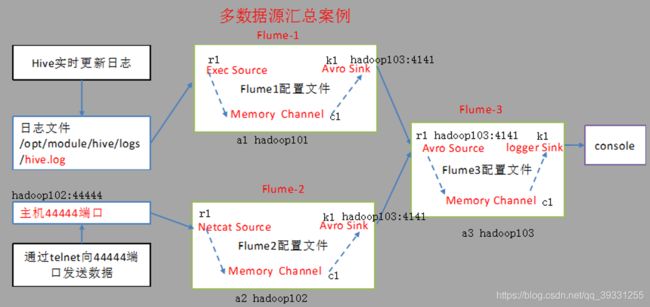

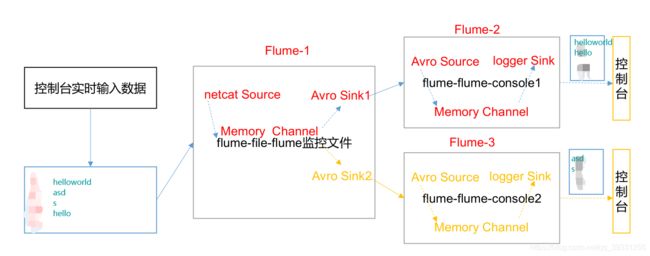

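3. Aggregation (consolidating multiple data sources)

For convenience, this case runs all three Flume agents on a single host, hadoop003.

Later they can be spread across three hosts as shown in the figure below, but Flume must be installed on each of them.

Create a group2 folder under /opt/module/flume/job on hadoop003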

[root@hadoop003 job]$ mkdir group2

Configure flume1 to monitor hive.log, with its sink sending data to the next-level agent, flume3.

[root@hadoop003 group2]$ touch flume1.conf

[root@hadoop003 group2]$ vim flume1.conf

Add the following configuration:

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /opt/module/hive/logs/hive.log

a1.sources.r1.shell = /bin/bash -c

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop003

a1.sinks.k1.port = 4141

# Describe the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

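Configure flume2 to monitor the data stream on port 44444, with its sink sending data to the next-level agent, flume3:

[root@hadoop003 group2]# vim flume2.conf

Add the following configuration: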

#Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = netcat

a2.sources.r1.bind = hadoop003

a2.sources.r1.port = 44444

# Describe the sink

a2.sinks.k1.type = avro

a2.sinks.k1.hostname = hadoop003

a2.sinks.k1.port = 4141

# Use a channel which buffers events in memory

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

Configure flume3 to receive the data streams sent by flume1 and flume2, merge them, and sink the result to the console.

[root@hadoop003 group2]# vim flume3.conf

Add the following configuration:

#Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c1

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop003

a3.sources.r1.port = 4141

# Describe the sink

a3.sinks.k1.type = logger

# Describe the channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

Start the agents with their configuration files in this order: flume3.conf, flume2.conf, flume1.conf.

Start flume3

bin/flume-ng agent --conf conf/ --name a3 --conf-file job/group2/flume3.conf -Dflume.root.logger=INFO,console

Start flume2

bin/flume-ng agent --conf conf/ --name a2 --conf-file job/group2/flume2.conf

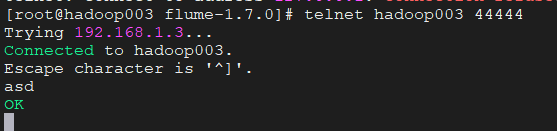

Log in to hadoop003 and send the data asd to port 44444

Check the console output of flume3

Start flume1

bin/flume-ng agent --conf conf/ --name a1 --conf-file job/group2/flume1.conf

[root@hadoop003 logs]# echo '111111' >> hive.log

Check the console output of flume3

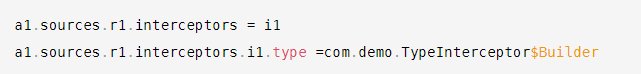

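4. Custom interceptor (multiplexing)

An Interceptor is used together with the Multiplexing ChannelSelector.

Implementation: the interceptor adds a header (a key-value pair) to every event. In the Flume conf file, the ChannelSelector section then maps each header value to a different channel.

Write the interceptor class, package it as a jar, and upload it to flume/lib on the virtual machine.

Add the dependency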
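The routing idea can be sketched without Flume at all. The snippet below is a hypothetical, self-contained illustration (the class and method names are made up): `tag` plays the role of the interceptor and `route` plays the role of the multiplexing selector's mapping, using the same `type` header and `good`/`bad` values as the case that follows. The fallback channel argument is an assumption added for the sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of "interceptor sets a header, selector routes on it".
public class MultiplexingSketch {

    // Interceptor step: tag the event according to whether its body contains "hello".
    public static Map<String, String> tag(String body) {
        Map<String, String> headers = new HashMap<>();
        headers.put("type", body.contains("hello") ? "good" : "bad");
        return headers;
    }

    // Selector step: look up the target channel by the value of the "type" header.
    public static String route(Map<String, String> headers,
                               Map<String, String> mapping,
                               String defaultChannel) {
        String value = headers.get("type");
        if (value == null) {
            return defaultChannel; // no header: fall back to the default channel
        }
        return mapping.getOrDefault(value, defaultChannel);
    }

    public static void main(String[] args) {
        Map<String, String> mapping = new HashMap<>();
        mapping.put("good", "c1");
        mapping.put("bad", "c2");
        System.out.println(route(tag("hello flume"), mapping, "c2")); // c1
        System.out.println(route(tag("something else"), mapping, "c2")); // c2
    }
}
```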

<dependencies>

<dependency>

<groupId>org.apache.flume</groupId>

<artifactId>flume-ng-core</artifactId>

<version>1.7.0</version>

</dependency>

</dependencies>

Write the class

package com.demo;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

/**

* @author ljh

* @create 2021-02-20 18:20

*/

public class TypeInterceptor implements Interceptor {

//Collection that holds the processed events

List<Event> typeEvents;

//Initialize the collection

public void initialize() {

typeEvents=new ArrayList<Event>();

}

//Intercept a single event

public Event intercept(Event event) {

//Get the event's headers and body

Map<String, String> headers = event.getHeaders();

String body = new String(event.getBody());

//Choose the header value depending on whether the body contains "hello"

if(body.contains("hello")){

headers.put("type","good");

}else{

headers.put("type","bad");

}

return event;

}

//Intercept a batch of events

public List<Event> intercept(List<Event> list) {

//Clear the events left over from the previous batch

typeEvents.clear();

//Add the header to every event

for (Event event:list){

Event e = intercept(event);

typeEvents.add(e);

}

return typeEvents;

}

public void close() {

}

//The nested class name is arbitrary, but the configuration must use exactly this name

public static class Builder implements Interceptor.Builder{

public Interceptor build() {

return new TypeInterceptor();

}

public void configure(Context context) {

}

}

}

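a1 collects the data and multiplexes it to a2 and a3

The interceptor type below is the fully qualified name of the custom class, followed by $ and the name of its nested builder class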

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.demo.TypeInterceptor$Builder

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = type

a1.sources.r1.selector.mapping.good = c1

a1.sources.r1.selector.mapping.bad = c2

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop003

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop003

a1.sinks.k2.port = 4546

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2
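The `$` in the interceptor type above is how Java names a nested class at runtime (its binary name). A small sketch, using a hypothetical class rather than the real interceptor, shows that `Outer$Inner` is what `Class.getName()` returns and what `Class.forName` accepts:

```java
// Hypothetical class used only to show the binary name of a nested class.
public class BinaryNameSketch {

    // Stand-in for a nested builder such as TypeInterceptor.Builder.
    public static class Builder { }

    // Returns the runtime (binary) name of the nested class, which contains '$'.
    public static String builderName() {
        return Builder.class.getName();
    }

    public static void main(String[] args) throws ClassNotFoundException {
        String name = builderName();
        System.out.println(name);
        // Loading the class by that name yields the same nested class.
        System.out.println(Class.forName(name) == Builder.class);
    }
}
```

This is why the configuration uses `com.demo.TypeInterceptor$Builder` rather than a dot before `Builder`.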

a2 prints to the console (events that contain hello)

a2.sources = r1

a2.sinks = k1

a2.channels = c1

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop003

a2.sources.r1.port = 4545

a2.sinks.k1.type = logger

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

a3 prints to the console (events that do not contain hello)

a3.sources = r1

a3.sinks = k1

a3.channels = c1

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop003

a3.sources.r1.port = 4546

a3.sinks.k1.type = logger

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

Run

flume-ng agent -n a1 -c /opt/module/flume-1.7.0/conf/ -f netcat-intercepter-avros.conf

flume-ng agent -n a2 -c /opt/module/flume-1.7.0/conf/ -f avro-flume-logs1.conf -Dflume.root.logger=INFO,console

flume-ng agent -n a3 -c /opt/module/flume-1.7.0/conf/ -f avro-flume-logs2.conf -Dflume.root.logger=INFO,console

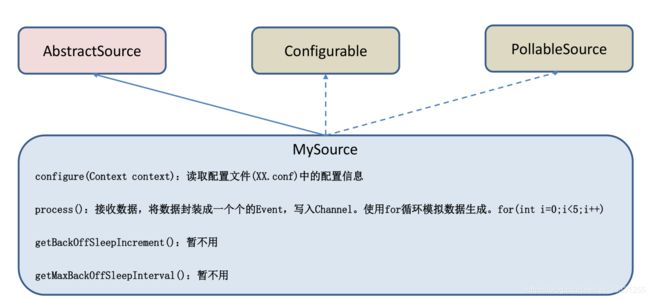

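5. Custom source

Developer guide: http://flume.apache.org/releases/content/1.7.0/FlumeDeveloperGuide.html#source

Implementation: add the dependency, write the custom source code, package it as a jar and place it under flume/lib, then write the conf and reference the custom source's fully qualified class name in the source section. After that it is ready to run.

Requirement: use Flume to receive data, add a prefix to each record, and print it to the console. The prefix is configurable in the Flume configuration file.

Add the dependency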

<dependencies>

<dependency>

<groupId>org.apache.flume</groupId>

<artifactId>flume-ng-core</artifactId>

<version>1.7.0</version>

</dependency>

</dependencies>

Write the class

package com.demo;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.EventDeliveryException;

import org.apache.flume.PollableSource;

import org.apache.flume.conf.Configurable;

import org.apache.flume.event.SimpleEvent;

import org.apache.flume.source.AbstractSource;

/**

* @author ljh

* @create 2021-02-22 16:34

*/

public class MySource extends AbstractSource implements Configurable, PollableSource {

private String prefix;

private String subfix;

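//Read the configuration and assign the prefix and suffix

public void configure(Context context) {

prefix=context.getString("prefix");//read from the configuration

subfix=context.getString("subfix","aaa");//default is "aaa"; a value in the configuration file takes precedence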

}

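/**

* Receive data (generated here with a for loop; later it could come from an IO stream or JDBC)

* Wrap the data in an event

* Pass the event to the channel

* @return the source status: READY on success, BACKOFF on failure

*/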

public Status process() throws EventDeliveryException {

Status status=null;

try {

for (int i=0;i<5;i++){

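//Build the event

//Create a new event on every iteration. Hoisting this line out of the loop to reuse one object does not work, likely because the channel keeps a reference to each event it receives, so reuse would overwrite events that are already queued

SimpleEvent event = new SimpleEvent();

//Set the event body

//The prefix comes from the configuration; the suffix falls back to its default when not configured

event.setBody((prefix+"--"+i+"--"+subfix).getBytes());

//Pass the event to the channel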

getChannelProcessor().processEvent(event);

status=Status.READY;

}

} catch (Exception e) {

e.printStackTrace();

status=Status.BACKOFF;

}

//Sleep 2 s after each batch so the output is not too fast to observe

try {

Thread.sleep(2000);

} catch (InterruptedException e) {

e.printStackTrace();

}

return status;

}

public long getBackOffSleepIncrement() {

return 0;

}

public long getMaxBackOffSleepInterval() {

return 0;

}

}

Configuration file

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = com.demo.MySource

a1.sources.r1.prefix = hello

a1.sources.r1.subfix = ss

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Run

flume-ng agent -n a1 -c /opt/module/flume-1.7.0/conf/ -f mySource.conf -Dflume.root.logger=INFO,console

Note: the logger sink limits how much of each event body it prints. From the official documentation: maxBytesToLog, default 16, "Maximum number of bytes of the Event body to log".
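The effect of that limit can be illustrated with a plain-Java sketch. This is not the logger sink's code (the class and method names are hypothetical, and the real sink's output format differs); it only shows what keeping the first 16 bytes of a body means. To see longer bodies, the documented `maxBytesToLog` property would be set on the sink, for example `a1.sinks.k1.maxBytesToLog = 256` (the value 256 is an arbitrary choice).

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical illustration of a byte limit on the logged event body.
public class MaxBytesSketch {

    // Keep at most maxBytes bytes of the body, as a stand-in for the sink's cutoff.
    public static String preview(String body, int maxBytes) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        byte[] head = Arrays.copyOf(bytes, Math.min(bytes.length, maxBytes));
        return new String(head, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(preview("hello--0--ss", 16));             // short body, printed in full
        System.out.println(preview("a-much-longer-event-body", 16)); // a-much-longer-ev
    }
}
```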

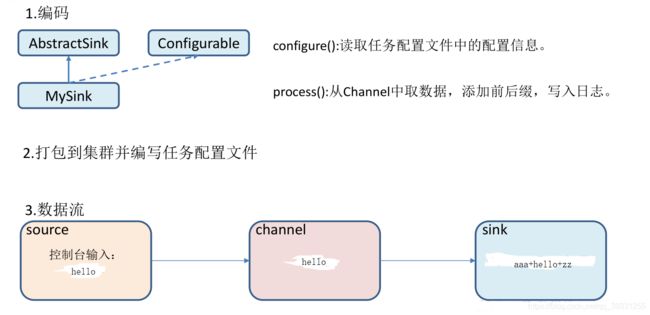

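6. Custom Sink

A Sink is fully transactional. Before removing a batch of data from the Channel, each Sink starts a transaction with the Channel. Once the batch of events has been written successfully to the storage system or to the next Flume Agent, the Sink commits the transaction through the Channel. Once the transaction is committed, the Channel deletes those events from its internal buffer.

Requirement: use Flume to receive data, add a prefix and a suffix to each record on the Sink side, and print it to the console. The prefix and suffix are configurable in the Flume job configuration file.

Write the custom class, package it as a jar, and upload it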
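The take/commit/rollback cycle described above can be modelled in a few lines of plain Java. This is a simplified sketch, not Flume's Channel or Transaction classes: the class name and its methods are hypothetical, and it handles a single open transaction only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-transaction model of a sink taking events from a channel.
public class TakeTransactionSketch {

    private final Deque<String> buffer = new ArrayDeque<>(); // stands in for the channel's internal buffer
    private final List<String> taken = new ArrayList<>();    // events taken in the open transaction

    public void put(String event) {
        buffer.addLast(event);
    }

    // Take the next event; it stays tracked until commit or rollback.
    public String take() {
        String event = buffer.pollFirst();
        if (event != null) {
            taken.add(event);
        }
        return event;
    }

    // Delivery succeeded: the taken events are gone for good.
    public void commit() {
        taken.clear();
    }

    // Delivery failed: return the taken events to the head of the buffer in their original order.
    public void rollback() {
        for (int i = taken.size() - 1; i >= 0; i--) {
            buffer.addFirst(taken.get(i));
        }
        taken.clear();
    }

    public int size() {
        return buffer.size();
    }

    public static void main(String[] args) {
        TakeTransactionSketch channel = new TakeTransactionSketch();
        channel.put("a");
        channel.put("b");
        channel.take();       // takes "a"
        channel.rollback();   // "a" goes back to the head
        System.out.println(channel.size()); // 2
        channel.take();
        channel.commit();     // "a" is removed permanently
        System.out.println(channel.size()); // 1
    }
}
```

A rollback leaves the events available for a later retry, which is why a failed write in the sink does not lose data.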

package com.demo;

import org.apache.flume.*;

import org.apache.flume.conf.Configurable;

import org.apache.flume.sink.AbstractSink;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* @author ljh

* @create 2021-02-22 21:04

*/

public class MySink extends AbstractSink implements Configurable {

//Prefix and suffix, used to test assigning values through key-value pairs in the conf file

private String prefix;

private String subfix;

//Create the logger object

private Logger logger = LoggerFactory.getLogger(MySink.class);

public void configure(Context context) {

prefix = context.getString("prefix");//read the prefix from the conf

subfix=context.getString("subfix");//read the suffix from the conf (no default value is given here)

}

/**

* Get the channel

* Get a transaction and an event from the channel

* Send the event

*/

public Status process() throws EventDeliveryException {

Status status= null;

Channel channel = getChannel();

Transaction transaction = channel.getTransaction();

try {

transaction.begin();

Event event = channel.take();

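//Process the event

if(event!=null){

String body = new String(event.getBody());

//Output through the logger; whether it goes to the console or a file depends on -Dflume.root.logger=INFO,console

String tmp=prefix + body+subfix;//Known issue: the suffix does not appear in the output, and the cause has not been found yet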

logger.info(tmp);

}

transaction.commit();

status=Status.READY;

} catch (Exception e) {

e.printStackTrace();

transaction.rollback();

status=Status.BACKOFF;

}finally {

transaction.close();

}

return status;

}

}

Write the conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe the sink

a1.sinks.k1.type = com.demo.MySink

a1.sinks.k1.prefix=aaa

a1.sinks.k1.subfix=qq

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Start the agent

flume-ng agent -n a1 -c /opt/module/flume-1.7.0/conf/ -f mySink.conf -Dflume.root.logger=INFO,console