Getting Started with MapReduce


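- MapReduce Template
  - Driver Template
  - Mapper Template
  - Reducer Template
- WordCount Mini-Project
  - Driver Class
  - Mapper Class
  - Reducer Class
- Running on the Cluster
  - Starting the Cluster
  - Start ZooKeeper
  - Start HDFS
  - Start YARN
  - Uploading Files
  - Running the Job
  - Shutting Down the Cluster
- Running Locally
  - Local Setup
- Second-Hand Housing Count Mini-Project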


MapReduce Template

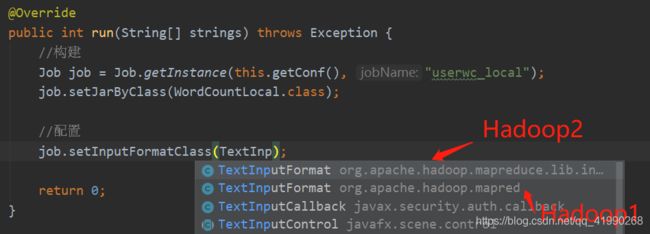

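When writing the code, pay attention to the imports. Always pick the classes from packages whose names contain hadoop (for example org.apache.hadoop.io.Text); importing a same-named class from another package will keep the program from running correctly.

Driver Template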

package com.aa.mode;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class MRDriverMode extends Configured implements Tool {

//Build, configure, connect, and submit the Job

@Override

public int run(String[] args) throws Exception{

//Build the Job

//Instantiate a MapReduce Job object

Job job = Job.getInstance(this.getConf(), "mode");

//Specify the class used to locate the jar that contains this job

job.setJarByClass(MRDriverMode.class);

//Configure the Job

//Input: configure the input

//Specify the input format class

job.setInputFormatClass(TextInputFormat.class);//optional; TextInputFormat is the default

//Specify the input source

Path inputPath = new Path(args[0]);//use the first argument as the program's input

TextInputFormat.setInputPaths(job,inputPath);

//Map: configure the Map stage

job.setMapperClass(MRMapperMode.class);//set the Mapper class to call

job.setMapOutputKeyClass(Text.class);//set the type of K2

job.setMapOutputValueClass(Text.class);//set the type of V2

//Shuffle: configure the Shuffle stage; write null as a placeholder first (commented out) and fill in real classes later

//job.setPartitionerClass(null);//set the partitioner

//job.setSortComparatorClass(null);//set the sort comparator

//job.setGroupingComparatorClass(null);//set the grouping comparator

//job.setCombinerClass(null);//set map-side aggregation (combiner)

//Reduce: configure the Reduce stage

job.setReducerClass(MRReducerMode.class);//set the Reducer class to call

job.setOutputKeyClass(Text.class);//set the type of K3

job.setOutputValueClass(Text.class);//set the type of V3

//job.setNumReduceTasks(1);//set the number of ReduceTasks; the default is 1, so this line is optional

//Output: configure the output

//Specify the output format class

job.setOutputFormatClass(TextOutputFormat.class);//TextOutputFormat is the default

//Set the output path

Path outputPath = new Path(args[1]);

//If the output path already exists, delete it

FileSystem fs = FileSystem.get(this.getConf());

if(fs.exists(outputPath)){

fs.delete(outputPath,true);//true means recursive delete, so directories can be removed

}

TextOutputFormat.setOutputPath(job,outputPath);

//Submit the Job

return job.waitForCompletion(true)?0:-1;//true prints the progress; this method calls submit() internally

}

//Program entry point

public static void main(String[] args) throws Exception{

//Build the configuration object

Configuration conf = new Configuration();

//Call run() through ToolRunner

int status = ToolRunner.run(conf, new MRDriverMode(), args);

System.out.println("status = " + status);

//Exit the program

System.exit(status);

}

}

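When configuring the Map and Reduce parameters, you can write null as a placeholder first and fill in the real classes later. Keep such placeholder lines commented out, as in the Shuffle section of the template, since a null class is not a usable setting at run time.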
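The commented-out `setPartitionerClass` line in the Driver template is where a custom partitioner would go. As a rough illustration of what partitioning does, the plain-Java sketch below mimics the rule used by Hadoop's default `HashPartitioner`: take the key's hash, clear the sign bit, and take the remainder modulo the number of reduce tasks. The names `PartitionSketch` and `partitionFor` are hypothetical, and the sketch uses `String.hashCode()` where Hadoop would hash the `Text` key, so the partition numbers it prints will not match those on a real cluster.

```java
// A plain-Java illustration of hash partitioning; it does not depend on Hadoop.
class PartitionSketch {

    // Same shape as the default rule: (hash & Integer.MAX_VALUE) % numReduceTasks.
    // Masking with Integer.MAX_VALUE clears the sign bit, so the result is never negative.
    static int partitionFor(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        String[] keys = {"hadoop", "hive", "spark", "hadoop"};
        for (String k : keys) {
            // Equal keys always land in the same partition.
            System.out.println(k + " -> partition " + partitionFor(k, 3));
        }
    }
}
```

With the default of one ReduceTask every key maps to partition 0, which is why the partitioner only starts to matter once `setNumReduceTasks` is raised above 1.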

Mapper Template

package com.aa.mode;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class MRMapperMode extends Mapper<LongWritable, Text,Text,Text> {

//The four type parameters are: KEYIN, VALUEIN, KEYOUT, VALUEOUT

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//The Map logic depends on the actual requirements

}

}

Reducer Template

package com.aa.mode;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

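/**

* Reducer template: receives each key together with all of its values and emits the final key-value pairs.

*/

public class MRReducerMode extends Reducer<Text,Text,Text,Text> {

//The four type parameters are: KEYIN, VALUEIN, KEYOUT, VALUEOUT

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//The Reduce logic depends on the actual requirements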

}

}

From here on, MapReduce code can be written by following this set of templates: back to the days of Ctrl+C, Ctrl+V, and tweaking parameters...
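To make the K1/V1 → K2/V2 → K3/V3 flow behind these templates more concrete, here is a minimal in-memory sketch in plain Java. It is only an analogy, not how Hadoop is implemented: the names `FlowSketch`, `map`, `shuffle`, `reduce`, and `run` are made up for illustration, a `TreeMap` stands in for the sort-and-group work of the shuffle, and everything runs in a single thread.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class FlowSketch {

    // Map: one input line (V1) becomes zero or more (K2, V2) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(new SimpleEntry<>(word, 1));
            }
        }
        return out;
    }

    // Shuffle: group all V2 values by K2, with keys kept in sorted order.
    static TreeMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // Reduce: one key and its list of values become one (K3, V3) pair.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Chains the three stages over a list of input lines.
    static TreeMap<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
        for (String line : lines) {
            mapped.addAll(map(line));
        }
        TreeMap<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : shuffle(mapped).entrySet()) {
            result.put(e.getKey(), reduce(e.getKey(), e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("hadoop hive", "hive spark hive")));
    }
}
```

In the real templates, the Driver wires these stages together, the Mapper class plays the role of `map`, the framework performs the shuffle, and the Reducer class plays the role of `reduce`.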

WordCount Mini-Project

Driver Class

package com.aa.wordcount;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class WordCountDriver extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//Build the Job

Job job = Job.getInstance(this.getConf(), "userwc");

job.setJarByClass(WordCountDriver.class);

//Configure the Job

job.setInputFormatClass(TextInputFormat.class);

//Use the program's first argument as the input path

TextInputFormat.setInputPaths(job, new Path(args[0]));

job.setMapperClass(WordCountMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setReducerClass(WordCountReduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

//Use the program's second argument as the output path

Path outputPath = new Path(args[1]);

FileSystem fs = FileSystem.get(this.getConf());

if (fs.exists(outputPath)) {

fs.delete(outputPath, true);

}

TextOutputFormat.setOutputPath(job, outputPath);

//Submit the Job

return job.waitForCompletion(true) ? 0 : 1;

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

int status = ToolRunner.run(conf, new WordCountDriver(), args);

System.out.println("status = " + status);

System.exit(status);

}

}

Mapper Class

package com.aa.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WordCountMapper extends Mapper<LongWritable, Text,Text, IntWritable> {

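//K2 to emit

Text outputKey = new Text();

//V2 to emit

IntWritable outputValue = new IntWritable(1);

//map() is called once for every input KV pair

@Override//in the IDE, typing "map" and accepting the completion generates this override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//Split the line into individual words

String[] words = value.toString().split("\\s+");

//split() breaks the string around matches of the given regular expression; \s+ matches any run of whitespace (spaces, tabs, carriage returns, line feeds)

//Iterate over the words, using each one as K2 (IDEA's "iter" shortcut generates this loop; decompiling shows that an enhanced for loop over an array is still an ordinary for loop underneath)

for (String word : words) {

//Skip the empty token that split() returns for a blank line or for leading whitespace

if (word.isEmpty()) {

continue;

}

//Use the current word as K2

this.outputKey.set(word);

//Pass K2 and V2 on to the next step

context.write(outputKey,outputValue);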

}

}

}
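One detail of `split("\\s+")` is worth checking before trusting the counts: for an empty line, or a line that starts with whitespace, Java's `String.split` returns an empty string as the first token, and a mapper that writes every token would count it as a word. The sketch below shows that behaviour in plain Java together with one possible way to filter such tokens; the class name `SplitSketch` and the helper `tokens` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class SplitSketch {

    // Splits on runs of whitespace and drops empty tokens.
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        for (String word : line.split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(word);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Raw split: the leading space produces an empty first element.
        System.out.println(" a b".split("\\s+").length);
        // Raw split of an empty line: a single empty element.
        System.out.println("".split("\\s+").length);
        // Filtered version keeps only the real words.
        System.out.println(tokens(" a b"));
    }
}
```

Trailing whitespace is not a problem, because `split` already discards trailing empty strings.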

Reducer Class

package com.aa.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WordCountReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

//V3 to emit

IntWritable outputValue = new IntWritable();

//K3 is the same as K2, so the incoming key is used directly and no new variable is needed

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int sum=0;

for (IntWritable value : values) {

//Take each 1 emitted for the current word and add it to the sum

sum+=value.get();

}

//Assign the sum to V3

this.outputValue.set(sum);

//Pass the result on to the next step

context.write(key,outputValue);

}

}
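Because addition is associative and commutative, the per-word sums can also be partially computed on the map side before the shuffle, which is what the commented-out `setCombinerClass` line in the Driver template is for. The plain-Java sketch below illustrates the idea on the output of a single map task: many `(word, 1)` pairs collapse into one `(word, partialCount)` pair per distinct word. The names `CombinerSketch` and `combine` are hypothetical, and the sketch is an illustration only; whether and how often Hadoop actually runs a combiner is up to the framework.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class CombinerSketch {

    // Collapses the words emitted by a single map task into partial counts.
    static Map<String, Integer> combine(List<String> mapOutputKeys) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String word : mapOutputKeys) {
            // Each emitted word carries an implicit value of 1.
            partial.merge(word, 1, Integer::sum);
        }
        return partial;
    }

    public static void main(String[] args) {
        List<String> emitted = Arrays.asList("hive", "hadoop", "hive", "hive");
        Map<String, Integer> partial = combine(emitted);
        System.out.println(emitted.size() + " records before, "
                + partial.size() + " after: " + partial);
    }
}
```

For this WordCount job, the Reducer's input and output types are both (Text, IntWritable), so reusing it via `job.setCombinerClass(WordCountReduce.class)` should be a reasonable starting point; treat that as a suggestion to verify rather than something the original code does.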

Running on the Cluster

Starting the Cluster

Follow the cluster's startup order.

Start ZooKeeper:

cd /export/server/zookeeper-3.4.6/

bin/zkServer.sh status

bin/zkServer.sh start

bin/zkServer.sh status

Start HDFS:

start-dfs.sh

Start YARN:

start-yarn.sh

Use jps to check the running processes.

Uploading Files

Upload the wc.txt file:

cd /export/data/

Upload it with rz.

Create the HDFS directory:

hdfs dfs -mkdir -p /wordcount/input

Copy the file to HDFS:

hdfs dfs -put /export/data/wc.txt /wordcount/input

Check that it succeeded:

hdfs dfs -ls /wordcount/input

Upload the jar built by Maven:

cd /export/data/

Upload it with rz.

Running the Job

Run the jar with YARN:

yarn jar /export/data/day210426-1.0-SNAPSHOT.jar com.aa.wordcount.WordCountDriver /wordcount/input/wc.txt /wordcount/output1

Output of the run:

[root@node1 data]# yarn jar /export/data/day210426-1.0-SNAPSHOT.jar com.aa.wordcount.WordCountDriver /wordcount/input/wc.txt /wordcount/output1

21/04/26 20:23:27 INFO client.RMProxy: Connecting to ResourceManager at node3/192.168.88.11:8032

21/04/26 20:23:27 INFO input.FileInputFormat: Total input paths to process : 1

21/04/26 20:23:28 INFO mapreduce.JobSubmitter: number of splits:3

21/04/26 20:23:28 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1619438325526_0002

21/04/26 20:23:28 INFO impl.YarnClientImpl: Submitted application application_1619438325526_0002

21/04/26 20:23:28 INFO mapreduce.Job: The url to track the job: http://node3:8088/proxy/application_1619438325526_0002/

21/04/26 20:23:28 INFO mapreduce.Job: Running job: job_1619438325526_0002

21/04/26 20:23:39 INFO mapreduce.Job: Job job_1619438325526_0002 running in uber mode : false

21/04/26 20:23:39 INFO mapreduce.Job: map 0% reduce 0%

21/04/26 20:24:00 INFO mapreduce.Job: map 9% reduce 0%

21/04/26 20:24:03 INFO mapreduce.Job: map 10% reduce 0%

21/04/26 20:24:06 INFO mapreduce.Job: map 12% reduce 0%

21/04/26 20:24:07 INFO mapreduce.Job: map 13% reduce 0%

21/04/26 20:24:09 INFO mapreduce.Job: map 14% reduce 0%

21/04/26 20:24:10 INFO mapreduce.Job: map 18% reduce 0%

21/04/26 20:24:13 INFO mapreduce.Job: map 20% reduce 0%

21/04/26 20:24:15 INFO mapreduce.Job: map 22% reduce 0%

21/04/26 20:24:16 INFO mapreduce.Job: map 24% reduce 0%

21/04/26 20:24:18 INFO mapreduce.Job: map 25% reduce 0%

21/04/26 20:24:19 INFO mapreduce.Job: map 28% reduce 0%

21/04/26 20:24:21 INFO mapreduce.Job: map 31% reduce 0%

21/04/26 20:24:22 INFO mapreduce.Job: map 32% reduce 0%

21/04/26 20:24:24 INFO mapreduce.Job: map 35% reduce 0%

21/04/26 20:24:25 INFO mapreduce.Job: map 36% reduce 0%

21/04/26 20:24:27 INFO mapreduce.Job: map 39% reduce 0%

21/04/26 20:24:28 INFO mapreduce.Job: map 40% reduce 0%

21/04/26 20:24:31 INFO mapreduce.Job: map 43% reduce 0%

21/04/26 20:24:32 INFO mapreduce.Job: map 45% reduce 0%

21/04/26 20:24:34 INFO mapreduce.Job: map 47% reduce 0%

21/04/26 20:24:35 INFO mapreduce.Job: map 48% reduce 0%

21/04/26 20:24:37 INFO mapreduce.Job: map 52% reduce 0%

21/04/26 20:24:39 INFO mapreduce.Job: map 53% reduce 0%

21/04/26 20:24:40 INFO mapreduce.Job: map 55% reduce 0%

21/04/26 20:24:42 INFO mapreduce.Job: map 56% reduce 0%

21/04/26 20:24:43 INFO mapreduce.Job: map 58% reduce 0%

21/04/26 20:24:45 INFO mapreduce.Job: map 60% reduce 0%

21/04/26 20:24:46 INFO mapreduce.Job: map 64% reduce 0%

21/04/26 20:24:49 INFO mapreduce.Job: map 65% reduce 0%

21/04/26 20:24:52 INFO mapreduce.Job: map 68% reduce 0%

21/04/26 20:24:54 INFO mapreduce.Job: map 69% reduce 0%

21/04/26 20:24:55 INFO mapreduce.Job: map 71% reduce 0%

21/04/26 20:24:57 INFO mapreduce.Job: map 73% reduce 0%

21/04/26 20:24:58 INFO mapreduce.Job: map 75% reduce 0%

21/04/26 20:25:01 INFO mapreduce.Job: map 77% reduce 0%

21/04/26 20:25:02 INFO mapreduce.Job: map 80% reduce 0%

21/04/26 20:25:04 INFO mapreduce.Job: map 81% reduce 0%

21/04/26 20:25:05 INFO mapreduce.Job: map 85% reduce 0%

21/04/26 20:25:07 INFO mapreduce.Job: map 88% reduce 0%

21/04/26 20:25:08 INFO mapreduce.Job: map 89% reduce 0%

21/04/26 20:25:10 INFO mapreduce.Job: map 91% reduce 0%

21/04/26 20:25:11 INFO mapreduce.Job: map 93% reduce 0%

21/04/26 20:25:13 INFO mapreduce.Job: map 95% reduce 0%

21/04/26 20:25:14 INFO mapreduce.Job: map 98% reduce 0%

21/04/26 20:25:17 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 20:25:23 INFO mapreduce.Job: map 100% reduce 11%

21/04/26 20:25:26 INFO mapreduce.Job: map 100% reduce 22%

21/04/26 20:25:29 INFO mapreduce.Job: map 100% reduce 67%

21/04/26 20:25:32 INFO mapreduce.Job: map 100% reduce 69%

21/04/26 20:25:35 INFO mapreduce.Job: map 100% reduce 71%

21/04/26 20:25:38 INFO mapreduce.Job: map 100% reduce 74%

21/04/26 20:25:41 INFO mapreduce.Job: map 100% reduce 77%

21/04/26 20:25:44 INFO mapreduce.Job: map 100% reduce 80%

21/04/26 20:25:47 INFO mapreduce.Job: map 100% reduce 83%

21/04/26 20:25:50 INFO mapreduce.Job: map 100% reduce 85%

21/04/26 20:25:53 INFO mapreduce.Job: map 100% reduce 88%

21/04/26 20:25:56 INFO mapreduce.Job: map 100% reduce 90%

21/04/26 20:25:59 INFO mapreduce.Job: map 100% reduce 92%

21/04/26 20:26:02 INFO mapreduce.Job: map 100% reduce 95%

21/04/26 20:26:05 INFO mapreduce.Job: map 100% reduce 98%

21/04/26 20:26:08 INFO mapreduce.Job: map 100% reduce 100%

21/04/26 20:26:08 INFO mapreduce.Job: Job job_1619438325526_0002 completed successfully

21/04/26 20:26:09 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1679368168

FILE: Number of bytes written=2519541603

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=380018507

HDFS: Number of bytes written=82

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=3

Launched reduce tasks=1

Data-local map tasks=3

Total time spent by all maps in occupied slots (ms)=277120

Total time spent by all reduces in occupied slots (ms)=55989

Total time spent by all map tasks (ms)=277120

Total time spent by all reduce tasks (ms)=55989

Total vcore-milliseconds taken by all map tasks=277120

Total vcore-milliseconds taken by all reduce tasks=55989

Total megabyte-milliseconds taken by all map tasks=283770880

Total megabyte-milliseconds taken by all reduce tasks=57332736

Map-Reduce Framework

Map input records=33308000

Map output records=79388000

Map output bytes=680908000

Map output materialized bytes=839684018

Input split bytes=315

Combine input records=0

Combine output records=0

Reduce input groups=6

Reduce shuffle bytes=839684018

Reduce input records=79388000

Reduce output records=6

Spilled Records=238164000

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=8971

CPU time spent (ms)=212670

Physical memory (bytes) snapshot=1000062976

Virtual memory (bytes) snapshot=8431161344

Total committed heap usage (bytes)=731906048

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=380018192

File Output Format Counters

Bytes Written=82

status = 0

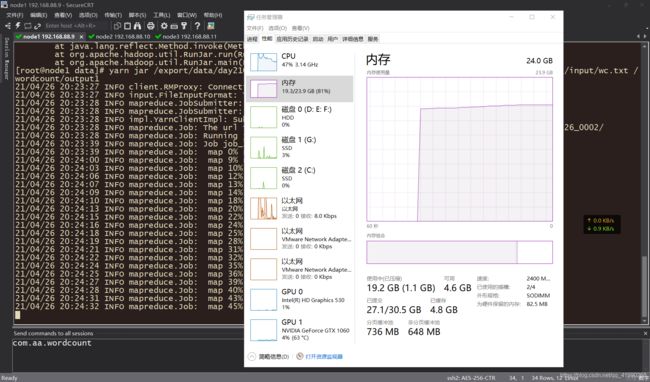

My aging machine was clearly struggling:

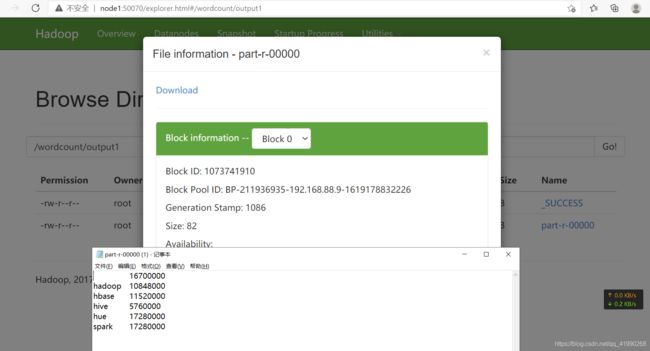

在node1:50070可以看到:

可以看出,自己写的wordcount程序计算出了正确的结果。和官方自带的示例程序相比,可以删除原有目录。

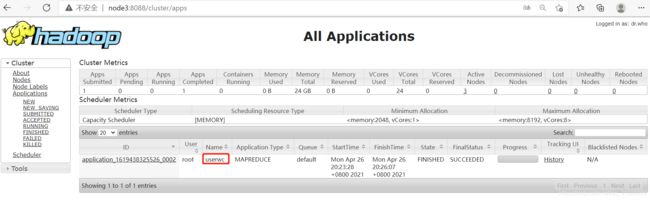

在node3:8088还可以看到提交过的MapReduce任务。
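The job log above reports `number of splits:3` for an input of 380018192 bytes (`Bytes Read`). That is consistent with how `FileInputFormat` is generally understood to cut a file: one split per block-sized chunk, with the last chunk allowed to be up to 10% larger than the split size. The sketch below reproduces that arithmetic in plain Java. The names `SplitCountSketch` and `countSplits` are hypothetical, and the 128 MB block size is an assumption about this cluster (the Hadoop 2.x default), not something the log states.

```java
class SplitCountSketch {

    // Tolerance for the last chunk: it may exceed the split size by up to 10%.
    static final double SPLIT_SLOP = 1.1;

    // Counts how many splits a file of the given length would be cut into.
    static int countSplits(long fileLength, long splitSize) {
        int splits = 0;
        long remaining = fileLength;
        // Cut full-size splits while more than 1.1 split sizes remain.
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        // Whatever is left (if anything) becomes the final split.
        if (remaining != 0) {
            splits++;
        }
        return splits;
    }

    public static void main(String[] args) {
        long fileLength = 380018192L;          // Bytes Read from the job counters
        long blockSize = 128L * 1024 * 1024;   // assumed HDFS block size
        System.out.println(countSplits(fileLength, blockSize));
    }
}
```

The same arithmetic with 33554432-byte splits, the size shown in the `Processing split` line of the local run later in this post, gives 12, which matches that run's `number of splits:12` if the local wc.txt is the same file.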

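When you are done, shut the cluster down in the proper order.

Shutting Down the Cluster

The steps below follow the cluster's shutdown order.

Stop HDFS on node1:

stop-dfs.sh

Stop YARN:

stop-yarn.sh

Stop ZooKeeper on all three machines:

cd /export/server/zookeeper-3.4.6/

bin/zkServer.sh stop

Power off all three machines: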

poweroff

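Running Locally

Pushing every run to the cluster is rather tedious. Fortunately Java is cross-platform, so the code can also be run locally to check that it is correct.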

package com.aa.wordcount_local;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import java.io.IOException;

public class WordCountLocal extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//Build the Job

Job job = Job.getInstance(this.getConf(), "userwc_local");

job.setJarByClass(WordCountLocal.class);

//Configure the Job

job.setInputFormatClass(TextInputFormat.class);

//Use the program's first argument as the input path

TextInputFormat.setInputPaths(job, new Path(args[0]));

job.setMapperClass(WCMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setReducerClass(WCReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

//Use the program's second argument as the output path

Path outputPath = new Path(args[1]);

FileSystem fs = FileSystem.get(this.getConf());

if (fs.exists(outputPath)) {

fs.delete(outputPath, true);//true means recursive delete

}

TextOutputFormat.setOutputPath(job, outputPath);

return job.waitForCompletion(true) ? 0 : -1;

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

int status = ToolRunner.run(conf, new WordCountLocal(), args);

System.out.println("status = " + status);

System.exit(status);

}

public static class WCMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

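//K2 to emit

Text outputKey = new Text();

//V2 to emit

IntWritable outputValue = new IntWritable(1);//every occurrence carries equal weight, so a constant 1 is enough for counting

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//Split the line into individual words

String[] words = value.toString().split("\\s+");

//Iterate over the words, using each one as K2

for (String word : words) {

//Skip the empty token that split() returns for a blank line or for leading whitespace

if (word.isEmpty()) {

continue;

}

//Use the current word as K2

this.outputKey.set(word);

//Pass K2 and V2 on to the next step

context.write(outputKey,outputValue);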

}

}

}

public static class WCReducer extends Reducer<Text,IntWritable,Text,IntWritable>{

//K3 is the same as K2, so no separate variable is needed

//V3 to emit

IntWritable outputValue = new IntWritable();

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int sum=0;

for (IntWritable value : values) {

sum+=value.get();

}

//Assign the sum to V3

this.outputValue.set(sum);

//Pass the result on to the next step

context.write(key,this.outputValue);

}

}

}

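When they are placed in the same class as the driver, the Mapper and Reducer must be declared static: Hadoop instantiates them by reflection, and a non-static inner class cannot be created without an instance of its enclosing class.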
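The static requirement can be checked in isolation with plain Java reflection. Hadoop is understood to create task classes reflectively through a no-argument constructor, and a non-static inner class has no such constructor because every one of its constructors implicitly takes the enclosing instance as a parameter. The sketch below uses hypothetical names throughout (`NestedSketch`, `StaticNested`, `Inner`, `hasNoArgConstructor`); it only demonstrates the Java rule and does not call Hadoop.

```java
class NestedSketch {

    // Static nested class: can be created without an outer instance.
    static class StaticNested { }

    // Inner class: its constructor implicitly takes a NestedSketch instance.
    class Inner { }

    // Returns true if the class declares a constructor that takes no parameters.
    static boolean hasNoArgConstructor(Class<?> type) {
        try {
            type.getDeclaredConstructor();
            return true;
        } catch (NoSuchMethodException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("StaticNested: " + hasNoArgConstructor(StaticNested.class));
        System.out.println("Inner: " + hasNoArgConstructor(Inner.class));
    }
}
```

Moving the Mapper and Reducer into their own top-level files, as in the cluster version of WordCount, avoids the issue entirely.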

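Local Setup

Prerequisite: the Hadoop environment variables are configured on the local machine.

Once that is in place, the job can run locally. In IDEA, set the following:

Run → Edit Configurations...

Main class: com.aa.wordcount_local.WordCountLocal

Program arguments: pass the two paths, separated by a space: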

E:\bigdata\2021.4.26\wc.txt E:\bigdata\2021.4.26\result

After clicking Apply, the program can be run directly.

Output of the run:

"C:\Program Files\Java\jdk1.8.0_241\bin\java.exe" "-javaagent:C:\Program Files\JetBrains\IntelliJ IDEA 2019.3.5\lib\idea_rt.jar=59719:C:\Program Files\JetBrains\IntelliJ IDEA 2019.3.5\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_241\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\resources.jar;C:\Program Files\Java\jdk1.8.0_241\jre\lib\rt.jar;C:\Users\killer\IdeaProjects\bigdata_demo\day210426\target\classes;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-common\2.7.5\hadoop-common-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-annotations\2.7.5\hadoop-annotations-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\guava\guava\11.0.2\guava-11.0.2.jar;C:\Program 
Files\apache-maven-3.3.9\Maven_Repository\commons-cli\commons-cli\1.2\commons-cli-1.2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\commons\commons-math3\3.1.1\commons-math3-3.1.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\xmlenc\xmlenc\0.52\xmlenc-0.52.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-httpclient\commons-httpclient\3.1\commons-httpclient-3.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-codec\commons-codec\1.4\commons-codec-1.4.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-io\commons-io\2.4\commons-io-2.4.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-net\commons-net\3.1\commons-net-3.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-collections\commons-collections\3.2.2\commons-collections-3.2.2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\servlet\servlet-api\2.5\servlet-api-2.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\mortbay\jetty\jetty\6.1.26\jetty-6.1.26.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\mortbay\jetty\jetty-util\6.1.26\jetty-util-6.1.26.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\mortbay\jetty\jetty-sslengine\6.1.26\jetty-sslengine-6.1.26.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\servlet\jsp\jsp-api\2.1\jsp-api-2.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\jersey\jersey-core\1.9\jersey-core-1.9.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\jersey\jersey-json\1.9\jersey-json-1.9.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\codehaus\jettison\jettison\1.1\jettison-1.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\xml\bind\jaxb-impl\2.2.3-1\jaxb-impl-2.2.3-1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\codehaus\jackson\jackson-jaxrs\1.8.3\jackson-jaxrs-1.8.3.jar;C:\Program 
Files\apache-maven-3.3.9\Maven_Repository\org\codehaus\jackson\jackson-xc\1.8.3\jackson-xc-1.8.3.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\jersey\jersey-server\1.9\jersey-server-1.9.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\asm\asm\3.1\asm-3.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-logging\commons-logging\1.1.3\commons-logging-1.1.3.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\log4j\log4j\1.2.17\log4j-1.2.17.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\net\java\dev\jets3t\jets3t\0.9.0\jets3t-0.9.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\httpcomponents\httpclient\4.1.2\httpclient-4.1.2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\httpcomponents\httpcore\4.1.2\httpcore-4.1.2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\jamesmurty\utils\java-xmlbuilder\0.4\java-xmlbuilder-0.4.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-lang\commons-lang\2.6\commons-lang-2.6.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-configuration\commons-configuration\1.6\commons-configuration-1.6.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-digester\commons-digester\1.8\commons-digester-1.8.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-beanutils\commons-beanutils\1.7.0\commons-beanutils-1.7.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-beanutils\commons-beanutils-core\1.8.0\commons-beanutils-core-1.8.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\slf4j\slf4j-api\1.7.10\slf4j-api-1.7.10.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\slf4j\slf4j-log4j12\1.7.10\slf4j-log4j12-1.7.10.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\codehaus\jackson\jackson-core-asl\1.9.13\jackson-core-asl-1.9.13.jar;C:\Program 
Files\apache-maven-3.3.9\Maven_Repository\org\codehaus\jackson\jackson-mapper-asl\1.9.13\jackson-mapper-asl-1.9.13.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\avro\avro\1.7.4\avro-1.7.4.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\thoughtworks\paranamer\paranamer\2.3\paranamer-2.3.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\xerial\snappy\snappy-java\1.0.4.1\snappy-java-1.0.4.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\protobuf\protobuf-java\2.5.0\protobuf-java-2.5.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\code\gson\gson\2.2.4\gson-2.2.4.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-auth\2.7.5\hadoop-auth-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\directory\server\apacheds-kerberos-codec\2.0.0-M15\apacheds-kerberos-codec-2.0.0-M15.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\directory\server\apacheds-i18n\2.0.0-M15\apacheds-i18n-2.0.0-M15.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\directory\api\api-asn1-api\1.0.0-M20\api-asn1-api-1.0.0-M20.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\directory\api\api-util\1.0.0-M20\api-util-1.0.0-M20.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\curator\curator-framework\2.7.1\curator-framework-2.7.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\jcraft\jsch\0.1.54\jsch-0.1.54.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\curator\curator-client\2.7.1\curator-client-2.7.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\curator\curator-recipes\2.7.1\curator-recipes-2.7.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\code\findbugs\jsr305\3.0.0\jsr305-3.0.0.jar;C:\Program 
Files\apache-maven-3.3.9\Maven_Repository\org\apache\htrace\htrace-core\3.1.0-incubating\htrace-core-3.1.0-incubating.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\zookeeper\zookeeper\3.4.6\zookeeper-3.4.6.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\commons\commons-compress\1.4.1\commons-compress-1.4.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\tukaani\xz\1.0\xz-1.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-client\2.7.5\hadoop-client-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-mapreduce-client-app\2.7.5\hadoop-mapreduce-client-app-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-mapreduce-client-common\2.7.5\hadoop-mapreduce-client-common-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-yarn-client\2.7.5\hadoop-yarn-client-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-yarn-server-common\2.7.5\hadoop-yarn-server-common-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-mapreduce-client-shuffle\2.7.5\hadoop-mapreduce-client-shuffle-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-yarn-api\2.7.5\hadoop-yarn-api-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-mapreduce-client-jobclient\2.7.5\hadoop-mapreduce-client-jobclient-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-hdfs\2.7.5\hadoop-hdfs-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\commons-daemon\commons-daemon\1.0.13\commons-daemon-1.0.13.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\io\netty\netty\3.6.2.Final\netty-3.6.2.Final.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\io\netty\netty-all\4.0.23.Final\netty-all-4.0.23.Final.jar;C:\Program 
Files\apache-maven-3.3.9\Maven_Repository\xerces\xercesImpl\2.9.1\xercesImpl-2.9.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\xml-apis\xml-apis\1.3.04\xml-apis-1.3.04.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\fusesource\leveldbjni\leveldbjni-all\1.8\leveldbjni-all-1.8.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-mapreduce-client-core\2.7.5\hadoop-mapreduce-client-core-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\apache\hadoop\hadoop-yarn-common\2.7.5\hadoop-yarn-common-2.7.5.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\xml\bind\jaxb-api\2.2.2\jaxb-api-2.2.2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\xml\stream\stax-api\1.0-2\stax-api-1.0-2.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\activation\activation\1.1\activation-1.1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\jersey\jersey-client\1.9\jersey-client-1.9.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\inject\guice\3.0\guice-3.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\javax\inject\javax.inject\1\javax.inject-1.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\aopalliance\aopalliance\1.0\aopalliance-1.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\sun\jersey\contribs\jersey-guice\1.9\jersey-guice-1.9.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\com\google\inject\extensions\guice-servlet\3.0\guice-servlet-3.0.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\junit\junit\4.13\junit-4.13.jar;C:\Program Files\apache-maven-3.3.9\Maven_Repository\org\hamcrest\hamcrest-core\1.3\hamcrest-core-1.3.jar" com.aa.wordcount_local.WordCountLocal E:\bigdata\2021.4.26\wc.txt E:\bigdata\2021.4.26\result

21/04/26 21:26:22 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

21/04/26 21:26:22 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

21/04/26 21:26:24 WARN mapreduce.JobResourceUploader: No job jar file set. User classes may not be found. See Job or Job#setJar(String).

21/04/26 21:26:24 INFO input.FileInputFormat: Total input paths to process : 1

21/04/26 21:26:24 INFO mapreduce.JobSubmitter: number of splits:12

21/04/26 21:26:24 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local588307001_0001

21/04/26 21:26:24 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

21/04/26 21:26:24 INFO mapreduce.Job: Running job: job_local588307001_0001

21/04/26 21:26:24 INFO mapred.LocalJobRunner: OutputCommitter set in config null

21/04/26 21:26:24 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:26:24 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

21/04/26 21:26:24 INFO mapred.LocalJobRunner: Waiting for map tasks

21/04/26 21:26:24 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000000_0

21/04/26 21:26:24 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:26:24 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:26:24 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@46db6474

21/04/26 21:26:24 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:0+33554432

21/04/26 21:26:24 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:26:24 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:26:24 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:26:24 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:26:24 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:26:24 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:26:25 INFO mapreduce.Job: Job job_local588307001_0001 running in uber mode : false

21/04/26 21:26:25 INFO mapreduce.Job: map 0% reduce 0%

21/04/26 21:26:27 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:27 INFO mapred.MapTask: bufstart = 0; bufend = 29274899; bufvoid = 104857600

21/04/26 21:26:27 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:26:27 INFO mapred.MapTask: (EQUATOR) 39760649 kvi 9940156(39760624)

21/04/26 21:26:28 INFO mapred.MapTask: Finished spill 0

21/04/26 21:26:28 INFO mapred.MapTask: (RESET) equator 39760649 kv 9940156(39760624) kvi 7318732(29274928)

21/04/26 21:26:30 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:30 INFO mapred.MapTask: bufstart = 39760649; bufend = 69035531; bufvoid = 104857600

21/04/26 21:26:30 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:26:30 INFO mapred.MapTask: (EQUATOR) 79521285 kvi 19880316(79521264)

21/04/26 21:26:30 INFO mapred.LocalJobRunner: map > map

21/04/26 21:26:30 INFO mapred.LocalJobRunner: map > map

21/04/26 21:26:30 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:26:31 INFO mapreduce.Job: map 6% reduce 0%

21/04/26 21:26:32 INFO mapred.MapTask: Finished spill 1

21/04/26 21:26:32 INFO mapred.MapTask: (RESET) equator 79521285 kv 19880316(79521264) kvi 19146444(76585776)

21/04/26 21:26:32 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:32 INFO mapred.MapTask: bufstart = 79521285; bufend = 81094879; bufvoid = 104857600

21/04/26 21:26:32 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146448(76585792); length = 733869/6553600

21/04/26 21:26:32 INFO mapred.MapTask: Finished spill 2

21/04/26 21:26:32 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:26:32 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143116 bytes

21/04/26 21:26:33 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:26:36 INFO mapred.Task: Task:attempt_local588307001_0001_m_000000_0 is done. And is in the process of committing

21/04/26 21:26:36 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:26:36 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000000_0' done.

21/04/26 21:26:36 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000000_0: Counters: 17

File System Counters

FILE: Number of bytes read=107703100

FILE: Number of bytes written=148580113

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941057

Map output records=7009866

Map output bytes=60123375

Map output materialized bytes=74143113

Input split bytes=98

Combine input records=0

Spilled Records=14019732

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=55

Total committed heap usage (bytes)=1497366528

File Input Format Counters

Bytes Read=33558528

21/04/26 21:26:36 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000000_0
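Note on the buffer figures logged at the start of each map task: they can be reproduced from `mapreduce.task.io.sort.mb: 100` with a little arithmetic. The sketch below is not Hadoop code. The class name `SortBufferMath`, the 16-byte per-record metadata size, and the 80% spill threshold are assumptions (the latter two are believed to match Hadoop's defaults but do not appear in the log). Under those assumptions the results agree with the logged `bufvoid`, `soft limit`, `kvi`, and `length` values.

```java
// Sketch only: the constants are assumptions about Hadoop's map-side sort buffer,
// chosen because they reproduce the numbers printed in the log above.
final class SortBufferMath {
    static final int METASIZE = 16;      // assumed: bytes of metadata kept per record (4 ints)
    static final int SPILL_PERCENT = 80; // assumed default of mapreduce.map.sort.spill.percent

    // Buffer size in bytes, rounded down to a multiple of the metadata size.
    static int bufvoid(int sortMb) {
        int bytes = sortMb << 20;
        return bytes - (bytes % METASIZE);
    }

    // Fill level at which a background spill is triggered.
    static int softLimit(int bufvoid) {
        return (int) ((long) bufvoid * SPILL_PERCENT / 100);
    }

    // Initial metadata index, counted in 4-byte ints, one metadata slot before the buffer end.
    static int initialKvIndex(int bufvoid) {
        return (bufvoid - METASIZE) / 4;
    }

    // Upper bound on records if the whole buffer held nothing but metadata.
    static int maxRecords(int bufvoid) {
        return bufvoid / METASIZE;
    }

    public static void main(String[] args) {
        int b = bufvoid(100);
        System.out.println("bufvoid    = " + b);
        System.out.println("soft limit = " + softLimit(b));
        System.out.println("kvstart    = " + initialKvIndex(b));
        System.out.println("length     = " + maxRecords(b));
    }
}
```

Raising `mapreduce.task.io.sort.mb` should scale all four values proportionally, which would reduce the number of spills per task for the same input.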

21/04/26 21:26:36 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000001_0

21/04/26 21:26:36 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:26:36 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:26:36 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@30b25df7

21/04/26 21:26:36 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:33554432+33554432

21/04/26 21:26:36 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:26:36 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:26:36 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:26:36 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:26:36 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:26:36 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:26:36 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:26:38 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:38 INFO mapred.MapTask: bufstart = 0; bufend = 29274882; bufvoid = 104857600

21/04/26 21:26:38 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:26:38 INFO mapred.MapTask: (EQUATOR) 39760641 kvi 9940156(39760624)

21/04/26 21:26:39 INFO mapred.MapTask: Finished spill 0

21/04/26 21:26:39 INFO mapred.MapTask: (RESET) equator 39760641 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:26:41 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:41 INFO mapred.MapTask: bufstart = 39760641; bufend = 69035537; bufvoid = 104857600

21/04/26 21:26:41 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:26:41 INFO mapred.MapTask: (EQUATOR) 79521288 kvi 19880316(79521264)

21/04/26 21:26:41 INFO mapred.LocalJobRunner:

21/04/26 21:26:41 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:26:41 INFO mapreduce.Job: map 8% reduce 0%

21/04/26 21:26:42 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:26:42 INFO mapreduce.Job: map 14% reduce 0%

21/04/26 21:26:42 INFO mapred.MapTask: Finished spill 1

21/04/26 21:26:42 INFO mapred.MapTask: (RESET) equator 79521288 kv 19880316(79521264) kvi 19146444(76585776)

21/04/26 21:26:42 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:42 INFO mapred.MapTask: bufstart = 79521288; bufend = 81094874; bufvoid = 104857600

21/04/26 21:26:42 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146448(76585792); length = 733869/6553600

21/04/26 21:26:42 INFO mapred.MapTask: Finished spill 2

21/04/26 21:26:42 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:26:42 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143105 bytes

21/04/26 21:26:45 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:26:45 INFO mapreduce.Job: map 16% reduce 0%

21/04/26 21:26:46 INFO mapred.Task: Task:attempt_local588307001_0001_m_000001_0 is done. And is in the process of committing

21/04/26 21:26:46 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:26:46 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000001_0' done.

21/04/26 21:26:46 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000001_0: Counters: 17

File System Counters

FILE: Number of bytes read=215405945

FILE: Number of bytes written=296866361

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941058

Map output records=7009866

Map output bytes=60123364

Map output materialized bytes=74143102

Input split bytes=98

Combine input records=0

Spilled Records=14019732

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=115

Total committed heap usage (bytes)=1507852288

File Input Format Counters

Bytes Read=33558528

21/04/26 21:26:46 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000001_0
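Note on the counters: the `length = N/6553600` value in each spill message can be tied back to `Map output records` and `Spilled Records`. The sketch below assumes that `N` counts 4-byte metadata ints with 4 ints per record (an assumption about Hadoop internals, not something the log states), so a spill holds roughly `(N + 3) / 4` records. The class and method names are hypothetical. With the three lengths logged for the task above, the sum matches `Map output records=7009866`, and doubling it matches `Spilled Records=14019732`, which appears consistent with every record being written once at spill time and once more when the three spill files are merged.

```java
// Sketch only: NMETA = 4 is an assumption about how the logged "length" is measured.
final class SpillAccounting {
    static final int NMETA = 4; // assumed: metadata ints stored per record

    // Records covered by one spill, derived from the logged "length" value.
    static long recordsInSpill(long loggedLength) {
        return (loggedLength + NMETA - 1) / NMETA;
    }

    // Total records across all spills of one map task.
    static long totalRecords(long... loggedLengths) {
        long total = 0;
        for (long length : loggedLengths) {
            total += recordsInSpill(length);
        }
        return total;
    }

    public static void main(String[] args) {
        // Lengths copied from the three "Spilling map output" messages of one task.
        long records = totalRecords(13652793L, 13652793L, 733869L);
        System.out.println("map output records = " + records);
        System.out.println("spilled records    = " + 2 * records);
    }
}
```

If a task produced only a single spill, no merge pass would be needed, so the factor of two would not be expected to hold.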

21/04/26 21:26:46 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000002_0

21/04/26 21:26:46 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:26:46 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:26:46 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@5a8242f3

21/04/26 21:26:46 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:67108864+33554432

21/04/26 21:26:46 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:26:46 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:26:46 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:26:46 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:26:46 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:26:46 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:26:46 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:26:48 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:48 INFO mapred.MapTask: bufstart = 0; bufend = 29274882; bufvoid = 104857600

21/04/26 21:26:48 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:26:48 INFO mapred.MapTask: (EQUATOR) 39760641 kvi 9940156(39760624)

21/04/26 21:26:49 INFO mapred.MapTask: Finished spill 0

21/04/26 21:26:49 INFO mapred.MapTask: (RESET) equator 39760641 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:26:51 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:51 INFO mapred.MapTask: bufstart = 39760641; bufend = 69035523; bufvoid = 104857600

21/04/26 21:26:51 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:26:51 INFO mapred.MapTask: (EQUATOR) 79521281 kvi 19880316(79521264)

21/04/26 21:26:51 INFO mapred.LocalJobRunner:

21/04/26 21:26:51 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:26:51 INFO mapreduce.Job: map 17% reduce 0%

21/04/26 21:26:52 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:26:52 INFO mapreduce.Job: map 22% reduce 0%

21/04/26 21:26:52 INFO mapred.MapTask: Finished spill 1

21/04/26 21:26:52 INFO mapred.MapTask: (RESET) equator 79521281 kv 19880316(79521264) kvi 19146448(76585792)

21/04/26 21:26:52 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:52 INFO mapred.MapTask: bufstart = 79521281; bufend = 81094884; bufvoid = 104857600

21/04/26 21:26:52 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146452(76585808); length = 733865/6553600

21/04/26 21:26:53 INFO mapred.MapTask: Finished spill 2

21/04/26 21:26:53 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:26:53 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143106 bytes

21/04/26 21:26:55 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:26:55 INFO mapreduce.Job: map 24% reduce 0%

21/04/26 21:26:56 INFO mapred.Task: Task:attempt_local588307001_0001_m_000002_0 is done. And is in the process of committing

21/04/26 21:26:56 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:26:56 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000002_0' done.

21/04/26 21:26:56 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000002_0: Counters: 17

File System Counters

FILE: Number of bytes read=323108791

FILE: Number of bytes written=445152611

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941054

Map output records=7009865

Map output bytes=60123367

Map output materialized bytes=74143103

Input split bytes=98

Combine input records=0

Spilled Records=14019730

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=77

Total committed heap usage (bytes)=1313865728

File Input Format Counters

Bytes Read=33558528

21/04/26 21:26:56 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000002_0

21/04/26 21:26:56 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000003_0

21/04/26 21:26:56 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:26:56 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:26:56 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@be049c8

21/04/26 21:26:56 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:100663296+33554432

21/04/26 21:26:56 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:26:56 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:26:56 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:26:56 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:26:56 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:26:56 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:26:57 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:26:58 INFO mapred.MapTask: Spilling map output

21/04/26 21:26:58 INFO mapred.MapTask: bufstart = 0; bufend = 29274885; bufvoid = 104857600

21/04/26 21:26:58 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561600(50246400); length = 13652797/6553600

21/04/26 21:26:58 INFO mapred.MapTask: (EQUATOR) 39760634 kvi 9940152(39760608)

21/04/26 21:27:00 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:00 INFO mapred.MapTask: (RESET) equator 39760634 kv 9940152(39760608) kvi 7318728(29274912)

21/04/26 21:27:02 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:02 INFO mapred.MapTask: bufstart = 39760634; bufend = 69035520; bufvoid = 104857600

21/04/26 21:27:02 INFO mapred.MapTask: kvstart = 9940152(39760608); kvend = 22501760(90007040); length = 13652793/6553600

21/04/26 21:27:02 INFO mapred.MapTask: (EQUATOR) 79521272 kvi 19880312(79521248)

21/04/26 21:27:02 INFO mapred.LocalJobRunner:

21/04/26 21:27:02 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:02 INFO mapreduce.Job: map 25% reduce 0%

21/04/26 21:27:02 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:03 INFO mapreduce.Job: map 31% reduce 0%

21/04/26 21:27:03 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:03 INFO mapred.MapTask: (RESET) equator 79521272 kv 19880312(79521248) kvi 19146444(76585776)

21/04/26 21:27:03 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:03 INFO mapred.MapTask: bufstart = 79521272; bufend = 81094865; bufvoid = 104857600

21/04/26 21:27:03 INFO mapred.MapTask: kvstart = 19880312(79521248); kvend = 19146448(76585792); length = 733865/6553600

21/04/26 21:27:03 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:03 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:03 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143105 bytes

21/04/26 21:27:05 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:06 INFO mapreduce.Job: map 32% reduce 0%

21/04/26 21:27:08 INFO mapred.Task: Task:attempt_local588307001_0001_m_000003_0 is done. And is in the process of committing

21/04/26 21:27:08 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:08 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000003_0' done.

21/04/26 21:27:08 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000003_0: Counters: 17

File System Counters

FILE: Number of bytes read=430811636

FILE: Number of bytes written=593438859

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941058

Map output records=7009866

Map output bytes=60123364

Map output materialized bytes=74143102

Input split bytes=98

Combine input records=0

Spilled Records=14019732

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=67

Total committed heap usage (bytes)=1136132096

File Input Format Counters

Bytes Read=33558528

21/04/26 21:27:08 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000003_0

21/04/26 21:27:08 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000004_0

21/04/26 21:27:08 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:08 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:08 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@6918296b

21/04/26 21:27:08 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:134217728+33554432

21/04/26 21:27:08 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:08 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:08 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:08 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:08 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:08 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:08 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:10 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:10 INFO mapred.MapTask: bufstart = 0; bufend = 29274888; bufvoid = 104857600

21/04/26 21:27:10 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561600(50246400); length = 13652797/6553600

21/04/26 21:27:10 INFO mapred.MapTask: (EQUATOR) 39760636 kvi 9940152(39760608)

21/04/26 21:27:11 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:11 INFO mapred.MapTask: (RESET) equator 39760636 kv 9940152(39760608) kvi 7318728(29274912)

21/04/26 21:27:13 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:13 INFO mapred.MapTask: bufstart = 39760636; bufend = 69035514; bufvoid = 104857600

21/04/26 21:27:13 INFO mapred.MapTask: kvstart = 9940152(39760608); kvend = 22501760(90007040); length = 13652793/6553600

21/04/26 21:27:13 INFO mapred.MapTask: (EQUATOR) 79521269 kvi 19880312(79521248)

21/04/26 21:27:13 INFO mapred.LocalJobRunner:

21/04/26 21:27:13 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:13 INFO mapreduce.Job: map 33% reduce 0%

21/04/26 21:27:14 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:14 INFO mapreduce.Job: map 39% reduce 0%

21/04/26 21:27:14 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:14 INFO mapred.MapTask: (RESET) equator 79521269 kv 19880312(79521248) kvi 19146428(76585712)

21/04/26 21:27:14 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:14 INFO mapred.MapTask: bufstart = 79521269; bufend = 81094906; bufvoid = 104857600

21/04/26 21:27:14 INFO mapred.MapTask: kvstart = 19880312(79521248); kvend = 19146432(76585728); length = 733881/6553600

21/04/26 21:27:14 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:14 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:14 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143152 bytes

21/04/26 21:27:17 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:17 INFO mapreduce.Job: map 41% reduce 0%

21/04/26 21:27:17 INFO mapred.Task: Task:attempt_local588307001_0001_m_000004_0 is done. And is in the process of committing

21/04/26 21:27:17 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:17 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000004_0' done.

21/04/26 21:27:17 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000004_0: Counters: 17

File System Counters

FILE: Number of bytes read=538514528

FILE: Number of bytes written=741725201

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941058

Map output records=7009870

Map output bytes=60123403

Map output materialized bytes=74143149

Input split bytes=98

Combine input records=0

Spilled Records=14019740

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=67

Total committed heap usage (bytes)=953155584

File Input Format Counters

Bytes Read=33558528

21/04/26 21:27:17 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000004_0

21/04/26 21:27:17 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000005_0

21/04/26 21:27:17 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:17 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:17 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@4b678c5a

21/04/26 21:27:17 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:167772160+33554432

21/04/26 21:27:17 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:17 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:17 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:17 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:17 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:17 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:18 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:19 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:19 INFO mapred.MapTask: bufstart = 0; bufend = 29274881; bufvoid = 104857600

21/04/26 21:27:19 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:27:19 INFO mapred.MapTask: (EQUATOR) 39760640 kvi 9940156(39760624)

21/04/26 21:27:21 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:21 INFO mapred.MapTask: (RESET) equator 39760640 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:27:22 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:22 INFO mapred.MapTask: bufstart = 39760640; bufend = 69035526; bufvoid = 104857600

21/04/26 21:27:22 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:27:22 INFO mapred.MapTask: (EQUATOR) 79521283 kvi 19880316(79521264)

21/04/26 21:27:22 INFO mapred.LocalJobRunner:

21/04/26 21:27:22 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:23 INFO mapreduce.Job: map 42% reduce 0%

21/04/26 21:27:23 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:24 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:24 INFO mapred.MapTask: (RESET) equator 79521283 kv 19880316(79521264) kvi 19146452(76585808)

21/04/26 21:27:24 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:24 INFO mapred.MapTask: bufstart = 79521283; bufend = 81094858; bufvoid = 104857600

21/04/26 21:27:24 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146456(76585824); length = 733861/6553600

21/04/26 21:27:24 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:24 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:24 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143079 bytes

21/04/26 21:27:24 INFO mapreduce.Job: map 47% reduce 0%

21/04/26 21:27:26 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:27 INFO mapred.Task: Task:attempt_local588307001_0001_m_000005_0 is done. And is in the process of committing

21/04/26 21:27:27 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:27 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000005_0' done.

21/04/26 21:27:27 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000005_0: Counters: 17

File System Counters

FILE: Number of bytes read=646217347

FILE: Number of bytes written=890011397

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941056

Map output records=7009864

Map output bytes=60123342

Map output materialized bytes=74143076

Input split bytes=98

Combine input records=0

Spilled Records=14019728

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=75

Total committed heap usage (bytes)=825753600

File Input Format Counters

Bytes Read=33558528

21/04/26 21:27:27 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000005_0

21/04/26 21:27:27 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000006_0

21/04/26 21:27:27 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:27 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:27 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@33ada1f

21/04/26 21:27:27 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:201326592+33554432

21/04/26 21:27:27 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:27 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:27 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:27 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:27 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:27 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:27 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:29 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:29 INFO mapred.MapTask: bufstart = 0; bufend = 29274896; bufvoid = 104857600

21/04/26 21:27:29 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:27:29 INFO mapred.MapTask: (EQUATOR) 39760648 kvi 9940156(39760624)

21/04/26 21:27:30 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:30 INFO mapred.MapTask: (RESET) equator 39760648 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:27:32 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:32 INFO mapred.MapTask: bufstart = 39760648; bufend = 69035532; bufvoid = 104857600

21/04/26 21:27:32 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:27:32 INFO mapred.MapTask: (EQUATOR) 79521286 kvi 19880316(79521264)

21/04/26 21:27:32 INFO mapred.LocalJobRunner:

21/04/26 21:27:32 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:32 INFO mapreduce.Job: map 50% reduce 0%

21/04/26 21:27:33 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:33 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:33 INFO mapred.MapTask: (RESET) equator 79521286 kv 19880316(79521264) kvi 19146440(76585760)

21/04/26 21:27:33 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:33 INFO mapred.MapTask: bufstart = 79521286; bufend = 81094880; bufvoid = 104857600

21/04/26 21:27:33 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146444(76585776); length = 733873/6553600

21/04/26 21:27:33 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:33 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:33 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143117 bytes

21/04/26 21:27:33 INFO mapreduce.Job: map 56% reduce 0%

21/04/26 21:27:36 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:36 INFO mapreduce.Job: map 58% reduce 0%

21/04/26 21:27:37 INFO mapred.Task: Task:attempt_local588307001_0001_m_000006_0 is done. And is in the process of committing

21/04/26 21:27:37 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:37 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000006_0' done.

21/04/26 21:27:37 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000006_0: Counters: 17

File System Counters

FILE: Number of bytes read=753919692

FILE: Number of bytes written=1038297669

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941058

Map output records=7009867

Map output bytes=60123374

Map output materialized bytes=74143114

Input split bytes=98

Combine input records=0

Spilled Records=14019734

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=71

Total committed heap usage (bytes)=791150592

File Input Format Counters

Bytes Read=33558528

21/04/26 21:27:37 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000006_0

21/04/26 21:27:37 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000007_0

21/04/26 21:27:37 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:37 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:37 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@a1d7db9

21/04/26 21:27:37 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:234881024+33554432

21/04/26 21:27:37 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:37 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:37 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:37 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:37 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:37 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:37 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:39 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:39 INFO mapred.MapTask: bufstart = 0; bufend = 29274882; bufvoid = 104857600

21/04/26 21:27:39 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:27:39 INFO mapred.MapTask: (EQUATOR) 39760641 kvi 9940156(39760624)

21/04/26 21:27:40 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:40 INFO mapred.MapTask: (RESET) equator 39760641 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:27:42 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:42 INFO mapred.MapTask: bufstart = 39760641; bufend = 69035537; bufvoid = 104857600

21/04/26 21:27:42 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:27:42 INFO mapred.MapTask: (EQUATOR) 79521288 kvi 19880316(79521264)

21/04/26 21:27:42 INFO mapred.LocalJobRunner:

21/04/26 21:27:42 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:42 INFO mapreduce.Job: map 58% reduce 0%

21/04/26 21:27:43 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:43 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:43 INFO mapred.MapTask: (RESET) equator 79521288 kv 19880316(79521264) kvi 19146444(76585776)

21/04/26 21:27:43 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:43 INFO mapred.MapTask: bufstart = 79521288; bufend = 81094880; bufvoid = 104857600

21/04/26 21:27:43 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146448(76585792); length = 733869/6553600

21/04/26 21:27:43 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:43 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:43 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143111 bytes

21/04/26 21:27:43 INFO mapreduce.Job: map 64% reduce 0%

21/04/26 21:27:46 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:46 INFO mapreduce.Job: map 66% reduce 0%

21/04/26 21:27:46 INFO mapred.Task: Task:attempt_local588307001_0001_m_000007_0 is done. And is in the process of committing

21/04/26 21:27:46 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:46 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000007_0' done.

21/04/26 21:27:46 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000007_0: Counters: 17

File System Counters

FILE: Number of bytes read=861622031

FILE: Number of bytes written=1186583929

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=2941058

Map output records=7009866

Map output bytes=60123370

Map output materialized bytes=74143108

Input split bytes=98

Combine input records=0

Spilled Records=14019732

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=68

Total committed heap usage (bytes)=1449656320

File Input Format Counters

Bytes Read=33558528

21/04/26 21:27:46 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000007_0

21/04/26 21:27:46 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000008_0

21/04/26 21:27:46 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:46 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:46 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@22664ee0

21/04/26 21:27:46 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:268435456+33554432

21/04/26 21:27:46 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:46 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:46 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:46 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:46 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:46 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:47 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:48 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:48 INFO mapred.MapTask: bufstart = 0; bufend = 29274881; bufvoid = 104857600

21/04/26 21:27:48 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:27:48 INFO mapred.MapTask: (EQUATOR) 39760640 kvi 9940156(39760624)

21/04/26 21:27:50 INFO mapred.MapTask: Finished spill 0

21/04/26 21:27:50 INFO mapred.MapTask: (RESET) equator 39760640 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:27:51 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:51 INFO mapred.MapTask: bufstart = 39760640; bufend = 69035523; bufvoid = 104857600

21/04/26 21:27:51 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:27:51 INFO mapred.MapTask: (EQUATOR) 79521281 kvi 19880316(79521264)

21/04/26 21:27:51 INFO mapred.LocalJobRunner:

21/04/26 21:27:51 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:27:52 INFO mapreduce.Job: map 67% reduce 0%

21/04/26 21:27:52 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:53 INFO mapred.MapTask: Finished spill 1

21/04/26 21:27:53 INFO mapred.MapTask: (RESET) equator 79521281 kv 19880316(79521264) kvi 19146440(76585760)

21/04/26 21:27:53 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:53 INFO mapred.MapTask: bufstart = 79521281; bufend = 81094894; bufvoid = 104857600

21/04/26 21:27:53 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146444(76585776); length = 733873/6553600

21/04/26 21:27:53 INFO mapred.MapTask: Finished spill 2

21/04/26 21:27:53 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:27:53 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143120 bytes

21/04/26 21:27:53 INFO mapreduce.Job: map 72% reduce 0%

21/04/26 21:27:55 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:27:56 INFO mapreduce.Job: map 74% reduce 0%

21/04/26 21:27:56 INFO mapred.Task: Task:attempt_local588307001_0001_m_000008_0 is done. And is in the process of committing

21/04/26 21:27:56 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:27:56 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000008_0' done.

21/04/26 21:27:56 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000008_0: Counters: 17

    File System Counters

        FILE: Number of bytes read=969324379

        FILE: Number of bytes written=1334870207

        FILE: Number of read operations=0

        FILE: Number of large read operations=0

        FILE: Number of write operations=0

    Map-Reduce Framework

        Map input records=2941056

        Map output records=7009867

        Map output bytes=60123377

        Map output materialized bytes=74143117

        Input split bytes=98

        Combine input records=0

        Spilled Records=14019734

        Failed Shuffles=0

        Merged Map outputs=0

        GC time elapsed (ms)=57

        Total committed heap usage (bytes)=1227358208

    File Input Format Counters

        Bytes Read=33558528

21/04/26 21:27:56 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000008_0

21/04/26 21:27:56 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000009_0

21/04/26 21:27:56 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:27:56 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:27:56 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@559403aa

21/04/26 21:27:56 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:301989888+33554432

21/04/26 21:27:56 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:27:56 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:27:56 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:27:56 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:27:56 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:27:56 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:27:57 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:27:58 INFO mapred.MapTask: Spilling map output

21/04/26 21:27:58 INFO mapred.MapTask: bufstart = 0; bufend = 29274888; bufvoid = 104857600

21/04/26 21:27:58 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561600(50246400); length = 13652797/6553600

21/04/26 21:27:58 INFO mapred.MapTask: (EQUATOR) 39760636 kvi 9940152(39760608)

21/04/26 21:28:00 INFO mapred.MapTask: Finished spill 0

21/04/26 21:28:00 INFO mapred.MapTask: (RESET) equator 39760636 kv 9940152(39760608) kvi 7318728(29274912)

21/04/26 21:28:02 INFO mapred.MapTask: Spilling map output

21/04/26 21:28:02 INFO mapred.MapTask: bufstart = 39760636; bufend = 69035517; bufvoid = 104857600

21/04/26 21:28:02 INFO mapred.MapTask: kvstart = 9940152(39760608); kvend = 22501760(90007040); length = 13652793/6553600

21/04/26 21:28:02 INFO mapred.MapTask: (EQUATOR) 79521270 kvi 19880312(79521248)

21/04/26 21:28:02 INFO mapred.LocalJobRunner:

21/04/26 21:28:02 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:28:02 INFO mapreduce.Job: map 75% reduce 0%

21/04/26 21:28:02 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:28:03 INFO mapreduce.Job: map 81% reduce 0%

21/04/26 21:28:03 INFO mapred.MapTask: Finished spill 1

21/04/26 21:28:03 INFO mapred.MapTask: (RESET) equator 79521270 kv 19880312(79521248) kvi 19146444(76585776)

21/04/26 21:28:03 INFO mapred.MapTask: Spilling map output

21/04/26 21:28:03 INFO mapred.MapTask: bufstart = 79521270; bufend = 81094872; bufvoid = 104857600

21/04/26 21:28:03 INFO mapred.MapTask: kvstart = 19880312(79521248); kvend = 19146448(76585792); length = 733865/6553600

21/04/26 21:28:03 INFO mapred.MapTask: Finished spill 2

21/04/26 21:28:03 INFO mapred.Merger: Merging 3 sorted segments

21/04/26 21:28:03 INFO mapred.Merger: Down to the last merge-pass, with 3 segments left of total size: 74143112 bytes

21/04/26 21:28:05 INFO mapred.LocalJobRunner: map > sort >

21/04/26 21:28:06 INFO mapreduce.Job: map 82% reduce 0%

21/04/26 21:28:07 INFO mapred.Task: Task:attempt_local588307001_0001_m_000009_0 is done. And is in the process of committing

21/04/26 21:28:07 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:28:07 INFO mapred.Task: Task 'attempt_local588307001_0001_m_000009_0' done.

21/04/26 21:28:07 INFO mapred.Task: Final Counters for attempt_local588307001_0001_m_000009_0: Counters: 17

    File System Counters

        FILE: Number of bytes read=1077026719

        FILE: Number of bytes written=1483156469

        FILE: Number of read operations=0

        FILE: Number of large read operations=0

        FILE: Number of write operations=0

    Map-Reduce Framework

        Map input records=2941054

        Map output records=7009866

        Map output bytes=60123371

        Map output materialized bytes=74143109

        Input split bytes=98

        Combine input records=0

        Spilled Records=14019732

        Failed Shuffles=0

        Merged Map outputs=0

        GC time elapsed (ms)=61

        Total committed heap usage (bytes)=1042808832

    File Input Format Counters

        Bytes Read=33558528

21/04/26 21:28:07 INFO mapred.LocalJobRunner: Finishing task: attempt_local588307001_0001_m_000009_0

21/04/26 21:28:07 INFO mapred.LocalJobRunner: Starting task: attempt_local588307001_0001_m_000010_0

21/04/26 21:28:07 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

21/04/26 21:28:07 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

21/04/26 21:28:07 INFO mapreduce.Job: map 100% reduce 0%

21/04/26 21:28:07 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@abc125

21/04/26 21:28:07 INFO mapred.MapTask: Processing split: file:/E:/bigdata/2021.4.26/wc.txt:335544320+33554432

21/04/26 21:28:07 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

21/04/26 21:28:07 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

21/04/26 21:28:07 INFO mapred.MapTask: soft limit at 83886080

21/04/26 21:28:07 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

21/04/26 21:28:07 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

21/04/26 21:28:07 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

21/04/26 21:28:09 INFO mapred.MapTask: Spilling map output

21/04/26 21:28:09 INFO mapred.MapTask: bufstart = 0; bufend = 29274881; bufvoid = 104857600

21/04/26 21:28:09 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 12561604(50246416); length = 13652793/6553600

21/04/26 21:28:09 INFO mapred.MapTask: (EQUATOR) 39760640 kvi 9940156(39760624)

21/04/26 21:28:11 INFO mapred.MapTask: Finished spill 0

21/04/26 21:28:11 INFO mapred.MapTask: (RESET) equator 39760640 kv 9940156(39760624) kvi 7318728(29274912)

21/04/26 21:28:12 INFO mapred.MapTask: Spilling map output

21/04/26 21:28:12 INFO mapred.MapTask: bufstart = 39760640; bufend = 69035522; bufvoid = 104857600

21/04/26 21:28:12 INFO mapred.MapTask: kvstart = 9940156(39760624); kvend = 22501764(90007056); length = 13652793/6553600

21/04/26 21:28:12 INFO mapred.MapTask: (EQUATOR) 79521281 kvi 19880316(79521264)

21/04/26 21:28:13 INFO mapred.LocalJobRunner:

21/04/26 21:28:13 INFO mapred.MapTask: Starting flush of map output

21/04/26 21:28:13 INFO mapred.LocalJobRunner: map > sort

21/04/26 21:28:13 INFO mapreduce.Job: map 89% reduce 0%

21/04/26 21:28:14 INFO mapred.MapTask: Finished spill 1

21/04/26 21:28:14 INFO mapred.MapTask: (RESET) equator 79521281 kv 19880316(79521264) kvi 19146444(76585776)

21/04/26 21:28:14 INFO mapred.MapTask: Spilling map output

21/04/26 21:28:14 INFO mapred.MapTask: bufstart = 79521281; bufend = 81094888; bufvoid = 104857600

21/04/26 21:28:14 INFO mapred.MapTask: kvstart = 19880316(79521264); kvend = 19146448(76585792); length = 733869/6553600

21/04/26 21:28:14 INFO mapred.MapTask: Finished spill 2

21/04/26 21:28:14 INFO mapred.Merger: Merging 3 sorted segments