/**

* Thanks to @天才2012 for the blog post

* http://blog.csdn.net/gzzaigcnforever/article/details/49025297

* That post and the rest of the series were a great help to my analysis.

*/

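I recently spent a lot of time studying Google's Camera subsystem from top to bottom, focusing on Android 7.1.1 and working from the Application layer through Native and the HAL down to mm-camera. It took about a month. I would not claim to understand all of it, but I have written up what I learned in the hope that it helps later readers. The layers above the HAL differ considerably between 7.1.1 and 6.0, but they can still be understood by reading existing articles alongside the source. Public material on the HAL code itself, and on the mm-camera layer below it, seems to be scarce, so I am sharing those two parts first and will fill in the upper layers when time allows.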

Android Camera2 Framework Data Flow

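This article is based on Android 7.1.1. From top to bottom, the camera system can be roughly divided into Camera API, CameraClient, CameraDevice, and the HAL interface; the figure below shows how they relate.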

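Camera API2 is backward compatible with Camera API1, but the client used is Camera2Client, which changes how data is exchanged between the Framework and the Application layer. This article focuses on that difference. "Data exchange" here means the actual frame data.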

Passing data from the framework to the upper layers

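The framework layer creates a Surface, and the Surface holds an ANativeWindow interface. ANativeWindow manages the creation and sharing of buffers: buffers are created on the Consumer side, the Surface obtains a buffer handle locally through dequeueBuffer so that the buffer can be shared, and once the data has been filled in it calls queueBuffer to tell the Consumer that the buffer is ready. This forms a producer/consumer relationship around buffers. The pattern works by creating a Consumer of the appropriate type, creating a BufferQueue in the Native layer, using that BufferQueue's IGraphicBufferConsumer to build a CpuConsumer, and passing its IGraphicBufferProducer to CameraDevice through createStream to add a Stream. In other words, data is passed from the bottom up through buffers.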

For details, see http://blog.csdn.net/gzzaigcnforever/article/details/49025297
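The dequeue/fill/queue cycle described above can be sketched with a toy queue. This is only an illustration of the producer/consumer handshake, not the AOSP BufferQueue: `ToyBufferQueue` and all of its methods are hypothetical names, and an `int` stands in for a real graphic buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Toy stand-in for a BufferQueue. All names here are hypothetical.
class ToyBufferQueue {
public:
    explicit ToyBufferQueue(std::size_t slotCount) : slots_(slotCount, 0) {
        for (std::size_t i = 0; i < slotCount; ++i) {
            free_.push_back(static_cast<int>(i));
        }
    }

    // Producer side: borrow a free slot, or return -1 if none is free.
    int dequeueBuffer() {
        if (free_.empty()) return -1;
        int slot = free_.front();
        free_.pop_front();
        return slot;
    }

    // Producer side: write frame data into a borrowed slot.
    void fill(int slot, int value) {
        assert(slot >= 0 && static_cast<std::size_t>(slot) < slots_.size());
        slots_[slot] = value;
    }

    // Producer side: tell the consumer that the slot is ready.
    void queueBuffer(int slot) { filled_.push_back(slot); }

    // Consumer side: read the oldest filled slot and release it for reuse.
    bool acquireAndRelease(int* out) {
        if (filled_.empty()) return false;
        int slot = filled_.front();
        filled_.pop_front();
        *out = slots_[slot];
        free_.push_back(slot);
        return true;
    }

private:
    std::vector<int> slots_;  // buffer contents, one int per slot
    std::deque<int> free_;    // slots the producer may dequeue
    std::deque<int> filled_;  // slots waiting for the consumer
};
```

The point of the sketch is that a slot is only ever owned by one side at a time: the producer cannot dequeue it again until the consumer has released it.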

When createCaptureSession() of the Camera2 API's CameraDeviceImpl is called, a Stream is created and initialized:

binder::Status CameraDeviceClient::createStream(

const hardware::camera2::params::OutputConfiguration &outputConfiguration,

/*out*/

int32_t* newStreamId) {

ATRACE_CALL();

binder::Status res;

if (!(res = checkPidStatus(__FUNCTION__)).isOk()) return res;

Mutex::Autolock icl(mBinderSerializationLock);

sp<IGraphicBufferProducer> bufferProducer = outputConfiguration.getGraphicBufferProducer();

bool deferredConsumer = bufferProducer == NULL;

int surfaceType = outputConfiguration.getSurfaceType();

bool validSurfaceType = ((surfaceType == OutputConfiguration::SURFACE_TYPE_SURFACE_VIEW) ||

(surfaceType == OutputConfiguration::SURFACE_TYPE_SURFACE_TEXTURE));

if (deferredConsumer && !validSurfaceType) {

ALOGE("%s: Target surface is invalid: bufferProducer = %p, surfaceType = %d.",

__FUNCTION__, bufferProducer.get(), surfaceType);

return STATUS_ERROR(CameraService::ERROR_ILLEGAL_ARGUMENT, "Target Surface is invalid");

}

......

sp<IBinder> binder = IInterface::asBinder(bufferProducer);

sp<Surface> surface = new Surface(bufferProducer, useAsync); // create a local Surface to act as the producer

ANativeWindow *anw = surface.get();

......

int streamId = camera3::CAMERA3_STREAM_ID_INVALID;

err = mDevice->createStream(surface, width, height, format, dataSpace,

static_cast<camera3_stream_rotation_t>(outputConfiguration.getRotation()),

&streamId, outputConfiguration.getSurfaceSetID()); // create the Stream

if (err != OK) {

res = STATUS_ERROR_FMT(CameraService::ERROR_INVALID_OPERATION,

"Camera %d: Error creating output stream (%d x %d, fmt %x, dataSpace %x): %s (%d)",

mCameraId, width, height, format, dataSpace, strerror(-err), err);

} else {

mStreamMap.add(binder, streamId);

......

// Set transform flags to ensure preview to be rotated correctly.

res = setStreamTransformLocked(streamId);

*newStreamId = streamId;

}

return res;

}

See the figure below. It is taken directly from another blog, so it is not fully accurate, but it is broadly the same.

CameraDevice

Its source lives under frameworks/av/services/camera/libcameraservice/device3

- Camera3Device

- Camera3Device::createStream

This is the mDevice->createStream call from the code above.

status_t Camera3Device::createStream(sp<Surface> consumer,

uint32_t width, uint32_t height, int format, android_dataspace dataSpace,

camera3_stream_rotation_t rotation, int *id, int streamSetId, uint32_t consumerUsage) {

ATRACE_CALL();

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

......

sp<Camera3OutputStream> newStream;

......

// Create a Camera3OutputStream from the Surface created earlier

newStream = new Camera3OutputStream(mNextStreamId, consumer,

width, height, blobBufferSize, format, dataSpace, rotation,

mTimestampOffset, streamSetId);

......

newStream->setStatusTracker(mStatusTracker);

/**

* Camera3 Buffer manager is only supported by HAL3.3 onwards, as the older HALs ( < HAL3.2)

* requires buffers to be statically allocated for internal static buffer registration, while

* the buffers provided by buffer manager are really dynamically allocated. For HAL3.2, because

* not all HAL implementation supports dynamic buffer registeration, exlude it as well.

*/

if (mDeviceVersion > CAMERA_DEVICE_API_VERSION_3_2) {

newStream->setBufferManager(mBufferManager);

}

// Add it to the array of output streams

res = mOutputStreams.add(mNextStreamId, newStream);

......

res = configureStreamsLocked();

......

return OK;

}

- Camera3Device::configureStreamsLocked

In configureStreamsLocked, the main point of interest is that Camera3Device configures every stream it currently holds in mInputStreams and mOutputStreams, in two phases: startConfiguration and finishConfiguration.

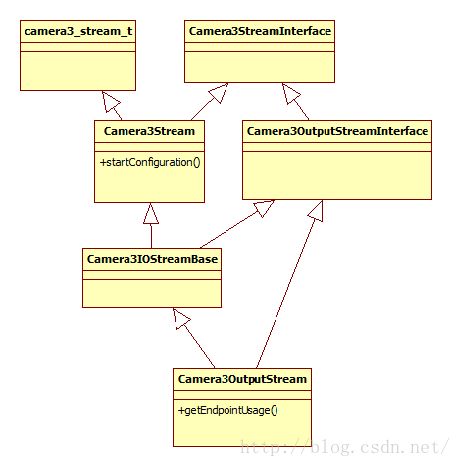

mOutputStreams.editValueAt(i)->startConfiguration()

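This iterates over all output streams, so the function ultimately entered is Camera3Stream::startConfiguration(). It helps to first look at the overall structure of Camera3OutputStream, which involves Camera3Stream and Camera3IOStreamBase; both are shared by input and output streams. The former mostly provides buffer configuration and get/return buffer operations, while the latter keeps track of the number of buffers the stream currently owns. The other branch, camera3_stream_t, is the entry point for exchanging stream information with the underlying Camera HAL3.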

Next come the operations that register the buffers with the HAL layer. To keep this article short, see this post for that part: http://blog.csdn.net/yangzhihuiguming/article/details/51831888

mHal3Device->ops->configure_streams(mHal3Device,&config);

This call registers the streams with the HAL layer.
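The two-phase configuration described above (startConfiguration on every stream, a single configure_streams call into the HAL, then finishConfiguration on every stream) can be sketched as follows. This is a simplified model, not the Camera3Device code: `SketchStream`, `sketchHalConfigureStreams`, and `configureStreams` are hypothetical names, and the value written to `maxBuffers` is arbitrary.

```cpp
#include <cassert>
#include <map>
#include <vector>

enum class StreamState { Constructed, Configuring, Configured };

// Hypothetical stand-in for a Camera3Stream.
struct SketchStream {
    explicit SketchStream(int streamId)
        : id(streamId), state(StreamState::Constructed), maxBuffers(0) {}

    // Phase 1: mark the stream as being configured and expose it to the HAL.
    SketchStream* startConfiguration() {
        state = StreamState::Configuring;
        return this;
    }

    // Phase 2: the HAL has accepted the stream; finish local setup.
    void finishConfiguration() {
        assert(state == StreamState::Configuring);
        state = StreamState::Configured;
    }

    int id;
    StreamState state;
    int maxBuffers;  // filled in by the HAL during configuration
};

// Hypothetical stand-in for the HAL's configure_streams entry point:
// it sees every stream in one call and fills in per-stream limits.
inline int sketchHalConfigureStreams(std::vector<SketchStream*>& streams) {
    for (SketchStream* s : streams) s->maxBuffers = 4;  // arbitrary value
    return 0;
}

inline int configureStreams(std::map<int, SketchStream>& outputs) {
    std::vector<SketchStream*> config;
    for (auto& kv : outputs) {
        config.push_back(kv.second.startConfiguration());
    }
    int res = sketchHalConfigureStreams(config);
    if (res != 0) return res;
    for (auto& kv : outputs) {
        kv.second.finishConfiguration();
    }
    return 0;
}
```

Splitting the work into two phases lets the HAL see the complete stream set at once before any stream commits to a buffer count.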

HAL

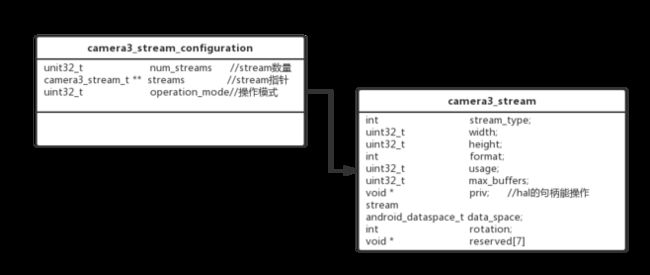

The call eventually reaches QCamera3HardwareInterface::configureStreamsPerfLocked:

int QCamera3HardwareInterface::configureStreamsPerfLocked(

camera3_stream_configuration_t *streamList)

{

......

// First stop any streams that have already been started

for (List<stream_info_t*>::iterator it = mStreamInfo.begin();

it != mStreamInfo.end(); it++) {

QCamera3ProcessingChannel *channel = (QCamera3ProcessingChannel*)(*it)->stream->priv;

if (channel) {

channel->stop();

}

(*it)->status = INVALID;

}

......

// Then take each stream in streamList as a camera3_stream_t pointer

camera3_stream_t *jpegStream = NULL;

for (size_t i = 0; i < streamList->num_streams; i++) {

camera3_stream_t *newStream = streamList->streams[i];

......

if (newStream->format == HAL_PIXEL_FORMAT_BLOB) {

jpegStream = newStream;

}

}

......

// Then create the channel and assign it to newStream->priv

mPictureChannel = new QCamera3PicChannel(

mCameraHandle->camera_handle, mChannelHandle,

mCameraHandle->ops, captureResultCb,

setBufferErrorStatus, &padding_info, this, newStream,

mStreamConfigInfo.postprocess_mask[mStreamConfigInfo.num_streams],

m_bIs4KVideo, isZsl, mMetadataChannel,

(m_bIsVideo ? 1 : MAX_INFLIGHT_BLOB));

if (mPictureChannel == NULL) {

LOGE("allocation of channel failed");

pthread_mutex_unlock(&mMutex);

return -ENOMEM;

}

newStream->priv = (QCamera3ProcessingChannel*)mPictureChannel;

newStream->max_buffers = mPictureChannel->getNumBuffers();

......

// Then store it in mStreamInfo

for (List<stream_info_t*>::iterator it = mStreamInfo.begin();

it != mStreamInfo.end(); it++) {

if ((*it)->stream == newStream) {

(*it)->channel = (QCamera3ProcessingChannel*) newStream->priv;

break;

}

}

}

When Camera3Device receives a CaptureRequest, it calls queueRequestList to enqueue it. Camera3Device has a RequestThread that polls continuously; when the request list is not empty, it calls into QCamera3HardwareInterface through process_capture_request:

int QCamera3HardwareInterface::processCaptureRequest(

camera3_capture_request_t *request)

{

......

// First set the parameters through mm_camera_interface

for (uint32_t i = 0; i < mStreamConfigInfo.num_streams; i++) {

LOGI("STREAM INFO : type %d, wxh: %d x %d, pp_mask: 0x%x "

"Format:%d",

mStreamConfigInfo.type[i],

mStreamConfigInfo.stream_sizes[i].width,

mStreamConfigInfo.stream_sizes[i].height,

mStreamConfigInfo.postprocess_mask[i],

mStreamConfigInfo.format[i]);

}

rc = mCameraHandle->ops->set_parms(mCameraHandle->camera_handle,

mParameters);

......

// Initialize all channels

for (List<stream_info_t*>::iterator it = mStreamInfo.begin();

it != mStreamInfo.end(); it++) {

QCamera3Channel *channel = (QCamera3Channel *)(*it)->stream->priv;

if ((((1U << CAM_STREAM_TYPE_VIDEO) == channel->getStreamTypeMask()) ||

((1U << CAM_STREAM_TYPE_PREVIEW) == channel->getStreamTypeMask())) &&

setEis)

rc = channel->initialize(is_type);

else {

rc = channel->initialize(IS_TYPE_NONE);

}

if (NO_ERROR != rc) {

LOGE("Channel initialization failed %d", rc);

pthread_mutex_unlock(&mMutex);

goto error_exit;

}

}

......

// Sync the related sensors

rc = mCameraHandle->ops->sync_related_sensors(

mCameraHandle->camera_handle, m_pRelCamSyncBuf);

......

//startChannel

for (List<stream_info_t*>::iterator it = mStreamInfo.begin();

it != mStreamInfo.end(); it++) {

QCamera3Channel *channel = (QCamera3Channel *)(*it)->stream->priv;

LOGH("Start Processing Channel mask=%d",

channel->getStreamTypeMask());

rc = channel->start();

if (rc < 0) {

LOGE("channel start failed");

pthread_mutex_unlock(&mMutex);

goto error_exit;

}

}

......

//request

if (output.stream->format == HAL_PIXEL_FORMAT_BLOB) {

LOGD("snapshot request with output buffer %p, input buffer %p, frame_number %d",

output.buffer, request->input_buffer, frameNumber);

if(request->input_buffer != NULL){

rc = channel->request(output.buffer, frameNumber,

pInputBuffer, &mReprocMeta);

if (rc < 0) {

LOGE("Fail to request on picture channel");

pthread_mutex_unlock(&mMutex);

return rc;

}

}

}

}

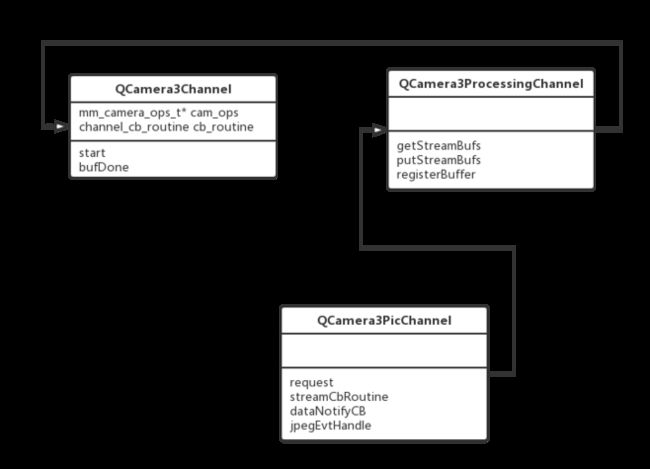

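The code here is long and does a lot of configuration along the way. The main calls above are the Channel's initialize, start, and request.

First, the relationship between the Channel classes: they are related by inheritance.

Now look at QCamera3PicChannel::initialize: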

int32_t QCamera3PicChannel::initialize(cam_is_type_t isType)

{

int32_t rc = NO_ERROR;

cam_dimension_t streamDim;

cam_stream_type_t streamType;

cam_format_t streamFormat;

mm_camera_channel_attr_t attr;

if (NULL == mCamera3Stream) {

ALOGE("%s: Camera stream uninitialized", __func__);

return NO_INIT;

}

if (1 <= m_numStreams) {

// Only one stream per channel supported in v3 Hal

return NO_ERROR;

}

mIsType = isType;

streamType = mStreamType;

streamFormat = mStreamFormat;

streamDim.width = (int32_t)mYuvWidth;

streamDim.height = (int32_t)mYuvHeight;

mNumSnapshotBufs = mCamera3Stream->max_buffers;

rc = QCamera3Channel::addStream(streamType, streamFormat, streamDim,

ROTATE_0, (uint8_t)mCamera3Stream->max_buffers, mPostProcMask,

mIsType);

if (NO_ERROR != rc) {

ALOGE("%s: Initialize failed, rc = %d", __func__, rc);

return rc;

}

/* initialize offline meta memory for input reprocess */

rc = QCamera3ProcessingChannel::initialize(isType);

if (NO_ERROR != rc) {

ALOGE("%s: Processing Channel initialize failed, rc = %d",

__func__, rc);

}

return rc;

}

It calls QCamera3Channel's addStream; here is the source:

int32_t QCamera3Channel::addStream(cam_stream_type_t streamType,

cam_format_t streamFormat,

cam_dimension_t streamDim,

cam_rotation_t streamRotation,

uint8_t minStreamBufNum,

uint32_t postprocessMask,

cam_is_type_t isType,

uint32_t batchSize)

{

int32_t rc = NO_ERROR;

if (m_numStreams >= 1) {

ALOGE("%s: Only one stream per channel supported in v3 Hal", __func__);

return BAD_VALUE;

}

if (m_numStreams >= MAX_STREAM_NUM_IN_BUNDLE) {

ALOGE("%s: stream number (%d) exceeds max limit (%d)",

__func__, m_numStreams, MAX_STREAM_NUM_IN_BUNDLE);

return BAD_VALUE;

}

QCamera3Stream *pStream = new QCamera3Stream(m_camHandle,

m_handle,

m_camOps,

mPaddingInfo,

this);

if (pStream == NULL) {

ALOGE("%s: No mem for Stream", __func__);

return NO_MEMORY;

}

CDBG("%s: batch size is %d", __func__, batchSize);

rc = pStream->init(streamType, streamFormat, streamDim, streamRotation,

NULL, minStreamBufNum, postprocessMask, isType, batchSize,

streamCbRoutine, this);

if (rc == 0) {

mStreams[m_numStreams] = pStream;

m_numStreams++;

} else {

delete pStream;

}

return rc;

}

This calls init on the Stream; here is init:

int32_t QCamera3Stream::init(cam_stream_type_t streamType,

cam_format_t streamFormat,

cam_dimension_t streamDim,

cam_rotation_t streamRotation,

cam_stream_reproc_config_t* reprocess_config,

uint8_t minNumBuffers,

cam_feature_mask_t postprocess_mask,

cam_is_type_t is_type,

uint32_t batchSize,

hal3_stream_cb_routine stream_cb,

void *userdata)

{

int32_t rc = OK;

ssize_t bufSize = BAD_INDEX;

mm_camera_stream_config_t stream_config;

LOGD("batch size is %d", batchSize);

// mCamOps is the mm_camera_interface ops table

mHandle = mCamOps->add_stream(mCamHandle, mChannelHandle);

if (!mHandle) {

LOGE("add_stream failed");

rc = UNKNOWN_ERROR;

goto done;

}

// allocate and map stream info memory

mStreamInfoBuf = new QCamera3HeapMemory(1);

if (mStreamInfoBuf == NULL) {

LOGE("no memory for stream info buf obj");

rc = -ENOMEM;

goto err1;

}

rc = mStreamInfoBuf->allocate(sizeof(cam_stream_info_t));

if (rc < 0) {

LOGE("no memory for stream info");

rc = -ENOMEM;

goto err2;

}

mStreamInfo =

reinterpret_cast<cam_stream_info_t *>(mStreamInfoBuf->getPtr(0));

memset(mStreamInfo, 0, sizeof(cam_stream_info_t));

mStreamInfo->stream_type = streamType;

mStreamInfo->fmt = streamFormat;

mStreamInfo->dim = streamDim;

mStreamInfo->num_bufs = minNumBuffers;

mStreamInfo->pp_config.feature_mask = postprocess_mask;

mStreamInfo->is_type = is_type;

mStreamInfo->pp_config.rotation = streamRotation;

LOGD("stream_type is %d, feature_mask is %Ld",

mStreamInfo->stream_type, mStreamInfo->pp_config.feature_mask);

bufSize = mStreamInfoBuf->getSize(0);

if (BAD_INDEX != bufSize) {

// Map the buffer into user space

rc = mCamOps->map_stream_buf(mCamHandle,

mChannelHandle, mHandle, CAM_MAPPING_BUF_TYPE_STREAM_INFO,

0, -1, mStreamInfoBuf->getFd(0), (size_t)bufSize,

mStreamInfoBuf->getPtr(0));

if (rc < 0) {

LOGE("Failed to map stream info buffer");

goto err3;

}

} else {

LOGE("Failed to retrieve buffer size (bad index)");

goto err3;

}

mNumBufs = minNumBuffers;

// reprocess_config is passed in from the channel's addStream; the source passes NULL

if (reprocess_config != NULL) {

mStreamInfo->reprocess_config = *reprocess_config;

mStreamInfo->streaming_mode = CAM_STREAMING_MODE_BURST;

//mStreamInfo->num_of_burst = reprocess_config->offline.num_of_bufs;

mStreamInfo->num_of_burst = 1;

} else if (batchSize) {

if (batchSize > MAX_BATCH_SIZE) {

LOGE("batchSize:%d is very large", batchSize);

rc = BAD_VALUE;

goto err4;

}

else {

mNumBatchBufs = MAX_INFLIGHT_HFR_REQUESTS / batchSize;

mStreamInfo->streaming_mode = CAM_STREAMING_MODE_BATCH;

mStreamInfo->user_buf_info.frame_buf_cnt = batchSize;

mStreamInfo->user_buf_info.size =

(uint32_t)(sizeof(msm_camera_user_buf_cont_t));

mStreamInfo->num_bufs = mNumBatchBufs;

//Frame interval is irrelavent since time stamp calculation is not

//required from the mCamOps

mStreamInfo->user_buf_info.frameInterval = 0;

LOGD("batch size is %d", batchSize);

}

} else {

mStreamInfo->streaming_mode = CAM_STREAMING_MODE_CONTINUOUS;

}

// Configure the stream

stream_config.stream_info = mStreamInfo;

stream_config.mem_vtbl = mMemVtbl;

stream_config.padding_info = mPaddingInfo;

stream_config.userdata = this; // the Stream itself

stream_config.stream_cb = dataNotifyCB; // callback through which the mm_camera layer returns data

stream_config.stream_cb_sync = NULL;

rc = mCamOps->config_stream(mCamHandle,

mChannelHandle, mHandle, &stream_config); // config passes the callback down to the lower layer

if (rc < 0) {

LOGE("Failed to config stream, rc = %d", rc);

goto err4;

}

mDataCB = stream_cb; // callback passed in from the channel

mUserData = userdata; // the Channel itself

mBatchSize = batchSize;

return 0;

err4:

mCamOps->unmap_stream_buf(mCamHandle,

mChannelHandle, mHandle, CAM_MAPPING_BUF_TYPE_STREAM_INFO, 0, -1);

err3:

mStreamInfoBuf->deallocate();

err2:

delete mStreamInfoBuf;

mStreamInfoBuf = NULL;

mStreamInfo = NULL;

err1:

mCamOps->delete_stream(mCamHandle, mChannelHandle, mHandle);

mHandle = 0;

mNumBufs = 0;

done:

return rc;

}
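init wires up two callback layers: the lower layer is given a static function plus `this` as userdata, and the stream keeps the channel's callback plus the channel pointer. A minimal sketch of that trampoline pattern is shown below. All types here (`ToyFrame`, `ToyLowerLayer`, `ToyStream`, `ToyChannel`) are hypothetical stand-ins, and for brevity the stream forwards the frame directly instead of queuing it for a worker thread as the real code does.

```cpp
#include <cassert>

struct ToyFrame {
    int streamId;
    int payload;
};

class ToyStream;

// Callback types modelled on C-style "function pointer + userdata" pairs.
typedef void (*lower_cb_t)(ToyFrame* frame, void* userdata);
typedef void (*channel_cb_t)(ToyFrame* frame, ToyStream* stream, void* userdata);

// Hypothetical channel: receives frames through a static callback.
class ToyChannel {
public:
    ToyChannel() : lastPayload(-1) {}
    static void streamCbRoutine(ToyFrame* frame, ToyStream*, void* userdata) {
        static_cast<ToyChannel*>(userdata)->lastPayload = frame->payload;
    }
    int lastPayload;
};

// Hypothetical stream: sits between the lower layer and the channel.
class ToyStream {
public:
    ToyStream(int handle, channel_cb_t cb, void* userdata)
        : handle_(handle), dataCb_(cb), userData_(userdata) {}

    // Static so it can be handed to the lower layer as a plain function pointer.
    static void dataNotifyCB(ToyFrame* frame, void* userdata) {
        ToyStream* self = static_cast<ToyStream*>(userdata);
        if (self == nullptr || frame == nullptr || frame->streamId != self->handle_) {
            return;  // not a frame for this stream
        }
        if (self->dataCb_ != nullptr) {
            self->dataCb_(frame, self, self->userData_);
        }
    }

private:
    int handle_;
    channel_cb_t dataCb_;
    void* userData_;
};

// Hypothetical lower layer: stores the callback at config time, calls it on data.
class ToyLowerLayer {
public:
    ToyLowerLayer() : cb_(nullptr), userdata_(nullptr) {}
    void configStream(lower_cb_t cb, void* userdata) {
        cb_ = cb;
        userdata_ = userdata;
    }
    void deliver(ToyFrame* frame) {
        if (cb_ != nullptr) cb_(frame, userdata_);
    }

private:
    lower_cb_t cb_;
    void* userdata_;
};
```

Passing the object pointer as opaque userdata is what lets a C-style interface call back into a C++ object without knowing its type.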

Then start simply calls start on every stream, which launches dataProcRoutine:

int32_t QCamera3Stream::start()

{

int32_t rc = 0;

mDataQ.init();

mTimeoutFrameQ.init();

if (mBatchSize)

mFreeBatchBufQ.init();

rc = mProcTh.launch(dataProcRoutine, this);

return rc;

}

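In fact, once QCamera3Channel's start has called mm_camera_start, data acquisition has already begun. The mm_camera layer has two threads: cmd_thread, which dispatches commands, and poll_thread, a polling thread that sets up a pipeline with the other end of a socket and keeps trying to fetch data. Once data arrives, callbacks are invoked layer by layer upward, and in the HAL layer this reaches QCamera3Stream's dataNotifyCB. mDataQ is a QCameraQueue, and the start code above also launched a thread whose body is dataProcRoutine.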

void QCamera3Stream::dataNotifyCB(mm_camera_super_buf_t *recvd_frame,

void *userdata)

{

CDBG("%s: E\n", __func__);

QCamera3Stream* stream = (QCamera3Stream *)userdata;

if (stream == NULL ||

recvd_frame == NULL ||

recvd_frame->bufs[0] == NULL ||

recvd_frame->bufs[0]->stream_id != stream->getMyHandle()) {

ALOGE("%s: Not a valid stream to handle buf", __func__);

return;

}

mm_camera_super_buf_t *frame =

(mm_camera_super_buf_t *)malloc(sizeof(mm_camera_super_buf_t));

if (frame == NULL) {

ALOGE("%s: No mem for mm_camera_buf_def_t", __func__);

stream->bufDone(recvd_frame->bufs[0]->buf_idx);

return;

}

*frame = *recvd_frame;

stream->processDataNotify(frame); // hand the copy to processDataNotify

return;

}

The source of processDataNotify is as follows:

int32_t QCamera3Stream::processDataNotify(mm_camera_super_buf_t *frame)

{

LOGD("E\n");

int32_t rc;

if (mDataQ.enqueue((void *)frame)) {

rc = mProcTh.sendCmd(CAMERA_CMD_TYPE_DO_NEXT_JOB, FALSE, FALSE);

} else {

LOGD("Stream thread is not active, no ops here");

bufDone(frame->bufs[0]->buf_idx);

free(frame);

rc = NO_ERROR;

}

LOGD("X\n");

return rc;

}

The command triggers the state machine in dataProcRoutine:

void *QCamera3Stream::dataProcRoutine(void *data)

{

int running = 1;

int ret;

QCamera3Stream *pme = (QCamera3Stream *)data;

QCameraCmdThread *cmdThread = &pme->mProcTh;

cmdThread->setName(mStreamNames[pme->mStreamInfo->stream_type]);

LOGD("E");

do {

do {

ret = cam_sem_wait(&cmdThread->cmd_sem);

if (ret != 0 && errno != EINVAL) {

LOGE("cam_sem_wait error (%s)",

strerror(errno));

return NULL;

}

} while (ret != 0);

// we got notified about new cmd avail in cmd queue

camera_cmd_type_t cmd = cmdThread->getCmd();

switch (cmd) {

case CAMERA_CMD_TYPE_TIMEOUT:

{

int32_t bufIdx = (int32_t)(pme->mTimeoutFrameQ.dequeue());

pme->cancelBuffer(bufIdx);

break;

}

case CAMERA_CMD_TYPE_DO_NEXT_JOB:

{

LOGD("Do next job");

mm_camera_super_buf_t *frame =

(mm_camera_super_buf_t *)pme->mDataQ.dequeue();

if (NULL != frame) {

// The first two branches both end up calling mDataCB, the channel-level callback

if (UNLIKELY(frame->bufs[0]->buf_type ==

CAM_STREAM_BUF_TYPE_USERPTR)) {

pme->handleBatchBuffer(frame);

} else if (pme->mDataCB != NULL) {

pme->mDataCB(frame, pme, pme->mUserData);

} else {

// no data cb routine, return buf here

pme->bufDone(frame->bufs[0]->buf_idx);

}

}

}

break;

case CAMERA_CMD_TYPE_EXIT:

LOGH("Exit");

/* flush data buf queue */

pme->mDataQ.flush();

pme->mTimeoutFrameQ.flush();

pme->flushFreeBatchBufQ();

running = 0;

break;

default:

break;

}

} while (running);

LOGD("X");

return NULL;

}
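The enqueue-then-wake pattern used by processDataNotify and dataProcRoutine can be sketched with standard C++ primitives. This is an illustration under assumptions, not the HAL code: `ToyStreamWorker` and `Cmd` are hypothetical, a condition variable stands in for the cam_sem_wait semaphore, and an `int` stands in for a frame.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

enum class Cmd { DoNextJob, Exit };

// Hypothetical worker that mirrors the shape of a command-driven stream thread.
class ToyStreamWorker {
public:
    void start() { thread_ = std::thread(&ToyStreamWorker::procRoutine, this); }

    // Called from the data source: queue the frame, then wake the worker.
    void processDataNotify(int frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            dataQ_.push_back(frame);
            cmdQ_.push_back(Cmd::DoNextJob);
        }
        cv_.notify_one();
    }

    // Ask the worker to exit after it has handled the commands queued so far.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            cmdQ_.push_back(Cmd::Exit);
        }
        cv_.notify_one();
        thread_.join();
    }

    std::vector<int> delivered;  // written by the worker; read only after stop()

private:
    void procRoutine() {
        bool running = true;
        while (running) {
            Cmd cmd = Cmd::Exit;
            int frame = 0;
            bool haveFrame = false;
            {
                // Block until a command is available.
                std::unique_lock<std::mutex> lock(mutex_);
                cv_.wait(lock, [this] { return !cmdQ_.empty(); });
                cmd = cmdQ_.front();
                cmdQ_.pop_front();
                if (cmd == Cmd::DoNextJob && !dataQ_.empty()) {
                    frame = dataQ_.front();
                    dataQ_.pop_front();
                    haveFrame = true;
                }
            }
            switch (cmd) {
            case Cmd::DoNextJob:
                // Stands in for invoking the channel-level data callback.
                if (haveFrame) delivered.push_back(frame);
                break;
            case Cmd::Exit:
                running = false;
                break;
            }
        }
    }

    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<Cmd> cmdQ_;
    std::deque<int> dataQ_;
    std::thread thread_;
};
```

Because commands are handled in FIFO order, an Exit queued after three frames is only seen once those three frames have been delivered.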

The channel-level callback passed in through addStream is streamCbRoutine; here is its source:

void QCamera3PicChannel::streamCbRoutine(mm_camera_super_buf_t *super_frame,

QCamera3Stream *stream)

{

......

m_postprocessor.processData(frame);

free(super_frame);

return;

}

As shown, it calls the postprocessor's processData. The postprocessor is started in QCamera3PicChannel's request:

int32_t QCamera3PicChannel::request(buffer_handle_t *buffer,

uint32_t frameNumber,

camera3_stream_buffer_t *pInputBuffer,

metadata_buffer_t *metadata, int &indexUsed)

{

ATRACE_CALL();

//FIX ME: Return buffer back in case of failures below.

int32_t rc = NO_ERROR;

......

// Start postprocessor

startPostProc(reproc_cfg);

// Only set the perf mode for online cpp processing

if (reproc_cfg.reprocess_type == REPROCESS_TYPE_NONE) {

setCppPerfParam();

}

// Queue jpeg settings

rc = queueJpegSetting((uint32_t)index, metadata);

//false

if (pInputBuffer == NULL) {

Mutex::Autolock lock(mFreeBuffersLock);

uint32_t bufIdx;

if (mFreeBufferList.empty()) {

rc = mYuvMemory->allocateOne(mFrameLen);

if (rc < 0) {

LOGE("Failed to allocate heap buffer. Fatal");

return rc;

} else {

bufIdx = (uint32_t)rc;

}

} else {

List<uint32_t>::iterator it = mFreeBufferList.begin();

bufIdx = *it;

mFreeBufferList.erase(it);

}

mYuvMemory->markFrameNumber(bufIdx, frameNumber);

mStreams[0]->bufDone(bufIdx);

indexUsed = bufIdx;

} else {

qcamera_fwk_input_pp_data_t *src_frame = NULL;

src_frame = (qcamera_fwk_input_pp_data_t *)calloc(1,

sizeof(qcamera_fwk_input_pp_data_t));

if (src_frame == NULL) {

LOGE("No memory for src frame");

return NO_MEMORY;

}

// Fill in the metadata

rc = setFwkInputPPData(src_frame, pInputBuffer, &reproc_cfg, metadata,

NULL /*fwk output buffer*/, frameNumber);

if (NO_ERROR != rc) {

LOGE("Error %d while setting framework input PP data", rc);

free(src_frame);

return rc;

}

LOGH("Post-process started");

m_postprocessor.processData(src_frame);

}

return rc;

}

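As shown, request starts the postprocessor and calls processData. QCamera3PostProcessor schedules JPEG encoding jobs and reprocess jobs. Every QCamera3PicChannel request first goes through reprocess, which is where other work is done before encoding, and is then JPEG-encoded. That is the role of QCamera3PostProcessor, and classes, channels, streams, and queues with PP in their names hold data that QCamera3PostProcessor is to process. init starts a thread whose body is dataProcessRoutine, start sends that thread a CAMERA_CMD_TYPE_START_DATA_PROC command, and processData sends a CAMERA_CMD_TYPE_DO_NEXT_JOB command.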

int32_t QCamera3PostProcessor::processData(mm_camera_super_buf_t *input,

buffer_handle_t *output, uint32_t frameNumber)

{

LOGD("E");

pthread_mutex_lock(&mReprocJobLock);

// enqueue to post proc input queue

qcamera_hal3_pp_buffer_t *pp_buffer = (qcamera_hal3_pp_buffer_t *)malloc(

sizeof(qcamera_hal3_pp_buffer_t));

if (NULL == pp_buffer) {

LOGE("out of memory");

return NO_MEMORY;

}

memset(pp_buffer, 0, sizeof(*pp_buffer));

pp_buffer->input = input;

pp_buffer->output = output; // NULL is passed in here

pp_buffer->frameNumber = frameNumber;

m_inputPPQ.enqueue((void *)pp_buffer);

if (!(m_inputMetaQ.isEmpty())) {

LOGD("meta queue is not empty, do next job");

m_dataProcTh.sendCmd(CAMERA_CMD_TYPE_DO_NEXT_JOB, FALSE, FALSE);

} else

LOGD("metadata queue is empty");

pthread_mutex_unlock(&mReprocJobLock);

return NO_ERROR;

}
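processData only kicks the worker when the metadata queue is non-empty, and the worker only dequeues when both queues have an entry. A minimal sketch of that pairing rule follows. `ToyPairingQueue` is a hypothetical name, plain `int` values stand in for frames and metadata, and the symmetric kick on the metadata side (`pushMeta`) is an assumption that is not shown in the source above.

```cpp
#include <cassert>
#include <deque>
#include <utility>

// Hypothetical model of pairing an image frame with its metadata.
class ToyPairingQueue {
public:
    // Returns true when the caller should send a "do next job" command.
    bool pushFrame(int frameNumber) {
        frames_.push_back(frameNumber);
        return !meta_.empty();
    }

    // Assumed counterpart on the metadata side (not shown in the source).
    bool pushMeta(int metaId) {
        meta_.push_back(metaId);
        return !frames_.empty();
    }

    // Worker side: take one frame and one metadata entry, only if both exist.
    bool doNextJob(std::pair<int, int>* job) {
        if (frames_.empty() || meta_.empty()) return false;
        job->first = frames_.front();
        job->second = meta_.front();
        frames_.pop_front();
        meta_.pop_front();
        return true;
    }

private:
    std::deque<int> frames_;
    std::deque<int> meta_;
};
```

Whichever side arrives second is the one that triggers the job, so a frame is never processed without its metadata.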

Here is the source of dataProcessRoutine:

void *QCamera3PostProcessor::dataProcessRoutine(void *data)

{

int running = 1;

int ret;

uint8_t is_active = FALSE;

uint8_t needNewSess = TRUE;

mm_camera_super_buf_t *meta_buffer = NULL;

LOGD("E");

QCamera3PostProcessor *pme = (QCamera3PostProcessor *)data;

QCameraCmdThread *cmdThread = &pme->m_dataProcTh;

cmdThread->setName("cam_data_proc");

do {

do {

ret = cam_sem_wait(&cmdThread->cmd_sem); // block until a command arrives

if (ret != 0 && errno != EINVAL) {

LOGE("cam_sem_wait error (%s)",

strerror(errno));

return NULL;

}

} while (ret != 0);

// we got notified about new cmd avail in cmd queue

camera_cmd_type_t cmd = cmdThread->getCmd();

switch (cmd) {

case CAMERA_CMD_TYPE_START_DATA_PROC: // initialize the queues

LOGH("start data proc");

is_active = TRUE;

needNewSess = TRUE;

pme->m_ongoingPPQ.init();

pme->m_inputJpegQ.init();

pme->m_inputPPQ.init();

pme->m_inputFWKPPQ.init();

pme->m_inputMetaQ.init();

cam_sem_post(&cmdThread->sync_sem);

break;

case CAMERA_CMD_TYPE_STOP_DATA_PROC:

{

......

}

break;

case CAMERA_CMD_TYPE_DO_NEXT_JOB:

{

LOGH("Do next job, active is %d", is_active);

/* needNewSess is set to TRUE as postproc is not re-STARTed

* anymore for every captureRequest */

needNewSess = TRUE;

if (is_active == TRUE) {

// check if there is any ongoing jpeg jobs

if (pme->m_ongoingJpegQ.isEmpty()) { // no JPEG job in flight, so the next one can be sent

LOGD("ongoing jpeg queue is empty so doing the jpeg job");

// no ongoing jpeg job, we are fine to send jpeg encoding job

qcamera_hal3_jpeg_data_t *jpeg_job =

(qcamera_hal3_jpeg_data_t *)pme->m_inputJpegQ.dequeue();

if (NULL != jpeg_job) {

// add into ongoing jpeg job Q

pme->m_ongoingJpegQ.enqueue((void *)jpeg_job);

if (jpeg_job->fwk_frame) {

ret = pme->encodeFWKData(jpeg_job, needNewSess);

} else {

ret = pme->encodeData(jpeg_job, needNewSess);

}

if (NO_ERROR != ret) {

// dequeue the last one

pme->m_ongoingJpegQ.dequeue(false);

pme->releaseJpegJobData(jpeg_job);

free(jpeg_job);

}

}

}

// check if there are any framework pp jobs

if (!pme->m_inputFWKPPQ.isEmpty()) {

qcamera_fwk_input_pp_data_t *fwk_frame =

(qcamera_fwk_input_pp_data_t *) pme->m_inputFWKPPQ.dequeue();

if (NULL != fwk_frame) {

qcamera_hal3_pp_data_t *pp_job =

(qcamera_hal3_pp_data_t *)malloc(sizeof(qcamera_hal3_pp_data_t));

jpeg_settings_t *jpeg_settings =

(jpeg_settings_t *)pme->m_jpegSettingsQ.dequeue();

if (pp_job != NULL) {

memset(pp_job, 0, sizeof(qcamera_hal3_pp_data_t));

pp_job->jpeg_settings = jpeg_settings;

if (pme->m_pReprocChannel != NULL) {

if (NO_ERROR != pme->m_pReprocChannel->overrideFwkMetadata(fwk_frame)) {

LOGE("Failed to extract output crop");

}

// add into ongoing PP job Q

pp_job->fwk_src_frame = fwk_frame;

pme->m_ongoingPPQ.enqueue((void *)pp_job);

ret = pme->m_pReprocChannel->doReprocessOffline(fwk_frame, true); // run reprocess

if (NO_ERROR != ret) {

// remove from ongoing PP job Q

pme->m_ongoingPPQ.dequeue(false);

}

} else {

LOGE("Reprocess channel is NULL");

ret = -1;

}

} else {

LOGE("no mem for qcamera_hal3_pp_data_t");

ret = -1;

}

if (0 != ret) {

// free pp_job

if (pp_job != NULL) {

free(pp_job);

}

// free frame

if (fwk_frame != NULL) {

free(fwk_frame);

}

}

}

}

LOGH("dequeuing pp frame");

pthread_mutex_lock(&pme->mReprocJobLock);

if(!pme->m_inputPPQ.isEmpty() && !pme->m_inputMetaQ.isEmpty()) {

qcamera_hal3_pp_buffer_t *pp_buffer =

(qcamera_hal3_pp_buffer_t *)pme->m_inputPPQ.dequeue();

meta_buffer =

(mm_camera_super_buf_t *)pme->m_inputMetaQ.dequeue();

jpeg_settings_t *jpeg_settings =

(jpeg_settings_t *)pme->m_jpegSettingsQ.dequeue();

pthread_mutex_unlock(&pme->mReprocJobLock);

qcamera_hal3_pp_data_t *pp_job =

(qcamera_hal3_pp_data_t *)malloc(sizeof(qcamera_hal3_pp_data_t));

if (pp_job == NULL) {

LOGE("no mem for qcamera_hal3_pp_data_t");

ret = -1;

} else if (meta_buffer == NULL) {

LOGE("failed to dequeue from m_inputMetaQ");

ret = -1;

} else if (pp_buffer == NULL) {

LOGE("failed to dequeue from m_inputPPQ");

ret = -1;

} else if (pp_buffer != NULL){

memset(pp_job, 0, sizeof(qcamera_hal3_pp_data_t));

pp_job->src_frame = pp_buffer->input;

pp_job->src_metadata = meta_buffer;

if (meta_buffer->bufs[0] != NULL) {

pp_job->metadata = (metadata_buffer_t *)

meta_buffer->bufs[0]->buffer;

}

pp_job->jpeg_settings = jpeg_settings;

pme->m_ongoingPPQ.enqueue((void *)pp_job);

if (pme->m_pReprocChannel != NULL) {

mm_camera_buf_def_t *meta_buffer_arg = NULL;

meta_buffer_arg = meta_buffer->bufs[0];

qcamera_fwk_input_pp_data_t fwk_frame;

memset(&fwk_frame, 0, sizeof(qcamera_fwk_input_pp_data_t));

fwk_frame.frameNumber = pp_buffer->frameNumber;

ret = pme->m_pReprocChannel->overrideMetadata(

pp_buffer, meta_buffer_arg,

pp_job->jpeg_settings,

fwk_frame);

if (NO_ERROR == ret) {

// add into ongoing PP job

ret = pme->m_pReprocChannel->doReprocessOffline(

&fwk_frame, true);

if (NO_ERROR != ret) {

// remove from ongoing PP job Q

pme->m_ongoingPPQ.dequeue(false);

}

}

} else {

LOGE("No reprocess. Calling processPPData directly");

ret = pme->processPPData(pp_buffer->input);

}

}

if (0 != ret) {

// free pp_job

if (pp_job != NULL) {

free(pp_job);

}

// free frame

if (pp_buffer != NULL) {

if (pp_buffer->input) {

pme->releaseSuperBuf(pp_buffer->input);

free(pp_buffer->input);

}

free(pp_buffer);

}

//free metadata

if (NULL != meta_buffer) {

pme->m_parent->metadataBufDone(meta_buffer);

free(meta_buffer);

}

} else {

if (pp_buffer != NULL) {

free(pp_buffer);

}

}

} else {

pthread_mutex_unlock(&pme->mReprocJobLock);

}

} else {

// not active, simply return buf and do no op

qcamera_hal3_jpeg_data_t *jpeg_job =

(qcamera_hal3_jpeg_data_t *)pme->m_inputJpegQ.dequeue();

if (NULL != jpeg_job) {

free(jpeg_job);

}

qcamera_hal3_pp_buffer_t* pp_buf =

(qcamera_hal3_pp_buffer_t *)pme->m_inputPPQ.dequeue();

if (NULL != pp_buf) {

if (pp_buf->input) {

pme->releaseSuperBuf(pp_buf->input);

free(pp_buf->input);

pp_buf->input = NULL;

}

free(pp_buf);

}

mm_camera_super_buf_t *metadata = (mm_camera_super_buf_t *)pme->m_inputMetaQ.dequeue();

if (metadata != NULL) {

pme->m_parent->metadataBufDone(metadata);

free(metadata);

}

qcamera_fwk_input_pp_data_t *fwk_frame =

(qcamera_fwk_input_pp_data_t *) pme->m_inputFWKPPQ.dequeue();

if (NULL != fwk_frame) {

free(fwk_frame);

}

}

}

break;

case CAMERA_CMD_TYPE_EXIT:

running = 0;

break;

default:

break;

}

} while (running);

LOGD("X");

return NULL;

}

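A buffer coming up from the lower layer passes through m_inputPPQ -> m_ongoingPPQ -> m_inputJpegQ -> m_ongoingJpegQ and is then encoded. When encoding completes, QCamera3PicChannel::jpegEvtHandle is called back, which in turn invokes mChannelCB, that is captureResultCb, to return the data to the native layer.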
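The four-queue progression can be sketched as a small single-threaded model. This is a simplification under assumptions: `ToyPostProcessor` and its method names are hypothetical, frame numbers stand in for buffers, and the rule that only one JPEG job is in flight at a time is taken from the isEmpty check on m_ongoingJpegQ in the routine above.

```cpp
#include <cassert>
#include <deque>
#include <vector>

// Hypothetical model of the four postprocessor queues.
class ToyPostProcessor {
public:
    // A frame arrives from the stream callback.
    void processData(int frameNumber) { inputPPQ_.push_back(frameNumber); }

    // inputPPQ -> ongoingPPQ: reprocess of the oldest frame begins.
    bool startReprocess() { return moveFront(inputPPQ_, ongoingPPQ_); }

    // ongoingPPQ -> inputJpegQ: reprocess finished, frame awaits encoding.
    bool onReprocessDone() { return moveFront(ongoingPPQ_, inputJpegQ_); }

    // inputJpegQ -> ongoingJpegQ: only when no encode is already in flight.
    bool startEncode() {
        if (!ongoingJpegQ_.empty()) return false;
        return moveFront(inputJpegQ_, ongoingJpegQ_);
    }

    // ongoingJpegQ -> results: stands in for the JPEG-done callback.
    bool onJpegDone() {
        if (ongoingJpegQ_.empty()) return false;
        results.push_back(ongoingJpegQ_.front());
        ongoingJpegQ_.pop_front();
        return true;
    }

    std::vector<int> results;  // frame numbers returned to the framework

private:
    static bool moveFront(std::deque<int>& from, std::deque<int>& to) {
        if (from.empty()) return false;
        to.push_back(from.front());
        from.pop_front();
        return true;
    }

    std::deque<int> inputPPQ_;
    std::deque<int> ongoingPPQ_;
    std::deque<int> inputJpegQ_;
    std::deque<int> ongoingJpegQ_;
};
```

Keeping separate "input" and "ongoing" queues per stage makes it easy to see which jobs are waiting and which are currently owned by the hardware.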

That concludes the analysis from the Framework to the HAL layer. The mm-camera layer is covered in the next article:

http://www.jianshu.com/p/1baad2a5281d