An introduction to cross-validation in scikit-learn

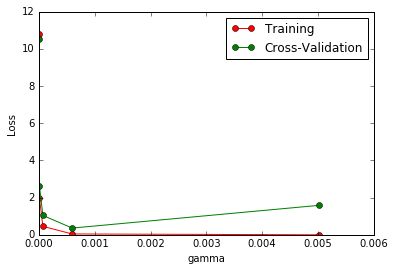

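This time we use another tool from sklearn.learning_curve, called validation_curve. In scikit-learn 0.18 and later it lives in sklearn.model_selection, and the old sklearn.learning_curve module was removed in 0.20.

validation_curve trains the model once for each candidate value of a single parameter. For each value, it records the cross-validated training and validation scores. Plotting the two curves side by side shows directly whether changing the parameter leads to overfitting: the training loss keeps dropping while the cross-validation loss stops improving or rises again. This also makes it a more principled way to choose parameter values.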
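To make concrete what validation_curve computes, here is a hand-rolled sketch that should produce the same numbers. For each candidate gamma, it runs k-fold cross-validation with `cross_validate(..., return_train_score=True)` and keeps both the training-fold and validation-fold scores.

This sketch rests on a few assumptions: a recent scikit-learn (0.20 or later), a hypothetical helper name `manual_validation_curve`, and `cv=5`, which is chosen only to keep it quick. The final comparison simply checks that the sketch agrees with the library function under identical settings.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import cross_validate, validation_curve
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)
param_range = np.logspace(-6, -2.3, 5)


def manual_validation_curve(X, y, param_range, cv=5):
    """Hypothetical helper: for each gamma, run k-fold CV and keep both
    the training-fold scores and the validation-fold scores."""
    train_scores, test_scores = [], []
    for gamma in param_range:
        result = cross_validate(
            SVC(gamma=gamma), X, y, cv=cv,
            scoring='neg_mean_squared_error',
            return_train_score=True,  # also score each training fold
        )
        train_scores.append(result['train_score'])
        test_scores.append(result['test_score'])
    # Shape: (number of gamma values, number of folds)
    return np.array(train_scores), np.array(test_scores)


manual_train, manual_test = manual_validation_curve(X, y, param_range)

# The library version with the same estimator, parameter grid, folds and scorer
lib_train, lib_test = validation_curve(
    SVC(), X, y, param_name='gamma', param_range=param_range,
    cv=5, scoring='neg_mean_squared_error')

print(np.allclose(manual_train, lib_train), np.allclose(manual_test, lib_test))
```

Both calls pass an integer `cv` with a classifier, so both use the same deterministic stratified folds. The results should therefore match; validation_curve mainly saves you the loop.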

Demo.py

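```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import load_digits
from sklearn.svm import SVC
# sklearn.learning_curve and sklearn.cross_validation were removed in
# scikit-learn 0.20; validation_curve now lives in sklearn.model_selection
from sklearn.model_selection import validation_curve

# Goal: choose a suitable value for the SVM parameter gamma

# Load the dataset
digits = load_digits()
X = digits.data
y = digits.target

# Candidate gamma values: 5 points evenly spaced on a log scale, 1e-6 to 10**-2.3
param_range = np.logspace(-6, -2.3, 5)

# Train an SVM for each gamma with 10-fold cross-validation and record the loss.
# Scorers follow a "greater is better" convention, so MSE is requested as
# 'neg_mean_squared_error' (the old name 'mean_squared_error' no longer works)
train_loss, test_loss = validation_curve(
    SVC(), X, y, param_name='gamma', param_range=param_range,
    cv=10, scoring='neg_mean_squared_error')

# Mean training loss per gamma (negate to get a positive MSE)
train_loss_mean = -np.mean(train_loss, axis=1)
# Mean cross-validation loss per gamma
test_loss_mean = -np.mean(test_loss, axis=1)

# Plot both loss curves against gamma
plt.plot(param_range, train_loss_mean, 'o-', color='r', label='Training')
plt.plot(param_range, test_loss_mean, 'o-', color='g', label='Cross-Validation')
plt.xlabel('gamma')
plt.ylabel('Loss')
plt.legend(loc='best')
plt.show()
```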

Result:
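Besides reading the plot by eye, you can turn the same curve into numbers. Here is a minimal sketch that reuses the demo's settings. It prints the training loss, the cross-validation loss, and their gap for each gamma, then picks the gamma with the lowest mean cross-validation loss.

The "lowest CV loss" selection rule is an assumption on my part rather than part of the original demo. It also uses `load_digits(return_X_y=True)` for brevity.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import validation_curve
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)
param_range = np.logspace(-6, -2.3, 5)

train_scores, test_scores = validation_curve(
    SVC(), X, y, param_name='gamma', param_range=param_range,
    cv=10, scoring='neg_mean_squared_error')

# Flip the sign: negative MSE scores become MSE losses (lower is better)
train_loss_mean = -train_scores.mean(axis=1)
test_loss_mean = -test_scores.mean(axis=1)

# Validation loss minus training loss; a large gap hints at overfitting
gap = test_loss_mean - train_loss_mean

# Assumed selection rule: the gamma with the lowest mean cross-validation loss
best_idx = int(np.argmin(test_loss_mean))
best_gamma = param_range[best_idx]

for g, tr, te, d in zip(param_range, train_loss_mean, test_loss_mean, gap):
    print(f"gamma={g:.2e}  train={tr:.3f}  cv={te:.3f}  gap={d:.3f}")
print("gamma with the lowest cross-validation loss:", best_gamma)
```

In practice you might prefer a slightly smaller gamma whose CV loss is nearly as low but whose train/CV gap is smaller. This trades a little accuracy for less overfitting.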