RAID 0, 1, 5, and 10 basics

RAID 0: fast reads and writes, but no redundancy (losing any one member loses the whole array)

1. Add one 5 GB disk

2. Create two 1 GB partitions on it

3. Configure a local yum repository

[root@localhost ~]# cat /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

gpgcheck=0

enabled=1
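The repository file above can be generated with a here-document instead of being typed in an editor. This is only a minimal sketch: `write_local_repo` is a hypothetical helper name, the section ID is spelled `centos` (the ID is an arbitrary label), and the demo writes to a temporary file rather than to /etc/yum.repos.d/local.repo.

```shell
# write_local_repo is a hypothetical helper; it writes the repo
# definition from this step to the path given as $1.
write_local_repo() {
    cat > "$1" <<'EOF'
[centos]
name=centos
baseurl=file:///opt/centos
gpgcheck=0
enabled=1
EOF
}

# Demo against a temporary file so that nothing under /etc is touched.
repo_file=$(mktemp)
write_local_repo "$repo_file"
cat "$repo_file"
```

On the real system the target would be /etc/yum.repos.d/local.repo, written as root.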

4. Mount the installation media

[root@localhost ~]# mkdir /opt/centos

[root@localhost ~]# mount /dev/cdrom /opt/centos

mount: /dev/sr0 is write-protected, mounting read-only

5. Install mdadm

[root@localhost ~]# yum install mdadm -y

6. Help output

[root@localhost ~]# mdadm --help-options

Any parameter that does not start with '-' is treated as a device name

or, for --examine-bitmap, a file name.

The first such name is often the name of an md device. Subsequent

names are often names of component devices.

Some common options are:

--help -h : General help message or, after above option,

mode specific help message

--help-options : This help message

--version -V : Print version information for mdadm

--verbose -v : Be more verbose about what is happening

--quiet -q : Don't print un-necessary messages

--brief -b : Be less verbose, more brief

--export -Y : With --detail, --detail-platform or --examine use

key=value format for easy import into environment

--force -f : Override normal checks and be more forceful

--assemble -A : Assemble an array

--build -B : Build an array without metadata

--create -C : Create a new array

--detail -D : Display details of an array

--examine -E : Examine superblock on an array component

--examine-bitmap -X: Display the detail of a bitmap file

--examine-badblocks: Display list of known bad blocks on device

--monitor -F : monitor (follow) some arrays

--grow -G : resize/ reshape and array

--incremental -I : add/remove a single device to/from an array as appropriate

--query -Q : Display general information about how a

device relates to the md driver

--auto-detect : Start arrays auto-detected by the kernel

[root@localhost ~]# mdadm -C --help

--level= -l : raid level: 0,1,4,5,6,10,linear,multipath and synonyms

--raid-devices= -n : number of active devices in array

7. Create the array

[root@localhost ~]# mdadm -C /dev/md0 -l 0 -n 2 /dev/sdb[1-2]

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

-C /dev/...: name of the RAID device to create

-l: RAID level

-n: number of active member devices
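To show how the three options fit together, here is a small dry-run sketch. `mdadm_create_cmd` is a hypothetical helper, not part of mdadm: it only prints the command line it would build and derives the `-n` value from the number of member devices passed in.

```shell
# mdadm_create_cmd is a hypothetical helper: it only prints the command,
# it does not run mdadm.
# usage: mdadm_create_cmd <md-device> <level> <member>...
mdadm_create_cmd() {
    md_dev=$1
    level=$2
    shift 2
    # $# is now the number of member devices, which becomes -n
    echo "mdadm -C $md_dev -l $level -n $# $*"
}

mdadm_create_cmd /dev/md0 0 /dev/sdb1 /dev/sdb2
```

Deriving `-n` from the argument count avoids a mismatch between the stated device count and the devices actually listed.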

8. Query the array

(1) Show the status of all running RAID arrays

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdb2[1] sdb1[0]

2095104 blocks super 1.2 512k chunks

unused devices: <none>
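For scripting, the array lines in this output can be picked out with awk. The following is a rough sketch only: `list_md_arrays` is a hypothetical helper, and the sample text is based on the output above so the snippet can run without a real array.

```shell
# list_md_arrays is a hypothetical helper: prints "<name> <state> <level>"
# for each array line found in mdstat-formatted text on stdin.
list_md_arrays() {
    awk '$1 ~ /^md[0-9]+$/ && $2 == ":" { print $1, $3, $4 }'
}

# Sample text based on the output of this step; on a real system use
#   list_md_arrays < /proc/mdstat
sample='Personalities : [raid0]
md0 : active raid0 sdb2[1] sdb1[0]
      2095104 blocks super 1.2 512k chunks
unused devices: <none>'

printf '%s\n' "$sample" | list_md_arrays
```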

(2) Show detailed information for this array

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Tue Dec 29 01:14:34 2020

Raid Level : raid0

Array Size : 2095104 (2046.34 MiB 2145.39 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Dec 29 01:14:34 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : 290ea952:19e9d34d:403013e1:b9119c5f

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 18 1 active sync /dev/sdb2

(3) Look up the UUID (-Ds is short for --detail --scan)

[root@localhost ~]# mdadm -Ds

ARRAY /dev/md0 metadata=1.2 name=localhost.localdomain:0 UUID=290ea952:19e9d34d:403013e1:b9119c5f
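If only the UUID is needed, it can be cut out of the ARRAY line. A minimal sketch, assuming the line format shown above; `array_uuid` is a hypothetical helper name.

```shell
# array_uuid is a hypothetical helper: prints the UUID= value from each
# ARRAY line read on stdin.
array_uuid() {
    sed -n 's/.*UUID=\([0-9a-f:]*\).*/\1/p'
}

# Line copied from the output of this step; on a real system use
#   mdadm -Ds | array_uuid
line='ARRAY /dev/md0 metadata=1.2 name=localhost.localdomain:0 UUID=290ea952:19e9d34d:403013e1:b9119c5f'
printf '%s\n' "$line" | array_uuid
```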

9. Partition, format, and mount the RAID 0 array

(1) Create a 1 GB partition

[root@localhost ~]# fdisk /dev/md0

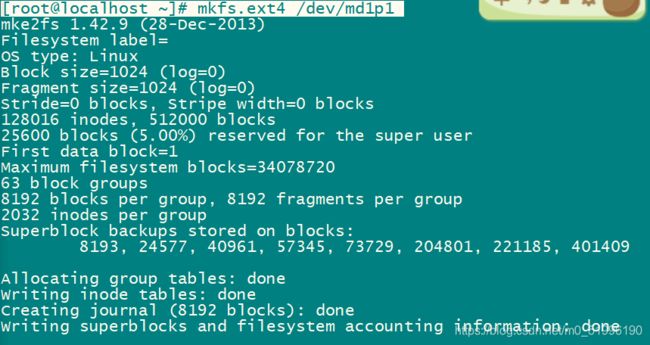

(2) Format

[root@localhost ~]# mkfs.ext4 /dev/md0p1

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

65536 inodes, 262144 blocks

13107 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=268435456

8 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

(3) Mount

[root@localhost ~]# mkdir /opt/md0

[root@localhost ~]# mount /dev/md0p1 /opt/md0

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 18G 873M 17G 5% /

devtmpfs 903M 0 903M 0% /dev

tmpfs 913M 0 913M 0% /dev/shm

tmpfs 913M 8.5M 904M 1% /run

tmpfs 913M 0 913M 0% /sys/fs/cgroup

/dev/sda1 497M 125M 373M 25% /boot

tmpfs 183M 0 183M 0% /run/user/0

/dev/sr0 4.1G 4.1G 0 100% /opt/centos

/dev/md0p1 976M 2.6M 907M 1% /opt/md0

RAID 1 (mirroring: improves reliability and can speed up reads, but not writes. Drawback: low disk utilization, only 50% with two disks)
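The capacity trade-off between the levels in these notes comes down to simple arithmetic. The sketch below is an approximation under stated assumptions: all members are the same size, RAID 10 keeps two copies, and metadata overhead is ignored (which is why real arrays come out slightly smaller). `raid_usable_mib` is a hypothetical helper.

```shell
# raid_usable_mib is a hypothetical helper.
# usage: raid_usable_mib <level> <active-devices> <member-size-MiB>
raid_usable_mib() {
    level=$1 n=$2 size=$3
    case $level in
        0)  echo $(( n * size )) ;;         # striping: all capacity is usable
        1)  echo "$size" ;;                  # mirroring: one member's worth
        5)  echo $(( (n - 1) * size )) ;;    # one member's worth goes to parity
        10) echo $(( n * size / 2 )) ;;      # assumes 2 copies of every chunk
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable_mib 0 2 1024
raid_usable_mib 1 2 1024
raid_usable_mib 5 3 1024
raid_usable_mib 10 4 1024
```

Hot spares are not counted in the active-device argument, since they hold no data until a member fails.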

1. Partition

[root@localhost ~]# fdisk /dev/sdc (three 1 GB partitions)

2. Create the array (-C /dev/...: RAID device name, -l: RAID level, -n: number of active devices, -x: number of hot spares)

[root@localhost ~]# mdadm -C /dev/md1 -l 1 -n 2 -x 1 /dev/sdc[1-3]

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

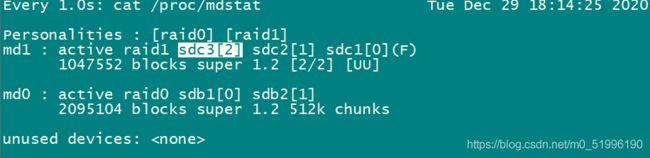

3. Show the status of all running RAID arrays ((S) marks a spare device)

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc3[2](S) sdc2[1] sdc1[0]

1047552 blocks super 1.2 [2/2] [UU]

md0 : active raid0 sdb1[0] sdb2[1]

2095104 blocks super 1.2 512k chunks

unused devices: <none>

4. Partition md1 (one 500 MB partition)

[root@localhost ~]# fdisk /dev/md1

5. Format and mount

[root@localhost ~]# mkfs.ext4 /dev/md1p1

[root@localhost ~]# mkdir /opt/md1

[root@localhost ~]# mount /dev/md1p1 /opt/md1

[root@localhost ~]# df -h

6. Simulate a failure:

(1) First watch the device status live (watch -n 1 reruns the command every second)

[root@localhost ~]# watch -n 1 cat /proc/mdstat

(2) Mark /dev/sdc1 as faulty

[root@localhost ~]# mdadm -f /dev/md1 /dev/sdc1

mdadm: set /dev/sdc1 faulty in /dev/md1

(3) Remove the faulty device and check the result

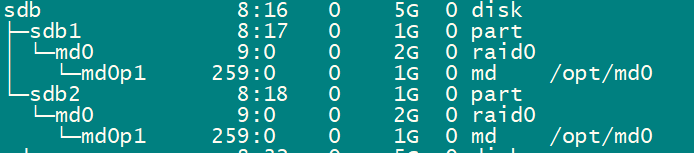

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 500M 0 part /boot

└─sda2 8:2 0 19.5G 0 part

├─centos-root 253:0 0 17.5G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0

│ └─md0p1 259:0 0 1G 0 md /opt/md0

└─sdb2 8:18 0 1G 0 part

└─md0 9:0 0 2G 0 raid0

└─md0p1 259:0 0 1G 0 md /opt/md0

sdc 8:32 0 5G 0 disk

├─sdc1 8:33 0 1G 0 part

│ └─md1 9:1 0 1023M 0 raid1

│ └─md1p1 259:1 0 500M 0 md /opt/md1

├─sdc2 8:34 0 1G 0 part

│ └─md1 9:1 0 1023M 0 raid1

│ └─md1p1 259:1 0 500M 0 md /opt/md1

└─sdc3 8:35 0 1G 0 part

└─md1 9:1 0 1023M 0 raid1

└─md1p1 259:1 0 500M 0 md /opt/md1

sdd 8:48 0 5G 0 disk

sr0 11:0 1 4G 0 rom

[root@localhost ~]# mdadm -r /dev/md1 /dev/sdc1

mdadm: hot removed /dev/sdc1 from /dev/md1

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc3[2] sdc2[1]

1047552 blocks super 1.2 [2/2] [UU]

md0 : active raid0 sdb1[0] sdb2[1]

2095104 blocks super 1.2 512k chunks

unused devices: <none>
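In the status field, each `U` is a working member and `_` would mark a missing one, so `[UU]` here means the spare has already taken over. A rough sketch for spotting degraded arrays in mdstat-formatted text follows; `degraded_arrays` is a hypothetical helper, and the degraded sample is made up for illustration, it is not output from this lab.

```shell
# degraded_arrays is a hypothetical helper: prints the name of every array
# whose status field (e.g. [UU] or [_U]) contains "_", a missing member.
degraded_arrays() {
    awk '
        $1 ~ /^md[0-9]+$/ && $2 == ":" { name = $1 }
        $NF ~ /^\[[U_]+\]$/ && $NF ~ /_/ { print name }
    '
}

# Healthy sample, based on the output of this step.
healthy='md1 : active raid1 sdc3[2] sdc2[1]
      1047552 blocks super 1.2 [2/2] [UU]'

# Invented sample: the same array with one member missing.
degraded='md1 : active raid1 sdc2[1]
      1047552 blocks super 1.2 [2/1] [_U]'

printf '%s\n' "$healthy"  | degraded_arrays   # prints nothing
printf '%s\n' "$degraded" | degraded_arrays   # prints md1
```

On a real system the input would be /proc/mdstat itself.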

RAID 5

1. Partition (three active partitions plus one hot spare)

[root@localhost ~]# fdisk /dev/sdd

[root@localhost ~]# fdisk /dev/sdb (1 GB partition)

[root@localhost ~]# lsblk

Refresh the partition table

[root@localhost ~]# partprobe

Warning: Unable to open /dev/sr0 read-write (Read-only file system). /dev/sr0 has been opened read-only.

Warning: Unable to open /dev/sr0 read-write (Read-only file system). /dev/sr0 has been opened read-only.

Warning: Unable to open /dev/sr0 read-write (Read-only file system). /dev/sr0 has been opened read-only.

2. Create the array (-C /dev/...: RAID device name, -l: RAID level, -n: number of active devices, -x: number of hot spares)

[root@localhost ~]# mdadm -C /dev/md5 -l 5 -n 3 -x 1 /dev/sdd[1-4]

Add /dev/sdb3 to /dev/md5

[root@localhost ~]# mdadm -a /dev/md5 /dev/sdb3

mdadm: added /dev/sdb3

[root@localhost ~]# fdisk /dev/md5 (1 GB partition)

3. Format and mount

[root@localhost ~]# mkfs.ext4 /dev/md5p1

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

65536 inodes, 262144 blocks

13107 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=268435456

8 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

[root@localhost ~]# mkdir /opt/md5

[root@localhost ~]# mount /dev/md5p1 /opt/md5

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 18G 873M 17G 5% /

devtmpfs 903M 0 903M 0% /dev

tmpfs 913M 0 913M 0% /dev/shm

tmpfs 913M 8.6M 904M 1% /run

tmpfs 913M 0 913M 0% /sys/fs/cgroup

/dev/sda1 497M 125M 373M 25% /boot

tmpfs 183M 0 183M 0% /run/user/0

/dev/md0p1 976M 2.6M 907M 1% /opt/md0

/dev/md1p1 477M 2.3M 445M 1% /opt/md1

/dev/md5p1 976M 2.6M 907M 1% /opt/md5

4. Simulate a failure:

(1) First watch the device status live (watch -n 1 reruns the command every second)

[root@localhost ~]# watch -n 1 cat /proc/mdstat

(2) Mark /dev/sdd1 as faulty

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdd1

mdadm: set /dev/sdd1 faulty in /dev/md5

(3) Remove the faulty device and check the result

[root@localhost ~]# mdadm -r /dev/md5 /dev/sdd1

mdadm: hot removed /dev/sdd1 from /dev/md5

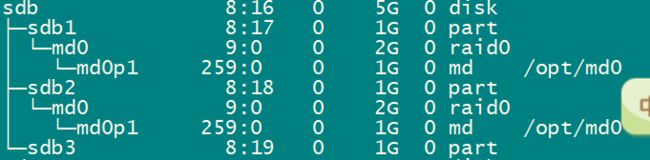

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 500M 0 part /boot

└─sda2 8:2 0 19.5G 0 part

├─centos-root 253:0 0 17.5G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0

│ └─md0p1 259:0 0 1G 0 md /opt/md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0

│ └─md0p1 259:0 0 1G 0 md /opt/md0

├─sdb3 8:19 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

│ └─md5p1 259:3 0 1G 0 md /opt/md5

└─sdb4 8:20 0 1G 0 part

sdd 8:48 0 5G 0 disk

├─sdd1 8:49 0 1G 0 part

├─sdd2 8:50 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

│ └─md5p1 259:3 0 1G 0 md /opt/md5

├─sdd3 8:51 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

│ └─md5p1 259:3 0 1G 0 md /opt/md5

└─sdd4 8:52 0 1G 0 part

└─md5 9:5 0 2G 0 raid5

└─md5p1 259:3 0 1G 0 md /opt/md5

sr0 11:0 1 4G 0 rom

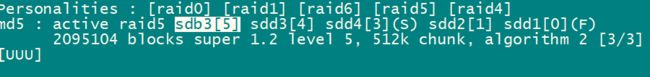

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdb3[5] sdd3[4] sdd2[1]

2095104 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

Removing a RAID array

Check what is mounted

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 18G 873M 17G 5% /

devtmpfs 903M 0 903M 0% /dev

tmpfs 913M 0 913M 0% /dev/shm

tmpfs 913M 8.6M 904M 1% /run

tmpfs 913M 0 913M 0% /sys/fs/cgroup

/dev/sda1 497M 125M 373M 25% /boot

tmpfs 183M 0 183M 0% /run/user/0

/dev/md0p1 976M 2.6M 907M 1% /opt/md0

/dev/md1p1 477M 2.3M 445M 1% /opt/md1

/dev/md5p1 976M 2.6M 907M 1% /opt/md5

Unmount /dev/md0p1

[root@localhost ~]# umount /dev/md0p1

Check which disks or partitions belong to the array

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Tue Dec 29 01:14:34 2020

Raid Level : raid0

Array Size : 2095104 (2046.34 MiB 2145.39 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Dec 29 01:14:34 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : 290ea952:19e9d34d:403013e1:b9119c5f

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 18 1 active sync /dev/sdb2

Stop the array

[root@localhost ~]# mdadm -S /dev/md0

mdadm: stopped /dev/md0

Clear the RAID superblock

[root@localhost ~]# mdadm --help

mdadm is used for building, managing, and monitoring

Linux md devices (aka RAID arrays)

Usage: mdadm --create device options...

Create a new array from unused devices.

mdadm --assemble device options...

Assemble a previously created array.

mdadm --build device options...

Create or assemble an array without metadata.

mdadm --manage device options...

make changes to an existing array.

mdadm --misc options... devices

report on or modify various md related devices.

mdadm --grow options device

resize/reshape an active array

mdadm --incremental device

add/remove a device to/from an array as appropriate

mdadm --monitor options...

Monitor one or more array for significant changes.

mdadm device options...

Shorthand for --manage.

Any parameter that does not start with '-' is treated as a device name

or, for --examine-bitmap, a file name.

The first such name is often the name of an md device. Subsequent

names are often names of component devices.

For detailed help on the above major modes use --help after the mode

e.g.

mdadm --assemble --help

For general help on options use

mdadm --help-options

[root@localhost ~]# mdadm --misc --help

Usage: mdadm misc_option devices...

This usage is for performing some task on one or more devices, which

may be arrays or components, depending on the task.

The --misc option is not needed (though it is allowed) and is assumed

if the first argument in a misc option.

Options that are valid with the miscellaneous mode are:

--query -Q : Display general information about how a

device relates to the md driver

--detail -D : Display details of an array

--detail-platform : Display hardware/firmware details

--examine -E : Examine superblock on an array component

--examine-bitmap -X: Display contents of a bitmap file

--examine-badblocks: Display list of known bad blocks on device

--zero-superblock : erase the MD superblock from a device.

--run -R : start a partially built array

--stop -S : deactivate array, releasing all resources

--readonly -o : mark array as readonly

--readwrite -w : mark array as readwrite

--test -t : exit status 0 if ok, 1 if degrade, 2 if dead, 4 if missing

--wait -W : wait for resync/rebuild/recovery to finish

--action= : initiate or abort ('idle' or 'frozen') a 'check' or 'repair'.

[root@localhost ~]# mdadm --misc --zero-superblock /dev/sdb[1-2]
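The removal steps above have to happen in a fixed order: unmount, stop the array, then wipe the member superblocks. The sketch below only prints that sequence as a dry run; `raid_teardown_plan` is a hypothetical helper and runs none of the commands itself.

```shell
# raid_teardown_plan is a hypothetical helper: prints the commands in
# order, it does not run them.
# usage: raid_teardown_plan <mounted-partition> <md-device> <member>...
raid_teardown_plan() {
    part=$1
    md_dev=$2
    shift 2
    echo "umount $part"
    echo "mdadm -S $md_dev"
    echo "mdadm --misc --zero-superblock $*"
}

raid_teardown_plan /dev/md0p1 /dev/md0 /dev/sdb1 /dev/sdb2
```

Printing the plan first makes it easy to review the device names before anything destructive is run.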

RAID 10

Mark the device as faulty and remove it

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdb3

mdadm: set /dev/sdb3 faulty in /dev/md5

[root@localhost ~]# mdadm -r /dev/md5 /dev/sdb3

mdadm: hot removed /dev/sdb3 from /dev/md5

Check the partitions

[root@localhost ~]# lsblk

sdb 8:16 0 5G 0 disk

├─sdb1 8:17 0 1G 0 part

├─sdb2 8:18 0 1G 0 part

└─sdb3 8:19 0 1G 0 part

Partition the disk (then check the partitions again)

[root@localhost ~]# fdisk /dev/sdb

[root@localhost ~]# lsblk

sdb 8:16 0 5G 0 disk

├─sdb1 8:17 0 1G 0 part

├─sdb2 8:18 0 1G 0 part

├─sdb3 8:19 0 1G 0 part

└─sdb4 8:20 0 1G 0 part

Create the RAID 10 array

[root@localhost ~]# mdadm -C /dev/md10 -l 10 -n 4 /dev/sdb[1-4]

mdadm: /dev/sdb3 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Dec 29 19:23:17 2020

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 500M 0 part /boot

└─sda2 8:2 0 19.5G 0 part

├─centos-root 253:0 0 17.5G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md10 9:10 0 2G 0 raid10

│ └─md10p1 259:0 0 1G 0 md

├─sdb2 8:18 0 1G 0 part

│ └─md10 9:10 0 2G 0 raid10

│ └─md10p1 259:0 0 1G 0 md

├─sdb3 8:19 0 1G 0 part

│ └─md10 9:10 0 2G 0 raid10

│ └─md10p1 259:0 0 1G 0 md

└─sdb4 8:20 0 1G 0 part

└─md10 9:10 0 2G 0 raid10

└─md10p1 259:0 0 1G 0 md

Show the status of all running RAID arrays

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md10 : active raid10 sdb4[3] sdb3[2] sdb2[1] sdb1[0]

2095104 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]

md5 : active raid5 sdd3[4] sdd4[3] sdd2[1]

2095104 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid1 sdc3[2] sdc2[1]

1047552 blocks super 1.2 [2/2] [UU]

unused devices: <none>

Show detailed information

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Tue Dec 29 20:15:27 2020

Raid Level : raid10

Array Size : 2095104 (2046.34 MiB 2145.39 MB)

Used Dev Size : 1047552 (1023.17 MiB 1072.69 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Dec 29 20:15:38 2020

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Name : localhost.localdomain:10 (local to host localhost.localdomain)

UUID : 909aecc0:5eb3cbc1:331b0792:616b1486

Events : 17

Number Major Minor RaidDevice State

0 8 17 0 active sync set-A /dev/sdb1

1 8 18 1 active sync set-B /dev/sdb2

2 8 19 2 active sync set-A /dev/sdb3

3 8 20 3 active sync set-B /dev/sdb4
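In the table above the set-A / set-B labels alternate with the RaidDevice index. The sketch below reproduces that pattern for this four-device, near=2 array only; `raid10_near2_set` is a hypothetical helper and the even/odd rule is an assumption read off this output, not a general description of how mdadm labels devices.

```shell
# raid10_near2_set is a hypothetical helper for the near=2 layout only.
# usage: raid10_near2_set <RaidDevice-index>
raid10_near2_set() {
    if [ $(( $1 % 2 )) -eq 0 ]; then
        echo set-A
    else
        echo set-B
    fi
}

for idx in 0 1 2 3; do
    echo "RaidDevice $idx: $(raid10_near2_set "$idx")"
done
```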

Command summary:

Create (--create / -C, --level / -l, --raid-devices / -n)

[root@localhost ~]# mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/sdc1 /dev/sdd1

Mark as faulty (--fail / -f)

[root@localhost aaa]# mdadm /dev/md1 --fail /dev/sdc1

Remove (--remove / -r)

[root@localhost aaa]# mdadm /dev/md1 --remove /dev/sdc1

Add (--add / -a)

[root@localhost aaa]# mdadm /dev/md1 --add /dev/sde1

Show detailed information (--detail / -D)

[root@localhost aaa]# mdadm --detail /dev/md1

Stop (--stop / -S)

[root@localhost ~]# mdadm --stop /dev/md0

Clear the RAID superblock

[root@localhost ~]# mdadm --help

[root@localhost ~]# mdadm --misc --help

[root@localhost ~]# mdadm --misc --zero-superblock /dev/sdb[1-2]