A Hands-on Data Analysis Project Based on Lending Club Data [Beginner's Notes] [Part 1]

This project uses the Lending Club dataset [dataset: https://github.com/H-Freax/lendingclub_analyse/data/]

The project runs in a Colab environment.

Table of Contents

- Introduction
- Environment setup
- Loading the data
- Inspecting/analyzing basic data information
- BaseLine
  - data preprocessing
  - Algorithm
  - Train
  - Test
- Adding derived variables
  - CatBoostEncoder
    - data preprocessing
    - Algorithm
    - Train
    - Test
  - Discretization
    - Clustering-based discretization of continuous_open_acc
      - data preprocessing
      - Algorithm
      - Train
      - Test
    - Switching to exponential-interval binning for continuous_loan_amnt
      - data preprocessing
      - Algorithm
      - Train
      - Test
  - Derived variables based on business logic
    - data preprocessing
    - Algorithm
    - Train
    - Test

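Introduction

This data analysis project has two parts. Part 1 builds a LightGBM-based baseline and tries three ways of adding derived variables. Together these produce four groups of derived variables that improve the results. Part 2 covers analysis with machine learning methods and deep learning networks. It also puts into practice ensembling of the machine learning methods and fusing deep learning networks with machine learning methods.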

Environment setup

This project uses LightGBM as the baseline model.

First, import the required packages:

import lightgbm as lgb
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold
from sklearn.metrics import accuracy_score

Loading the data

seed = 42  # fixed seed so that every experiment below uses the same fold split
kf = KFold(n_splits=5, random_state=seed, shuffle=True)

df_train = pd.read_csv('train_final.csv')
df_test = pd.read_csv('test_final.csv')
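`KFold` is created with `shuffle=True` and an integer `random_state`. That means every later call to `kf.split` reproduces exactly the same folds, and each section below relies on this when it calls `kf.split` again. The quick check below runs on 10 toy rows and assumes only scikit-learn and NumPy:

```python
import numpy as np
from sklearn.model_selection import KFold

seed = 42
X_toy = np.arange(20).reshape(10, 2)  # 10 toy rows, 2 columns

kf = KFold(n_splits=5, random_state=seed, shuffle=True)
first = [eval_idx.tolist() for _, eval_idx in kf.split(X_toy)]
second = [eval_idx.tolist() for _, eval_idx in kf.split(X_toy)]

# Same integer seed -> identical folds on every call to split()
print(first == second)
# Every row lands in exactly one evaluation fold
print(sorted(i for fold in first for i in fold) == list(range(10)))
```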

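Inspecting/analyzing basic data information

View the basic statistics of df_train:

df_train.describe()

To set the one-hot encoded columns aside during the analysis, first list all column names:

df_train.columns.values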

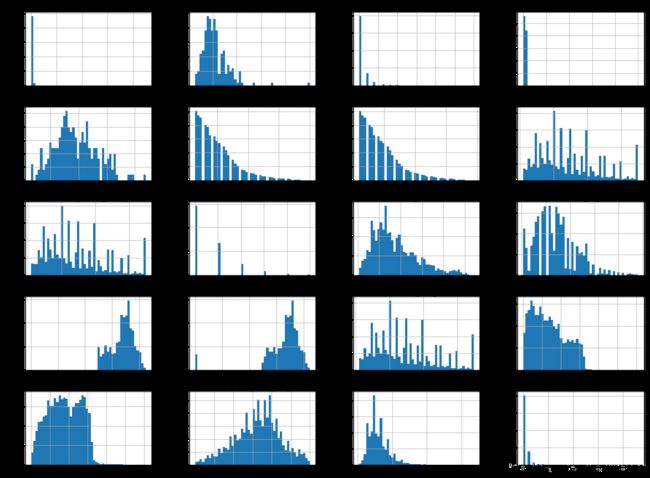

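Exclude the one-hot encoded variables (and the label loan_status), then plot histograms of the remaining columns to look for patterns: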

import matplotlib.pyplot as plt

# One-hot columns to leave out of the plots, plus the label loan_status.
# Produces exactly the same names, in the same order, as writing them out one by one.
onehotlabels = (
    [f'discrete_addr_state_{i}_one_hot' for i in range(1, 50)]
    + [f'discrete_application_type_{i}_one_hot' for i in range(1, 3)]
    + [f'discrete_emp_length_{i}_one_hot' for i in range(1, 13)]
    + [f'discrete_grade_{i}_one_hot' for i in range(1, 8)]
    + [f'discrete_home_ownership_{i}_one_hot' for i in range(1, 5)]
    + ['discrete_policy_code_1_one_hot']
    + [f'discrete_purpose_{i}_one_hot' for i in range(1, 13)]
    + ['discrete_pymnt_plan_1_one_hot']
    + [f'discrete_sub_grade_{i}_one_hot' for i in range(1, 36)]
    + [f'discrete_term_{i}_one_hot' for i in range(1, 3)]
    + ['loan_status']
)

showdf_train = df_train.drop(columns=onehotlabels)
showdf_train.hist(bins=50, figsize=(20, 15))
plt.show()
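Listing every one-hot column by hand is error-prone. Every name in the list above ends in `_one_hot` except the label. Assuming no other column in df_train shares that suffix, the list can be derived from the column names instead. The helper `onehot_columns` below is hypothetical, and the toy frame only stands in for df_train:

```python
import pandas as pd

def onehot_columns(df, suffix='_one_hot', extra=('loan_status',)):
    """Return the columns ending in `suffix`, plus any `extra` columns present in df."""
    cols = [c for c in df.columns if c.endswith(suffix)]
    return cols + [c for c in extra if c in df.columns]

# Toy frame standing in for df_train
toy = pd.DataFrame({
    'continuous_loan_amnt': [1000, 5000],
    'discrete_grade_1_one_hot': [1, 0],
    'discrete_grade_2_one_hot': [0, 1],
    'loan_status': [0, 1],
})
to_drop = onehot_columns(toy)
print(to_drop)                                  # the two one-hot columns and loan_status
print(toy.drop(columns=to_drop).columns.tolist())  # ['continuous_loan_amnt']
```

On the real data this would read `showdf_train = df_train.drop(columns=onehot_columns(df_train))`.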

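continuous_fico_range and continuous_last_fico_range each come as a lower/upper bound pair, and the two bounds are highly correlated. So drop the high columns before the further visual analysis:

from pandas.plotting import scatter_matrix

scatter_matrix(showdf_train.drop(columns=['continuous_fico_range_high', 'continuous_last_fico_range_high']), figsize=(40, 35))
plt.show()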
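The claim that the two bounds are highly correlated can be checked before one side is dropped. The sketch below uses a hypothetical helper, `highly_correlated_pairs`, which lists column pairs whose absolute Pearson correlation exceeds a threshold. The 0.95 threshold is an arbitrary illustrative choice. The toy data is made up: a score stored as a fixed-width range, plus an unrelated column.

```python
import numpy as np
import pandas as pd

def highly_correlated_pairs(df, threshold=0.95):
    """List (col_a, col_b, |corr|) for column pairs with |Pearson corr| >= threshold."""
    corr = df.corr().abs()
    cols = corr.columns
    pairs = []
    for i in range(len(cols)):
        for j in range(i + 1, len(cols)):  # upper triangle only, skip the diagonal
            if corr.iloc[i, j] >= threshold:
                pairs.append((cols[i], cols[j], corr.iloc[i, j]))
    return pairs

# Toy data: a fixed-width range (high = low + 4) and an independent noise column
rng = np.random.default_rng(0)
low = rng.integers(660, 850, size=1000).astype(float)
toy = pd.DataFrame({'range_low': low, 'range_high': low + 4, 'noise': rng.normal(size=1000)})
print(highly_correlated_pairs(toy))  # one pair: range_low / range_high, correlation ~1.0
```

With the real data, something like `highly_correlated_pairs(showdf_train)` would list every such pair, not just the FICO bounds.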

BaseLine

data preprocessing

X_train = df_train.drop(columns=['loan_status']).values
Y_train = df_train['loan_status'].values.astype(int)
X_test = df_test.drop(columns=['loan_status']).values
Y_test = df_test['loan_status'].values.astype(int)

# split data for five fold
five_fold_data = []
for train_index, eval_index in kf.split(X_train):
    x_train, x_eval = X_train[train_index], X_train[eval_index]
    y_train, y_eval = Y_train[train_index], Y_train[eval_index]
    five_fold_data.append([(x_train, y_train), (x_eval, y_eval)])

X_train.shape, Y_train.shape
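Every experiment below repeats these same preprocessing lines with a different pair of DataFrames. A hypothetical helper, `make_five_fold` (not part of the original code), could build the arrays and folds in one call. It uses the same seed and fold layout as `kf` above. The toy frames only stand in for df_train/df_test.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold

def make_five_fold(train_df, test_df, label='loan_status', seed=42, n_splits=5):
    """Return X_train, Y_train, X_test, Y_test and a list of [(x_tr, y_tr), (x_ev, y_ev)] folds."""
    X_train = train_df.drop(columns=[label]).values
    Y_train = train_df[label].values.astype(int)
    X_test = test_df.drop(columns=[label]).values
    Y_test = test_df[label].values.astype(int)
    kf = KFold(n_splits=n_splits, random_state=seed, shuffle=True)
    folds = [[(X_train[tr], Y_train[tr]), (X_train[ev], Y_train[ev])]
             for tr, ev in kf.split(X_train)]
    return X_train, Y_train, X_test, Y_test, folds

toy_train = pd.DataFrame({'f1': np.arange(10.0), 'loan_status': [0, 1] * 5})
toy_test = pd.DataFrame({'f1': [0.5, 1.5], 'loan_status': [1, 0]})
X_train, Y_train, X_test, Y_test, folds = make_five_fold(toy_train, toy_test)
print(len(folds), X_train.shape, X_test.shape)  # 5 (10, 1) (2, 1)
```

For example, `X_train, Y_train, X_test, Y_test, five_fold_data = make_five_fold(train_CBE, test_CBE)` would replace one of the later preprocessing blocks. get_model reads the global `five_fold_data`, so the name matters.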

Algorithm

def get_model(param):
    # Train one LightGBM model per fold; the eval fold is passed as a validation set
    # (its metric is reported during training, but no early stopping is configured)
    model_list = []
    for idx, [(x_train, y_train), (x_eval, y_eval)] in enumerate(five_fold_data):
        print('{}-th model is training:'.format(idx))
        train_data = lgb.Dataset(x_train, label=y_train)
        validation_data = lgb.Dataset(x_eval, label=y_eval)
        bst = lgb.train(param, train_data, valid_sets=[validation_data])
        model_list.append(bst)
    return model_list

Train

# 'num_round' is a LightGBM alias of num_iterations; 'metric': 'binary' is an alias of binary_logloss
param_base = {
    'num_leaves': 31, 'objective': 'binary', 'metric': 'binary', 'num_round': 1000}

# LightGBM only applies bagging_fraction when bagging_freq > 0, which is not set here
param_fine_tuning = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1000,
    'learning_rate': 3e-3, 'feature_fraction': 0.6, 'bagging_fraction': 0.8}

# base param train
param_base_model = get_model(param_base)

# param fine tuning
param_fine_tuning_model = get_model(param_fine_tuning)

Test

def test_model(model_list):
    data = X_test
    five_fold_pred = np.zeros((5, len(X_test)))
    for i, bst in enumerate(model_list):
        ypred = bst.predict(data, num_iteration=bst.best_iteration)
        five_fold_pred[i] = ypred
    # Average the five fold models' predicted probabilities, then threshold at 0.5
    ypred_mean = (five_fold_pred.mean(axis=-2) > 0.5).astype(int)
    return accuracy_score(Y_test, ypred_mean)

base_score = test_model(param_base_model)
fine_tuning_score = test_model(param_fine_tuning_model)

print('base: {}, fine tuning: {}'.format(base_score, fine_tuning_score))

Adding derived variables

CatBoostEncoder

Install and import the required package:

!pip install category_encoders

import category_encoders as ce  # package that provides CatBoostEncoder

# Create the encoder
target_enc = ce.CatBoostEncoder(cols=['continuous_open_acc'])
target_enc.fit(df_train['continuous_open_acc'], df_train['loan_status'])

# Transform the features, rename columns with _cb suffix, and join to dataframe
train_CBE = df_train.join(target_enc.transform(df_train['continuous_open_acc']).add_suffix('_cb'))
test_CBE = df_test.join(target_enc.transform(df_test['continuous_open_acc']).add_suffix('_cb'))
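As I understand the category_encoders documentation, CatBoostEncoder computes its ordered target statistic only when the target is passed to `transform` (or via `fit_transform`). Calling `transform` without `y`, as above, gives every training row the full statistic, and that statistic includes the row's own label. The result is a mild form of target leakage on the training set. The from-scratch pandas sketch below illustrates both statistics. The function names are hypothetical. The smoothing `a=1` with the target mean as prior is an assumption meant to mirror the encoder's defaults, not a copy of its code.

```python
import pandas as pd

def ordered_target_encode(cat, y, a=1.0):
    """CatBoost-style ordered statistic: each row only uses the targets of
    *earlier* rows (in the given order) that share its category."""
    cat = pd.Series(cat).reset_index(drop=True)
    y = pd.Series(y, dtype=float).reset_index(drop=True)
    prior = y.mean()
    grouped = y.groupby(cat)
    prev_sum = grouped.cumsum() - y   # sum of earlier targets in the same category
    prev_cnt = grouped.cumcount()     # number of earlier rows in the same category
    return ((prev_sum + prior * a) / (prev_cnt + a)).to_numpy()

def full_target_encode(train_cat, train_y, new_cat, a=1.0):
    """Full statistic over all training rows; unseen categories fall back to the prior."""
    train_cat = pd.Series(train_cat).reset_index(drop=True)
    train_y = pd.Series(train_y, dtype=float).reset_index(drop=True)
    prior = train_y.mean()
    stats = train_y.groupby(train_cat).agg(['sum', 'count'])
    enc = (stats['sum'] + prior * a) / (stats['count'] + a)
    return pd.Series(new_cat).map(enc).fillna(prior).to_numpy()

cat = [3, 3, 5, 3, 5]   # e.g. continuous_open_acc values
y = [1, 0, 1, 1, 0]     # e.g. loan_status
print(ordered_target_encode(cat, y))           # approx. [0.6, 0.8, 0.6, 0.533, 0.8]
print(full_target_encode(cat, y, [3, 5, 7]))   # approx. [0.65, 0.533, 0.6]
```

The ordered version fits the training rows, because no row sees its own label. The full version fits the test rows.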

data preprocessing

X_train = train_CBE.drop(columns=['loan_status']).values
Y_train = train_CBE['loan_status'].values.astype(int)
X_test = test_CBE.drop(columns=['loan_status']).values
Y_test = test_CBE['loan_status'].values.astype(int)

# split data for five fold
five_fold_data = []
for train_index, eval_index in kf.split(X_train):
    x_train, x_eval = X_train[train_index], X_train[eval_index]
    y_train, y_eval = Y_train[train_index], Y_train[eval_index]
    five_fold_data.append([(x_train, y_train), (x_eval, y_eval)])

Algorithm

def get_model(param):
    model_list = []
    for idx, [(x_train, y_train), (x_eval, y_eval)] in enumerate(five_fold_data):
        print('{}-th model is training:'.format(idx))
        train_data = lgb.Dataset(x_train, label=y_train)
        validation_data = lgb.Dataset(x_eval, label=y_eval)
        bst = lgb.train(param, train_data, valid_sets=[validation_data])
        model_list.append(bst)
    return model_list

Train

param_base = {
    'num_leaves': 31, 'objective': 'binary', 'metric': 'binary', 'num_round': 1000}

param_fine_tuning = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1000,
    'learning_rate': 3e-3, 'feature_fraction': 0.6, 'bagging_fraction': 0.8}

param_fine_tuningfinal = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1200,
    'learning_rate': 3e-3, 'feature_fraction': 0.6, 'bagging_fraction': 0.8}

# base param train
param_base_model = get_model(param_base)

# param fine tuning
param_fine_tuning_model = get_model(param_fine_tuning)
param_fine_tuningfinal_model = get_model(param_fine_tuningfinal)

Test

def test_model(model_list):
    data = X_test
    five_fold_pred = np.zeros((5, len(X_test)))
    for i, bst in enumerate(model_list):
        ypred = bst.predict(data, num_iteration=bst.best_iteration)
        five_fold_pred[i] = ypred
    ypred_mean = (five_fold_pred.mean(axis=-2) > 0.5).astype(int)
    return accuracy_score(Y_test, ypred_mean)

base_score = test_model(param_base_model)
fine_tuning_score = test_model(param_fine_tuning_model)
fine_tuningfinal_score = test_model(param_fine_tuningfinal_model)

print('base: {}, fine tuning: {} , fine tuning final: {}'.format(base_score, fine_tuning_score, fine_tuningfinal_score))

base: 0.91568, fine tuning: 0.91774 , fine tuning final: 0.91796
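Accuracies around 0.916 are easiest to judge against the accuracy of always predicting the most frequent label. That reference point depends on the label balance of the test set. A minimal helper is shown below; the name `majority_class_accuracy` is hypothetical, and the toy labels are not the real Lending Club distribution:

```python
import numpy as np

def majority_class_accuracy(y):
    """Accuracy of a constant classifier that always predicts the most frequent label."""
    y = np.asarray(y).astype(int)
    counts = np.bincount(y)  # occurrences of label 0, 1, ...
    return counts.max() / counts.sum()

toy_labels = [0, 0, 0, 1, 0, 1, 0, 0, 0, 0]
print(majority_class_accuracy(toy_labels))  # 0.8
```

On the real data, `majority_class_accuracy(Y_test)` gives the number each score above should clearly beat.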

Discretization

Clustering-based discretization of continuous_open_acc

First, look at the distinct values of continuous_open_acc:

df_train.groupby('continuous_open_acc')['continuous_open_acc'].unique()

from sklearn.cluster import KMeans  # part of scikit-learn, no separate install needed

ddtrain = df_train['continuous_open_acc']
ddtest = df_test['continuous_open_acc']

# Cluster the training values into 5 groups
data_reshape1 = ddtrain.values.reshape((ddtrain.shape[0], 1))
model_kmeans = KMeans(n_clusters=5, random_state=0)
kmeans_result = model_kmeans.fit_predict(data_reshape1)
traina = kmeans_result

# Caution: this fits a separate KMeans on the test set, so a test cluster ID
# does not necessarily cover the same value range as the same training ID
data_reshape2 = ddtest.values.reshape((ddtest.shape[0], 1))
model_kmeans = KMeans(n_clusters=5, random_state=0)
kmeans_result = model_kmeans.fit_predict(data_reshape2)
testa = kmeans_result

train_KM = df_train.copy()
test_KM = df_test.copy()
train_KM['continuous_open_acc_km'] = traina
test_KM['continuous_open_acc_km'] = testa
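A variant that keeps cluster IDs consistent fits KMeans on the training values only and assigns test values with `predict`. It also renumbers clusters by centroid, so that ID 0 is always the lowest-valued group and the feature becomes ordinal. This is a sketch under those assumptions. The function name `kmeans_bins` is hypothetical, and this is not the code that produced the scores reported in this section.

```python
import numpy as np
from sklearn.cluster import KMeans

def kmeans_bins(train_values, test_values, n_clusters=5, seed=0):
    """Fit 1-D KMeans on training values only; assign test values with the same model.
    Cluster IDs are renumbered so that 0 is the cluster with the smallest centroid."""
    train_2d = np.asarray(train_values, dtype=float).reshape(-1, 1)
    test_2d = np.asarray(test_values, dtype=float).reshape(-1, 1)
    km = KMeans(n_clusters=n_clusters, random_state=seed, n_init=10).fit(train_2d)
    order = np.argsort(km.cluster_centers_.ravel())  # cluster IDs sorted by centroid value
    rank = np.empty_like(order)
    rank[order] = np.arange(n_clusters)              # rank[cluster_id] -> ordinal bin
    return rank[km.labels_], rank[km.predict(test_2d)]

train_bins, test_bins = kmeans_bins([1, 2, 3, 10, 11, 12, 20, 21, 22], [2, 21, 11], n_clusters=3)
print(train_bins, test_bins)  # [0 0 0 1 1 1 2 2 2] [0 2 1]
```

Applied to this section it would read `traina, testa = kmeans_bins(df_train['continuous_open_acc'], df_test['continuous_open_acc'])`.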

data preprocessing

X_train = train_KM.drop(columns=['loan_status']).values
Y_train = train_KM['loan_status'].values.astype(int)
X_test = test_KM.drop(columns=['loan_status']).values
Y_test = test_KM['loan_status'].values.astype(int)

# split data for five fold
five_fold_data = []
for train_index, eval_index in kf.split(X_train):
    x_train, x_eval = X_train[train_index], X_train[eval_index]
    y_train, y_eval = Y_train[train_index], Y_train[eval_index]
    five_fold_data.append([(x_train, y_train), (x_eval, y_eval)])

Algorithm

def get_model(param):
    model_list = []
    for idx, [(x_train, y_train), (x_eval, y_eval)] in enumerate(five_fold_data):
        print('{}-th model is training:'.format(idx))
        train_data = lgb.Dataset(x_train, label=y_train)
        validation_data = lgb.Dataset(x_eval, label=y_eval)
        bst = lgb.train(param, train_data, valid_sets=[validation_data])
        model_list.append(bst)
    return model_list

Train

param_base = {
    'num_leaves': 31, 'objective': 'binary', 'metric': 'binary', 'num_round': 1000}

param_fine_tuning = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1000,
    'learning_rate': 3e-3, 'feature_fraction': 0.8 if False else 0.6, 'bagging_fraction': 0.8}

param_fine_tuningfinal = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 800,
    'learning_rate': 6e-3, 'feature_fraction': 0.8, 'bagging_fraction': 0.6, 'boosting': 'goss',
    # 'tree_learning' is not a LightGBM parameter (the real name is 'tree_learner'),
    # so LightGBM logs an "Unknown parameter" warning and ignores it
    'tree_learning': 'feature', 'max_depth': 20, 'min_sum_hessian_in_leaf': 100}

# base param train
param_base_model = get_model(param_base)

# param fine tuning
param_fine_tuning_model = get_model(param_fine_tuning)
param_fine_tuningfinal_model = get_model(param_fine_tuningfinal)

Test

def test_model(model_list):
    data = X_test
    five_fold_pred = np.zeros((5, len(X_test)))
    for i, bst in enumerate(model_list):
        ypred = bst.predict(data, num_iteration=bst.best_iteration)
        five_fold_pred[i] = ypred
    ypred_mean = (five_fold_pred.mean(axis=-2) > 0.5).astype(int)
    return accuracy_score(Y_test, ypred_mean)

base_score = test_model(param_base_model)
fine_tuning_score = test_model(param_fine_tuning_model)
fine_tuningfinal_score = test_model(param_fine_tuningfinal_model)

print('base: {}, fine tuning: {} , fine tuning final: {}'.format(base_score, fine_tuning_score, fine_tuningfinal_score))

base: 0.91598, fine tuning: 0.91776 , fine tuning final: 0.91874

Switching to exponential-interval binning for continuous_loan_amnt

train_ZQ = df_train.copy()
test_ZQ = df_test.copy()

# Take log10, then round down: each bin spans one order of magnitude
trainbins = np.floor(np.log10(train_ZQ['continuous_loan_amnt']))
testbins = np.floor(np.log10(test_ZQ['continuous_loan_amnt']))

train_ZQ['continuous_loan_amnt_km'] = trainbins
test_ZQ['continuous_loan_amnt_km'] = testbins
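The floor-of-log10 rule maps [100, 1000) to bin 2, [1000, 10000) to bin 3, and so on. Each bin therefore covers a factor of ten. Amounts that vary within a couple of orders of magnitude end up in only a few coarse bins. The rule is undefined for zero or negative amounts. A small worked example is shown below; the helper name `log10_bin` is hypothetical:

```python
import numpy as np

def log10_bin(amounts):
    """Order-of-magnitude bin floor(log10(x)); amounts must be strictly positive."""
    amounts = np.asarray(amounts, dtype=float)
    if np.any(amounts <= 0):
        raise ValueError('log10 binning needs strictly positive amounts')
    return np.floor(np.log10(amounts)).astype(int)

print(log10_bin([500, 999, 1500, 9999, 12000, 35000]))  # [2 2 3 3 4 4]
```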

data preprocessing

X_train = train_ZQ.drop(columns=['loan_status']).values
Y_train = train_ZQ['loan_status'].values.astype(int)
X_test = test_ZQ.drop(columns=['loan_status']).values
Y_test = test_ZQ['loan_status'].values.astype(int)

# split data for five fold
five_fold_data = []
for train_index, eval_index in kf.split(X_train):
    x_train, x_eval = X_train[train_index], X_train[eval_index]
    y_train, y_eval = Y_train[train_index], Y_train[eval_index]
    five_fold_data.append([(x_train, y_train), (x_eval, y_eval)])

Algorithm

def get_model(param):
    model_list = []
    for idx, [(x_train, y_train), (x_eval, y_eval)] in enumerate(five_fold_data):
        print('{}-th model is training:'.format(idx))
        train_data = lgb.Dataset(x_train, label=y_train)
        validation_data = lgb.Dataset(x_eval, label=y_eval)
        bst = lgb.train(param, train_data, valid_sets=[validation_data])
        model_list.append(bst)
    return model_list

Train

param_base = {
    'num_leaves': 31, 'objective': 'binary', 'metric': 'binary', 'num_round': 1000}

param_fine_tuning = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1000,
    'learning_rate': 3e-3, 'feature_fraction': 0.6, 'bagging_fraction': 0.8}

param_fine_tuningfinal = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 900,
    'learning_rate': 7e-3, 'feature_fraction': 0.8, 'bagging_fraction': 0.6, 'max_depth': 20, 'min_sum_hessian_in_leaf': 100}

# base param train
param_base_model = get_model(param_base)

# param fine tuning
param_fine_tuning_model = get_model(param_fine_tuning)
param_fine_tuningfinal_model = get_model(param_fine_tuningfinal)

Test

def test_model(model_list):
    data = X_test
    five_fold_pred = np.zeros((5, len(X_test)))
    for i, bst in enumerate(model_list):
        ypred = bst.predict(data, num_iteration=bst.best_iteration)
        five_fold_pred[i] = ypred
    ypred_mean = (five_fold_pred.mean(axis=-2) > 0.5).astype(int)
    return accuracy_score(Y_test, ypred_mean)

base_score = test_model(param_base_model)
fine_tuning_score = test_model(param_fine_tuning_model)
fine_tuningfinal_score = test_model(param_fine_tuningfinal_model)

print('base: {}, fine tuning: {} , fine tuning final: {}'.format(base_score, fine_tuning_score, fine_tuningfinal_score))

base: 0.91586, fine tuning: 0.91764 , fine tuning final: 0.91842

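Derived variables based on business logic

train_YW = df_train.copy()
test_YW = df_test.copy()

# Monthly installment divided by monthly income (annual income / 12);
# the +1 avoids division by zero when annual income is 0
train_YW['installment_feat'] = train_YW['continuous_installment'] / ((train_YW['continuous_annual_inc'] + 1) / 12)
test_YW['installment_feat'] = test_YW['continuous_installment'] / ((test_YW['continuous_annual_inc'] + 1) / 12)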
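As a worked example, a 300 installment on a 60000 annual income gives 300 / (60001 / 12) ≈ 0.06. In other words, about 6% of monthly income goes to this loan. A zero income does not raise an error. Because of the +1, it instead produces a very large ratio (500 / (1 / 12) = 6000). The toy values below are made up, and `installment_to_income` is a hypothetical helper name:

```python
import pandas as pd

def installment_to_income(installment, annual_inc):
    """Monthly installment divided by monthly income; the +1 avoids division by zero."""
    return installment / ((annual_inc + 1) / 12)

toy = pd.DataFrame({'continuous_installment': [300.0, 500.0],
                    'continuous_annual_inc': [60000.0, 0.0]})
toy['installment_feat'] = installment_to_income(toy['continuous_installment'],
                                                toy['continuous_annual_inc'])
print(toy)
```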

data preprocessing

X_train = train_YW.drop(columns=['loan_status']).values
Y_train = train_YW['loan_status'].values.astype(int)
X_test = test_YW.drop(columns=['loan_status']).values
Y_test = test_YW['loan_status'].values.astype(int)

# split data for five fold
five_fold_data = []
for train_index, eval_index in kf.split(X_train):
    x_train, x_eval = X_train[train_index], X_train[eval_index]
    y_train, y_eval = Y_train[train_index], Y_train[eval_index]
    five_fold_data.append([(x_train, y_train), (x_eval, y_eval)])

Algorithm

def get_model(param):
    model_list = []
    for idx, [(x_train, y_train), (x_eval, y_eval)] in enumerate(five_fold_data):
        print('{}-th model is training:'.format(idx))
        train_data = lgb.Dataset(x_train, label=y_train)
        validation_data = lgb.Dataset(x_eval, label=y_eval)
        bst = lgb.train(param, train_data, valid_sets=[validation_data])
        model_list.append(bst)
    return model_list

Train

param_base = {
    'num_leaves': 31, 'objective': 'binary', 'metric': 'binary', 'num_round': 1000}

param_fine_tuning = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 1000,
    'learning_rate': 3e-3, 'feature_fraction': 0.6, 'bagging_fraction': 0.8}

param_fine_tuningfinal = {
    'num_thread': 8, 'num_leaves': 128, 'metric': 'binary', 'objective': 'binary', 'num_round': 900,
    'learning_rate': 7e-3, 'feature_fraction': 0.8, 'bagging_fraction': 0.6, 'max_depth': 20, 'min_sum_hessian_in_leaf': 100}

# base param train
param_base_model = get_model(param_base)

# param fine tuning
param_fine_tuning_model = get_model(param_fine_tuning)
param_fine_tuningfinal_model = get_model(param_fine_tuningfinal)

Test

def test_model(model_list):
    data = X_test
    five_fold_pred = np.zeros((5, len(X_test)))
    for i, bst in enumerate(model_list):
        ypred = bst.predict(data, num_iteration=bst.best_iteration)
        five_fold_pred[i] = ypred
    ypred_mean = (five_fold_pred.mean(axis=-2) > 0.5).astype(int)
    return accuracy_score(Y_test, ypred_mean)

base_score = test_model(param_base_model)
fine_tuning_score = test_model(param_fine_tuning_model)
fine_tuningfinal_score = test_model(param_fine_tuningfinal_model)

print('base: {}, fine tuning: {} , fine tuning final: {}'.format(base_score, fine_tuning_score, fine_tuningfinal_score))

base: 0.9162, fine tuning: 0.91758 , fine tuning final: 0.91844