Linear Regression with PyTorch

Your first step towards deep learning

In my previous posts we covered:

- Deep Learning: Artificial Neural Network (ANN)

- Tensors: Basics of PyTorch programming

Here we will solve the classic linear regression problem using PyTorch tensors.

1 What is linear regression?

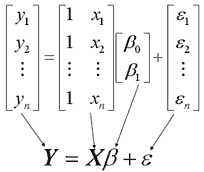

y = Ax + B, where:

- A = slope of the line

- B = bias (the point where the line intersects the y-axis)

- y = target variable

- x = feature variable
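The sketch below makes the roles of A and B concrete by recovering them from a handful of points with the closed-form least-squares formulas for a single input variable. The data points are hypothetical and generated exactly from y = 2x + 1, so the estimates should come back as A = 2 and B = 1. The rest of this article finds the parameters with gradient descent instead. The closed form appears here only for intuition.

```python
import torch

# Hypothetical toy data generated exactly from y = 2x + 1 (no noise)
x = torch.tensor([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2 * x + 1

# Closed-form least-squares estimates for simple linear regression:
# A = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2),  B = mean_y - A * mean_x
x_mean, y_mean = x.mean(), y.mean()
A = ((x - x_mean) * (y - y_mean)).sum() / ((x - x_mean) ** 2).sum()
B = y_mean - A * x_mean

print(f"A (slope) = {A.item():.2f}, B (bias) = {B.item():.2f}")
# prints: A (slope) = 2.00, B (bias) = 1.00
```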

Linear regression matrix (Source: https://online.stat.psu.edu/stat462/node/132/)

1.1 Linear regression example 1:

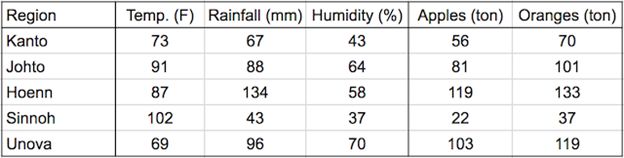

We’ll create a model that predicts crop yields for apples and oranges (target variables) by looking at the average temperature, rainfall and humidity (input variables or features) in a region. Here’s the training data:

(Source: https://www.kaggle.com/aakashns/pytorch-basics-linear-regression-from-scratch)

The model expresses each target as a weighted sum of the input variables plus a bias:

yield_apple = w11 * temp + w12 * rainfall + w13 * humidity + b1

yield_orange = w21 * temp + w22 * rainfall + w23 * humidity + b2
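Stacking both equations for every region turns them into a single matrix product. This is what nn.Linear computes later in this article: it stores its weight with shape (out_features, in_features), so it evaluates X @ W.T + b. The sketch uses the first three rows of the training data. The weight and bias values are hypothetical and chosen only for illustration.

```python
import torch

# One row per region: (temp, rainfall, humidity), first three rows of the training data
X = torch.tensor([[73., 67., 43.],
                  [91., 88., 64.],
                  [87., 134., 58.]])

# Hypothetical weights: row 0 holds (w11, w12, w13), row 1 holds (w21, w22, w23)
W = torch.tensor([[0.3, 0.2, 0.1],
                  [0.1, 0.4, 0.2]])
b = torch.tensor([1.0, 2.0])  # (b1, b2)

# Both equations for all regions at once:
# preds[:, 0] is yield_apple, preds[:, 1] is yield_orange
preds = X @ W.T + b

# The same value written out term by term for the first region's apple yield
yield_apple_0 = 0.3 * 73 + 0.2 * 67 + 0.1 * 43 + 1.0
print(preds.shape)  # torch.Size([3, 2])
print(torch.isclose(preds[0, 0], torch.tensor(yield_apple_0)))
```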

The goal of training a linear regression model is to find values of w and b that make accurate predictions on unseen data. The optimization technique we use is gradient descent, which adjusts the weights by small amounts many times to make better predictions. Below is the matrix representation:

(Source: https://www.kaggle.com/aakashns/pytorch-basics-linear-regression-from-scratch)

1.1.1 Import libraries & load data set:

First we import the necessary libraries. We define the inputs and targets as NumPy arrays and then convert them into tensors.
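Here is a short sketch of the conversion step, using a one-row array for illustration. torch.from_numpy shares memory with the NumPy array, so an in-place change to one shows up in the other. torch.tensor makes an independent copy. Both keep the float32 dtype. This matters because nn.Linear layers hold float32 weights by default, and a float64 input would typically raise a dtype mismatch error.

```python
import numpy as np
import torch

arr = np.array([[73, 67, 43]], dtype='float32')
shared = torch.from_numpy(arr)   # shares memory with arr and keeps dtype float32
copied = torch.tensor(arr)       # makes an independent copy

arr[0, 0] = 0.0                  # modify the NumPy array in place
print(shared[0, 0].item(), copied[0, 0].item())  # 0.0 73.0
print(shared.dtype)              # torch.float32
```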

```python
# Import libraries
import torch
import torch.nn as nn
import pandas as pd
import numpy as np

# Input (temp, rainfall, humidity)
inputs = np.array([[73, 67, 43], [91, 88, 64], [87, 134, 58],
                   [102, 43, 37], [69, 96, 70], [73, 67, 43],
                   [91, 88, 64], [87, 134, 58], [102, 43, 37],
                   [69, 96, 70], [73, 67, 43], [91, 88, 64],
                   [87, 134, 58], [102, 43, 37], [69, 96, 70]],
                  dtype='float32')

# Targets (apples, oranges)
targets = np.array([[56, 70], [81, 101], [119, 133],
                    [22, 37], [103, 119], [56, 70],
                    [81, 101], [119, 133], [22, 37],
                    [103, 119], [56, 70], [81, 101],
                    [119, 133], [22, 37], [103, 119]],
                   dtype='float32')

# Convert the NumPy arrays into PyTorch tensors
inputs = torch.from_numpy(inputs)
targets = torch.from_numpy(targets)

print(inputs)
print(targets)
```

1.1.2 Data set & data loader:

Here we create a TensorDataset, which lets us access rows of the data as tuples through indexing. The first element of each tuple holds the input variables and the second holds the target variables.

A DataLoader splits the data into batches of a fixed size and can shuffle the rows. Shuffling randomizes the order in which samples reach the optimizer, which usually helps the loss go down faster.
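The sketch below shows what these two classes return. It uses hypothetical toy tensors with the same shapes as the crop data (15 rows, 3 inputs, 2 targets). Indexing the dataset gives an (input, target) tuple, and with batch_size=5 the loader yields 3 batches per epoch. Shuffling reorders the rows but keeps each input paired with its own target.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

# Hypothetical toy data: row i of X is [3i, 3i+1, 3i+2], row i of Y is [2i, 2i+1]
X = torch.arange(45, dtype=torch.float32).reshape(15, 3)
Y = torch.arange(30, dtype=torch.float32).reshape(15, 2)

ds = TensorDataset(X, Y)
xb0, yb0 = ds[0]             # indexing returns an (input, target) tuple
print(xb0, yb0)              # tensor([0., 1., 2.]) tensor([0., 1.])

dl = DataLoader(ds, batch_size=5, shuffle=True)
for xb, yb in dl:            # 15 rows / batch_size 5 -> 3 batches per epoch
    print(xb.shape, yb.shape)    # torch.Size([5, 3]) torch.Size([5, 2])
```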

```python
# Tensor data set
from torch.utils.data import TensorDataset

# Define dataset
train_ds = TensorDataset(inputs, targets)
print(train_ds[0:3])  # (inputs[0:3], targets[0:3])

# Data loader
from torch.utils.data import DataLoader

# Define data loader
batch_size = 5
train_dl = DataLoader(train_ds, batch_size, shuffle=True)
```

1.1.3 Define model, loss and optimizer:

- We define the model using the nn.Linear class. In nn.Linear(3, 2) the input dimension is 3 and the output dimension is 2. These are the numbers of input variables (temp, rainfall, humidity) and output variables (apples, oranges) in our example.

- list(model.parameters()) returns a list containing the weight matrix and the bias vector.

- The torch.nn.functional module contains the built-in mean squared error loss function, mse_loss.

- torch.optim.SGD is the stochastic gradient descent optimizer. We pass model.parameters() so that the optimizer knows which matrices to modify at each step. lr=1e-5 is the learning rate, which controls how much the parameters are changed at each step.
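This sketch checks the claims above. The weight of nn.Linear(3, 2) has shape (2, 3), and the layer computes x @ W.T + b. F.mse_loss with its default reduction averages the squared differences. The seed and the small pred/target tensors are arbitrary values chosen for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # fixed seed only so the random initial weights are reproducible
model = nn.Linear(3, 2)

print(model.weight.shape)  # torch.Size([2, 3]) -> (out_features, in_features)
print(model.bias.shape)    # torch.Size([2])

x = torch.tensor([[73., 67., 43.]])
# nn.Linear computes x @ W^T + b
manual = x @ model.weight.T + model.bias
print(torch.allclose(model(x), manual))  # True

# F.mse_loss with the default reduction='mean' is the average squared difference
pred = torch.tensor([[1., 2.], [3., 4.]])
target = torch.tensor([[1., 0.], [0., 4.]])
print(F.mse_loss(pred, target))  # (0 + 4 + 9 + 0) / 4 = 3.25
```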

```python
# Define linear model
model = nn.Linear(3, 2)
print(model.weight)
print(model.bias)

# Parameters
print(list(model.parameters()))

# Define loss
import torch.nn.functional as F
loss_fn = F.mse_loss

# Define optimizer
opt = torch.optim.SGD(model.parameters(), lr=1e-5)
```

1.1.4 Train & fit model, generate predictions:

The training process is as follows:

1. Generate predictions
2. Calculate the loss
3. Compute gradients w.r.t. the weights and biases
4. Adjust the weights by subtracting a small quantity proportional to the gradient
5. Reset the gradients to zero
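Here is a minimal sketch that runs the five steps by hand, without nn.Linear or torch.optim, on hypothetical toy data drawn from y = 2x + 1. The learning rate of 0.05 and the 500 epochs are assumptions that work for this toy problem, not values from the article. torch.no_grad() keeps the parameter updates themselves out of the autograd graph. This is roughly what opt.step() and opt.zero_grad() do for plain SGD.

```python
import torch

# Hypothetical toy data drawn exactly from y = 2x + 1
x = torch.tensor([[0.], [1.], [2.], [3.], [4.]])
y = 2 * x + 1

# Parameters created by hand so every step of the loop is visible
w = torch.zeros(1, 1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)
lr = 0.05  # assumed learning rate for this toy problem

for epoch in range(500):
    pred = x @ w.T + b                # 1. generate predictions
    loss = ((pred - y) ** 2).mean()   # 2. calculate the loss (MSE)
    loss.backward()                   # 3. compute d(loss)/dw and d(loss)/db
    with torch.no_grad():             # 4. move parameters against the gradient
        w -= lr * w.grad
        b -= lr * b.grad
        w.grad.zero_()                # 5. reset the gradients to zero
        b.grad.zero_()

print(w.item(), b.item())  # should be very close to 2 and 1
```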

Let us go through the code line by line.

- We define a utility function fit, which takes num_epochs, model, loss_fn, opt and train_dl as arguments.

- An outer for loop repeats training for the given number of epochs.

- An inner loop over the data loader yields one batch (xb, yb) per iteration.

- We generate predictions by passing xb into the model.

- We calculate the loss with loss_fn, which takes pred and yb as arguments.

- loss.backward() computes d(loss)/dx for every parameter x that has requires_grad=True. The results are accumulated into x.grad for every parameter x.

- opt.step() updates the parameters (weights and biases) using their gradients.

- opt.zero_grad() resets the gradients, so they do not accumulate across batches.

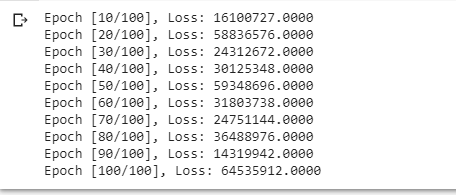

- The print statement logs the loss every 10th epoch.

- fit(100, model, loss_fn, opt, train_dl) trains the model for 100 epochs using the utility function we defined.

- preds = model(inputs) generates predictions for all inputs.
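The reset in step 5 is needed because backward() accumulates into .grad instead of overwriting it. This small sketch with a single scalar parameter shows the accumulation and the reset. In recent PyTorch versions opt.zero_grad() sets .grad to None by default rather than filling it with zeros. Either way, the next backward() starts fresh.

```python
import torch

w = torch.tensor(3.0, requires_grad=True)

# Two backward passes without a reset: the gradients add up in w.grad
(w ** 2).backward()          # d(w^2)/dw = 2w = 6
(w ** 2).backward()
accumulated = w.grad.item()
print(accumulated)           # 12.0

# Reset, then a single backward pass gives the true gradient again
w.grad.zero_()
(w ** 2).backward()
print(w.grad.item())         # 6.0
```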

```python
# Utility function to train the model
def fit(num_epochs, model, loss_fn, opt, train_dl):
    # Repeat for given number of epochs
    for epoch in range(num_epochs):
        # Train with batches of data
        for xb, yb in train_dl:
            # 1. Generate predictions
            pred = model(xb)
            # 2. Calculate loss
            loss = loss_fn(pred, yb)
            # 3. Compute gradients
            loss.backward()
            # 4. Update parameters using gradients
            opt.step()
            # 5. Reset the gradients to zero
            opt.zero_grad()
        # Print the progress (loss of the last batch in this epoch)
        if (epoch+1) % 10 == 0:
            print('Epoch [{}/{}], Loss: {:.4f}'.format(epoch+1, num_epochs, loss.item()))

# Fit model for 100 epochs
fit(100, model, loss_fn, opt, train_dl)

# Generate predictions
preds = model(inputs)
print(preds)
```

1.2 Linear regression example 2:

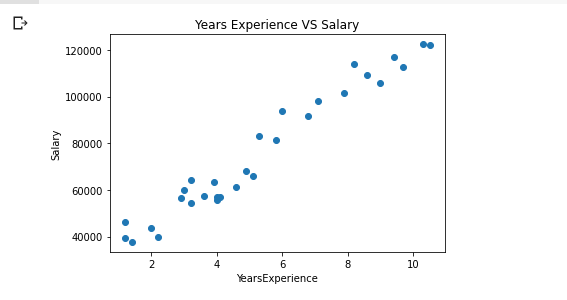

We will create a linear regression model, train it on a set of observations of years of experience and the corresponding salaries, and then try to predict salaries for new experience values. This is a simple linear regression problem with a single input variable.

1.2.1 Import libraries & load data:

We import the libraries, read the data from a CSV file into a pandas DataFrame, and visualize the data set as a scatter plot using matplotlib.

```python
# Import libraries
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
import torch
import torch.nn as nn

# Load the data set
data = pd.read_csv('/content/exp_vs_salary_Data.csv')

# Inspect the first rows
print(data.head())

# Visualize the data
plt.scatter(data.YearsExperience, data.Salary)
plt.xlabel("YearsExperience")
plt.ylabel("Salary")
plt.title("Years Experience VS Salary")
plt.show()
```

1.2.2 Train/test split and tensor creation:

Here we split the data set into training and test sets with an 80:20 ratio, then convert them into PyTorch tensors X_train, y_train, X_test and y_test.
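This torch-only sketch gives a rough picture of what the split and the conversion produce. It replaces sklearn's train_test_split with a random permutation of row indices. The years and salaries are synthetic values invented for illustration, and the seed is an assumption for reproducibility. The unsqueeze(1) call produces the (n, 1) column shape that nn.Linear(1, 1) expects.

```python
import torch

torch.manual_seed(0)  # assumed seed, only for reproducibility

# Hypothetical (years of experience, salary) pairs, not the article's data set
years = torch.tensor([1.1, 2.0, 3.2, 4.0, 5.1, 6.0, 7.1, 8.2, 9.0, 10.3])
salary = 9000 * years + 25000

# Shuffle the row indices, then take the first 80% for training
perm = torch.randperm(len(years))
n_train = int(0.8 * len(years))
train_idx, test_idx = perm[:n_train], perm[n_train:]

# unsqueeze(1) turns shape (n,) into the (n, 1) column shape nn.Linear(1, 1) expects
X_train, y_train = years[train_idx].unsqueeze(1), salary[train_idx].unsqueeze(1)
X_test, y_test = years[test_idx].unsqueeze(1), salary[test_idx].unsqueeze(1)

print(X_train.shape, X_test.shape)  # torch.Size([8, 1]) torch.Size([2, 1])
```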

```python
# Split the data set into training (80%) and test (20%) sets
train, test = train_test_split(data, test_size=0.2)

# Convert training data into (n, 1) float tensors for PyTorch
X_train = torch.tensor(train.YearsExperience.values, dtype=torch.float32).unsqueeze(1)
y_train = torch.tensor(train.Salary.values, dtype=torch.float32).unsqueeze(1)

# Convert test data into (n, 1) float tensors for PyTorch
X_test = torch.tensor(test.YearsExperience.values, dtype=torch.float32).unsqueeze(1)
y_test = torch.tensor(test.Salary.values, dtype=torch.float32).unsqueeze(1)
```

1.2.3 Tensor data set & data loader:

As in the previous example, we use a TensorDataset and a DataLoader to feed the data to the model in batches.

```python
# Data set & data loader
from torch.utils.data import TensorDataset

train_data = TensorDataset(X_train, y_train)
print(train_data[0:5])

# Define data loader
from torch.utils.data import DataLoader

batch_size = 5
train_dl = DataLoader(train_data, batch_size, shuffle=True)
```

1.2.4 Define model, loss, optimizer

We define a linear model using nn.Linear, passing the input and output dimensions as parameters. Mean squared error is the loss function, and we use an SGD optimizer with a learning rate of 0.01.

```python
# Define model
model = nn.Linear(1, 1)  # nn.Linear(in_features, out_features)
print(model.weight)
print(model.bias)

# Print the model parameters
print(list(model.parameters()))

# Define the loss function
loss_fun = nn.MSELoss()

# Define SGD optimizer with learning rate 0.01
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
```

Alternatively, we can define a linear regressor as a class, as shown below. Let us look at this class in detail.

- We create a LinearRegression class that inherits from nn.Module.

- The constructor __init__ is invoked when an object of the class is created.

- super().__init__() runs the constructor of the parent class, nn.Module.

- We create an object of PyTorch's nn.Linear class with the parameters in_features and out_features and store it as self.lin.

- The forward method passes the inputs to the linear layer created in the constructor and returns the predicted values.
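Here is a short usage sketch of a class with this shape. Because self.lin is assigned after super().__init__(), nn.Module registers its weight and bias, so the object's parameters() can be passed to an optimizer. Calling the object runs forward() through nn.Module.__call__, which also runs any registered hooks. The name reg and the input values are hypothetical.

```python
import torch
import torch.nn as nn

class LinearRegression(nn.Module):
    def __init__(self, in_size, out_size):
        super().__init__()  # must run before submodules are assigned
        self.lin = nn.Linear(in_size, out_size)

    def forward(self, X):
        return self.lin(X)

reg = LinearRegression(1, 1)

# Assigning self.lin registered its weight and bias with the parent module,
# so reg.parameters() can be handed directly to an optimizer
print([name for name, _ in reg.named_parameters()])  # ['lin.weight', 'lin.bias']

# Calling the object runs forward(); prefer reg(X) over reg.forward(X)
X = torch.tensor([[1.5], [3.0]])
print(reg(X).shape)  # torch.Size([2, 1])
```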

```python
class LinearRegression(nn.Module):
    def __init__(self, in_size, out_size):
        super().__init__()
        self.lin = nn.Linear(in_features=in_size, out_features=out_size)

    def forward(self, X):
        pred = self.lin(X)
        return pred
```

1.2.5 Train & fit model, generate predictions:

We train the model for 100 epochs and then generate predictions for the training inputs.

```python
# Utility function to train the model
def lrmodel(num_epochs, model, loss_fun, optimizer, train_dl):
    # Repeat for given number of epochs
    for epoch in range(num_epochs):
        # Train with batches of data
        for xb, yb in train_dl:
            # 1. Generate predictions
            pred = model(xb)
            # 2. Calculate loss
            loss = loss_fun(pred, yb)
            # 3. Compute gradients
            loss.backward()
            # 4. Update parameters using gradients
            optimizer.step()
            # 5. Reset the gradients to zero
            optimizer.zero_grad()
        # Print the progress (loss of the last batch in this epoch)
        if (epoch+1) % 10 == 0:
            print('Epoch [{}/{}], Loss: {:.4f}'.format(epoch+1, num_epochs, loss.item()))

# Training for 100 epochs
num_epochs = 100
lrmodel(num_epochs, model, loss_fun, optimizer, train_dl)

# Generate predictions
preds = model(X_train)
print(preds)

# Compare with targets
print(y_train)
```

1.2.6 Predicting for unseen data

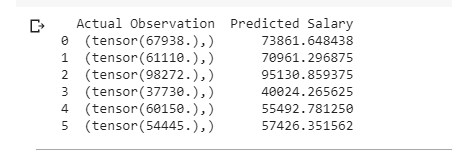

X_test is passed to the model, and the predictions are compared with the actual values. This shows how well the model performs on unseen data.
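This is a regression problem rather than a classification one, so error metrics measure performance more precisely than "accuracy". Below is a minimal sketch that assumes you want MAE, RMSE and the coefficient of determination R^2. regression_metrics is a hypothetical helper written for this example, and the salary values are made up for illustration.

```python
import torch

def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE and R^2 for 1-D or (n, 1) tensors (illustrative helper)."""
    y_true, y_pred = y_true.flatten(), y_pred.flatten()
    err = y_pred - y_true
    mae = err.abs().mean()                    # mean absolute error
    rmse = (err ** 2).mean().sqrt()           # root mean squared error
    ss_res = (err ** 2).sum()                 # residual sum of squares
    ss_tot = ((y_true - y_true.mean()) ** 2).sum()  # total sum of squares
    r2 = 1 - ss_res / ss_tot
    return mae.item(), rmse.item(), r2.item()

# Hypothetical actual vs. predicted salaries (not results from the article)
actual = torch.tensor([[40000.], [60000.], [80000.]])
predicted = torch.tensor([[42000.], [58000.], [80000.]])
mae, rmse, r2 = regression_metrics(actual, predicted)
print(f"MAE={mae:.1f}  RMSE={rmse:.1f}  R^2={r2:.3f}")
```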

```python
# Predict for X_test (no gradient tracking is needed for inference)
with torch.no_grad():
    y_pred_test = model(X_test)

# Convert predictions and actual values from (n, 1) tensors into flat Python lists
y_pred_test = y_pred_test.squeeze(1).tolist()
y_test_list = y_test.squeeze(1).tolist()

# Compare actual and predicted values
df = pd.DataFrame({'Actual Observation': y_test_list,
                   'Predicted Salary': y_pred_test})
print(df)
```

In my next post I will discuss logistic regression using PyTorch tensors.

The code is available on GitHub: https://github.com/Arun-purakkatt/Deep_Learning_Pytorch

Stay connected: https://www.linkedin.com/in/arun-purakkatt-mba-m-tech-31429367/

Credits & References:

https://pytorch.org/docs/stable/tensors.html

https://jovian.ml/aakashns/02-linear-regression

https://www.deeplearningwizard.com/deep_learning/practical_pytorch/pytorch_linear_regression/

https://github.com/fastai/fastai_old/tree/master/dev_nb

Translated from: https://medium.com/analytics-vidhya/linear-regression-with-pytorch-147fed55f138
