Deep Learning (5): Studying Models for Sentence-Pair Similarity

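Before this, I used the TensorFlow framework with the deep learning models TextCnn and RnnAttention to solve text classification problems. That was all groundwork: my actual research topics are similar-case recommendation and sentencing prediction. So with this post I start working on models that compute text similarity.

My main reference is an NLP competition run by Ant Financial. Recently I have studied four models: LSTM, Bi-LSTM, ESSM and ESIM. I have the code for the first two running, but I have not fully understood the last two yet. I will write up their code later and first record my implementations of the first two.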

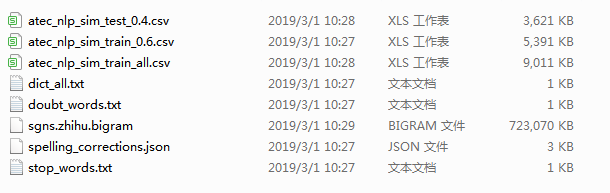

Dataset: the financial NLP dataset

1. LSTM model implementation:

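This model mainly implements two things: input of two sentences of different lengths, and parameter reuse (reuse) across the two LSTM RNN branches.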
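
As a rough illustration of how unequal sentence lengths can be handled, the sketch below assumes both sentences are padded to one fixed length and that a 0/1 mask marks the real tokens, which is what the `mask_s1` / `mask_s2` placeholders in the class suggest. The helper name `masked_sum` is hypothetical and plain Python stands in for TensorFlow.

```python
def masked_sum(outputs, mask):
    """Sum per-step vectors over time, skipping padded steps (mask == 0)."""
    hidden = len(outputs[0])
    pooled = [0.0] * hidden
    for step_vec, keep in zip(outputs, mask):
        for i in range(hidden):
            # A padded step contributes nothing because keep is 0.0.
            pooled[i] += step_vec[i] * keep
    return pooled


# One sentence padded to 4 steps; only the first 2 steps are real tokens.
outputs = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0], [9.0, 9.0]]
mask = [1.0, 1.0, 0.0, 0.0]
sentence_vec = masked_sum(outputs, mask)
print(sentence_vec)  # [4.0, 6.0]
```

The padded steps carry arbitrary values (9.0 here) on purpose: the mask keeps them out of the sentence vector.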

Reference blog: https://blog.csdn.net/sinat_31188625/article/details/72675627

Loss function: the mean squared error between the model's predicted score and the true score
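
The following is a small sketch of that loss for a single sentence pair, mirroring the `loss` scope of the class: the similarity is exp(-L1 distance) between the two sentence vectors, and the target is rescaled to the same 0..1 range. The 1..5 score range is an assumption read off the `(self.target - 1.0) / 4.0` expression, and the function names are hypothetical.

```python
import math


def clip(value, low=1e-7, high=1.0 - 1e-7):
    """Keep a value strictly inside (0, 1), like tf.clip_by_value in the class."""
    return min(max(value, low), high)


def manhattan_similarity(v1, v2):
    """exp(-L1 distance): close to 1 for identical vectors, near 0 for distant ones."""
    l1 = sum(abs(a - b) for a, b in zip(v1, v2))
    return clip(math.exp(-l1))


def squared_error(sim, target, low=1.0, high=5.0):
    """Squared error between the similarity and the target rescaled to 0..1."""
    scaled = clip((target - low) / (high - low))
    return (sim - scaled) ** 2


sim = manhattan_similarity([0.2, 0.4], [0.2, 0.4])  # identical sentence vectors
loss = squared_error(sim, target=5.0)               # best possible score
print(sim, loss)
```

Averaging `squared_error` over a batch gives the mean squared error named above.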

Optimizer: AdaDelta
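
For readers unfamiliar with AdaDelta, here is a scalar sketch of its update rule on a toy objective. It is not the TensorFlow implementation: the function name `adadelta_minimize` is hypothetical, the defaults `rho=0.95` and `eps=1e-6` are illustrative, and the extra `lr` factor is an assumption added to mirror the `learning_rate` argument passed to the optimizer in the class.

```python
import math


def adadelta_minimize(grad_fn, x, steps, lr=1.0, rho=0.95, eps=1e-6):
    """Run `steps` AdaDelta updates on a single scalar parameter x."""
    avg_sq_grad = 0.0   # running average of squared gradients
    avg_sq_delta = 0.0  # running average of squared updates
    for _ in range(steps):
        g = grad_fn(x)
        avg_sq_grad = rho * avg_sq_grad + (1 - rho) * g * g
        # The step size is the ratio of the two running RMS values.
        delta = -math.sqrt(avg_sq_delta + eps) / math.sqrt(avg_sq_grad + eps) * g
        avg_sq_delta = rho * avg_sq_delta + (1 - rho) * delta * delta
        x += lr * delta
    return x


# Minimize f(x) = x^2, whose gradient is 2x, starting from x = 1.0.
x_final = adadelta_minimize(lambda x: 2.0 * x, x=1.0, steps=20)
print(x_final)  # smaller than 1.0: the parameter moves toward the minimum at 0
```

The steps start very small because the running average of past updates begins at zero, which is one reason AdaDelta can look slow in the first iterations.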

    import tensorflow as tf  # TensorFlow 1.x API (tf.contrib, tf.placeholder)


    class LstmRNN(object):

        def singleRNN(self, batch_size, x, scope, cell='lstm', reuse=None):
            # Build the cell; passing reuse lets both sentences share one set of weights.
            if cell == 'gru':
                with tf.variable_scope('grucell' + scope, reuse=reuse, dtype=tf.float32):
                    used_cell = tf.contrib.rnn.GRUCell(self.hidden_neural_size, reuse=tf.get_variable_scope().reuse)
            else:
                with tf.variable_scope('lstmcell' + scope, reuse=reuse, dtype=tf.float32):
                    used_cell = tf.contrib.rnn.BasicLSTMCell(self.hidden_neural_size, forget_bias=1.0, state_is_tuple=True,
                                                             reuse=tf.get_variable_scope().reuse)
            with tf.variable_scope('cell_init_state' + scope, reuse=reuse, dtype=tf.float32):
                self.cell_init_state = used_cell.zero_state(batch_size, dtype=tf.float32)
            with tf.name_scope('RNN_' + scope), tf.variable_scope('RNN_' + scope, dtype=tf.float32):
                # time_major=False, so outs has shape [batch, time, hidden].
                outs, _ = tf.nn.dynamic_rnn(used_cell, x, initial_state=self.cell_init_state, time_major=False,
                                            dtype=tf.float32)
            return outs

        def __init__(self, config, batch_size, sequence_length, is_training=True):
            self.keep_prob = config.keep_prob
            embed_dim = config.embed_dim
            self.hidden_neural_size = config.hidden_neural_size

            # Placeholders supplied through feed_dict
            self.input_data_s1 = tf.placeholder(tf.float32, [None, sequence_length, embed_dim])
            self.input_data_s2 = tf.placeholder(tf.float32, [None, sequence_length, embed_dim])
            self.target = tf.placeholder(tf.float32, [None])
            self.mask_s1 = tf.placeholder(tf.float32, [None, sequence_length])
            self.mask_s2 = tf.placeholder(tf.float32, [None, sequence_length])

            # LSTM output layer: same scope name and reuse=True on the second call share the weights
            with tf.name_scope('lstm_output_layer'):
                self.cell_outputs1 = self.singleRNN(batch_size, x=self.input_data_s1, scope='side1', cell='lstm', reuse=None)
                self.cell_outputs2 = self.singleRNN(batch_size, x=self.input_data_s2, scope='side1', cell='lstm', reuse=True)

            # Sentence layer: masked sum over time, so padded steps do not contribute
            with tf.name_scope('Sentence_Layer'):
                self.sent1 = tf.reduce_sum(self.cell_outputs1 * self.mask_s1[:, :, None], axis=1)
                self.sent2 = tf.reduce_sum(self.cell_outputs2 * self.mask_s2[:, :, None], axis=1)

            # Output layer: similarity = exp(-L1 distance), compared with the target rescaled to (0, 1)
            with tf.name_scope("loss"):
                diff = tf.abs(tf.subtract(self.sent1, self.sent2), name='err_l1')
                diff = tf.reduce_sum(diff, axis=1)
                self.sim = tf.clip_by_value(tf.exp(-1.0 * diff), 1e-7, 1.0 - 1e-7)
                self.loss = tf.square(tf.subtract(self.sim, tf.clip_by_value((self.target - 1.0) / 4.0, 1e-7, 1.0 - 1e-7)))

            # Cost: mean loss, plus the MSE measured on the original score scale
            with tf.name_scope('cost'):
                self.cost = tf.reduce_mean(self.loss)
                self.trueCost = tf.reduce_mean(tf.square(tf.subtract(self.sim * 4.0 + 1.0, self.target)))
                cost_summary = tf.summary.scalar('cost_summary', self.cost)
                mse_summary = tf.summary.scalar('mse_summary', self.trueCost)

            if not is_training:
                return

            # Merge both scalar summaries into a single op
            self.summary = tf.summary.merge([cost_summary, mse_summary])

            # Training: clip gradients by global norm, then apply them with AdaDelta
            with tf.name_scope('train'):
                tvars = tf.trainable_variables()
                grads, _ = tf.clip_by_global_norm(tf.gradients(self.cost, tvars), config.max_grad_norm)
                optimizer = tf.train.AdadeltaOptimizer(learning_rate=0.0001, epsilon=1e-6)
                self.train_op = optimizer.apply_gradients(zip(grads, tvars))
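
Both classes in this post clip gradients with `tf.clip_by_global_norm` before applying them. The sketch below shows the idea in plain Python, assuming gradients are given as nested lists: all gradients are rescaled together by `clip_norm / max(global_norm, clip_norm)`, so their relative directions are preserved. It is a simplified stand-in for illustration, not the TensorFlow source.

```python
import math


def clip_by_global_norm(grads, clip_norm):
    """Rescale all gradient vectors jointly so their combined L2 norm is at most clip_norm."""
    global_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    # When global_norm <= clip_norm the scale is exactly 1.0, so nothing changes.
    scale = clip_norm / max(global_norm, clip_norm)
    clipped = [[g * scale for g in vec] for vec in grads]
    return clipped, global_norm


grads = [[3.0], [4.0]]  # two "variables" with one gradient entry each
clipped, norm = clip_by_global_norm(grads, clip_norm=1.0)
print(norm)     # 5.0
print(clipped)  # approximately [[0.6], [0.8]]
```

Because every gradient shares one scale factor, the update direction is unchanged and only its length is capped.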

2. Bi_LSTM model implementation:

Reference code: https://github.com/zheng5yu9/siamese_dssm

    import tensorflow as tf  # TensorFlow 1.x API


    class SiameseBiLstm(object):

        # Build the forward and backward multi-layer LSTM cells of the BiLSTM
        def bi_lstm(self, embedding_size, layer_size, keep_prob):
            with tf.name_scope('fw_rnn'), tf.variable_scope('fw_rnn'):
                _cells = [tf.nn.rnn_cell.LSTMCell(embedding_size, forget_bias=1.0, state_is_tuple=True) for _ in range(layer_size)]
                multicell_fw = tf.nn.rnn_cell.MultiRNNCell(_cells, state_is_tuple=True)
                dropoutcells_fw = tf.nn.rnn_cell.DropoutWrapper(multicell_fw, output_keep_prob=keep_prob, state_keep_prob=1.0)
            with tf.name_scope('bw_rnn'), tf.variable_scope('bw_rnn'):
                _cells = [tf.nn.rnn_cell.LSTMCell(embedding_size, forget_bias=1.0, state_is_tuple=True) for _ in range(layer_size)]
                multicell_bw = tf.nn.rnn_cell.MultiRNNCell(_cells, state_is_tuple=True)
                # Wrap the backward cell in dropout as well, mirroring the forward cell
                dropoutcells_bw = tf.nn.rnn_cell.DropoutWrapper(multicell_bw, input_keep_prob=1.0, output_keep_prob=keep_prob, state_keep_prob=1.0)
            return dropoutcells_fw, dropoutcells_bw

        # Reshape input x into the format static_bidirectional_rnn expects:
        # a list of sequence_length tensors, each of shape [batch, embedding_size]
        def transform_inputs(self, inputs, embedding_size, sequence_length):
            inputs_transpose = tf.transpose(inputs, perm=[1, 0, 2])
            inputs_transpose = tf.reshape(inputs_transpose, shape=[-1, embedding_size])
            inputs_transpose = tf.split(inputs_transpose, sequence_length, 0)
            return inputs_transpose

        # Weight variable w, randomly initialized
        def weight_variables(self, shape, name):
            initial_value = tf.truncated_normal(shape, mean=0.0, stddev=0.3, dtype=tf.float32)
            variable = tf.Variable(initial_value=initial_value, trainable=True, name=name, dtype=tf.float32)
            return variable

        # Bias variable b, initialized to a constant
        def bias_variables(self, shape, name):
            # TODO: how should the bias be initialized?
            initial_value = tf.constant(value=1.0, dtype=tf.float32, shape=shape)
            variable = tf.Variable(initial_value=initial_value, trainable=True, name=name, dtype=tf.float32)
            return variable

        # Loss function: Ew is the predicted similarity, y the label
        def contrastive_loss(self, Ew, y):
            # TODO: should this be a squared term or something else?
            tmp1 = y * tf.square(y - Ew)
            tmp2 = (1 - y) * tf.square(tf.maximum(Ew, 0) - y)
            return tf.reduce_sum(tmp1 + tmp2)

        # Cosine similarity, intended for the 'calculation' step
        def score(self, d1, d2):
            numberator = tf.reduce_sum(tf.multiply(d1, d2), axis=1)
            denominator1 = tf.sqrt(tf.reduce_sum(tf.square(d1), axis=1))
            denominator2 = tf.sqrt(tf.reduce_sum(tf.square(d2), axis=1))
            Ew = numberator / (denominator1 * denominator2)
            return Ew

        def __init__(self, layer_size, sequence_length, embedding_size, grad_clip):
            # Placeholders supplied through feed_dict
            self.input_x1 = tf.placeholder(dtype=tf.float32, shape=[None, sequence_length, embedding_size], name='input_x1')
            self.input_x2 = tf.placeholder(dtype=tf.float32, shape=[None, sequence_length, embedding_size], name='input_x2')
            self.input_y = tf.placeholder(dtype=tf.float32, shape=[None], name='input_y')
            self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

            # L2 penalty term (note: l2_reg_lambda is defined but not applied to l2_loss below)
            self.l2_reg_lambda = 0.05
            self.l2_loss = tf.constant(0, dtype=tf.float32)

            with tf.variable_scope('output'):
                multicell_fw, multicell_bw = self.bi_lstm(embedding_size, layer_size, self.dropout_keep_prob)
                input_x1_transform = self.transform_inputs(self.input_x1, embedding_size, sequence_length)
                input_x2_transform = self.transform_inputs(self.input_x2, embedding_size, sequence_length)
                output1, _, _ = tf.nn.static_bidirectional_rnn(multicell_fw, multicell_bw, input_x1_transform, dtype=tf.float32)
                # Share the BiLSTM weights between the two sentences
                tf.get_variable_scope().reuse_variables()
                output2, _, _ = tf.nn.static_bidirectional_rnn(multicell_fw, multicell_bw, input_x2_transform, dtype=tf.float32)
                # ReLU over the per-step outputs, then average over the time axis
                output1 = tf.nn.relu(output1)
                output2 = tf.nn.relu(output2)
                output1 = tf.reduce_mean(output1, axis=0)
                output2 = tf.reduce_mean(output2, axis=0)
                self.output1 = output1
                self.output2 = output2

            with tf.variable_scope('fc1'):
                weight_fc1 = self.weight_variables([2 * embedding_size, 1], 'weight_fc1')
                bias_fc1 = self.bias_variables([1], 'bias_fc1')
                d1 = tf.nn.xw_plus_b(output1, weight_fc1, bias_fc1, name='d1')
                self.l2_loss += tf.nn.l2_loss(weight_fc1)
                self.d1 = tf.nn.relu(d1)

            with tf.variable_scope('fc2'):
                weight_fc2 = self.weight_variables([2 * embedding_size, 1], 'weight_fc2')
                bias_fc2 = self.bias_variables([1], 'bias_fc2')
                d2 = tf.nn.xw_plus_b(output2, weight_fc2, bias_fc2, name='d2')
                self.l2_loss += tf.nn.l2_loss(weight_fc2)
                self.d2 = tf.nn.relu(d2)

            with tf.variable_scope('calculation'):
                # NOTE: d1 and d2 here are the pre-ReLU values; tf.sqrt yields NaN
                # whenever their product is negative.
                numberator = tf.sqrt(tf.reduce_sum(tf.multiply(d1, d2), axis=1))
                denominator1 = tf.sqrt(tf.reduce_sum(tf.square(d1), axis=1))
                denominator2 = tf.sqrt(tf.reduce_sum(tf.square(d2), axis=1))
                self.Ew = 2 * numberator / (denominator1 * denominator2)
                self.Ew = tf.nn.sigmoid(self.Ew)

            # Loss
            with tf.variable_scope('loss'):
                self.loss_pure = self.contrastive_loss(self.Ew, self.input_y)  # exposed as self.loss_pure
                self.loss = self.loss_pure + self.l2_loss  # sbl.loss is reported through the merged summary during training

            # Training
            with tf.variable_scope('train'):
                variables = tf.trainable_variables()
                grads = tf.gradients(self.loss, variables)
                grads_cliped, _ = tf.clip_by_global_norm(grads, grad_clip)
                optimizer = tf.train.AdamOptimizer(0.001)
                self.optimizer = optimizer.apply_gradients(list(zip(grads_cliped, variables)))  # exposed as self.optimizer
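
To make the `score` and `contrastive_loss` methods easier to follow, here is a plain-Python sketch of the same formulas for a single sentence pair. The function names are hypothetical, and reading `y = 1` as "similar" and `y = 0` as "dissimilar" is an assumption inferred from the shape of the loss: the first term pulls the similarity toward 1 when `y = 1`, the second pushes it toward 0 when `y = 0`.

```python
import math


def cosine_similarity(d1, d2):
    """Dot product divided by the product of the two L2 norms."""
    dot = sum(a * b for a, b in zip(d1, d2))
    norm1 = math.sqrt(sum(a * a for a in d1))
    norm2 = math.sqrt(sum(b * b for b in d2))
    return dot / (norm1 * norm2)


def pair_loss(ew, y):
    """Per-pair loss with the same two terms as contrastive_loss in the class."""
    similar_term = y * (y - ew) ** 2
    dissimilar_term = (1 - y) * (max(ew, 0.0) - y) ** 2
    return similar_term + dissimilar_term


same_direction = cosine_similarity([1.0, 0.0], [2.0, 0.0])  # 1.0
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 3.0])      # 0.0
print(pair_loss(same_direction, 1))  # 0.0: similar pair, similarity already 1
print(pair_loss(orthogonal, 0))      # 0.0: dissimilar pair, similarity already 0
print(pair_loss(0.5, 1))             # 0.25
```

Summing `pair_loss` over a batch corresponds to the `tf.reduce_sum` at the end of `contrastive_loss`.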

To be continued!