【Preface】

This article is meant as a quick reference for when I forget the details.

【Motivation】

Why build a custom camera? Because the system camera cannot meet the project's requirements.

【Overview】

First, here are the classes used to build photo capture and video recording with AVFoundation:

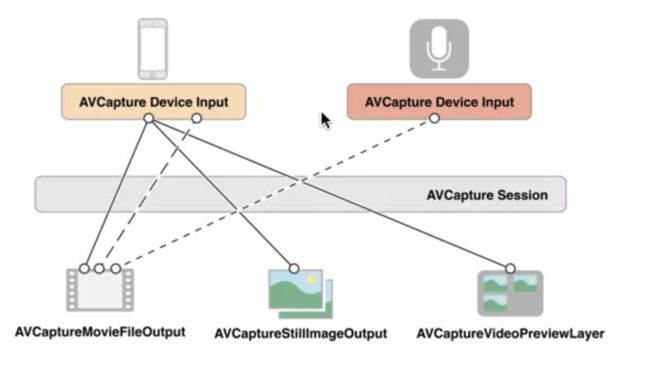

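AVCaptureSession: the media (audio and video) capture session. It routes the captured audio and video data from the inputs to the outputs. One AVCaptureSession can have multiple inputs and outputs, as shown in the figure.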

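AVCaptureDevice: an input device, such as the microphone or a camera. Through this object you can configure properties of the physical device (for example, camera focus and white balance).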
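Focus is one example of such a property. It is set through `focusPointOfInterest`, a normalized point. Apple documents (0,0) as the top-left and (1,1) as the bottom-right of the image, relative to the sensor in landscape orientation with the home button on the right. The following minimal sketch converts a tap in a portrait preview view into that space.

It makes these assumptions:
- The preview fills the view without aspect-fill cropping.
- The preview is not mirrored.
- The `Point2D` type and the function name are hypothetical stand-ins for `CGPoint` code.

In a real app, `AVCaptureVideoPreviewLayer`'s `captureDevicePointOfInterestForPoint:` does this conversion, including video gravity, and is the safer choice.

```c
#include <assert.h>

/* Hypothetical stand-in for CGPoint. */
typedef struct { double x, y; } Point2D;

/* Converts a tap at `tap` inside a portrait preview of size view_w x view_h
 * into AVCaptureDevice's normalized point-of-interest space, where (0,0) is
 * top-left and (1,1) bottom-right of the landscape (home button right) sensor
 * image. Assumes a full-frame, unmirrored preview. */
Point2D tap_to_point_of_interest(Point2D tap, double view_w, double view_h) {
    assert(view_w > 0.0 && view_h > 0.0);
    Point2D p;
    p.x = tap.y / view_h;        /* portrait vertical axis -> sensor x */
    p.y = 1.0 - tap.x / view_w;  /* portrait horizontal axis -> flipped sensor y */
    return p;
}
```

For example, a tap in the exact center of a 375 x 667 view maps to (0.5, 0.5), and the view's top-left corner maps to (0, 1).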

AVCaptureDeviceInput: the device input object. It is created from an AVCaptureDevice and then added to an AVCaptureSession, which manages it.

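AVCaptureOutput: the output object that receives the captured data. It is an abstract class. In practice you use one of its subclasses, such as AVCaptureAudioDataOutput, AVCaptureStillImageOutput, AVCaptureVideoDataOutput or AVCaptureFileOutput. The output is likewise added to an AVCaptureSession, which manages it. AVCaptureStillImageOutput has been deprecated since iOS 10 in favor of AVCapturePhotoOutput, but it still works.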

Notes:

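1. The data outputs above deliver the captured data in memory, as sample buffers (a still image can be converted to JPEG NSData). AVCaptureFileOutput, by contrast, writes the data to a file. Like AVCaptureOutput, AVCaptureFileOutput is not used directly. You normally use one of its subclasses: AVCaptureMovieFileOutput, or AVCaptureAudioFileOutput (macOS only).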

2. When an input or output is added to an AVCaptureSession, the session automatically establishes connections (AVCaptureConnection) between all compatible inputs and outputs.
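Note 2 can be made concrete with a toy model. This is a simplification for illustration, not AVFoundation's actual implementation. It makes these assumptions:
- Each input port carries exactly one media type.
- Each output accepts a set of media types.
- A connection is formed for every input/output pair whose types match.

`MediaType`, `InputPort`, `Output` and `count_connections` are all hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical media-type flags (a simplification of AVMediaType). */
typedef enum { MEDIA_VIDEO = 1 << 0, MEDIA_AUDIO = 1 << 1 } MediaType;

typedef struct { const char *name; MediaType type; } InputPort;
typedef struct { const char *name; unsigned accepted; } Output; /* bitmask of MediaType */

/* Toy rule: every input port is connected to every output that accepts
 * the port's media type. Returns how many connections would be formed. */
size_t count_connections(const InputPort *inputs, size_t n_inputs,
                         const Output *outputs, size_t n_outputs) {
    assert(inputs != NULL || n_inputs == 0);
    assert(outputs != NULL || n_outputs == 0);
    size_t count = 0;
    for (size_t i = 0; i < n_inputs; i++) {
        for (size_t o = 0; o < n_outputs; o++) {
            if (outputs[o].accepted & inputs[i].type) {
                count++;
            }
        }
    }
    return count;
}
```

Take a camera (video) and a microphone (audio) together with a still-image output (video only) and a movie-file output (video and audio). The model yields three connections: camera to still image, camera to movie file, and microphone to movie file.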

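AVCaptureVideoPreviewLayer: the camera preview layer, a subclass of CALayer that shows the photo or video being captured in real time. It must be created with the AVCaptureSession whose output it previews.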
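The manager class in this article sets the preview layer's `videoGravity` to `AVLayerVideoGravityResizeAspectFill`. Aspect fill scales the frame uniformly by the larger of the width and height ratios, so that the frame covers the layer, and then clips the overflow. When taking a photo, the code later aspect-fills the captured image to a target size and crops the centered rectangle, so that the saved image matches what the preview showed.

Below is a minimal sketch of that geometry in plain C. `Size2D`, `Rect2D` and both function names are hypothetical stand-ins for the `CGSize`/`CGRect` code.

```c
#include <assert.h>

/* Hypothetical stand-ins for CGSize and CGRect. */
typedef struct { double width, height; } Size2D;
typedef struct { double x, y, width, height; } Rect2D;

/* Size at which `img` is drawn under aspect fill inside `bounds`:
 * one uniform scale, the larger of the two ratios, so both edges cover. */
Size2D aspect_fill_size(Size2D img, Size2D bounds) {
    assert(img.width > 0.0 && img.height > 0.0);
    double sx = bounds.width / img.width;
    double sy = bounds.height / img.height;
    double scale = sx > sy ? sx : sy;
    Size2D out = { img.width * scale, img.height * scale };
    return out;
}

/* The `bounds`-sized rectangle centered in the scaled image:
 * the part that remains visible after aspect-fill clipping. */
Rect2D centered_crop_rect(Size2D scaled, Size2D bounds) {
    Rect2D r = { (scaled.width - bounds.width) / 2.0,
                 (scaled.height - bounds.height) / 2.0,
                 bounds.width, bounds.height };
    return r;
}
```

For example, a 300 x 400 image in a 150 x 100 layer is scaled by 0.5 to 150 x 200. The visible part is then the rectangle (0, 50, 150, 100), so 50 points are clipped from both the top and the bottom.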

The general steps for taking photos and recording video with AVFoundation are:

1. Create an AVCaptureSession object.
2. Use AVCaptureDevice class methods to obtain the devices you need: a camera for photos and video, a microphone for audio.
3. Create an AVCaptureDeviceInput from each AVCaptureDevice.
4. Create the output object: an AVCaptureStillImageOutput for photos, or an AVCaptureMovieFileOutput for video.
5. Add the AVCaptureDeviceInput and AVCaptureOutput objects to the AVCaptureSession.
6. Create an AVCaptureVideoPreviewLayer for the session, add it to a container layer, and call the session's startRunning method to start capturing.
7. Write the captured audio or video data to the target file.

【Mind map】

The camera is system hardware, so we have to drive the iPhone's camera ourselves. The steps are as follows:

【Detailed code】

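GGCamerManager.h

```objc
#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>

@protocol GGCamerManagerDelegate <NSObject>
@optional
@end

@interface GGCamerManager : NSObject

/// Delegate
@property (nonatomic, weak) id<GGCamerManagerDelegate> delegate;

/**
 * Initializes the camera and attaches its preview layer to `view`.
 */
- (instancetype)initWithParentView:(UIView *)view;

/**
 * Takes a photo.
 *
 * @param block Receives three images:
 *   originImage:  the full-size image the camera captures;
 *   scaledImage:  the original, aspect-filled (keeping its aspect ratio) to twice the preview layer's size;
 *   croppedImage: the part of scaledImage that matches the preview area.
 */
- (void)takePhotoWithImageBlock:(void (^)(UIImage *originImage, UIImage *scaledImage, UIImage *croppedImage))block;

/**
 * Switches between the front and back cameras.
 *
 * @param isFrontCamera YES for the front camera, NO for the back camera.
 * @param block Called when the switch animation finishes.
 */
- (void)switchCamera:(BOOL)isFrontCamera didFinishChanceBlock:(void (^)(id))block;

/**
 * Cycles the flash mode: auto -> off -> on -> auto ...
 */
- (void)switchFlashMode:(UIButton *)sender;

@end
```

GGCamerManager.m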

```objc
#import "GGCamerManager.h"
#import "UIImage+DJResize.h" // third-party category: resizing, cropping, rotation
// XHToast is the third-party toast class used by the original project; import its header as your project requires.

@interface GGCamerManager () <CAAnimationDelegate>
/// Capture device: front camera, back camera or microphone (audio input)
@property (nonatomic, strong) AVCaptureDevice *device;
/// Device input, initialized from an AVCaptureDevice
@property (nonatomic, strong) AVCaptureDeviceInput *input;
/// Still image output
@property (nonatomic, strong) AVCaptureStillImageOutput *imageOutput;
/// Session: ties inputs and outputs together and starts the capture device (camera)
@property (nonatomic, strong) AVCaptureSession *session;
/// Preview layer showing the captured image in real time
@property (nonatomic, strong) AVCaptureVideoPreviewLayer *previewLayer;
/// Called when the camera-switch animation finishes (same type as the public block)
@property (nonatomic, copy) void (^finishBlock)(id);
@end

@implementation GGCamerManager

#pragma mark - Initialization

- (instancetype)init {
    self = [super init];
    if (self) {
        [self setup];
    }
    return self;
}

- (instancetype)initWithParentView:(UIView *)view {
    self = [super init];
    if (self) {
        [self setup];
        [self configureWithParentLayer:view];
    }
    return self;
}

#pragma mark - Setup

- (void)setup {
    self.session = [[AVCaptureSession alloc] init];
    self.previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:self.session];
    self.previewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    [self addVideoInputFrontCamera:NO]; // input: back camera by default
    [self addStillImageOutput];         // output: still images
}

/**
 * Adds the camera input.
 *
 * @param front YES for the front camera, NO for the back camera
 */
- (void)addVideoInputFrontCamera:(BOOL)front {
    AVCaptureDevice *frontCamera = nil;
    AVCaptureDevice *backCamera = nil;
    // Deprecated since iOS 10 (AVCaptureDeviceDiscoverySession replaces it), but still works
    for (AVCaptureDevice *device in [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo]) {
        if (device.position == AVCaptureDevicePositionBack) {
            backCamera = device;
        } else if (device.position == AVCaptureDevicePositionFront) {
            frontCamera = device;
        }
    }
    AVCaptureDevice *camera = front ? frontCamera : backCamera;
    NSError *error = nil;
    // Never pass a nil device to deviceInputWithDevice:error:
    AVCaptureDeviceInput *input = camera ? [AVCaptureDeviceInput deviceInputWithDevice:camera error:&error] : nil;
    if (!input) {
        NSLog(@"Your device has no camera: %@", error);
        dispatch_async(dispatch_get_main_queue(), ^{ // UI work stays on the main thread
            [XHToast showCenterWithText:@"Your device has no camera"];
        });
        return;
    }
    if ([self.session canAddInput:input]) {
        [self.session addInput:input];
        self.input = input;
        self.device = camera;
    } else {
        NSLog(@"Couldn't add %@ facing video input", front ? @"front" : @"back");
    }
}

/**
 * Adds the still image output (JPEG).
 */
- (void)addStillImageOutput {
    AVCaptureStillImageOutput *output = [[AVCaptureStillImageOutput alloc] init];
    output.outputSettings = @{AVVideoCodecKey : AVVideoCodecJPEG};
    if ([self.session canAddOutput:output]) {
        [self.session addOutput:output];
        self.imageOutput = output;
    }
}

- (void)configureWithParentLayer:(UIView *)parent {
    if (!parent) {
        [XHToast showCenterWithText:@"Please provide a parent view"];
        return;
    }
    parent.userInteractionEnabled = YES;
    self.previewLayer.frame = parent.bounds;
    [parent.layer addSublayer:self.previewLayer];
    // startRunning blocks until capture starts; Apple recommends calling it off the main thread
    [self.session startRunning];
}

#pragma mark - Flash mode (cycle: auto -> off -> on -> auto ...)

- (void)switchFlashMode:(UIButton *)sender {
    AVCaptureDevice *device = self.input.device; // the camera currently in use, not always the back camera
    if (!device) {
        [XHToast showCenterWithText:@"Your device cannot take photos"];
        return;
    }
    if (![device hasFlash]) {
        [XHToast showCenterWithText:@"Your device has no flash"];
        return;
    }
    NSError *error = nil;
    if (![device lockForConfiguration:&error]) {
        NSLog(@"lockForConfiguration failed: %@", error);
        return;
    }
    AVCaptureFlashMode next;
    NSString *imgStr;
    switch (device.flashMode) {
        case AVCaptureFlashModeAuto: next = AVCaptureFlashModeOff;  imgStr = @"flashing_off.png";  break;
        case AVCaptureFlashModeOff:  next = AVCaptureFlashModeOn;   imgStr = @"flashing_on.png";   break;
        default:                     next = AVCaptureFlashModeAuto; imgStr = @"flashing_auto.png"; break;
    }
    if ([device isFlashModeSupported:next]) {
        device.flashMode = next;
        [sender setImage:[UIImage imageNamed:imgStr] forState:UIControlStateNormal]; // no-op when sender is nil
    }
    [device unlockForConfiguration];
}

#pragma mark - Switching cameras

- (void)switchCamera:(BOOL)isFrontCamera didFinishChanceBlock:(void (^)(id))block {
    if (!self.input) {
        if (block) {
            block(@"");
        }
        [XHToast showCenterWithText:@"Your device has no camera"];
        return;
    }
    self.finishBlock = block; // the copy attribute copies the block
    // Fade the preview in over one second while the input is swapped
    CABasicAnimation *fade = [CABasicAnimation animationWithKeyPath:@"opacity"];
    fade.fromValue = @(0);
    fade.toValue = @(1); // opacity ranges from 0 to 1
    fade.duration = 1.0;
    fade.delegate = self; // CAAnimation retains its delegate until the animation ends
    fade.timingFunction = [CAMediaTimingFunction functionWithName:kCAMediaTimingFunctionDefault];
    [self.previewLayer addAnimation:fade forKey:@"anim"];

    dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{
        [self.session beginConfiguration];
        [self.session removeInput:self.input];
        [self addVideoInputFrontCamera:isFrontCamera];
        [self.session commitConfiguration];
    });
}

- (void)animationDidStop:(CAAnimation *)anim finished:(BOOL)flag {
    if (self.finishBlock) {
        self.finishBlock(nil);
        self.finishBlock = nil;
    }
}

#pragma mark - Taking a photo

/**
 * Takes a photo. The block is called on a background queue.
 */
- (void)takePhotoWithImageBlock:(void (^)(UIImage *, UIImage *, UIImage *))block {
    AVCaptureConnection *videoConnection = [self findVideoConnection];
    if (!videoConnection) {
        NSLog(@"Your device has no camera");
        [XHToast showCenterWithText:@"Your device has no camera"];
        return;
    }
    __weak typeof(self) weakSelf = self;
    [self.imageOutput captureStillImageAsynchronouslyFromConnection:videoConnection
                                                  completionHandler:^(CMSampleBufferRef imageDataSampleBuffer, NSError *error) {
        // jpegStillImageNSDataRepresentation: throws on a NULL buffer, so stop on failure
        if (!imageDataSampleBuffer || error) {
            NSLog(@"Capture failed: %@", error);
            return;
        }
        NSData *imageData = [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:imageDataSampleBuffer];
        UIImage *originImage = [[UIImage alloc] initWithData:imageData];

        // Aspect-fill the original into twice the preview size (a hardcoded 2x scale)
        CGSize previewSize = weakSelf.previewLayer.bounds.size;
        CGSize size = CGSizeMake(previewSize.width * 2, previewSize.height * 2);
        UIImage *scaledImage = [originImage resizedImageWithContentMode:UIViewContentModeScaleAspectFill
                                                                 bounds:size
                                                   interpolationQuality:kCGInterpolationHigh];
        // Crop the centered region that the preview layer actually shows
        CGRect cropFrame = CGRectMake((scaledImage.size.width - size.width) / 2,
                                      (scaledImage.size.height - size.height) / 2,
                                      size.width, size.height);
        UIImage *croppedImage = [scaledImage croppedImage:cropFrame];

        // Rotate all three images to match the device orientation
        UIDeviceOrientation orientation = [UIDevice currentDevice].orientation;
        if (orientation != UIDeviceOrientationPortrait) {
            CGFloat degree = 0;
            if (orientation == UIDeviceOrientationPortraitUpsideDown) {
                degree = 180;
            } else if (orientation == UIDeviceOrientationLandscapeLeft) {
                degree = -90;
            } else if (orientation == UIDeviceOrientationLandscapeRight) {
                degree = 90;
            }
            croppedImage = [croppedImage rotatedByDegrees:degree];
            scaledImage = [scaledImage rotatedByDegrees:degree];
            originImage = [originImage rotatedByDegrees:degree];
        }
        if (block) {
            block(originImage, scaledImage, croppedImage);
        }
    }];
}

/**
 * Finds the output connection that carries video from the camera.
 */
- (AVCaptureConnection *)findVideoConnection {
    for (AVCaptureConnection *connection in self.imageOutput.connections) {
        for (AVCaptureInputPort *port in connection.inputPorts) {
            if ([port.mediaType isEqualToString:AVMediaTypeVideo]) {
                return connection;
            }
        }
    }
    return nil;
}

@end
```
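Two decisions inside the manager are pure logic and can be checked in isolation:
- the flash-mode cycle in `switchFlashMode:`;
- the mapping from device orientation to rotation angle in `takePhotoWithImageBlock:`.

The sketch below restates both as plain C functions, which also compile inside an Objective-C `.m` file. The `FlashMode` and `Orientation` enums are hypothetical stand-ins that mirror the cases the code handles. They are not `AVCaptureFlashMode` or `UIDeviceOrientation` themselves, and their numeric values are not meant to match Apple's.

```c
#include <assert.h>

/* Hypothetical mirror of the three flash modes the manager cycles through. */
typedef enum { FLASH_OFF, FLASH_ON, FLASH_AUTO } FlashMode;

/* Same cycle as switchFlashMode: auto -> off -> on -> auto ... */
FlashMode next_flash_mode(FlashMode mode) {
    switch (mode) {
        case FLASH_AUTO: return FLASH_OFF;
        case FLASH_OFF:  return FLASH_ON;
        case FLASH_ON:   return FLASH_AUTO;
    }
    assert(0 && "unknown flash mode");
    return FLASH_OFF;
}

/* Hypothetical mirror of the device orientations the photo code checks;
 * ORIENT_OTHER stands for face up, face down and unknown. */
typedef enum {
    ORIENT_PORTRAIT,
    ORIENT_PORTRAIT_UPSIDE_DOWN,
    ORIENT_LANDSCAPE_LEFT,
    ORIENT_LANDSCAPE_RIGHT,
    ORIENT_OTHER
} Orientation;

/* Same mapping as takePhotoWithImageBlock: the angle, in degrees, passed
 * to rotatedByDegrees: for each orientation (0 means no rotation). */
double rotation_degrees(Orientation orientation) {
    switch (orientation) {
        case ORIENT_PORTRAIT_UPSIDE_DOWN: return 180.0;
        case ORIENT_LANDSCAPE_LEFT:       return -90.0;
        case ORIENT_LANDSCAPE_RIGHT:      return 90.0;
        default:                          return 0.0;
    }
}
```

Applying `next_flash_mode` three times returns to the starting mode, which is the "cycling" behavior described in the header comment.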
