References:

TensorFlow Chinese community

Official TensorFlow tutorials

Before MNIST

First, skim the getting-started introduction and the basic usage guide.

Getting Started With TensorFlow

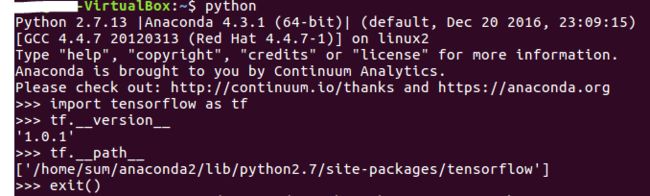

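1. Checking the installed TensorFlow version and path

After running import tensorflow as tf in Python, tf.__version__ and tf.__path__ report the installed version and the install path (see the screenshot above).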
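The same check can be wrapped in a small helper. This is only a sketch: describe_package is a hypothetical name of my own, not part of TensorFlow, and it is demonstrated on the standard-library json package so that it runs even without TensorFlow installed.

```python
import importlib


def describe_package(name):
    """Return (version, paths) for an importable package.

    `describe_package` is a hypothetical helper, not a TensorFlow API.
    """
    module = importlib.import_module(name)
    # Not every package defines __version__, so fall back to None.
    version = getattr(module, "__version__", None)
    # __path__ lists the directories the package was loaded from.
    paths = list(getattr(module, "__path__", []))
    return version, paths


# Demonstrated with `json`; pass "tensorflow" to inspect a TensorFlow install.
version, paths = describe_package("json")
print(version)
print(paths)
```

Calling describe_package("tensorflow") should report the same information as tf.__version__ and tf.__path__ in the session described above.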

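2. Fitting a plane to 3-D data

When using TensorFlow functions, consult the official API documentation. Following the getting-started introduction from the TensorFlow Chinese community, here is a first trial of TensorFlow: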

-VirtualBox:~$ python

Python 2.7.13 |Anaconda 4.3.1 (64-bit)| (default, Dec 20 2016, 23:09:15)

[GCC 4.4.7 20120313 (Red Hat 4.4.7-1)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

Anaconda is brought to you by Continuum Analytics.

Please check out: http://continuum.io/thanks and https://anaconda.org

>>> import tensorflow as tf

>>> import numpy as np

# x_data is a 2x100 matrix and y_data holds 100 values, generated as y_data = [0.100, 0.200] * x_data + 0.300

>>> x_data = np.float32(np.random.rand(2, 100))

>>> y_data = np.dot([0.100, 0.200], x_data) + 0.300

# Build a linear model: initialize the weight matrix W and the bias b, with y = W * x_data + b

>>> b = tf.Variable(tf.zeros([1]))

>>> W = tf.Variable(tf.random_uniform([1,2], -1.0, 1.0))

>>> y = tf.matmul(W, x_data) + b

# Minimize the mean squared error

>>> loss = tf.reduce_mean(tf.square(y-y_data))

>>> optimizer = tf.train.GradientDescentOptimizer(0.5)

>>> train = optimizer.minimize(loss)

# Initialize the variables. tf.initialize_all_variables() is deprecated (see the warning below), so use tf.global_variables_initializer() instead

>>> init = tf.initialize_all_variables()

WARNING:tensorflow:From :1: initialize_all_variables (from tensorflow.python.ops.variables) is deprecated and will be removed after 2017-03-02.

Instructions for updating:

Use `tf.global_variables_initializer` instead.

>>> init = tf.global_variables_initializer()

# Launch the graph

>>> sess = tf.Session()

>>> sess.run(init)

# Fit the plane; when typing at the interactive prompt, mind Python's indentation

>>> for step in xrange(0, 201):

...     sess.run(train)

...     if step%20 == 0:

...         print step, sess.run(W), sess.run(b)

...

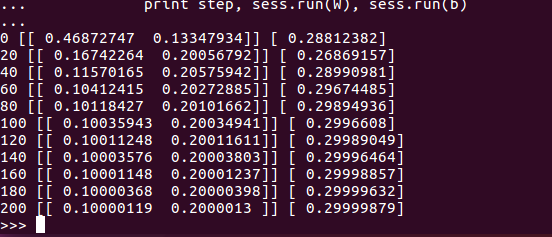

The best fit is W: [[0.100 0.200]], b: [0.300]; the screenshot above shows the output of this run.
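For readers without a TensorFlow 1.x install, the same fit can be sketched in plain Python. This is not the tutorial's code: fit_plane is a hypothetical helper, the gradients of the mean squared error are written out by hand, and the seeds are arbitrary. It keeps the session's learning rate (0.5) and step count (201).

```python
import random


def fit_plane(points, targets, learning_rate=0.5, steps=201, seed=0):
    """Fit t = w0*x1 + w1*x2 + b by gradient descent on the mean squared error."""
    rng = random.Random(seed)
    # Same initialization idea as the session: W uniform in [-1, 1], b = 0.
    w = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    b = 0.0
    n = float(len(points))
    for _ in range(steps):
        errors = [w[0] * x1 + w[1] * x2 + b - t
                  for (x1, x2), t in zip(points, targets)]
        # d/dw_j mean(err^2) = 2 * mean(err * x_j); d/db = 2 * mean(err)
        grad_w0 = 2.0 * sum(e * p[0] for e, p in zip(errors, points)) / n
        grad_w1 = 2.0 * sum(e * p[1] for e, p in zip(errors, points)) / n
        grad_b = 2.0 * sum(errors) / n
        w[0] -= learning_rate * grad_w0
        w[1] -= learning_rate * grad_w1
        b -= learning_rate * grad_b
    return w, b


data_rng = random.Random(42)
points = [(data_rng.random(), data_rng.random()) for _ in range(100)]
targets = [0.100 * x1 + 0.200 * x2 + 0.300 for x1, x2 in points]

w, b = fit_plane(points, targets)
print(w)
print(b)
```

The printed values should approach the generating coefficients 0.1, 0.2 and 0.3, mirroring what the TensorFlow session reports.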

Getting started with machine learning on MNIST

MNIST For ML Beginners

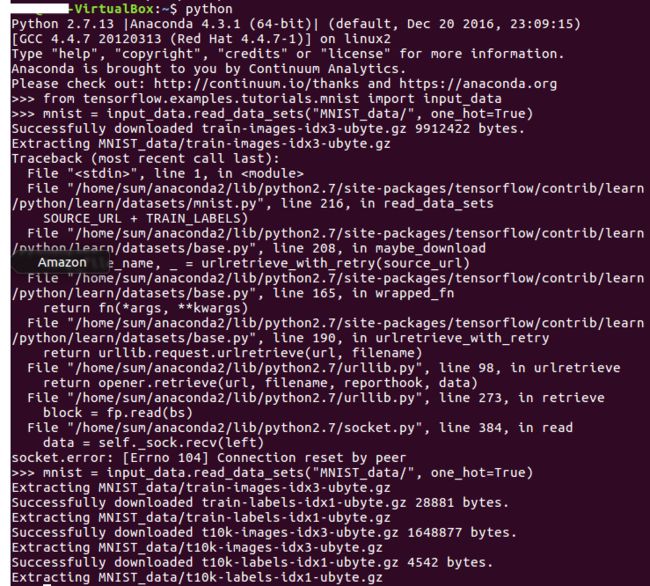

1. Downloading the MNIST data

Following the instructions on the TensorFlow site, use the code below to download and read the MNIST dataset.

~$ python

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

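At this point mnist.train (55,000 examples), mnist.validation (5,000 examples) and mnist.test (10,000 examples) hold the training, validation and test data. mnist.train.images [55000×784] and mnist.train.labels [55000×10] hold the training images and their labels: each 28x28 image is flattened into 784 values, and each label is a one-hot vector of length 10.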
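The 784 and 10 in those shapes come from flattening each 28x28 image and one-hot encoding each digit label (one_hot=True). A minimal plain-Python sketch of both steps follows; one_hot and flatten_image are hypothetical helper names, not functions from input_data.

```python
def one_hot(label, num_classes=10):
    """Encode a digit label as a vector with a single 1.0 at index `label`."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vector = [0.0] * num_classes
    vector[label] = 1.0
    return vector


def flatten_image(rows):
    """Flatten a 2-D image (list of rows) into one row-major list of pixels."""
    return [pixel for row in rows for pixel in row]


blank_image = [[0.0] * 28 for _ in range(28)]  # stand-in for one MNIST digit
flat = flatten_image(blank_image)
label = one_hot(3)
print(len(flat))
print(label)
```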

The file /anaconda2/lib/python2.7/site-packages/tensorflow/examples/tutorials/mnist/input_data.py contains the line from tensorflow.contrib.learn.python.learn.datasets.mnist import read_data_sets, so the data-reading functions themselves can be found in /anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py.

2. A first attempt at MNIST

For the softmax function, see the section of the tutorial that precedes "Implementing the Regression".
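As a quick reminder of what softmax computes, here is a plain-Python sketch. It is not TensorFlow's implementation; subtracting the maximum before exponentiating is a common stability trick that I have added.

```python
import math


def softmax(logits):
    """Turn a list of scores into probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged but avoids overflow.
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([2.0, 1.0, 0.1])
print(probabilities)
```

The largest score receives the largest probability, and the outputs always sum to 1.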

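Cross-entropy is used as the loss function: H(y', y) = -sum_i y'_i * log(y_i), where y is the predicted probability distribution and y' is the label of the sample.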
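A small plain-Python sketch of that loss for a single example. The eps clamp is my own assumption to avoid log(0); the tutorial code applies tf.log(y) directly.

```python
import math


def cross_entropy(predicted, label, eps=1e-12):
    """Cross-entropy between a predicted distribution and a one-hot label."""
    # eps guards against log(0); it is an added assumption, not in the tutorial.
    return -sum(t * math.log(max(p, eps)) for p, t in zip(predicted, label))


confident = cross_entropy([0.9, 0.05, 0.05], [1.0, 0.0, 0.0])
unsure = cross_entropy([0.4, 0.3, 0.3], [1.0, 0.0, 0.0])
print(confident)
print(unsure)
```

The loss is smaller when more probability is placed on the correct class, which is what training pushes toward.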

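TensorFlow does not run each expensive operation on its own. Instead, you first describe a graph of interacting operations, and the whole graph is then run outside Python, which avoids the overhead of returning to Python after every operation.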
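The define-first, run-later idea can be illustrated with a toy graph in plain Python. This is only an analogy: Node, placeholder, add, multiply and run are hypothetical names, and nothing here reflects how TensorFlow is actually implemented.

```python
class Node(object):
    """One operation in a toy computation graph (not TensorFlow's API)."""

    def __init__(self, name, func=None, inputs=()):
        self.name = name
        self.func = func      # None marks a placeholder fed at run time
        self.inputs = inputs


def placeholder(name):
    return Node(name)


def add(a, b):
    return Node("add", lambda x, y: x + y, (a, b))


def multiply(a, b):
    return Node("mul", lambda x, y: x * y, (a, b))


def run(node, feed):
    """Evaluate `node`, taking placeholder values from the `feed` dict."""
    if node.func is None:
        return feed[node]
    args = [run(child, feed) for child in node.inputs]
    return node.func(*args)


x = placeholder("x")
w = placeholder("w")
b = placeholder("b")
y = add(multiply(w, x), b)  # only describes the work; nothing is computed yet

result = run(y, {x: 3.0, w: 2.0, b: 1.0})
print(result)  # 7.0
```

Building y costs almost nothing; the arithmetic happens only inside run, which plays the role that sess.run plays in the sessions in this post.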

Using only a softmax layer, and without touching the data in mnist.validation, MNIST recognition can be implemented as follows:

-VirtualBox:~$ python

...

>>> from tensorflow.examples.tutorials.mnist import input_data

>>> mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

Extracting MNIST_data/train-images-idx3-ubyte.gz

Extracting MNIST_data/train-labels-idx1-ubyte.gz

Extracting MNIST_data/t10k-images-idx3-ubyte.gz

Extracting MNIST_data/t10k-labels-idx1-ubyte.gz

# Implement the regression model

>>> import tensorflow as tf

>>> x = tf.placeholder(tf.float32, [None, 784])

>>> W = tf.Variable(tf.zeros([784, 10]))

>>> b = tf.Variable(tf.zeros([10]))

>>> y = tf.nn.softmax(tf.matmul(x, W) + b)

# Train the model

>>> y_ = tf.placeholder(tf.float32, [None, 10])

>>> cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

>>> train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

>>> sess = tf.InteractiveSession()

>>> tf.global_variables_initializer().run()

>>> for _ in range(1000):

...     batch_xs, batch_ys = mnist.train.next_batch(100)

...     sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

...

# Evaluate the model

>>> correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))

>>> accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

>>> print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))

0.9183

>>>

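Here, the InteractiveSession class makes it more flexible to structure the code. It lets you interleave operations that build the computation graph with operations that run it, which is convenient in interactive environments such as IPython. Without InteractiveSession, you would have to build the entire computation graph before starting a session and launching the graph.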
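The evaluation step in the session above (tf.argmax, tf.equal, tf.cast, tf.reduce_mean) boils down to comparing the index of the largest prediction with the index of the 1 in the one-hot label, then averaging. A plain-Python sketch with hypothetical helpers argmax and accuracy, run on made-up predictions:

```python
def argmax(values):
    """Index of the largest entry (the first one if there are ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(predictions, labels):
    """Fraction of rows whose predicted class matches the one-hot label."""
    hits = [1.0 if argmax(p) == argmax(t) else 0.0
            for p, t in zip(predictions, labels)]
    return sum(hits) / len(hits)


# Made-up example with 3 classes and 4 samples; the last one is misclassified.
predictions = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6], [0.5, 0.4, 0.1]]
labels = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
score = accuracy(predictions, labels)
print(score)  # 0.75
```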

MNIST in depth

Deep MNIST for Experts

Recognizing MNIST digits with a CNN

-VirtualBox:~$ python

...

# Load the data

>>> from tensorflow.examples.tutorials.mnist import input_data

>>> mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

Extracting MNIST_data/train-images-idx3-ubyte.gz

Extracting MNIST_data/train-labels-idx1-ubyte.gz

Extracting MNIST_data/t10k-images-idx3-ubyte.gz

Extracting MNIST_data/t10k-labels-idx1-ubyte.gz

>>> import tensorflow as tf

>>> sess = tf.InteractiveSession()

# Define the placeholders x and y_

>>> x = tf.placeholder(tf.float32, shape=[None, 784])

>>> y_ = tf.placeholder(tf.float32, shape=[None, 10])

# Define two helper functions for initialization

>>> def weight_variable(shape):

...     initial = tf.truncated_normal(shape, stddev=0.1)

...     return tf.Variable(initial)

...

>>> def bias_variable(shape):

...     initial = tf.constant(0.1, shape=shape)

...     return tf.Variable(initial)

...

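# Define the convolution and pooling functions

# The convolution uses a stride of 1 and zero padding (padding='SAME'), so the output has the same size as the input

# Pooling is plain max pooling over 2x2 blocks, so the output width and height are half those of the input

>>> def conv2d(x, W):

...     return tf.nn.conv2d(x, W, strides=[1,1,1,1], padding='SAME')

...

>>> def max_pool_2x2(x):

...     return tf.nn.max_pool(x, ksize=[1,2,2,1], strides=[1,2,2,1], padding='SAME')

...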
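To see why the fully connected layer further down expects 7*7*64 inputs, here is the size arithmetic as a plain-Python sketch. With padding='SAME' the output size along each spatial dimension is ceil(input / stride); same_output_size is a hypothetical helper name.

```python
import math


def same_output_size(input_size, stride):
    """Spatial output size of a conv or pool layer that uses padding='SAME'."""
    return int(math.ceil(float(input_size) / stride))


size = 28                                  # MNIST images are 28x28
after_conv1 = same_output_size(size, 1)    # stride-1 convolution keeps the size
after_pool1 = same_output_size(after_conv1, 2)
after_conv2 = same_output_size(after_pool1, 1)
after_pool2 = same_output_size(after_conv2, 2)
flat_features = after_pool2 * after_pool2 * 64  # 64 feature maps after layer 2

print(after_conv1, after_pool1, after_conv2, after_pool2)
print(flat_features)
```

Each 2x2 pooling halves the width and height (28 to 14 to 7), so the flattened input to the fully connected layer has 7*7*64 = 3136 values.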

# First convolutional layer: computes 32 features for each 5x5 patch

>>> W_conv1 = weight_variable([5,5,1,32])

>>> b_conv1 = bias_variable([32])

>>> x_image = tf.reshape(x, [-1,28,28,1])

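# In the reshaped x_image, the 2nd and 3rd dimensions are the image width and height, and the last dimension is the number of color channels (1 here because the images are grayscale; it would be 3 for RGB images)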

>>> h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

>>> h_pool1 = max_pool_2x2(h_conv1)

>>>

# Second convolutional layer: 64 features for each 5x5 patch

>>> W_conv2 = weight_variable([5,5,32,64])

>>> b_conv2 = bias_variable([64])

>>> h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

>>> h_pool2 = max_pool_2x2(h_conv2)

>>>

# Fully connected layer with 1024 neurons; the image size is now 7x7

>>> W_fc1 = weight_variable([7*7*64, 1024])

>>> b_fc1 = bias_variable([1024])

>>> h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])

>>> h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

>>>

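# To reduce overfitting, apply dropout before the output layer. A placeholder holds the probability that a neuron's output is kept during dropout.

# This makes it possible to turn dropout on during training and off during testing.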

>>> keep_prob = tf.placeholder(tf.float32)

>>> h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)
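What tf.nn.dropout does with keep_prob can be sketched in plain Python: each value is kept with probability keep_prob and scaled by 1/keep_prob, otherwise it is set to 0, so the expected sum stays the same. The dropout function below is my own illustration (including its rng parameter), not TensorFlow's code.

```python
import random


def dropout(values, keep_prob, rng=random):
    """Keep each value with probability keep_prob, scaling kept values up."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0
            for v in values]


activations = [1.0, 2.0, 3.0, 4.0]
training_output = dropout(activations, 0.5, random.Random(0))  # some entries zeroed
testing_output = dropout(activations, 1.0)                     # dropout switched off
print(training_output)
print(testing_output)
```

Feeding keep_prob: 1.0, as the evaluation steps of the session do, leaves the activations untouched.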

# Output (readout) layer; softmax is applied inside the loss function below

>>> W_fc2 = weight_variable([1024, 10])

>>> b_fc2 = bias_variable([10])

>>> y_conv = tf.matmul(h_fc1_drop, W_fc2) + b_fc2

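# Note: y_conv is deliberately left as raw logits, because tf.nn.softmax_cross_entropy_with_logits below applies softmax itself. Writing y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2) would only be appropriate with a hand-written cross-entropy such as -tf.reduce_sum(y_ * tf.log(y_conv)).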

>>>

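# Train and evaluate the model

# Use the more sophisticated ADAM optimizer in place of plain steepest gradient descent, and pass the extra keep_prob parameter in feed_dict to control the dropout rate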

>>> cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y_conv))

>>> train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

>>> correct_prediction = tf.equal(tf.argmax(y_conv,1), tf.argmax(y_,1))

>>> accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

>>> sess.run(tf.global_variables_initializer())

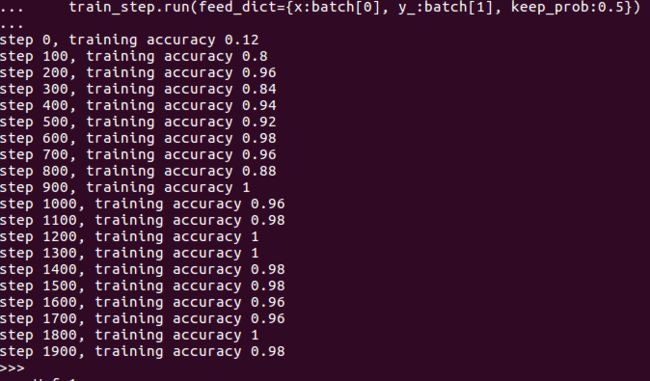

>>> for i in range(2000): # fewer iterations, to shorten training time

...     batch = mnist.train.next_batch(50)

...     if i%100 == 0:

...         train_accuracy = accuracy.eval(feed_dict={x:batch[0], y_:batch[1], keep_prob:1.0})

...         print("step %d, training accuracy %g"%(i, train_accuracy))

...     train_step.run(feed_dict={x:batch[0], y_:batch[1], keep_prob:0.5})

...

# Check the model's accuracy on the test set

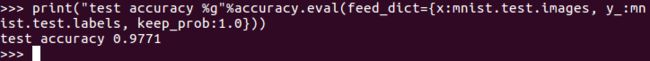

print("test accuracy %g"%accuracy.eval(feed_dict={x:mnist.test.images, y_:mnist.test.labels, keep_prob:1.0}))
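The loss in the session above is computed from raw logits rather than from softmax outputs. A plain-Python sketch of why that is convenient: combining softmax and cross-entropy through the log-sum-exp trick stays finite even for extreme scores. The function name cross_entropy_from_logits is hypothetical, and this is an illustration rather than TensorFlow's implementation.

```python
import math


def cross_entropy_from_logits(logits, label):
    """Cross-entropy of softmax(logits) against a one-hot label, computed stably."""
    shift = max(logits)
    # log(sum(exp(logits))) computed without overflowing.
    log_sum_exp = shift + math.log(sum(math.exp(v - shift) for v in logits))
    # log softmax_i = logit_i - log_sum_exp
    return -sum(t * (v - log_sum_exp) for v, t in zip(logits, label))


balanced = cross_entropy_from_logits([0.0, 0.0], [1.0, 0.0])
extreme = cross_entropy_from_logits([1000.0, 0.0], [0.0, 1.0])
print(balanced)
print(extreme)
```

Taking softmax first and then the logarithm would yield log(0) for the second case, whereas the combined form returns a large but finite loss.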

Tensorflow.CNN

In case GitHub becomes unreachable later, I first cloned the contents of the TensorFlow models repository:

mkdir TensorFlowModels

cd TensorFlowModels

git clone https://github.com/tensorflow/models.git

Introduction to how TensorFlow works

TensorFlow Mechanics 101

Skim the TensorFlow Mechanics 101 guide.

Convolutional neural networks

CNN

The CNN tutorial is based on the CIFAR-10 dataset. The task is to classify 32x32 RGB images into 10 categories: airplane, automobile, bird, cat, deer, dog, frog, horse, ship and truck.