ELK Log Collection Framework (Part 8): Functional Testing and Alert Integration

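1 Deploy the Log Module

1.1 Package

Reference post: ELK Log Collection Framework (Part 2): Log Module Development

Download the source code, package it as a jar, and upload it to /usr/local/ on the server.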

1.2 Deploy

```
[root@localhost software]# cd /usr/local
[root@localhost local]# ll
-rw-r--r--. 1 root root 252554263 3月 27 18:20 collector.jar
```

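1.3 Start

```
[root@localhost local]# nohup java -jar collector.jar &
```

The trailing `&` puts the process in the background, and `nohup` keeps it running after you log out, writing its console output to nohup.out.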

2 Start the Services

2.1 Start ZooKeeper

```
[root@localhost software]# /opt/module/zookeeper-3.4.10/bin/zkServer.sh start
```

2.2 Start Kafka

```
[root@localhost software]# /usr/local/kafka_2.12/bin/kafka-server-start.sh /usr/local/kafka_2.12/config/server.properties &
```

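2.3 Start Elasticsearch

```
[root@localhost software]# /usr/local/elasticsearch-7.4.2/bin/elasticsearch
```

Elasticsearch refuses to start as root, so run this command as a non-root user. It also stays in the foreground unless started with `-d`.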

2.4 Start Filebeat

```
[root@localhost software]# /usr/local/filebeat-7.4.2/filebeat &
```

2.5 Start Logstash

```
[root@localhost software]# nohup /usr/local/logstash-7.4.2/bin/logstash -f /usr/local/logstash-7.4.2/script/logstash-script.conf &
```
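Before moving on, it helps to confirm that each service is actually listening. The following is a minimal Python sketch that assumes default ports (ZooKeeper 2181, Kafka 9092, Elasticsearch 9200, Kibana 5601) and the log module on 8001, the port used in section 4. Adjust the host and ports to match your own configuration files.

```python
import socket

# Assumed default ports; change them if your configuration differs.
# Filebeat is not listed because it opens no listening port by default, and
# Logstash is skipped because its monitoring API binds to localhost by default.
SERVICES = {
    "zookeeper": 2181,
    "kafka": 9092,
    "elasticsearch": 9200,
    "kibana": 5601,
    "collector": 8001,  # the log module's port from section 4
}


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or unresolvable
        return False


def check_services(host: str, services: dict = SERVICES) -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in services.items()}


if __name__ == "__main__":
    for name, ok in check_services("192.168.51.4").items():
        print(f"{name:<14} {'UP' if ok else 'DOWN'}")
```

A service showing DOWN usually means it is still starting or is bound to a different address than the one being checked.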

3 Kibana Configuration

Open the Kibana management page at 192.168.51.4:5601 and choose Management -> Kibana -> Index Patterns.

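3.1 Configure the Full Log Index

3.1.1 Define the Index Pattern

Click Create index pattern and enter app-log-* as the index pattern.

If you switched Kibana to the Chinese UI in the earlier tutorial, select 索引模式 (Index Patterns) and create the index pattern from there.

3.1.2 Configure Settings

Set Time Filter field name to currentDateTime.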

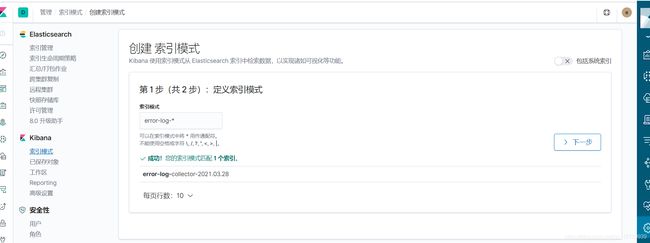

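3.2 Configure the Error Log Index

3.2.1 Define the Index Pattern

Click Create index pattern and enter err-log-* as the index pattern.

If you switched Kibana to the Chinese UI in the earlier tutorial, select 索引模式 (Index Patterns) and create the index pattern from there.

3.2.2 Configure Settings

Set Time Filter field name to currentDateTime.

In the Chinese UI, choose currentDateTime as the time filter field (时间筛选字段).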
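You can also create both index patterns without using the UI. The following is a minimal Python sketch. It assumes Kibana 7.x's saved objects API (`POST /api/saved_objects/index-pattern`, which requires the `kbn-xsrf` header) and the Kibana address 192.168.51.4:5601 used above.

```python
import json
import urllib.request

KIBANA = "http://192.168.51.4:5601"  # Kibana address from section 3


def index_pattern_request(kibana_url: str, title: str,
                          time_field: str = "currentDateTime") -> urllib.request.Request:
    """Build a POST to Kibana's saved objects API that creates an index pattern."""
    body = {"attributes": {"title": title, "timeFieldName": time_field}}
    return urllib.request.Request(
        f"{kibana_url}/api/saved_objects/index-pattern",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",  # Kibana rejects API writes without this header
        },
        method="POST",
    )


if __name__ == "__main__":
    for pattern in ("app-log-*", "err-log-*"):
        with urllib.request.urlopen(index_pattern_request(KIBANA, pattern)) as resp:
            print(pattern, resp.status)
```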

4 Access Test

4.1 Access the Log Module

Call the index-log endpoint: http://192.168.51.4:8001/index

Call the error-log endpoint: http://192.168.51.4:8001/err
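To produce enough log lines to see in Kafka and Kibana, you can call the two endpoints in a loop. The following is a minimal Python sketch that uses only the standard library. The repeat count and timeout are arbitrary choices, and the status code returned by `/err` depends on how the log module implements that endpoint.

```python
import urllib.error
import urllib.request
from collections import Counter

BASE_URL = "http://192.168.51.4:8001"  # log module address from section 4.1


def hit(base_url: str, path: str, repeat: int = 10, timeout: float = 5.0) -> Counter:
    """Call base_url + path `repeat` times and count the outcomes by status."""
    outcomes = Counter()
    for _ in range(repeat):
        try:
            with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
                outcomes[resp.status] += 1
        except urllib.error.HTTPError as err:
            outcomes[err.code] += 1  # an error status still means the call reached the app
        except OSError as err:
            outcomes[type(err).__name__] += 1  # connection problems, timeouts
    return outcomes


if __name__ == "__main__":
    print("/index", dict(hit(BASE_URL, "/index")))
    print("/err", dict(hit(BASE_URL, "/err")))
```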

4.2 Check the Kafka Data

Filebeat reads the log files and writes their contents into Kafka.

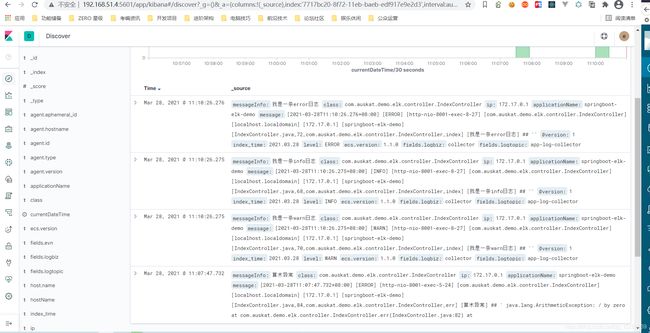

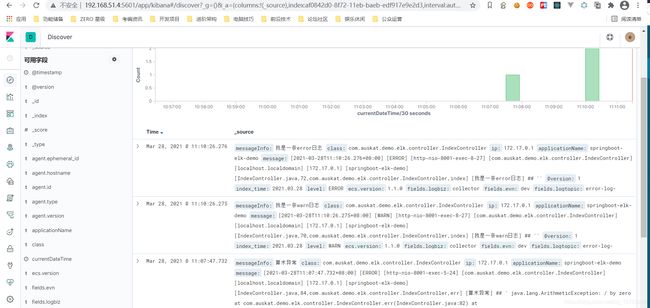

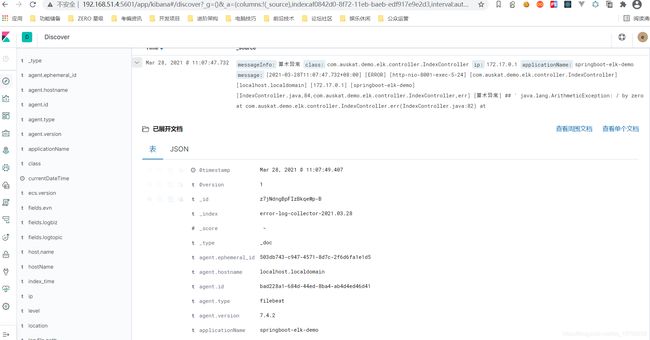
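Besides using the screenshots, you can inspect the topic contents with the console consumer that ships with Kafka. The Python wrapper below only builds and runs that command. The topic name `app-log-topic` is a hypothetical placeholder for the topic configured in Filebeat's `output.kafka` section, and 9092 is Kafka's default port.

```python
import subprocess

KAFKA_HOME = "/usr/local/kafka_2.12"  # install path from section 2.2


def consumer_command(kafka_home: str, bootstrap: str, topic: str,
                     max_messages: int = 10) -> list:
    """Build the argv for Kafka's console consumer, reading a topic from the start."""
    return [
        f"{kafka_home}/bin/kafka-console-consumer.sh",
        "--bootstrap-server", bootstrap,
        "--topic", topic,
        "--from-beginning",
        "--max-messages", str(max_messages),  # exit after this many records
    ]


if __name__ == "__main__":
    # "app-log-topic" is hypothetical: use the topic set in Filebeat's output.kafka.
    cmd = consumer_command(KAFKA_HOME, "192.168.51.4:9092", "app-log-topic")
    subprocess.run(cmd, check=True)
```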

4.3 Check the Kibana Data

4.3.1 Full Log Information

4.3.2 Error Log Information

5 Alert Integration

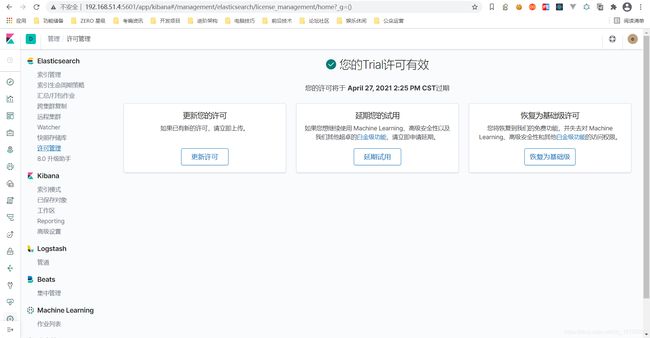

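5.1 Kibana License

I did not apply for a Kibana license. Instead, I used the 30-day Platinum trial. Watcher requires a Gold or higher license and is not available under the free Basic license.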
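You can also start and verify the trial through the Elasticsearch license API instead of the Kibana license page. The following is a minimal Python sketch. It assumes Elasticsearch listens on 192.168.51.4:9200, which is the host used throughout this post combined with Elasticsearch's default port.

```python
import json
import urllib.request

ES_URL = "http://192.168.51.4:9200"  # assumption: Elasticsearch on its default port


def start_trial_request(es_url: str) -> urllib.request.Request:
    """POST _license/start_trial; acknowledge=true accepts the trial terms."""
    return urllib.request.Request(
        f"{es_url}/_license/start_trial?acknowledge=true", method="POST")


def license_type(license_response: dict) -> str:
    """Extract the license type (e.g. 'basic' or 'trial') from a GET _license response."""
    return license_response["license"]["type"]


if __name__ == "__main__":
    with urllib.request.urlopen(start_trial_request(ES_URL)) as resp:
        print(json.load(resp))
    with urllib.request.urlopen(f"{ES_URL}/_license") as resp:
        print("active license:", license_type(json.load(resp)))
```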

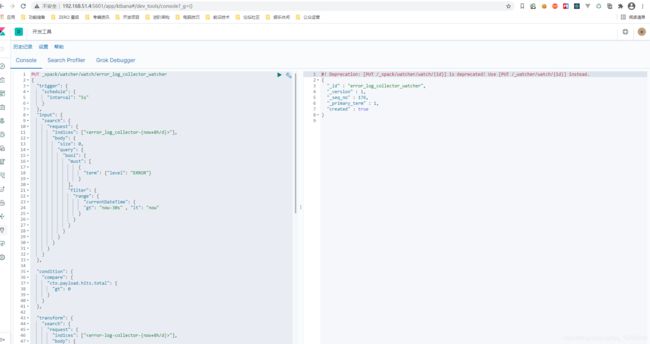

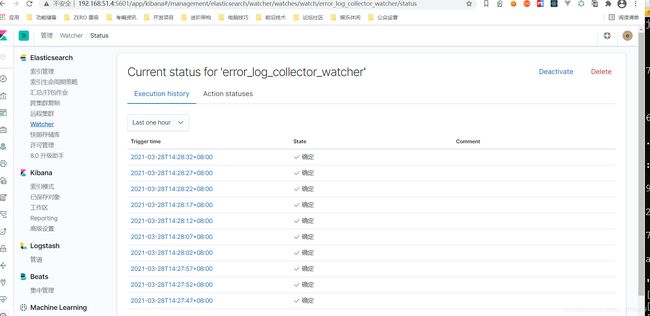

5.2 Watch Operations

Open Dev Tools and set up a watch.

5.2.1 Create a Watcher

```
# Create a watcher: the trigger checks the input data every 5 seconds
PUT _xpack/watcher/watch/error_log_collector_watcher
{
  "trigger": {
    "schedule": {
      "interval": "5s"
    }
  },
  "input": {
    "search": {
      "request": {
        "indices": [""],
        "body": {
          "size": 0,
          "query": {
            "bool": {
              "must": [
                { "term": { "level": "ERROR" } }
              ],
              "filter": {
                "range": {
                  "currentDateTime": { "gt": "now-30s", "lt": "now" }
                }
              }
            }
          }
        }
      }
    }
  },
  "condition": {
    "compare": {
      "ctx.payload.hits.total": { "gt": 0 }
    }
  },
  "transform": {
    "search": {
      "request": {
        "indices": [""],
        "body": {
          "size": 1,
          "query": {
            "bool": {
              "must": [
                { "term": { "level": "ERROR" } }
              ],
              "filter": {
                "range": {
                  "currentDateTime": { "gt": "now-30s", "lt": "now" }
                }
              }
            }
          },
          "sort": [
            { "currentDateTime": { "order": "desc" } }
          ]
        }
      }
    }
  },
  "actions": {
    "test_error": {
      "webhook": {
        "method": "POST",
        "url": "http://192.168.51.4:8001/accurateWatch",
        "body": "{\"title\": \"Exception error alert\", \"applicationName\": \"{{#ctx.payload.hits.hits}}{{_source.applicationName}}{{/ctx.payload.hits.hits}}\", \"level\": \"Alert level P1\", \"body\": \"{{#ctx.payload.hits.hits}}{{_source.messageInfo}}{{/ctx.payload.hits.hits}}\", \"executionTime\": \"{{#ctx.payload.hits.hits}}{{_source.currentDateTime}}{{/ctx.payload.hits.hits}}\"}"
      }
    }
  }
}
```
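Escaping the webhook body by hand is error-prone, because a single misplaced quote or brace breaks the watch. The following is a minimal Python sketch that builds the same watch as a dictionary, lets `json.dumps` produce the escaped body string, and prepares `PUT _watcher/watch/<id>`. That is the 7.x path; the `_xpack/watcher` form used above still works in 7.x but is deprecated. The original `indices` value is empty, so the index name passed in is an assumption: `err-log-*` matches the error index pattern from 3.2. The Elasticsearch address is also assumed.

```python
import json
import urllib.request


def each_hit(field: str) -> str:
    """Mustache section that renders _source.<field> for every hit in the payload."""
    return "{{#ctx.payload.hits.hits}}{{_source.%s}}{{/ctx.payload.hits.hits}}" % field


def error_search(index: str, size: int) -> dict:
    """Search input/transform: ERROR-level documents from the last 30 seconds."""
    body = {
        "size": size,
        "query": {"bool": {
            "must": [{"term": {"level": "ERROR"}}],
            "filter": {"range": {"currentDateTime": {"gt": "now-30s", "lt": "now"}}},
        }},
    }
    if size > 0:  # only the transform fetches a document, newest first
        body["sort"] = [{"currentDateTime": {"order": "desc"}}]
    return {"search": {"request": {"indices": [index], "body": body}}}


def build_watch(index: str, webhook_url: str) -> dict:
    alert = {
        "title": "Exception error alert",
        "applicationName": each_hit("applicationName"),
        "level": "Alert level P1",
        "body": each_hit("messageInfo"),
        "executionTime": each_hit("currentDateTime"),
    }
    return {
        "trigger": {"schedule": {"interval": "5s"}},
        "input": error_search(index, size=0),
        "condition": {"compare": {"ctx.payload.hits.total": {"gt": 0}}},
        "transform": error_search(index, size=1),
        "actions": {"test_error": {"webhook": {
            "method": "POST",
            "url": webhook_url,
            "body": json.dumps(alert),  # json.dumps does the quote escaping
        }}},
    }


def put_watch_request(es_url: str, watch_id: str, watch: dict) -> urllib.request.Request:
    """PUT _watcher/watch/<id>, the 7.x form of the _xpack/watcher endpoint."""
    return urllib.request.Request(
        f"{es_url}/_watcher/watch/{watch_id}",
        data=json.dumps(watch).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    # Assumptions: err-log-* is the error index; Elasticsearch runs on port 9200.
    watch = build_watch("err-log-*", "http://192.168.51.4:8001/accurateWatch")
    req = put_watch_request("http://192.168.51.4:9200", "error_log_collector_watcher", watch)
    with urllib.request.urlopen(req) as resp:
        print(json.load(resp))
```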

5.2.2 View a Watcher

```
GET _xpack/watcher/watch/error_log_collector_watcher
```

5.2.3 Delete a Watcher

```
DELETE _xpack/watcher/watch/error_log_collector_watcher
```

5.2.4 Execute a Watcher

```
POST _xpack/watcher/watch/error_log_collector_watcher/_execute
```

5.2.5 View the Execution Results

```
GET /.watcher-history*/_search?pretty
{
  "sort": [
    { "result.execution_time": "desc" }
  ],
  "query": {
    "match": { "watch_id": "error_log_collector_watcher" }
  }
}
```
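The history search returns long records. The following is a minimal Python sketch that runs the same query and keeps only three fields from each record: the execution time, the `state` field, and `result.condition.met`. It assumes Elasticsearch is at 192.168.51.4:9200. A `state` of `execution_not_needed` means the condition was false, so no action ran.

```python
import json
import urllib.request

HISTORY_QUERY = {
    "sort": [{"result.execution_time": "desc"}],
    "query": {"match": {"watch_id": "error_log_collector_watcher"}},
}


def summarize(response: dict) -> list:
    """Reduce a .watcher-history search response to (time, state, condition met)."""
    rows = []
    for hit in response["hits"]["hits"]:
        src = hit["_source"]
        result = src.get("result", {})
        rows.append((
            result.get("execution_time"),
            src.get("state"),
            result.get("condition", {}).get("met"),
        ))
    return rows


def fetch_history(es_url: str) -> dict:
    """Run the history query; POST to _search is equivalent to GET with a body."""
    req = urllib.request.Request(
        f"{es_url}/.watcher-history*/_search",
        data=json.dumps(HISTORY_QUERY).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    for row in summarize(fetch_history("http://192.168.51.4:9200")):  # assumed address
        print(*row)
```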

5.3 Custom Index Template

The template below uses the `ik_max_word` analyzer, which comes from the IK analysis plugin, so install that plugin in Elasticsearch first. The index name pattern goes in `index_patterns`, which replaces the older `template` key.

```
PUT _template/error-log-
{
  "index_patterns": ["error-log-*"],
  "order": 0,
  "settings": {
    "index": { "refresh_interval": "5s" }
  },
  "mappings": {
    "dynamic_templates": [
      {
        "message_field": {
          "match_mapping_type": "string",
          "path_match": "message",
          "mapping": { "norms": false, "type": "text", "analyzer": "ik_max_word", "search_analyzer": "ik_max_word" }
        }
      },
      {
        "throwable_field": {
          "match_mapping_type": "string",
          "path_match": "throwable",
          "mapping": { "norms": false, "type": "text", "analyzer": "ik_max_word", "search_analyzer": "ik_max_word" }
        }
      },
      {
        "string_fields": {
          "match_mapping_type": "string",
          "match": "*",
          "mapping": {
            "norms": false, "type": "text", "analyzer": "ik_max_word", "search_analyzer": "ik_max_word",
            "fields": { "keyword": { "type": "keyword" } }
          }
        }
      }
    ],
    "properties": {
      "hostName": { "type": "keyword" },
      "ip": { "type": "ip" },
      "level": { "type": "keyword" },
      "currentDateTime": { "type": "date" }
    }
  }
}
```
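The three dynamic templates share one text mapping, so you can generate the template instead of writing it out by hand. The following is a minimal Python sketch that builds the same body (using `index_patterns`) and prepares the `PUT _template/error-log-` request. The helper names are illustrative, and the Elasticsearch address you pass in is up to you.

```python
import json
import urllib.request

# Text mapping shared by the string fields; ik_max_word needs the IK analysis plugin.
IK_TEXT = {"norms": False, "type": "text",
           "analyzer": "ik_max_word", "search_analyzer": "ik_max_word"}


def dynamic_rule(name: str, match_key: str, pattern: str, extra=None) -> dict:
    """One dynamic_templates entry mapping matching string fields to IK_TEXT."""
    mapping = dict(IK_TEXT, **(extra or {}))  # copy, so IK_TEXT stays unchanged
    return {name: {"match_mapping_type": "string", match_key: pattern, "mapping": mapping}}


def error_log_template() -> dict:
    return {
        "index_patterns": ["error-log-*"],
        "order": 0,
        "settings": {"index": {"refresh_interval": "5s"}},
        "mappings": {
            "dynamic_templates": [
                dynamic_rule("message_field", "path_match", "message"),
                dynamic_rule("throwable_field", "path_match", "throwable"),
                dynamic_rule("string_fields", "match", "*",
                             {"fields": {"keyword": {"type": "keyword"}}}),
            ],
            "properties": {
                "hostName": {"type": "keyword"},
                "ip": {"type": "ip"},
                "level": {"type": "keyword"},
                "currentDateTime": {"type": "date"},
            },
        },
    }


def put_template_request(es_url: str, name: str, body: dict) -> urllib.request.Request:
    return urllib.request.Request(
        f"{es_url}/_template/{name}", data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"}, method="PUT")
```

To apply it, send `put_template_request("http://192.168.51.4:9200", "error-log-", error_log_template())` with `urllib.request.urlopen`. The address is an assumption based on the host used in this post.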

5.4 Alert Information

5.4.1 Alert List

5.4.2 Alert Details

5.4.3 Trigger an Alert
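In this setup, the alert is delivered to the log module's `/accurateWatch` endpoint. To see exactly what the webhook posts when an alert fires, without relying on the log module, you can run a throwaway receiver in its place. The following is a minimal Python sketch. Port 8002 is a hypothetical choice, and the watch's `url` would have to point at it. The field names are the ones in the watch's body template.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

ALERT_FIELDS = ("title", "applicationName", "level", "body", "executionTime")


def parse_alert(raw: bytes) -> dict:
    """Decode the webhook body and keep only the fields the watch sends."""
    payload = json.loads(raw.decode("utf-8"))
    return {key: payload.get(key) for key in ALERT_FIELDS}


class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/accurateWatch":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            alert = parse_alert(self.rfile.read(length))
        except ValueError:  # covers invalid JSON and invalid UTF-8
            self.send_error(400, "body is not valid JSON")
            return
        print(f"[{alert['level']}] {alert['applicationName']}: {alert['body']}")
        self.send_response(200)
        self.end_headers()


if __name__ == "__main__":
    # Hypothetical port: 8001 is already taken by the log module.
    HTTPServer(("0.0.0.0", 8002), AlertHandler).serve_forever()
```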

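6 Related Posts

- Part 1: ELK Log Collection Framework (Part 1): Architecture Design
- Part 2: ELK Log Collection Framework (Part 2): Log Module Development
- Part 3: ELK Log Collection Framework (Part 3): Filebeat Installation and Configuration
- Part 4: ELK Log Collection Framework (Part 4): Kafka Installation and Configuration
- Part 5: ELK Log Collection Framework (Part 5): Logstash Installation and Configuration
- Part 6: ELK Log Collection Framework (Part 6): Elasticsearch Installation and Configuration
- Part 7: ELK Log Collection Framework (Part 7): Kibana Installation and Configuration
- Part 8: ELK Log Collection Framework (Part 8): Functional Testing and Alert Integration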

Writing these posts takes real effort. If this one helped, please follow and like. Thanks!