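The most complete Scrapy guide on the web: learn the Python framework systematically (including crawling every image on a campus-beauty site), with source code, to sharpen your crawling skills and get past common anti-scraping measures

Personal WeChat official account: yk 坤帝

Reply "scrapy" in the account's message box to get the full source code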

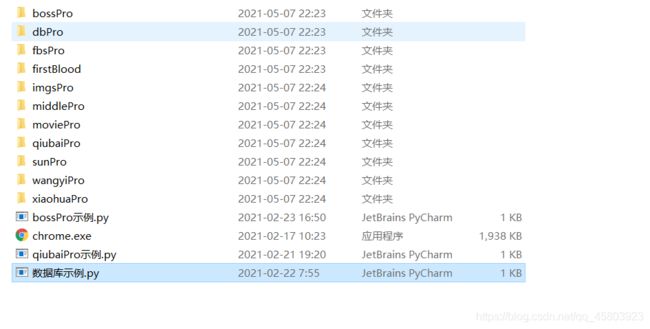

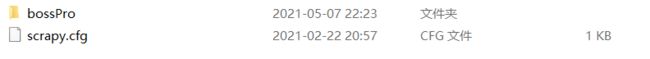

1.boss直聘爬虫(bossPro)

2.豆瓣爬虫(db)

3.fbsPro

4.百度爬虫(firstblood)

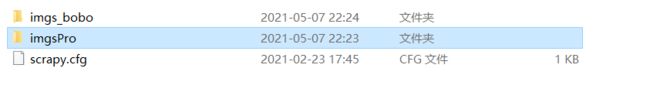

5.校花全站图片爬虫(imgsPro)

6.代理ip配置爬虫(middlePro)

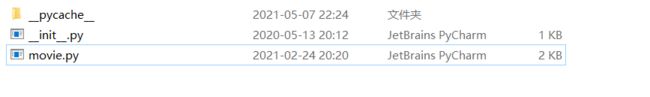

7.全站视频爬虫(moviePro)

8.糗图百科视频,图片,视频下载(qiubaiPro)

9.最新问政-阳光热线问政平台–相关政策全站爬虫(sunPro)

10.网易新闻全站信息爬虫(wangyiPro)

11.中国校花网,全站校花图片爬取(xiaohuaPro)

12.数据库示例

1. BOSS Zhipin crawler (bossPro)

# -*- coding: utf-8 -*-
# Sophomore year
# Tuesday, February 23, 2021
# Term resumes on March 7 after the winter break
import scrapy

from bossPro.items import BossproItem


class BossSpider(scrapy.Spider):
    name = 'boss'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://www.zhipin.com/c101010100/?query=python&ka=sel-city-101010100']
    # URL template for the following pages; %d is replaced by the page number
    url = 'https://www.zhipin.com/job_detail/bd1e60815ee5d3741nV92Nu1GVZY.html?ka=search_list_jname_1_blank&lid=8iXPDpH593w.search.%d'
    page_num = 2

    def parse_detail(self, response):
        # The item created in parse() arrives through the request's meta dict
        item = response.meta['item']
        job_desc = response.xpath('//*[@id="main"]/div[3]/div/div[2]/div[2]/div[1]/div//text()').extract()
        job_desc = ''.join(job_desc)
        item['job_desc'] = job_desc
        print(job_desc)
        yield item

    def parse(self, response):
        li_list = response.xpath('//*[@id="main"]/div/div[2]/ul/li')
        print(li_list)
        for li in li_list:
            item = BossproItem()
            # The original XPath lacked the "/" between the span and the a element
            job_name = li.xpath('.//span[@class="job-name"]/a/text()').extract_first()
            item['job_name'] = job_name
            print(job_name)
            href = li.xpath('.//span[@class="job-name"]/a/@href').extract_first()
            if href is None:
                continue
            # urljoin avoids a doubled slash when href already starts with "/"
            detail_url = response.urljoin(href)
            # Hand the half-filled item to parse_detail via meta
            yield scrapy.Request(detail_url, callback=self.parse_detail, meta={'item': item})

        # Request the following pages (2 and 3) with the same callback
        if self.page_num <= 3:
            new_url = self.url % self.page_num
            self.page_num += 1
            yield scrapy.Request(new_url, callback=self.parse)
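Links scraped from a listing page are often relative paths. The following is a minimal sketch of why joining them with `urljoin` tends to be safer than plain string concatenation. It uses only the standard library; the helper name `build_detail_url` and the sample `href` are hypothetical and do not come from the project.

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.zhipin.com/'


def build_detail_url(href):
    """Return an absolute URL for a (possibly relative) href, or None if it is missing."""
    if not href:
        return None
    # urljoin handles a leading "/" correctly and leaves absolute URLs untouched
    return urljoin(BASE_URL, href)


# Hypothetical href, as it might appear on a listing page
print(build_detail_url('/job_detail/example.html'))
```

Plain concatenation of the base URL and this `href` would produce `https://www.zhipin.com//job_detail/example.html`, with a doubled slash.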

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class BossproItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    job_name = scrapy.Field()
    job_desc = scrapy.Field()

middlewares.py

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals


class BossproSpiderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, dict or Item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Request, dict
        # or Item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn’t have a response associated.

        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class BossproDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either;
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


class BossproPipeline:
    def process_item(self, item, spider):
        # Print each item, then pass it on to the next pipeline (if any)
        print(item)
        return item
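The pipeline above only prints each item. As a minimal sketch of how the same hook could persist items instead, the class below appends every item to a JSON Lines file. The class name `JsonLinesPipeline` and the default file name `jobs.jsonl` are assumptions made for illustration, not part of the project; `open_spider`, `process_item` and `close_spider` are the hook names Scrapy calls on a pipeline.

```python
import json


class JsonLinesPipeline:
    """Hypothetical pipeline: write every item as one JSON object per line."""

    def __init__(self, path='jobs.jsonl'):
        self.path = path
        self.fp = None

    def open_spider(self, spider):
        # Called once when the spider starts: open the output file
        self.fp = open(self.path, 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # dict(item) works for scrapy.Item objects as well as plain dicts
        self.fp.write(json.dumps(dict(item), ensure_ascii=False) + '\n')
        return item  # hand the item on to the next pipeline

    def close_spider(self, spider):
        # Called once when the spider finishes: release the file handle
        if self.fp is not None:
            self.fp.close()
```

To use such a class it would have to be registered in `ITEM_PIPELINES`, just like `BossproPipeline`.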

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for bossPro project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'bossPro'

SPIDER_MODULES = ['bossPro.spiders']

NEWSPIDER_MODULE = 'bossPro.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

# SPIDER_MIDDLEWARES = {

# 'bossPro.middlewares.BossproSpiderMiddleware': 543,

# }

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'bossPro.middlewares.BossproDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'bossPro.pipelines.BossproPipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

2. Douban crawler (db)

# -*- coding: utf-8 -*-
# Sophomore year
# March 4, 2021
# Term resumes on March 7 after the winter break
import scrapy


class DbSpider(scrapy.Spider):
    name = 'db'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://www.douban.com/group/topic/157797102/']

    def parse(self, response):
        # Each li under #comments is one reply in the topic
        li_list = response.xpath('//*[@id="comments"]/li')
        # print(li_list)
        for li in li_list:
            # print(li)
            # Absolute path of the target element, for reference:
            #/html/body/div[3]/div[1]/div/div[1]/ul[2]/li[1]/div[2]/p
            comments = li.xpath('./div[2]/p/text()').extract_first()
            print(comments)

3. fbsPro

# -*- coding: utf-8 -*-
# Sophomore year
# Wednesday, February 24, 2021
# Term resumes on March 7 after the winter break
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import Rule
from scrapy_redis.spiders import RedisCrawlSpider

from fbsPro.items import FbsproItem


class FbsSpider(RedisCrawlSpider):
    name = 'fbs'
    #allowed_domains = ['www.xxx.com']
    #start_urls = ['http://www.xxx.com/']
    # Redis key from which the spider reads its start URLs (replaces start_urls)
    redis_key = 'sun'

    rules = (
        Rule(LinkExtractor(allow=r'Items/'), callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        #item['domain_id'] = response.xpath('//input[@id="sid"]/@value').get()
        #item['name'] = response.xpath('//div[@id="name"]').get()
        #item['description'] = response.xpath('//div[@id="description"]').get()
        item = FbsproItem()
        yield item
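A `RedisCrawlSpider` only becomes distributed once the project settings point the scheduler and the duplicate filter at Redis. The fragment below is a sketch of the settings.py additions that scrapy-redis expects. The host and port are assumptions (a Redis server on the local machine), and the source does not show the project's actual settings.

```python
# settings.py additions for scrapy-redis (a sketch; host and port are assumptions)

# Share the request queue and the duplicate filter through Redis
SCHEDULER = 'scrapy_redis.scheduler.Scheduler'
DUPEFILTER_CLASS = 'scrapy_redis.dupefilter.RFPDupeFilter'
# Keep the queue and the set of seen requests in Redis when the spider stops
SCHEDULER_PERSIST = True

# Store scraped items in Redis so that every worker writes to the same place
ITEM_PIPELINES = {
    'scrapy_redis.pipelines.RedisPipeline': 400,
}

# Assumed location of the Redis server
REDIS_HOST = '127.0.0.1'
REDIS_PORT = 6379
```

With these in place the spider waits until a start URL is pushed under its `redis_key`, for example with `lpush sun <start-url>` in `redis-cli`.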

4. Baidu crawler (firstblood)

first.py

# -*- coding: utf-8 -*-
# Sophomore year
# Friday, February 19, 2021
# Term resumes on March 7 after the winter break
import scrapy


class FirstSpider(scrapy.Spider):
    name = 'first'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://www.baidu.com/', 'https://www.sogou.com']

    def parse(self, response):
        # Called once per start URL; prints the response object (status and URL)
        print(response)

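5. Site-wide campus-beauty image crawler (imgsPro)

# -*- coding: utf-8 -*-
# Sophomore year
# Tuesday, February 23, 2021
# Term resumes on March 7 after the winter break
import scrapy

from imgsPro.items import ImgsproItem


class ImgSpider(scrapy.Spider):
    name = 'img'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://sc.chinaz.com/tupian/']

    def parse(self, response):
        div_list = response.xpath('//div[@id="container"]/div')
        for div in div_list:
            # The address is read from src2 rather than src, presumably
            # because the page lazy-loads its images
            src = div.xpath('./div/a/img/@src2').extract_first()
            if src is None:
                # Skip entries without an image instead of failing on None
                continue
            # The attribute holds a protocol-relative URL, so add the scheme
            src = 'https:' + src
            print(src)
            item = ImgsproItem()
            item['src'] = src
            yield item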
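The spider only yields the image address. Downloading is left to a pipeline, and Scrapy's `ImagesPipeline` is the usual choice for that. Whatever does the download needs a file name for each image. The sketch below shows one way to derive it from the URL with the standard library. The helper name `image_name_from_url` and the sample URL are hypothetical.

```python
import os
from urllib.parse import urlparse


def image_name_from_url(src):
    """Derive a file name from an image URL, ignoring any query string."""
    path = urlparse(src).path  # drops the scheme, host and "?..." part
    return os.path.basename(path)


# Hypothetical image address
print(image_name_from_url('https://example.com/pics/cat_s.jpg?v=2'))
```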

6. Proxy IP configuration crawler (middlePro)

middle.py

# -*- coding: utf-8 -*-
# Sophomore year
# February 23, 2021
# Term resumes on March 7 after the winter break
import scrapy


class MiddleSpider(scrapy.Spider):
    name = 'middle'
    #allowed_domains = ['www.xxx.com']
    # The result page for the query "ip" reports the IP address the request came from
    start_urls = ['http://www.baidu.com/s?wd=ip']

    def parse(self, response):
        page_text = response.text
        # Save the page so that the reported IP can be checked by hand
        with open('ip.html', 'w', encoding='utf-8') as fp:
            fp.write(page_text)
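The spider itself contains no proxy logic. In Scrapy that normally lives in a downloader middleware. The class below is a minimal sketch of such a middleware, not the project's actual middlewares.py: the class name `RandomProxyMiddleware` and the proxy addresses are made up for illustration. It relies on the fact that Scrapy's built-in proxy support reads the proxy address from `request.meta['proxy']`.

```python
import random

# Hypothetical proxy addresses; replace them with proxies you are allowed to use
PROXIES = ['http://10.0.0.1:8888', 'http://10.0.0.2:8888']


class RandomProxyMiddleware:
    """Downloader middleware sketch: route each request through a random proxy."""

    def process_request(self, request, spider):
        # Scrapy's built-in HttpProxyMiddleware picks the proxy up from meta
        request.meta['proxy'] = random.choice(PROXIES)
        return None  # None means: continue processing this request normally
```

The class would still have to be enabled under `DOWNLOADER_MIDDLEWARES` in settings.py. Afterwards the saved `ip.html` should show the proxy's address rather than your own.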

7. Site-wide video crawler (moviePro)

movie.py

# -*- coding: utf-8 -*-
# Sophomore year
# Wednesday, February 24, 2021
# Term resumes on March 7 after the winter break
import scrapy
from redis import Redis
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from moviePro.items import MovieproItem


class MovieSpider(CrawlSpider):
    name = 'movie'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['http://www.4567kan.com/frim/index1.html']

    rules = (
        Rule(LinkExtractor(allow=r'/frim/index1-\d+\.html'), callback='parse_item', follow=True),
    )
    # Redis connection used to remember which detail URLs were already crawled
    conn = Redis(host='127.0.0.1', port=6379)

    def parse_item(self, response):
        li_list = response.xpath('/html/body/div[1]/div/div/div/div[2]/ul/li')
        for li in li_list:
            detail_url = 'http://www.4567kan.com/' + li.xpath('./div/a/@href').extract_first()
            # sadd returns 1 if the URL was new to the set, 0 if it was already there
            ex = self.conn.sadd('urls', detail_url)
            if ex == 1:
                print('This URL has not been crawled yet; fetching its data')
                yield scrapy.Request(url=detail_url, callback=self.parse_detail)
            else:
                print('Nothing has been updated; no new data to crawl')

    def parse_detail(self, response):
        item = MovieproItem()
        item['name'] = response.xpath('/html/body/div[1]/div/div/div/div[2]/h1/text()').extract_first()
        item['desc'] = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[5]/span[2]//text()').extract()
        item['desc'] = ''.join(item['desc'])
        yield item
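The incremental crawl depends on one property of the Redis `SADD` command: it reports whether the member was new. The sketch below reproduces that behaviour with an in-memory stand-in so that it runs without a Redis server. The class `SeenUrls` and the example URLs are hypothetical.

```python
class SeenUrls:
    """In-memory stand-in for a Redis set, mirroring the return value of SADD."""

    def __init__(self):
        self._seen = set()

    def sadd(self, url):
        """Return 1 if url was newly added, 0 if it was already present."""
        if url in self._seen:
            return 0
        self._seen.add(url)
        return 1


seen = SeenUrls()
for url in ['http://example.com/a', 'http://example.com/b', 'http://example.com/a']:
    # Only URLs reported as new would be scheduled for crawling
    status = 'new' if seen.sadd(url) == 1 else 'already crawled'
    print(url, status)
```

Unlike this stand-in, the real Redis set survives between runs, which is what lets a later run skip pages an earlier run already fetched.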

8. Qiushibaike videos and images download (qiubaiPro)

qiubai.py

# -*- coding: utf-8 -*-
# Sophomore year
# Friday, February 19, 2021
# Term resumes on March 7 after the winter break
import scrapy

from qiubaiPro.items import QiubaiproItem


class QiubaiSpider(scrapy.Spider):
    name = 'qiubai'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://www.qiushibaike.com/text/']

    # Earlier version: collect plain dicts and return them as a list
    # def parse(self, response):
    #     div_list = response.xpath('//div[@id="content"]/div[1]/div[2]/div')
    #     all_data = []
    #     for div in div_list:
    #         auther = div.xpath('./div[1]/a[2]/h2/text()')[0].extract()
    #         #auther = div.xpath('./div[1]/a[2]/h2/text()').extract_first()
    #         content = div.xpath('./a[1]/div/span//text()').extract()
    #         content = ','.join(content)
    #         print(auther,content)
    #         dic = {
    #             'auther': auther,
    #             'content': content
    #         }
    #         all_data.append(dic)
    #     return all_data

    # Sophomore year
    # Sunday, February 21, 2021
    # Term resumes on March 7 after the winter break
    def parse(self, response):
        div_list = response.xpath('//div[@id="content"]/div[1]/div[2]/div')
        for div in div_list:
            # Anonymous users have no profile link, so their name sits under
            # span/h2; the union matches both layouts, and extract_first()
            # returns None instead of raising when nothing matches
            auther = div.xpath('./div[1]/a[2]/h2/text() | ./div[1]/span/h2/text()').extract_first()
            content = div.xpath('./a[1]/div/span//text()').extract()
            content = ','.join(content)

            item = QiubaiproItem()
            item['auther'] = auther
            item['content'] = content
            yield item

9. Sunshine Hotline government-inquiry platform: site-wide crawler for the latest inquiries and related policies (sunPro)

sun.py

# -*- coding: utf-8 -*-
# Sophomore year
# Wednesday, February 24, 2021
# Term resumes on March 7 after the winter break
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule


class SunSpider(CrawlSpider):
    name = 'sun'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['http://wz.sun0769.com/political/index/politicsNewest?id=1&page=1']

    # Extract every link whose URL matches the pagination pattern
    link = LinkExtractor(allow=r'id=1&page=\d+')

    rules = (
        # follow=True applies the rule again to the pages it discovers
        Rule(link, callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        print(response)
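The `allow` argument of a `LinkExtractor` is a regular expression that is searched for inside each link's URL. The sketch below applies the same pattern with the standard `re` module to show which links such a rule would follow. The three sample links are hypothetical.

```python
import re

PAGE_PATTERN = re.compile(r'id=1&page=\d+')

# Hypothetical links, as they might appear on a listing page
links = [
    'http://wz.sun0769.com/political/index/politicsNewest?id=1&page=2',
    'http://wz.sun0769.com/political/index/politicsNewest?id=1&page=15',
    'http://wz.sun0769.com/political/politics/index?id=449762',
]

# Keep only the links that contain the pagination pattern
followed = [link for link in links if PAGE_PATTERN.search(link)]
print(len(followed))
```

Only the two pagination links match. The third link has an `id` parameter but no `page` parameter, so the rule would ignore it.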

10. NetEase News site-wide crawler (wangyiPro)

wangyi.py

# -*- coding: utf-8 -*-
# Sophomore year
# Tuesday, February 23, 2021
# Term resumes on March 7 after the winter break
import scrapy
from selenium import webdriver

from wangyiPro.items import WangyiproItem


class WangyiSpider(scrapy.Spider):
    name = 'wangyi'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['https://news.163.com/']
    # URLs of the news sections to crawl
    models_urls = []

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Selenium browser instance (not used directly in this file)
        self.bro = webdriver.Chrome()

    def parse(self, response):
        li_list = response.xpath('//*[@id="js_festival_wrap"]/div[3]/div[2]/div[2]/div[2]/div/ul/li')
        # Positions of the wanted sections in the navigation list
        alist = [3, 4, 6, 7, 8]
        for index in alist:
            model_url = li_list[index].xpath('./a/@href').extract_first()
            self.models_urls.append(model_url)

        for url in self.models_urls:
            yield scrapy.Request(url, callback=self.parse_model)

    def parse_model(self, response):
        div_list = response.xpath('/html/body/div[1]/div[3]/div[4]/div[1]/div/div/ul/li/div/div')
        for div in div_list:
            title = div.xpath('./div/div[1]/h3/a/text()').extract_first()
            new_detail_url = div.xpath('./div/div[1]/h3/a/@href').extract_first()

            item = WangyiproItem()
            item['title'] = title
            # Pass the item along in meta; without it parse_detail cannot find it
            yield scrapy.Request(url=new_detail_url, callback=self.parse_detail, meta={'item': item})

    def parse_detail(self, response):
        content = response.xpath('//*[@id="content"]//text()').extract()
        content = ''.join(content)
        # The original read response.mata, which raises AttributeError
        item = response.meta['item']
        item['content'] = content
        yield item

    def closed(self, reason):
        # Quit the browser when the spider finishes
        self.bro.quit()

11. China Xiaohua (campus beauty) site: crawl all images on the site (xiaohuaPro)

xiaohua.py

# -*- coding: utf-8 -*-
# Sophomore year
# Monday, February 22, 2021
# Term resumes on March 7 after the winter break
import scrapy


class XiaohuaSpider(scrapy.Spider):
    name = 'xiaohua'
    #allowed_domains = ['www.xxx.com']
    start_urls = ['http://www.xiaohuar.com/daxue/']
    # URL template for the following pages; %d is replaced by the page number
    url = 'http://www.xiaohuar.com/daxue/index_%d.html'
    page_num = 2

    def parse(self, response):
        li_list = response.xpath('//*[@id="wrap"]/div/div/div')
        for li in li_list:
            img_name = li.xpath('./div/div[1]/a/text()').extract_first()
            print(img_name)
            #li.xpath('./div/a/img/@src')

        # Request pages 2 to 11 one after another with the same callback
        if self.page_num <= 11:
            new_url = self.url % self.page_num
            self.page_num += 1
            yield scrapy.Request(new_url, callback=self.parse)
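The spider pages through the site by keeping a counter and filling it into a URL template. As a small sketch of what that loop requests, the generator below builds the same URLs up front. The function name `page_urls` is hypothetical; the template and the page range 2 to 11 are taken from the spider.

```python
URL_TEMPLATE = 'http://www.xiaohuar.com/daxue/index_%d.html'


def page_urls(first_page, last_page):
    """Yield the listing-page URLs for first_page..last_page, inclusive."""
    for page_num in range(first_page, last_page + 1):
        yield URL_TEMPLATE % page_num


urls = list(page_urls(2, 11))
print(len(urls), urls[0], urls[-1])
```

Together with the start URL this covers eleven listing pages.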

middlewares.py

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals


class XiaohuaproSpiderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, dict or Item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Request, dict
        # or Item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn’t have a response associated.

        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class XiaohuaproDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either;
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for xiaohuaPro project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'xiaohuaPro'

SPIDER_MODULES = ['xiaohuaPro.spiders']

NEWSPIDER_MODULE = 'xiaohuaPro.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

# Obey robots.txt rules

LOG_LEVEL = 'ERROR'

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'xiaohuaPro.middlewares.XiaohuaproSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'xiaohuaPro.middlewares.XiaohuaproDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'xiaohuaPro.pipelines.XiaohuaproPipeline': 300,

#}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

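12. Database example

# Sophomore year
# Sunday, February 21, 2021
# Term resumes on March 7 after the winter break
import pymysql

# Connect to the database
# host: IP address of the machine running the MySQL server
# user: user name
# password: password
# database: name of the database to connect to
# (recent PyMySQL releases accept these as keyword arguments only)
# db = pymysql.connect(host="localhost", user="root", password="200829", database="wj")
db = pymysql.connect(host="192.168.31.19", user="root", password="200829", database="wj")

# Create a cursor object
cursor = db.cursor()

sql = "select version()"

# Execute the SQL statement
cursor.execute(sql)

# Fetch the returned row
data = cursor.fetchone()
print(data)

# Disconnect
cursor.close()
db.close()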
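The example above only asks the server for its version. Storing scraped items follows the same connect, cursor, execute, close sequence, with the values passed as query parameters rather than pasted into the SQL string. The sketch below shows that pattern with the standard library's `sqlite3` module, used here instead of pymysql so that it runs without a MySQL server. The table and the sample item are hypothetical. Note that pymysql uses `%s` as its placeholder where sqlite3 uses `?`.

```python
import sqlite3

# In-memory database, so the sketch runs without any server
conn = sqlite3.connect(':memory:')
cursor = conn.cursor()
cursor.execute('CREATE TABLE jobs (job_name TEXT, job_desc TEXT)')

# Hypothetical scraped item; note the quotes inside the description
item = {'job_name': 'Python developer', 'job_desc': "Builds crawlers; knows 'Scrapy'"}

# Placeholders let the driver escape the values safely
cursor.execute(
    'INSERT INTO jobs (job_name, job_desc) VALUES (?, ?)',
    (item['job_name'], item['job_desc']),
)
conn.commit()

cursor.execute('SELECT job_name, job_desc FROM jobs')
rows = cursor.fetchall()
print(rows)

cursor.close()
conn.close()
```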