[Deep Learning Basics] Implementing ResNet in PyTorch: a hands-on walkthrough


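- 1 Key points from the paper
  - 1.1 Core idea: the Residual Block
  - 1.2 BN layers and activation units
  - 1.3 ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-152
- 2. PyTorch implementation
  - 2.1 Implementing the BN_CONV_ReLU structure
  - 2.2 Implementing the basic block
  - 2.3 Implementing the bottleneck block
  - 2.4 Implementing the ResNet class
  - 2.5 Defining the ResNet builder functions
  - 2.6 Testing the network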

This post is light on prose. The text that is here focuses on the points that need attention when reproducing the paper.

1 Key points from the paper

1.1 Core idea: the Residual Block

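The core idea is to learn residuals. A stack of layers in a conventional CNN can be viewed as learning the target function y = F(w, x) directly, whereas in ResNet the target function can be viewed as y = F(w, x) + x. Kaiming He and his co-authors found that, compared with plain architectures, learning residuals lets networks be made much deeper while remaining easier to train. The paper attributes this to easier optimization: it addresses the "degradation" problem, in which deeper plain networks end up with higher training error.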
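The formula y = F(w, x) + x can be sketched as a generic wrapper module. This is a minimal illustration, not the author's code. The class name `Residual` is hypothetical, and the sketch assumes F preserves the shape of its input.

```python
import torch
import torch.nn as nn


class Residual(nn.Module):
    """Hypothetical wrapper: computes y = F(x) + x for a shape-preserving F."""

    def __init__(self, fn: nn.Module):
        super().__init__()
        self.fn = fn

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The wrapped layers only need to learn the residual F(x) = y - x
        return self.fn(x) + x


# If F outputs zeros, the block reduces to the identity mapping.
conv = nn.Conv2d(8, 8, 3, padding=1)
nn.init.zeros_(conv.weight)
nn.init.zeros_(conv.bias)
block = Residual(conv)

x = torch.randn(2, 8, 16, 16)
y = block(x)
print(torch.equal(y, x))  # True
```

This also illustrates the paper's argument that residuals are easier to learn. If the identity mapping is close to optimal, the layers only need to push F toward zero.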

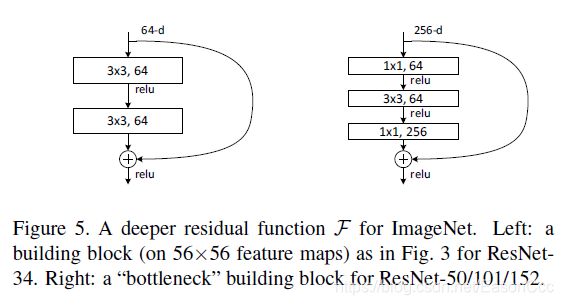

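The paper proposes two kinds of block, shown in the figure above. We will call the one on the left the basic block and the one on the right the bottleneck. Both wrap a shortcut around a stack of convolutional layers and add the block's input to the output of that stack.

Looking at the structure more closely:

- The basic block contains two 3x3 convolutional layers with the same number of filters.
- The bottleneck contains three layers. The first two have the same number of filters, and the third has four times as many. The second layer is 3x3, and the other two are 1x1.

Put this way, the structure is quite simple (in hindsight).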
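Counting convolution weights shows why the bottleneck is used in the deeper models. The sketch below ignores BN parameters and biases. It compares a basic block at 64 channels with a bottleneck whose input and output have 256 channels and whose inner layers have 64, which is the pair shown in the figure. The helper `conv_weights` is hypothetical.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Number of weights in a k x k convolution (no bias)."""
    return k * k * c_in * c_out


# Basic block: two 3x3 convs, 64 -> 64 -> 64
basic = conv_weights(3, 64, 64) + conv_weights(3, 64, 64)

# Bottleneck: 1x1 reduce (256 -> 64), 3x3 (64 -> 64), 1x1 expand (64 -> 256)
bottleneck = conv_weights(1, 256, 64) + conv_weights(3, 64, 64) + conv_weights(1, 64, 256)

print(basic, bottleneck)  # 73728 69632
```

The bottleneck works on features that are four times wider at its input and output. Even so, it has slightly fewer weights than the basic block.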

1.2 BN layers and activation units

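The paper applies BN right after each convolution and before the ReLU activation. One point is not spelled out, though: the shortcut. Should BN be applied separately to the convolution output and to the input before they are added, or once to the sum? I did not find an answer in the paper, and I suspect the choice matters little.

The code in section 2 effectively takes the first route:

- The residual branch ends with its own BN.
- The shortcut is either the untouched input or a 1x1 projection followed by its own BN.
- The two are added, and ReLU is applied after the addition.

This is also the layout used by torchvision's ResNet.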
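The two candidate placements can be written side by side. This is a standalone sketch with hypothetical function names and an identity shortcut. `residual` stands in for the output of the block's last convolution (before BN), and `shortcut` stands in for the block input.

```python
import torch
import torch.nn as nn

C = 16
bn_res = nn.BatchNorm2d(C)  # BN on the residual branch (option 1)
bn_sum = nn.BatchNorm2d(C)  # single BN applied to the sum (option 2)


def bn_then_add(residual, shortcut):
    # Option 1: BN on the residual branch, add the identity shortcut, then ReLU
    return torch.relu(bn_res(residual) + shortcut)


def add_then_bn(residual, shortcut):
    # Option 2: add first, then one BN on the sum, then ReLU
    return torch.relu(bn_sum(residual + shortcut))


residual = torch.randn(4, C, 8, 8)  # stand-in for the last conv's output
shortcut = torch.randn(4, C, 8, 8)  # stand-in for the block input x
a = bn_then_add(residual, shortcut)
b = add_then_bn(residual, shortcut)
print(a.shape == b.shape)
```

Both options produce tensors of the same shape, so either is easy to implement. They differ in what reaches the ReLU: option 1 passes the identity path through un-normalized, while option 2 normalizes the whole sum.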

1.3 ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-152

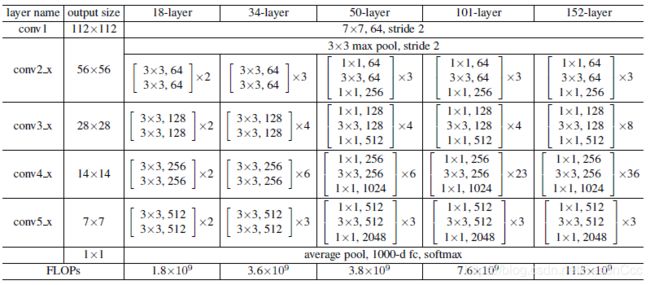

原文提出了这五种网络,可以根据计算性能挑选合适的实现。下面这张图也是复现需要特别关注的:

分析:

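At a high level, all five networks consist of five groups, which the authors name conv1, conv2_x, conv3_x, conv4_x and conv5_x. The output size of each group is the same across all five networks: 112x112, 56x56, 28x28, 14x14 and 7x7 respectively. In other words, each group halves the spatial size.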

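Working through the groups:

- **conv1.** The input is 224x224, and conv1 is a 7x7 convolution with stride=2 that produces a 112x112 output. This tells us conv1 uses padding=3.
- **conv2_x.** It starts with a 3x3 max pooling layer with stride 2 (padding=1 in the code), which takes 112x112 down to 56x56. After that, its 3x3 convolutions keep the size unchanged, so they use same padding (padding=1) with stride=1.
- **conv3_x, conv4_x and conv5_x.** Each halves the size without a pooling layer. They also use same padding, with stride=2 in the first block of each group (the block that downsamples) and stride=1 everywhere else.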
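The size arithmetic above follows the standard output-size formula for convolution and pooling: out = floor((in + 2*padding - kernel) / stride) + 1. The sketch below traces a 224x224 input through the stem and the four groups. The helper `out_size` is hypothetical.

```python
def out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


s = out_size(224, kernel=7, stride=2, padding=3)  # conv1: 7x7, stride 2
sizes = [s]
s = out_size(s, kernel=3, stride=2, padding=1)    # 3x3 max pool, stride 2 (start of conv2_x)
s = out_size(s, kernel=3, stride=1, padding=1)    # conv2_x: 3x3, stride 1, same padding
sizes.append(s)
for _ in range(3):                                # conv3_x .. conv5_x: first 3x3 conv has stride 2
    s = out_size(s, kernel=3, stride=2, padding=1)
    sizes.append(s)
print(sizes)  # [112, 56, 28, 14, 7]
```

The same formula confirms padding=3 for conv1. With padding=2, the first output would be (224 + 4 - 7) // 2 + 1 = 111.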

With these parameters in hand, we can start building the network.

2. PyTorch implementation

2.1 Implementing the BN_CONV_ReLU structure

import torch
import torch.nn as nn
import torch.nn.functional as F
from torchsummary import summary  # third-party package: pip install torchsummary


class BN_Conv2d(nn.Module):
    """
    Conv2d -> BatchNorm2d -> activation.
    activation="relu" (default) appends a new ReLU, None appends nothing,
    and any other nn.Module is appended as given.
    """

    def __init__(self, in_channels, out_channels, kernel_size, stride, padding,
                 dilation=1, groups=1, bias=False, activation="relu"):
        super(BN_Conv2d, self).__init__()
        layers = [nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, stride=stride,
                            padding=padding, dilation=dilation, groups=groups, bias=bias),
                  nn.BatchNorm2d(out_channels)]
        if activation is not None:
            # Build a fresh ReLU per layer; a module used as a default argument
            # would otherwise be one instance shared by every BN_Conv2d
            layers.append(nn.ReLU(inplace=True) if activation == "relu" else activation)
        self.seq = nn.Sequential(*layers)

    def forward(self, x):
        return self.seq(x)
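BN_Conv2d defaults to bias=False. That is safe because BatchNorm subtracts the per-channel mean, which cancels any constant bias the convolution adds. The standalone check below, independent of the class above, shows this in training mode, where BN normalizes with batch statistics.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 3, 16, 16)

conv_bias = nn.Conv2d(3, 8, 3, padding=1, bias=True)
conv_nobias = nn.Conv2d(3, 8, 3, padding=1, bias=False)
with torch.no_grad():
    conv_nobias.weight.copy_(conv_bias.weight)  # same weights; only the bias differs

bn = nn.BatchNorm2d(8)  # a new module is in training mode and uses batch statistics
y_bias = bn(conv_bias(x))
y_nobias = bn(conv_nobias(x))
print(torch.allclose(y_bias, y_nobias, atol=1e-4))  # True
```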

2.2 Implementing the basic block

class BasicBlock(nn.Module):
    """
    Basic building block for ResNet-18 and ResNet-34
    """
    message = "basic"

    def __init__(self, in_channels, out_channels, strides, is_se=False):
        super(BasicBlock, self).__init__()
        self.is_se = is_se
        self.conv1 = BN_Conv2d(in_channels, out_channels, 3, stride=strides, padding=1, bias=False)  # same padding
        self.conv2 = BN_Conv2d(out_channels, out_channels, 3, stride=1, padding=1, bias=False, activation=None)
        if self.is_se:
            # SE (Squeeze-and-Excitation) is not defined in this post; keep is_se=False unless you supply one
            self.se = SE(out_channels, 16)

        # Fit the input to the residual output: identity when shapes match, 1x1 projection otherwise
        self.short_cut = nn.Sequential()
        if strides != 1 or in_channels != out_channels:
            self.short_cut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=strides, padding=0, bias=False),
                nn.BatchNorm2d(out_channels)
            )

    def forward(self, x):
        out = self.conv1(x)
        out = self.conv2(out)
        if self.is_se:
            coefficient = self.se(out)
            out = out * coefficient
        out = out + self.short_cut(x)
        return F.relu(out)

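As discussed in 1.2, the uncertain part is where the shortcut's BN goes. Here BN sits inside the residual branch (conv2 ends with BN and no activation) and inside the projection shortcut, and the sum is passed straight to the final ReLU.

Note also the first block of conv3_x, conv4_x and conv5_x. It uses stride=2 and doubles the number of channels, so the input has to go through a 1x1 projection before it can be added to the residual output.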
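The shapes at the start of conv3_x in ResNet-18/34 show why a stride-2 block needs the projection. The input there has 64 channels at 56x56, and the residual branch outputs 128 channels at 28x28. This is a standalone sketch with random tensors.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 56, 56)          # input to the first block of conv3_x
residual = torch.randn(1, 128, 28, 28)  # stand-in for the residual branch output (stride 2, 128 channels)

try:
    _ = residual + x  # identity shortcut: shapes do not match
except RuntimeError as err:
    print("identity shortcut fails:", type(err).__name__)

projection = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=1, stride=2, bias=False),  # 1x1 conv halves H, W and changes channels
    nn.BatchNorm2d(128),
)
out = residual + projection(x)
print(tuple(out.shape))  # (1, 128, 28, 28)
```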

2.3 Implementing the bottleneck block

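class BottleNeck(nn.Module):
    """
    Bottleneck block for ResNet-50, ResNet-101 and ResNet-152
    """
    message = "bottleneck"

    def __init__(self, in_channels, out_channels, strides, is_se=False):
        super(BottleNeck, self).__init__()
        self.is_se = is_se
        self.conv1 = BN_Conv2d(in_channels, out_channels, 1, stride=1, padding=0, bias=False)
        self.conv2 = BN_Conv2d(out_channels, out_channels, 3, stride=strides, padding=1, bias=False)  # same padding
        self.conv3 = BN_Conv2d(out_channels, out_channels * 4, 1, stride=1, padding=0, bias=False, activation=None)
        if self.is_se:
            # SE (Squeeze-and-Excitation) is not defined in this post; keep is_se=False unless you supply one
            self.se = SE(out_channels * 4, 16)

        # Fit the input to the residual output: identity when shapes match, 1x1 projection otherwise
        self.shortcut = nn.Sequential()
        if strides != 1 or in_channels != out_channels * 4:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * 4, 1, stride=strides, padding=0, bias=False),
                nn.BatchNorm2d(out_channels * 4)
            )

    def forward(self, x):
        out = self.conv1(x)
        out = self.conv2(out)
        out = self.conv3(out)
        if self.is_se:
            coefficient = self.se(out)
            out = out * coefficient
        out = out + self.shortcut(x)
        return F.relu(out)

The last convolution outputs four times as many channels. Whenever the input's shape differs from the residual output, the input therefore goes through a 1x1 projection so that the two can be added. This happens in the first block of every group, including conv2_x, where 64 channels become 256. The remaining blocks use an identity shortcut, as in the paper.

Here the stride-2 downsampling sits on the 3x3 convolution. torchvision uses this variant, whereas the original paper places the stride on the first 1x1 convolution.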
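Which blocks end up with a projection shortcut under the rule "project only when the stride or channel count changes"? The sketch below walks through the group configurations from section 2.5. The function `shortcut_plan` is hypothetical: it mirrors the bookkeeping in `_make_conv_x` but does not build any layers.

```python
def shortcut_plan(groups, expansion):
    """Return (block name, uses_projection) for every block of a ResNet."""
    widths = [64, 128, 256, 512]  # base channels of conv2_x .. conv5_x
    strides = [1, 2, 2, 2]        # stride of the first block in each group
    in_ch = 64                    # channels after conv1 + max pool
    plan = []
    for g, (width, n_blocks, stride) in enumerate(zip(widths, groups, strides), start=2):
        for i in range(n_blocks):
            s = stride if i == 0 else 1
            out_ch = width * expansion
            plan.append((f"conv{g}_{i + 1}", s != 1 or in_ch != out_ch))
            in_ch = out_ch
    return plan


resnet34 = shortcut_plan([3, 4, 6, 3], expansion=1)
resnet50 = shortcut_plan([3, 4, 6, 3], expansion=4)
print([name for name, proj in resnet34 if proj])  # ['conv3_1', 'conv4_1', 'conv5_1']
print([name for name, proj in resnet50 if proj])  # ['conv2_1', 'conv3_1', 'conv4_1', 'conv5_1']
```

In the bottleneck networks, conv2_1 needs a projection even though its stride is 1, because the channel count grows from 64 to 256. That is why the condition checks channels as well as stride.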

2.4 Implementing the ResNet class

class ResNet(nn.Module):
    """
    Generic ResNet (ResNet-18/34/50/101/152, depending on block and groups)
    """

    def __init__(self, block, groups, num_classes=1000):
        super(ResNet, self).__init__()
        self.channels = 64  # out channels from the first convolutional layer
        self.block = block

        self.conv1 = nn.Conv2d(3, self.channels, 7, stride=2, padding=3, bias=False)
        self.bn = nn.BatchNorm2d(self.channels)
        self.pool1 = nn.MaxPool2d(3, 2, 1)
        self.conv2_x = self._make_conv_x(channels=64, blocks=groups[0], strides=1, index=2)
        self.conv3_x = self._make_conv_x(channels=128, blocks=groups[1], strides=2, index=3)
        self.conv4_x = self._make_conv_x(channels=256, blocks=groups[2], strides=2, index=4)
        self.conv5_x = self._make_conv_x(channels=512, blocks=groups[3], strides=2, index=5)
        self.pool2 = nn.AvgPool2d(7)  # the final feature map is 7x7 for a 224x224 input
        patches = 512 if self.block.message == "basic" else 512 * 4
        self.fc = nn.Linear(patches, num_classes)  # for 224 * 224 input size

    def _make_conv_x(self, channels, blocks, strides, index):
        """
        Build one convolutional group.
        :param channels: output channels of the conv-group (before the x4 expansion of bottleneck blocks)
        :param blocks: number of blocks in the conv-group
        :param strides: stride of the first block in the group
        :param index: group index (2-5), used only to name the blocks
        :return: conv-group
        """
        list_strides = [strides] + [1] * (blocks - 1)  # only the first block of a group may downsample
        conv_x = nn.Sequential()
        for i in range(len(list_strides)):
            layer_name = "block_%d_%d" % (index, i)  # names passed to add_module must be unique
            conv_x.add_module(layer_name, self.block(self.channels, channels, list_strides[i]))
            self.channels = channels if self.block.message == "basic" else channels * 4
        return conv_x

    def forward(self, x):
        out = self.conv1(x)
        out = F.relu(self.bn(out))
        out = self.pool1(out)
        out = self.conv2_x(out)
        out = self.conv3_x(out)
        out = self.conv4_x(out)
        out = self.conv5_x(out)
        out = self.pool2(out)
        out = out.view(out.size(0), -1)
        return self.fc(out)  # raw logits, no softmax (see the note below)

The five ResNets share the same overall layout, so a single _make_conv_x method builds all the convolutional groups. It does need to know which kind of block it is stacking. Each block class therefore carries a class attribute, message ("basic" or "bottleneck"), which serves as the tag.

PyTorch's nn.CrossEntropyLoss already applies log_softmax internally, so forward returns raw logits. Suppose you added a softmax layer to match the paper's table and then trained with nn.CrossEntropyLoss. Softmax would be applied twice, which distorts both the loss and its gradients. When you need class probabilities at inference time, apply F.softmax(logits, dim=1) outside the model.
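The point about softmax can be checked directly. nn.CrossEntropyLoss on raw logits equals the negative log-likelihood of log_softmax, while feeding it outputs that have already been through softmax gives a different value. This is a standalone sketch with random logits.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 10)           # stand-in for the model output (batch of 8, 10 classes)
targets = torch.randint(0, 10, (8,))
criterion = nn.CrossEntropyLoss()

loss_logits = criterion(logits, targets)                         # intended usage
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)  # what CrossEntropyLoss computes
loss_double = criterion(F.softmax(logits, dim=1), targets)       # softmax applied twice

print(torch.allclose(loss_logits, loss_manual))  # True
print(torch.allclose(loss_logits, loss_double))  # False
```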

2.5 Defining the ResNet builder functions

def ResNet_18(num_classes=1000):
    return ResNet(block=BasicBlock, groups=[2, 2, 2, 2], num_classes=num_classes)


def ResNet_34(num_classes=1000):
    return ResNet(block=BasicBlock, groups=[3, 4, 6, 3], num_classes=num_classes)


def ResNet_50(num_classes=1000):
    return ResNet(block=BottleNeck, groups=[3, 4, 6, 3], num_classes=num_classes)


def ResNet_101(num_classes=1000):
    return ResNet(block=BottleNeck, groups=[3, 4, 23, 3], num_classes=num_classes)


def ResNet_152(num_classes=1000):
    return ResNet(block=BottleNeck, groups=[3, 8, 36, 3], num_classes=num_classes)

These configurations match the network structures given in the table in the paper.

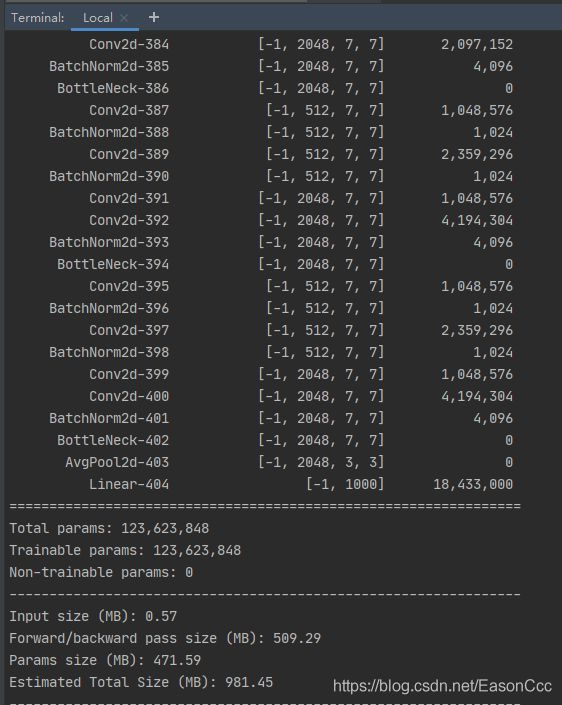
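The group configurations can also be checked against the network names. The sketch counts weighted layers in the usual way: conv1, the convolutions inside the blocks, and the final fully connected layer. Pooling, BN and the projection shortcuts are not counted. A basic block then contributes 2 layers and a bottleneck 3. The helper `depth` is hypothetical.

```python
def depth(groups, layers_per_block):
    """conv1 + (layers per block x number of blocks) + final fc layer."""
    return 1 + layers_per_block * sum(groups) + 1


configs = {
    "ResNet-18": ([2, 2, 2, 2], 2),
    "ResNet-34": ([3, 4, 6, 3], 2),
    "ResNet-50": ([3, 4, 6, 3], 3),
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}
for name, (groups, per_block) in configs.items():
    print(name, depth(groups, per_block))  # 18, 34, 50, 101, 152
```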

2.6 Testing the network

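def test():
    # net = ResNet_18()
    # net = ResNet_34()
    # net = ResNet_50()
    # net = ResNet_101()
    net = ResNet_152()
    # torchsummary defaults to device="cuda"; fall back to the CPU when no GPU is available
    device = "cuda" if torch.cuda.is_available() else "cpu"
    summary(net.to(device), (3, 224, 224), device=device)


if __name__ == "__main__":
    test()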

The output is as follows:

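The ResNet is now complete, and you can train it on your dataset. With the paper's configuration, images must be resized to 224x224, because the final nn.AvgPool2d(7) and the input size of the fc layer assume a 7x7 feature map. If your images differ greatly from that size, consider adjusting conv1 to adapt the network.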
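For a batch that is already a tensor, one way to bring it to 224x224 is torch.nn.functional.interpolate. This is only a sketch, and the 180x260 input size is made up for illustration. In a data pipeline you would more commonly resize in the dataset transforms, for example with torchvision.transforms.Resize((224, 224)).

```python
import torch
import torch.nn.functional as F

batch = torch.rand(4, 3, 180, 260)  # hypothetical batch of RGB images with a different size
resized = F.interpolate(batch, size=(224, 224), mode="bilinear", align_corners=False)
print(tuple(resized.shape))  # (4, 3, 224, 224)
```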