Kubernetes: Principles and Installation

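Contents

- Introduction to k8s
- 1. Installing a k8s cluster
- 1.1 How k8s works
- 1.2 Installing etcd (the k8s database) on the master node
- 1.3 Installing the k8s services on the master node (apiserver, controller-manager, scheduler)
- 1.4 Installing Kubernetes on the node machines
- 1.5 Changing the image download address
- 1.5 Configuring the flannel network on all nodes (cross-host LAN)
- Master node:
- Node machines:
- Check: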

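- 2. Configuring the master as an image registry
- All nodes
- Master node
- 2: What is k8s, and what can it do?
- 2.1 Core features of k8s
- 2.2 History of k8s
- 2.4 k8s use cases
- 3. Common k8s resources
- 3.1 Creating a pod resource
- First, push the nginx image to the registry
- Question 1: Why does creating a pod start at least two containers?
- Question 2: What is a pod resource?
- Master node (running two workloads: nginx + alpine)
- 3.2 ReplicationController resource
- Creating an rc that starts 5 pod replicas (resources of the same type cannot share a name; resources of different types can)
- Pod and image states
- 4. Rolling upgrade of an rc
- 3.3 Service resource
- Summary:
- 3.4 Deployment resource
- Creating a deployment
- Upgrading and rolling back a deployment
- 3.5 tomcat + mysql exercise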
- 四 k8s的附加组件

-

- 4.1 dns服务

-

- 4.2 namespace命令空间

- 4.3 健康检查和可用性检查

-

- 4.3.1 探针的种类

- 4.3.2 探针的检测方法

- 4.3.3 liveness探针的exec使用(用命令测试容器是否健康)

- 4.3.4 liveness探针的httpGet使用(用浏览器返回值判断,常用)

- 4.3.5 liveness探针的tcpSocket使用(测试端口是否可被连接)

- 4.3.6 readiness探针的httpGet使用(可用后加入负载均衡)

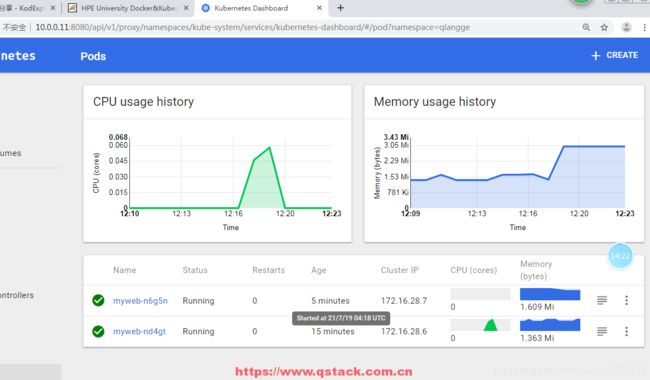

- 4.4 dashboard服务(web界面操纵k8s资源 监控内存cpu)

- 4.5 通过apiservicer反向代理访问service

- 五: k8s弹性伸缩

-

- 5.1 安装heapster监控

- 5.2 弹性伸缩

- 六:持久化存储

-

- 6.1 k8s存储类型 emptyDir类型:(日志存储 跟随pod的创建而创建 删除而删除)

- 6.2 HostPath:(将数据保存到本地 缺点:node节点数据不共享,只能挂在到nfs)

- 6.3 k8s存储类型 nfs类型:

- 6.3 nfs: #nfs持久化类型

- 6.4 pv和pvc:

-

- 6.4.1:创建pv和pvc

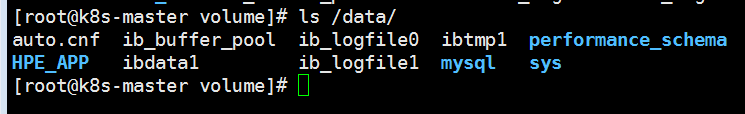

- 6.4.2:创建mysql-rc,pod模板里使用volume

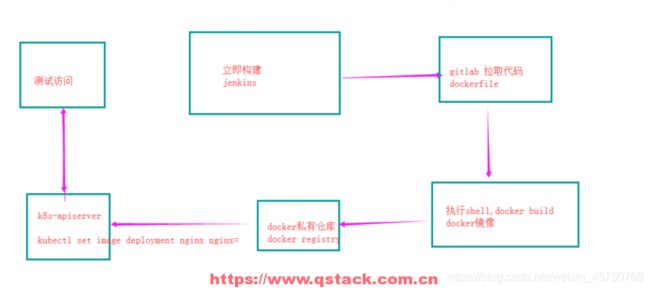

- 7:使用jenkins实现k8s持续更新

-

- 7.1: 安装gitlab并上传代码(node节点也可以是其他服务器)

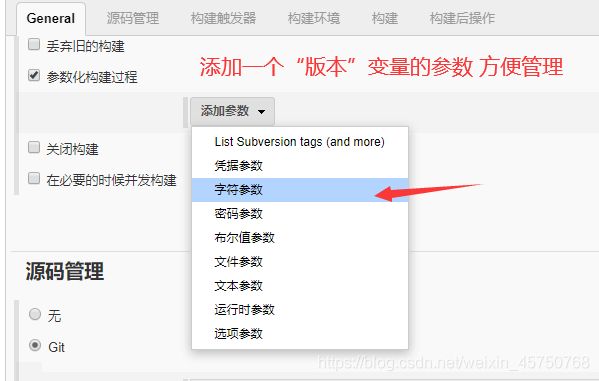

- 7.2 安装jenkins,并自动构建docker镜像

-

-

-

- 1:安装jenkins

- 2:访问jenkins

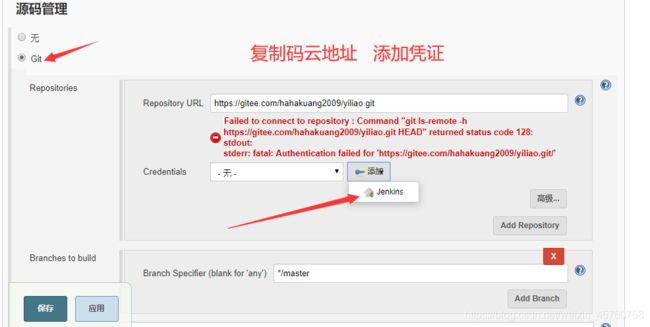

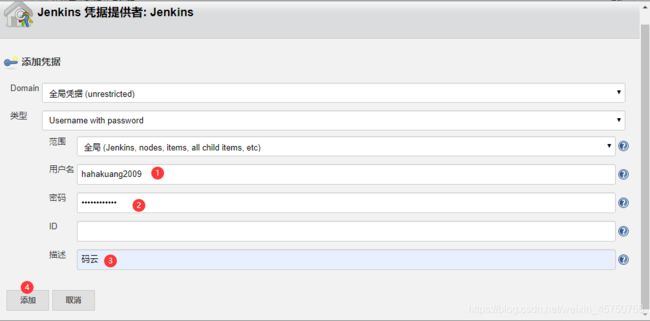

- 3:配置jenkins拉取gitlab代码凭据

- 点击jenkins立即构建,自动构建docker镜像并上传到私有仓库

-

-

- 7.3 代码内容更新 重新提交代码并打标签

- 7.4 回滚旧版本

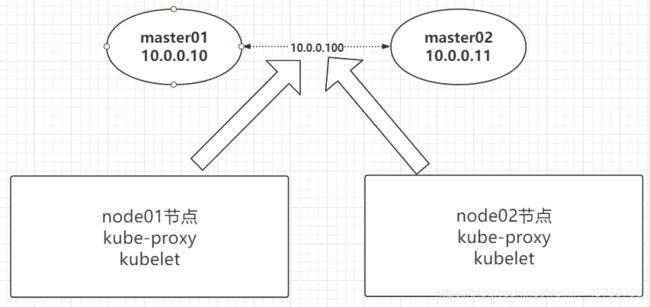

- 八、 k8s高可用 mastert节点 keepalived

-

-

- 8.1: 安装配置etcd高可用集群

- 8.2 安装配置master01的api-server,controller-manager,scheduler(127.0.0.1:8080)

- 8.3 安装配置master02的api-server,controller-manager,scheduler(127.0.0.1:8080)

- 8.4 为master01和master02安装配置Keepalived

- 8.5: 所有node节点kubelet,kube-proxy指向api-server的vip

-

- 九、二进制安装k8s 配置https传输

-

- 9.1 k8s的架构

- 9.2 k8s的安装

-

- 9.2.1 颁发证书:

-

- 在node3节点上(证书颁发平台)

- 9.2.2 部署etcd集群 etcd--etcd

-

- 安装etcd服务

- master节点

- node1和node2需修改

- 9.2.3 master节点的安装

-

- master节点

- 安装controller-manager服务

- 安装scheduler服务

- 验证master节点

- 9.2.4 node节点的安装(切换节点)

-

- 设置集群参数

- master节点上

- node节点

- 安装kube-proxy服务

- 在node1节点上配置kube-proxy

- 9.2.5 配置flannel网络

-

- 在master节点上

- 十、 静态pod创建 secrets资源

-

- 10.1 静态pod 动态pod 介绍

- 10.2 静态pod创建 node节点

- 10.3 secrets资源 (给密码第二次加密)

-

- 10.3.1 介绍

- 10.3.2 方式一

-

- 10.4.1方式二

- 10.5 k8s常用服务 部署coredns服务

-

- 10.5.1 部署dns服务

- 10.5.2 部署dashboard服务(监控)

- 10.6 k8s的映射

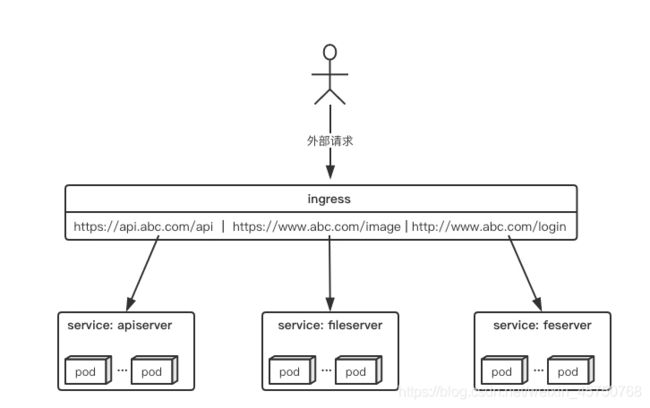

- ingress七层负载均衡(根据域名创建负载均衡)

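Introduction to k8s

k8s is a container orchestration system.

k8s is a management platform for Docker.

k8s features: self-healing, automatic service discovery, elastic scaling, rolling upgrades, and management of configuration files and credentials.

k8s history: the project started in 2014 and was open-sourced and publicly released in July 2015. It ships about four releases a year.

k8s builds on 15 years of operations experience at Google: Borg (written in C and Python) came first, Kubernetes (written in Go) followed.

In k8s, everything is a resource:

pod: static pods and dynamic pods

rc: a transitional resource

deployment: dp

DaemonSet

job: one-off tasks

CronJob

StatefulSet

svc: layer-4 load balancing

ingress: layer-7 load balancing; can route by URL

Persistent storage:

emptyDir

hostPath

nfs

pv

pvc

dynamic provisioning

…

Configuration files:

Credentials:

RBAC: roles, users, bindings

k8s add-ons:

dns

dashboard

heapster

prometheus

EFK

k8s with an external database: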

1. Installing a k8s cluster

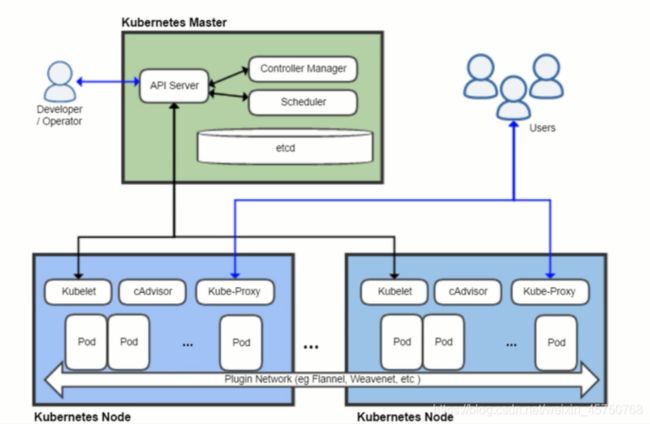

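1.1 How k8s works

- master: the control node

apiserver: the core service. Every command goes through it: it parses and executes all kubectl commands, and every other component connects to the apiserver.

controller-manager: the supervisor. It makes sure the specified number of containers is running.

scheduler: the scheduler. It picks a suitable node for each pod.

etcd: the database, a NoSQL key-value store (create, get, delete).

- node: runs and manages the containers

kubelet: calls docker. Each kubelet's name must be unique.

cAdvisor: the monitoring component.

kube-proxy: load balancing and automatic service discovery for pods.

pod: containers are wrapped in pods.

Plugin Network: container-to-container communication across hosts.

Creating a pod

Add-on components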

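| Component | Description |
|---|---|
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides an external entry point for services |
| Heapster | Provides resource monitoring |
| Dashboard | Provides a GUI |
| Federation | Provides clusters that span availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage, and querying |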

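1.2 Installing etcd (the k8s database) on the master node

yum install etcd -y

vim /etc/etcd/etcd.conf

Edit the configuration file:

Line 3: ETCD_DATA_DIR="/var/lib/etcd/default.etcd" (etcd data directory)

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379" (address etcd listens on for clients; several may be listed)

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379" (client URL this etcd member advertises to the cluster)

systemctl start etcd.service

systemctl enable etcd.service

etcd is a key-value database, so you can set a key and read it back:

etcdctl set testdir/testkey0 0

etcdctl get testdir/testkey0

Check that the cluster is healthy:

etcdctl cluster-health

etcd natively supports clustering.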

1.3 master节点安装k8s服务(apiserver,controller-manager,scheduler)

- 安装k8s

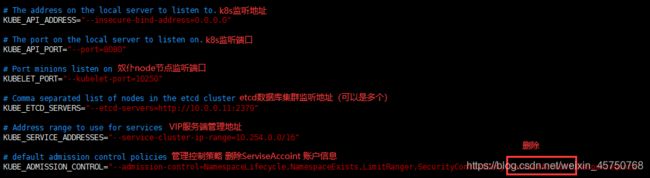

yum install kubernetes-master.x86_64 -y - 修改API-server核心服务配置文件

vim /etc/kubernetes/apiserver

8行: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

11行:KUBE_API_PORT="--port=8080"

14行: KUBELET_PORT="--kubelet-port=10250"

17行:KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379" #可以写多行

23行:KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

- scheduler调度器和controller manager的配置文件

vim /etc/kubernetes/config帮助controller-manager, scheduler找到apiserver

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://127.0.0.1:8080" #指向API-server地址 单个(上锁)

systemctl restart kube-apiserver.service

systemctl restart kube-scheduler.service

systemctl restart kube-controller-manager.service

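A pitfall I ran into: when configuring master high availability, I put several master addresses into **KUBE_MASTER=** in /etc/kubernetes/config, and /var/log/messages showed that the multiple addresses could not be parsed. After I changed master01 to its own local address, master02 logged `lock is held by master01 and has not yet expired`. I took this to mean that etcd had locked the data for master01 and that something was broken, but it is normal: only one master holds the lock and writes at a time, so the other one stays on standby. When the master holding the lock goes down, the other master takes over automatically.

- Start the services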

systemctl restart kube-apiserver.service

systemctl restart kube-scheduler.service

systemctl restart kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl enable kube-controller-manager.service

systemctl enable kube-apiserver.service

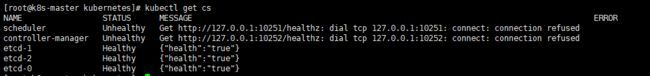

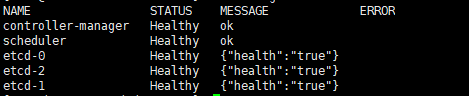

- Check that the services are running correctly

#kubectl get componentstatus

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

controller-manager Healthy ok

1.4 node节点安装kubernetes

- 安装node节点包

[root@k8s-node-1 ~]#yum install kubernetes-node.x86_64 -y

yum install conntrack-tools.x86_64 - 编辑节点配置文件 kubelet proxy指向api-server

vi /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.11:8080" # #指向API-server地址 单个

22行:KUBE_MASTER="--master=http://10.0.0.11:8080" 指向API-server地址

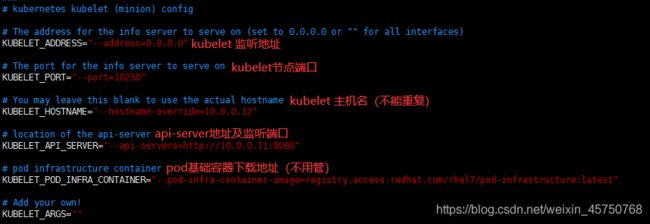

vim /etc/kubernetes/kubelet kubelet的配置文件

5行:KUBELET_ADDRESS="--address=0.0.0.0" 监听地址

8行:KUBELET_PORT="--port=10250" 监听端口,需要和服务端端口一致

11行:KUBELET_HOSTNAME="--hostname-override=10.0.0.12" 每个节点的名字,必须是唯一的

14行:KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080" kubelet找API-server的地址, apii-server地址

-

启动服务

systemctl restart kube-proxy.service

systemctl restart kubelet.service

systemctl enable kubelet.service

systemctl enable kube-proxy.service -

在master节点检查

[root@k8s-master ~]#kubectl get nodes

NAME STATUS AGE

10.0.0.12 Ready 6m

10.0.0.13 Ready 3s

1.5 Changing the image download address

Problem: by default k8s looks for the pod infrastructure image on Red Hat's registry.

Fix: push the pod image to the private registry and point every node at it.

- Push the pod-infrastructure:latest image to the private registry

[root@k8s-master ~]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.11:5000/pod-infrastructure:latest

[root@k8s-master ~]# docker push 10.0.0.11:5000/pod-infrastructure:latest

- On the node machines:

vim /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/pod-infrastructure:latest"

systemctl restart kubelet.service

- Check from the master

[root@k8s-master ~]# kubectl get pod -o wide (shows details)

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 44m 172.18.22.2 10.0.0.13

1.5 Configuring the flannel network on all nodes (cross-host LAN)

Like an overlay network, flannel needs a backing database; flannel uses etcd.

- Install flannel

yum install flannel -y

- Edit the configuration file

vim /etc/sysconfig/flanneld

# sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.11:2379" (several endpoints may be listed)

- Create the network configuration key in etcd

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'

# etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16","Backend": {"Type": "vxlan"} }'

- Start the service

systemctl restart flanneld

systemctl enable flanneld

Master node:

- On the master, create the key that defines the flannel network range

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'

# etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16","Backend": {"Type": "vxlan"} }'

- Create the registry (on the master or on another server)

systemctl restart docker

systemctl restart flanneld.service

systemctl enable flanneld.service

# the file /usr/lib/systemd/system/docker.service.d/flannel.conf is generated automatically

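Node machines:

- Edit the docker unit file so that every docker start switches the iptables FORWARD policy from DROP to ACCEPT, which lets containers reach each other across hosts

vim /usr/lib/systemd/system/docker.service

# add one line under the [Service] section

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

- Restart the services

systemctl daemon-reload

systemctl restart docker

Check:

Run ifconfig and check the flannel.1 interface: its address should be in the same range as the container IPs on the other servers.

If the docker version is too new and the master and the nodes end up on different subnets, edit /usr/lib/systemd/system/docker.service on the master or the node and add **$DOCKER_NETWORK_OPTIONS** to ExecStart: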

[Service]

EnvironmentFile=-/run/flannel/docker

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

# check the address range with: cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=172.18.15.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=true"

DOCKER_OPT_MTU="--mtu=1472"

DOCKER_NETWORK_OPTIONS=" --bip=172.18.15.1/24 --ip-masq=true --mtu=1472"

ifconfig now shows an additional flannel0 interface.
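The check described above (the docker bridge subnet must fall inside the flannel network stored in etcd) can be sketched in a few lines of Python. This is only an illustration: the helper names `parse_flannel_env` and `bip_in_network` are made up here, and the sample text mirrors the `/run/flannel/docker` content shown above.

```python
import ipaddress


def parse_flannel_env(text):
    """Parse KEY="value" lines such as those in /run/flannel/docker."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key] = value.strip().strip('"')
    return env


def bip_in_network(env, network_cidr):
    """Return True if the docker bridge subnet lies inside the flannel network."""
    # DOCKER_OPT_BIP looks like --bip=172.18.15.1/24
    bip = env["DOCKER_OPT_BIP"].split("=", 1)[1]
    bridge = ipaddress.ip_interface(bip).network
    return bridge.subnet_of(ipaddress.ip_network(network_cidr))


sample = 'DOCKER_OPT_BIP="--bip=172.18.15.1/24"\nDOCKER_OPT_MTU="--mtu=1472"\n'
print(bip_in_network(parse_flannel_env(sample), "172.18.0.0/16"))
```

If the result is false on some host, that host's docker daemon is probably not picking up the flannel options, which is the situation the `$DOCKER_NETWORK_OPTIONS` fix addresses.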

2. Configuring the master as an image registry

All nodes

vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"insecure-registries": ["10.0.0.11:5000"]

}

systemctl restart docker

Master node

Upload the registry image archive and load it into docker

docker load -i registry.tar.gz

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

Test that the registry works

① Tag an image

docker tag alpine:latest 10.0.0.11:5000/apline:latest

② Push the image to the registry

docker push 10.0.0.11:5000/apline:latest

The push refers to a repository [10.0.0.11:5000/apline]

1bfeebd65323: Pushed

latest: digest: sha256:57334c50959f26ce1ee025d08f136c2292c128f84e7b229d1b0da5dac89e9866 size: 528

③ View the registry contents in a browser

http://10.0.0.11:5000/v2/_catalog

ls /opt/myregistry/docker/registry/v2/repositories/

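2: What is k8s, and what can it do?

k8s is a management tool for Docker clusters.

k8s is a container orchestration tool.

2.1 Core features of k8s

Self-healing: restarts failed containers; replaces and reschedules a node's containers when the node becomes unavailable; kills containers that do not respond to user-defined health checks; and does not advertise a container to clients until it is ready to serve.

Elastic scaling: monitors the CPU load of the containers. For example, if the average rises above 80%, the number of containers is increased; if it falls below 10%, the number is reduced.

Automatic service discovery and load balancing: you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.

Rolling upgrades and one-click rollback: Kubernetes rolls out changes to an application or its configuration gradually while monitoring application health, so that it never kills all instances at the same time. If something goes wrong, Kubernetes rolls the change back for you, taking advantage of a growing ecosystem of deployment solutions.

Secret and configuration management: for example, the database username and password used inside a web container (such as the password of the test database).

2.2 History of k8s

2014: the project is started as a Docker container orchestration tool.

July 2015: Kubernetes 1.0 is released and the project joins the CNCF for incubation.

2016: Kubernetes beats its two competitors, Docker Swarm and Mesos Marathon; version 1.2.

2017: versions 1.5, 1.9.

2018: k8s graduates from the CNCF; versions 1.10, 1.11, 1.12.

2019: versions 1.13, 1.14, 1.15, 1.16, 1.17.

CNCF: Cloud Native Computing Foundation, which acts as an incubator.

Kubernetes (k8s): Greek for helmsman or pilot, here in the field of container orchestration.

It draws on Google's 15 years of experience running containers on the Borg container management platform; Kubernetes is Borg rebuilt in Go.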

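2.3 Ways to install k8s

For learning:

yum install (version 1.5): the easiest to get working and the best fit for learning.

Compiling from source: the hardest, but can install the latest version.

For production:

Binary install: many tedious steps, but can install the latest version; recommended for production. It can be automated with shell, ansible, or saltstack. Installing k8s with ansible:

https://github.com/easzlab/kubeasz

kubeadm: the easiest install, needs network access, can install the latest version; recommended for production.

minikube: suited to developers who want to try k8s; needs network access.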

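2.4 k8s use cases

k8s is best suited to running microservice projects.

Microservices: one function per site, one function per domain name.

Microservices split the business up: one website becomes many small websites.

Benefits of microservices: they support more user traffic, make the business more stable, and make code updates and releases faster.

Impact on operations: the workload grows. With more pieces in the architecture, you need ansible to automate code deployment and ELK for log analysis. Deployment gets more complex and maintenance grows considerably: one set of microservices each for development, testing, pre-production, and production multiplies the work. That is why docker is needed to deploy microservices quickly, and once there are many docker containers, k8s solves the problem of managing them.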

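3. Common k8s resources

3.1 Creating a pod resource

A pod is the smallest resource unit.

Any k8s resource can be defined by a yml manifest file.

In a yml file, indentation is what matters most.

A k8s yaml manifest has four main top-level keys, each written without indentation:

| Key | Meaning |
|---|---|
| apiVersion: v1 | API version |
| kind: Pod | resource type |
| metadata: | attributes |
| spec: | detailed specification |

First, push the nginx image to the registry

- On the master, create a *.yml file (the pod starts one nginx business container)

# mkdir /k8s_yaml/pod

# vim k8s_pod.yml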

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80

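- Create the pod resource on the master node

# kubectl create -f ./k8s_pod.yml

- Check that it was created

# kubectl get pod

Inspect a specific pod (every pod name must be unique):

# kubectl describe pod nginx

If creation fails: by default the pod infrastructure image is looked up on Red Hat's registry.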

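Question 1: Why does creating a pod start at least two containers?

A pod is the smallest resource unit.

k8s makes the pod infrastructure container and the nginx container share one IP address.

Binding the infrastructure container and the nginx container together is what enables the advanced k8s features:

pod infrastructure container: provides the advanced k8s features

nginx: provides the business function

Question 2: What is a pod resource?

A pod consists of at least two containers: the pod infrastructure container plus the business containers (typically no more than 1+4). Ports inside one pod must not conflict.

Pod example 2:

A pod was created and the scheduler placed it on node2, which started two containers: one from the 10.0.0.11:5000/nginx:1.13 image and one from the 10.0.0.11:5000/pod-infrastructure:latest image.

k8s natively implements many advanced features.

The nginx container is an ordinary container and cannot provide those features by itself; it would otherwise need a k8s probing service installed inside it.

The pod infrastructure container provides the advanced features and nginx provides the business function; binding them together is all it takes.

Whenever a pod is created, one infrastructure container is started alongside the pod's business containers.

Which container does 172.18.78.2 actually belong to?

It turns out that nginx shares the network of the pod-infrastructure container, and therefore its IP address.

Pod resource: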

Master node (running two workloads: nginx + alpine)

vim k8s_pod2.yml

apiVersion: v1

kind: Pod

metadata:

name: test

labels:

app: web

spec:

containers:

- name: nginx

image: 10.0.0.11:5000/nginx:1.13

ports:

- containerPort: 80

- name: alpine

image: 10.0.0.11:5000/alpine:latest

command: ["sleep","1000"]

A pod is the smallest resource unit in k8s.

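3.2 ReplicationController resource

rc: keeps the specified number of pods alive at all times. An rc is tied to its pods through a label selector.

In k8s everything is a resource.

An rc keeps pods persistent: if one of its pods is deleted, a replacement is created automatically. When a node fails, k8s keeps probing it and trying to recover it; once that fails, the pods on the dead node are evicted and rescheduled onto another node.

If extra pods carry the same label as the rc's pods, the youngest pod is deleted.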

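| Common operations on k8s resources | |
|---|---|
| kubectl create -f xxx.yaml | create a resource |
| kubectl get pod / rc / node | list resources of one type |
| kubectl describe pod (resource type) nginx (resource name) | show the details of one specific resource |
| kubectl delete pod (resource type) nginx (resource name) | delete one specific resource |
| kubectl delete -f xxx.yaml | delete the resources defined in a yaml file |
| kubectl edit pod/rc (resource type) nginx (resource name) | edit the yaml of a resource; this changes the data in etcd directly |
| kubectl scale rc nginx --replicas=xxx | set the number of replicas of an rc |
| kubectl explain | show help |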

Creating an rc that starts 5 pod replicas. Resources of the same type cannot share a name, but resources of different types can.

- Write the yaml file

vim k8s_rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5              # five replicas
  selector:                # label selector; must match the pod labels in the template
    app: myweb
  template:                # pod template; no pod name is given because pod names must be unique, so they are generated
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80

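- Create it

kubectl create -f k8s_rc.yml

Delete a pod: kubectl delete pod <pod name>

Show pod labels: kubectl get pod -o wide --show-labels

Each rc labels the pods it creates and only manages pods carrying its own label, so different rcs do not conflict.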
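To make the label-selector relationship concrete, here is a small Python model of how a controller could decide how many pods to add or remove. It is a simplified sketch and not the actual controller code; `matches` and `reconcile` are names invented for this example.

```python
def matches(selector, labels):
    """True when every key/value pair of the selector is present in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(replicas, selector, pods):
    """Return how many pods to add (positive) or remove (negative).

    pods maps a pod name to its label dictionary.
    """
    owned = [name for name, labels in pods.items() if matches(selector, labels)]
    return replicas - len(owned)


pods = {
    "nginx-a": {"app": "myweb"},
    "nginx-b": {"app": "myweb"},
    "other": {"app": "web"},
}
print(reconcile(5, {"app": "myweb"}, pods))
```

Pods whose labels do not match the selector are ignored entirely, which is why two rcs with different labels never interfere with each other.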

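Pod and image states

| Pod state | Meaning |
|---|---|
| Terminating | being terminated and removed |
| Running | running |
| Pending | no suitable node, or the scheduler service is down |
| CrashLoopBackOff | crashing; appears when the container restarts repeatedly |
| Unknown | the node hosting the pod is down |
| InvalidImageName | the image name cannot be parsed; appears when the name contains two colons or the address syntax is wrong |
| ErrImagePull | generic image pull error, for example the docker registry cannot be reached |
| ImageInspectError | the image cannot be verified |
| ErrImageNeverPull | the pull policy forbids pulling the image |
| ImagePullBackOff | retrying the pull |
| RegistryUnavailable | cannot connect to the image registry |
| CreateContainerConfigError | cannot create the container configuration used by kubelet |
| CreateContainerError | container creation failed |
| m.internalLifecycle.PreStartContainer | error while running a hook |
| RunContainerError | container start failed |
| PostStartHookError | error while running a hook |
| ContainersNotInitialized | containers not yet initialized |
| ContainersNotReady | containers not yet ready |
| ContainerCreating | container being created |
| PodInitializing | pod initializing |
| DockerDaemonNotReady | docker has not fully started |
| NetworkPluginNotReady | the network plugin has not fully started |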

4. Rolling upgrade of an rc

Upgrading nginx 1.13 to nginx 1.15

- Push the 1.15 image to the private registry

- Run the rolling upgrade

kubectl rolling-update nginx -f k8s_rc2.yml --update-period=5s

# roll back: kubectl rolling-update nginx2 -f k8s_rc1.yml --update-period=1s

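3.3 Service resource

k8s has three kinds of IP address:

node ip: the IP address of a node

pod ip: the IP address of a pod's containers

clusterIP: the VIP

service: provides load balancing and automatic service discovery, and helps pods expose ports through port mapping.

The load balancing of a service is implemented with iptables.

Creating a service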

apiVersion: v1
kind: Service              # short name: svc
metadata:
  name: myweb              # name of the service
spec:
  type: NodePort           # default is ClusterIP
  ports:
    - port: 80             # port on the clusterIP
      nodePort: 30000      # port on the node
      targetPort: 80       # port on the pod
  selector:
    app: myweb2            # label of the pods to load-balance and map ports for

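kubectl get service (check that the service was created and see its status)

On a node, run netstat -lntup to check that the port is open.

Adjust the number of rc replicas:

# kubectl scale rc nginx --replicas=2

Enter a pod's container directly from the master, without going through the node:

# kubectl exec -it pod_name /bin/bash

Change the nodePort range (the range of ports that can be exposed on the nodes):

vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000" # allowed nodePort range

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16" # service clusterIP range

Restart for the change to take effect:

systemctl restart kube-apiserver.service

Create a service non-interactively from the command line (drawback: the host port cannot be chosen):

kubectl expose rc nginx --type=NodePort --port=80 --target-port=80

Here --port is the VIP port and --target-port is the container port.

By default a service uses iptables for load balancing; starting with k8s 1.8, newer releases recommend lvs in ipvs mode (layer-4 load balancing at the transport layer: tcp, udp).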
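As a quick sanity check before writing a `nodePort` into a manifest, the range flag above can be parsed and compared in Python. This is a minimal sketch; the helper names are invented here, and treating both ends of the range as inclusive is an assumption.

```python
def parse_port_range(flag):
    """Extract (low, high) from a flag such as --service-node-port-range=3000-50000."""
    value = flag.split("=", 1)[1]
    low, high = value.split("-", 1)
    return int(low), int(high)


def node_port_allowed(node_port, flag):
    """Check a nodePort against the configured range (assumed inclusive)."""
    low, high = parse_port_range(flag)
    return low <= node_port <= high


flag = "--service-node-port-range=3000-50000"
print(node_port_allowed(30000, flag))
```

With the range configured above, the `nodePort: 30000` used in the service manifest is inside the range, while a port such as 80 is not.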

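Summary:

A pod's name must be unique; labels may be shared (pods with the same label belong to the same rc group).

spec: describes what actually gets run.

A pod is the smallest resource unit in k8s.

rc: keeps the specified number of pods alive at all times; an rc is tied to its pods through a label selector.

All other resources exist to manage pods.

Every pod is created from the template in its rc.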

3.4 Deployment resource

A rolling upgrade of an rc interrupts access to the service, so k8s introduced the deployment resource.

Creating a deployment

apiVersion: extensions/v1beta1   # extensions v1beta1 API group
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3                    # number of replicas
  strategy:                      # rolling upgrade strategy
    rollingUpdate:
      maxSurge: 1                # at most one extra pod above the desired 3 (3+1)
      maxUnavailable: 1          # at most one pod unavailable (3-1)
    type: RollingUpdate
  minReadySeconds: 30            # a new pod must stay ready for 30s before it counts as available
  template:                      # pod template
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80
          resources:             # CPU resource settings for the container
            limits:              # upper bound
              cpu: 100m
            requests:            # guaranteed minimum
              cpu: 100m

Create it: kubectl create -f deployment.yml

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

Upgrade directly by editing the live configuration: kubectl edit deployment nginx
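The effect of `maxSurge` and `maxUnavailable` in the manifest above can be worked out with a short calculation. The Python sketch below is illustrative only (the function names are made up); it assumes the usual rounding for percentage values, where the surge is rounded up and the unavailable count is rounded down.

```python
import math


def resolve(value, replicas, round_up):
    """Resolve an absolute number or a percentage string against the replica count."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rollout_bounds(replicas, max_surge, max_unavailable):
    """Return (maximum total pods, minimum available pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


print(rollout_bounds(3, 1, 1))
```

For the deployment above (3 replicas, `maxSurge: 1`, `maxUnavailable: 1`) this gives at most 4 pods in total and at least 2 available pods at any point in the upgrade, matching the 3+1 and 3-1 comments in the manifest.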

Upgrading and rolling back a deployment

Create a deployment from the command line:

kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

Upgrade the version from the command line:

kubectl set image deployment nginx nginx=10.0.0.11:5000/nginx:1.15

List all revisions of the deployment:

kubectl rollout history deployment nginx

Roll the deployment back to the previous revision:

kubectl rollout undo deployment nginx

Roll the deployment back to a specific revision:

kubectl rollout undo deployment nginx --to-revision=2

3.5 tomcat + mysql exercise

4. k8s add-ons

The DNS service in a k8s cluster resolves a service (svc) name to its VIP address.

4.1 DNS service

Pinning a service to a node that already has its image lets it start within seconds.

kubectl get -n kube-system pod

Installing the DNS service

- Download the DNS docker image archive (on node2, 10.0.0.13)

wget http://192.168.37.200/191127/docker_k8s_dns.tar.gz

- Load the docker_k8s_dns.tar.gz image archive (on node2, 10.0.0.13)

- Create the DNS service

vim skydns.yaml

spec:

nodeName: 10.0.0.13

containers:

kubectl create -f skydns.yaml

kubectl create -f skydns-svc.yaml

- Check

kubectl get all --namespace=kube-system

- Edit the kubelet configuration file on every node

vim /etc/kubernetes/kubelet

The cluster DNS address must be the fixed VIP set in the DNS service configuration file:

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

systemctl restart kubelet

- Edit tomcat-rc.yml

env:

- name: MYSQL_SERVICE_HOST

value: 'mysql' # previously the VIP

kubectl delete -f .

kubectl create -f .

- Verify

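4.2 Namespaces

Namespaces provide resource isolation.

Add the namespace under metadata: in the yaml file:

metadata:

name: mysql

namespace: tomcat # any name

4.3 Health checks and readiness checks

4.3.1 Types of probes

livenessProbe: health check. Periodically checks whether the service is alive; if the check fails, the container is restarted (an unhealthy container is killed and a new one is started).

readinessProbe: readiness check. Periodically checks whether the service can take traffic; if not, the pod is removed from the service's endpoints.

4.3.2 Probe detection methods

- exec: runs a command; exit code 0 means healthy, non-zero means unhealthy

- httpGet: checks the status code of an HTTP request; 2xx and 3xx are healthy, 4xx and 5xx are failures

- tcpSocket: tests whether a port accepts connections

4.3.3 Liveness probe with exec (run a command to test container health)

vi nginx_pod_exec.yaml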

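apiVersion: v1
kind: Pod
metadata:
  name: exec
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
      args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
      livenessProbe:
        exec:
          command:
            - cat
            - /tmp/healthy
        initialDelaySeconds: 5   # delay before the first check; should exceed the service start-up time
        periodSeconds: 5         # how often to check, in seconds; default 10, minimum 1
        timeoutSeconds: 5        # check timeout; default 1 second, minimum 1
        successThreshold: 1      # one success counts as healthy; default 1, must be 1 for liveness, minimum 1
        failureThreshold: 1      # one failure counts as failed; default 3, minimum 1
        # Once a pod has started, Kubernetes retries a failing check failureThreshold times before giving up,
        # so a failure is acted on after roughly failureThreshold x periodSeconds.
        # Giving up on a liveness check restarts the container; giving up on a readiness check marks the pod as not ready.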
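How the probe parameters interact is easier to see with numbers. The following Python sketch uses an idealized model in which probes fire exactly at `initialDelaySeconds`, then every `periodSeconds`, and succeed only while the health file still exists; real kubelet timing is less exact, and `restart_time` is a name invented for this illustration.

```python
def restart_time(initial_delay, period, failure_threshold, healthy_until):
    """Time in seconds of the probe that triggers a restart.

    Probes fire at initial_delay, initial_delay + period, ... and succeed
    while t < healthy_until. The restart is triggered by the
    failure_threshold-th consecutive failure.
    """
    t = initial_delay
    failures = 0
    while True:
        if t < healthy_until:
            failures = 0
        else:
            failures += 1
            if failures >= failure_threshold:
                return t
        t += period


# Values from the manifest above: /tmp/healthy is removed after 30 seconds.
print(restart_time(5, 5, 1, 30))
print(restart_time(5, 5, 3, 30))
```

With `failureThreshold: 1` the container is restarted at the first failed check after the file disappears; with the default of 3 the restart comes two check intervals later.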

4.3.4 Liveness probe with httpGet (judge by the HTTP status code; the common choice)

vi nginx_pod_httpGet.yaml

apiVersion: v1

kind: Pod

metadata:

name: httpget

spec:

containers:

- name: nginx

image: 10.0.0.11:5000/nginx:1.13

ports:

- containerPort: 80

livenessProbe:

httpGet:

path: /index.html

port: 80

initialDelaySeconds: 3

periodSeconds: 3

4.3.5 Liveness probe with tcpSocket (test whether a port accepts connections)

vi nginx_pod_tcpSocket.yaml

apiVersion: v1

kind: Pod

metadata:

name: tcpsocket

spec:

containers:

- name: nginx

image: 10.0.0.11:5000/nginx:1.13

ports:

- containerPort: 80

args:

- /bin/sh

- -c

- tail -f /etc/hosts

livenessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 3

4.3.6 Readiness probe with httpGet (join the load balancer once ready)

vi nginx-rc-httpGet.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: readiness

spec:

replicas: 2

selector:

app: readiness

template:

metadata:

labels:

app: readiness

spec:

containers:

- name: readiness

image: 10.0.0.11:5000/nginx:1.13

ports:

- containerPort: 80

readinessProbe:

httpGet:

path: /qiangge.html

port: 80

initialDelaySeconds: 3

periodSeconds: 3

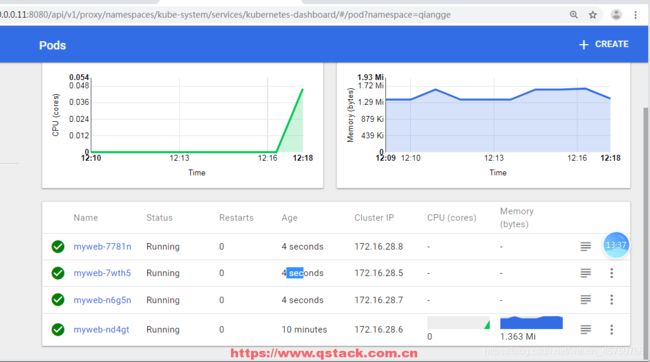

4.4 Dashboard service (manage k8s resources from a web UI; monitor memory and CPU)

1: Upload and load the image, then tag it

2: Create the dashboard deployment and service

3: Open http://10.0.0.11:8080/ui/

4.5 Accessing a service through the apiserver reverse proxy

A service can be reached from outside in two ways:

- NodePort type

type: NodePort

ports:

- port: 80

targetPort: 80

nodePort: 30008

- ClusterIP type (through the api-server reverse proxy)

type: ClusterIP

ports:

- port: 80

targetPort: 80

In a URL, # marks an anchor.

http://10.0.0.11:8080/api/v1/proxy/namespaces/<namespace>/services/<service name>/

# example:

http://10.0.0.11:8080/api/v1/proxy/namespaces/qiangge/services/wordpress

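5: k8s elastic scaling

Elastic scaling in k8s needs the heapster monitoring add-on.

heapster collects the data; cadvisor on each node gathers the metrics.

heapster needs an influxdb database behind it.

grafana draws graphs from the heapster database.

Finally, the dashboard displays the grafana graphs.

5.1 Installing heapster monitoring

1: Upload and load the images, then tag them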

docker_heapster_influxdb.tar.gz

docker_heapster_grafana.tar.gz

docker_heapster.tar.gz

ls *.tar.gz

for n in *.tar.gz; do docker load -i "$n"; done

docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 10.0.0.11:5000/heapster_grafana:v2.6.0

docker tag docker.io/kubernetes/heapster_influxdb:v0.5 10.0.0.11:5000/heapster_influxdb:v0.5

docker tag docker.io/kubernetes/heapster:canary 10.0.0.11:5000/heapster:canary

2: Upload the configuration files, then run kubectl create -f .

Edit the configuration files first:

# heapster-controller.yaml

spec:

nodeName: 10.0.0.13

containers:

- name: heapster

image: 10.0.0.11:5000/heapster:canary

imagePullPolicy: IfNotPresent

#influxdb-grafana-controller.yaml

spec:

nodeName: 10.0.0.13

containers:

3: Open the dashboard to verify

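5.2 Elastic scaling

Elastic scaling adjusts the number of pods dynamically: under heavy load, when average CPU usage is high, pods are added automatically; under light load, when average CPU usage is low, pods are removed automatically. It depends on monitoring to track CPU usage.

A scaling rule defines the maximum for scale-out, the minimum for scale-in, and the trigger condition, for example an average CPU usage of 60%.

kubectl autoscale creates an HPA resource.

HPA (Horizontal Pod Autoscaler) is a Kubernetes (k8s) resource object that scales the number of pods in collections such as StatefulSet, ReplicationController, and ReplicaSet according to certain metrics, so that the services running on them adapt to changes in those metrics.

HPA currently supports four metric types: Resource, Object, External, and Pods. The stable autoscaling/v1 API only supports scaling on CPU; the beta autoscaling/v2beta2 API adds memory and custom metrics, which are carried as annotations when used through autoscaling/v1.

1: Edit the rc configuration file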

containers:

- name: myweb

image: 10.0.0.11:5000/nginx:1.13

ports:

- containerPort: 80

resources:

limits:

cpu: 100m

requests:

cpu: 100m

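2: Create the autoscaling rule (command-line test)

kubectl autoscale deploy nginx-deployment --max=8 --min=1 --cpu-percent=5

Here deploy is the resource type, nginx-deployment is the resource name, --max=8 is the maximum number of pod replicas, --min=1 is the minimum number, and --cpu-percent=5 is the CPU threshold that triggers scaling (about 60% is a typical production value).

3: Test

yum install httpd-tools.x86_64

ab -n 1000000 -c 40 http://10.0.0.12:33218/index.html

kubectl get hpa (shows the current load)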
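The number of replicas the HPA aims for follows the documented rule desired = ceil(current replicas × current metric / target metric), bounded by the configured minimum and maximum. The Python sketch below shows that arithmetic under simplifying assumptions: it ignores the tolerance band and stabilization behaviour of the real controller, and `desired_replicas` is a name made up for this example.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas, max_replicas):
    """ceil(current * currentMetric / targetMetric), clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# Rule from the command above: --min=1 --max=8 --cpu-percent=5
print(desired_replicas(1, 20, 5, 1, 8))
print(desired_replicas(4, 50, 5, 1, 8))
```

With a target as low as 5%, even modest load from `ab` pushes the deployment towards its maximum of 8 replicas, which is why such a small value is handy for testing.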

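6: Persistent storage

Persistent data: the data is still there after a container is deleted and recreated, similar to a volume mounted with docker run -v.

6.1 k8s storage type emptyDir (log storage; created and deleted together with the pod)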

apiVersion: v1

kind: ReplicationController

metadata:

name: mysql

spec:

replicas: 1

selector:

app: mysql

template:

metadata:

labels:

app: mysql

spec:

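# emptyDir volume: an empty directory that follows the pod's life cycle (suited to logs); volumes sits at the same level as containers, and the name must match the volumeMounts entry below

volumes:

- name: mysq-data

emptyDir: {}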

containers:

- name: mysql

image: 10.0.0.11:5000/mysql:5.7

ports:

- containerPort: 3306

# mount the volume at the directory to persist

volumeMounts:

- name: mysq-data

mountPath: /var/lib/mysql

env:

- name: MYSQL_ROOT_PASSWORD

value: '123456'

6.2 hostPath (stores data on the local node; drawback: data is not shared between nodes, so sharing requires nfs)

apiVersion: v1

kind: ReplicationController

metadata:

name: mysql

spec:

replicas: 1

selector:

app: mysql

template:

metadata:

labels:

app: mysql

spec:

# hostPath volume; the name must match the volumeMounts entry below

volumes:

- name: mysq-data

hostPath:

path: /data/mysql-data

containers:

- name: mysql

image: 10.0.0.11:5000/mysql:5.7

ports:

- containerPort: 3306

# set the mount directory

volumeMounts:

- mountPath: /var/lib/mysql

name: mysq-data

env:

- name: MYSQL_ROOT_PASSWORD

value: '123456'

6.3 k8s storage type nfs

① Install nfs-utils on all nodes

yum install nfs-utils -y

② Create the shared directory on the server (master)

mkdir /data

③ Edit the nfs exports file on the server (master)

vim /etc/exports

/data 10.0.0.0/24(rw,sync,no_root_squash,no_all_squash)

Restart nfs on all nodes

systemctl restart rpcbind

systemctl enable rpcbind

systemctl restart nfs

systemctl enable nfs

④ Edit the yml file

spec:
  nodeName: 10.0.0.13
  volumes:                          # volume definitions
    - name: mysql
      nfs:                          # nfs volume type
        path: /data/tomcat_mysql    # exported directory; must be created beforehand on the nfs server (master)
        server: 10.0.0.11           # nfs server address
  containers:
    - name: mysql
      image: 10.0.0.11:5000/mysql:5.7
      ports:
        - containerPort: 3306
      volumeMounts:
        - mountPath: /var/lib/mysql # directory inside the container to persist
          name: mysql

⑤ Finally, check on the node: df -Th|grep nfs

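6.4 pv and pvc

pv: persistent volume, a global resource that belongs to the whole k8s cluster

pvc: persistent volume claim, a local resource that belongs to one namespace

A complete k8s project configuration needs persistent storage.

6.4.1: Creating a pv and pvc

6.4.2: Creating mysql-rc, using the volume in the pod template

volumes:

- name: mysql

persistentVolumeClaim:

claimName: tomcat-mysql

6.4.3: Verifying persistence

Verification method 1: delete the mysql pod; the database data is not lost

kubectl delete pod mysql-gt054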

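7: Continuous deployment to k8s with Jenkins

| IP address | Service | Memory |
|---|---|---|
| 10.0.0.11 | kube-apiserver 8080 | 1G |
| 10.0.0.12 | kube-apiserver 8080 | 1G |
| 10.0.0.13 | jenkins(tomcat + jdk) 8080 | 2G |

7.1: Installing GitLab and pushing the code (on a node machine or any other server)

- Git global settings (do not use the master node: port 8080 conflicts with the api-server)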

git config --global user.name "hahakuang2009"

git config --global user.email "[email protected]"

- Unpack the code locally and upload it

wget http://192.168.12.253/file/yiliao.tar.gz

tar xf yiliao.tar.gz

Change into the code directory:

cd /opt/yiliao

- Write the dockerfile (test it yourself before pushing the code)

vim dockerfile

FROM 10.0.0.11:5000/nginx:1.13

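ADD . /usr/share/nginx/html

- Initialize the repository:

git init

Add the new files to the Git index:

git add .

Commit locally:

git commit -m 'first commit'

Add the remote repository address:

git remote add origin https://gitee.com/hahakuang2009/yiliao.git

Push to the remote repository: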

git push -u origin master

7.2 Installing Jenkins and building Docker images automatically

1: Install Jenkins

cd /opt/

wget http://192.168.12.201/191216/apache-tomcat-8.0.27.tar.gz

wget http://192.168.12.201/191216/jdk-8u102-linux-x64.rpm

wget http://192.168.12.201/191216/jenkin-data.tar.gz

wget http://192.168.12.201/191216/jenkins.war

rpm -ivh jdk-8u102-linux-x64.rpm

mkdir /app -p

tar xf apache-tomcat-8.0.27.tar.gz -C /app

rm -fr /app/apache-tomcat-8.0.27/webapps/*

mv jenkins.war /app/apache-tomcat-8.0.27/webapps/ROOT.war

tar xf jenkin-data.tar.gz -C /root

/app/apache-tomcat-8.0.27/bin/startup.sh

netstat -lntup |grep 8080

2:访问jenkins

访问http://10.0.0.12:8080/,默认账号密码admin:123456

3:配置jenkins拉取gitlab代码凭据

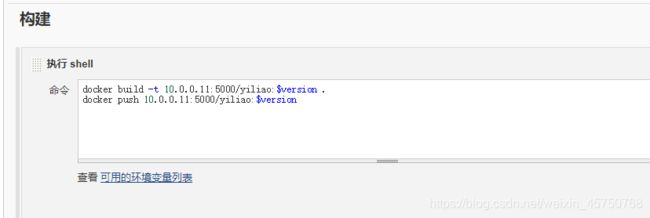

点击jenkins立即构建,自动构建docker镜像并上传到私有仓库

docker build -t 10.0.0.11:5000/yiliao:$version .

docker push 10.0.0.11:5000/yiliao:$version

7.3 Updating the code: recommit and tag

7.4 Rolling back to an old version

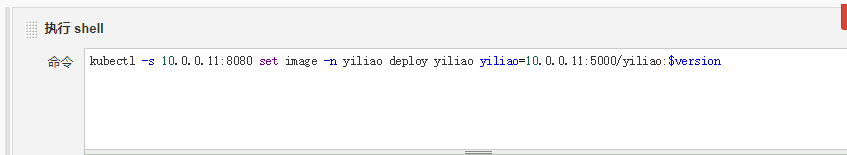

kubectl -s 10.0.0.11:8080 set image -n yiliao deploy yiliao yiliao=10.0.0.11:5000/yiliao:$version

- Create the svc

kubectl expose -n yiliao deployment yiliao --port=80 --target-port=80 --type=NodePort

- Create the namespace

kubectl create namespace yiliao

8. k8s high availability: master nodes with keepalived

8.1: Installing and configuring a highly available etcd cluster

# install etcd on all nodes

yum install etcd -y

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="node1" # name of this member

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.11:2380" # address used to sync data with peers

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379" # address used to serve clients

ETCD_INITIAL_CLUSTER="node1=http://10.0.0.11:2380,node2=http://10.0.0.12:2380,node3=http://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl enable etcd

systemctl restart etcd

[root@k8s-master tomcat_demo]# etcdctl cluster-health

member 9e80988e833ccb43 is healthy: got healthy result from http://10.0.0.11:2379

member a10d8f7920cc71c7 is healthy: got healthy result from http://10.0.0.13:2379

member abdc532bc0516b2d is healthy: got healthy result from http://10.0.0.12:2379

cluster is healthy
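When this check is scripted, for example before restarting flanneld, the output above can be parsed rather than read by eye. The Python sketch below only relies on the line format shown in this output; the `unhealthy` and `unreachable` keywords in the pattern are assumptions about how failing members are reported, and `parse_cluster_health` is a hypothetical helper.

```python
import re

# Matches lines such as: member 9e80988e833ccb43 is healthy: got healthy result from ...
MEMBER_RE = re.compile(r"^member (\w+) is (healthy|unhealthy|unreachable)")


def parse_cluster_health(output):
    """Return ({member id: state}, cluster_is_healthy) from etcdctl cluster-health output."""
    members = {}
    cluster_ok = False
    for line in output.splitlines():
        line = line.strip()
        match = MEMBER_RE.match(line)
        if match:
            members[match.group(1)] = match.group(2)
        elif line == "cluster is healthy":
            cluster_ok = True
    return members, cluster_ok


sample = """member 9e80988e833ccb43 is healthy: got healthy result from http://10.0.0.11:2379
member a10d8f7920cc71c7 is healthy: got healthy result from http://10.0.0.13:2379
member abdc532bc0516b2d is healthy: got healthy result from http://10.0.0.12:2379
cluster is healthy"""
members, ok = parse_cluster_health(sample)
print(len(members), ok)
```

A three-member cluster like this one keeps working with one member down, so a script might reasonably proceed as long as at least two members report healthy.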

# update the flannel configuration

vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.11:2379,http://10.0.0.12:2379,http://10.0.0.13:2379"

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'

# etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16","Backend": {"Type": "vxlan"} }'

systemctl restart flanneld

systemctl restart docker

8.2 Installing and configuring api-server, controller-manager, scheduler on master01 (127.0.0.1:8080)

vim /etc/kubernetes/apiserver

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379,http://10.0.0.12:2379,http://10.0.0.13:2379"

vim /etc/kubernetes/config

KUBE_MASTER="--master=http://127.0.0.1:8080"

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service kube-scheduler.service

8.3 Installing and configuring api-server, controller-manager, scheduler on master02 (127.0.0.1:8080)

yum install kubernetes-master.x86_64 -y

scp -rp 10.0.0.11:/etc/kubernetes/apiserver /etc/kubernetes/apiserver

scp -rp 10.0.0.11:/etc/kubernetes/config /etc/kubernetes/config

systemctl stop kubelet.service

systemctl disable kubelet.service

systemctl stop kube-proxy.service

systemctl disable kube-proxy.service

systemctl enable kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

systemctl restart kube-apiserver.service

8.4 Installing and configuring Keepalived on master01 and master02

yum install keepalived.x86_64 -y

vi /etc/keepalived/keepalived.conf

# master01 configuration:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_11

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.10

}

}

# master02 configuration:

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_12

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.10

}

}

systemctl enable keepalived

systemctl start keepalived

8.5: Pointing kubelet and kube-proxy on all nodes at the api-server VIP

vim /etc/kubernetes/kubelet

KUBELET_API_SERVER="--api-servers=http://10.0.0.10:8080"

vim /etc/kubernetes/config

KUBE_MASTER="--master=http://10.0.0.10:8080"

systemctl restart kubelet.service kube-proxy.service

9. Binary installation of k8s with HTTPS transport

9.1 k8s architecture

- etcd to etcd

- api-server to etcd

- api-server to kubelet

- api-server to kube-proxy

- flannel to etcd

9.2 Installing k8s

Prepare the environment (set the IP address, hostname, and hosts entries)

| Host | IP | Software |
|---|---|---|
| k8s-master | 10.0.0.11 | etcd,api-server,controller-manager,scheduler |
| k8s-node1 | 10.0.0.12 | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node2 | 10.0.0.13 | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node3 | 10.0.0.14 | kubelet,kube-proxy,docker,flannel |

9.2.1 Issuing certificates:

Prepare the certificate tools

cfssl (generates certificates)

cfssl-certinfo (verifies certificates)

cfssl-json (writes the JSON output of cfssl to certificate files)

On node3 (the certificate issuing host)

- [root@k8s-node3 ~]# mkdir /opt/softs

[root@k8s-node3 ~]# cd /opt/softs

[root@k8s-node3 softs]# rz -E

wget http://192.168.12.253/k8s-tools/cfssl

wget http://192.168.12.253/k8s-tools/cfssl-certinfo

wget http://192.168.12.253/k8s-tools/cfssl-json

[root@k8s-node3 softs]# ls

cfssl cfssl-certinfo cfssl-json

- [root@k8s-node3 softs]# chmod +x /opt/softs/*

[root@k8s-node3 softs]# ln -s /opt/softs/* /usr/bin/

- [root@k8s-node3 softs]# mkdir /opt/certs

[root@k8s-node3 softs]# cd /opt/certs

Edit the CA configuration file

vi /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}
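
The profiles above are easier to compare side by side. The following sketch is not part of the original procedure: it loads a compacted copy of the same ca-config.json and converts each profile's expiry to years. The helper name `hours_to_years` is made up for illustration, and a year is assumed to be 365 days.

```python
import json

# Compact copy of the ca-config.json shown above.
CA_CONFIG = json.loads("""
{
  "signing": {
    "default": {"expiry": "175200h"},
    "profiles": {
      "server": {"expiry": "175200h",
                 "usages": ["signing", "key encipherment", "server auth"]},
      "client": {"expiry": "175200h",
                 "usages": ["signing", "key encipherment", "client auth"]},
      "peer":   {"expiry": "175200h",
                 "usages": ["signing", "key encipherment", "server auth", "client auth"]}
    }
  }
}
""")


def hours_to_years(expiry: str) -> float:
    """Convert a cfssl-style expiry such as '175200h' to years (365-day years assumed)."""
    if not expiry.endswith("h"):
        raise ValueError(f"expected an hour value like '8760h', got {expiry!r}")
    return int(expiry[:-1]) / (24 * 365)


for name, profile in CA_CONFIG["signing"]["profiles"].items():
    years = hours_to_years(profile["expiry"])
    print(f"{name}: {years:g} years, usages={profile['usages']}")
```

The peer profile carries both "server auth" and "client auth", which fits its later use for the etcd members: each member both accepts and opens connections to the others.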

Edit the CA certificate signing request (CSR) configuration file

vi /opt/certs/ca-csr.json

{

"CN": "kubernetes-ca",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

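Generate the CA certificate and private key

- Generate the CA certificate first; it is then used to sign the other certificates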

[root@k8s-node3 certs]#cfssl gencert -initca ca-csr.json | cfssl-json -bare ca -

2020/09/27 17:20:56 [INFO] generating a new CA key and certificate from CSR

2020/09/27 17:20:56 [INFO] generate received request

2020/09/27 17:20:56 [INFO] received CSR

2020/09/27 17:20:56 [INFO] generating key: rsa-2048

2020/09/27 17:20:56 [INFO] encoded CSR

2020/09/27 17:20:56 [INFO] signed certificate with serial number 409112456326145160001566370622647869686523100724

[root@k8s-node3 certs]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

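9.2.2 Deploying the etcd cluster (etcd–etcd)

| Host | IP | Role |
|---|---|---|
| k8s-master | 10.0.0.11 | etcd leader |
| k8s-node1 | 10.0.0.12 | etcd follower |
| k8s-node2 | 10.0.0.13 | etcd follower |

Issue the certificate used for communication between etcd nodes

vi /opt/certs/etcd-peer-csr.json

{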

"CN": "etcd-peer",

"hosts": [

"10.0.0.11",

"10.0.0.12",

"10.0.0.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

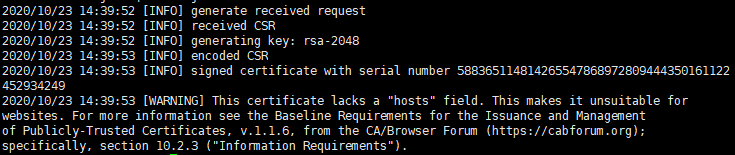

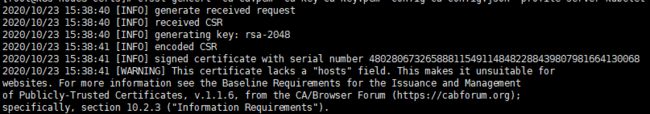

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

2020/09/27 17:29:49 [INFO] generate received request

2020/09/27 17:29:49 [INFO] received CSR

2020/09/27 17:29:49 [INFO] generating key: rsa-2048

2020/09/27 17:29:49 [INFO] encoded CSR

2020/09/27 17:29:49 [INFO] signed certificate with serial number 15140302313813859454537131325115129339480067698

2020/09/27 17:29:49 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls etcd-peer*

etcd-peer.csr etcd-peer-csr.json etcd-peer-key.pem etcd-peer.pem

Install the etcd service

On k8s-master, k8s-node1, and k8s-node2:

yum install etcd -y

# On node3, copy the certificates to the /etc/etcd directory of each etcd node

[root@k8s-node3 certs]# scp -rp *.pem [email protected]:/etc/etcd/

[root@k8s-node3 certs]# scp -rp *.pem [email protected]:/etc/etcd/

[root@k8s-node3 certs]# scp -rp *.pem [email protected]:/etc/etcd/

On the master node

[root@k8s-master ~]# chown -R etcd:etcd /etc/etcd/*.pem

vim /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.0.0.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_NAME="node1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_PEER_AUTO_TLS="true"

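On node1 and node2, change the following lines (the values for k8s-node1 are shown; on k8s-node2 use 10.0.0.13 and ETCD_NAME="node3", matching ETCD_INITIAL_CLUSTER)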

vim /etc/etcd/etcd.conf

ETCD_LISTEN_PEER_URLS="https://10.0.0.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.12:2379,http://127.0.0.1:2379"

ETCD_NAME="node2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.12:2379,http://127.0.0.1:2379"
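
Only five settings differ between the three etcd.conf files, and all of them follow from ETCD_INITIAL_CLUSTER. As a rough sketch (the function name `per_node_settings` is hypothetical, and the client port 2379 plus the extra local http listener are copied from the master's file above), the per-node values can be derived like this:

```python
# ETCD_INITIAL_CLUSTER exactly as set in etcd.conf above.
INITIAL_CLUSTER = (
    "node1=https://10.0.0.11:2380,"
    "node2=https://10.0.0.12:2380,"
    "node3=https://10.0.0.13:2380"
)


def per_node_settings(initial_cluster: str) -> dict:
    """Map each etcd member name to the settings that are specific to that member."""
    settings = {}
    for member in initial_cluster.split(","):
        name, peer_url = member.split("=", 1)
        # "https://10.0.0.12:2380" -> "10.0.0.12"
        host = peer_url.split("//", 1)[1].rsplit(":", 1)[0]
        client_urls = f"https://{host}:2379,http://127.0.0.1:2379"
        settings[name] = {
            "ETCD_LISTEN_PEER_URLS": peer_url,
            "ETCD_LISTEN_CLIENT_URLS": client_urls,
            "ETCD_NAME": name,
            "ETCD_INITIAL_ADVERTISE_PEER_URLS": peer_url,
            "ETCD_ADVERTISE_CLIENT_URLS": client_urls,
        }
    return settings


nodes = per_node_settings(INITIAL_CLUSTER)
# Print the lines to change on the third member (host k8s-node2).
for key, value in nodes["node3"].items():
    print(f'{key}="{value}"')
```

Note that the etcd member names (node1 to node3) are offset from the host names: member node1 runs on k8s-master, node2 on k8s-node1, and node3 on k8s-node2.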

# Start etcd on all three nodes at the same time

systemctl start etcd

systemctl enable etcd

# Verify

[root@k8s-master ~]# etcdctl member list

55fcbe0adaa45350: name=node3 peerURLs=https://10.0.0.13:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.13:2379 isLeader=false

cebdf10928a06f3c: name=node1 peerURLs=https://10.0.0.11:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.11:2379 isLeader=false

f7a9c20602b8532e: name=node2 peerURLs=https://10.0.0.12:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.12:2379 isLeader=true
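
For scripted health checks it can help to parse this output instead of reading it by eye. The sketch below assumes the v2-style `etcdctl member list` line format shown above (an ID, a colon, then space-separated key=value fields); `parse_members` is an illustrative helper, not an etcd API.

```python
# Output of `etcdctl member list`, copied from the verification step above.
MEMBER_LIST = """\
55fcbe0adaa45350: name=node3 peerURLs=https://10.0.0.13:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.13:2379 isLeader=false
cebdf10928a06f3c: name=node1 peerURLs=https://10.0.0.11:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.11:2379 isLeader=false
f7a9c20602b8532e: name=node2 peerURLs=https://10.0.0.12:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.12:2379 isLeader=true
"""


def parse_members(text: str) -> list:
    """Turn each 'id: key=value key=value ...' line into a dict."""
    members = []
    for line in text.strip().splitlines():
        member_id, rest = line.split(": ", 1)
        fields = dict(item.split("=", 1) for item in rest.split())
        fields["id"] = member_id
        members.append(fields)
    return members


members = parse_members(MEMBER_LIST)
leaders = [m["name"] for m in members if m["isLeader"] == "true"]
print(f"{len(members)} members, leader: {leaders}")
```

In this run the leader is member node2 (host k8s-node1), not the master: etcd elects its leader at runtime, so the roles in the planning table are only nominal.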

9.2.3 Installing the master node

Install the api-server service

Upload kubernetes-server-linux-amd64-v1.15.4.tar.gz to node3, then extract it

[root@k8s-node3 softs]# ls

cfssl cfssl-certinfo cfssl-json kubernetes-server-linux-amd64-v1.15.4.tar.gz

[root@k8s-node3 softs]# tar xf kubernetes-server-linux-amd64-v1.15.4.tar.gz

[root@k8s-node3 softs]#ls

cfssl cfssl-certinfo cfssl-json kubernetes kubernetes-server-linux-amd64-v1.15.4.tar.gz

[root@k8s-node3 softs]# cd /opt/softs/kubernetes/server/bin/

[root@k8s-node3 bin]# scp -rp kube-apiserver kube-controller-manager kube-scheduler kubectl [email protected]:/usr/sbin/

[email protected]’s password:

kube-apiserver 100% 157MB 45.8MB/s 00:03

kube-controller-manager 100% 111MB 51.9MB/s 00:02

kube-scheduler 100% 37MB 49.3MB/s 00:00

kubectl 100% 41MB 42.4MB/s 00:00

Issue the client certificate

[root@k8s-node3 bin]# cd /opt/certs/

[root@k8s-node3 certs]# vi /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json | cfssl-json -bare client

[root@k8s-node3 certs]# ls client*

client.csr client-csr.json client-key.pem client.pem

Issue the kube-apiserver certificate

[root@k8s-node3 certs]# vi /opt/certs/apiserver-csr.json

{

"CN": "apiserver",

"hosts": [

"127.0.0.1",

"10.254.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.0.0.11",

"10.0.0.12",

"10.0.0.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

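# Note: 10.254.0.1 is the first IP of the clusterIP range and is the internal address pods use to reach the api-server, so it must be listed in hosts. oldqiang lost a lot of time to this.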

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssl-json -bare apiserver

[root@k8s-node3 certs]#ls apiserver*

apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem
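
The 10.254.0.1 entry in the hosts list is not arbitrary: it is the first usable address of the service range 10.254.0.0/16 that this guide passes to kube-apiserver as --service-cluster-ip-range. A small sketch with Python's standard `ipaddress` module shows the derivation (the helper name `first_service_ip` is made up for illustration):

```python
import ipaddress

# Service range used for --service-cluster-ip-range in this guide.
SERVICE_CIDR = "10.254.0.0/16"

# The "hosts" list from apiserver-csr.json above.
APISERVER_HOSTS = [
    "127.0.0.1",
    "10.254.0.1",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "10.0.0.11",
    "10.0.0.12",
    "10.0.0.13",
]


def first_service_ip(cidr: str) -> str:
    """Return the first usable address of a service CIDR (network address + 1)."""
    network = ipaddress.ip_network(cidr)
    return str(network.network_address + 1)


kubernetes_service_ip = first_service_ip(SERVICE_CIDR)
print(kubernetes_service_ip, kubernetes_service_ip in APISERVER_HOSTS)
```

If the service range is changed, the certificate has to be reissued with the new first address, otherwise pods that reach the api-server through the kubernetes service will fail TLS verification.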

配置api-server服务

master节点

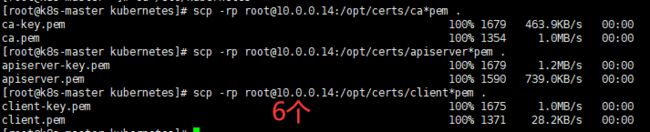

#拷贝证书

[root@k8s-master ~]# mkdir /etc/kubernetes

[root@k8s-master ~]# cd /etc/kubernetes

[root@k8s-master kubernetes]# scp -rp [email protected]:/opt/certs/ca*pem .

ca-key.pem

ca.pem

[root@k8s-master kubernetes]# scp -rp [email protected]:/opt/certs/apiserver*pem .

apiserver-key.pem

apiserver.pem

[root@k8s-master kubernetes]# scp -rp [email protected]:/opt/certs/client*pem .

client-key.pem

client.pem

[root@k8s-master kubernetes]# ls

apiserver-key.pem apiserver.pem ca-key.pem ca.pem client-key.pem client.pem

# api-server audit log policy

[root@k8s-master kubernetes]# vi audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

[Service]

ExecStart=/usr/sbin/kube-apiserver \

--audit-log-path /var/log/kubernetes/audit-log \

--audit-policy-file /etc/kubernetes/audit.yaml \

--authorization-mode RBAC \

--client-ca-file /etc/kubernetes/ca.pem \

--requestheader-client-ca-file /etc/kubernetes/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile /etc/kubernetes/ca.pem \

--etcd-certfile /etc/kubernetes/client.pem \

--etcd-keyfile /etc/kubernetes/client-key.pem \

--etcd-servers https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379 \

--service-account-key-file /etc/kubernetes/ca-key.pem \

--service-cluster-ip-range 10.254.0.0/16 \

--service-node-port-range 30000-59999 \

--kubelet-client-certificate /etc/kubernetes/client.pem \

--kubelet-client-key /etc/kubernetes/client-key.pem \

--log-dir /var/log/kubernetes/ \

--logtostderr=false \

--tls-cert-file /etc/kubernetes/apiserver.pem \

--tls-private-key-file /etc/kubernetes/apiserver-key.pem \

--v 2

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-master kubernetes]# mkdir /var/log/kubernetes

[root@k8s-master kubernetes]# systemctl daemon-reload

[root@k8s-master kubernetes]# systemctl start kube-apiserver.service

[root@k8s-master kubernetes]# systemctl enable kube-apiserver.service

# If something goes wrong, check the logs

# Verify

[root@k8s-master kubernetes]# kubectl get cs

Install the controller-manager service

[root@k8s-master kubernetes]# vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=kube-apiserver.service

[Service]

ExecStart=/usr/sbin/kube-controller-manager \

--cluster-cidr 172.18.0.0/16 \

--log-dir /var/log/kubernetes/ \

--master http://127.0.0.1:8080 \

--service-account-private-key-file /etc/kubernetes/ca-key.pem \

--service-cluster-ip-range 10.254.0.0/16 \

--root-ca-file /etc/kubernetes/ca.pem \

--logtostderr=false \

--v 2

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-master kubernetes]# systemctl daemon-reload

[root@k8s-master kubernetes]# systemctl start kube-controller-manager.service

[root@k8s-master kubernetes]# systemctl enable kube-controller-manager.service

Install the scheduler service

[root@k8s-master kubernetes]# vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=kube-apiserver.service

[Service]

ExecStart=/usr/sbin/kube-scheduler \

--log-dir /var/log/kubernetes/ \

--master http://127.0.0.1:8080 \

--logtostderr=false \

--v 2

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-master kubernetes]# systemctl daemon-reload

[root@k8s-master kubernetes]# systemctl start kube-scheduler.service

[root@k8s-master kubernetes]# systemctl enable kube-scheduler.service

Verify the master node

[root@k8s-master kubernetes]# kubectl get cs

9.2.4 Installing the node components (switch to the node machines)

Install the kubelet service

Issue the certificate on node3

[root@k8s-node3 bin]# cd /opt/certs/

[root@k8s-node3 certs]# vi kubelet-csr.json

{

"CN": "kubelet-node",

"hosts": [

"127.0.0.1",

"10.0.0.11",

"10.0.0.12",

"10.0.0.13",

"10.0.0.14",

"10.0.0.15"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

[root@k8s-node3 certs]# ls kubelet*

kubelet.csr kubelet-csr.json kubelet-key.pem kubelet.pem

# Generate the kubeconfig file that kubelet needs at startup

[root@k8s-node3 certs]# ln -s /opt/softs/kubernetes/server/bin/kubectl /usr/sbin/

# Set the cluster parameters

[root@k8s-node3 certs]# kubectl config set-cluster myk8s \

--certificate-authority=/opt/certs/ca.pem \

--embed-certs=true \

--server=https://10.0.0.11:6443 \

--kubeconfig=kubelet.kubeconfig

Cluster “myk8s” set.

# Set the client credentials

[root@k8s-node3 certs]# kubectl config set-credentials k8s-node --client-certificate=/opt/certs/client.pem --client-key=/opt/certs/client-key.pem --embed-certs=true --kubeconfig=kubelet.kubeconfig

User “k8s-node” set.

# Create the context

[root@k8s-node3 certs]# kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=k8s-node \

--kubeconfig=kubelet.kubeconfig

Context “myk8s-context” created.

# Make the new context the default

[root@k8s-node3 certs]# kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

Switched to context “myk8s-context”.

# Check the generated kubeconfig file

[root@k8s-node3 certs]# ls kubelet.kubeconfig

kubelet.kubeconfig
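
The four `kubectl config` commands above each fill in one part of the same file. As a rough illustration of the resulting structure (not a replacement for kubectl; `build_kubeconfig` is a hypothetical helper and the certificate contents are placeholders), the file can be modelled like this:

```python
import base64
import json


def build_kubeconfig(cluster, server, ca_pem, user, cert_pem, key_pem, context):
    """Return a kubeconfig as a dict, with certificates embedded as base64
    (the effect of --embed-certs=true)."""

    def b64(data: bytes) -> str:
        return base64.b64encode(data).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Config",
        # kubectl config set-cluster
        "clusters": [{
            "name": cluster,
            "cluster": {"server": server, "certificate-authority-data": b64(ca_pem)},
        }],
        # kubectl config set-credentials
        "users": [{
            "name": user,
            "user": {
                "client-certificate-data": b64(cert_pem),
                "client-key-data": b64(key_pem),
            },
        }],
        # kubectl config set-context
        "contexts": [{"name": context, "context": {"cluster": cluster, "user": user}}],
        # kubectl config use-context
        "current-context": context,
    }


# Placeholder bytes stand in for the real ca.pem, client.pem and client-key.pem.
kubeconfig = build_kubeconfig(
    cluster="myk8s",
    server="https://10.0.0.11:6443",
    ca_pem=b"<contents of ca.pem>",
    user="k8s-node",
    cert_pem=b"<contents of client.pem>",
    key_pem=b"<contents of client-key.pem>",
    context="myk8s-context",
)
print(json.dumps(kubeconfig, indent=2))
```

Because the certificates are embedded, the single kubelet.kubeconfig file is all that has to be copied to a node for kubelet to authenticate as the user k8s-node.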

On the master node

[root@k8s-master ~]# cd /root/

vi k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

[root@k8s-master ~]# kubectl create -f k8s-node.yaml

clusterrolebinding.rbac.authorization.k8s.io/k8s-node created

On the node machines

# Install docker-ce

(installation steps omitted)

systemctl start docker

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

systemctl restart docker.service

systemctl enable docker.service

[root@k8s-node1 ~]# mkdir /etc/kubernetes

[root@k8s-node1 ~]# cd /etc/kubernetes

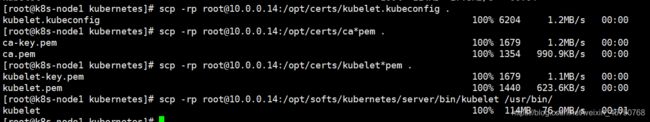

[root@k8s-node1 kubernetes]# scp -rp [email protected]:/opt/certs/kubelet.kubeconfig .

[root@k8s-node1 kubernetes]# scp -rp [email protected]:/opt/certs/ca*pem .

[root@k8s-node1 kubernetes]# scp -rp [email protected]:/opt/certs/kubelet*pem .

[root@k8s-node1 kubernetes]# scp -rp [email protected]:/opt/softs/kubernetes/server/bin/kubelet /usr/bin/

On each node (repeat these steps on every node, setting --hostname-override to that node's own IP)

[root@k8s-node1 kubernetes]# mkdir /var/log/kubernetes

[root@k8s-node1 kubernetes]# vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/bin/kubelet \

--anonymous-auth=false \

--cgroup-driver systemd \

--cluster-dns 10.254.230.254 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on=false \

--client-ca-file /etc/kubernetes/ca.pem \

--tls-cert-file /etc/kubernetes/kubelet.pem \

--tls-private-key-file /etc/kubernetes/kubelet-key.pem \

--hostname-override 10.0.0.12 \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig /etc/kubernetes/kubelet.kubeconfig \

--log-dir /var/log/kubernetes/ \

--pod-infra-container-image t29617342/pause-amd64:3.0 \

--logtostderr=false \

--v=2

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-node1 kubernetes]# systemctl daemon-reload

[root@k8s-node1 kubernetes]# systemctl start kubelet.service

[root@k8s-node1 kubernetes]# systemctl enable kubelet.service

Verify from the master node

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.0.12 Ready 15m v1.15.4

10.0.0.13 Ready 16s v1.15.4

Install the kube-proxy service

Issue the certificate on node3

[root@k8s-node3 ~]# cd /opt/certs/

[root@k8s-node3 certs]# vi /opt/certs/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

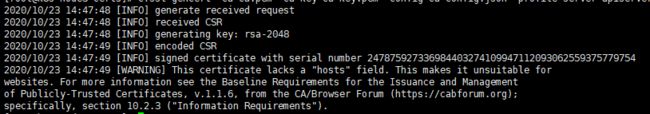

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

2020/09/28 15:01:44 [INFO] generate received request

2020/09/28 15:01:44 [INFO] received CSR

2020/09/28 15:01:44 [INFO] generating key: rsa-2048

2020/09/28 15:01:45 [INFO] encoded CSR

2020/09/28 15:01:45 [INFO] signed certificate with serial number 450584845939836824125879839467665616307124786948

2020/09/28 15:01:45 [WARNING] This certificate lacks a “hosts” field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 (“Information Requirements”).

[root@k8s-node3 certs]# ls kube-proxy-c*

kube-proxy-client.csr kube-proxy-client-key.pem kube-proxy-client.pem kube-proxy-csr.json

# Generate the kubeconfig file that kube-proxy needs at startup

[root@k8s-node3 certs]# kubectl config set-cluster myk8s \

--certificate-authority=/opt/certs/ca.pem \

--embed-certs=true \

--server=https://10.0.0.11:6443 \

--kubeconfig=kube-proxy.kubeconfig

Cluster “myk8s” set.

[root@k8s-node3 certs]# kubectl config set-credentials kube-proxy \

--client-certificate=/opt/certs/kube-proxy-client.pem \

--client-key=/opt/certs/kube-proxy-client-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

User “kube-proxy” set.

[root@k8s-node3 certs]# kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

Context “myk8s-context” created.

[root@k8s-node3 certs]# kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

Switched to context "myk8s-context".

[root@k8s-node3 certs]# ls kube-proxy.kubeconfig

kube-proxy.kubeconfig

[root@k8s-node3 certs]# scp -rp kube-proxy.kubeconfig [email protected]:/etc/kubernetes/

[email protected]’s password:

kube-proxy.kubeconfig

[root@k8s-node3 certs]# scp -rp kube-proxy.kubeconfig [email protected]:/etc/kubernetes/

[email protected]’s password:

kube-proxy.kubeconfig

[root@k8s-node3 bin]# scp -rp kube-proxy [email protected]:/usr/bin/

[email protected]’s password:

kube-proxy

[root@k8s-node3 bin]# scp -rp kube-proxy [email protected]:/usr/bin/

[email protected]’s password:

kube-proxy

Configure kube-proxy on node1

[root@k8s-node1 ~]# vi /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

ExecStart=/usr/bin/kube-proxy \

--kubeconfig /etc/kubernetes/kube-proxy.kubeconfig \

--cluster-cidr 172.18.0.0/16 \

--hostname-override 10.0.0.12 \

--logtostderr=false \

--v=2

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-node1 ~]# systemctl daemon-reload

[root@k8s-node1 ~]# systemctl start kube-proxy.service

[root@k8s-node1 ~]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

9.2.5 Configuring the flannel network

Install flannel on all nodes

yum install flannel -y

mkdir /opt/certs/

Distribute the certificates from node3

[root@k8s-node3 ~]# cd /opt/certs/

[root@k8s-node3 certs]# scp -rp ca.pem client*pem [email protected]:/opt/certs/

[email protected]’s password:

ca.pem

client-key.pem

client.pem

[root@k8s-node3 certs]# scp -rp ca.pem client*pem [email protected]:/opt/certs/

[email protected]’s password:

ca.pem

client-key.pem

client.pem

[root@k8s-node3 certs]# scp -rp ca.pem client*pem [email protected]:/opt/certs/

[email protected]’s password:

ca.pem

client-key.pem

client.pem

On the master node

Create the flannel key in etcd

# This key defines the IP address range for pods

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16","Backend": {"Type": "vxlan"} }'

# Note: this may fail with the following error

Error: x509: certificate signed by unknown authority

# Running the command once more fixes it

Configure and start flannel

vi /etc/sysconfig/flanneld

Line 4: FLANNEL_ETCD_ENDPOINTS="https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379"

Line 8 (unchanged): FLANNEL_ETCD_PREFIX="/atomic.io/network"

Line 11: FLANNEL_OPTIONS="-etcd-cafile=/opt/certs/ca.pem -etcd-certfile=/opt/certs/client.pem -etcd-keyfile=/opt/certs/client-key.pem"

systemctl start flanneld.service

systemctl enable flanneld.service

# Verify

[root@k8s-master ~]# ifconfig flannel.1

flannel.1: flags=4163

inet 172.18.43.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::30d9:50ff:fe47:599e prefixlen 64 scopeid 0x20

ether 32:d9:50:47:59:9e txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

On node1 and node2

[root@k8s-node1 ~]# vim /usr/lib/systemd/system/docker.service

Change ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

to ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

and add the line ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

[root@k8s-node1 ~]# systemctl daemon-reload

[root@k8s-node1 ~]# systemctl restart docker

# Verify: docker0 should now be in the 172.18 network

[root@k8s-node1 ~]# ifconfig docker0

docker0: flags=4099

inet 172.18.41.1 netmask 255.255.255.0 broadcast 172.18.41.255

ether 02:42:07:3e:8a:09 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
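
The check above can be expressed precisely: each node's docker0 address should sit in its own subnet carved out of the 172.18.0.0/16 network stored in etcd. The sketch below uses Python's standard `ipaddress` module; the /24 per-node size is taken from the docker0 netmask 255.255.255.0 shown above, and `node_subnet` is an illustrative helper name.

```python
import ipaddress

# "Network" value written to /atomic.io/network/config above.
POD_NETWORK = ipaddress.ip_network("172.18.0.0/16")


def node_subnet(address: str, prefix_len: int = 24) -> ipaddress.IPv4Network:
    """Return the per-node subnet containing `address`, or fail if the
    address is outside the pod network."""
    interface = ipaddress.ip_interface(f"{address}/{prefix_len}")
    if interface.ip not in POD_NETWORK:
        raise ValueError(f"{address} is not inside {POD_NETWORK}")
    return interface.network


# docker0 on node1 and flannel.1 on the master, as shown in the outputs above.
print(node_subnet("172.18.41.1"))
print(node_subnet("172.18.43.0"))
# Number of /24 node subnets that fit into the /16 pod network.
print(len(list(POD_NETWORK.subnets(new_prefix=24))))
```

If docker0 still shows an address outside 172.18.0.0/16 (for example Docker's default bridge range), dockerd was most likely started without $DOCKER_NETWORK_OPTIONS.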

Verify the k8s cluster installation

[root@k8s-master ~]# kubectl run nginx --image=nginx:1.13 --replicas=2

# Wait a while, then check the pod status

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6459cd46fd-8lln4 1/1 Running 0 3m27s 172.18.41.2 10.0.0.12

nginx-6459cd46fd-xxt24 1/1 Running 0 3m27s 172.18.96.2 10.0.0.13

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

service/nginx exposed

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.254.0.1 443/TCP 6h46m

nginx NodePort 10.254.160.83 80:41760/TCP 3s

# Open http://10.0.0.12:41760 in a browser; if the page loads, the cluster works
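
The port 41760 is only possible because the api-server in this guide is started with --service-node-port-range 30000-59999. A short sketch (the helper `parse_port_range` is made up for illustration) compares that range with the Kubernetes default of 30000-32767:

```python
def parse_port_range(spec: str) -> range:
    """Parse a 'low-high' port range (inclusive) into a Python range."""
    low, high = spec.split("-")
    return range(int(low), int(high) + 1)


CONFIGURED = parse_port_range("30000-59999")  # value used in kube-apiserver.service
DEFAULT = parse_port_range("30000-32767")     # Kubernetes default NodePort range

node_port = 41760  # port assigned to the nginx service above
print(node_port in CONFIGURED, node_port in DEFAULT)
```

On a cluster that keeps the default range, the same `kubectl expose` would be assigned a port between 30000 and 32767 instead.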

More updates to follow

Autoscaling

--horizontal-pod-autoscaler-use-rest-clients

Accessing a service through the api-server reverse proxy

http://10.0.0.11:8001/api/v1/namespaces/default/services/myweb/proxy/

http://10.0.0.11:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
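
Both URLs follow the api-server's service proxy path pattern, /api/v1/namespaces/<namespace>/services/<[scheme:]name[:port]>/proxy/. The sketch below rebuilds them; `service_proxy_url` is an illustrative helper, and the base address http://10.0.0.11:8001 is simply the one used in the URLs above.

```python
def service_proxy_url(base: str, namespace: str, service: str,
                      scheme: str = "", port: str = "") -> str:
    """Build the api-server proxy URL for a service.

    When a scheme or port is given, the service segment takes the form
    'scheme:name:port' (either part may be empty)."""
    target = service
    if scheme or port:
        target = f"{scheme}:{service}:{port}"
    return f"{base}/api/v1/namespaces/{namespace}/services/{target}/proxy/"


BASE = "http://10.0.0.11:8001"
print(service_proxy_url(BASE, "default", "myweb"))
print(service_proxy_url(BASE, "kube-system", "kubernetes-dashboard", scheme="https"))
```

The `https:` prefix in the dashboard URL tells the proxy to speak HTTPS to the backend, which the dashboard requires since it serves on 8443 with TLS.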

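10. Static pods and Secret resources

10.1 Static pods and dynamic pods

A pod consists of at least two containers: one infrastructure (pause) container plus the business container(s).

Dynamic pod: the pod's YAML definition comes from etcd (through the api-server).

Static pod: kubelet reads the definition from a local configuration file.

10.2 Creating a static pod (on a node)

- Create the directory

mkdir /etc/kubernetes/manifest

cd /etc/kubernetes/manifest

- Modify the kubelet startup parameters (point kubelet at the manifest directory)

vim /usr/lib/systemd/system/kubelet.service

--pod-manifest-path /etc/kubernetes/manifest \

- Reload the unit files and restart the service

systemctl daemon-reload

systemctl restart kubelet.service

- Create the YAML file (with the labels removed)

vi k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80

The pod is created as soon as the file is saved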

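10.3 Secret resources (protecting passwords and other credentials)

10.3.1 Introduction

A Secret works much like a ConfigMap, but is intended for sensitive data such as passwords, certificates, private keys, tokens, and SSH keys.

10.3.2 Method 1

- Install the Harbor image registry

(installation steps omitted)

- Create the secret from the command line

kubectl create secret docker-registry regcred --docker-server=blog.oldqiang.com --docker-username=admin --docker-password=a123456 [email protected]

Here docker-registry is the secret type, regcred is the secret name, blog.oldqiang.com is the registry address, admin is the registry username, a123456 is the registry password, and the last argument is the email address.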

# Verify

[root@k8s-master ~]# kubectl get secrets

NAME TYPE DATA AGE

default-token-vgc4l kubernetes.io/service-account-token 3 2d19h

regcred kubernetes.io/dockerconfigjson 1 114s
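
The single data entry of a kubernetes.io/dockerconfigjson secret is a Docker config file stored base64-encoded under the key .dockerconfigjson. The following sketch reconstructs that payload from the credentials used above to show what is actually stored; `docker_config_json` is an illustrative helper, and the exact field order kubectl writes may differ.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str) -> str:
    """Build the Docker config JSON that a docker-registry secret wraps."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    return json.dumps({
        "auths": {
            server: {"username": username, "password": password, "auth": auth}
        }
    })


payload = docker_config_json("blog.oldqiang.com", "admin", "a123456")
# Value stored under data[".dockerconfigjson"] in the secret.
secret_data = base64.b64encode(payload.encode()).decode("ascii")

# Anyone who can read the secret can reverse the encoding:
decoded = json.loads(base64.b64decode(secret_data))
print(decoded["auths"]["blog.oldqiang.com"]["username"])
```

This is why a Secret should be treated as encoded rather than encrypted: base64 is reversible without any key, so access to secrets still has to be restricted through RBAC.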

- Create the pod

vim k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  nodeName: 10.0.0.12
  imagePullSecrets:
    - name: regcred
  containers:
    - name: nginx
      image: blog.oldqiang.com/oldboy/nginx:1.13
      ports:
        - containerPort: 80

10.3.3 Method 2

kubectl create secret docker-registry harbor-secret --namespace=default --docker-username=admin --docker-password=a123456 --docker-server=blog.oldqiang.com

vi k8s_sa_harbor.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: docker-image
  namespace: default
imagePullSecrets:
- name: harbor-secret

vi k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  serviceAccount: docker-image
  containers:
    - name: nginx
      image: blog.oldqiang.com/oldboy/nginx:1.13
      ports:
        - containerPort: 80

10.4 ConfigMap resources

vi /opt/81.conf

server {
    listen 81;
    server_name localhost;
    root /html;
    index index.html index.htm;
    location / {
    }
}

kubectl create configmap 81.conf --from-file=/opt/81.conf

# Verify

kubectl get cm

vi k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: nginx-config
          configMap:
            name: 81.conf
            items:
              - key: 81.conf
                path: 81.conf
      containers:
      - name: nginx
        image: nginx:1.13
        volumeMounts:
          - name: nginx-config
            mountPath: /etc/nginx/conf.d
        ports:
        - containerPort: 80
          name: port1
        - containerPort: 81
          name: port2

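10.5 Common k8s services: deploying CoreDNS

Introduction:

skydns / kube-dns: one pod with 4 containers.

CoreDNS: one pod with 1 container.

RBAC in k8s

RBAC stands for Role-Based Access Control.

Roles: a Role is local and belongs to a single namespace;

a ClusterRole is cluster-wide and applies across all namespaces.

Users: ServiceAccount (sa, namespaced)

Bindings: RoleBinding and ClusterRoleBinding

10.5.1 Deploying the DNS service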

vi coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        beta.kubernetes.io/os: linux
      nodeName: 10.0.0.13
      containers:
      - name: coredns
        image: coredns/coredns:1.3.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        - name: tmp
          mountPath: /tmp
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
      - name: tmp
        emptyDir: {}
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.254.230.254
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

# Test

yum install bind-utils.x86_64 -y

dig @10.254.230.254 kubernetes.default.svc.cluster.local +short
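
The name queried above follows the cluster's service naming scheme, <service>.<namespace>.svc.<cluster-domain>, where cluster.local is the value passed to kubelet as --cluster-domain in this guide. A tiny sketch (the helper `service_fqdn` is made up for illustration) builds such names:

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Return the fully qualified DNS name of a Kubernetes service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# The api-server's own service, as queried with dig above.
print(service_fqdn("kubernetes"))
# The DNS service deployed in this section.
print(service_fqdn("kube-dns", namespace="kube-system"))
```

For the first name the dig query is expected to return the kubernetes service's cluster IP, which is 10.254.0.1 in this cluster.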

10.5.2 Deploying the dashboard service (monitoring)

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

vi kubernetes-dashboard.yaml

# Change the image address

image: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

# Change the service type to NodePort

spec:
  type: NodePort
  ports:
    - port: 443
      nodePort: 30001
      targetPort: 8443

kubectl create -f kubernetes-dashboard.yaml

# Open https://10.0.0.12:30001 in Firefox

vim dashboard_rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system


# Workaround when Google Chrome cannot open the kubernetes dashboard

mkdir key && cd key

# Generate a certificate

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=10.0.0.11'

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# Delete the existing certificate secret

kubectl delete secret kubernetes-dashboard-certs -n kube-system

# Create the new certificate secret

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system

# Check the pods

kubectl get pod -n kube-system

# Delete the pods so that they are recreated and pick up the new secret

kubectl delete pod --all -n kube-system

10.6 Mapping an external service into k8s

[root@k8s-master yingshe]# cat mysql_endpoint.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: mysql
subsets:
- addresses:
  - ip: 10.0.0.13
  ports:
  - name: mysql
    port: 3306
    protocol: TCP

[root@k8s-master yingshe]# vim mysql_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
    targetPort: 3306
  type: ClusterIP

kube-proxy: ipvs mode

More updates to follow

Autoscaling

--horizontal-pod-autoscaler-use-rest-clients

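Syntax:

kubectl taint node [node] key=value:[effect]

where [effect] is one of: [ NoSchedule | PreferNoSchedule | NoExecute ]

NoSchedule: Pods that do not tolerate the taint are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid the node, but may still use it

NoExecute: Pods are not scheduled onto the node, and Pods already running on it are evicted

Examples: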

kubectl taint node node1 key1=value1:NoSchedule

kubectl taint node node1 key1=value1:NoExecute

kubectl taint node node1 key2=value2:NoSchedule
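The `key=value:effect` argument is easy to mistype. Here is a small sketch that builds it and rejects unknown effects, plus the matching removal form (the same key and effect followed by `-`). Both function names are hypothetical helpers, not kubectl features.

```shell
# taint_arg KEY VALUE EFFECT
# Print the key=value:effect argument for `kubectl taint`, or fail if EFFECT
# is not one of the three values listed above. (Hypothetical helper.)
taint_arg() {
  case "$3" in
    NoSchedule|PreferNoSchedule|NoExecute)
      printf '%s=%s:%s\n' "$1" "$2" "$3" ;;
    *)
      echo "unknown effect: $3" >&2
      return 1 ;;
  esac
}

# untaint_arg KEY EFFECT
# Print the argument that removes a taint: the same key and effect followed by "-".
untaint_arg() {
  printf '%s:%s-\n' "$1" "$2"
}
```

Usage: `kubectl taint node node1 "$(taint_arg key1 value1 NoSchedule)"` adds the taint, `kubectl taint node node1 "$(untaint_arg key1 NoSchedule)"` removes it, and `kubectl describe node node1 | grep Taints` shows the current state.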

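Ingress: layer-7 load balancing (load balancing by domain name)

Introduction:

Traefik is an open-source reverse proxy and load balancer. Its main advantage is that it integrates directly with common microservice systems and can configure itself automatically and dynamically. It currently supports backends such as Docker, Swarm, Mesos/Marathon, Mesos, Kubernetes, Consul, Etcd, Zookeeper, BoltDB, and Rest API.

Two kinds of resources are created:

- Start the ingress controller (RBAC + ingress + the controller's Deployment + the controller's Service)

- Create the ingress rules

Traefik in practice

1: Create the RBAC resources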

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: kube-system

Create these directly in the cluster:

kubectl create -f rbac.yaml

serviceaccount "traefik-ingress-controller" created
clusterrole.rbac.authorization.k8s.io "traefik-ingress-controller" created
clusterrolebinding.rbac.authorization.k8s.io "traefik-ingress-controller" created
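Once the three objects exist, the binding can be checked by impersonating the service account with `kubectl auth can-i`. This is a sketch: the `KUBECTL` variable and the helper names `sa_user` and `can_traefik` are hypothetical and only there to allow a dry run.

```shell
# Command used to talk to the cluster; override it (e.g. KUBECTL=echo) for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# sa_user NAMESPACE NAME
# Print the user name under which a service account authenticates.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# can_traefik VERB RESOURCE
# Ask the API server whether the traefik service account may do VERB on RESOURCE.
can_traefik() {
  $KUBECTL auth can-i "$1" "$2" --as="$(sa_user kube-system traefik-ingress-controller)"
}
```

Given the ClusterRole above, `can_traefik list ingresses` should answer yes and `can_traefik delete secrets` should answer no.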

2: Deploy the Traefik service

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress-lb
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: traefik-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-lb
        name: traefik-ingress-lb
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      tolerations:
        - operator: "Exists"
      nodeSelector:
        kubernetes.io/hostname: master
      containers:
        # --api and --kubernetes are Traefik 1.x flags; if traefik:latest
        # resolves to 2.x or later, pin a 1.x tag (for example traefik:v1.7)
        - image: traefik:latest
          imagePullPolicy: IfNotPresent
          name: traefik-ingress-lb
          ports:
            - name: http
              containerPort: 80
            - name: admin
              containerPort: 8080
          args:
            - --api
            - --kubernetes
            - --logLevel=INFO
---
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress-lb
  ports:
    - protocol: TCP
      port: 80
      name: web
    - protocol: TCP
      port: 8080
      name: admin
  type: NodePort

Create the resource objects above directly:

$ kubectl create -f traefik.yaml

deployment.extensions "traefik-ingress-controller" created
service "traefik-ingress-service" created
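The Deployment pins the pod to a node labelled `kubernetes.io/hostname: master`. If no node carries that label, the pod stays Pending. This is a sketch of two quick checks; the `KUBECTL` variable and both function names are hypothetical and only there to allow a dry run.

```shell
# Command used to talk to the cluster; override it (e.g. KUBECTL=echo) for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# traefik_node_check: list the nodes that match the Deployment's nodeSelector.
traefik_node_check() {
  $KUBECTL get nodes -l kubernetes.io/hostname=master
}

# traefik_pods: show the controller pods and the nodes they landed on.
traefik_pods() {
  $KUBECTL get pods -n kube-system -l k8s-app=traefik-ingress-lb -o wide
}
```

If `traefik_node_check` lists no node, adjust the nodeSelector value to a hostname label that exists in the cluster.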

Traefik also provides a web UI, served on port 8080 above. To make it reachable, the Service is set to type NodePort here:

[root@master ~]# kubectl get svc -n kube-system

NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                       AGE
kube-dns                  ClusterIP   10.254.0.10      <none>        53/UDP,53/TCP,9153/TCP        11d
kubernetes-dashboard      NodePort    10.254.227.243   <none>        443:30000/TCP                 126m
traefik-ingress-service   NodePort    10.254.0.16      <none>        80:30293/TCP,8080:32512/TCP   23s

Now enter 10.0.0.11:32512 in a browser to reach the Traefik dashboard:
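The node port is assigned at random, so it will differ between clusters. This is a small sketch that extracts it from the PORT(S) column shown above. The function name `node_port_for` is a hypothetical helper.

```shell
# node_port_for PORTS SERVICE_PORT
# PORTS is the PORT(S) column, e.g. "80:30293/TCP,8080:32512/TCP".
# Print the node port mapped to SERVICE_PORT, or fail if there is none.
node_port_for() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F'[:/]' -v want="$2" '
      $1 == want && NF == 3 { print $2; found = 1 }
      END { exit !found }
    '
}
```

For the output above, `node_port_for "80:30293/TCP,8080:32512/TCP" 8080` prints 32512. On a live cluster, the same value should be available from `kubectl get svc traefik-ingress-service -n kube-system -o jsonpath='{.spec.ports[?(@.name=="admin")].nodePort}'`.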


3: Create the Ingress object

Create an ingress object