This article is only an exercise in writing a crawler. No data was saved, and the site's URLs and API endpoints have been masked.

Target: http://xxx.com

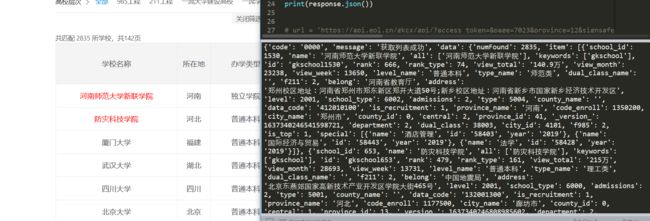

By analyzing the network requests, we find these two JSON files:

https://xxx.cn/www/2.0/schoolprovinceindex/2018/318/12/1/1.json

https://xxx.cn/www/2.0/schoolspecialindex/2018/31/11/1/1.json

Here 318 is the school ID and 12 is the province ID, which stands for Tianjin.

The two files contain, respectively, the school's admission cutoff scores by province and its cutoff scores by major.
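Judging from the two URLs, both endpoints appear to follow the pattern `/<index name>/<year>/<school_id>/<province_id>/1/1.json`. The following is a minimal sketch of a URL builder that assumes this pattern holds. `build_score_url` is a hypothetical helper, and `xxx.cn` is the masked host. The meaning of the trailing `1/1` segments was not determined, so they are copied as observed.

```python
# Hypothetical helper: builds the two score-index URLs seen in the
# browser's network panel. The host is the article's masked placeholder;
# the trailing "1/1.json" segments are copied as observed (meaning unknown).
BASE = "https://xxx.cn/www/2.0"

# Only Tianjin's ID (12) is known from the analysis above.
PROVINCE_TIANJIN = 12

INDEXES = ("schoolprovinceindex", "schoolspecialindex")


def build_score_url(index_name: str, year: int, school_id: int,
                    province_id: int = PROVINCE_TIANJIN) -> str:
    """Return e.g. .../schoolprovinceindex/2018/318/12/1/1.json."""
    if index_name not in INDEXES:
        raise ValueError(f"unknown index: {index_name}")
    return f"{BASE}/{index_name}/{year}/{school_id}/{province_id}/1/1.json"


if __name__ == "__main__":
    # Per-province cutoffs and per-major cutoffs for school 318 in Tianjin.
    print(build_score_url("schoolprovinceindex", 2018, 318))
    print(build_score_url("schoolspecialindex", 2018, 318))
```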

So the code for this page is:

```python
import requests

HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;",
    "Accept-Language": "zh-CN,zh;q=0.8",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0",
    'Referer': 'https://xxx.cn/school/search'
}

url = 'https://xxx.cn/www/2.0/schoolprovinceindex/2018/1217/12/1/1.json'

response = requests.get(url, headers=HEADERS)

print(response.json())
```

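Next we need a way to get the school IDs. Analyzing the requests the same way, we find this endpoint:

https://xxx.cn/gkcx/api/?uri=apigkcx/api/school/hotlists

It is called with a POST request carrying the following data: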

```python
data = {
    "access_token": "", "admissions": "", "central": "", "department": "",
    "dual_class": "", "f211": "", "f985": "", "is_dual_class": "",
    "keyword": "", "page": 2, "province_id": "", "request_type": 1,
    "school_type": "", "size": 20, "sort": "view_total", "type": "",
    "uri": "apigkcx/api/school/hotlists"
}
```

One of the parameters is page, which is the page number.
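Only `page` changes between requests. One option, then, is to keep the captured form data as a template and copy it for each page. The following is a minimal sketch of that approach. `HOTLISTS_TEMPLATE` and `hotlists_payload` are hypothetical names. The field values are copied from the captured request above, except that the template's default page is 1.

```python
# Captured form fields for the hotlists request (values from the browser;
# only "page" is varied here, everything else is sent as observed).
HOTLISTS_TEMPLATE = {
    "access_token": "", "admissions": "", "central": "", "department": "",
    "dual_class": "", "f211": "", "f985": "", "is_dual_class": "",
    "keyword": "", "page": 1, "province_id": "", "request_type": 1,
    "school_type": "", "size": 20, "sort": "view_total", "type": "",
    "uri": "apigkcx/api/school/hotlists",
}


def hotlists_payload(page: int) -> dict:
    """Return a fresh copy of the template with the requested page number."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    payload = dict(HOTLISTS_TEMPLATE)  # shallow copy; the template is never mutated
    payload["page"] = page
    return payload
```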

So the code for this part is:

```python
import requests

HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;",
    "Accept-Language": "zh-CN,zh;q=0.8",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0",
    'Referer': 'https://xxx.cn/school/search'
}

url = 'https://xxx.cn/gkcx/api/?uri=apigkcx/api/school/hotlists'

data = {
    "access_token": "", "admissions": "", "central": "", "department": "",
    "dual_class": "", "f211": "", "f985": "", "is_dual_class": "",
    "keyword": "", "page": 2, "province_id": "", "request_type": 1,
    "school_type": "", "size": 20, "sort": "view_total", "type": "",
    "uri": "apigkcx/api/school/hotlists"
}

response = requests.post(url, headers=HEADERS, data=data)

print(response.json())
```

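After a little processing we get the school IDs. To keep the output tidy and make later processing easier, we store each school in a dictionary: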

```python
import requests

HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;",
    "Accept-Language": "zh-CN,zh;q=0.8",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0",
    'Referer': 'https://xxx.cn/school/search'
}

school_info = []


def get_schoolid(pagenum):
    url = 'https://xxx.cn/gkcx/api/?uri=apigkcx/api/school/hotlists'
    data = {
        "access_token": "", "admissions": "", "central": "", "department": "",
        "dual_class": "", "f211": "", "f985": "", "is_dual_class": "",
        "keyword": "", "page": pagenum, "province_id": "", "request_type": 1,
        "school_type": "", "size": 20, "sort": "view_total", "type": "",
        "uri": "apigkcx/api/school/hotlists"
    }
    response = requests.post(url, headers=HEADERS, data=data)
    school_json = response.json()
    schools = school_json['data']['item']
    for school in schools:
        school_id = school['school_id']
        school_name = school['name']
        school_dict = {
            'id': school_id,
            'name': school_name
        }
        school_info.append(school_dict)


def main():
    get_schoolid(2)
    print(school_info)


if __name__ == '__main__':
    main()
```

The result is as follows:

Because we later want to go through the school IDs on every page, we kept the pagenum parameter so that the function can be called in a loop.
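Here is one way to use `pagenum` in a loop, sketched under some assumptions. The article does not say how the API signals the last page. This version therefore stops at the first page whose `data.item` list is empty, or at a `max_pages` cap. The default of 142 is the page count mentioned at the end of the article.

- `collect_schools` and the injected `fetch_page` callable are hypothetical.
- Passing the fetcher in keeps the loop testable without network access.
- An optional `delay` spaces out requests.

```python
import time
from typing import Callable, Dict, List


def collect_schools(fetch_page: Callable[[int], dict],
                    max_pages: int = 142,
                    delay: float = 0.0) -> List[Dict[str, object]]:
    """Walk pages 1..max_pages and collect {'id', 'name'} dicts.

    fetch_page(page) must return the decoded JSON of one hotlists page,
    shaped like {'data': {'item': [{'school_id': ..., 'name': ...}, ...]}}.
    """
    schools: List[Dict[str, object]] = []
    for page in range(1, max_pages + 1):
        items = (fetch_page(page).get("data") or {}).get("item") or []
        if not items:  # assumed end-of-list signal: an empty page
            break
        schools.extend({"id": s["school_id"], "name": s["name"]} for s in items)
        if delay:
            time.sleep(delay)  # pause between requests to go easy on the server
    return schools
```

With the article's code, `fetch_page` would wrap the `requests.post` call from `get_schoolid` and return `response.json()`.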

Next, we add functions that fetch each school's summary scores and its detailed per-major cutoff scores:

import requests

HEADERS = {

"Accept": "text/html,application/xhtml+xml,application/xml;",

"Accept-Language": "zh-CN,zh;q=0.8",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0",

'Referer': 'https://xxx.cn/school/search'

}

school_info = []

simple_list = []

pro_list = []

name_list = []

def get_schoolid(pagenum):

url = 'https://xxx.cn/gkcx/api/?uri=apigkcx/api/school/hotlists'

data = {"access_token":"","admissions":"","central":"","department":"","dual_class":"","f211":"","f985":"","is_dual_class":"","keyword":"","page":pagenum,"province_id":"","request_type":1,"school_type":"","size":20,"sort":"view_total","type":"","uri":"apigkcx/api/school/hotlists"}

response = requests.post(url,headers=HEADERS,data=data)

school_json = response.json()

schools = school_json['data']['item']

for school in schools:

school_id = school['school_id']

school_name = school['name']

school_dict = {

'id':school_id,

'name':school_name

}

school_info.append(school_dict)

def get_info(id,name):

simple_url = 'https://xxx.cn/www/2.0/schoolprovinceindex/2018/%s/12/1/1.json'%id

simple_response = requests.get(simple_url,headers=HEADERS)

simple_info = simple_response.json()['data']['item'][0]

simple_infodict = {

'name':name,

'max':simple_info['max'],

'min':simple_info['min'],

'average':simple_info['average'],

'local_batch_name':simple_info['local_batch_name']

}

simple_list.append(simple_infodict)

def get_score(id,name):

professional_url = 'https://xxx.cn/www/2.0/schoolspecialindex/2018/%s/12/1/1.json'%id

professional_response = requests.get(professional_url,headers=HEADERS)

for pro_info in professional_response.json()['data']['item']:

pro_dict = {

'name':name,

'spname':pro_info['spname'],

'max':pro_info['max'],

'min':pro_info['min'],

'average':pro_info['average'],

'min_section':pro_info['min_section'],

'local_batch_name':pro_info['local_batch_name']

}

pro_list.append(pro_dict)

def main():

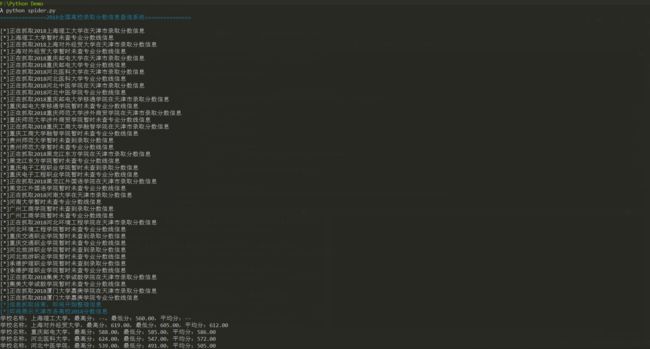

print('\033[0;36m='*15+'2018全国高校录取分数信息查询系统'+'='*15+'\033[0m'+'\n')

get_schoolid(1)

for school in school_info:

id = school['id']

name = school['name']

try:

get_info(id,name)

print('[*]正在抓取2018%s在天津市录取分数信息'%name)

except:

print('[*]%s暂时未查到录取分数信息'%name)

try:

get_score(id,name)

print('[*]正在抓取2018%s专业分数线信息'%name)

except:

print('[*]%s暂时未查专业分数线信息'%name)

print('\033[0;36m[*]信息抓取结束,即将开始整理信息\033[0m')

print('\033[0;36m[*]即将展示天津市各高校2018分数信息\033[0m')

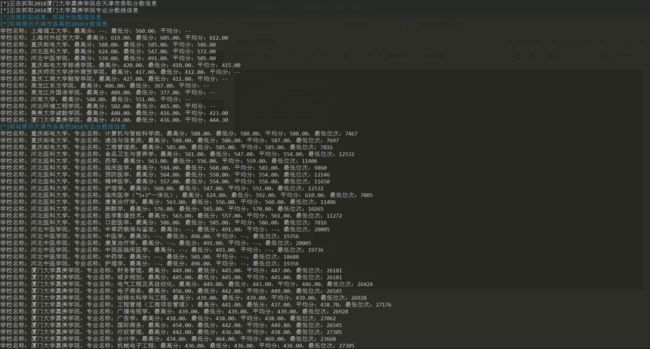

for school in simple_list:

print('学校名称:{name},最高分:{max},最低分:{min},平均分:{average}'.format(**school))

print('\033[0;36m[*]即将展示天津市各高校2018专业分数线信息\033[0m')

for school in pro_list:

print('学校名称:{name},专业名称:{spname},最高分:{max},最低分:{min},平均分:{average},最低位次:{min_section}'.format(**school))

if __name__ == '__main__':

main()
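The `try`/`except` blocks in `main()` lump two cases together: a school that simply has no data (an empty `item` list) and a request that actually failed. A possible alternative is to inspect the decoded JSON explicitly and reserve exception handling for network errors. The following is a minimal sketch of that idea. `extract_summary` and `extract_majors` are hypothetical helpers. The field names are the ones the code above already reads.

```python
from typing import Dict, List, Optional

SUMMARY_FIELDS = ("max", "min", "average", "local_batch_name")
MAJOR_FIELDS = ("spname", "max", "min", "average", "min_section", "local_batch_name")


def _items(payload: object) -> list:
    """Return payload['data']['item'] if it exists and is a list, else []."""
    if not isinstance(payload, dict):
        return []
    data = payload.get("data")
    if not isinstance(data, dict):
        return []
    items = data.get("item")
    return items if isinstance(items, list) else []


def extract_summary(payload: object, name: str) -> Optional[Dict[str, object]]:
    """First summary record for a school, or None if it has no data."""
    items = _items(payload)
    if not items:
        return None
    first = items[0]
    return {"name": name, **{k: first.get(k) for k in SUMMARY_FIELDS}}


def extract_majors(payload: object, name: str) -> List[Dict[str, object]]:
    """One record per major; an empty list means no per-major data."""
    return [{"name": name, **{k: item.get(k) for k in MAJOR_FIELDS}}
            for item in _items(payload)]
```

With these helpers, a `None` or empty result maps to the "no data" message. The `requests.get` call itself would still need its own `except requests.RequestException`.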

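There are 142 pages in total. Since crawling is I/O-bound, multithreading can speed it up, but watch out for variables shared between threads. I have covered Python multithreading in an earlier post, so I won't repeat it here. Next, we can save the results to Excel with pandas: first convert the lists of dictionaries into a DataFrame, then save it as an Excel file.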
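The article does not show its multithreaded version. One common way to avoid the shared-variable problem is to have each worker return its results instead of appending to a global list. `concurrent.futures.ThreadPoolExecutor` then collects those results. The following is a minimal sketch of this approach.

- `crawl_all` is hypothetical.
- `fetch_school` stands in for per-school work that returns a list of dicts, for example a variant of `get_score` that returns rather than appends.
- The one structure that is still shared, an error log, is guarded with a `threading.Lock` to show that pattern.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def crawl_all(schools: List[Dict[str, object]],
              fetch_school: Callable[[Dict[str, object]], List[dict]],
              workers: int = 8) -> List[dict]:
    """Run fetch_school for every school on a thread pool and merge results.

    Each call returns its own list, so workers never write to a shared
    result list; the lock guards the one shared structure, the error log.
    """
    results: List[dict] = []
    errors: List[str] = []
    lock = threading.Lock()

    def task(school):
        try:
            return fetch_school(school)
        except Exception as exc:  # record the failure and keep crawling
            with lock:
                errors.append(f"{school['name']}: {exc}")
            return []

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, whatever order threads finish in
        for rows in pool.map(task, schools):
            results.extend(rows)
    if errors:
        print(f"[*] {len(errors)} school(s) failed")
    return results
```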

The data can also be analyzed with tools such as pyecharts.
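Here is a minimal sketch of the pandas route described above. `pd.DataFrame` accepts a list of dicts directly, producing one row per dict with the keys as columns. `DataFrame.to_excel` then writes the file. Writing `.xlsx` needs an Excel engine such as `openpyxl` to be installed. The helper names `to_frames` and `save_to_excel`, the file name and the sheet names are illustrative assumptions. `simple_list` and `pro_list` are the lists built by the code above.

```python
import pandas as pd

SUMMARY_COLUMNS = ["name", "max", "min", "average", "local_batch_name"]
MAJOR_COLUMNS = ["name", "spname", "max", "min", "average",
                 "min_section", "local_batch_name"]


def to_frames(simple_list, pro_list):
    """Convert the two lists of dicts into DataFrames (one row per dict)."""
    summary = pd.DataFrame(simple_list, columns=SUMMARY_COLUMNS)
    majors = pd.DataFrame(pro_list, columns=MAJOR_COLUMNS)
    return summary, majors


def save_to_excel(simple_list, pro_list, path="tianjin_2018_scores.xlsx"):
    """Write both tables to one workbook, one sheet each (needs openpyxl)."""
    summary, majors = to_frames(simple_list, pro_list)
    with pd.ExcelWriter(path) as writer:
        summary.to_excel(writer, sheet_name="summary", index=False)
        majors.to_excel(writer, sheet_name="majors", index=False)
```

Passing `columns=` fixes the column order and still produces the right headers when a list is empty.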