Hive 部署

https://cwiki.apache.org/confluence/display/Hive/Home#Home-UserDocumentation

https://cwiki.apache.org/confluence/display/Hive/GettingStarted

Apache Hive

Apache Hive™ 数据仓库提供对分布式存储内大数据集的读、写、管理功能,以及提供SQL语法查询。

在 Apache Hadoop™ 之上,Hive 提供如下特性:

- 提供了通过SQL访问数据的工具,以此支持数仓任务:比如ETL,报表,数据分析。

- 提供了对各类型的数据结构化的方法。

- 可以直接访问存储于Apache HDFS™的文件,或者其他数据存储系统:比如Apache HBase™。

- 通过 Apache Tez™, Apache Spark™, or MapReduce执行查询。

- HPL-SQL过程语言。

- 通过Hive LLAP, Apache YARN and Apache Slider子查询。

Hive 提供标准 SQL 功能,并包含了后来的 SQL:2003, SQL:2011, and SQL:2016的很多特性供分析使用。

Hive's SQL 还可以通过 user defined functions (UDFs), user defined aggregates (UDAFs), and user defined table functions (UDTFs)进行user code 扩展。

没有以 "Hive format" 存储数据的说法。 Hive 支持使用逗号、tab分隔符 (CSV/TSV) 文本文件、Apache Parquet™、 Apache ORC™等格式。 用户可以扩展格式,请参阅Developer Guide 下的 File Formats 和 Hive SerDe。

Hive 不是设计于在线分析 (OLTP) 工作,最好用于传统数据仓库任务(离线分析)。

Hive 设计于最大化弹性伸缩(动态的添加主机到Hadoop集群)、性能、可扩展性、容错,以及输入格式的松耦合。

Hive 组件有 HCatalog 和 WebHCat。

- HCatalog 是Hadoop的表和存储管理层,使用户可以使用不同的数据处理工具(比如Pig和MapReduce)轻松地在网格上读写数据。

- WebHCat 提供服务来运行Hadoop MapReduce (or YARN), Pig, Hive 任务。还可以使用HTTP接口(REST 类型)操作Hive元数据。

Installation and Configuration

Requirements

- Java 1.7

注意: Hive versions 1.2 后需要 Java 1.7 及以上的版本。 Hive versions 0.14 to 1.1 适配 Java 1.6 。强烈推荐用户迁移到 Java 1.8 (see HIVE-8607。Java7环境编译,Java8环境测试。). - Hadoop 2.x (首选), 1.x (不被Hive 2.0.0 及以后版本支持).

Hive 版本在0.13之前,支持Hadoop 0.20.x, 0.23.x。 - Hive 生产环境通常为 Linux 和 Windows。 Mac 通常作为开发环境。本文适用于 Linux 和 Mac。Windows环境的配置略有不同。

Installing Hive from a Stable Release

- 解压软件包

[root@hadoop opt]# mv ~/apache-hive-3.1.2-bin.tar.gz /opt

[root@hadoop opt]# tar xzvf apache-hive-3.1.2-bin.tar.gz

[root@hadoop opt]# useradd hive

[root@hadoop opt]# chown -R hive:hive apache-hive-3.1.2-bin

- 设置环境变量

HIVE_HOME指向安装目录 - 添加

$HIVE_HOME/bin到PATH

JAVA_HOME=/usr/local/jdk/jdk1.8.0_202

HADOOP_HOME=/opt/hadoop-3.1.4

HIVE_HOME=/opt/apache-hive-3.1.2-bin

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME:$HIVE_HOME

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME HADOOP_HOME HIVE_HOME

export PATH

export CLASSPATH

Running Hive

Hive 需要调用 Hadoop,如上所示:

- 需要在path配置Hadoop, 或者

- export HADOOP_HOME=

另外,还需要使用如下HDFS命令创建 /tmp 和 /user/hive/warehouse (aka hive.metastore.warehouse.dir) 并在Hive创建表之前设置chmod g+w。

[hadoop@hadoop hadoop-3.1.4]$ $HADOOP_HOME/bin/hadoop fs -mkdir /tmp

[hadoop@hadoop hadoop-3.1.4]$ $HADOOP_HOME/bin/hadoop fs -mkdir /user/hive/

[hadoop@hadoop hadoop-3.1.4]$ $HADOOP_HOME/bin/hadoop fs -mkdir /user/hive/warehouse

[hadoop@hadoop hadoop-3.1.4]$ $HADOOP_HOME/bin/hadoop fs -chmod 777 /tmp

[hadoop@hadoop hadoop-3.1.4]$ $HADOOP_HOME/bin/hadoop fs -chmod g+w /user/hive/warehouse

[hadoop@hadoop hadoop-3.1.4]$ bin/hdfs dfs -ls /

Found 2 items

drwxrwxrwx - hadoop supergroup 0 2020-10-22 18:41 /tmp

drwxr-xr-x - hadoop supergroup 0 2020-10-22 18:42 /user

[hadoop@hadoop hadoop-3.1.4]$ bin/hdfs dfs -ls /user

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2020-10-22 18:39 /user/hadoop

drwxr-xr-x - hadoop supergroup 0 2020-10-22 18:42 /user/hive

[hadoop@hadoop hadoop-3.1.4]$ bin/hdfs dfs -chown -R hive /user/hive

[hadoop@hadoop hadoop-3.1.4]$ bin/hdfs dfs -ls /user

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2020-10-22 18:39 /user/hadoop

drwxr-xr-x - hive supergroup 0 2020-10-22 18:42 /user/hive

[hadoop@hadoop hadoop-3.1.4]$ bin/hdfs dfs -ls /user/hive

Found 1 items

drwxrwxr-x - hive supergroup 0 2020-10-22 18:42 /user/hive/warehouse

测试hive账户上传文件

[hive@hadoop ~]$ ls

test

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -put test

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -ls /user/hive

Found 2 items

-rw-r--r-- 1 hive supergroup 0 2020-10-22 19:06 /user/hive/test

drwxrwxr-x - hive supergroup 0 2020-10-22 18:42 /user/hive/warehouse

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -put test /user/hive/warehouse

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -ls /user/hive/warehouse

Found 1 items

-rw-r--r-- 1 hive supergroup 0 2020-10-22 19:07 /user/hive/warehouse/test

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -rm /user/hive/warehouse/test

Deleted /user/hive/warehouse/test

[hive@hadoop ~]$ $HADOOP_HOME/bin/hdfs dfs -rm /user/hive/test

Deleted /user/hive/test

设置HIVE_HOME

$ export HIVE_HOME=

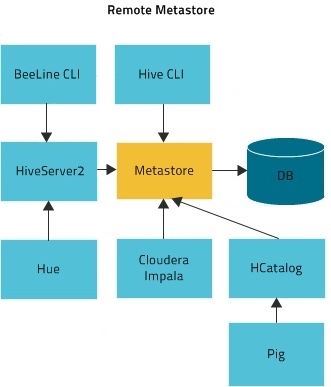

Remote Metastore Server

https://cwiki.apache.org/confluence/display/Hive/AdminManual+Metastore+Administration

在远程metastore的设置中,所有的Hive客户端会连接到metastore server。Metastore server依次查询datastore(比如MySQL)请求元数据。 Metastore server 和 client 以 Thrift 协议通信。自Hive 0.5.0后,你可以使用如下命令启动 Thrift server :

[hive@hadoop apache-hive-3.1.2-bin]$ bin/hive --service metastore &

对于早与版本0.5.0的Hive, 需要通过直接执行Java来运行 Thrift server:

$JAVA_HOME/bin/java -Xmx1024m -Dlog4j.configuration=file://$HIVE_HOME/conf/hms-log4j.properties -Djava.library.path=$HADOOP_HOME/lib/native/Linux-amd64-64/ -cp $CLASSPATH org.apache.hadoop.hive.metastore.HiveMetaStore

如果你直接执行Java,那么你需要配置好JAVA_HOME, HIVE_HOME, HADOOP_HOME;CLASSPATH应该包含Hadoop, Hive (lib and auxlib),and Java jars。

Running Hive CLI

Hive CLI 文档

[hive@hadoop apache-hive-3.1.2-bin]$ bin/hive

which: no hbase in (/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/jdk/jdk1.8.0_202/bin:/opt/hadoop-3.1.4:/opt/apache-hive-3.1.2-bin:/home/hive/.local/bin:/home/hive/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.4/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = b31ef87c-a414-4971-93e9-03cab446385d

Logging initialized using configuration in jar:file:/opt/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive Session ID = b8104850-ccab-46fa-8359-508a61d6a172

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show tables;

OK

Time taken: 1.097 seconds

hive> quit;

Running HiveServer2 and Beeline

自Hive 2.1起,我们需要执行如下的schematool 命令作为初始化步骤。比如,我们可以使用 "derby" 作为 db type。

初始化过程,参考 Hive Schema Tool 。

https://docs.cloudera.com/documentation/enterprise/5-6-x/topics/cdh_ig_hive_metastore_configure.html

https://cwiki.apache.org/confluence/display/Hive/AdminManual+Metastore+Administration

$ $HIVE_HOME/bin/schematool -dbType -initSchema

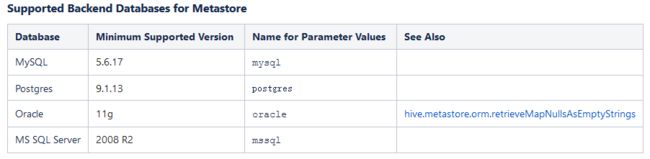

配置Remote Metastore 准备工作

安装mysql5.6,并配置mysql> CREATE DATABASE metastore; mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'hive'; mysql> REVOKE ALL PRIVILEGES, GRANT OPTION FROM 'hive'@'%'; mysql> GRANT ALL PRIVILEGES ON metastore.* TO 'hive'@'%'; mysql> FLUSH PRIVILEGES; mysql> quit;安装mysql-connector-java

[root@hadoop conf]# yum install mysql-connector-java.noarch 并拷贝 [root@hadoop conf]# cp /usr/share/java/mysql-connector-java.jar /opt/apache-hive-3.1.2-bin/lib/拷贝

$HADOOP_HOME/share/hadoop/common/lib/guava-27.0-jre.jar覆盖$HIVE_HOME/lib/guava-19.0.jar

创建hive-site.xml,使metastore连接到MySQL。如下(根据cloudera配置修改):

javax.jdo.option.ConnectionURL

jdbc:mysql://10.0.31.65/metastore

the URL of the MySQL database

javax.jdo.option.ConnectionDriverName

com.mysql.cj.jdbc.Driver

javax.jdo.option.ConnectionUserName

hive

javax.jdo.option.ConnectionPassword

hive

datanucleus.autoCreateSchema

false

datanucleus.fixedDatastore

true

datanucleus.autoStartMechanism

SchemaTable

hive.metastore.uris

thrift://10.0.31.65:9083

IP address (or fully-qualified domain name) and port of the metastore host

hive.metastore.schema.verification

true

执行初始化,指定dbType mysql

[hive@hadoop apache-hive-3.1.2-bin]$ $HIVE_HOME/bin/schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.4/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://10.0.31.65/metastore

Metastore Connection Driver : com.mysql.cj.jdbc.Driver

Metastore connection User: hive

Starting metastore schema initialization to 3.1.0

Initialization script hive-schema-3.1.0.mysql.sql

Initialization script completed

schemaTool completed

[hive@hadoop apache-hive-3.1.2-bin]$

HiveServer2

HiveServer2(HS2)是一个服务器接口,它使远程客户机能够对HIVE执行查询并检索结果。

HiveServer2 有自己的CLI Beeline。因为Beeline而导致HiveCLI 被弃用,因为它缺乏HiveServer2的多用户、安全性和其他功能。

要从shell运行HiveServer2和Beeline:

$ $HIVE_HOME/bin/hiveserver2

$ $HIVE_HOME/bin/beeline -u jdbc:hive2://$HS2_HOST:$HS2_PORT

Beeline启动需要配置 HiveServer2的JDBC URL ,取决于HiveServer2启动的地址和端口。默认是localhost:10000,所以地址类似 jdbc:hive2://localhost:10000。

基于测试目的,可以在同一进程启动 Beeline 和 HiveServer2 ,就类似于 HiveCLI 的使用方式:

$ $HIVE_HOME/bin/beeline -u jdbc:hive2://

Beeline – Command Line Shell

范例:

[hive@hadoop apache-hive-3.1.2-bin]$ bin/hiveserver2 &

[hive@hadoop apache-hive-3.1.2-bin]$ bin/beeline -u jdbc:hive2://

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.4/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://

Hive Session ID = d9889ac7-8b44-417c-a415-a0c35e5de9aa

20/10/28 00:11:48 [main]: WARN session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

0: jdbc:hive2://> show tables;

OK

+-----------+

| tab_name |

+-----------+

+-----------+

No rows selected (2.033 seconds)

0: jdbc:hive2://> !quit

Closing: 0: jdbc:hive2://

HiveServer2 高级配置

配置文件 hive-site.xml

hive --service hiveserver2 --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.root.logger=INFO,console

HiveServer2 客户端

beeline -u jdbc:hive2://192.168.0.51:10000/training_db -n username -p password -e "select current_date()"

beeline -u jdbc:hive2://192.168.0.51:10000/training_db -n impadmin -p impetus --silent=true --outputformat=csv2 -e "select * from stud"

Running HCatalog

如果以二进制版本安装Hive,hcat命令已经在hcatalog/bin目录内了。除了hcat -g和hcat -p,大多情况下hcat都可以用 hive命令代替。注意,hcat命令使用-p标记来设置权限,但是hive使用-p来指派端口。

HCatalog server 和Hive metastore一样,启动了Hive metastore就可以了。

HCatalog CLI 文档

Hive CLI 文档

HCatalog 安装文档.

Hive 0.11.0版本以上,运行HCatalog server

$ $HIVE_HOME/hcatalog/sbin/hcat_server.sh

Hive 0.11.0版本以上,运行HCatalog 命令行工具

$ $HIVE_HOME/hcatalog/bin/hcat

更多信息参考 HCatalog Installation from Tarball and HCatalog CLI in the HCatalog manual.

范例:

[hive@hadoop apache-hive-3.1.2-bin]$ $HIVE_HOME/hcatalog/bin/hcat -e 'show tables;'

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hadoop-3.1.4/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2020-10-28 00:28:33,218 INFO conf.HiveConf: Found configuration file file:/opt/apache-hive-3.1.2-bin/conf/hive-site.xml

Hive Session ID = 1dcb43c9-2c02-4401-8be4-4bc3760d0de6

2020-10-28 00:28:36,048 INFO SessionState: Hive Session ID = 1dcb43c9-2c02-4401-8be4-4bc3760d0de6

2020-10-28 00:28:37,482 INFO session.SessionState: Created HDFS directory: /tmp/hive/hive/1dcb43c9-2c02-4401-8be4-4bc3760d0de6

2020-10-28 00:28:37,504 INFO session.SessionState: Created local directory: /tmp/hive/1dcb43c9-2c02-4401-8be4-4bc3760d0de6

2020-10-28 00:28:37,508 INFO session.SessionState: Created HDFS directory: /tmp/hive/hive/1dcb43c9-2c02-4401-8be4-4bc3760d0de6/_tmp_space.db

2020-10-28 00:28:37,614 INFO ql.Driver: Compiling command(queryId=hive_20201028002837_d4f757e8-ea5c-458f-bacf-afeba2b149a0): show tables

2020-10-28 00:28:40,761 INFO metastore.HiveMetaStoreClient: Trying to connect to metastore with URI thrift://hadoop:9083

2020-10-28 00:28:40,796 INFO metastore.HiveMetaStoreClient: Opened a connection to metastore, current connections: 1

2020-10-28 00:28:40,820 INFO metastore.HiveMetaStoreClient: Connected to metastore.

2020-10-28 00:28:40,820 INFO metastore.RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hive (auth:SIMPLE) retries=1 delay=1 lifetime=0

2020-10-28 00:28:41,147 INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

2020-10-28 00:28:41,209 INFO ql.Driver: Semantic Analysis Completed (retrial = false)

2020-10-28 00:28:41,317 INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)

2020-10-28 00:28:41,453 INFO exec.ListSinkOperator: Initializing operator LIST_SINK[0]

2020-10-28 00:28:41,467 INFO ql.Driver: Completed compiling command(queryId=hive_20201028002837_d4f757e8-ea5c-458f-bacf-afeba2b149a0); Time taken: 3.896 seconds

2020-10-28 00:28:41,467 INFO reexec.ReExecDriver: Execution #1 of query

2020-10-28 00:28:41,468 INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

2020-10-28 00:28:41,468 INFO ql.Driver: Executing command(queryId=hive_20201028002837_d4f757e8-ea5c-458f-bacf-afeba2b149a0): show tables

2020-10-28 00:28:41,485 INFO ql.Driver: Starting task [Stage-0:DDL] in serial mode

2020-10-28 00:28:41,511 INFO ql.Driver: Completed executing command(queryId=hive_20201028002837_d4f757e8-ea5c-458f-bacf-afeba2b149a0); Time taken: 0.043 seconds

OK

2020-10-28 00:28:41,512 INFO ql.Driver: OK

2020-10-28 00:28:41,512 INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

2020-10-28 00:28:41,523 INFO exec.ListSinkOperator: RECORDS_OUT_INTERMEDIATE:0, RECORDS_OUT_OPERATOR_LIST_SINK_0:0,

Time taken: 4.004 seconds

2020-10-28 00:28:41,542 INFO session.SessionState: Deleted directory: /tmp/hive/hive/1dcb43c9-2c02-4401-8be4-4bc3760d0de6 on fs with scheme hdfs

2020-10-28 00:28:41,551 INFO session.SessionState: Deleted directory: /tmp/hive/1dcb43c9-2c02-4401-8be4-4bc3760d0de6 on fs with scheme file

2020-10-28 00:28:41,558 INFO metastore.HiveMetaStoreClient: Closed a connection to metastore, current connections: 0

Running WebHCat (Templeton)

Hive 0.11.0版本以上,运行WebHCat server

$ $HIVE_HOME/hcatalog/sbin/webhcat_server.sh

更多信息参考 WebHCat manual 内的 WebHCat Installation 部分。

[hive@hadoop apache-hive-3.1.2-bin]$ $HIVE_HOME/hcatalog/sbin/webhcat_server.sh

Lenght of string is non zero

usage: /opt/apache-hive-3.1.2-bin/hcatalog/sbin/webhcat_server.sh [start|startDebug|stop|foreground]

start Start the Webhcat Server

startDebug Start the Webhcat Server listening for debugger on port 5005

stop Stop the Webhcat Server

foreground Run the Webhcat Server in the foreground

[hive@hadoop apache-hive-3.1.2-bin]$

[hive@hadoop apache-hive-3.1.2-bin]$

[hive@hadoop apache-hive-3.1.2-bin]$

[hive@hadoop apache-hive-3.1.2-bin]$

[hive@hadoop apache-hive-3.1.2-bin]$ $HIVE_HOME/hcatalog/sbin/webhcat_server.sh start

Lenght of string is non zero

webhcat: starting ...

webhcat: /opt/hadoop-3.1.4/bin/hadoop jar /opt/apache-hive-3.1.2-bin/hcatalog/sbin/../share/webhcat/svr/lib/hive-webhcat-3.1.2.jar org.apache.hive.hcatalog.templeton.Main

webhcat: starting ... started.

webhcat: done