Hadoop Lab 2

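I. Objectives

- Understand the role of HDFS in the Hadoop architecture

- Become proficient with the common shell commands for working with HDFS

- Become familiar with the common Java APIs for working with HDFS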

II. Lab Platform

- Operating system:

- Hadoop version:

- JDK version:

- Java IDE:

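III. Lab Tasks and Requirements

- Use the shell commands provided by Hadoop to complete the following tasks:

- Upload any text file to HDFS. If the target file already exists in HDFS, let the user choose whether to append to the end of the existing file or overwrite it.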

cd /usr/local/hadoop

./bin/hdfs dfs -mkdir -p /user/hadoop/test

./bin/hdfs dfs -put test.txt /user/hadoop/test

./bin/hdfs dfs -appendToFile local.txt /user/hadoop/test/test.txt

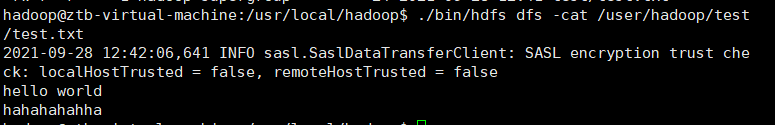

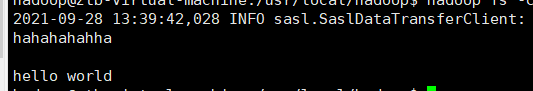

./bin/hdfs dfs -cat /user/hadoop/test/test.txt
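The task asks for a user choice between appending and overwriting. The following is a minimal sketch of that decision only, assuming the caller has already checked existence (for example with `hdfs dfs -test -e`). The class and method names are hypothetical; the sketch just builds the argument list and does not run Hadoop.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: picks the hdfs command line for an upload.
class UploadChoice {
    /**
     * existsInHdfs: result of an earlier existence check on the remote path.
     * append: the user's choice when the remote file already exists.
     */
    static List<String> uploadArgs(boolean existsInHdfs, boolean append,
                                   String local, String remote) {
        if (!existsInHdfs) {
            // Nothing to collide with: plain upload
            return Arrays.asList("hdfs", "dfs", "-put", local, remote);
        }
        if (append) {
            // Add the local content to the end of the remote file
            return Arrays.asList("hdfs", "dfs", "-appendToFile", local, remote);
        }
        // -f overwrites the existing remote file
        return Arrays.asList("hdfs", "dfs", "-put", "-f", local, remote);
    }

    public static void main(String[] args) {
        List<String> cmd = uploadArgs(true, false, "local.txt", "/user/hadoop/test/test.txt");
        System.out.println(String.join(" ", cmd));
        // prints: hdfs dfs -put -f local.txt /user/hadoop/test/test.txt
    }
}
```

The returned list could be handed to `java.lang.ProcessBuilder` on a machine where `hdfs` is on the PATH.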

- Download a specified file from HDFS. If a local file with the same name already exists, automatically rename the downloaded file.

if [ -e ./test.txt ];

then hadoop fs -copyToLocal /user/hadoop/test/test.txt ./test2.txt;

else hadoop fs -copyToLocal /user/hadoop/test/test.txt ./test.txt;

fi
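The shell version above only handles a single fixed alternative name. As a rough sketch of a more general renaming rule, the helper below keeps adding a counter until the name is free. `DownloadName` and `freeName` are hypothetical names, and the `exists` predicate stands in for a real check such as `java.nio.file.Files.exists`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical helper: chooses a local file name that does not collide.
class DownloadName {
    static String freeName(String name, Predicate<String> exists) {
        if (!exists.test(name)) {
            return name; // no collision, keep the original name
        }
        int dot = name.lastIndexOf('.');
        String base = dot > 0 ? name.substring(0, dot) : name;
        String ext = dot > 0 ? name.substring(dot) : "";
        int n = 2; // test.txt -> test2.txt, matching the shell example
        while (exists.test(base + n + ext)) {
            n++;
        }
        return base + n + ext;
    }

    public static void main(String[] args) {
        List<String> localFiles = Arrays.asList("test.txt");
        System.out.println(freeName("test.txt", localFiles::contains)); // prints test2.txt
    }
}
```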


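- Print the contents of a specified HDFS file to the terminal.

hadoop fs -cat /user/hadoop/test/test.txt

- Show the read/write permissions, size, creation time, path, and other information for a specified HDFS file.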

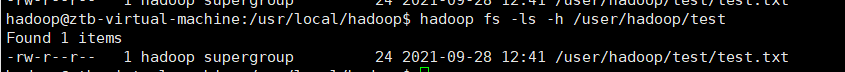

hadoop fs -ls -h /user/hadoop/test/test.txt

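- Given a directory in HDFS, print the read/write permissions, size, creation time, path, and other information for every file in it. If an entry is itself a directory, recursively print the information for all files under it.

hadoop fs -ls -R -h /user/hadoop/test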

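- Given the path of a file in HDFS, create and delete that file. If the file's parent directory does not exist, create it automatically.

In the commands below, test1.txt is an example file name.

if hadoop fs -test -d /user/hadoop/test/test1;

then hadoop fs -touchz /user/hadoop/test/test1/test1.txt;

else hadoop fs -mkdir -p /user/hadoop/test/test1 && hadoop fs -touchz /user/hadoop/test/test1/test1.txt;

fi

Delete the file:

hadoop fs -rm /user/hadoop/test/test1/test1.txt

Check the result: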

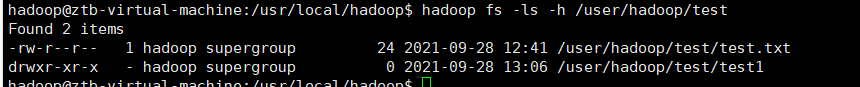

/usr/local/hadoop$ hadoop fs -ls -h /user/hadoop/test

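- Given the path of a directory in HDFS, create and delete that directory. When creating it, automatically create any missing parent directories; when deleting it, let the user decide whether to delete the directory if it is not empty.

hadoop fs -mkdir -p /user/hadoop/test/test1

hadoop fs -rm -r /user/hadoop/test/test1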


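- Append content to a specified HDFS file; the user chooses whether the content goes at the beginning or the end of the existing file.

Appending to the end: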

hadoop fs -appendToFile local.txt /user/hadoop/test/test.txt

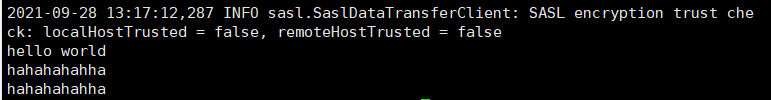

hadoop fs -cat /user/hadoop/test/test.txt

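Adding to the beginning:

HDFS has no command for adding content to the beginning of a file, so this cannot be done with a single command. Instead, download the file, merge it locally, then upload the result and overwrite the original. To modify test.txt, append test.txt to the end of local.txt and then upload local.txt in its place.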

hadoop fs -get /user/hadoop/test/test.txt

cat test.txt >> local.txt

hadoop fs -copyFromLocal -f local.txt /user/hadoop/test/test.txt

hadoop fs -cat /user/hadoop/test/test.txt
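The local merge step (`cat test.txt >> local.txt`) can be sketched in plain Java as follows. This only illustrates the ordering of the two contents using temporary local files; the download and upload steps still need the HDFS commands above. The class name and the sample file contents are made up for the demonstration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical demo of "prepend by appending the old file to the new content".
class PrependDemo {
    /** Appends the bytes of oldFile to newFirst, like `cat oldFile >> newFirst`. */
    static void appendOldToNew(Path newFirst, Path oldFile) throws IOException {
        Files.write(newFirst, Files.readAllBytes(oldFile), StandardOpenOption.APPEND);
    }

    /** Builds two temporary files, merges them, and returns the merged text. */
    static String demo() {
        try {
            Path dir = Files.createTempDirectory("prepend");
            Path local = dir.resolve("local.txt"); // content that should come first
            Path test = dir.resolve("test.txt");   // copy of the file fetched from HDFS
            Files.write(local, "new line\n".getBytes(StandardCharsets.UTF_8));
            Files.write(test, "old line\n".getBytes(StandardCharsets.UTF_8));
            appendOldToNew(local, test);
            return new String(Files.readAllBytes(local), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.print(demo()); // new line first, then old line
    }
}
```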

- Delete a specified file in HDFS.

hadoop fs -rm /user/hadoop/test/test.txt

hadoop fs -ls /user/hadoop/test

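- Delete a specified directory in HDFS; the user chooses whether to delete the directory when it still contains files.

Delete the directory only if it is empty (the command fails if it still contains files):

hadoop fs -rmdir /user/hadoop/test/test1

Delete the directory together with its contents:

hadoop fs -rm -r /user/hadoop/test/test1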

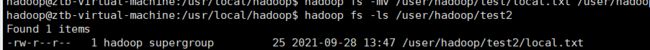

- Move a file from a source path to a destination path within HDFS.

hadoop fs -mv /user/hadoop/test/local.txt /user/hadoop/test2

hadoop fs -ls /user/hadoop/test2

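- Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream", with the following requirements:

- Implement a method "readLine()" that reads a specified HDFS file line by line.

- Return null when the end of the file is reached; otherwise return one line of text from the file.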

package hadoop1;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

public class ShowTheContent extends FSDataInputStream {

public ShowTheContent(InputStream in) {

super(in);

}

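/**

* Read one line: consume one character at a time until "\n" and return the line without the terminator; return null at the end of the file.

*/

public static String readline(BufferedReader br) throws IOException {

StringBuilder line = new StringBuilder();

int c;

// br keeps its position between calls, so each call continues where the previous one stopped

while ((c = br.read()) != -1) {

if (c == '\n') {

// An empty line is still a line, so return it instead of null

return line.toString();

}

line.append((char) c);

}

// End of file: return the last unterminated line if there is one, otherwise null

return line.length() > 0 ? line.toString() : null;

}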

/**

* Read the file and print its contents

*/

public static void cat(Configuration conf, String remoteFilePath) throws IOException {

FileSystem fs = FileSystem.get(conf);

Path remotePath = new Path(remoteFilePath);

FSDataInputStream in = fs.open(remotePath);

BufferedReader br = new BufferedReader(new InputStreamReader(in));

String line = null;

while ((line = ShowTheContent.readline(br)) != null) {

System.out.println(line);

}

br.close();

in.close();

fs.close();

}

/**

* 主函数

*/

public static void main(String[] args) {

Configuration conf = new Configuration();

conf.set("fs.default.name", "hdfs://localhost:9000");

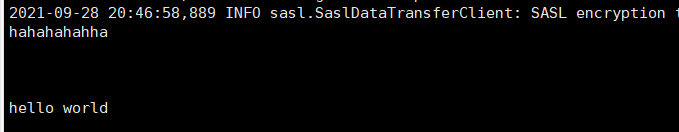

String remoteFilePath = "/user/hadoop/test2/local.txt"; // HDFS 路径

try {

ShowTheContent.cat(conf, remoteFilePath);

} catch (Exception e) {

e.printStackTrace();

}

}

}

hadoop jar ./jar/2.jar
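The task describes `readLine()` as an instance method on a stream subclass. As a hedged illustration that runs without Hadoop, the sketch below puts the same contract (one line per call, null at end of stream) on a subclass of `java.io.FilterInputStream`. `LineInputStream` and `readAll` are hypothetical names. A real solution would extend `FSDataInputStream` and wrap the stream returned by `fs.open(...)`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a stream subclass; not part of Hadoop.
class LineInputStream extends FilterInputStream {
    LineInputStream(InputStream in) {
        super(in);
    }

    /** Returns the next line without its terminator, or null at end of stream. */
    String readLine() throws IOException {
        int b = read();
        if (b == -1) {
            return null; // nothing left to read
        }
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        while (b != -1 && b != '\n') {
            buf.write(b);
            b = read();
        }
        // Decode once per line so multi-byte UTF-8 characters stay intact
        String line = new String(buf.toByteArray(), StandardCharsets.UTF_8);
        return line.endsWith("\r") ? line.substring(0, line.length() - 1) : line;
    }

    /** Demo helper: splits an in-memory string into lines using readLine(). */
    static List<String> readAll(String text) {
        List<String> lines = new ArrayList<>();
        try (LineInputStream in = new LineInputStream(
                new ByteArrayInputStream(text.getBytes(StandardCharsets.UTF_8)))) {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        System.out.println(readAll("this is file1.txt\nthis is file2.txt\n"));
    }
}
```

Reading raw bytes and decoding per line avoids the fixed-size character buffer, so line length is not limited.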

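- Consult the Java documentation or other resources, and use "java.net.URL" and "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" to write a program that prints the text of a specified HDFS file to the terminal.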

package hadoop2;

import java.io.InputStream;

import java.net.URL;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;

import org.apache.hadoop.io.IOUtils;

public class MyFSDataInputStream {

static {

URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

}

/**

* Main method

*/

public static void main(String[] args) throws Exception {

String remoteFilePath = "hdfs:///user/hadoop/test2/local.txt"; // HDFS file

InputStream in = null;

try {

/* Open a stream through the URL object and read the data from it */

in = new URL(remoteFilePath).openStream();

IOUtils.copyBytes(in, System.out, 4096, false);

} finally {

IOUtils.closeStream(in);

}

}

}

hadoop jar ./jar/1.jar

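- Create the files file1.txt, file2.txt, file3.txt, file4.abc, and file5.abc with the contents shown in the table below.

| File name | File content |
|---|---|
| file1.txt | this is file1.txt |
| file2.txt | this is file2.txt |
| file3.txt | this is file3.txt |
| file4.abc | this is file4.abc |
| file5.abc | this is file5.abc |

Upload these files to the "/user/hadoop" directory in HDFS. Referring to section 7.3, "Common HDFS Java APIs and Application Examples", of Chapter 3, "The Distributed File System HDFS", in the lecture notes, write a Java application that selects from this directory all files whose suffix is not ".abc", reads the selected files, and merges their contents into the file "/user/hadoop/merge.txt".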

package hadoop3;

import java.io.File;

import java.io.IOException;

import java.io.PrintStream;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.PathFilter;

class MyPathFilter implements PathFilter {

String reg = null;

MyPathFilter(String reg) {

this.reg = reg;

}

public boolean accept(Path path) {

// Accept only paths that match the regular expression

return path.toString().matches(reg);

}

}

public class Merge {

Path inputPath = null;

Path outputPath = null;

public Merge(String input, String output) {

this.inputPath = new Path(input);

this.outputPath = new Path(output);

}

public void doMerge() throws IOException {

Configuration conf = new Configuration();

conf.set("fs.defaultFS", "hdfs://localhost:9000");

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fsSource = FileSystem.get(URI.create(inputPath.toString()), conf);

FileSystem fsDst = FileSystem.get(URI.create(outputPath.toString()), conf);

FileStatus[] sourceStatus = fsSource.listStatus(inputPath, new MyPathFilter(".*\\.txt"));

FSDataOutputStream fsdos = fsDst.create(outputPath);

PrintStream ps = new PrintStream(System.out);

for (FileStatus sta : sourceStatus) {

System.out.println("path : " + sta.getPath() + " file size : " + sta.getLen() +

" auth: " + sta.getPermission());

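// Skip directories and the output file itself, so that a rerun does not merge merge.txt into itself

if (!sta.isFile() || sta.getPath().equals(fsDst.makeQualified(outputPath))) {

continue;

}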

System.out.println("next");

FSDataInputStream fsdis = fsSource.open(sta.getPath());

byte[] data = new byte[1024];

int read = -1;

while ((read = fsdis.read(data)) > 0) {

ps.write(data, 0, read);

fsdos.write(data, 0 ,read);

}

fsdis.close();

}

ps.close();

fsdos.close();

}

public static void main(String[] args) throws IOException{

Merge merge = new Merge(

"hdfs://localhost:9000/user/hadoop",

"hdfs://localhost:9000/user/hadoop/merge.txt"

);

merge.doMerge();

}

}

hadoop@ztb-virtual-machine:/usr/local/hadoop$ hadoop jar ./jar/3.jar

path : hdfs://localhost:9000/user/hadoop/file1.txt file size : 18 auth: rw-r--r--

next

2021-09-28 21:15:47,808 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

this is file1.txt

path : hdfs://localhost:9000/user/hadoop/file2.txt file size : 18 auth: rw-r--r--

next

this is file2.txt

path : hdfs://localhost:9000/user/hadoop/file3.txt file size : 18 auth: rw-r--r--

next

this is file3.txt

2021-09-28 21:15:48,248 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
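The task wording asks for files whose suffix is not ".abc", while the Merge program accepts paths matching `.*\.txt`. For the five files in the table both rules select the same three files, but they differ for any other extension. The sketch below compares the two rules on plain file names. `SuffixFilterDemo` and its methods are hypothetical, and "notes.md" is a made-up extra file name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical comparison of an exclude-by-suffix rule and an include-by-regex rule.
class SuffixFilterDemo {
    /** Keeps the names that do NOT end with the given suffix. */
    static List<String> excludeSuffix(List<String> names, String suffix) {
        String reg = ".*" + Pattern.quote(suffix); // quote so "." is literal
        List<String> kept = new ArrayList<>();
        for (String name : names) {
            if (!name.matches(reg)) {
                kept.add(name);
            }
        }
        return kept;
    }

    /** Keeps the names that match the regular expression, as MyPathFilter does. */
    static List<String> includeRegex(List<String> names, String reg) {
        List<String> kept = new ArrayList<>();
        for (String name : names) {
            if (name.matches(reg)) {
                kept.add(name);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList(
                "file1.txt", "file2.txt", "file3.txt", "file4.abc", "file5.abc", "notes.md");
        System.out.println(excludeSuffix(names, ".abc")); // keeps notes.md as well
        System.out.println(includeRegex(names, ".*\\.txt")); // drops notes.md
    }
}
```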

For more details, see

https://blog.csdn.net/qq_50596778